Source code: https://github.com/BBuf/NetRewrite

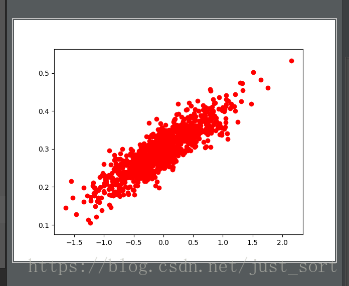

Building a linear regression model

# -*- coding: utf-8 -*-

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

# Randomly generate 1000 points scattered around the line y = 0.1x + 0.3

num_points = 1000

vectors_set = []

for i in range(num_points):

    x1 = np.random.normal(0.0, 0.55)

    y1 = x1 * 0.1 + 0.3 + np.random.normal(0.0, 0.03)

    vectors_set.append([x1, y1])

# Split the samples into x and y coordinates

x_data = [v[0] for v in vectors_set]

y_data = [v[1] for v in vectors_set]

plt.scatter(x_data, y_data, c='r')

plt.show()

The generated data looks like this:

Next, build a linear regression model to fit this data.
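As a rough, TensorFlow-free sketch of what this fitting step does, here is plain gradient descent on the mean squared error of y = w*x + b, with the same learning rate (0.5) and step count (20) the article uses. The name fit_line, the fixed random seed and the zero initial values are assumptions made for illustration; the article initialises W randomly.

```python
import random

def fit_line(xs, ys, lr=0.5, steps=20):
    """Plain gradient descent on mean squared error for y = w * x + b."""
    w, b = 0.0, 0.0  # assumption: start from zero so the sketch is deterministic
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current prediction.
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2.0 * sum(errs) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

random.seed(0)
xs = [random.gauss(0.0, 0.55) for _ in range(1000)]
ys = [0.1 * x + 0.3 + random.gauss(0.0, 0.03) for x in xs]
w, b = fit_line(xs, ys)
print("w = %.3f, b = %.3f" % (w, b))
```

The recovered w and b should land close to the 0.1 and 0.3 used to generate the data.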

# Create a 1-D W tensor, initialised uniformly at random in [-1, 1)

W = tf.Variable(tf.random_uniform([1], -1.0, 1.0), name='W')

# Create a 1-D bias tensor, initialised to 0

b = tf.Variable(tf.zeros([1]), name='b')

# Compute the predicted values

y = W * x_data + b

# Use the mean squared error between the prediction y and the targets y_data as the loss

loss = tf.reduce_mean(tf.square(y - y_data), name='loss')

# Optimise the parameters with gradient descent

optimizer = tf.train.GradientDescentOptimizer(0.5)

# Training means minimising this loss

train = optimizer.minimize(loss, name='train')

sess = tf.Session()

init = tf.global_variables_initializer()

sess.run(init)

# Print the initial values of W and b

print("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss))

# Run 20 training steps

for step in range(20):

    sess.run(train)

    # Print the trained W and b

    print("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss))

Recognizing MNIST handwritten digits with logistic regression

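Note: tf.rank(arr).eval() returns the number of dimensions of arr, tf.shape(arr).eval() returns its shape (rows and columns for a matrix), and tf.argmax(arr, 0).eval() returns, for each column, the index of the largest value.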
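To make those three quantities concrete without starting a session, the following sketch computes them for a nested Python list. The helper names shape, rank and argmax_axis0 are hypothetical stand-ins written for this illustration, not TensorFlow functions.

```python
def shape(arr):
    """Return the dimensions of a non-empty rectangular nested list, e.g. [2, 3]."""
    dims = []
    while isinstance(arr, list):
        dims.append(len(arr))
        arr = arr[0]
    return dims

def rank(arr):
    """Number of dimensions, which is what tf.rank reports."""
    return len(shape(arr))

def argmax_axis0(matrix):
    """For each column of a 2-D list, the row index of its largest entry."""
    n_rows, n_cols = shape(matrix)
    return [max(range(n_rows), key=lambda r: matrix[r][c]) for c in range(n_cols)]

arr = [[1, 9, 3],
       [7, 2, 8]]
print(rank(arr))          # 2
print(shape(arr))         # [2, 3]
print(argmax_axis0(arr))  # [1, 0, 1]
```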

Train a logistic regression model on the MNIST handwritten digit dataset and use it for recognition.
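The model in this section is a softmax over a linear map, trained with a cross-entropy cost. As a small sketch of those two formulas for a single sample (the function names softmax and cross_entropy are chosen here for illustration):

```python
import math

def softmax(logits):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(one_hot, probs):
    """-sum(y * log(p)) for one sample; only the true class contributes."""
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y > 0)

# With W and b initialised to zero, every class receives the same score.
probs = softmax([0.0] * 10)
label = [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
loss = cross_entropy(label, probs)
print(probs[3], loss)
```

Because the article initialises W and b to zero, every class starts with probability 0.1, so the loss before any update is ln(10), about 2.303.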

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)

x = tf.placeholder("float", [None, 784])

y = tf.placeholder("float", [None, 10])

W = tf.Variable(tf.zeros([784, 10]))

b = tf.Variable(tf.zeros([10]))

# LOGISTIC REGRESSION MODEL

actv = tf.nn.softmax(tf.matmul(x, W) + b)

# COST FUNCTION

cost = tf.reduce_mean(-tf.reduce_sum(y * tf.log(actv), reduction_indices=1))

# OPTIMIZER

learning_rate = 0.01

optm = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)

# PREDICTION (tf.argmax replaces the deprecated tf.arg_max)

pred = tf.equal(tf.argmax(actv, 1), tf.argmax(y, 1))

#ACCURACY

accr = tf.reduce_mean(tf.cast(pred, "float"))

#INITIALIZER

init = tf.global_variables_initializer()

# Number of training epochs

training_epochs = 50

# Number of samples per mini-batch

batch_size = 100

# Print progress every display_step epochs

display_step = 5

sess = tf.Session()

sess.run(init)

for epoch in range(training_epochs):

    avg_cost = 0

    num_batch = int(mnist.train.num_examples / batch_size)

    for i in range(num_batch):

        batch_xs, batch_ys = mnist.train.next_batch(batch_size)

        sess.run(optm, feed_dict={x: batch_xs, y: batch_ys})

        feeds = {x: batch_xs, y: batch_ys}

        avg_cost += sess.run(cost, feed_dict=feeds) / num_batch

    # DISPLAY

    if epoch % display_step == 0:

        # Training accuracy is measured on the last mini-batch only

        feeds_train = {x: batch_xs, y: batch_ys}

        feeds_test = {x: mnist.test.images, y: mnist.test.labels}

        train_acc = sess.run(accr, feed_dict=feeds_train)

        test_acc = sess.run(accr, feed_dict=feeds_test)

        print("Epoch: %03d/%03d cost: %.9f train_acc: %.3f test_acc: %.3f" % (epoch, training_epochs, avg_cost, train_acc, test_acc))

print('Done!')

The training and test accuracy are printed every 5 epochs.

Epoch: 000/050 cost: 1.176236500 train_acc: 0.860 test_acc: 0.854

Epoch: 005/050 cost: 0.440927586 train_acc: 0.860 test_acc: 0.896

Epoch: 010/050 cost: 0.383316868 train_acc: 0.920 test_acc: 0.905

Epoch: 015/050 cost: 0.357254738 train_acc: 0.880 test_acc: 0.909

Epoch: 020/050 cost: 0.341469640 train_acc: 0.890 test_acc: 0.912

Epoch: 025/050 cost: 0.330563741 train_acc: 0.900 test_acc: 0.914

Epoch: 030/050 cost: 0.322318071 train_acc: 0.970 test_acc: 0.916

Epoch: 035/050 cost: 0.315941957 train_acc: 0.940 test_acc: 0.916

Epoch: 040/050 cost: 0.310726489 train_acc: 0.870 test_acc: 0.917

Epoch: 045/050 cost: 0.306347878 train_acc: 0.890 test_acc: 0.919

Recognizing MNIST with a neural network with two hidden layers

# -*- coding: utf-8 -*-

# Neural network with two hidden layers

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)

#NETWORK TOPOLOGIES

n_hidden_1 = 256

n_hidden_2 = 128

n_input = 784

n_classes = 10

#INPUTS AND OUTPUTS

x = tf.placeholder("float", [None, 784])

y = tf.placeholder("float", [None, 10])

#NETWORK PARAMETERS

stddev = 0.1

weights = {

'w1': tf.Variable(tf.random_normal([n_input, n_hidden_1], stddev = stddev)),

'w2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2], stddev = stddev)),

'out': tf.Variable(tf.random_normal([n_hidden_2, n_classes], stddev = stddev))

}

bias = {

'b1': tf.Variable(tf.random_normal([n_hidden_1])),

'b2': tf.Variable(tf.random_normal([n_hidden_2])),

'out': tf.Variable(tf.random_normal([n_classes]))

}

print("NETWORK READY")

def multilayer_perceptron(_X, _weights, _bias):

    layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(_X, _weights['w1']), _bias['b1']))

    layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, _weights['w2']), _bias['b2']))

    return (tf.matmul(layer_2, _weights['out']) + _bias['out'])

#PREDICTION

pred = multilayer_perceptron(x, weights, bias)

# LOSS AND OPTIMIZER

cost = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(labels=tf.argmax(y, 1), logits=pred))

optm = tf.train.GradientDescentOptimizer(learning_rate=0.001).minimize(cost)

corr = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))

accr = tf.reduce_mean(tf.cast(corr, "float"))

#INITIALIZER

init = tf.global_variables_initializer()

print("FUNCTION READY")

# Number of training epochs

training_epochs = 20

# Number of samples per mini-batch

batch_size = 100

# Print progress every display_step epochs

display_step = 4

sess = tf.Session()

sess.run(init)

for epoch in range(training_epochs):

    avg_cost = 0

    num_batch = int(mnist.train.num_examples / batch_size)

    for i in range(num_batch):

        batch_xs, batch_ys = mnist.train.next_batch(batch_size)

        sess.run(optm, feed_dict={x: batch_xs, y: batch_ys})

        feeds = {x: batch_xs, y: batch_ys}

        avg_cost += sess.run(cost, feed_dict=feeds) / num_batch

    # DISPLAY

    if epoch % display_step == 0:

        feeds_train = {x: batch_xs, y: batch_ys}

        feeds_test = {x: mnist.test.images, y: mnist.test.labels}

        train_acc = sess.run(accr, feed_dict=feeds_train)

        test_acc = sess.run(accr, feed_dict=feeds_test)

        print("Epoch: %03d/%03d cost: %.9f train_acc: %.3f test_acc: %.3f" % (epoch, training_epochs, avg_cost, train_acc, test_acc))

print('Done!')

Training log (from a run with training_epochs = 100):

Epoch: 000/100 cost: 2.349601070 train_acc: 0.160 test_acc: 0.123

Epoch: 004/100 cost: 2.249329622 train_acc: 0.200 test_acc: 0.260

Epoch: 008/100 cost: 2.214439031 train_acc: 0.400 test_acc: 0.419

Epoch: 012/100 cost: 2.174678910 train_acc: 0.530 test_acc: 0.499

Epoch: 016/100 cost: 2.127895809 train_acc: 0.630 test_acc: 0.555

Epoch: 020/100 cost: 2.072003197 train_acc: 0.540 test_acc: 0.597

Epoch: 024/100 cost: 2.004937103 train_acc: 0.610 test_acc: 0.638

Epoch: 028/100 cost: 1.925531760 train_acc: 0.520 test_acc: 0.660

Epoch: 032/100 cost: 1.834181487 train_acc: 0.700 test_acc: 0.677

Epoch: 036/100 cost: 1.733437475 train_acc: 0.740 test_acc: 0.695

Epoch: 040/100 cost: 1.627696041 train_acc: 0.700 test_acc: 0.713

Epoch: 044/100 cost: 1.522093713 train_acc: 0.740 test_acc: 0.726

Epoch: 048/100 cost: 1.420850527 train_acc: 0.710 test_acc: 0.741

Epoch: 052/100 cost: 1.326597604 train_acc: 0.680 test_acc: 0.760

Epoch: 056/100 cost: 1.240468896 train_acc: 0.740 test_acc: 0.770

Epoch: 060/100 cost: 1.162604903 train_acc: 0.810 test_acc: 0.782

Epoch: 064/100 cost: 1.092631850 train_acc: 0.800 test_acc: 0.792

Epoch: 068/100 cost: 1.029950367 train_acc: 0.810 test_acc: 0.802

Epoch: 072/100 cost: 0.973842550 train_acc: 0.790 test_acc: 0.810

Epoch: 076/100 cost: 0.923644384 train_acc: 0.800 test_acc: 0.816

Epoch: 080/100 cost: 0.878630804 train_acc: 0.840 test_acc: 0.822

Epoch: 084/100 cost: 0.838192068 train_acc: 0.800 test_acc: 0.828

Epoch: 088/100 cost: 0.801766150 train_acc: 0.840 test_acc: 0.835

Epoch: 092/100 cost: 0.768919702 train_acc: 0.850 test_acc: 0.838

Epoch: 096/100 cost: 0.739172614 train_acc: 0.830 test_acc: 0.842

Done!

Recognizing MNIST with a convolutional neural network
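The fully connected weight wd1 in the code of this section has 7*7*128 input features. A short sketch of where that number comes from, assuming the rule that padding='SAME' gives an output length of ceil(input / stride) along each axis (the helper name same_out is made up for this illustration):

```python
import math

def same_out(size, stride):
    """Output length along one axis for padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)

h = w = 28
# Convolutions use stride 1 with SAME padding, so they keep the spatial size.
h, w = same_out(h, 1), same_out(w, 1)   # conv1 -> 28 x 28
# Each max-pool uses stride 2, halving the spatial size.
h, w = same_out(h, 2), same_out(w, 2)   # pool1 -> 14 x 14
h, w = same_out(h, 1), same_out(w, 1)   # conv2 -> 14 x 14
h, w = same_out(h, 2), same_out(w, 2)   # pool2 -> 7 x 7
flat = h * w * 128                      # wc2 produces 128 output channels
print(h, w, flat)
```

With SAME padding the pooling window size does not change the output size, so the second pool also ends at 7 x 7 even though its ksize differs from the first.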

# -*- coding: utf-8 -*-

# Convolutional neural network

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)

#NETWORK TOPOLOGIES

n_input = 784

n_output = 10

#NETWORK PARAMETERS

weights = {

'wc1': tf.Variable(tf.random_normal([3,3,1,64], stddev=0.1)),

'wc2': tf.Variable(tf.random_normal([3,3,64,128], stddev=0.1)),

'wd1': tf.Variable(tf.random_normal([7*7*128, 1024], stddev=0.1)),

'wd2': tf.Variable(tf.random_normal([1024, n_output], stddev=0.1))

}

bias = {

'bc1': tf.Variable(tf.random_normal([64], stddev=0.1)),

'bc2': tf.Variable(tf.random_normal([128], stddev=0.1)),

'bd1': tf.Variable(tf.random_normal([1024], stddev=0.1)),

'bd2': tf.Variable(tf.random_normal([n_output], stddev=0.1))

}

def conv_basic(_input, _w, _b, _keepratio):

    # INPUT

    _input_r = tf.reshape(_input, shape=[-1, 28, 28, 1])

    # CONV LAYER 1

    _conv1 = tf.nn.conv2d(_input_r, _w['wc1'], strides=[1, 1, 1, 1], padding='SAME')

    _conv1 = tf.nn.relu(tf.nn.bias_add(_conv1, _b['bc1']))

    _pool1 = tf.nn.max_pool(_conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    _pool_dr1 = tf.nn.dropout(_pool1, _keepratio)

    # CONV LAYER 2

    _conv2 = tf.nn.conv2d(_pool_dr1, _w['wc2'], strides=[1, 1, 1, 1], padding='SAME')

    _conv2 = tf.nn.relu(tf.nn.bias_add(_conv2, _b['bc2']))

    _pool2 = tf.nn.max_pool(_conv2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')

    _pool_dr2 = tf.nn.dropout(_pool2, _keepratio)

    # VECTORIZE

    _dense1 = tf.reshape(_pool_dr2, [-1, _w['wd1'].get_shape().as_list()[0]])

    # FULLY CONNECTED LAYER 1

    _fc1 = tf.nn.relu(tf.add(tf.matmul(_dense1, _w['wd1']), _b['bd1']))

    _fc_dr1 = tf.nn.dropout(_fc1, _keepratio)

    # FULLY CONNECTED LAYER 2

    _out = tf.add(tf.matmul(_fc_dr1, _w['wd2']), _b['bd2'])

    # RETURN

    out = {

        'input_r': _input_r, 'conv1': _conv1, 'pool1': _pool1, 'pool1_dr1': _pool_dr1,

        'conv2': _conv2, 'pool2': _pool2, 'pool_dr2': _pool_dr2, 'dense1': _dense1,

        'fc1': _fc1, 'fc_dr1': _fc_dr1, 'out': _out

    }

    return out

print("CNN READY")

#INPUTS AND OUTPUTS

x = tf.placeholder("float", [None, 784])

y = tf.placeholder("float", [None, 10])

keepratio = tf.placeholder(tf.float32)

#FUNCTIONS

_pred = conv_basic(x, weights, bias, keepratio)['out']

cost = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(labels=tf.argmax(y, 1), logits=_pred))

optm = tf.train.GradientDescentOptimizer(learning_rate=0.001).minimize(cost)

_corr = tf.equal(tf.arg_max(_pred, 1), tf.arg_max(y, 1))

accr = tf.reduce_mean(tf.cast(_corr, tf.float32))

init = tf.global_variables_initializer()

print("GRAPH READY")

sess = tf.Session()

sess.run(init)

# Number of training epochs

training_epochs = 15

# Number of samples per mini-batch

batch_size = 16

# Print progress every display_step epochs

display_step = 1

for epoch in range(training_epochs):

    avg_cost = 0

    # num_batch = int(mnist.train.num_examples/batch_size)

    num_batch = 10

    for i in range(num_batch):

        batch_xs, batch_ys = mnist.train.next_batch(batch_size)

        sess.run(optm, feed_dict={x: batch_xs, y: batch_ys, keepratio: 0.7})

        feeds = {x: batch_xs, y: batch_ys, keepratio: 1.}

        avg_cost += sess.run(cost, feed_dict=feeds) / num_batch

    # DISPLAY

    if epoch % display_step == 0:

        feeds_train = {x: batch_xs, y: batch_ys, keepratio: 1.}

        feeds_test = {x: mnist.test.images, y: mnist.test.labels, keepratio: 1.}

        train_acc = sess.run(accr, feed_dict=feeds_train)

        test_acc = sess.run(accr, feed_dict=feeds_test)

        print("Epoch: %03d/%03d cost: %.9f train_acc: %.3f test_acc: %.3f" % (epoch, training_epochs, avg_cost, train_acc, test_acc))

print('Done!')

Training log:

Epoch: 000/015 cost: 3.722336197 train_acc: 0.188 test_acc: 0.147

Epoch: 001/015 cost: 3.059996700 train_acc: 0.312 test_acc: 0.199

Epoch: 002/015 cost: 2.293635750 train_acc: 0.312 test_acc: 0.244

Epoch: 003/015 cost: 2.008019674 train_acc: 0.375 test_acc: 0.327

Epoch: 004/015 cost: 1.824654555 train_acc: 0.375 test_acc: 0.294

Epoch: 005/015 cost: 1.806769204 train_acc: 0.188 test_acc: 0.347

Epoch: 006/015 cost: 1.961713791 train_acc: 0.312 test_acc: 0.343

Epoch: 007/015 cost: 1.759841669 train_acc: 0.375 test_acc: 0.373

Epoch: 008/015 cost: 1.664015102 train_acc: 0.500 test_acc: 0.351

Epoch: 009/015 cost: 1.805098093 train_acc: 0.375 test_acc: 0.398

Epoch: 010/015 cost: 1.761338234 train_acc: 0.312 test_acc: 0.387

Epoch: 011/015 cost: 1.738769186 train_acc: 0.438 test_acc: 0.315

Epoch: 012/015 cost: 1.846973026 train_acc: 0.688 test_acc: 0.338

Epoch: 013/015 cost: 1.845908678 train_acc: 0.250 test_acc: 0.391

Epoch: 014/015 cost: 1.811384428 train_acc: 0.625 test_acc: 0.401

Done!

Saving a TensorFlow model (ckpt): saving variables

import tensorflow as tf

v1 = tf.Variable(tf.random_normal([1,2]), name='v1')

v2 = tf.Variable(tf.random_normal([2,3]), name='v2')

init_op = tf.global_variables_initializer()

saver = tf.train.Saver()

with tf.Session() as sess:

    sess.run(init_op)

    print("V1:", sess.run(v1))

    print("V2:", sess.run(v2))

    saver_path = saver.save(sess, "save/model.ckpt")

    print("Model saved in file: ", saver_path)

with tf.Session() as sess:

    saver.restore(sess, "save/model.ckpt")

    print("V1:", sess.run(v1))

    print("V2:", sess.run(v2))

    print("Model restored!")

Saving a TensorFlow model: CNN

# -*- coding: utf-8 -*-

# Convolutional neural network

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)

testimg = mnist.test.images

testlabel = mnist.test.labels

#NETWORK TOPOLOGIES

n_input = 784

n_output = 10

#NETWORK PARAMETERS

weights = {

'wc1': tf.Variable(tf.random_normal([3,3,1,64], stddev=0.1)),

'wc2': tf.Variable(tf.random_normal([3,3,64,128], stddev=0.1)),

'wd1': tf.Variable(tf.random_normal([7*7*128, 1024], stddev=0.1)),

'wd2': tf.Variable(tf.random_normal([1024, n_output], stddev=0.1))

}

bias = {

'bc1': tf.Variable(tf.random_normal([64], stddev=0.1)),

'bc2': tf.Variable(tf.random_normal([128], stddev=0.1)),

'bd1': tf.Variable(tf.random_normal([1024], stddev=0.1)),

'bd2': tf.Variable(tf.random_normal([n_output], stddev=0.1))

}

def conv_basic(_input, _w, _b, _keepratio):

    # INPUT

    _input_r = tf.reshape(_input, shape=[-1, 28, 28, 1])

    # CONV LAYER 1

    _conv1 = tf.nn.conv2d(_input_r, _w['wc1'], strides=[1, 1, 1, 1], padding='SAME')

    _conv1 = tf.nn.relu(tf.nn.bias_add(_conv1, _b['bc1']))

    _pool1 = tf.nn.max_pool(_conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    _pool_dr1 = tf.nn.dropout(_pool1, _keepratio)

    # CONV LAYER 2

    _conv2 = tf.nn.conv2d(_pool_dr1, _w['wc2'], strides=[1, 1, 1, 1], padding='SAME')

    _conv2 = tf.nn.relu(tf.nn.bias_add(_conv2, _b['bc2']))

    _pool2 = tf.nn.max_pool(_conv2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')

    _pool_dr2 = tf.nn.dropout(_pool2, _keepratio)

    # VECTORIZE

    _dense1 = tf.reshape(_pool_dr2, [-1, _w['wd1'].get_shape().as_list()[0]])

    # FULLY CONNECTED LAYER 1

    _fc1 = tf.nn.relu(tf.add(tf.matmul(_dense1, _w['wd1']), _b['bd1']))

    _fc_dr1 = tf.nn.dropout(_fc1, _keepratio)

    # FULLY CONNECTED LAYER 2

    _out = tf.add(tf.matmul(_fc_dr1, _w['wd2']), _b['bd2'])

    # RETURN

    out = {

        'input_r': _input_r, 'conv1': _conv1, 'pool1': _pool1, 'pool1_dr1': _pool_dr1,

        'conv2': _conv2, 'pool2': _pool2, 'pool_dr2': _pool_dr2, 'dense1': _dense1,

        'fc1': _fc1, 'fc_dr1': _fc_dr1, 'out': _out

    }

    return out

print("CNN READY")

#INPUTS AND OUTPUTS

x = tf.placeholder("float", [None, 784])

y = tf.placeholder("float", [None, 10])

keepratio = tf.placeholder(tf.float32)

#FUNCTIONS

_pred = conv_basic(x, weights, bias, keepratio)['out']

cost = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(labels=tf.argmax(y, 1), logits=_pred))

optm = tf.train.GradientDescentOptimizer(learning_rate=0.001).minimize(cost)

_corr = tf.equal(tf.arg_max(_pred, 1), tf.arg_max(y, 1))

accr = tf.reduce_mean(tf.cast(_corr, tf.float32))

init = tf.global_variables_initializer()

# SAVER

# Save the model parameters once every save_step epochs

save_step = 1

# max_to_keep=3 keeps only the 3 most recent checkpoints

saver = tf.train.Saver(max_to_keep=3)

print("GRAPH READY")

# do_train = 1 means training; do_train = 0 means testing

do_train = 0

sess = tf.Session()

sess.run(init)

# Number of training epochs

training_epochs = 15

# Number of samples per mini-batch

batch_size = 16

# Print progress every display_step epochs

display_step = 1

if do_train == 1:

    for epoch in range(training_epochs):

        avg_cost = 0

        # num_batch = int(mnist.train.num_examples/batch_size)

        num_batch = 10

        for i in range(num_batch):

            batch_xs, batch_ys = mnist.train.next_batch(batch_size)

            sess.run(optm, feed_dict={x: batch_xs, y: batch_ys, keepratio: 0.7})

            feeds = {x: batch_xs, y: batch_ys, keepratio: 1.}

            avg_cost += sess.run(cost, feed_dict=feeds) / num_batch

        # DISPLAY

        if epoch % display_step == 0:

            feeds_train = {x: batch_xs, y: batch_ys, keepratio: 1.}

            train_acc = sess.run(accr, feed_dict=feeds_train)

            print("Epoch: %03d/%03d cost: %.9f train_acc: %.3f" % (epoch, training_epochs, avg_cost, train_acc))

        # Save Net

        if epoch % save_step == 0:

            saver.save(sess, "save/nets/cnn_mnist_basic.ckpt-" + str(epoch))

if do_train == 0:

    # Restore the checkpoint written after the last training epoch

    epoch = training_epochs - 1

    saver.restore(sess, "save/nets/cnn_mnist_basic.ckpt-" + str(epoch))

    test_acc = sess.run(accr, feed_dict={x: testimg, y: testlabel, keepratio: 1.})

    print("TEST ACCURACY: %.3f" % (test_acc))

print('Done!')

Running an image through pretrained VGG-19 parameters

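Reference article: https://blog.csdn.net/cskywit/article/details/79187623

This section runs a single image through VGG19 convolutional parameters downloaded from the web. Only the Conv, Relu and Max-pooling layers of VGG19 are used; the last three FC layers are not. The parameter file is the downloaded imagenet-vgg-verydeep-19.mat. The first step is to work out where the parameters sit inside that file, which is done here by probing it step by step.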

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

import scipy.io

import os

import scipy.misc

cwd = os.getcwd()

VGG_PATH = os.path.join(cwd, "data", "imagenet-vgg-verydeep-19.mat")

vgg = scipy.io.loadmat(VGG_PATH)

# First check the data type: it is a dict

print(type(vgg))

# Being a dict, its keys can be printed: dict_keys(['__header__', '__version__', '__globals__', 'layers', 'classes', 'normalization'])

print(vgg.keys())

# Go into the 'layers' field; the weights and biases we want should be under it

layers = vgg['layers']

# Printing layers gives a long run of nested brackets: [[ array([[ (array([[ array([[[[ ; the top-level array opens with two [

# so the top level is 2-D, each element is an array, and those arrays are nested further

# print(layers)

# The shape is (1, 43): there are two dimensions, but the first is a dummy one holding a single element

# From the model we know these 43 elements correspond to the 43 layers (conv1_1, relu, conv1_2, ...). The Relu and Pool layers carry no parameters, so inspecting one conv layer is enough,

# and we now have a useful index into the file: layers[0][layer_index]

print("layers.shape:", layers.shape)

layer = layers[0]

# The tail of the output shows dtype=[('weights', 'O'), ('pad', 'O'), ('type', 'O'), ('name', 'O'), ('stride', 'O')])

# so each layer record has 5 fields: weights (which includes the bias), pad (padding, not needed here), type, name and stride.

# Next, look at the shapes

print("layer.shape:", layer.shape)

# layer[0].shape is (1, 1): a single element

print("layer[0].shape:", layer[0].shape)

# layer[0][0].shape: (1,), a single element

print("layer[0][0].shape:", layer[0][0].shape)

# layer[0][0][0].shape: (1,), a single element

print("layer[0][0][0].shape:", layer[0][0][0].shape)

# len(layer[0][0][0]): 5, i.e. weights (which includes the bias), pad (padding, not needed here), type, name, stride

print("len(layer[0][0][0]):", len(layer[0][0][0]))

# So a field such as name can be read like this; the output is ['conv1_1']

print("name:", layer[0][0][0][3])

# Look at the shape of weights: (1, 2). Again the first dimension is a dummy one; weights holds both weight and bias

print("layer[0][0][0][0].shape", layer[0][0][0][0].shape)

print("len(layer[0][0][0][0]):", len(layer[0][0][0][0]))

# weights[0].shape: (2,), len(weights[0]): 2, so the two elements are weight and bias

print("layer[0][0][0][0][0].shape:", layer[0][0][0][0][0].shape)

print("len(layer[0][0][0][0][0]):", len(layer[0][0][0][0][0]))

weights = layer[0][0][0][0][0]

# Unpack weight and bias

weight, bias = weights

# weight.shape: (3, 3, 3, 64)

print("weight.shape:", weight.shape)

# bias.shape: (1, 64)

print("bias.shape:", bias.shape)

This shows where the weight and bias of each Conv layer are stored, which is exactly what we need to pull out of this file. The Relu layers have no parameters, and the Max-pooling layers use stride 2 with a 2*2 window, as described in the VGG paper. The second step is to load the pretrained weights and biases in code and use them to test our image:

def _conv_layer(input, weights, bias):

    # The VGG-19 parameters are already trained, so the weights can be defined as constants

    conv = tf.nn.conv2d(input, tf.constant(weights), strides=(1, 1, 1, 1),

                        padding='SAME')

    return tf.nn.bias_add(conv, bias)

def _pool_layer(input):

    return tf.nn.max_pool(input, ksize=(1, 2, 2, 1), strides=(1, 2, 2, 1),

                          padding='SAME')

def preprocess(image, mean_pixel):

    return image - mean_pixel

def unprocess(image, mean_pixel):

    return image + mean_pixel

def imread(path):

    # The np.float alias was removed in recent NumPy releases; the builtin float is equivalent

    return scipy.misc.imread(path).astype(float)

def imsave(path, img):

    img = np.clip(img, 0, 255).astype(np.uint8)

    scipy.misc.imsave(path, img)

def net(data_path, input_image):

layers = (

'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',

'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',

'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',

'relu3_3', 'conv3_4', 'relu3_4', 'pool3',

'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',

'relu4_3', 'conv4_4', 'relu4_4', 'pool4',

'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',

'relu5_3', 'conv5_4', 'relu5_4'

)

data = scipy.io.loadmat(data_path)

# 原始VGG中,对输入的数据进行了减均值的操作,借别人的参数使用时也需要进行此步骤

# 获取每个通道的均值,打印输出每个通道的均值为[ 123.68 116.779 103.939]

mean = data['normalization'][0][0][0]

mean_pixel = np.mean(mean, axis=(0,1))

weights = data['layers'][0]

net = {}

current = input_image

# 定义net字典结构,key为层的名字,value保存每一层使用VGG参数运算后的结果

current = input_image

for i, name in enumerate(layers):

kind = name[:4]

if kind == 'conv':

kernels, bias = weights[i][0][0][0][0]

# 注意:Mat中的weights参数和tensorflow中不同

# matconvnet: weights are [width, height, in_channels, out_channels]

# mat weight.shape: (3, 3, 3, 64)

# tensorflow: weights are [height, width, in_channels, out_channels]

kernels = np.transpose(kernels, (1, 0, 2, 3))

# 扁平化

bias = bias.reshape(-1)

current = _conv_layer(current, kernels, bias)

elif kind == 'relu':

current = tf.nn.relu(current)

elif kind == 'pool':

current = _pool_layer(current)

net[name] = current

assert len(net) == len(layers)

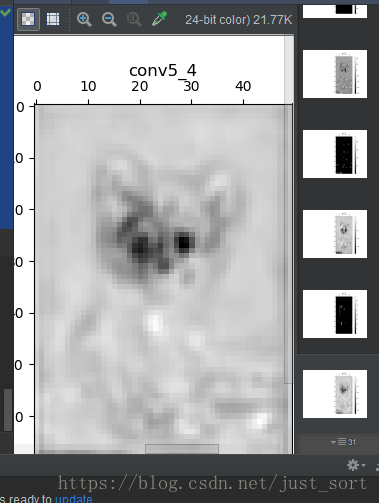

return net, mean_pixel, layers然后将模型和图片加载进行来,进行测试。输出每一个层的feature map。
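Before running the network it can help to know what shape each feature map should have. The sketch below walks the same 36 layer names as net() and predicts (1, H, W, C) for each, assuming stride-1 SAME convolutions keep H and W and each stride-2 SAME pool gives ceil(size / 2). The names BLOCKS and feature_shapes, and the 224x224 input size, are assumptions made for illustration.

```python
import math

# (block index, number of conv layers, output channels) for the VGG-19 conv stack.
BLOCKS = [(1, 2, 64), (2, 2, 128), (3, 4, 256), (4, 4, 512), (5, 4, 512)]

def feature_shapes(height, width):
    """Return {layer name: (1, H, W, C)} for the conv/relu/pool layers used in net()."""
    shapes = {}
    h, w = height, width
    for block, n_conv, channels in BLOCKS:
        for j in range(1, n_conv + 1):
            # 3x3 conv with stride 1 and SAME padding keeps H and W; ReLU keeps the shape.
            shapes['conv%d_%d' % (block, j)] = (1, h, w, channels)
            shapes['relu%d_%d' % (block, j)] = (1, h, w, channels)
        if block < 5:  # the layer tuple in net() stops at relu5_4, so there is no pool5
            h, w = math.ceil(h / 2), math.ceil(w / 2)
            shapes['pool%d' % block] = (1, h, w, channels)
    return shapes

shapes = feature_shapes(224, 224)  # 224x224 is an assumed input size
print(len(shapes), shapes['relu1_1'], shapes['pool4'], shapes['relu5_4'])
```

Comparing these predictions with the printed features.shape values is a quick way to check that the weights were loaded in the right order.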

cwd = os.getcwd()

VGG_PATH = os.path.join(cwd, "data", "imagenet-vgg-verydeep-19.mat")

IMG_PATH = os.path.join(cwd, "data", "cat.jpg")

input_image = imread(IMG_PATH)

shape = (1, input_image.shape[0], input_image.shape[1], input_image.shape[2])

with tf.Session() as sess:

    image = tf.placeholder('float', shape=shape)

    nets, mean_pixel, all_layers = net(VGG_PATH, image)

    # Preprocess the input: subtract the mean pixel and add a batch dimension

    input_image_pre = np.array([preprocess(input_image, mean_pixel)])

    layers = all_layers  # For all layers

    # layers = ('relu2_1', 'relu3_1', 'relu4_1')

    for i, layer in enumerate(layers):

        print("[%d/%d] %s" % (i + 1, len(layers), layer))

        # features: [batch size, H, W, depth]

        features = nets[layer].eval(feed_dict={image: input_image_pre})

        print(" Type of 'features' is ", type(features))

        print(" Shape of 'features' is %s" % (features.shape,))

        # Plot response

        if 1:

            plt.figure(i + 1, figsize=(10, 5))

            plt.matshow(features[0, :, :, 0], cmap=plt.cm.gray, fignum=i + 1)

            plt.title("" + layer)

            plt.colorbar()

            plt.show()

Recurrent neural network (RNN)

Explanatory video: https://www.bilibili.com/video/av24601590/?p=18

Here a recurrent neural network is used to train a model that recognizes the MNIST dataset.
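The key idea is to read each 28x28 image as a sequence of 28 time steps with 28 inputs each (one image row per step), and then to reorder the batch so that it is indexed by step first. A list-based sketch of those two data rearrangements follows; to_sequence and time_major are hypothetical helper names, and the fake pixel values are only there to make the indexing visible.

```python
nsteps, diminput = 28, 28

def to_sequence(flat_image):
    """Split a flat 784-value image into 28 time steps of 28 values (one row per step)."""
    return [flat_image[t * diminput:(t + 1) * diminput] for t in range(nsteps)]

def time_major(batch):
    """Reorder [batch][step][feature] into [step][batch][feature],
    which is what tf.transpose(_X, [1, 0, 2]) does in the article's code."""
    return [[sample[t] for sample in batch] for t in range(nsteps)]

# Two fake "images" whose pixel values are their own index, offset by 1000 per sample.
batch = [to_sequence([k * 1000 + i for i in range(nsteps * diminput)]) for k in range(2)]
steps = time_major(batch)
print(len(steps), len(steps[0]), len(steps[0][0]))  # 28 2 28
print(steps[1][0][:3])  # second row of the first sample: [28, 29, 30]
```

After this reordering, each of the 28 entries holds one time step for the whole batch, which is the form the LSTM is fed in the code of this section.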

# -*- coding: utf-8 -*-

# RNN on the MNIST dataset

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

from tensorflow.contrib import rnn

mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)

testimgs = mnist.test.images

testlabels = mnist.test.labels

n_classes = 10

# Data dimensions: each 28x28 image is read as nsteps rows of diminput pixels

diminput = 28

dimhidden = 128

dimoutput = n_classes

nsteps = 28

weights = {

'hidden': tf.Variable(tf.random_normal([diminput,dimhidden])),

'out': tf.Variable(tf.random_normal([dimhidden, dimoutput]))

}

biases = {

'hidden': tf.Variable(tf.random_normal([dimhidden])),

'out': tf.Variable(tf.random_normal([dimoutput]))

}

def _RNN(_X, _W, _b, _nsteps, _name):

    # 1. Permute input from [batchsize, nsteps, diminput]

    #    => [nsteps, batchsize, diminput]

    _X = tf.transpose(_X, [1, 0, 2])

    # 2. Reshape input to [nsteps*batchsize, diminput]

    _X = tf.reshape(_X, [-1, diminput])

    # 3. Input layer => Hidden layer

    _H = tf.matmul(_X, _W['hidden']) + _b['hidden']

    # 4. Split data into 'nsteps' chunks. The i-th chunk holds time step i for the whole batch.

    # In other words, the hidden-layer weights are shared across all steps, and the sequence is then split back into single steps

    _Hsplit = tf.split(_H, _nsteps, 0)

    # 5. Get LSTM's final output (_LSTM_O) and state (_LSTM_S)

    #    Both _LSTM_O and _LSTM_S consist of 'batchsize' elements

    #    Only _LSTM_O will be used to predict the output.

    with tf.variable_scope(_name) as scope:

        # Variable reuse

        # scope.reuse_variables()

        lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(dimhidden, forget_bias=1.0)

        _LSTM_O, _LSTM_S = rnn.static_rnn(lstm_cell, _Hsplit, dtype=tf.float32)

    # 6. Output

    _O = tf.matmul(_LSTM_O[-1], _W['out']) + _b['out']

    return {

        'X': _X, 'H': _H, 'Hsplit': _Hsplit,

        'LSTM_O': _LSTM_O, 'LSTM_S': _LSTM_S, 'O': _O

    }

print('Network ready')

learning_rate = 0.001

x = tf.placeholder("float", [None, nsteps, diminput])

y = tf.placeholder("float", [None, dimoutput])

keepratio = tf.placeholder(tf.float32)

myrnn = _RNN(x, weights, biases, nsteps, 'basic')

pred = myrnn['O']

cost = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(labels=tf.argmax(y, 1), logits=pred))

optm = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)

_corr = tf.equal(tf.arg_max(pred, 1), tf.arg_max(y, 1))

accr = tf.reduce_mean(tf.cast(_corr, tf.float32))

init = tf.global_variables_initializer()

print("Network Ready")

sess = tf.Session()

sess.run(init)

# Number of training epochs

training_epochs = 5

# Number of samples per mini-batch

batch_size = 16

ntest = mnist.test.images.shape[0]

# Print progress every display_step epochs

display_step = 1

for epoch in range(training_epochs):

    avg_cost = 0

    # num_batch = int(mnist.train.num_examples/batch_size)

    num_batch = 100

    for i in range(num_batch):

        batch_xs, batch_ys = mnist.train.next_batch(batch_size)

        batch_xs = batch_xs.reshape((batch_size, nsteps, diminput))

        sess.run(optm, feed_dict={x: batch_xs, y: batch_ys, keepratio: 0.7})

        feeds = {x: batch_xs, y: batch_ys, keepratio: 1.}

        avg_cost += sess.run(cost, feed_dict=feeds) / num_batch

    # DISPLAY

    if epoch % display_step == 0:

        print("Epoch: %03d/%03d cost: %.9f" % (epoch, training_epochs, avg_cost))

        feeds = {x: batch_xs, y: batch_ys}

        train_acc = sess.run(accr, feed_dict=feeds)

        print("Training accuracy: %.3f" % (train_acc))

        testimgs = testimgs.reshape((ntest, nsteps, diminput))

        feeds = {x: testimgs, y: testlabels}

        test_acc = sess.run(accr, feed_dict=feeds)

        print("Test accuracy: %.3f" % test_acc)

print('Done!')

Training log:

Epoch: 000/005 cost: 1.725976922

Training accuracy: 0.500

Test accuracy: 0.466

Epoch: 001/005 cost: 1.225094625

Training accuracy: 0.688

Test accuracy: 0.498

Epoch: 002/005 cost: 1.046114697

Training accuracy: 0.625

Test accuracy: 0.571

Epoch: 003/005 cost: 0.927317887

Training accuracy: 0.500

Test accuracy: 0.631

Epoch: 004/005 cost: 0.825420048

Training accuracy: 0.688

Test accuracy: 0.637

Done!

A captcha recognition demo using tensorflow and captcha
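The demo treats a 4-digit captcha as four independent 10-way classifications packed into one 40-element label vector. A minimal sketch of that label encoding, using hypothetical helpers encode and decode rather than the article's own text2vec and vec2text:

```python
MAX_CAPTCHA = 4
CHAR_SET = '0123456789'

def encode(text):
    """Multi-hot vector: character position i owns slots [i*10, i*10 + 10)."""
    vec = [0] * (MAX_CAPTCHA * len(CHAR_SET))
    for i, ch in enumerate(text):
        vec[i * len(CHAR_SET) + CHAR_SET.index(ch)] = 1
    return vec

def decode(vec):
    """Read back one character per position by taking the largest slot in each group."""
    n = len(CHAR_SET)
    chars = []
    for i in range(MAX_CAPTCHA):
        group = vec[i * n:(i + 1) * n]
        chars.append(CHAR_SET[group.index(max(group))])
    return ''.join(chars)

vec = encode('7024')
print(sum(vec), vec.index(1), decode(vec))
```

Decoding by per-group maximum also works on raw network outputs, which is why the prediction code reshapes the output to [-1, MAX_CAPTCHA, CHAR_SET_LEN] and takes argmax over the last axis.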

# -*- coding: utf-8 -*-

import tensorflow as tf

from captcha.image import ImageCaptcha

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import random

import time

number = ['0','1','2','3','4','5','6','7','8','9']

alphabet = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm', 'n', 'o', 'p', 'q', 'r', 's', 't', 'u', 'v', 'w', 'x', 'y', 'z']

ALPHABET = ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L', 'M', 'N', 'O', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z']

IMAGE_HEIGHT = 60

IMAGE_WIDTH = 160

MAX_CAPTCHA = 4

# Convert a colour image to grayscale (colour does not help captcha recognition)

def convert2gray(img):

    if len(img.shape) > 2:

        gray = np.mean(img, -1)

        # The conversion above is fast; the standard conversion is:

        # r, g, b = img[:,:,0], img[:,:,1], img[:,:,2]

        # gray = 0.2989 * r + 0.5870 * g + 0.1140 * b

        return gray

    else:

        return img

# Convert text to a vector

char_set = number

CHAR_SET_LEN = len(char_set)

def text2vec(text):

    text_len = len(text)

    if text_len > MAX_CAPTCHA:

        raise ValueError('A captcha has at most 4 characters')

    vector = np.zeros(MAX_CAPTCHA * CHAR_SET_LEN)

    def char2pos(c):

        # Map '0'-'9' to 0-9, 'A'-'Z' to 10-35, 'a'-'z' to 36-61 and '_' to 62

        if c == '_':

            k = 62

            return k

        k = ord(c) - 48

        if k > 9:

            k = ord(c) - 55

            if k > 35:

                k = ord(c) - 61

                if k > 61:

                    raise ValueError('No Map')

        return k

    for i, c in enumerate(text):

        idx = i * CHAR_SET_LEN + char2pos(c)

        vector[idx] = 1

    return vector

# Convert a vector back to text

def vec2text(vec):

    char_pos = vec.nonzero()[0]

    text = []

    for i, c in enumerate(char_pos):

        char_at_pos = i  # c/63

        char_idx = c % CHAR_SET_LEN

        if char_idx < 10:

            char_code = char_idx + ord('0')

        elif char_idx < 36:

            char_code = char_idx - 10 + ord('A')

        elif char_idx < 62:

            char_code = char_idx - 36 + ord('a')

        elif char_idx == 62:

            char_code = ord('_')

        else:

            raise ValueError('error')

        text.append(chr(char_code))

    return "".join(text)

def random_captcha_text(char_set=number, captcha_size=4):

    captcha_text = []

    for i in range(captcha_size):

        c = random.choice(char_set)

        captcha_text.append(c)

    return captcha_text

def gen_captcha_text_and_image():

    image = ImageCaptcha()

    # Join the list of characters into a string

    captcha_text = random_captcha_text()

    captcha_text = ''.join(captcha_text)

    captcha = image.generate(captcha_text)

    captcha_image = Image.open(captcha)

    captcha_image = np.array(captcha_image)

    return captcha_text, captcha_image

# Generate one training batch

def get_next_batch(batch_size=128):

    batch_x = np.zeros([batch_size, IMAGE_HEIGHT * IMAGE_WIDTH])

    batch_y = np.zeros([batch_size, MAX_CAPTCHA * CHAR_SET_LEN])

    # Occasionally the generated image does not have shape (60, 160, 3), so retry until it does

    def wrap_gen_captcha_text_and_image():

        while True:

            text, image = gen_captcha_text_and_image()

            if image.shape == (60, 160, 3):

                return text, image

    for i in range(batch_size):

        text, image = wrap_gen_captcha_text_and_image()

        image = convert2gray(image)

        batch_x[i, :] = image.flatten() / 255  # (image.flatten() - 128) / 128 would give zero mean

        batch_y[i, :] = text2vec(text)

    return batch_x, batch_y

X = tf.placeholder(tf.float32, [None, IMAGE_HEIGHT * IMAGE_WIDTH])

Y = tf.placeholder(tf.float32, [None, MAX_CAPTCHA * CHAR_SET_LEN])

keep_prob = tf.placeholder(tf.float32) # dropout

# Define the CNN

def crack_captcha_cnn(w_alpha=0.01, b_alpha=0.1):

    x = tf.reshape(X, shape=[-1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])

    w_c1 = tf.Variable(w_alpha * tf.random_normal([3, 3, 1, 32]))

    b_c1 = tf.Variable(b_alpha * tf.random_normal([32]))

    conv1 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, w_c1, strides=[1, 1, 1, 1], padding='SAME'), b_c1))

    conv1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    conv1 = tf.nn.dropout(conv1, keep_prob)

    w_c2 = tf.Variable(w_alpha * tf.random_normal([3, 3, 32, 64]))

    b_c2 = tf.Variable(b_alpha * tf.random_normal([64]))

    conv2 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv1, w_c2, strides=[1, 1, 1, 1], padding='SAME'), b_c2))

    conv2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    conv2 = tf.nn.dropout(conv2, keep_prob)

    w_c3 = tf.Variable(w_alpha * tf.random_normal([3, 3, 64, 64]))

    b_c3 = tf.Variable(b_alpha * tf.random_normal([64]))

    conv3 = tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(conv2, w_c3, strides=[1, 1, 1, 1], padding='SAME'), b_c3))

    conv3 = tf.nn.max_pool(conv3, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    conv3 = tf.nn.dropout(conv3, keep_prob)

    # Fully connected layer

    w_d = tf.Variable(w_alpha * tf.random_normal([8 * 32 * 40, 1024]))

    b_d = tf.Variable(b_alpha * tf.random_normal([1024]))

    dense = tf.reshape(conv3, [-1, w_d.get_shape().as_list()[0]])

    dense = tf.nn.relu(tf.add(tf.matmul(dense, w_d), b_d))

    dense = tf.nn.dropout(dense, keep_prob)

    w_out = tf.Variable(w_alpha * tf.random_normal([1024, MAX_CAPTCHA * CHAR_SET_LEN]))

    b_out = tf.Variable(b_alpha * tf.random_normal([MAX_CAPTCHA * CHAR_SET_LEN]))

    out = tf.add(tf.matmul(dense, w_out), b_out)

    # out = tf.nn.softmax(out)

    return out

# Training

def train_crack_captcha_cnn():

    output = crack_captcha_cnn()

    loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=output, labels=Y))

    optimizer = tf.train.AdamOptimizer(learning_rate=0.001).minimize(loss)

    predict = tf.reshape(output, [-1, MAX_CAPTCHA, CHAR_SET_LEN])

    max_idx_p = tf.argmax(predict, 2)

    max_idx_l = tf.argmax(tf.reshape(Y, [-1, MAX_CAPTCHA, CHAR_SET_LEN]), 2)

    correct_pred = tf.equal(max_idx_p, max_idx_l)

    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    saver = tf.train.Saver()

    with tf.Session() as sess:

        sess.run(tf.global_variables_initializer())

        step = 0

        while True:

            batch_x, batch_y = get_next_batch(64)

            _, loss_ = sess.run([optimizer, loss], feed_dict={X: batch_x, Y: batch_y, keep_prob: 0.75})

            print(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())), step, loss_)

            # Compute the accuracy once every 100 steps

            if step % 100 == 0:

                batch_x_test, batch_y_test = get_next_batch(100)

                acc = sess.run(accuracy, feed_dict={X: batch_x_test, Y: batch_y_test, keep_prob: 1.})

                # Once the accuracy exceeds 85%, save the model and stop training

                if acc > 0.85:

                    saver.save(sess, "./model/crack_capcha.model", global_step=step)

                    break

            step += 1

if __name__ == '__main__':

    # train == 0: train the model; train == 1: load a saved model and predict

    train = 1

    if train == 0:

        train_crack_captcha_cnn()

    if train == 1:

        output = crack_captcha_cnn()

        saver = tf.train.Saver()

        sess = tf.Session()

        saver.restore(sess, "./model/crack_capcha.model-1300")

        # Build the prediction op once, outside the loop, so the graph does not grow on every iteration

        predict = tf.argmax(tf.reshape(output, [-1, MAX_CAPTCHA, CHAR_SET_LEN]), 2)

        while True:

            text, image = gen_captcha_text_and_image()

            image = convert2gray(image)

            image = image.flatten() / 255

            text_list = sess.run(predict, feed_dict={X: [image], keep_prob: 1})

            predict_text = text_list[0].tolist()

            vector = np.zeros(MAX_CAPTCHA * CHAR_SET_LEN)

            for i, t in enumerate(predict_text):

                vector[i * CHAR_SET_LEN + t] = 1

            print("Correct: {} Predicted: {}".format(text, vec2text(vector)))