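Full code

The code builds on the code from TensorFlow入门_2_mnist数据集训练与相关函数解释 (TensorFlow Basics 2: training on the MNIST dataset and explanations of related functions), with a few changes that mainly concern name scopes.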

    from tensorflow.examples.tutorials.mnist import input_data
    import numpy as np
    import tensorflow as tf
    import matplotlib.pyplot as plt

    # Raw string so the backslashes in the Windows path are kept literally
    mnist = input_data.read_data_sets(r'E:\img\mnist', one_hot=True)
    # Number of training examples
    n_batch = mnist.train.num_examples
    print(n_batch)

    with tf.name_scope("input"):
        x = tf.placeholder(tf.float32, [None, 784], name='x-input')
        y = tf.placeholder(tf.float32, [None, 10], name="y-input")

    with tf.name_scope('layer'):
        with tf.name_scope('wights'):
            W = tf.Variable(tf.zeros([784, 10]))
        with tf.name_scope('biases'):
            b = tf.Variable(tf.zeros([10]))
        with tf.name_scope('wx_b'):
            wx_b = tf.matmul(x, W) + b
        with tf.name_scope("prediction"):
            prediction = tf.nn.softmax(tf.matmul(x, W) + b)

    with tf.name_scope('loss'):
        # softmax_cross_entropy_with_logits_v2 applies softmax internally,
        # so it is given the raw logits wx_b rather than the softmax output
        loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y, logits=wx_b))

    with tf.name_scope('train_step'):
        train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

    with tf.name_scope('results'):
        with tf.name_scope('prediction_sets'):
            c_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
        with tf.name_scope('accuracy'):
            # Cast the boolean vector to float32 and take the mean, which gives the accuracy
            accuracy = tf.reduce_mean(tf.cast(c_prediction, tf.float32))

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        # Write the graph definition to the logs/ directory for TensorBoard
        writer = tf.summary.FileWriter('logs/', sess.graph)
        for epoch in range(300):
            batch_x, batch_y = mnist.train.next_batch(100)
            sess.run(train_step, feed_dict={x: batch_x, y: batch_y})
            acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
            print("Iter {}, Testing Acc : {}".format(epoch, acc))

A first look at summary

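tf.summary provides Operations that monitor the values of Tensors in the network. These operations are meant for inspection only and do not affect the data flow itself.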

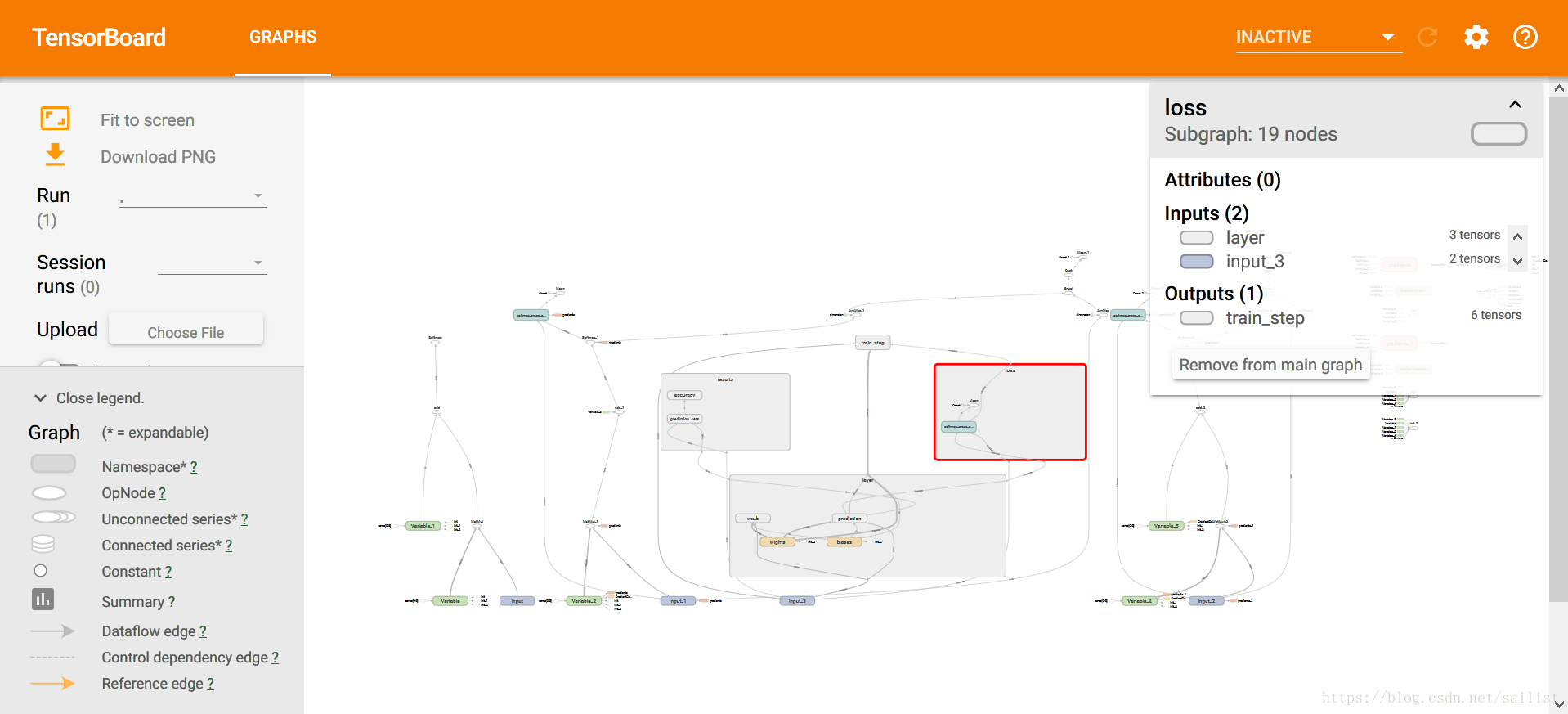

Viewing the graph

    with tf.Session() as sess:
        sess.run(init)
        writer = tf.summary.FileWriter('logs/', sess.graph)
        for epoch in range(300):
            batch_x, batch_y = mnist.train.next_batch(100)
            sess.run(train_step, feed_dict={x: batch_x, y: batch_y})
            acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
            print("Iter {}, Testing Acc : {}".format(epoch, acc))

Once the session is opened at the end of the script, the FileWriter line is executed.

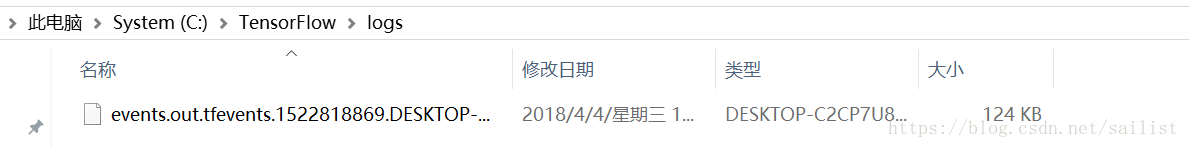

writer = tf.summary.FileWriter('logs/', sess.graph) writes a file into the directory given by its first argument (here logs/, relative to the current working directory).

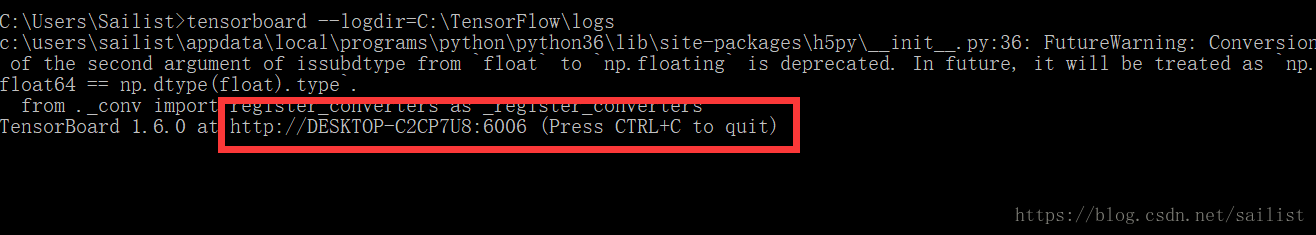
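To check from Python that the file was actually written, a small helper along these lines may be useful. It is only a sketch: the function name find_event_files is made up for illustration, and it assumes the usual TensorFlow naming in which event files start with events.out.tfevents. followed by a timestamp and host name.

```python
from pathlib import Path


def find_event_files(logdir):
    """Return the event files directly inside logdir, sorted by name.

    Hypothetical helper; assumes file names start with 'events.out.tfevents.'.
    """
    return sorted(Path(logdir).glob("events.out.tfevents.*"))


# List whatever event files exist in the log directory used in this post.
for path in find_event_files("logs/"):
    print(path.name, path.stat().st_size, "bytes")
```

If the loop prints nothing, the script has probably been run from a different working directory than the one containing logs/.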

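Open a command prompt and run

    tensorboard --logdir=[the log directory given above]

The command prints a link; open it in a browser. Chrome or Firefox is recommended; in my own test, the Sogou browser could not display the page correctly.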
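If you would rather start TensorBoard from a script than type the command by hand, the argument list can be built as in the sketch below. The function name tensorboard_command is an assumption made for illustration. The option must be written with two ASCII hyphens (--logdir); a typographic dash pasted from a web page will not be recognized.

```python
import shlex


def tensorboard_command(logdir):
    """Build the argument list for launching TensorBoard on logdir.

    Hypothetical helper; it only builds the command and does not run it.
    """
    return ["tensorboard", "--logdir", logdir]


cmd = tensorboard_command("logs/")
# Show the command as it would be typed in a shell.
print(shlex.join(cmd))
```

The resulting list can be passed to subprocess.run(cmd), assuming the tensorboard executable is on the PATH.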

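Hold the left mouse button to pan and use the scroll wheel to zoom. Right-clicking a node lets you pull it out as a separate node, which makes it easier to inspect. Double-clicking a rounded-rectangle node expands it to show its details. That covers the basic usage.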

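Name scopes

Find the layer node and double-click it to expand it. The nested nodes you see correspond exactly to the code written earlier: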

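    with tf.name_scope('layer'):
        with tf.name_scope('wights'):
            W = tf.Variable(tf.zeros([784, 10]))
        with tf.name_scope('biases'):
            b = tf.Variable(tf.zeros([10]))
        with tf.name_scope('wx_b'):
            wx_b = tf.matmul(x, W) + b
        with tf.name_scope("prediction"):
            prediction = tf.nn.softmax(tf.matmul(x, W) + b)

The purpose of a name scope is to group variables and operations. In a network that is even slightly larger, the generated graph can become so complex without this grouping that its structure is impossible to follow. When defining network layers in the future, you should proactively assign a name scope to each group of related variables.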