1. Set up the Kafka cluster

2. Set up an Alibaba Cloud RDS database

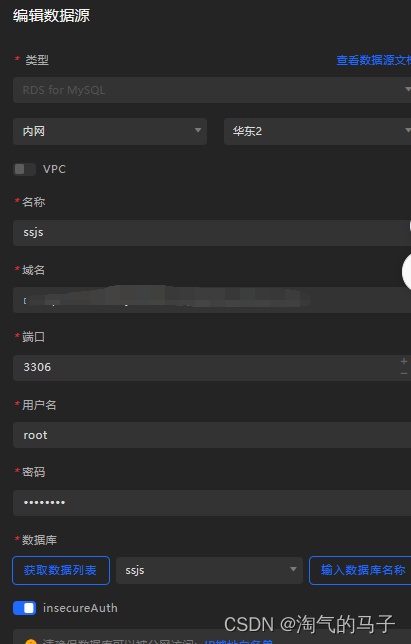

3. Connect DataV to the Alibaba Cloud MySQL database

4. Write the Kafka producer code
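The producer listing re-scans every CSV file once per second and sends only the rows it has not sent before. That bookkeeping can be sketched on its own, without Kafka or opencsv. The class name `IncrementalCsvSketch`, the method `unsentRows`, and the sample rows are made up for illustration and are not part of the original project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class IncrementalCsvSketch {

    // Returns the data rows that have not been sent yet.
    // Assumes lines.get(0) is the header and alreadySent counts data rows only.
    public static List<String> unsentRows(List<String> lines, int alreadySent) {
        List<String> result = new ArrayList<>();
        for (int i = 1 + alreadySent; i < lines.size(); i++) {
            result.add(lines.get(i));
        }
        return result;
    }

    public static void main(String[] args) {
        // Made-up file contents: one header line and two data rows.
        List<String> file = new ArrayList<>(Arrays.asList("header", "row1", "row2"));
        int sent = 0;

        List<String> firstPass = unsentRows(file, sent);
        sent += firstPass.size();
        System.out.println(firstPass); // prints [row1, row2]

        file.add("row3"); // the file grows between passes
        List<String> secondPass = unsentRows(file, sent);
        sent += secondPass.size();
        System.out.println(secondPass); // prints [row3]
    }
}
```

Keeping one counter per file, as the listing does with its `row` list, is enough as long as files are only appended to and the directory listing order stays stable between passes.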

package com.kafka;

import com.opencsv.CSVReader;

import com.opencsv.exceptions.CsvValidationException;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import java.io.*;

import java.util.ArrayList;

import java.util.Properties;

public class KafkaProducerTest2 {

public static void main(String[] args) throws FileNotFoundException, UnsupportedEncodingException {

Properties props = new Properties();

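// 1. Addresses (host:port) of the Kafka cluster brokers

props.put("bootstrap.servers", "kafka01:9092,kafka02:9093,kafka03:9094");

// 2. Wait for acknowledgement from all in-sync replicas

props.put("acks", "all");

// 3. Maximum number of send retries (0 disables retries)

props.put("retries", 0);

// 4. Maximum size of a record batch, in bytes

props.put("batch.size", 16384);

// 5. Time to wait for more records before sending a batch, in milliseconds

props.put("linger.ms", 1);

// 6. Total memory available for buffering unsent records, in bytes

props.put("buffer.memory", 33554432);

// 7. Key serializer

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// 8. Value serializer

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// 9. Produce the data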

KafkaProducer<String, String> producer = new KafkaProducer<String, String>(props);

// Read the CSV files

String directoryPath = "C:\\Users\\lenovo\\Desktop\\实时计算\\dist";

File directory = new File(directoryPath);

ArrayList<Integer> row = new ArrayList<>(); // number of data rows already sent, per CSV file

int sum=1;

while (true){

int csv=0; // number of CSV files seen in this pass, i.e. the 1-based index of the current file

for (File file : directory.listFiles()) {

if (file.getName().endsWith(".csv")){

csv++;

CSVReader reader = new CSVReader(new InputStreamReader(new FileInputStream(file), "GBK"));

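String[] stock; // fields of the current row

int now; // number of leading lines to skip (header plus rows already sent)

int read=1; // counter of lines skipped so far

try {

now=row.get(csv-1)+1; // rows already sent for this file, plus the header line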

}

catch (IndexOutOfBoundsException e){

now=1;

}

try {

int r=0;

System.out.println("New rows:");

while ((stock = reader.readNext()) != null) {

if (now==1){

now--; // the first line is the header

continue;

}

// Process each row: skip the ones already sent, send the rest

if (read<=now){

read++;

}

else {

r++;

// Re-join the fields into one comma-separated line

String value = String.join(",", stock);

// Send the row to Kafka

producer.send(new ProducerRecord<String, String>("topic3",value));

System.out.println(value);

}

}

try{

row.set(csv-1,r+row.get(csv-1));

}

catch (IndexOutOfBoundsException e){

row.add(r);

}

}

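catch (IOException e){

System.out.println("The file does not exist or could not be opened");

producer.close(); // close the Kafka producer

return; // a closed producer cannot send again, so stop here

}

catch (CsvValidationException e){

System.out.println("The CSV file is malformed: a row has an unexpected number of columns or contains invalid data");

producer.close(); // close the Kafka producer

return; // a closed producer cannot send again, so stop here

}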

System.out.println("Pass "+sum+": finished reading "+file+'\n'+"rows sent so far: "+row.get(csv-1));

System.out.println("------------------------------------------");

}

}

try {

// Pause the current thread for one second

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

if (csv==0)

System.out.println("Pass "+sum+": no data");

sum++;

}

}

}

5. Write the Flink consumer code
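Both the consumer and the sink split each message on commas and read fields by position. The sketch below gathers that parsing in one place and rejects lines that are too short or non-numeric instead of letting an exception fail the job. The field positions (2 = stock ID, 3 = price, 4 = volume, 5 = side) are taken from the sink listing in this document. The class `TradeRecordSketch` and the sample line are hypothetical, and the sample uses ASCII stand-ins for the real field values.

```java
public class TradeRecordSketch {
    public final String stockId;
    public final double price;
    public final long volume;
    public final String side;

    private TradeRecordSketch(String stockId, double price, long volume, String side) {
        this.stockId = stockId;
        this.price = price;
        this.volume = volume;
        this.side = side;
    }

    // Returns null when the line has fewer than 8 fields
    // or when the price or volume is not a number.
    public static TradeRecordSketch parse(String line) {
        String[] f = line.split(",", -1); // -1 keeps trailing empty fields
        if (f.length < 8) {
            return null;
        }
        try {
            return new TradeRecordSketch(
                    f[2], Double.parseDouble(f[3]), Long.parseLong(f[4]), f[5]);
        } catch (NumberFormatException e) {
            return null;
        }
    }

    // Trade amount = price * volume, as computed in the sink.
    public double amount() {
        return price * volume;
    }

    public static void main(String[] args) {
        // Fields 0 and 1 are placeholders; their meaning is not shown in the source.
        TradeRecordSketch ok = parse("t0,x,600000,10.5,200,BUY,Shanghai,BrokerA");
        System.out.println(ok.stockId + " " + ok.amount()); // prints 600000 2100.0

        System.out.println(parse("t0,x,600000,abc,200,BUY,Shanghai,BrokerA")); // prints null
    }
}
```

In a Flink job, a `null` result from such a parser could be dropped with a filter step before the sink, so one malformed row would not stop the stream.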

package com.kafka;

import com.mysql.MySQLSink;

import com.mysql.MySQLUpdater;

import com.mysql.time;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.util.Properties;

import java.util.concurrent.atomic.AtomicInteger;

import java.util.concurrent.atomic.AtomicLong;

public class FlinkKafkaConsumer1{

public static void main(String[] args) throws Exception {

// 1. Initialize the Flink environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(3);

// 2. Prepare the Kafka configuration

Properties properties = new Properties();

properties.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,

"kafka01:9092,kafka02:9093,kafka03:9094");

// 3. Create the Kafka consumer

FlinkKafkaConsumer<String> kafkaConsumer = new FlinkKafkaConsumer<>(

"topic3",

new SimpleStringSchema(),

properties

);

// 4. Attach the consumer to a Flink stream and process each message

env.addSource(kafkaConsumer)

.map(new MapFunction<String, String>() {

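// Running count of trades (each parallel subtask keeps its own copy)

private final AtomicInteger count = new AtomicInteger(0);

// Running total of trade volume (each parallel subtask keeps its own copy)

private final AtomicLong totalAmount = new AtomicLong(0);

// Start time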

private final long startTime = System.currentTimeMillis();

@Override

public String map(String value) throws Exception {

System.out.printf("value=%s%n", value);

String[] values = value.split(",");

int tradeCount = 1;

long tradeAmount = Long.parseLong(values[4]);

totalAmount.addAndGet(tradeAmount);

count.addAndGet(tradeCount);

long endTime = System.currentTimeMillis();

System.out.printf("tradeCount=%d,totalAmount=%d%n",count.get(),totalAmount.get());

System.out.printf("total_time=%d ms %n",endTime-startTime);

// MySQLUpdater.updateTradeCount(count.get());

return value;

}

})

// .print();

.addSink(new time());

// .addSink(new MySQLSink());

// 5. Run the job

env.execute();

}

}

6. Save the data to the database with flink-jdbc
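The sink listing keeps two kinds of counters: cumulative totals, and "per-minute" values that are cleared once more than 60 seconds have passed since the last reset. A minimal sketch of that reset logic follows. The class `MinuteCounterSketch` is hypothetical, and the clock is passed in as a parameter (an assumption made here so the behaviour can be checked without waiting).

```java
public class MinuteCounterSketch {
    private static final long WINDOW_MILLIS = 60_000;

    private long total = 0;   // cumulative value, never reset
    private long minute = 0;  // value accumulated since the last reset
    private long lastReset;   // time of the last reset, in milliseconds

    public MinuteCounterSketch(long startMillis) {
        this.lastReset = startMillis;
    }

    // Adds one observation; clears the per-minute value first
    // when more than 60 seconds have passed since the last reset.
    public void add(long amount, long nowMillis) {
        total += amount;
        if (nowMillis - lastReset > WINDOW_MILLIS) {
            minute = 0;
            lastReset = nowMillis;
        }
        minute += amount;
    }

    public long total() {
        return total;
    }

    public long minute() {
        return minute;
    }

    public static void main(String[] args) {
        MinuteCounterSketch c = new MinuteCounterSketch(0L);
        c.add(100, 1_000L);
        c.add(50, 30_000L);
        c.add(70, 61_000L); // more than 60 s after the start: per-minute value resets
        System.out.println(c.total() + " " + c.minute()); // prints 220 70
    }
}
```

This is a window that restarts from zero rather than a sliding one, so the displayed per-minute figure drops sharply right after each reset. A true "last 60 seconds" figure would need timestamped buckets or Flink's own windowing.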

package com.mysql;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.atomic.AtomicLong;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.ReentrantLock;

public class time extends RichSinkFunction<String> {

private transient Connection connection;

private PreparedStatement preparedStatement;

private PreparedStatement updateLocal;

private PreparedStatement updateStmt;

private final String dbUrl = "<database URL>";

private final String username = "<username>";

private final String password = "<database password>";

private int trade = 0;

private int su = 0;

private AtomicLong totalTradeCount = new AtomicLong(0);

private AtomicLong totalTradeAmount = new AtomicLong(0);

private AtomicLong totalBuyAmount = new AtomicLong(0);

private AtomicLong totalSellAmount = new AtomicLong(0);

private AtomicLong minuteTradeCount = new AtomicLong(0);

private AtomicLong minuteTradeAmount = new AtomicLong(0);

private AtomicLong minuteBuyAmount = new AtomicLong(0);

private AtomicLong minuteSellAmount = new AtomicLong(0);

private AtomicLong guolianTradeVolume = new AtomicLong(0);

private AtomicLong tongdaxinTradeVolume = new AtomicLong(0);

private AtomicLong changchengTradeVolume = new AtomicLong(0);

private AtomicLong guotaijunanTradeVolume = new AtomicLong(0);

private AtomicLong yinheTradeVolume = new AtomicLong(0);

private AtomicLong tonghuashunTradeVolume = new AtomicLong(0);

private AtomicLong numRecords = new AtomicLong(0);

private long lastUpdateTime = System.currentTimeMillis();

private Map<String, StockTradeInfo> stockTradeInfoMap = new HashMap<>();

private Map<String, values> valueMAP = new HashMap<>();

private final ReentrantLock lock = new ReentrantLock();

private static class values {

private AtomicLong value = new AtomicLong(0);

}

// Inner class that holds per-stock trade statistics

private static class StockTradeInfo {

AtomicLong totalTradeAmount = new AtomicLong(0);

AtomicLong minuteTradeAmount = new AtomicLong(0);

AtomicLong totalBuyAmount = new AtomicLong(0);

AtomicLong totalSellAmount = new AtomicLong(0);

long lastUpdateTime = System.currentTimeMillis();

}

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

// Open the database connection

connection = DriverManager.getConnection(dbUrl, username, password);

String sql = "UPDATE tradeCount SET "

+ "trade = ?, "

+ "totalTradeCount = ?, "

+ "totalTradeAmount = ?, "

+ "totalBuyAmount = ?, "

+ "totalSellAmount = ?, "

+ "minuteTradeCount = ?, "

+ "minuteTradeAmount = ?, "

+ "minuteBuyAmount = ?, "

+ "minuteSellAmount = ?, "

+ "guolianTradeVolume = ?, "

+ "tongdaxinTradeVolume = ?, "

+ "changchengTradeVolume = ?, "

+ "guotaijunanTradeVolume = ?, "

+ "yinheTradeVolume = ?, "

+ "tonghuashunTradeVolume = ? ,"

+ "value = ? "

+ "WHERE id = '股票' ";

preparedStatement = connection.prepareStatement(sql);

String updateSql = "UPDATE tradeCount SET totalTradeAmount = ?, minuteTradeAmount = ?, totalBuyAmount = ?,totalSellAmount = ? WHERE id = ?";

updateStmt = connection.prepareStatement(updateSql);

String Sql = "UPDATE tradeCount SET value= ? WHERE id = ?";

updateLocal = connection.prepareStatement(Sql);

Thread thread = new Thread(() -> {

while (true) {

try {

Thread.sleep(1000);

lock.lock();

try {

if (trade > 0) {

update();

su=0;

}

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

} catch (InterruptedException e) {

e.printStackTrace();

}

}

});

thread.setDaemon(true); // run as a daemon thread

thread.start();

}

@Override

public void invoke(String value, Context context) throws Exception {

lock.lock();

try {

su++;

trade++;

String[] values = value.split(",");

String stockId = values[2]; // values[2] is the stock ID

String local = values[6];

long tradeCount = Long.parseLong(values[4]);

double tradePrice = Double.parseDouble(values[3]);

double tradeAmount = tradeCount * tradePrice;

// Update the cumulative totals

totalTradeCount.addAndGet(tradeCount);

totalTradeAmount.addAndGet((long)tradeAmount);

// Update the totals for the current one-minute window

long currentTime = System.currentTimeMillis();

if (currentTime - lastUpdateTime > 60000) {

minuteTradeCount.set(0);

minuteTradeAmount.set(0);

minuteBuyAmount.set(0);

minuteSellAmount.set(0);

lastUpdateTime = currentTime;

}

minuteTradeCount.addAndGet(tradeCount);

minuteTradeAmount.addAndGet((long)tradeAmount);

// Update the buy or sell totals, depending on the trade side

if (values[5].equals("买入")) {

totalBuyAmount.addAndGet(tradeCount);

minuteBuyAmount.addAndGet(tradeCount);

} else if (values[5].equals("卖出")) {

totalSellAmount.addAndGet(tradeCount);

minuteSellAmount.addAndGet(tradeCount);

}

// Update the per-broker trade volume

if (values[7].equals("国联证券")) {

guolianTradeVolume.addAndGet(tradeCount);

}

else if (values[7].equals("通达信")) {

tongdaxinTradeVolume.addAndGet(tradeCount);

}

else if (values[7].equals("长城证券")) {

changchengTradeVolume.addAndGet(tradeCount);

}

else if (values[7].equals("国泰君安证券")) {

guotaijunanTradeVolume.addAndGet(tradeCount);

}

else if (values[7].equals("银河证券")) {

yinheTradeVolume.addAndGet(tradeCount);

}

else if (values[7].equals("同花顺")) {

tonghuashunTradeVolume.addAndGet(tradeCount);

}

// Update the map (per-region) data

values v = valueMAP.computeIfAbsent(local, k -> new values());

v.value.addAndGet(tradeCount);

// Update the per-stock data

StockTradeInfo stockTradeInfo = stockTradeInfoMap.computeIfAbsent(stockId, k -> new StockTradeInfo());

// Update the total trade amount

stockTradeInfo.totalTradeAmount.addAndGet((long) tradeAmount);

// Update the trade amount for the current one-minute window

long Time = System.currentTimeMillis();

if (Time - stockTradeInfo.lastUpdateTime > 60000) {

stockTradeInfo.minuteTradeAmount.set(0);

stockTradeInfo.lastUpdateTime = Time;

}

stockTradeInfo.minuteTradeAmount.addAndGet((long) tradeAmount);

if (values[5].equals("买入")) {

stockTradeInfo.totalBuyAmount.addAndGet(tradeCount);

} else if (values[5].equals("卖出")) {

stockTradeInfo.totalSellAmount.addAndGet(tradeCount);

}

} finally {

lock.unlock();

}

}

private void update() throws Exception {

// Update the summary row

preparedStatement.setInt(1, trade);

preparedStatement.setLong(2, totalTradeCount.get());

preparedStatement.setLong(3, totalTradeAmount.get());

preparedStatement.setLong(4, totalBuyAmount.get());

preparedStatement.setLong(5, totalSellAmount.get());

preparedStatement.setLong(6, minuteTradeCount.get());

preparedStatement.setLong(7, minuteTradeAmount.get());

preparedStatement.setLong(8, minuteBuyAmount.get());

preparedStatement.setLong(9, minuteSellAmount.get());

preparedStatement.setLong(10, guolianTradeVolume.get());

preparedStatement.setLong(11, tongdaxinTradeVolume.get());

preparedStatement.setLong(12, changchengTradeVolume.get());

preparedStatement.setLong(13, guotaijunanTradeVolume.get());

preparedStatement.setLong(14, yinheTradeVolume.get());

preparedStatement.setLong(15, tonghuashunTradeVolume.get());

preparedStatement.setLong(16, su);

preparedStatement.executeUpdate();

// Update the per-stock rows

for (Map.Entry<String, StockTradeInfo> entry : stockTradeInfoMap.entrySet()) {

String stockId = entry.getKey();

StockTradeInfo stockTradeInfo = entry.getValue();

// Set the per-stock values

updateStmt.setLong(1, stockTradeInfo.totalTradeAmount.get());

updateStmt.setLong(2, stockTradeInfo.minuteTradeAmount.get());

updateStmt.setLong(3, stockTradeInfo.totalBuyAmount.get());

updateStmt.setLong(4, stockTradeInfo.totalSellAmount.get());

updateStmt.setString(5, stockId);

updateStmt.executeUpdate();

}

// Update the map (per-region) rows

for (Map.Entry<String, values> en : valueMAP.entrySet()) {

String local = en.getKey();

values v = en.getValue();

updateLocal.setLong(1, v.value.get());

updateLocal.setString(2, local);

updateLocal.executeUpdate();

}

}

@Override

public void close() throws Exception {

super.close();

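// Close all statements, then the connection

if (preparedStatement != null) {

preparedStatement.close();

}

if (updateStmt != null) {

updateStmt.close();

}

if (updateLocal != null) {

updateLocal.close();

}

if (connection != null) {

connection.close();

}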

}

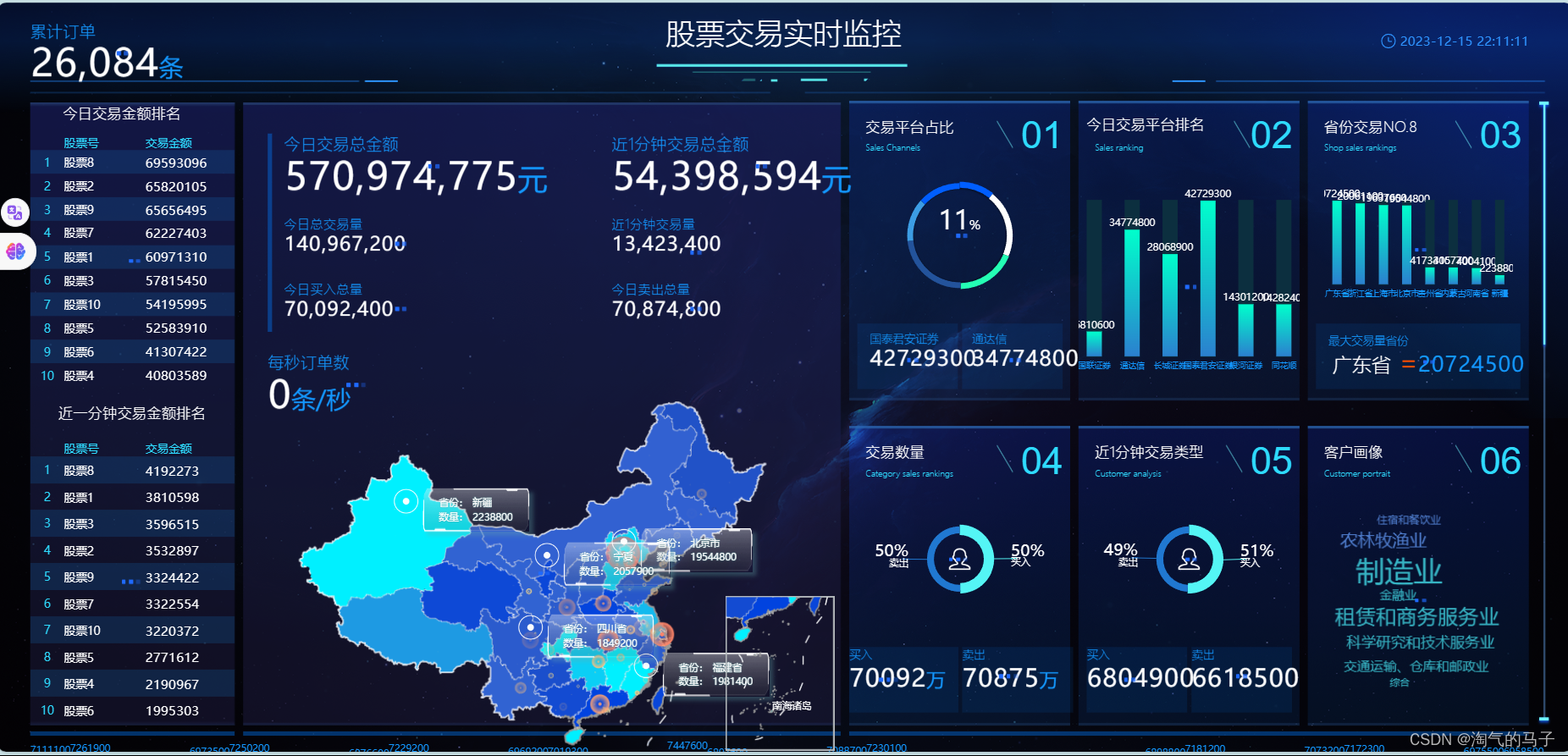

}7.效果展示