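Introduction

I recently built an image deraining project in Python, using PyTorch as the deep learning framework. The deraining results are good; see the demo video for details.

Image deraining (GAN, OpenCV, PyTorch)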

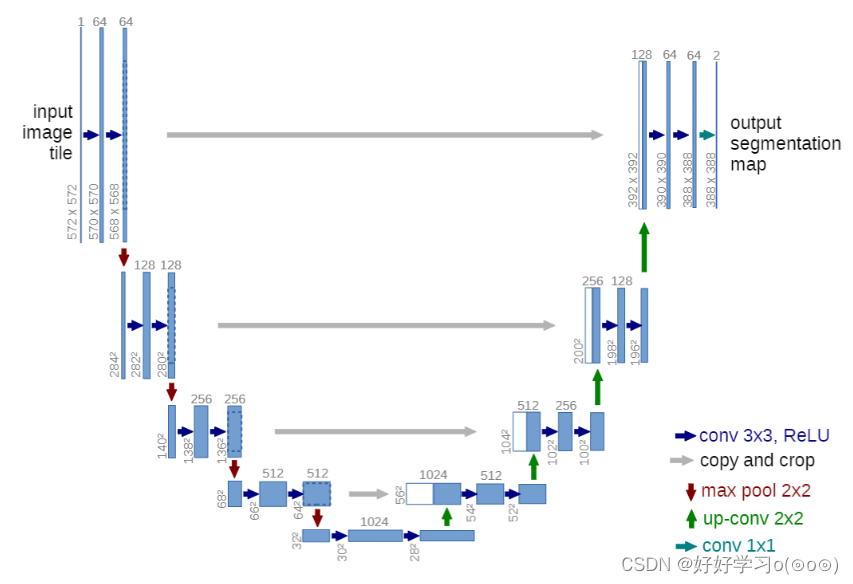

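Network model

The deraining network uses a U-Net architecture. The structure of the model is shown in the figure below:

The code is as follows: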

```python
import torch
import torch.nn as nn
from torchsummary import summary
from torchvision import models
from torchvision.models.feature_extraction import create_feature_extractor
import torch.nn.functional as F
from torchstat import stat


class Resnet18(nn.Module):
    """ResNet-18 encoder that returns four intermediate feature maps."""

    def __init__(self):
        super(Resnet18, self).__init__()
        self.resnet = models.resnet18(pretrained=False)
        # self.resnet = create_feature_extractor(self.resnet, {'relu': 'feat320', 'layer1': 'feat160', 'layer2': 'feat80',
        #                                                      'layer3': 'feat40'})

    def forward(self, x):
        # Run the backbone module by module and keep the outputs we need.
        for name, m in self.resnet._modules.items():
            x = m(x)
            if name == 'relu':
                x1 = x
            elif name == 'layer1':
                x2 = x
            elif name == 'layer2':
                x3 = x
            elif name == 'layer3':
                x4 = x
                break  # layer4, avgpool and fc are never executed
        # x = self.resnet(x)
        return x1, x2, x3, x4
```
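The four outputs of the Resnet18 wrapper are later stored in variables named feat320, feat160, feat80 and feat40. A quick way to see where those numbers come from is to trace the spatial size with the standard convolution output-size formula. The sketch below is plain Python and assumes the stock torchvision ResNet-18 layout (7×7 stride-2 stem, 3×3 stride-2 max pool, stride-2 downsampling in layer2 and layer3); the helper names `conv_out` and `encoder_taps` are made up for this illustration and are not part of the project.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a conv/pool layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_taps(size):
    """Return (name, channels, spatial size) for the four tapped stages."""
    stem = conv_out(size, kernel=7, stride=2, padding=3)       # conv1 + bn1 + relu
    pooled = conv_out(stem, kernel=3, stride=2, padding=1)     # maxpool
    layer1 = pooled                                            # layer1 keeps the resolution
    layer2 = conv_out(layer1, kernel=3, stride=2, padding=1)   # layer2 halves it
    layer3 = conv_out(layer2, kernel=3, stride=2, padding=1)   # layer3 halves it again
    return [("relu", 64, stem), ("layer1", 64, layer1),
            ("layer2", 128, layer2), ("layer3", 256, layer3)]


for name, channels, side in encoder_taps(640):
    print(f"{name}: {channels} channels, {side}x{side}")
```

With a 640×640 input the four sizes are 320, 160, 80 and 40, which matches the variable names; with the 512×512 input used in the test code they become 256, 128, 64 and 32.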

```python
class Linears(nn.Module):
    def __init__(self, a, b):
        super(Linears, self).__init__()
        self.linear1 = nn.Linear(a, b)
        self.relu1 = nn.LeakyReLU()
        self.linear2 = nn.Linear(b, a)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        x = self.linear1(x)
        x = self.relu1(x)
        x = self.linear2(x)
        x = self.sigmoid(x)
        return x


class DenseNetBlock(nn.Module):
    # Note: conv2 and conv3 take `inplanes` input channels and the block adds
    # its input back to its output, so it only works when inplanes == planes
    # and stride == 1 (which holds for every use in Net).
    def __init__(self, inplanes=1, planes=1, stride=1):
        super(DenseNetBlock, self).__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, 3, stride, 1)
        self.bn1 = nn.BatchNorm2d(planes)
        self.relu1 = nn.LeakyReLU()
        self.conv2 = nn.Conv2d(inplanes, planes, 3, stride, 1)
        self.bn2 = nn.BatchNorm2d(planes)
        self.relu2 = nn.LeakyReLU()
        self.conv3 = nn.Conv2d(inplanes, planes, 3, stride, 1)
        self.bn3 = nn.BatchNorm2d(planes)
        self.relu3 = nn.LeakyReLU()

    def forward(self, x):
        ins = x
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu1(x)
        x = self.conv2(x)
        x = self.bn2(x)
        x = self.relu2(x)
        x = x + ins
        x2 = self.conv3(x)
        x2 = self.bn3(x2)
        x2 = self.relu3(x2)
        out = ins + x + x2
        return out
```

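```python
class SEnet(nn.Module):
    def __init__(self, chs, reduction=4):
        super(SEnet, self).__init__()
        self.average_pooling = nn.AdaptiveAvgPool2d(output_size=(1, 1))
        self.fc = nn.Sequential(
            # First reduce the dimension, then restore it.
            # The nonlinearity lets the block model correlations between channels.
            nn.Linear(chs, chs // reduction),
            nn.LeakyReLU(inplace=True),
            nn.Linear(chs // reduction, chs)
        )
        self.activation = nn.Sigmoid()

    def forward(self, x):
        ins = x
        batch_size, chs, h, w = x.shape
        x = self.average_pooling(x)
        x = x.view(batch_size, chs)
        x = self.fc(x)
        # Note: self.activation (sigmoid) is defined above but is not applied
        # here, so the channel weights are unbounded. A standard SE block would
        # call x = self.activation(x) at this point; the original behaviour is
        # kept so that existing checkpoints produce the same outputs.
        x = x.view(batch_size, chs, 1, 1)
        return x * ins
```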
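The "reduce, then restore" comment in the SE block describes a bottleneck: the two linear layers pass through `chs // reduction` hidden units instead of mapping `chs` to `chs` directly. A small worked count, assuming the configuration used later in Net (`chs=256`, `reduction=4`), shows the effect on parameter count. The helper names here are hypothetical and exist only for this illustration.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


def se_params(chs, reduction):
    """Parameter count of the two-layer bottleneck inside the SE block."""
    hidden = chs // reduction
    return linear_params(chs, hidden) + linear_params(hidden, chs)


bottleneck = se_params(chs=256, reduction=4)   # the configuration used by Net
single_layer = linear_params(256, 256)         # one full 256 -> 256 layer, for comparison
print(bottleneck, single_layer)
```

The bottleneck needs 33,088 parameters, roughly half of the 65,792 a single full-width layer would use, while still adding a nonlinearity between the two projections.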

```python
class UAFM(nn.Module):
    def __init__(self):
        super(UAFM, self).__init__()
        # self.meanPool_C=torch.max()
        self.attention = nn.Sequential(
            nn.Conv2d(4, 8, 3, 1, 1),
            nn.LeakyReLU(),
            nn.Conv2d(8, 1, 1, 1),
            nn.Sigmoid()
        )

    def forward(self, x1, x2):
        x1_mean_pool = torch.mean(x1, dim=1)
        x1_max_pool, _ = torch.max(x1, dim=1)
        x2_mean_pool = torch.mean(x2, dim=1)
        x2_max_pool, _ = torch.max(x2, dim=1)
        x1_mean_pool = torch.unsqueeze(x1_mean_pool, dim=1)
        x1_max_pool = torch.unsqueeze(x1_max_pool, dim=1)
        x2_mean_pool = torch.unsqueeze(x2_mean_pool, dim=1)
        x2_max_pool = torch.unsqueeze(x2_max_pool, dim=1)
        cat = torch.cat((x1_mean_pool, x1_max_pool, x2_mean_pool, x2_max_pool), dim=1)
        a = self.attention(cat)
        out = x1 * a + x2 * (1 - a)
        return out
```
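The UAFM module fuses two feature maps with a spatial weight `a`: at every pixel it computes the channel-wise mean and max of both inputs, turns those four statistics into a value between 0 and 1, and returns `x1 * a + x2 * (1 - a)`. The sketch below reproduces that idea for a single pixel in plain Python. It is a simplification under stated assumptions: the learned 3×3 and 1×1 convolutions are replaced by one fixed linear map, and the `weights` and `bias` values are hypothetical, not taken from the trained model.

```python
import math


def uafm_pixel(x1, x2, weights, bias):
    """Fuse two channel vectors taken at the same pixel position.

    `weights` and `bias` stand in for the learned attention convolutions
    (hypothetical values used only for illustration).
    """
    # Channel-wise mean and max of each input: the four attention inputs.
    stats = [sum(x1) / len(x1), max(x1), sum(x2) / len(x2), max(x2)]
    logit = sum(w * s for w, s in zip(weights, stats)) + bias
    a = 1.0 / (1.0 + math.exp(-logit))            # sigmoid -> weight in (0, 1)
    # Convex blend: a favours x1, (1 - a) favours x2.
    fused = [a * u + (1.0 - a) * v for u, v in zip(x1, x2)]
    return a, fused


decoder_pixel = [0.2, 0.8, 0.5]   # upsampled decoder feature at one position
encoder_pixel = [0.6, 0.1, 0.9]   # skip-connection feature at the same position
a, fused = uafm_pixel(decoder_pixel, encoder_pixel,
                      weights=[1.0, -0.5, 0.5, 0.25], bias=0.0)
print(f"a = {a:.3f}, fused = {[round(v, 3) for v in fused]}")
```

Because `a` lies strictly between 0 and 1, each fused value falls between the corresponding decoder and encoder values, so the module can lean on whichever branch is more useful at each location.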

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.resnet18 = Resnet18()
        self.SENet = SEnet(chs=256)
        self.UAFM = UAFM()
        self.DenseNet1 = DenseNetBlock(inplanes=256, planes=256)
        self.transConv1 = nn.ConvTranspose2d(256, 128, 3, 2, 1, output_padding=1)
        self.DenseNet2 = DenseNetBlock(inplanes=128, planes=128)
        self.transConv2 = nn.ConvTranspose2d(128, 64, 3, 2, 1, output_padding=1)
        self.DenseNet3 = DenseNetBlock(inplanes=64, planes=64)
        self.transConv3 = nn.ConvTranspose2d(64, 64, 3, 2, 1, output_padding=1)
        self.transConv4 = nn.ConvTranspose2d(64, 32, 3, 2, 1, output_padding=1)
        self.DenseNet4 = DenseNetBlock(inplanes=32, planes=32)
        self.out = nn.Sequential(
            nn.Conv2d(32, 3, 1, 1),
            nn.Sigmoid()
        )

    def forward(self, x):
        # Downsampling (encoder) part
        x1, x2, x3, x4 = self.resnet18(x)
        # feat320=features['feat320']
        # feat160=features['feat160']
        # feat80=features['feat80']
        # feat40=features['feat40']
        feat320 = x1
        feat160 = x2
        feat80 = x3
        feat40 = x4

        # Upsampling (decoder) part
        x = self.SENet(feat40)
        x = self.DenseNet1(x)
        x = self.transConv1(x)
        x = self.UAFM(x, feat80)
        x = self.DenseNet2(x)
        x = self.transConv2(x)
        x = self.UAFM(x, feat160)
        x = self.DenseNet3(x)
        x = self.transConv3(x)
        x = self.UAFM(x, feat320)
        x = self.transConv4(x)
        x = self.DenseNet4(x)
        out = self.out(x)
        # out=torch.concat((out,out,out),dim=1)*255.
        return out

    def freeze_backbone(self):
        for param in self.resnet18.parameters():
            param.requires_grad = False

    def unfreeze_backbone(self):
        for param in self.resnet18.parameters():
            param.requires_grad = True


if __name__ == '__main__':
    net = Net()
    print(net)
    # stat(net,(3,640,640))
    summary(net, input_size=(3, 512, 512), device='cpu')
    aa = torch.ones((6, 3, 512, 512))
    out = net(aa)
    print(out.shape)
    # ii=torch.zeros((1,3,640,640))
    # outs=net(ii)
    # print(outs.shape)
```
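Each decoder stage in Net uses `nn.ConvTranspose2d` with kernel 3, stride 2, padding 1 and `output_padding=1`, a combination that exactly doubles the spatial size. That doubling is what lets every upsampled map line up with the matching encoder feature before the UAFM fusion. The following plain-Python sketch checks the arithmetic; `deconv_out` and `decoder_trace` are hypothetical helper names, and the trace assumes an input whose side is a multiple of 16 so that the encoder output is exactly 1/16 of the input.

```python
def deconv_out(size, kernel=3, stride=2, padding=1, output_padding=1):
    """Output length of nn.ConvTranspose2d along one axis (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def decoder_trace(input_size):
    """Spatial size after each of the four transposed convolutions."""
    size = input_size // 16            # layer3 output for inputs divisible by 16
    sizes = []
    for _ in range(4):                 # transConv1 .. transConv4
        size = deconv_out(size)
        sizes.append(size)
    return sizes


print(decoder_trace(512))
```

For a 512×512 input the decoder goes 32 → 64 → 128 → 256 → 512, so the final output has the same resolution as the input. Inputs whose sides are not multiples of 16 would likely produce mismatched sizes at the fusion steps, because the encoder rounds down while the decoder always doubles.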

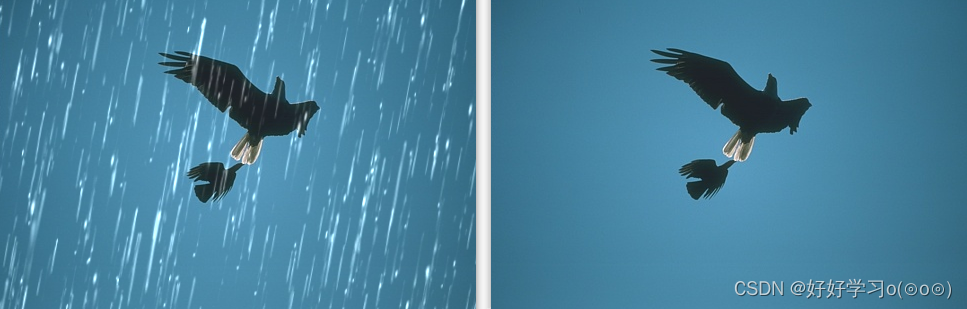

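Dataset

The deraining dataset is rainL. It contains 1,800 rainy images, each paired with a corresponding rain-free image, as shown in the figure below.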
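The training script reads a list of samples from `data.txt`, but the post does not show how that file is produced or what format `Mydata` expects. As one possible approach, the sketch below pairs rainy and rain-free images by identical file name and writes one `rainy_path clean_path` pair per line. The directory layout, the line format and both function names are assumptions made for illustration only.

```python
from pathlib import Path


def build_pair_lines(rainy_dir, clean_dir, exts=(".png", ".jpg", ".jpeg")):
    """Return 'rainy_path clean_path' lines for files present in both folders."""
    rainy_dir, clean_dir = Path(rainy_dir), Path(clean_dir)
    lines = []
    for rainy in sorted(rainy_dir.iterdir()):
        clean = clean_dir / rainy.name          # assumed: same file name in both folders
        if rainy.suffix.lower() in exts and clean.is_file():
            lines.append(f"{rainy} {clean}")
    return lines


def write_pair_file(lines, out_path="data.txt"):
    """Write one pair per line so that readlines() yields one sample per entry."""
    Path(out_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
```

A call such as `write_pair_file(build_pair_lines("rainL/rainy", "rainL/clean"))` would then generate the list, where the two folder names are placeholders. Paths containing spaces would need a different separator.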

Model training

The training code is as follows:

```python
import os
import random

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.models as models
from torch.utils.data import DataLoader
from tqdm import tqdm

from model import Net
from myDataset import Mydata


# Return the current learning rate of the optimizer
def get_lr(optimizer):
    for param_group in optimizer.param_groups:
        return param_group['lr']


# Compute classification accuracy (not used in the training loop below)
def metric_func(pred, lab):
    _, index = torch.max(pred, dim=-1)
    acc = torch.where(index == lab, 1., 0.).mean()
    return acc


device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Build the deraining network and resume from the last checkpoint if one exists
net = Net()
if os.path.exists('logs/last.pth'):
    stadic = torch.load('logs/last.pth', map_location=device)
    net.load_state_dict(stadic)
net = net.to(device)

with open('data.txt', 'r') as f:
    train_lines = f.readlines()

# Learning rate
lr = 0.0001
# Batch size
batch_size = 8
num_train = len(train_lines)

# Loss function
loss_fun = nn.L1Loss()
# Optimizer
optimizer = optim.Adam(net.parameters(), lr=lr, betas=(0.5, 0.999))
# Learning-rate decay: multiply by 0.99 after every epoch
lr_scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.99)

# Iterate over the training data
train_data = Mydata(train_lines, train=True)
train_loader = DataLoader(dataset=train_data, batch_size=batch_size, shuffle=True)

if __name__ == '__main__':
    Epoch = 100
    # Number of batches per epoch (the last, smaller batch is included)
    epoch_step = len(train_loader)
    os.makedirs('logs', exist_ok=True)
    for epoch in range(1, Epoch + 1):
        net.train()
        total_loss = 0.0
        with tqdm(total=epoch_step, desc=f'Epoch {epoch}/{Epoch}', mininterval=0.3) as pbar:
            for step, (features, labels) in enumerate(train_loader, 1):
                features = features.to(device)
                labels = labels.to(device)
                out = net(features)
                loss = loss_fun(out, labels) * 10
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                # Accumulate a Python float so the autograd graph is not kept alive
                total_loss += loss.item()
                pbar.set_postfix(**{'loss': total_loss / step,
                                    'lr': get_lr(optimizer)})
                pbar.update(1)
        # Save the model
        if epoch % 1 == 0:
            torch.save(net.state_dict(), 'logs/Epoch%d-Loss%.4f_.pth' % (
                epoch, total_loss / epoch_step))
            torch.save(net.state_dict(), 'logs/last.pth')
        lr_scheduler.step()
```
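Two numbers in the training setup can be worked out by hand: how many batches one epoch contains, and how the learning rate evolves under `StepLR(step_size=1, gamma=0.99)`. The sketch below does both in plain Python, assuming the scheduler is stepped once at the end of every epoch and that all 1,800 image pairs are listed in `data.txt`. The function names are hypothetical.

```python
import math


def lr_at_epoch(epoch, base_lr=1e-4, gamma=0.99):
    """Learning rate in effect during `epoch` (1-based) when the scheduler
    is stepped once at the end of every epoch with step_size=1."""
    return base_lr * gamma ** (epoch - 1)


def steps_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches a DataLoader yields per epoch."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


print(steps_per_epoch(1800, 8))          # batches per epoch for 1,800 pairs
for epoch in (1, 50, 100):
    print(epoch, f"{lr_at_epoch(epoch):.3e}")
```

With a decay factor of 0.99 the learning rate falls to a little over a third of its starting value by the last of 100 epochs. Since only the model weights are saved to `logs/last.pth`, resuming from that checkpoint restarts the schedule from the base learning rate.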

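Project structure & source code download

The project structure is shown in the figure below:

Run main.py to launch the GUI.

Project download: download link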