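Introduction

To make sure the model can later be deployed on an RK3568 development board, we first run it in the simulator environment provided by rknn-toolkit2. This confirms ahead of time that the chosen model can be deployed on the board. The test environment is Ubuntu 20.04.

I covered installing rknn-toolkit2 in an earlier post: RKNN-ToolKit2 1.5.0 Installation Tutorial (计算机幻觉's blog, CSDN).

Overall workflow:

1. Prepare a trained DeepLabV3+ model file (.pth).

2. Convert the trained .pth file to ONNX format.

3. In the test script, convert the ONNX file to RKNN and run inference.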

一、准备pth文件

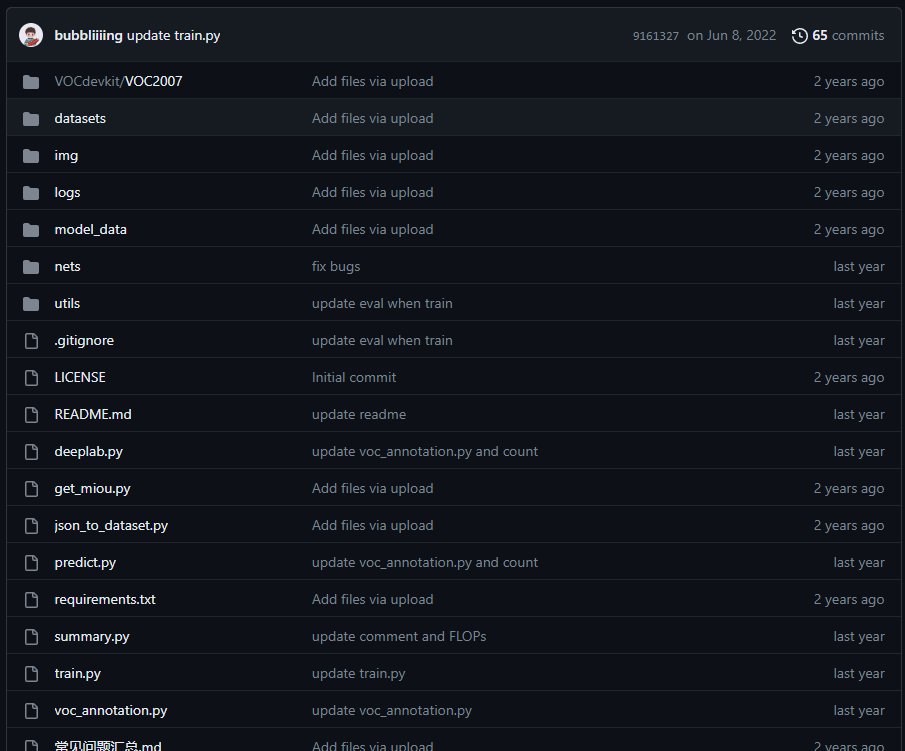

1、博主这里采用的DeeplabV3plus代码是一个B站up主的,代码链接:bubbliiiing/deeplabv3-plus-pytorch: 这是一个deeplabv3-plus-pytorch的源码,可以用于训练自己的模型。 (github.com)

代码有很多中文的注释,对国人相当友好,大家跑起来应该没有什么问题。大家根据自己的需求设置相应的参数进行训练,最终得到训练好的pth文件。

II. Converting the pth file to ONNX

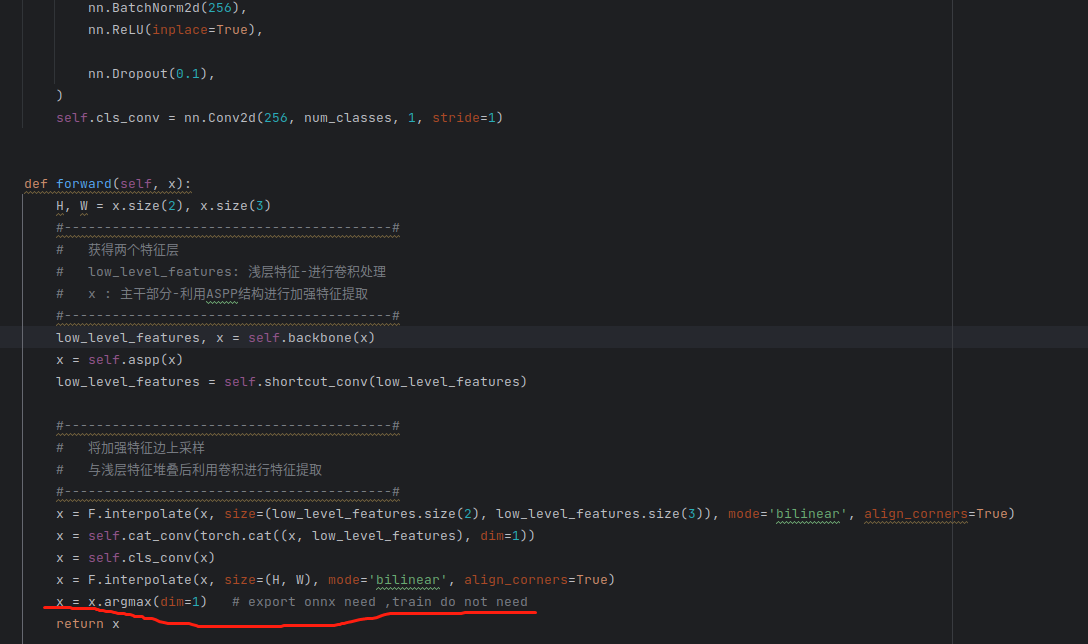

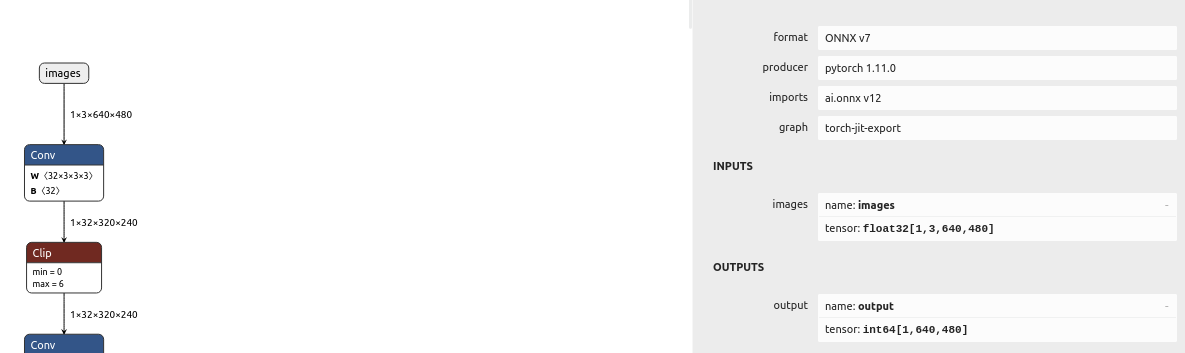

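Before converting the pth file to ONNX, the model code needs a small change. By default, the DeepLabV3+ model outputs a tensor of shape [batch_size, num_classes, height, width] (see the ONNX visualization in Figure 1), where num_classes is 2 here (including the background class).

If the output is left unchanged, the final test result comes out as a rainbow of colors (it took me half a day of tinkering to figure this out). Open deeplabv3-plus-pytorch/nets/deeplabv3_plus.py, find the final output of the model, and add one line at the end:

```python
x = x.argmax(dim=1)  # needed only for ONNX export, not for training
```

A line of code should not be added without explanation, so here is why. The original output gives, for every pixel, a score for each class along the num_classes dimension, indicating how likely the pixel is to belong to each class. We only need to know which class scores highest, so we take the index of the maximum along dim=1, which assigns each pixel its predicted class.

One more note: comment this line out during training. It is only needed when exporting to ONNX.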
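To make the effect of that extra line concrete, here is a minimal pure-Python sketch of what an argmax over the class dimension does for a single image. The helper name `argmax_over_classes` and the toy scores are made up for illustration; the real model does this in one call on a tensor.

```python
def argmax_over_classes(scores):
    """Collapse scores[c][h][w] into labels[h][w] by picking the best class.

    Pure-Python stand-in for `x.argmax(dim=1)` on a single image
    (the batch dimension is left out to keep the example small).
    """
    num_classes = len(scores)
    height = len(scores[0])
    width = len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][h][w]) for w in range(width)]
        for h in range(height)
    ]


# Toy 2-class output (background, foreground) for a 2x3 image.
scores = [
    [[0.9, 0.2, 0.6], [0.4, 0.8, 0.1]],  # class 0 scores
    [[0.1, 0.8, 0.4], [0.6, 0.2, 0.9]],  # class 1 scores
]
labels = argmax_over_classes(scores)
print(labels)  # [[0, 1, 0], [1, 0, 1]]
```

Without this step the exported model returns one score map per class rather than a single label map, which is a plausible reason the unmodified output cannot be colored directly.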

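After modifying the code, set the relevant parameters and run predict.py to produce the ONNX file we need. Its visualization is shown in the figures in this section.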

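III. Running inference in the rknn-toolkit2 simulator

A quick walk-through of the test code:

1. First we call a function named get_color_map_list, which generates the color map used to visualize the segmentation mask. The color map assigns a different color to each class in the mask, which makes the segmentation result easier to inspect.

```python
class_num = 256
color_map = get_color_map_list(class_num)
```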
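As a quick check of what this color map contains, the sketch below reimplements the same bit-shuffling scheme in a self-contained form (without the custom_color option) and prints the first few entries. It is only an illustration; the variable names `flat` and `triples` are mine.

```python
def get_color_map_list(num_classes):
    """Same bit-interleaving scheme as the helper in this post (no custom colors)."""
    num_classes += 1
    color_map = num_classes * [0, 0, 0]
    for i in range(num_classes):
        j, lab = 0, i
        while lab:
            color_map[i * 3] |= ((lab >> 0) & 1) << (7 - j)
            color_map[i * 3 + 1] |= ((lab >> 1) & 1) << (7 - j)
            color_map[i * 3 + 2] |= ((lab >> 2) & 1) << (7 - j)
            j += 1
            lab >>= 3
    return color_map[3:]  # drop the first (all-black) entry


flat = get_color_map_list(256)
# Group the flat list into one triple per label value.
triples = [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]

print(len(triples))  # 256
print(triples[:4])   # [(128, 0, 0), (0, 128, 0), (128, 128, 0), (0, 0, 128)]
```

Because the all-black first entry is dropped, label 0 does not map to black here. Asking for 256 classes yields a 256-entry table, which is the size cv2.LUT expects for 8-bit label maps.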

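2. Create the RKNN object and load the ONNX model. Because we are running in the simulator, RKNN does not allow an .rknn model to be loaded directly for inference, so conversion and loading have to be combined in one script. Two things to watch: (1) mean_values and std_values must match the values used during training, otherwise the test results will differ; (2) the output name passed when loading the ONNX model must match the name in the model, otherwise an error is raised.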
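On the first point, a common source of mismatch is scale: training code often normalizes pixels after dividing by 255, while the values in this post are on the raw 0-255 scale. The sketch below shows the conversion, assuming the toolkit applies (pixel - mean) / std to raw pixel values, which is my understanding of mean_values and std_values. The function names and the ImageNet-style statistics are hypothetical and are not the values used in this post.

```python
def to_pixel_scale(mean_01, std_01):
    """Convert per-channel mean/std defined on [0, 1] inputs to the 0-255 scale."""
    mean_values = [m * 255.0 for m in mean_01]
    std_values = [s * 255.0 for s in std_01]
    return mean_values, std_values


def normalize_training(pixel, mean_01, std_01):
    """Training-style normalization: scale to [0, 1], then standardize."""
    return (pixel / 255.0 - mean_01) / std_01


def normalize_rknn(pixel, mean_value, std_value):
    """Assumed RKNN-style normalization applied to raw 0-255 pixels."""
    return (pixel - mean_value) / std_value


# Hypothetical training statistics (ImageNet-style), used only for illustration.
mean_01 = [0.485, 0.456, 0.406]
std_01 = [0.229, 0.224, 0.225]
mean_values, std_values = to_pixel_scale(mean_01, std_01)

pixel = 200.0
for c in range(3):
    a = normalize_training(pixel, mean_01[c], std_01[c])
    b = normalize_rknn(pixel, mean_values[c], std_values[c])
    assert abs(a - b) < 1e-9  # both pipelines agree

print([round(m, 3) for m in mean_values])  # [123.675, 116.28, 103.53]
print([round(s, 3) for s in std_values])   # [58.395, 57.12, 57.375]
```

Under the same assumption, mean_values=[0, 0, 0] with std_values=[255, 255, 255] corresponds to training on inputs that were only divided by 255.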

```python
# Create RKNN object
rknn = RKNN()

# if not os.path.exists(RKNN_MODEL):
#     print("model not exist")
#     exit(-1)

# Load ONNX model
print("--> Loading model")
# ret = rknn.load_rknn(RKNN_MODEL)
# rknn.config(mean_values=[82.9835, 93.9795, 82.1893], std_values=[54.02, 54.804, 54.0225], target_platform='rk3568')
rknn.config(mean_values=[83.0635, 93.9795, 82.13325], std_values=[53.856, 54.774, 53.9325], target_platform='rk3568')
# rknn.config(mean_values=[0, 0, 0], std_values=[255, 255, 255], target_platform='rk3568')
ret = rknn.load_onnx(model=ONNX_MODEL, outputs=['output'])  # must match the output name in your ONNX model
if ret != 0:
    print("Load ONNX model failed!")
    exit(ret)
ret = rknn.build(do_quantization=True, dataset='./dataset.txt')
if ret != 0:
    print("Build RKNN model failed!")
    exit(ret)
print("done")

# Init runtime environment (target=None runs on the simulator)
print("--> Init runtime environment")
ret = rknn.init_runtime(target=None)
if ret != 0:
    print("Init runtime environment failed")
    exit(ret)
print("done")
```

3. Prepare the data. This part of the code varies from person to person; if it raises an error, debug it yourself. The main thing is to keep the data format and dimensions consistent with what the model expects.

```python
# 1. Data preparation
image_size = (640, 480)  # cv2.resize takes (width, height)
src_img = cv2.imread(IMG_PATH)
resize_img = cv2.resize(src_img, image_size)
color_img = cv2.cvtColor(resize_img, cv2.COLOR_BGR2RGB)  # HWC, RGB
color_img = color_img[np.newaxis, :]  # add batch axis -> (1, H, W, 3)
color_img = color_img.transpose((0, 3, 2, 1))  # -> (1, 3, W, H)

# 2. Inference
print("--> Running model")
# time.clock() was removed in Python 3.8; perf_counter() works everywhere
start = time.perf_counter()
pred = rknn.inference(inputs=[color_img], data_format='nchw')
end = time.perf_counter()
runTime_ms = (end - start) * 1000
print("Inference time:", runTime_ms, "ms")

# Squeeze out singleton dimensions and convert to uint8 labels
pred = np.squeeze(pred).astype('uint8')
pred = pred.transpose((1, 0))  # swap the spatial axes back to match resize_img

# 3. Blend the original image with the segmentation map
added_image = visualize(resize_img, pred, color_map, None, weight=0.6)
cv2.imshow("DeeplabV3plus_nomean", added_image)
cv2.imwrite("DeeplabV3plus_nomean.jpg", added_image)
cv2.waitKey(0)

rknn.release()
```

Here is the complete test code:

```python
import os
import time
import numpy as np
import cv2
import torch
from rknn.api import RKNN
import torch.nn.functional as F

# RKNN_MODEL = "/home/zw/Prg/Pycharm/file/RKNN3568/deeplabv3plus_rk3568_out_opt.rknn"
IMG_PATH = "/dataset/images/09R14.png"
ONNX_MODEL = '/home/zw/Prg/Pycharm/file/RKNN3568/onnx/deeplabv3plus/DeeplabV3plusnew.onnx'


def visualize(image, result, color_map, save_dir=None, weight=0.6):
    """
    Convert predict result to color image, and save added image.

    Args:
        image (np.ndarray): The original image, already loaded (BGR).
        result (np.ndarray): The predict result of image.
        color_map (list): The color used to save the prediction results.
        save_dir (str): The directory for saving visual image. Default: None.
        weight (float): The image weight of visual image, and the result weight is (1 - weight). Default: 0.6

    Returns:
        vis_result (np.ndarray): If `save_dir` is None, return the visualized result.
    """
    color_map = [color_map[i:i + 3] for i in range(0, len(color_map), 3)]
    color_map = np.array(color_map).astype("uint8")
    # Use OpenCV LUT for color mapping
    c1 = cv2.LUT(result, color_map[:, 0])
    c2 = cv2.LUT(result, color_map[:, 1])
    c3 = cv2.LUT(result, color_map[:, 2])
    pseudo_img = np.dstack((c3, c2, c1))

    im = image
    vis_result = cv2.addWeighted(im, weight, pseudo_img, 1 - weight, 0)

    if save_dir is not None:
        # NOTE: this branch treats `image` as a path; this script always
        # passes save_dir=None, so it is never reached here.
        if not os.path.exists(save_dir):
            os.makedirs(save_dir)
        image_name = os.path.split(image)[-1]
        out_path = os.path.join(save_dir, image_name)
        cv2.imwrite(out_path, vis_result)
    else:
        return vis_result


def get_color_map_list(num_classes, custom_color=None):
    """
    Returns the color map for visualizing the segmentation mask,
    which can support arbitrary number of classes.

    Args:
        num_classes (int): Number of classes.
        custom_color (list, optional): Save images with a custom color map. Default: None, use paddleseg's default color map.

    Returns:
        (list). The color map.
    """
    num_classes += 1
    color_map = num_classes * [0, 0, 0]
    for i in range(0, num_classes):
        j = 0
        lab = i
        while lab:
            color_map[i * 3] |= (((lab >> 0) & 1) << (7 - j))
            color_map[i * 3 + 1] |= (((lab >> 1) & 1) << (7 - j))
            color_map[i * 3 + 2] |= (((lab >> 2) & 1) << (7 - j))
            j += 1
            lab >>= 3
    color_map = color_map[3:]

    if custom_color:
        color_map[:len(custom_color)] = custom_color
    return color_map


if __name__ == "__main__":
    # 256 entries so the color table can serve as a cv2.LUT lookup table
    class_num = 256
    color_map = get_color_map_list(class_num)

    # Create RKNN object
    rknn = RKNN()

    # if not os.path.exists(RKNN_MODEL):
    #     print("model not exist")
    #     exit(-1)

    # Load ONNX model
    print("--> Loading model")
    # ret = rknn.load_rknn(RKNN_MODEL)
    # rknn.config(mean_values=[82.9835, 93.9795, 82.1893], std_values=[54.02, 54.804, 54.0225], target_platform='rk3568')
    rknn.config(mean_values=[83.0635, 93.9795, 82.13325], std_values=[53.856, 54.774, 53.9325], target_platform='rk3568')
    # rknn.config(mean_values=[0, 0, 0], std_values=[255, 255, 255], target_platform='rk3568')
    ret = rknn.load_onnx(model=ONNX_MODEL, outputs=['output'])  # must match the output name in your ONNX model
    if ret != 0:
        print("Load ONNX model failed!")
        exit(ret)
    ret = rknn.build(do_quantization=True, dataset='./dataset.txt')
    if ret != 0:
        print("Build RKNN model failed!")
        exit(ret)
    print("done")

    # Init runtime environment (target=None runs on the simulator)
    print("--> Init runtime environment")
    ret = rknn.init_runtime(target=None)
    if ret != 0:
        print("Init runtime environment failed")
        exit(ret)
    print("done")

    # 1. Data preparation
    image_size = (640, 480)  # cv2.resize takes (width, height)
    src_img = cv2.imread(IMG_PATH)
    resize_img = cv2.resize(src_img, image_size)
    color_img = cv2.cvtColor(resize_img, cv2.COLOR_BGR2RGB)  # HWC, RGB
    color_img = color_img[np.newaxis, :]  # add batch axis -> (1, H, W, 3)
    color_img = color_img.transpose((0, 3, 2, 1))  # -> (1, 3, W, H)

    # 2. Inference
    print("--> Running model")
    # time.clock() was removed in Python 3.8; perf_counter() works everywhere
    start = time.perf_counter()
    pred = rknn.inference(inputs=[color_img], data_format='nchw')
    end = time.perf_counter()
    runTime_ms = (end - start) * 1000
    print("Inference time:", runTime_ms, "ms")

    # Squeeze out singleton dimensions and convert to uint8 labels
    pred = np.squeeze(pred).astype('uint8')
    pred = pred.transpose((1, 0))  # swap the spatial axes back to match resize_img

    # 3. Blend the original image with the segmentation map
    added_image = visualize(resize_img, pred, color_map, None, weight=0.6)
    cv2.imshow("DeeplabV3plus_nomean", added_image)
    cv2.imwrite("DeeplabV3plus_nomean.jpg", added_image)
    cv2.waitKey(0)

    rknn.release()
```

Update 2023.8.18

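Although the code above runs through the test, the model shows a noticeable accuracy loss on RKNN. After some investigation, the cause turned out to be incorrect handling of the NCHW axis order at inference time. Without further ado, here is the latest test code: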

```python
import os
import time
import numpy as np
import cv2
import torch
from rknn.api import RKNN
import torch.nn.functional as F

# RKNN_MODEL = "/home/zw/Prg/Pycharm/file/RKNN3568/deeplabv3plus_rk3568_out_opt.rknn"
IMG_PATH = "/dataset/images/471extraL21.jpg"
ONNX_MODEL = '/home/zw/Prg/Pycharm/file/RKNN3568/onnx/deeplabv3plus/deepnew_48.onnx'


def visualize(image, result, color_map, save_dir=None, weight=0.6):
    """
    Convert predict result to color image, and save added image.

    Args:
        image (np.ndarray): The original image, already loaded (BGR).
        result (np.ndarray): The predict result of image.
        color_map (list): The color used to save the prediction results.
        save_dir (str): The directory for saving visual image. Default: None.
        weight (float): The image weight of visual image, and the result weight is (1 - weight). Default: 0.6

    Returns:
        vis_result (np.ndarray): If `save_dir` is None, return the visualized result.
    """
    color_map = [color_map[i:i + 3] for i in range(0, len(color_map), 3)]
    color_map = np.array(color_map).astype("uint8")
    # Use OpenCV LUT for color mapping
    c1 = cv2.LUT(result, color_map[:, 0])
    c2 = cv2.LUT(result, color_map[:, 1])
    c3 = cv2.LUT(result, color_map[:, 2])
    pseudo_img = np.dstack((c3, c2, c1))

    im = image
    vis_result = cv2.addWeighted(im, weight, pseudo_img, 1 - weight, 0)

    if save_dir is not None:
        # NOTE: this branch treats `image` as a path; this script always
        # passes save_dir=None, so it is never reached here.
        if not os.path.exists(save_dir):
            os.makedirs(save_dir)
        image_name = os.path.split(image)[-1]
        out_path = os.path.join(save_dir, image_name)
        cv2.imwrite(out_path, vis_result)
    else:
        return vis_result


def get_color_map_list(num_classes, custom_color=None):
    """
    Returns the color map for visualizing the segmentation mask,
    which can support arbitrary number of classes.

    Args:
        num_classes (int): Number of classes.
        custom_color (list, optional): Save images with a custom color map. Default: None, use paddleseg's default color map.

    Returns:
        (list). The color map.
    """
    num_classes += 1
    color_map = num_classes * [0, 0, 0]
    for i in range(0, num_classes):
        j = 0
        lab = i
        while lab:
            color_map[i * 3] |= (((lab >> 0) & 1) << (7 - j))
            color_map[i * 3 + 1] |= (((lab >> 1) & 1) << (7 - j))
            color_map[i * 3 + 2] |= (((lab >> 2) & 1) << (7 - j))
            j += 1
            lab >>= 3
    color_map = color_map[3:]

    if custom_color:
        color_map[:len(custom_color)] = custom_color
    return color_map


if __name__ == "__main__":
    # 256 entries so the color table can serve as a cv2.LUT lookup table
    class_num = 256
    color_map = get_color_map_list(class_num)

    # Create RKNN object
    rknn = RKNN()

    # if not os.path.exists(RKNN_MODEL):
    #     print("model not exist")
    #     exit(-1)

    # Load ONNX model
    print("--> Loading model")
    # ret = rknn.load_rknn(RKNN_MODEL)
    # rknn.config(mean_values=[82.9835, 93.9795, 82.1893], std_values=[54.02, 54.804, 54.0225], target_platform='rk3568')
    rknn.config(mean_values=[83.0635, 93.9795, 82.13325], std_values=[53.856, 54.774, 53.9325], target_platform='rk3568')
    # rknn.config(mean_values=[0, 0, 0], std_values=[255, 255, 255], target_platform='rk3568')
    ret = rknn.load_onnx(model=ONNX_MODEL, outputs=['output'])  # must match the output name in your ONNX model
    if ret != 0:
        print("Load ONNX model failed!")
        exit(ret)
    ret = rknn.build(do_quantization=False, dataset='./dataset.txt')
    if ret != 0:
        print("Build RKNN model failed!")
        exit(ret)
    print("done")

    # Init runtime environment (target=None runs on the simulator)
    print("--> Init runtime environment")
    ret = rknn.init_runtime(target=None)
    if ret != 0:
        print("Init runtime environment failed")
        exit(ret)
    print("done")

    # 1. Data preparation
    # No resize here: the image is assumed to already match the model input size.
    src_img = cv2.imread(IMG_PATH)
    color_img = cv2.cvtColor(src_img, cv2.COLOR_BGR2RGB)  # HWC, RGB
    color_img = color_img[np.newaxis, :]  # add batch axis -> NHWC

    # 2. Inference
    print("--> Running model")
    # time.clock() was removed in Python 3.8; perf_counter() works everywhere
    start = time.perf_counter()
    pred = rknn.inference(inputs=[color_img], data_format='nhwc')
    end = time.perf_counter()
    runTime_ms = (end - start) * 1000
    print("Inference time:", runTime_ms, "ms")

    # Squeeze out singleton dimensions and convert to uint8 labels
    pred = np.squeeze(pred).astype('uint8')

    # 3. Blend the original image with the segmentation map
    added_image = visualize(src_img, pred, color_map, None, weight=0.6)
    cv2.imshow("DeeplabV3plus_167", added_image)
    cv2.imwrite("../DeeplabV3plus_167.jpg", added_image)
    cv2.waitKey(0)

    rknn.release()
```

A brief explanation: earlier I had overlooked H and W, that is, height and width. When exporting to ONNX, it is best to specify the input shape as (N, C, H, W), so that width and height never need to be swapped afterwards. The new code loads the test image directly. Note that cv2.imread loads images as BGR by default, so they must be converted to RGB, and that the array is laid out as HWC, which becomes NHWC once the batch axis is added.
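The width/height mix-up is easiest to see by tracking shapes alone. The sketch below is not part of the scripts in this post; `permuted_shape` is a hypothetical helper that mimics how a NumPy transpose reorders a shape tuple, applied to a 640x480 image.

```python
def permuted_shape(shape, axes):
    """Shape that numpy's `array.transpose(axes)` would produce for `shape`."""
    return tuple(shape[a] for a in axes)


# cv2.resize(img, (640, 480)) takes (width, height) and returns an array of
# shape (height, width, channels); adding a batch axis gives NHWC.
nhwc = (1, 480, 640, 3)

old = permuted_shape(nhwc, (0, 3, 2, 1))   # transpose used in the first script
nchw = permuted_shape(nhwc, (0, 3, 1, 2))  # the usual NHWC -> NCHW permutation

print(old)   # (1, 3, 640, 480) -> N, C, W, H
print(nchw)  # (1, 3, 480, 640) -> N, C, H, W
```

The first permutation puts width ahead of height, so it only lines up with a model whose input was declared in that same order.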

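We have not gone into detail here on how to run the DeepLabV3+ code itself, because the source repository already documents it thoroughly: bubbliiiing/deeplabv3-plus-pytorch (github.com), a PyTorch implementation of DeepLabV3+ that can be used to train your own model.

If you have any other questions, leave me a comment and I will answer each one.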