一 ORB-SLAM2 安装

ORBSLAM2在Ubuntu14.04上详细配置流程

1 安装必要工具

首先,有两个工具是需要提前安装的。即cmake和git。

sudo apt-get install cmake

sudo apt-get install git

2 安装Pangolin,用于可视化和用户接口

Pangolin: https://github.com/stevenlovegrove/Pangolin

官方样例demo https://github.com/stevenlovegrove/Pangolin/tree/master/examples

安装文件夹内

Pangolin函数的使用:

http://docs.ros.org/fuerte/api/pangolin_wrapper/html/namespacepangolin.html

是一款开源的OPENGL显示库,可以用来视频显示、而且开发容易。

是对OpenGL进行封装的轻量级的OpenGL输入/输出和视频显示的库。

可以用于3D视觉和3D导航的视觉图,可以输入各种类型的视频、并且可以保留视频和输入数据用于debug。

安装依赖项:

http://www.cnblogs.com/liufuqiang/p/5618335.html Pangolin安装问题

Glew:

sudo apt-get install libglew-dev

CMake:

sudo apt-get install cmake

Boost:

sudo apt-get install libboost-dev libboost-thread-dev libboost-filesystem-dev

Python2 / Python3:

sudo apt-get install libpython2.7-dev

sudo apt-get install build-essential

先转到一个要存储Pangolin的路径下,例如~/Documents,然后

git clone https://github.com/stevenlovegrove/Pangolin.git

cd Pangolin

mkdir build

cd build

cmake ..

make -j

sudo make install

3 安装OpenCV

最低的OpenCV版本为2.4.3,建议采用OpenCV 3.2.0以上。从OpenCV官网下载OpenCV3.2.00。

然后安装依赖项:

sudo apt-get install libgtk2.0-dev

sudo apt-get install pkg-config

将下载的OpenCV解压到自己的指定目录,然后cd到OpenCV的目录下。

cd ~/Downloads/opencv-3.2.0

mkdir release

cd release

cmake -D CMAKE_BUILD_TYPE=RELEASE -DCMAKE_INSTALL_PREFIX=/usr/local ..

make

sudo make install

4 安装Eigen3

最低要求版本为3.1.0。在http://eigen.tuxfamily.org 下载Eigen3的最新版本,

一般是一个压缩文件,下载后解压,然后cd到Eigen3的根目录下。

mkdir build

cd build

cmake ..

make

5 安装ORBSLAM2

先转到自己打算存储ORBSLAM2工程的路径,然后执行下列命令

git clone https://github.com/raulmur/ORB_SLAM2.git oRB_SLAM2

cd ORB_SLAM2

修改编译 线程数(不然编译时可能会卡住):

vim build.sh

最后 make -j 改成 make -j4

加执行权限

sudo chmod 777 build.sh

安装

./build.sh

之后会在lib文件夹下生成libORB_SLAM2.so,

并且在Examples文件夹下生成

mono_tum,mono_kitti, mono_euroc in Examples/Monocular 单目相机,

rgbd_tum in Examples/Monocular RGB-D相机,

stereo_kitti 和 stereo_euroc in Examples/Stereo 双目立体相机。

6 数据集

KITTI dataset 对于 单目 stereo 或者 双目 monocular

http://www.cvlibs.net/datasets/kitti/eval_odometry.php

EuRoC dataset 对于 单目 stereo 或者 双目 monocular

http://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets

TUM dataset 对于 RGB-D 或者 单目monocular

https://vision.in.tum.de/data/datasets/rgbd-dataset

7论文参考

ORB-SLAM:

[Monocular] Raúl Mur-Artal, J. M. M. Montiel and Juan D. Tardós. ORB-SLAM: A Versatile and Accurate Monocular SLAM System.

IEEE Transactions on Robotics, vol. 31, no. 5, pp. 1147-1163, 2015. (2015 IEEE Transactions on Robotics Best Paper Award).

http://webdiis.unizar.es/%7Eraulmur/MurMontielTardosTRO15.pdf

ORB-SLAM2:

[Stereo and RGB-D] Raúl Mur-Artal and Juan D. Tardós. ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras.

IEEE Transactions on Robotics, vol. 33, no. 5, pp. 1255-1262, 2017.

https://128.84.21.199/pdf/1610.06475.pdf

词袋模型:

[DBoW2 Place Recognizer] Dorian Gálvez-López and Juan D. Tardós. Bags of Binary Words for Fast Place Recognition in Image Sequences.

IEEE Transactions on Robotics, vol. 28, no. 5, pp. 1188-1197, 2012.

http://doriangalvez.com/papers/GalvezTRO12.pdf

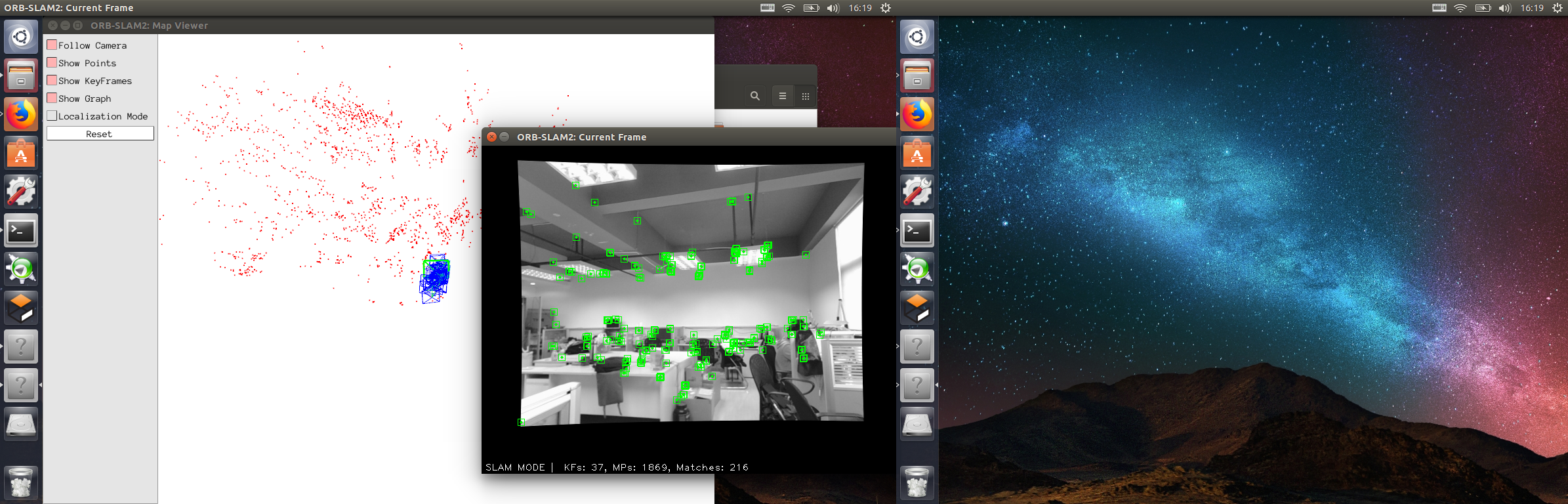

8单目测试

在http://vision.in.tum.de/data/datasets/rgbd-dataset/download下载一个序列,并解压。

转到ORBSLAM2文件夹下,执行下面的命令。

根据下载的视频序列freiburg1, freiburg2 和 freiburg3将TUMX.yaml分别转换为对应的 TUM1.yaml 或 TUM2.yaml 或 TUM3.yaml

(相机参数文件)。

将PATH_TO_SEQUENCE_FOLDER 更改为解压的视频序列文件夹。

./Examples/Monocular/mono_tum 词典路径 参数文件 数据集

例如我下载的 rgbd_dataset_freiburg1_xyz

运行示例:

./Examples/Monocular/mono_tum Vocabulary/ORBvoc.bin Examples/Monocular/TUM1.yaml /home/ewenwan/ewenwan/learn/vSLAM/date/rgbd_dataset_freiburg1_xyz

9双目测试

在 http://projects.asl.ethz.ch/datasets/doku.php?id=kmavvisualinertialdatasets

下载一个序列 Vicon Room 1 02 大小1.2GB

./Examples/Stereo/stereo_euroc Vocabulary/ORBvoc.txt Examples/Stereo/EuRoC.yaml PATH_TO_SEQUENCE/cam0/data PATH_TO_SEQUENCE/cam1/data Examples/Stereo/EuRoC_TimeStamps/SEQUENCE.txt

a. Camera.bf中的b指基线baseline(单位:米),

f是焦距fx(x轴和y轴差距不大),bf=b*f,

和ThDepth一起决定了深度点的范围:

bf * ThDepth / fx

即大致为b * ThDepth。

基线在双目视觉中出现的比较多,

如ORB-SLAM中的双目示例中的EuRoC.yaml中的bf为47.9,ThDepth为35,fx为435.2,

则有效深度为bf * ThDepth / fx = 47.9*35/435.3=3.85米;

KITTI.yaml中的bf为387.57,ThDepth为40,fx为721.54,

则有效深度为387.57*40/721.54=21.5米。

这里的xtion的IR基线(其实也可以不这么叫)bf为40,

ThDepth为50,fx为558.34,则有效深度为3.58米(官方为3.5米)。

b. RGBD中 DepthMapFactor: 1000.0这个很好理解,

depth深度图的值为真实3d点深度*1000.

例如depth值为2683,则真是世界尺度的这点的深度为2.683米。

这个值是可以人为转换的(如opencv中的convert函数,可以设置缩放因子),

如TUM中的深度图的DepthMapFactor为5000,

就代表深度图中的5000个单位为1米。

10 词带 关键字数据库 用于加快 重定位 回环检测

orb词带txt载入太慢,看到有人转换为binary,速度超快,试了下,确实快.

链接:https://github.com/raulmur/ORB_SLAM2/pull/21/commits/4122702ced85b20bd458d0e74624b9610c19f8cc

Vocabulary/ORBvoc.txt >>> Vocabulary/ORBvoc.bin

#CMakeLists.txt

最后添加

## .txt >>> .bin 文件转换

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/tools)

add_executable(bin_vocabulary

tools/bin_vocabulary.cc)

target_link_libraries(bin_vocabulary ${PROJECT_NAME})

# build.sh 转换 .txt >>> .bin

最后添加

cd ..

echo "Converting vocabulary to binary"

./tools/bin_vocabulary

#### 新建转换文件

tools/bin_vocabulary.cc

#include <time.h>

#include "ORBVocabulary.h"

using namespace std;

bool load_as_text(ORB_SLAM2::ORBVocabulary* voc, const std::string infile) {

clock_t tStart = clock();

bool res = voc->loadFromTextFile(infile);

printf("Loading fom text: %.2fs\n", (double)(clock() - tStart)/CLOCKS_PER_SEC);

return res;

}

void load_as_xml(ORB_SLAM2::ORBVocabulary* voc, const std::string infile) {

clock_t tStart = clock();

voc->load(infile);

printf("Loading fom xml: %.2fs\n", (double)(clock() - tStart)/CLOCKS_PER_SEC);

}

void load_as_binary(ORB_SLAM2::ORBVocabulary* voc, const std::string infile) {

clock_t tStart = clock();

voc->loadFromBinaryFile(infile);

printf("Loading fom binary: %.2fs\n", (double)(clock() - tStart)/CLOCKS_PER_SEC);

}

void save_as_xml(ORB_SLAM2::ORBVocabulary* voc, const std::string outfile) {

clock_t tStart = clock();

voc->save(outfile);

printf("Saving as xml: %.2fs\n", (double)(clock() - tStart)/CLOCKS_PER_SEC);

}

void save_as_text(ORB_SLAM2::ORBVocabulary* voc, const std::string outfile) {

clock_t tStart = clock();

voc->saveToTextFile(outfile);

printf("Saving as text: %.2fs\n", (double)(clock() - tStart)/CLOCKS_PER_SEC);

}

void save_as_binary(ORB_SLAM2::ORBVocabulary* voc, const std::string outfile) {

clock_t tStart = clock();

voc->saveToBinaryFile(outfile);

printf("Saving as binary: %.2fs\n", (double)(clock() - tStart)/CLOCKS_PER_SEC);

}

int main(int argc, char **argv) {

cout << "BoW load/save benchmark" << endl;

ORB_SLAM2::ORBVocabulary* voc = new ORB_SLAM2::ORBVocabulary();

load_as_text(voc, "Vocabulary/ORBvoc.txt");

save_as_binary(voc, "Vocabulary/ORBvoc.bin");

return 0;

}

修改读入文件:

Thirdparty/DBoW2/DBoW2/TemplatedVocabulary.h

line 248

添加

// WYW ADD 2017.11.4

/**

* Loads the vocabulary from a Binary file

* @param filename

*/

bool loadFromBinaryFile(const std::string &filename);

/**

* Saves the vocabulary into a Binary file

* @param filename

*/

void saveToBinaryFile(const std::string &filename) const;

line 1460

// WYW ADD 2017.11.4 读取二进制 词带

// --------------------------------------------------------------------------

template<class TDescriptor, class F>

bool TemplatedVocabulary<TDescriptor,F>::loadFromBinaryFile(const std::string &filename) {

fstream f;

f.open(filename.c_str(), ios_base::in|ios::binary);

unsigned int nb_nodes, size_node;

f.read((char*)&nb_nodes, sizeof(nb_nodes));

f.read((char*)&size_node, sizeof(size_node));

f.read((char*)&m_k, sizeof(m_k));

f.read((char*)&m_L, sizeof(m_L));

f.read((char*)&m_scoring, sizeof(m_scoring));

f.read((char*)&m_weighting, sizeof(m_weighting));

createScoringObject();

m_words.clear();

m_words.reserve(pow((double)m_k, (double)m_L + 1));

m_nodes.clear();

m_nodes.resize(nb_nodes+1);

m_nodes[0].id = 0;

char buf[size_node]; int nid = 1;

while (!f.eof()) {

f.read(buf, size_node);

m_nodes[nid].id = nid;

// FIXME

const int* ptr=(int*)buf;

m_nodes[nid].parent = *ptr;

//m_nodes[nid].parent = *(const int*)buf;

m_nodes[m_nodes[nid].parent].children.push_back(nid);

m_nodes[nid].descriptor = cv::Mat(1, F::L, CV_8U);

memcpy(m_nodes[nid].descriptor.data, buf+4, F::L);

m_nodes[nid].weight = *(float*)(buf+4+F::L);

if (buf[8+F::L]) { // is leaf

int wid = m_words.size();

m_words.resize(wid+1);

m_nodes[nid].word_id = wid;

m_words[wid] = &m_nodes[nid];

}

else

m_nodes[nid].children.reserve(m_k);

nid+=1;

}

f.close();

return true;

}

// --------------------------------------------------------------------------

template<class TDescriptor, class F>

void TemplatedVocabulary<TDescriptor,F>::saveToBinaryFile(const std::string &filename) const {

fstream f;

f.open(filename.c_str(), ios_base::out|ios::binary);

unsigned int nb_nodes = m_nodes.size();

float _weight;

unsigned int size_node = sizeof(m_nodes[0].parent) + F::L*sizeof(char) + sizeof(_weight) + sizeof(bool);

f.write((char*)&nb_nodes, sizeof(nb_nodes));

f.write((char*)&size_node, sizeof(size_node));

f.write((char*)&m_k, sizeof(m_k));

f.write((char*)&m_L, sizeof(m_L));

f.write((char*)&m_scoring, sizeof(m_scoring));

f.write((char*)&m_weighting, sizeof(m_weighting));

for(size_t i=1; i<nb_nodes;i++) {

const Node& node = m_nodes[i];

f.write((char*)&node.parent, sizeof(node.parent));

f.write((char*)node.descriptor.data, F::L);

_weight = node.weight; f.write((char*)&_weight, sizeof(_weight));

bool is_leaf = node.isLeaf(); f.write((char*)&is_leaf, sizeof(is_leaf)); // i put this one at the end for alignement....

}

f.close();

}

##### 修改slam系统文件 src/System.cc

line 28

// wyw添加 2017.11.4

#include <time.h>

bool has_suffix(const std::string &str, const std::string &suffix) {

std::size_t index = str.find(suffix, str.size() - suffix.size());

return (index != std::string::npos);

}

line 68

/////// ////////////////////////////////////

//// wyw 修改 2017.11.4

clock_t tStart = clock();

mpVocabulary = new ORBVocabulary();

//bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);

bool bVocLoad = false; // chose loading method based on file extension

if (has_suffix(strVocFile, ".txt"))

bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);//txt格式打开

else

bVocLoad = mpVocabulary->loadFromBinaryFile(strVocFile);//bin格式打开

if(!bVocLoad)

{

cerr << "Wrong path to vocabulary. " << endl;

cerr << "Failed to open at: " << strVocFile << endl;

exit(-1);

}

//cout << "Vocabulary loaded!" << endl << endl;

printf("Vocabulary loaded in %.2fs\n", (double)(clock() - tStart)/CLOCKS_PER_SEC);//显示文件载入时间

11 双目相机 矫正

双目相机采集

/*

双目相机采集

双目相机类型:

双设备, video1 video2

单设备,拼接

1280*960 + 1280*960 >>> 2560*960

640*480 + 640*480 >>> 1280*480

*/

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int main()

{

cv::VideoCapture CapAll(1);

//VideoCapture capture;//捕获相机对象

//cv::VideoCapture capl(1);

//v::VideoCapture capr(2);

if( !CapAll.isOpened() )//在线 矫正

printf("打开摄像头失败\r\n");

CapAll.set(CV_CAP_PROP_FRAME_WIDTH,1280);

CapAll.set(CV_CAP_PROP_FRAME_HEIGHT, 480);

int i = 1;

cv::Mat src_img;

cv::Mat src_imgl;

cv::Mat src_imgr;

char filename_l[15];

char filename_r[15];

//CapAll.read(src_img);

// Size imageSize=src_img.size();

//printf( "%2d %2d", imageSize.width,imageSize.height);//图像大小);

//while(capl.read(src_imgl) && capr.read(src_imgr))

while(CapAll.read(src_img))

{

//相机图像分离源码

//双目相机图像2560*960 1280*480

//单个相机图像1280*960 640*480

cv::imshow("src_img", src_img);

src_imgl = src_img(cv::Range(0, 480), cv::Range(0, 640));

src_imgr = src_img(cv::Range(0, 480), cv::Range(640, 1280));

cv::imshow("src_imgl", src_imgl);

cv::imshow("src_imgr", src_imgr);

char c = cv::waitKey(1);

if(c==' ') //按空格采集图像

{

sprintf(filename_l, "%s%02d%s","left", i,".jpg");

imwrite(filename_l, src_imgl);

sprintf(filename_r, "%s%02d%s","right", i++,".jpg");

imwrite(filename_r, src_imgr);

//++i;

//ReleaseImage(& src_imgl;

}

if( c == 27 || c == 'q' || c == 'Q' )//按ESC/q/Q退出

break;

}

return 0;

}

由图片文件夹生成 图片文件路径xml文件 generate stereo_calib.xml 文件

/*this creates a yaml or xml list of files from the command line args

* 由图片文件夹生成 图片文件路径 xml文件

* 示例用法 ./imagelist_creator wimagelist.yaml ../t/*JPG

*

*/

#include "opencv2/core/core.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <string>

#include <iostream>

using std::string;

using std::cout;

using std::endl;

using namespace cv;

static void help(char** av)//argv参数

{

cout << "\nThis creates a yaml or xml list of files from the command line args\n"

"用法 usage:\n./" << av[0] << " imagelist.yaml *.png\n" //命令行 用法

<< "Try using different extensions.(e.g. yaml yml xml xml.gz etc...)\n"

<< "This will serialize this list of images or whatever with opencv's FileStorage framework" << endl;

}

int main(int ac, char** av)

{

cv::CommandLineParser parser(ac, av, "{help h||}{@output||}");//解析参数 得到帮助参数 和 输出文件名

if (parser.has("help"))//有帮助参数

{

help(av);//显示帮助信息

return 0;

}

string outputname = parser.get<string>("@output");

if (outputname.empty())

{

help(av);

return 1;

}

Mat m = imread(outputname); //check if the output is an image - prevent overwrites!

if(!m.empty()){

std::cerr << "fail! Please specify an output file, don't want to overwrite you images!" << endl;

help(av);

return 1;

}

FileStorage fs(outputname, FileStorage::WRITE);//opencv 读取文件类

fs << "images" << "[";//文件流重定向

for(int i = 2; i < ac; i++){

fs << string(av[i]);//从第二个参数开始为 图片名

}

fs << "]";

return 0;

}

双目相机 矫正

由图片文件夹生成 图片文件路径 stereo_calib.xml 文件

get 内参数 intrinsics.yml

外参数 extrinsics.yml

/* This is sample from the OpenCV book. The copyright notice is below

* 双目相机 矫正

* 用法 ./Stereo_Calibr -w=6 -h=8 -s=24.3 stereo_calib.xml

* 我的 ./Stereo_Calibr -w=8 -h=10 -s=200 stereo_calib.xml

* ./stereo_calib -w=9 -h=6 stereo_calib.xml 标准图像

* 实际的正方格尺寸在程序中指定 const float squareSize = 2.43f; 2.43cm mm为单位的话为 24.3 0.1mm为精度的话为 243 注意 标定结果单位(纲量)和此一致

* https://www.cnblogs.com/polly333/p/5013505.html 原理分析

* http://blog.csdn.net/zc850463390zc/article/details/48975263

*

* 径向畸变矫正 光学透镜特效 凸起 k1 k2 k3 三个参数确定

* Xp=Xd(1 + k1*r^2 + k2*r^4 + k3*r^6)

* Yp=Yd(1 + k1*r^2 + k2*r^4 + k3*r^6)

* 切向畸变矫正 装配误差 p1 p2 两个参数确定

* Xp=Xd + ( 2 * p1 * y +p2 * (r^2 +2 * x^2) )

* Yp=Yd + ( p1 * (r^2 + 2 * y^2) + 2 * p2 * x )

* r^2 = x^2+y^2 | Xw|

* | u| |fx 0 ux 0| | R T| | Yw|

* | v| = |0 fy uy 0| * | | * | Zw|=M*W

* |1| |0 0 1 0| | 0 0 0 1| | 1 |

* http://wiki.opencv.org.cn/index.php/Cv 相机标定和三维重建

* 像素坐标齐次表示(3*1) = 内参数矩阵 齐次表示 3*4 × 外参数矩阵齐次表示 4*4 × 物体世界坐标 齐次表示 4*1

* 内参数齐次 × 外参数齐次 整合 得到 投影矩阵 3*4 左右两个相机 投影矩阵 P1 P2

* Q为 视差转深度矩阵 disparity-to-depth mapping matrix

*

* http://blog.csdn.net/angle_cal/article/details/50800775

* Q= | 1 0 0 -C_x |

* | 0 1 0 -C_y |

* | 0 0 0 f |

* | 1 0 -1/T (c_x-c_x')/T |

*

* 以左相机光心为世界坐标系原点 左手坐标系Z 垂直向后指向 相机平面

* |x| | x-C_x | |X|

* |y| | y-C_y | |Y|

* Q * |d| = |f | = |Z|======> Z Z/W = - f*T/(d-c_x+c_x')

* |1| |(-d+c_x-c_x')/T | |W|

* Z = f * T / D f 焦距 量纲为像素点 T 左右相机基线长度 量纲和标定时 所给标定板尺寸 相同 D视差 量纲也为 像素点 分子分母约去,Z的量纲同 T

*

*

* CCD的尺寸是8mm X 6mm,帧画面的分辨率设置为640X480,那么毫米与像素点之间的转换关系就是80pixel/mm

* CCD传感器每个像素点的物理大小为dx*dy,相应地,就有 dx=dy=1/80

* 假设像素点的大小为k x l,其中 fx = f / k, fy = f / (l * sinA), A一般假设为 90°,是指摄像头坐标系的偏斜度(就是镜头坐标和CCD是否垂直)。

* 摄像头矩阵(内参)的目的是把图像的点从图像坐标转换成实际物理的三维坐标。因此其中的fx,y, cx, cy 都是使用类似上面的纲量。

* 同样,Q 中的变量 f,cx, cy 也应该是一样的。

函数名称:reprojectImageTo3D

函数原型:void reprojectImageTo3D(InputArray disparity,OutputArray _3dImage,InputArray Q, bool handleMissingValues=false, int ddepth=-1 )

函数作用:根据一组差异图像构建3D空间

参数说明:disparity 视差图像。可以是8位无符号,16位有符号或者32位有符号的浮点图像。

cvReprojectImageTo3D ()

{

for( y = 0; y < rows; y++ )

{

const float* sptr = (const float*)(src->data.ptr + src->step*y); // 视差矩阵指针

float* dptr0 = (float*)(dst->data.ptr + dst->step*y), *dptr = dptr0; // 三维坐标矩阵指针

// 每一行运算开始时,用 当前行号y 乘以Q阵第2列、再加上Q阵第4列,作为初始值

// 记 qq=[qx, qy, qz, qw]’

double qx = q[0][1]*y + q[0][3], qy = q[1][1]*y + q[1][3];

double qz = q[2][1]*y + q[2][3], qw = q[3][1]*y + q[3][3];

…

// 每算完一个像素的三维坐标,向量qq 累加一次q阵第1列

// 即:qq = qq + q(:,1)

for( x = 0; x < cols; x++, qx += q[0][0], qy += q[1][0], qz += q[2][0], qw += q[3][0] )

{

double d = sptr[x];

// 计算当前像素三维坐标

// 将向量qq 加上 Q阵第3列与当前像素视差d的乘积,用所得结果的第4元素除前三位元素即可

// [X,Y,Z,W]’ = qq + q(:,3) * d; iW = 1/W; X=X*iW; Y=Y*iW; Z=Z*iW;

double iW = 1./(qw + q[3][2]*d);

double X = (qx + q[0][2]*d)*iW;

double Y = (qy + q[1][2]*d)*iW;

double Z = (qz + q[2][2]*d)*iW;

if( fabs(d-minDisparity) <= FLT_EPSILON )

Z = bigZ; // 02713 const double bigZ = 10000.;

dptr[x*3] = (float)X;

dptr[x*3+1] = (float)Y;

dptr[x*3+2] = (float)Z;

}

}

*

************************************************** */

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include <vector>//容器

#include <string>//字符串

#include <algorithm>//算法

#include <iostream>//输入输出流

#include <iterator>//迭代器

#include <stdio.h>//标志io

#include <stdlib.h>//标志库

#include <ctype.h>//c标志函数库

using namespace cv;

using namespace std;

static int print_help()

{

cout <<

" Given a list of chessboard images, the number of corners (nx, ny)\n"

" on the chessboards, and a flag: useCalibrated for \n"

" calibrated (0) or\n"

" uncalibrated \n"

" (1: use cvStereoCalibrate(), 2: compute fundamental\n"

" matrix separately) stereo. \n"

" Calibrate the cameras and display the\n"

" rectified results along with the computed disparity images. \n" << endl;

cout << "Usage:\n ./stereo_calib -w=<board_width default=9> -h=<board_height default=6> <image list XML/YML file default=../data/stereo_calib.xml>\n" << endl;

return 0;

}

static void

StereoCalib(const vector<string>& imagelist, Size boardSize, float squareSize_, bool displayCorners = false, bool useCalibrated=true, bool showRectified=true)

{

if( imagelist.size() % 2 != 0 )//成对的图像

{

cout << "Error: the image list contains odd (non-even) number of elements\n";

return;

}

const int maxScale = 2;

const float squareSize = squareSize_; // Set this to your actual square size 我的 实际的正方格尺寸 2.43cm 原来作者的 1.0f

// ARRAY AND VECTOR STORAGE:

//创建图像坐标和世界坐标系坐标矩阵

vector<vector<Point2f> > imagePoints[2];//图像点(存储角点)

vector<vector<Point3f> > objectPoints;//物体三维坐标点

Size imageSize;

int i, j, k, nimages = (int)imagelist.size()/2;//左右两个相机图片

//确定左右视图矩阵的数量,比如10副图,左右矩阵分别为5个

imagePoints[0].resize(nimages);

imagePoints[1].resize(nimages);

vector<string> goodImageList;

// 文件列表 需要交替 "left01.jpg" "right01.jpg"...

for( i = j = 0; i < nimages; i++ )//5对图像

{

for( k = 0; k < 2; k++ )//左右两个相机图片 k=0,1

{

//逐个读取图片

const string& filename = imagelist[i*2+k];

Mat img_src,img;

img_src = imread(filename, 1);//图像数据

cvtColor(img_src, img, COLOR_BGR2GRAY);//转换到 灰度图

if(img.empty())//图像打不开

break;

if( imageSize == Size() )

imageSize = img.size();

else if( img.size() != imageSize )// 图片需要保持一样的大小

{

cout << "The image " << filename << " has the size different from the first image size. Skipping the pair\n";

break;

}

bool found = false;

//设置图像矩阵的引用(指针),此时指向左右视图的矩阵首地址

vector<Point2f>& corners = imagePoints[k][j];//指向 每个图片的角点容器的首地址

for( int scale = 1; scale <= maxScale; scale++ )

{

Mat timg;

//图像是8bit的灰度或彩色图像

if( scale == 1 )

timg = img;

else

//如果为多通道图像

resize(img, timg, Size(), scale, scale);//转换成 8bit的灰度或彩色图像

//参数需为 8bit的灰度或彩色图像

found = findChessboardCorners(timg, boardSize, corners,//得到棋盘内角点坐标 存入 imagePoints[k][j] 中

CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_NORMALIZE_IMAGE);

if( found )

{

//如果为多通道图像

if( scale > 1 )

{

Mat cornersMat(corners);

cornersMat *= 1./scale;

}

break;

}

}

//显示角点

if( displayCorners )

{

cout << filename << endl;

Mat cimg, cimg1;

cvtColor(img, cimg, COLOR_GRAY2BGR);//转换成灰度图

drawChessboardCorners(cimg, boardSize, corners, found);//显示

double sf = 640./MAX(img.rows, img.cols);//尺度因子

resize(cimg, cimg1, Size(), sf, sf);//变换到合适大小

imshow("corners", cimg1);

char c = (char)waitKey(500);//等待500ms

if( c == 27 || c == 'q' || c == 'Q' ) //Allow ESC (27) to quit ESC键可退出

exit(-1);

}

else

putchar('.');

if( !found )

break;

// 亚像素级优化

cornerSubPix(img, corners, Size(11,11), Size(-1,-1),

TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,

30, 0.01));

}

if( k == 2 )//上面的for循环结束后 k=2

{

goodImageList.push_back(imagelist[i*2]);

goodImageList.push_back(imagelist[i*2+1]);

j++;

}

}

cout << j << " pairs have been successfully detected.\n";

nimages = j;

if( nimages < 2 )

{

cout << "Error: too little pairs to run the calibration\n";

return;

}

imagePoints[0].resize(nimages);//左相机 角点位置

imagePoints[1].resize(nimages);//右相机 角点位置

// 角点实际 位置 按照 squareSize 得出

objectPoints.resize(nimages);

for( i = 0; i < nimages; i++ )//5对图

{

for( j = 0; j < boardSize.height; j++ )//每一行

for( k = 0; k < boardSize.width; k++ )//每一列

objectPoints[i].push_back(Point3f(k*squareSize, j*squareSize, 0));//直接转为float类型,坐标为行、列

}

cout << "Running stereo calibration ...\n";

//创建内参矩阵

Mat cameraMatrix[2], distCoeffs[2];//左右两个相机 的内参数矩阵和 畸变参数

cameraMatrix[0] = initCameraMatrix2D(objectPoints,imagePoints[0],imageSize,0);//初始化内参数矩阵

cameraMatrix[1] = initCameraMatrix2D(objectPoints,imagePoints[1],imageSize,0);

Mat R, T, E, F; //R 旋转矢量 T平移矢量 E本征矩阵 F基础矩阵

// 求解获取双标定的参数

double rms = stereoCalibrate(objectPoints, imagePoints[0], imagePoints[1],//真实点坐标 左右两个相机点坐标

cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

imageSize, R, T, E, F,

CALIB_FIX_ASPECT_RATIO +

CALIB_ZERO_TANGENT_DIST +

CALIB_USE_INTRINSIC_GUESS +

CALIB_SAME_FOCAL_LENGTH +

CALIB_RATIONAL_MODEL +

CALIB_FIX_K3 + CALIB_FIX_K4 + CALIB_FIX_K5,

TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 100, 1e-5) );

cout << "done with RMS error=" << rms << endl;

// CALIBRATION QUALITY CHECK

// because the output fundamental matrix implicitly

// includes all the output information,

// we can check the quality of calibration using the

// epipolar geometry constraint: m2^t*F*m1=0

//计算标定误差

double err = 0;

int npoints = 0;

vector<Vec3f> lines[2];//极线

for( i = 0; i < nimages; i++ )

{

int npt = (int)imagePoints[0][i].size();

Mat imgpt[2];

for( k = 0; k < 2; k++ )

{

imgpt[k] = Mat(imagePoints[k][i]);

undistortPoints(imgpt[k], imgpt[k], cameraMatrix[k], distCoeffs[k], Mat(), cameraMatrix[k]);//未去畸变

computeCorrespondEpilines(imgpt[k], k+1, F, lines[k]);

}

for( j = 0; j < npt; j++ )

{

double errij = fabs(imagePoints[0][i][j].x*lines[1][j][0] +

imagePoints[0][i][j].y*lines[1][j][1] + lines[1][j][2]) +

fabs(imagePoints[1][i][j].x*lines[0][j][0] +

imagePoints[1][i][j].y*lines[0][j][1] + lines[0][j][2]);

err += errij;

}

npoints += npt;

}

cout << "average epipolar err = " << err/npoints << endl;

// 内参数 save intrinsic parameters

FileStorage fs("intrinsics.yml", FileStorage::WRITE);

if( fs.isOpened() )

{

fs << "M1" << cameraMatrix[0] << "D1" << distCoeffs[0] <<

"M2" << cameraMatrix[1] << "D2" << distCoeffs[1];

fs.release();

}

else

cout << "Error: can not save the intrinsic parameters\n";

//外参数

// R--右相机相对左相机的旋转矩阵

// T--右相机相对左相机的平移矩阵

// R1,R2--左右相机校准变换(旋转)矩阵 3×3

// P1,P2--左右相机在校准后坐标系中的投影矩阵 3×4

// Q--视差-深度映射矩阵,我利用它来计算单个目标点的三维坐标

Mat R1, R2, P1, P2, Q;//由stereoRectify()求得

Rect validRoi[2];//图像校正之后,会对图像进行裁剪,这里的validROI就是指裁剪之后的区域

// 校准双目图像 摆正两幅图像

// https://en.wikipedia.org/wiki/Image_rectification

stereoRectify(cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

imageSize, R, T, R1, R2, P1, P2, Q,

CALIB_ZERO_DISPARITY, 1, imageSize, &validRoi[0], &validRoi[1]);

/*

* R T 左相机到右相机 的 旋转 平移矩阵 R 3*3 T 3*1 T中第一个Tx为 基线长度

立体校正的时候需要两幅图像共面并且行对准 以使得立体匹配更加的可靠

使得两幅图像共面的方法就是把两个摄像头的图像投影到一个公共成像面上,这样每幅图像从本图像平面投影到公共图像平面都需要一个旋转矩阵R

stereoRectify 这个函数计算的就是从图像平面投影都公共成像平面的旋转矩阵Rl,Rr。 Rl,Rr即为左右相机平面行对准的校正旋转矩阵。

左相机经过Rl旋转,右相机经过Rr旋转之后,两幅图像就已经共面并且行对准了。

其中Pl,Pr为两个相机的投影矩阵,其作用是将3D点的坐标转换到图像的2D点的坐标:P*[X Y Z 1]' =[x y w]

Q矩阵为重投影矩阵,即矩阵Q可以把2维平面(图像平面)上的点投影到3维空间的点:Q*[x y d 1] = [X Y Z W]。其中d为左右两幅图像的视差

*/

fs.open("extrinsics.yml", FileStorage::WRITE);

if( fs.isOpened() )

{

fs << "R" << R << "T" << T << "R1" << R1 << "R2" << R2 << "P1" << P1 << "P2" << P2 << "Q" << Q;

fs.release();

}

else

cout << "Error: can not save the extrinsic parameters\n";

// 辨认 左右结构的相机 或者 上下结构的相机

// OpenCV can handle left-right

// or up-down camera arrangements

bool isVerticalStereo = fabs(P2.at<double>(1, 3)) > fabs(P2.at<double>(0, 3));

// COMPUTE AND DISPLAY RECTIFICATION

if( !showRectified )

return;

Mat rmap[2][2]; //映射表

// IF BY CALIBRATED (BOUGUET'S METHOD)

if( useCalibrated )

{

// we already computed everything

}

// OR ELSE HARTLEY'S METHOD

else

// use intrinsic parameters of each camera, but

// compute the rectification transformation directly

// from the fundamental matrix

{

vector<Point2f> allimgpt[2];

for( k = 0; k < 2; k++ )

{

for( i = 0; i < nimages; i++ )

std::copy(imagePoints[k][i].begin(), imagePoints[k][i].end(), back_inserter(allimgpt[k]));

}

F = findFundamentalMat(Mat(allimgpt[0]), Mat(allimgpt[1]), FM_8POINT, 0, 0);

Mat H1, H2;

stereoRectifyUncalibrated(Mat(allimgpt[0]), Mat(allimgpt[1]), F, imageSize, H1, H2, 3);

R1 = cameraMatrix[0].inv()*H1*cameraMatrix[0];

R2 = cameraMatrix[1].inv()*H2*cameraMatrix[1];

P1 = cameraMatrix[0];

P2 = cameraMatrix[1];

}

/*

根据stereoRectify 计算出来的R 和 P 来计算图像的映射表 mapx,mapy

mapx,mapy这两个映射表接下来可以给remap()函数调用,来校正图像,使得两幅图像共面并且行对准

ininUndistortRectifyMap()的参数newCameraMatrix就是校正后的摄像机矩阵。在openCV里面,校正后的计算机矩阵Mrect是跟投影矩阵P一起返回的。

所以我们在这里传入投影矩阵P,此函数可以从投影矩阵P中读出校正后的摄像机矩阵

*/

//Precompute maps for cv::remap()

initUndistortRectifyMap(cameraMatrix[0], distCoeffs[0], R1, P1, imageSize, CV_16SC2, rmap[0][0], rmap[0][1]);

initUndistortRectifyMap(cameraMatrix[1], distCoeffs[1], R2, P2, imageSize, CV_16SC2, rmap[1][0], rmap[1][1]);

/*

把校正结果显示出来

把左右两幅图像显示到同一个画面上

这里只显示了最后一副图像的校正结果。并没有把所有的图像都显示出来

*/

Mat canvas;

double sf;

int w, h;

if( !isVerticalStereo )

{

sf = 600./MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width*sf);

h = cvRound(imageSize.height*sf);

canvas.create(h, w*2, CV_8UC3);

}

else

{

sf = 300./MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width*sf);

h = cvRound(imageSize.height*sf);

canvas.create(h*2, w, CV_8UC3);

}

for( i = 0; i < nimages; i++ )

{

for( k = 0; k < 2; k++ )

{

Mat img = imread(goodImageList[i*2+k], 0), rimg, cimg;

remap(img, rimg, rmap[k][0], rmap[k][1], INTER_LINEAR);//经过remap之后,左右相机的图像已经共面并且行对准了

cvtColor(rimg, cimg, COLOR_GRAY2BGR);

Mat canvasPart = !isVerticalStereo ? canvas(Rect(w*k, 0, w, h)) : canvas(Rect(0, h*k, w, h));//得到画布的一部分

resize(cimg, canvasPart, canvasPart.size(), 0, 0, INTER_AREA);

if( useCalibrated )

{

Rect vroi(cvRound(validRoi[k].x*sf), cvRound(validRoi[k].y*sf),

cvRound(validRoi[k].width*sf), cvRound(validRoi[k].height*sf)); //获得被截取的区域

rectangle(canvasPart, vroi, Scalar(0,0,255), 3, 8);////画上一个矩形

}

}

//画上对应的线条

if( !isVerticalStereo )

for( j = 0; j < canvas.rows; j += 16 )

line(canvas, Point(0, j), Point(canvas.cols, j), Scalar(0, 255, 0), 1, 8);//画平行线

else

for( j = 0; j < canvas.cols; j += 16 )

line(canvas, Point(j, 0), Point(j, canvas.rows), Scalar(0, 255, 0), 1, 8);

imshow("rectified", canvas);

char c = (char)waitKey();

if( c == 27 || c == 'q' || c == 'Q' )

break;

}

}

static bool readStringList( const string& filename, vector<string>& l )

{

l.resize(0);

FileStorage fs(filename, FileStorage::READ);

if( !fs.isOpened() )

return false;

FileNode n = fs.getFirstTopLevelNode();

if( n.type() != FileNode::SEQ )

return false;

FileNodeIterator it = n.begin(), it_end = n.end();

for( ; it != it_end; ++it )

l.push_back((string)*it);

return true;

}

int main(int argc, char** argv)

{

Size boardSize;

string imagelistfn;

bool showRectified;

float squareSize;// 格子大小

cv::CommandLineParser parser(argc, argv, "{w|9|}{h|6|}{s|1|}{nr||}{help||}{@input|../data/std/stereo_calib.xml|}");

if (parser.has("help"))

return print_help();

showRectified = !parser.has("nr");

imagelistfn = parser.get<string>("@input");

boardSize.width = parser.get<int>("w");

boardSize.height = parser.get<int>("h");

squareSize = parser.get<float>("s");//正方形棋盘格子 边长

if ( squareSize <= 0 )//标定板 格子尺寸参数错误

return fprintf( stderr, "Invalid board square width\n" ), -1;

if (!parser.check())

{

parser.printErrors();

return 1;

}

vector<string> imagelist;

bool ok = readStringList(imagelistfn, imagelist);

if(!ok || imagelist.empty())

{

cout << "can not open " << imagelistfn << " or the string list is empty" << endl;

return print_help();

}

StereoCalib(imagelist, boardSize, squareSize, true, true, showRectified);//

return 0;

}

intrinsics.yml

%YAML:1.0

M1: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 4.8485678458503560e+02, 0., 3.1984271341779277e+02, 0.,

4.7849342106773673e+02, 2.4258677342694295e+02, 0., 0., 1. ]

D1: !!opencv-matrix

rows: 1

cols: 14

dt: d

data: [ 4.7488030104738663e-02, -1.9113503447123523e-01, 0., 0., 0.,

0., 0., -1.2213153211085856e-01, 0., 0., 0., 0., 0., 0. ]

M2: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 4.8485678458503560e+02, 0., 3.1871078452903032e+02, 0.,

4.7849342106773673e+02, 2.2926181081291159e+02, 0., 0., 1. ]

D2: !!opencv-matrix

rows: 1

cols: 14

dt: d

data: [ 4.5512536376610728e-02, 4.2067428940605327e-02, 0., 0., 0.,

0., 0., 3.2197725828382462e-01, 0., 0., 0., 0., 0., 0. ]

extrinsics.yml

%YAML:1.0

R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 9.9994108826376249e-01, -9.8220327150479857e-03,

-4.6203544481922664e-03, 9.7318529043163553e-03,

9.9976922982512684e-01, -1.9151452527718670e-02,

4.8073944014561838e-03, 1.9105359672543194e-02,

9.9980591826156529e-01 ]

T: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [ -1.6275078772819168e+03, -2.3257053592704903e+01,

2.8278971161131913e+01 ]

R1: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 9.9974366364060896e-01, 4.1316356790615238e-03,

-2.2260651317250052e-02, -4.3430473505841278e-03,

9.9994584874335801e-01, -9.4571412507126733e-03,

2.2220372412794197e-02, 9.5513961042798783e-03,

9.9970747015429262e-01 ]

R2: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 9.9974703804411835e-01, 1.4286364304283433e-02,

-1.7371232454182674e-02, -1.4120596989111897e-02,

9.9985394315871845e-01, 9.6281405594897534e-03,

1.7506246390446973e-02, -9.3804128335326278e-03,

9.9980275014244158e-01 ]

P1: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [ 3.9018919929094244e+02, 0., 3.3265590286254883e+02, 0., 0.,

3.9018919929094244e+02, 2.3086411857604980e+02, 0., 0., 0., 1.,

0. ]

P2: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [ 3.9018919929094244e+02, 0., 3.3265590286254883e+02,

-6.3519667606988060e+05, 0., 3.9018919929094244e+02,

2.3086411857604980e+02, 0., 0., 0., 1., 0. ]

Q: !!opencv-matrix

rows: 4

cols: 4

dt: d

data: [ 1., 0., 0., -3.3265590286254883e+02, 0., 1., 0.,

-2.3086411857604980e+02, 0., 0., 0., 3.9018919929094244e+02, 0.,

0., 6.1428092115522364e-04, 0. ]

generate my_stereo.yaml

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 484.8567845850356

Camera.fy: 478.4934210677367

Camera.cx: 319.8427134177927

Camera.cy: 242.5867734269429

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 20.0

# stereo baseline times fx

Camera.bf: 78.9108236

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 0

# Close/Far threshold. Baseline times.

ThDepth: 50

#--------------------------------------------------------------------------------------------

# Stereo Rectification. Only if you need to pre-rectify the images.

# Camera.fx, .fy, etc must be the same as in LEFT.P

#--------------------------------------------------------------------------------------------

LEFT.height: 480

LEFT.width: 640

LEFT.D: !!opencv-matrix

rows: 1

cols: 14

dt: d

data: [ 4.7488030104738663e-02, -1.9113503447123523e-01, 0., 0., 0., 0., 0., -1.2213153211085856e-01, 0., 0., 0., 0., 0., 0. ]

LEFT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 4.8485678458503560e+02, 0., 3.1984271341779277e+02, 0., 4.7849342106773673e+02, 2.4258677342694295e+02, 0., 0., 1. ]

LEFT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 9.9974366364060896e-01, 4.1316356790615238e-03, -2.2260651317250052e-02, -4.3430473505841278e-03, 9.9994584874335801e-01, -9.4571412507126733e-03, 2.2220372412794197e-02, 9.5513961042798783e-03, 9.9970747015429262e-01 ]

LEFT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [ 3.9018919929094244e+02, 0., 3.3265590286254883e+02, 0., 0., 3.9018919929094244e+02, 2.3086411857604980e+02, 0., 0., 0., 1., 0. ]

RIGHT.height: 480

RIGHT.width: 640

RIGHT.D: !!opencv-matrix

rows: 1

cols: 14

dt: d

data: [ 4.5512536376610728e-02, 4.2067428940605327e-02, 0., 0., 0., 0., 0., 3.2197725828382462e-01, 0., 0., 0., 0., 0., 0. ]

RIGHT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 4.8485678458503560e+02, 0., 3.1871078452903032e+02, 0., 4.7849342106773673e+02, 2.2926181081291159e+02, 0., 0., 1. ]

RIGHT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 9.9974703804411835e-01, 1.4286364304283433e-02, -1.7371232454182674e-02, -1.4120596989111897e-02, 9.9985394315871845e-01, 9.6281405594897534e-03, 1.7506246390446973e-02, -9.3804128335326278e-03, 9.9980275014244158e-01 ]

RIGHT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [ 3.9018919929094244e+02, 0., 3.3265590286254883e+02,-6.3519667606988060e+05, 0., 3.9018919929094244e+02, 2.3086411857604980e+02, 0., 0., 0., 1., 0. ]

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1200

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize:2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

under Examples/Stereo creat my_stereo.cc

/*

*/

#include<iostream>

#include<algorithm>

#include<fstream>

#include<iomanip>

#include<chrono>//chrono是一个time library, 源于boost,现在已经是C++标准。

#include<opencv2/core/core.hpp>

#include<System.h>

using namespace std;

using namespace cv;

#include <boost/format.hpp> // 格式化字符串 for formating strings 处理图像文件格式

#include <boost/thread/thread.hpp>

static void print_help()

{

printf("\n orbslam2 stereo test : \n");//生成视差和点云图

printf("\n Usage: my_stereo [-v=<path_to_vocabulary>] [-s=<path_to_settings>]\n"

"\n [--d=<camera_id>] \n");

}

int main(int argc, char **argv)

{

//std::uint32_t timestamp;

double time=0.0, ttrack=0;

//vector<IMUData> imudatas;

std::string setting_filename = ""; //配置文件

std::string vocabulary_filepath = ""; //关键帧数据库 词典 重定位

int deviceid = 1; //相机设备id

cv::CommandLineParser parser(argc, argv,

"{help h||}{d|1|}{v|../../Vocabulary/ORBvoc.bin|}{s|my_stereo.yaml|}");

//=======打印帮助信息============

if(parser.has("help"))

{

print_help();

return 0;

}

if( parser.has("d") )

deviceid = parser.get<int>("d");//相机设备id

if( parser.has("s") )

setting_filename = parser.get<std::string>("s");//

if( parser.has("v") )

vocabulary_filepath = parser.get<std::string>("v");//

if (!parser.check()) {

parser.printErrors();

return 1;

}

//if(setting_filename.empty())//{

// Read rectification parameters

cv::FileStorage fsSettings(setting_filename, cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

cerr << "ERROR: Wrong path to setting file : " << setting_filename << endl;

return -1;

}

cv::Mat K_l, K_r, D_l, D_r, P_l, P_r, R_l, R_r;

fsSettings["LEFT.K"] >> K_l;//内参数

if(K_l.empty()) cout << "K_l missing "<<endl;

fsSettings["RIGHT.K"] >> K_r;//

if(K_r.empty()) cout << "K_r missing "<<endl;

fsSettings["LEFT.D"] >> D_l;// 畸变矫正

if(D_l.empty()) cout << "D_l missing "<<endl;

fsSettings["RIGHT.D"] >> D_r;

if(D_r.empty()) cout << "D_r missing "<<endl;

fsSettings["LEFT.P"] >> P_l;// P_l,P_r --左右相机在校准后坐标系中的投影矩阵 3×4

if(P_l.empty()) cout << "P_l missing "<<endl;

fsSettings["RIGHT.P"] >> P_r;

if(K_r.empty()) cout << "P_r missing "<<endl;

fsSettings["LEFT.R"] >> R_l;// R_l,R_r --左右相机校准变换(旋转)矩阵 3×3

if(R_l.empty()) cout << "R_l missing "<<endl;

fsSettings["RIGHT.R"] >> R_r;

if(R_r.empty()) cout << "R_r missing "<<endl;

int rows_l = fsSettings["LEFT.height"];

int cols_l = fsSettings["LEFT.width"];

int rows_r = fsSettings["RIGHT.height"];

int cols_r = fsSettings["RIGHT.width"];

if(K_l.empty() || K_r.empty() || P_l.empty() || P_r.empty() || R_l.empty() || R_r.empty() || D_l.empty() || D_r.empty() || rows_l==0 || rows_r==0 || cols_l==0 || cols_r==0)

{

cerr << "ERROR: Calibration parameters to rectify stereo are missing!" << endl;

return -1;

}

//}

//外参数

// Mat R, T, R1, P1, R2, P2;

//fs["R"] >> R;

//fs["T"] >> T;

//图像矫正摆正 映射计算

//stereoRectify( K_l, D_l, K_r, D_r, cv::Size(cols_l,rows_l), R, T, R1, R2, P1, P2, Q, CALIB_ZERO_DISPARITY, -1, cv::Size(cols_l,rows_l), &roi1, &roi2 );

// 获取矫正映射矩阵

cv::Mat M1l,M2l,M1r,M2r;

cv::initUndistortRectifyMap(K_l,D_l,R_l,P_l.rowRange(0,3).colRange(0,3),cv::Size(cols_l,rows_l),CV_32F,M1l,M2l);

cv::initUndistortRectifyMap(K_r,D_r,R_r,P_r.rowRange(0,3).colRange(0,3),cv::Size(cols_r,rows_r),CV_32F,M1r,M2r);

// Create SLAM system. It initializes all system threads and gets ready to process frames.

ORB_SLAM2::System SLAM(vocabulary_filepath, setting_filename, ORB_SLAM2::System::STEREO, true);

cout << endl << "-------" << endl;

cout << "Start processing sequence ..." << endl;

cv::VideoCapture CapAll(deviceid); //打开相机设备

if( !CapAll.isOpened() )

{

printf("打开摄像头失败\r\n");

printf("再试一次\r\n");

//sleep(1);//延时1秒

cv::VideoCapture CapAll(1); //打开相机设备

if( !CapAll.isOpened() ) {

printf("打开摄像头失败\r\n");

printf("再试一次..\r\n");

//sleep(1);//延时1秒

cv::VideoCapture CapAll(1); //打开相机设备

if( !CapAll.isOpened() ) {

printf("打开摄像头失败\r\n");

return -1;

}

}

}

//设置分辨率 1280*480 分成两张 640*480 × 2 左右相机

CapAll.set(CV_CAP_PROP_FRAME_WIDTH,1280);

CapAll.set(CV_CAP_PROP_FRAME_HEIGHT, 480);

cv::Mat src_img, imLeft, imRight, imLeftRect, imRightRect;

while(CapAll.read(src_img))

{

imLeft = src_img(cv::Range(0, 480), cv::Range(0, 640));

imRight = src_img(cv::Range(0, 480), cv::Range(640, 1280));

cv::remap(imLeft,imLeftRect,M1l,M2l,cv::INTER_LINEAR);

cv::remap(imRight,imRightRect,M1r,M2r,cv::INTER_LINEAR);

#ifdef COMPILEDWITHC11

std::chrono::steady_clock::time_point t1 = std::chrono::steady_clock::now();

#else

std::chrono::monotonic_clock::time_point t1 = std::chrono::monotonic_clock::now();

#endif

time += ttrack ;

SLAM.TrackStereo(imLeftRect, imRightRect, time);

#ifdef COMPILEDWITHC11

std::chrono::steady_clock::time_point t2 = std::chrono::steady_clock::now();

#else

std::chrono::monotonic_clock::time_point t2 = std::chrono::monotonic_clock::now();

#endif

ttrack= std::chrono::duration_cast<std::chrono::duration<double> >(t2 - t1).count();

// if(ttrack<T)

// usleep((T-ttrack)*1e6); //sleep

}

SLAM.Shutdown();

SLAM.SaveTrajectoryKITTI("myCameraTrajectory.txt");

CapAll.release();

cv::destroyAllWindows();

return 0;

}

gedit oRB_SLAM2/CMakeLists.txt

#cmake 版本限制

cmake_minimum_required(VERSION 2.8)

#工程名

project(ORB_SLAM2)

#编译模式 使用 IF(NOT ) ENDIF 放置重复设置

IF(NOT CMAKE_BUILD_TYPE)

SET(CMAKE_BUILD_TYPE Release)

ENDIF()

# 显示 编译模式信息

MESSAGE("Build type: " ${CMAKE_BUILD_TYPE})

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O3 -march=native ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O3 -march=native")

# 检查c++11或者 c++0x 编译支持 Check C++11 or C++0x support

include(CheckCXXCompilerFlag)

CHECK_CXX_COMPILER_FLAG("-std=c++11" COMPILER_SUPPORTS_CXX11)

CHECK_CXX_COMPILER_FLAG("-std=c++0x" COMPILER_SUPPORTS_CXX0X)

if(COMPILER_SUPPORTS_CXX11)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

add_definitions(-DCOMPILEDWITHC11)

message(STATUS "Using flag -std=c++11.")

elseif(COMPILER_SUPPORTS_CXX0X)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++0x")

add_definitions(-DCOMPILEDWITHC0X)

message(STATUS "Using flag -std=c++0x.")

else()

message(FATAL_ERROR "The compiler ${CMAKE_CXX_COMPILER} has no C++11 support. Please use a different C++ compiler.")

endif()

# 附加 模块cmakeList.txt

LIST(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules)

#opencv 模块 版本大于 2.4.3

find_package(OpenCV 3.0 QUIET)

if(NOT OpenCV_FOUND)

find_package(OpenCV 2.4.3 QUIET)

if(NOT OpenCV_FOUND)

message(FATAL_ERROR "OpenCV > 2.4.3 not found.")

endif()

endif()

# 矩阵 Eigen3

find_package(Eigen3 3.1.0 REQUIRED)

# 可视化gui Pangolin

find_package(Pangolin REQUIRED)

# 点云显示 adding for point cloud viewer and mapper

find_package( PCL 1.7 REQUIRED )

#包含库文件

include_directories(

${PROJECT_SOURCE_DIR}

${PROJECT_SOURCE_DIR}/include

${EIGEN3_INCLUDE_DIR}

${Pangolin_INCLUDE_DIRS}#

${PCL_INCLUDE_DIRS}#点云库

)

add_definitions( ${PCL_DEFINITIONS} )

link_directories( ${PCL_LIBRARY_DIRS} )

# 自建库生成路径

set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/lib)

# 创建共享库 SHARED 动态链接库

add_library(${PROJECT_NAME} SHARED

src/System.cc

src/Tracking.cc

src/LocalMapping.cc

src/LoopClosing.cc

src/ORBextractor.cc

src/ORBmatcher.cc

src/FrameDrawer.cc

src/Converter.cc

src/MapPoint.cc

src/KeyFrame.cc

src/Map.cc

src/MapDrawer.cc

src/Optimizer.cc

src/PnPsolver.cc

src/Frame.cc

src/KeyFrameDatabase.cc

src/Sim3Solver.cc

src/Initializer.cc

src/Viewer.cc

src/pointcloudmapping.cc

)

# 连接库文件

target_link_libraries(

${PROJECT_NAME}

${OpenCV_LIBS}

${EIGEN3_LIBS}

${Pangolin_LIBRARIES}

${PROJECT_SOURCE_DIR}/Thirdparty/DBoW2/lib/libDBoW2.so

${PROJECT_SOURCE_DIR}/Thirdparty/g2o/lib/libg2o.so

${PCL_LIBRARIES}

)

#可执行文件放入 /bin 目录

set(EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin)

# Build examples

# rgb-d 相机示例

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/Examples/RGB-D)

add_executable(rgbd_tum

Examples/RGB-D/rgbd_tum.cc)

target_link_libraries(rgbd_tum ${PROJECT_NAME})

# 双目相机示例

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/Examples/Stereo)

add_executable(stereo_kitti #KITTI 数据集

Examples/Stereo/stereo_kitti.cc)

target_link_libraries(stereo_kitti ${PROJECT_NAME})

add_executable(stereo_euroc # EuRoC数据集

Examples/Stereo/stereo_euroc.cc)

target_link_libraries(stereo_euroc ${PROJECT_NAME})

add_executable(my_stereo # stereo_on_line

Examples/Stereo/my_stereo.cc)

target_link_libraries(my_stereo ${PROJECT_NAME})

#单目相机示例

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/Examples/Monocular)

add_executable(mono_tum # TUM数据集

Examples/Monocular/mono_tum.cc)

target_link_libraries(mono_tum ${PROJECT_NAME})

add_executable(mono_kitti #KITTI 数据集

Examples/Monocular/mono_kitti.cc)

target_link_libraries(mono_kitti ${PROJECT_NAME})

add_executable(mono_euroc # EuRoC数据集

Examples/Monocular/mono_euroc.cc)

target_link_libraries(mono_euroc ${PROJECT_NAME})

## .txt >>> .bin 文件转换

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/tools)

add_executable(

bin_vocabulary

tools/bin_vocabulary.cc)

target_link_libraries(bin_vocabulary ${PROJECT_NAME})

~/ewenwan/learn/vSLAM/test/vSLAM/ch9project/oRB_SLAM2/Examples/Stereo$ ./my_stereo