A Multi-Label Classification Experiment with PaddlePaddle

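I have recently run a series of experiments with PaddlePaddle. Here I would like to share a simple multi-label classification experiment, in the hope that it is useful to you.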

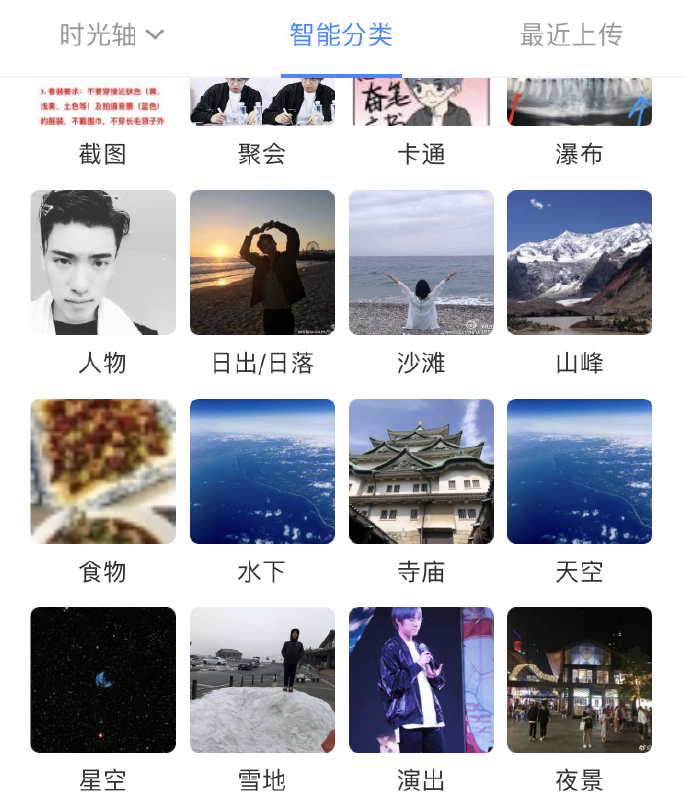

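Modeled on the smart classification features of cloud-drive and photo-album apps, this is a simplified smart album classification experiment. Unlike single-label image classification, a photo may belong to several target categories at once, so the classifier has to find every category the photo belongs to. This kind of image classification task is called multi-label classification.

If any image here infringes your rights, please contact me and I will remove it. Thank you!

Figure 1. Smart album classification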

Now for the experiment itself. First, import everything we need:

# coding=utf-8
# Import dependencies
import os
import math
import random
import matplotlib.pyplot as plt
# In a notebook, this magic command is needed so that matplotlib.pyplot figures are displayed
%matplotlib inline
import numpy as np
from PIL import Image
import cv2
from sklearn.metrics import hamming_loss, multilabel_confusion_matrix
from sklearn.preprocessing import binarize
from collections import OrderedDict
import paddle
from paddle.io import Dataset
import paddle.nn as nn
from paddle.nn import Conv2D, MaxPool2D, Linear, Dropout, BatchNorm, AdaptiveAvgPool2D, AvgPool2D
from paddle.nn.initializer import Uniform
import paddle.nn.functional as F
from paddle.optimizer.lr import CosineAnnealingDecay
from paddle.regularizer import L2Decay
from paddle import ParamAttr

一、数据准备

1.1 数据准备

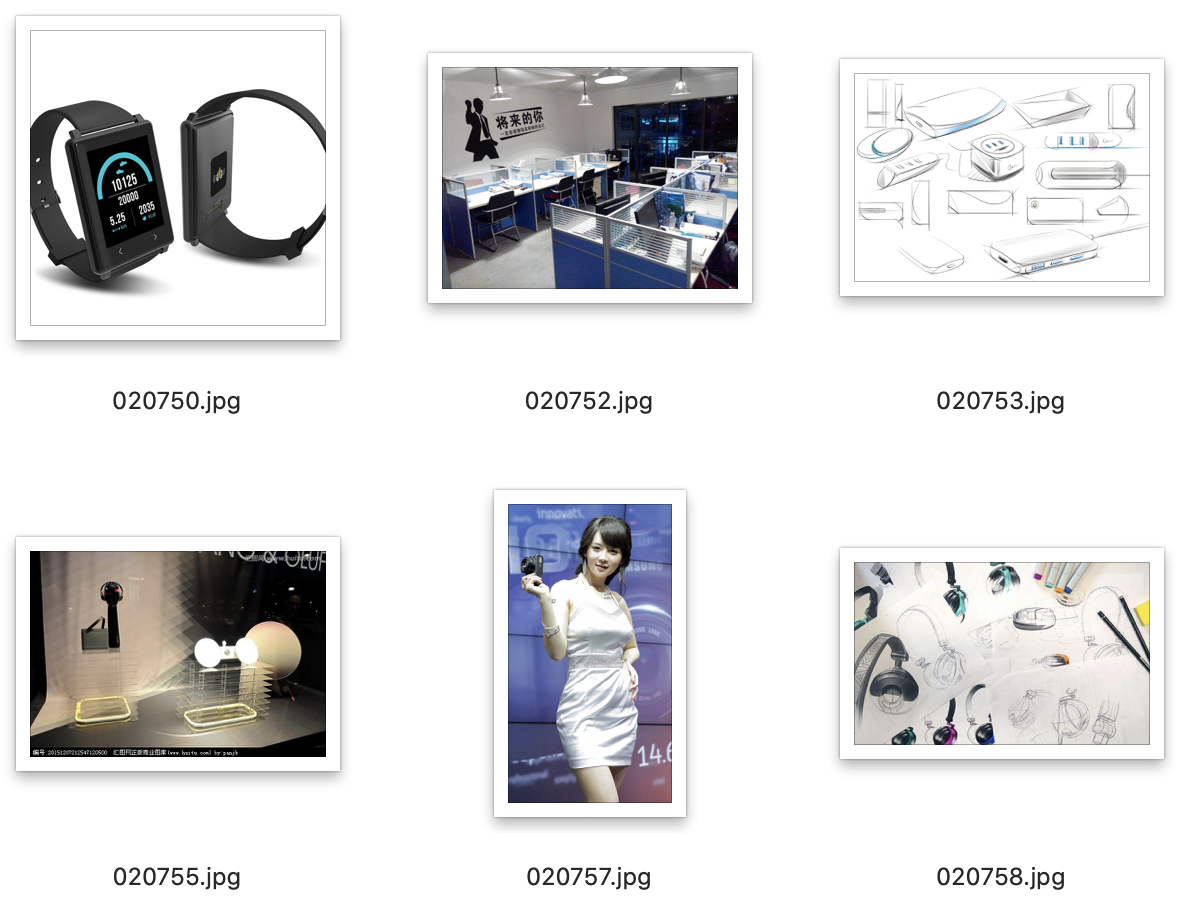

这里我在网上收集了包含20个常见的相片类别的图片数据并进行了标注,自己实验用,不公开,大家可以自己按照下面的格式整理自己的数据。其中,训练集共16706张图片,验证集共2168张图片,测试集共2117张图片。

图1 智能相册数据集示意图

20个类别包括:Vehicle, Sky, Food, Person, Building, Animal, Cartoons, Certificate, Electronic, Screenshot, BankCard, Mountain, Sea, Bill, Selfie, Night, Aircraft, Flower, Child, Ship

| Image file name | Image annotation |
|---|---|
| 028012.png | 1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0 |
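As a hedged illustration of this annotation format, the sketch below parses one annotation line into a file name and the names of its active classes. It assumes the tab-separated `file name<TAB>labels` layout that the dataset reader in this post splits on, and it assumes that the label columns follow the order of the category list above. `CLASS_NAMES` and `parse_annotation` are hypothetical names introduced only for this example.

```python
# Class names in the order listed above (assumed to match the label columns).
CLASS_NAMES = [
    "Vehicle", "Sky", "Food", "Person", "Building", "Animal", "Cartoons",
    "Certificate", "Electronic", "Screenshot", "BankCard", "Mountain", "Sea",
    "Bill", "Selfie", "Night", "Aircraft", "Flower", "Child", "Ship",
]


def parse_annotation(line, delimiter="\t"):
    """Split one annotation line into (file_name, multi_hot, active_class_names)."""
    file_name, label_str = line.strip().split(delimiter)
    multi_hot = [int(v) for v in label_str.split(",")]
    if len(multi_hot) != len(CLASS_NAMES):
        raise ValueError(
            "expected %d labels, got %d" % (len(CLASS_NAMES), len(multi_hot)))
    names = [name for name, v in zip(CLASS_NAMES, multi_hot) if v == 1]
    return file_name, multi_hot, names


# Rebuild the example row from the table: two active labels followed by 18 zeros.
example = "028012.png\t" + ",".join(["1", "1"] + ["0"] * 18)
file_name, multi_hot, names = parse_annotation(example)
print(file_name, names)
```

Under the assumed column order, the example row decodes to the first two categories. If your own label file uses a different order, only `CLASS_NAMES` needs to change.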

Use PIL to open one image from the dataset and visualize it to get a feel for the data.

img = Image.open('/home/aistudio/work/dataset/album/img/028012.png')
img = np.array(img)
plt.figure(figsize=(10, 10))
plt.imshow(img)

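1.2 Data Preprocessing

An image classification network places requirements on the format and size of its input, so images must be preprocessed before they are fed to the model, both for training and for prediction. In addition, to enlarge the effective training set, curb overfitting, and improve generalization, the experiment uses a few basic data augmentation methods.

The preprocessing in this experiment consists of the following steps:

- Image decoding: decode the raw image bytes into a NumPy array;

- Resize (validation and test only): scale the image so that its shorter side is 256 pixels;

- Random crop (training only): crop a random region of the image, by default covering between 8% and 100% of its area with an aspect ratio between 3/4 and 4/3, then resize the region to 224×224;

- Random flip (training only): flip the image horizontally with probability 0.5;

- Center crop (validation and test only): crop the central 224×224 region, so that every image the model reads has the same size;

- Normalization: scale pixel values to [0, 1], then subtract the per-channel mean and divide by the per-channel standard deviation. This brings the inputs to roughly zero mean and unit variance, which smooths the optimization landscape and makes training easier to converge;

- Channel transpose: decoded images are laid out as [H, W, C] (height, width, channels), while the network expects [C, H, W], so the axes must be rearranged, for example from [224, 224, 3] to [3, 224, 224].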
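To make the last two steps concrete, here is a minimal NumPy-only sketch of normalization followed by the HWC to CHW transpose. It uses the same per-channel mean and standard deviation values that appear in the code of this post; the function name `normalize_and_transpose` and the dummy gray image are assumptions made for illustration.

```python
import numpy as np

# Per-channel statistics used by the normalization code in this post (RGB order).
MEAN = np.array([0.485, 0.456, 0.406], dtype="float32").reshape(1, 1, 3)
STD = np.array([0.229, 0.224, 0.225], dtype="float32").reshape(1, 1, 3)


def normalize_and_transpose(img_hwc_uint8):
    """Scale to [0, 1], standardize per channel, then reorder HWC -> CHW."""
    scaled = img_hwc_uint8.astype("float32") / 255.0
    normalized = (scaled - MEAN) / STD   # broadcasts over height and width
    return normalized.transpose((2, 0, 1))


# A dummy mid-gray image stands in for a decoded photo.
dummy = np.full((224, 224, 3), 128, dtype="uint8")
out = normalize_and_transpose(dummy)
print(out.shape, out.dtype)
```

Because the mean and standard deviation are reshaped to (1, 1, 3), NumPy broadcasting applies them to every pixel of the matching channel before the axes are swapped.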

The code for each preprocessing step is given below.

# decode_image: decode raw image bytes into a NumPy array
def decode_image(img, to_rgb=True):
    data = np.frombuffer(img, dtype='uint8')
    # Flag 1 decodes as a 3-channel BGR image
    img = cv2.imdecode(data, 1)
    if to_rgb:
        assert img.shape[2] == 3, 'invalid shape of image[%s]' % (
            img.shape, )
        # Reverse the channel axis: BGR -> RGB
        img = img[:, :, ::-1]
    return img

# rand_crop_image: crop a random region and resize it to the target size
def rand_crop_image(img, size, scale=None, ratio=None, interpolation=-1):
    interpolation = interpolation if interpolation >= 0 else None
    if type(size) is int:
        size = (size, size)  # passed to cv2.resize as (w, h)
    scale = [0.08, 1.0] if scale is None else scale
    ratio = [3. / 4., 4. / 3.] if ratio is None else ratio
    # Sample a value within the ratio range to use as the aspect ratio
    aspect_ratio = math.sqrt(random.uniform(*ratio))
    w = 1. * aspect_ratio
    h = 1. / aspect_ratio
    img_h, img_w = img.shape[:2]
    # Cap the area fraction so that the crop box fits inside the image
    bound = min((float(img_w) / img_h) / (w**2),
                (float(img_h) / img_w) / (h**2))
    scale_max = min(scale[1], bound)
    scale_min = min(scale[0], bound)
    target_area = img_w * img_h * random.uniform(scale_min, scale_max)
    target_size = math.sqrt(target_area)
    # Width and height of the crop box
    w = int(target_size * w)
    h = int(target_size * h)
    # Sample the top-left corner of the crop box
    i = random.randint(0, img_w - w)
    j = random.randint(0, img_h - h)
    # Crop that region as the new image
    img = img[j:j + h, i:i + w, :]
    # Resize the cropped image to the requested size
    if interpolation is None:
        return cv2.resize(img, size)
    else:
        return cv2.resize(img, size, interpolation=interpolation)

# rand_flip_image: randomly flip an image; flip_code selects the flip type
# flip_code=1: horizontal flip; flip_code=0: vertical flip; flip_code=-1: both
def rand_flip_image(img, flip_code=1):
    assert flip_code in [-1, 0, 1
                         ], "flip_code should be a value in [-1, 0, 1]"
    # Flip with OpenCV with probability 0.5
    if random.randint(0, 1) == 1:
        return cv2.flip(img, flip_code)
    else:
        return img

# resize_image: resize an image, either by its shorter side or to a fixed size
def resize_image(img, size=None, resize_short=None, interpolation=-1):
    interpolation = interpolation if interpolation >= 0 else None
    if resize_short is not None and resize_short > 0:
        w = None
        h = None
    elif size is not None:
        resize_short = None
        w = size if type(size) is int else size[0]
        h = size if type(size) is int else size[1]
    else:
        raise ValueError("invalid params for resize_image: "
                         "both 'size' and 'resize_short' are None")
    img_h, img_w = img.shape[:2]
    if resize_short is not None:
        # Keep the aspect ratio and scale the shorter side to resize_short
        percent = float(resize_short) / min(img_w, img_h)
        w = int(round(img_w * percent))
        h = int(round(img_h * percent))
    if interpolation is None:
        return cv2.resize(img, (w, h))
    else:
        return cv2.resize(img, (w, h), interpolation=interpolation)

# crop_image: crop the central region of an image
def crop_image(img, size):
    if type(size) is int:
        size = (size, size)
    w, h = size  # size is unpacked as (w, h)
    img_h, img_w = img.shape[:2]
    w_start = (img_w - w) // 2
    h_start = (img_h - h) // 2
    w_end = w_start + w
    h_end = h_start + h
    return img[h_start:h_end, w_start:w_end, :]

# normalize_image: normalize an image
def normalize_image(img, scale=None, mean=None, std=None, order=''):
    if isinstance(scale, str):
        scale = eval(scale)
    scale = np.float32(scale if scale is not None else 1.0 / 255.0)
    mean = mean if mean is not None else [0.485, 0.456, 0.406]
    std = std if std is not None else [0.229, 0.224, 0.225]
    shape = (3, 1, 1) if order == 'chw' else (1, 1, 3)
    mean = np.array(mean).reshape(shape).astype('float32')
    std = np.array(std).reshape(shape).astype('float32')
    if isinstance(img, Image.Image):
        img = np.array(img)
    assert isinstance(img, np.ndarray), "invalid input 'img' in NormalizeImage"
    # Scale to [0, 1], then standardize per channel
    return (img.astype('float32') * scale - mean) / std

# to_CHW_image: convert an image from 'hwc' layout to 'chw' layout
def to_CHW_image(img):
    if isinstance(img, Image.Image):
        img = np.array(img)
    # Move the channel axis to the front
    return img.transpose((2, 0, 1))

# Full preprocessing pipeline
def transform(data, mode='train'):
    # Decode the image
    data = decode_image(data)
    if mode == 'train':
        # Random crop
        data = rand_crop_image(data, 224)
        # Random flip
        data = rand_flip_image(data)
    else:
        # Resize the shorter side to 256
        data = resize_image(data, resize_short=256)
        # Center crop
        data = crop_image(data, size=224)
    # Normalization
    data = normalize_image(data, scale=1./255., mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    # Channel transpose
    data = to_CHW_image(data)
    return data
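For the validation and test branch, the geometry of "resize the shorter side to 256, then center-crop 224" can be worked out without touching any pixels. The sketch below repeats the arithmetic used by the resize and crop functions in this post; `eval_geometry` and the 640×480 example size are assumptions chosen only to illustrate the calculation.

```python
def eval_geometry(img_w, img_h, resize_short=256, crop=224):
    """Return the resized (w, h) and the top-left corner of the center crop."""
    # Same scaling rule as the resize step: keep the aspect ratio and
    # bring the shorter side to resize_short.
    percent = float(resize_short) / min(img_w, img_h)
    w = int(round(img_w * percent))
    h = int(round(img_h * percent))
    # Same centering rule as the crop step.
    w_start = (w - crop) // 2
    h_start = (h - crop) // 2
    return (w, h), (w_start, h_start)


# A 640x480 (width x height) photo is resized to 341x256,
# and the 224x224 crop starts at x=58, y=16.
print(eval_geometry(640, 480))
```

Whatever the input size, the model always receives a 224×224 image, and for non-square photos the content outside the central window is discarded.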

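1.3 Batched Data Loading

The code above only shows how to read and preprocess a single image. In real training and evaluation, data is usually read and preprocessed in batches.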

# Randomly shuffle the order of the data
def shuffle_lines(full_lines, seed=None):
    if seed is not None:
        np.random.RandomState(seed).shuffle(full_lines)
    else:
        np.random.shuffle(full_lines)
    return full_lines

# Read the file list; shuffle it if this is the training set
def get_file_list(file_list, mode='train'):
    with open(file_list) as flist:
        full_lines = [line.strip() for line in flist]
    if mode == "train":
        full_lines = shuffle_lines(full_lines)
    return full_lines

# Define the dataset reader
class AlbumDataset(Dataset):
    def __init__(self, data_dir, file_list, mode='train'):
        self.mode = mode
        self.full_lines = get_file_list(file_list, mode=self.mode)
        self.delimiter = '\t'
        self.num_samples = len(self.full_lines)
        self.data_dir = data_dir

    def __getitem__(self, idx):
        try:
            line = self.full_lines[idx]
            img_path, label_str = line.split(self.delimiter)
            img_path = os.path.join(self.data_dir, img_path)
            with open(img_path, 'rb') as f:
                img = f.read()
            labels = label_str.split(',')
            labels = [int(i) for i in labels]
            transformed_img = transform(img, self.mode)
            return (transformed_img, np.array(labels).astype("float32"))
        except Exception as e:
            # If a sample cannot be read or parsed, fall back to a random sample.
            # random.randint includes both ends, so the upper bound is len(self) - 1.
            return self.__getitem__(random.randint(0, len(self) - 1))

    def __len__(self):
        return self.num_samples

2. Model Definition

ResNet50_vd is used here for multi-label classification.

class ConvBNLayer(nn.Layer):
    def __init__(self,
                 num_channels,
                 num_filters,
                 filter_size,
                 stride=1,
                 groups=1,
                 is_vd_mode=False,
                 act=None,
                 lr_mult=1.0):
        super(ConvBNLayer, self).__init__()
        self.is_vd_mode = is_vd_mode
        self._pool2d_avg = AvgPool2D(kernel_size=2, stride=2, padding=0, ceil_mode=True)
        self._conv = Conv2D(
            in_channels=num_channels,
            out_channels=num_filters,
            kernel_size=filter_size,
            stride=stride,
            padding=(filter_size - 1) // 2,
            groups=groups,
            weight_attr=ParamAttr(learning_rate=lr_mult),
            bias_attr=False)
        self._batch_norm = BatchNorm(
            num_filters,
            act=act,
            param_attr=ParamAttr(learning_rate=lr_mult),
            bias_attr=ParamAttr(learning_rate=lr_mult))

    def forward(self, inputs):
        if self.is_vd_mode:
            inputs = self._pool2d_avg(inputs)
        y = self._conv(inputs)
        y = self._batch_norm(y)
        return y

class BottleneckBlock(nn.Layer):
    def __init__(self,
                 num_channels,
                 num_filters,
                 stride,
                 shortcut=True,
                 if_first=False,
                 lr_mult=1.0):
        super(BottleneckBlock, self).__init__()
        self.conv0 = ConvBNLayer(
            num_channels=num_channels,
            num_filters=num_filters,
            filter_size=1,
            act='relu',
            lr_mult=lr_mult)
        self.conv1 = ConvBNLayer(
            num_channels=num_filters,
            num_filters=num_filters,
            filter_size=3,
            stride=stride,
            act='relu',
            lr_mult=lr_mult)
        self.conv2 = ConvBNLayer(
            num_channels=num_filters,
            num_filters=num_filters * 4,
            filter_size=1,
            act=None,
            lr_mult=lr_mult)
        if not shortcut:
            self.short = ConvBNLayer(
                num_channels=num_channels,
                num_filters=num_filters * 4,
                filter_size=1,
                stride=1,
                is_vd_mode=False if if_first else True,
                lr_mult=lr_mult)
        self.shortcut = shortcut

    def forward(self, inputs):
        y = self.conv0(inputs)
        conv1 = self.conv1(y)
        conv2 = self.conv2(conv1)
        if self.shortcut:
            short = inputs
        else:
            short = self.short(inputs)
        y = paddle.add(x=short, y=conv2)
        y = F.relu(y)
        return y

class BasicBlock(nn.Layer):
    def __init__(self,
                 num_channels,
                 num_filters,
                 stride,
                 shortcut=True,
                 if_first=False,
                 lr_mult=1.0):
        super(BasicBlock, self).__init__()
        self.stride = stride
        self.conv0 = ConvBNLayer(
            num_channels=num_channels,
            num_filters=num_filters,
            filter_size=3,
            stride=stride,
            act='relu',
            lr_mult=lr_mult)
        self.conv1 = ConvBNLayer(
            num_channels=num_filters,
            num_filters=num_filters,
            filter_size=3,
            act=None,
            lr_mult=lr_mult)
        if not shortcut:
            self.short = ConvBNLayer(
                num_channels=num_channels,
                num_filters=num_filters,
                filter_size=1,
                stride=1,
                is_vd_mode=False if if_first else True,
                lr_mult=lr_mult)
        self.shortcut = shortcut

    def forward(self, inputs):
        y = self.conv0(inputs)
        conv1 = self.conv1(y)
        if self.shortcut:
            short = inputs
        else:
            short = self.short(inputs)
        y = paddle.add(x=short, y=conv1)
        y = F.relu(y)
        return y

class ResNet_vd(nn.Layer):
    def __init__(self,
                 class_dim=20,
                 lr_mult_list=[1.0, 1.0, 1.0, 1.0, 1.0]):
        super(ResNet_vd, self).__init__()
        self.lr_mult_list = lr_mult_list
        depth = [3, 4, 6, 3]
        num_channels = [64, 256, 512, 1024]
        num_filters = [64, 128, 256, 512]
        self.conv1_1 = ConvBNLayer(
            num_channels=3,
            num_filters=32,
            filter_size=3,
            stride=2,
            act='relu',
            lr_mult=self.lr_mult_list[0])
        self.conv1_2 = ConvBNLayer(
            num_channels=32,
            num_filters=32,
            filter_size=3,
            stride=1,
            act='relu',
            lr_mult=self.lr_mult_list[0])
        self.conv1_3 = ConvBNLayer(
            num_channels=32,
            num_filters=64,
            filter_size=3,
            stride=1,
            act='relu',
            lr_mult=self.lr_mult_list[0])
        self.pool2d_max = MaxPool2D(kernel_size=3, stride=2, padding=1)
        self.block_list = []
        for block in range(len(depth)):
            shortcut = False
            for i in range(depth[block]):
                bottleneck_block = self.add_sublayer(
                    'bb_%d_%d' % (block, i),
                    BottleneckBlock(
                        num_channels=num_channels[block]
                        if i == 0 else num_filters[block] * 4,
                        num_filters=num_filters[block],
                        stride=2 if i == 0 and block != 0 else 1,
                        shortcut=shortcut,
                        if_first=block == i == 0,
                        lr_mult=self.lr_mult_list[block + 1]))
                self.block_list.append(bottleneck_block)
                shortcut = True
        self.pool2d_avg = AdaptiveAvgPool2D(1)
        self.pool2d_avg_channels = num_channels[-1] * 2
        stdv = 1.0 / math.sqrt(self.pool2d_avg_channels * 1.0)
        self.out = Linear(
            self.pool2d_avg_channels,
            class_dim,
            weight_attr=ParamAttr(initializer=Uniform(-stdv, stdv)),
            bias_attr=ParamAttr())

    def forward(self, inputs):
        y = self.conv1_1(inputs)
        y = self.conv1_2(y)
        y = self.conv1_3(y)
        y = self.pool2d_max(y)
        for block in self.block_list:
            y = block(y)
        y = self.pool2d_avg(y)
        y = paddle.reshape(y, shape=[-1, self.pool2d_avg_channels])
        y = self.out(y)
        return y

model = ResNet_vd()
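To see why the final linear layer takes 2048 inputs, it can help to trace the spatial size and channel count through the network for a 224×224 input. The sketch below is plain arithmetic based on the kernel sizes, strides, and padding in the model code above; `conv_out` is a hypothetical helper, and the trace follows only the main branch of each stage.

```python
def conv_out(n, kernel, stride, padding):
    """Output size of a convolution or pooling layer along one spatial axis."""
    return (n + 2 * padding - kernel) // stride + 1


size = 224
size = conv_out(size, 3, 2, 1)  # conv1_1, stride 2
size = conv_out(size, 3, 1, 1)  # conv1_2
size = conv_out(size, 3, 1, 1)  # conv1_3
size = conv_out(size, 3, 2, 1)  # max pooling, stride 2

num_filters = [64, 128, 256, 512]
sizes, channels = [], []
for stage, filters in enumerate(num_filters):
    # Only the first block of stages 1..3 uses stride 2 in its 3x3 convolution.
    stride = 1 if stage == 0 else 2
    size = conv_out(size, 3, stride, 1)
    sizes.append(size)
    channels.append(filters * 4)  # bottleneck blocks expand channels by 4

print(sizes, channels)
```

After the last stage the feature map is 7×7 with 2048 channels; adaptive average pooling reduces it to a 2048-dimensional vector, which the linear layer maps to the 20 class logits.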

3. Loss Function

class Loss(object):
    def __init__(self, class_dim=1000, epsilon=None):
        assert class_dim > 1, "class_dim=%d is not larger than 1" % (class_dim)
        self._class_dim = class_dim
        if epsilon is not None and epsilon >= 0.0 and epsilon <= 1.0:
            self._epsilon = epsilon
            self._label_smoothing = True
        else:
            self._epsilon = None
            self._label_smoothing = False

    def _labelsmoothing(self, target):
        if target.shape[-1] != self._class_dim:
            one_hot_target = F.one_hot(target, self._class_dim)
        else:
            one_hot_target = target
        soft_target = F.label_smooth(one_hot_target, epsilon=self._epsilon)
        soft_target = paddle.reshape(soft_target, shape=[-1, self._class_dim])
        return soft_target

    def _binary_crossentropy(self, input, target):
        # Optionally smooth the labels, then apply sigmoid cross entropy per label
        if self._label_smoothing:
            target = self._labelsmoothing(target)
        cost = F.binary_cross_entropy_with_logits(logit=input, label=target)
        avg_cost = paddle.mean(cost)
        return avg_cost

    def __call__(self, input, target):
        pass

class MultiLabelLoss(Loss):
    def __init__(self, class_dim=20, epsilon=None):
        super(MultiLabelLoss, self).__init__(class_dim, epsilon)

    def __call__(self, input, target):
        cost = self._binary_crossentropy(input, target)
        return cost

multi_label_loss = MultiLabelLoss(epsilon=0.1)
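As a rough, framework-free illustration of what this loss computes, the NumPy sketch below applies label smoothing to multi-hot targets and then evaluates sigmoid cross entropy independently for each label. It assumes the smoothing rule `(1 - epsilon) * y + epsilon / num_classes` with a uniform prior and a mean over all elements; `smooth_labels` and `bce_with_logits` are hypothetical names, not Paddle functions.

```python
import numpy as np


def smooth_labels(targets, epsilon):
    """Mix the multi-hot targets with a uniform prior over the classes."""
    num_classes = targets.shape[-1]
    return (1.0 - epsilon) * targets + epsilon / num_classes


def bce_with_logits(logits, targets):
    """Mean binary cross entropy on logits, in a numerically stable form."""
    # Equivalent to -[t * log(sigmoid(x)) + (1 - t) * log(1 - sigmoid(x))].
    per_element = (np.maximum(logits, 0) - logits * targets
                   + np.log1p(np.exp(-np.abs(logits))))
    return per_element.mean()


targets = np.zeros((2, 20))
targets[0, :2] = 1.0               # first sample carries two labels
soft = smooth_labels(targets, 0.1)
logits = np.zeros((2, 20))         # an untrained model that outputs 0 everywhere
loss = bce_with_logits(logits, soft)
print(soft[0, 0], soft[0, 2], loss)
```

With `epsilon=0.1` and 20 classes, a positive label becomes 0.905 and a negative label 0.005. Each label is scored by its own sigmoid, which is what lets one image carry several labels at once.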

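4. Model Training

4.1 Training Configuration

(1) Load pretrained parameters for fine-tuning.

The pretrained model can be downloaded with the following command.

!wget https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/ResNet50_vd_pretrained.pdparams

# Alternatively, use the distilled (ssld) model provided by Paddle

!wget https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/ResNet50_vd_ssld_pretrained.pdparams

Fine-tune the model starting from the pretrained parameters.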

# Initialize the network from an existing pretrained model
def init_model(net, pretrained_model):
    if not (os.path.isdir(pretrained_model) or os.path.exists(pretrained_model + '.pdparams')):
        raise ValueError("Model pretrain path {} does not "
                         "exist.".format(pretrained_model))
    param_state_dict = paddle.load(pretrained_model + ".pdparams")
    net.set_dict(param_state_dict)
    return

# Initialize the model with the pretrained weights
init_model(model, 'ResNet50_vd_pretrained')
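One detail worth keeping in mind when fine-tuning: the pretrained checkpoint comes from a 1000-class ImageNet classifier, while the model here ends in a 20-way linear layer, so the final layer's parameters cannot be reused. How the framework reacts to such a shape mismatch may depend on its version, so the sketch below shows one framework-independent way to reason about it: keep only the entries whose names and shapes match. The parameter names and the `filter_matching` helper are hypothetical, and plain NumPy arrays stand in for real tensors.

```python
import numpy as np


def filter_matching(pretrained, target_shapes):
    """Keep pretrained entries whose name and shape match the target model."""
    kept, skipped = {}, []
    for name, value in pretrained.items():
        if name in target_shapes and tuple(value.shape) == tuple(target_shapes[name]):
            kept[name] = value
        else:
            skipped.append(name)
    return kept, skipped


# Toy stand-ins: a backbone weight plus a 1000-class head (hypothetical names).
pretrained = {
    "conv.weight": np.zeros((32, 3, 3, 3)),
    "out.weight": np.zeros((2048, 1000)),
    "out.bias": np.zeros((1000,)),
}
# Shapes expected by a 20-class model.
target_shapes = {
    "conv.weight": (32, 3, 3, 3),
    "out.weight": (2048, 20),
    "out.bias": (20,),
}

kept, skipped = filter_matching(pretrained, target_shapes)
print(sorted(kept), skipped)
```

In this toy example the backbone weight is kept and the two head parameters are skipped, so the 20-way layer keeps its fresh initialization and is learned during fine-tuning.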

(2) Load the training and validation data

batch_size = 64
DATADIR = 'album/img'
TRAIN_FILE_LIST = 'album/onehot_train.txt'
VAL_FILE_LIST = 'album/onehot_valid.txt'

# Create the dataset readers
train_dataset = AlbumDataset(DATADIR, TRAIN_FILE_LIST, mode='train')
val_dataset = AlbumDataset(DATADIR, VAL_FILE_LIST, mode='valid')

# Assemble batches
train_loader = paddle.io.DataLoader(train_dataset, batch_size=batch_size, num_workers=0, drop_last=True)
valid_loader = paddle.io.DataLoader(val_dataset, batch_size=batch_size, num_workers=0)

(3) Define the optimizer

# Total number of training epochs
epoch_num = 10
# Total number of training samples
total_images = len(train_dataset)
# Number of batches per epoch
step_each_epoch = total_images // batch_size
# Initial learning rate
lr = 0.07
# Adjust the learning rate with cosine annealing, starting from lr (0.07)
learning_rate = CosineAnnealingDecay(lr, step_each_epoch * epoch_num)
# Use the Momentum optimizer
optimizer = paddle.optimizer.Momentum(
    learning_rate=learning_rate,
    momentum=0.9,
    parameters=model.parameters(),
    weight_decay=L2Decay(0.000070))
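To get a feel for the schedule, the sketch below evaluates the standard closed-form cosine annealing curve with the numbers from this post (16,706 training images, batch size 64, 10 epochs, initial rate 0.07). It assumes a minimum learning rate of 0 and one scheduler step per mini-batch; `cosine_lr` is a hypothetical stand-in written for illustration, not the Paddle scheduler itself.

```python
import math


def cosine_lr(step, base_lr, t_max, eta_min=0.0):
    """Closed-form cosine annealing from base_lr down to eta_min over t_max steps."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max))


total_images, batch_size, epoch_num = 16706, 64, 10
step_each_epoch = total_images // batch_size   # 261 batches per epoch
t_max = step_each_epoch * epoch_num            # 2610 steps in total

for step in (0, t_max // 2, t_max):
    print(step, cosine_lr(step, 0.07, t_max))
```

The rate starts at 0.07, passes through 0.035 halfway through training, and reaches 0 at the final step. The 261 batches per epoch also agree with the training log, where `batch_id` runs up to 260.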

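(4) Define the evaluation metrics

For multi-label classification, the model can be evaluated with the Hamming distance (Hamming loss) and a label-wise accuracy, that is, the fraction of individual label decisions that are correct.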

def accuracy_score(output, target):
    # multilabel_confusion_matrix expects (y_true, y_pred) and returns
    # one 2x2 matrix [[tn, fp], [fn, tp]] per label
    mcm = multilabel_confusion_matrix(target, output)
    tns = mcm[:, 0, 0]
    fns = mcm[:, 1, 0]
    tps = mcm[:, 1, 1]
    fps = mcm[:, 0, 1]
    accuracy = (sum(tps) + sum(tns)) / (sum(tps) + sum(tns) + sum(fns) + sum(fps))
    return accuracy

def create_metric(out, label):
    fetchs = OrderedDict()
    metric_names = set()
    # Turn logits into per-label probabilities, then threshold at 0.5
    out = F.sigmoid(out)
    preds = binarize(out.numpy(), threshold=0.5)
    targets = label.numpy()
    ham_dist = paddle.to_tensor(hamming_loss(preds, targets))
    accuracy = paddle.to_tensor(accuracy_score(preds, targets))
    ham_dist_name = "hamming_distance"
    accuracy_name = "multilabel_accuracy"
    metric_names.add(ham_dist_name)
    metric_names.add(accuracy_name)
    fetchs[accuracy_name] = accuracy
    fetchs[ham_dist_name] = ham_dist
    return fetchs
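The two metrics are closely related: the accuracy defined above counts correct label decisions (TP + TN) over all decisions, while the Hamming loss counts the wrong ones, so the two always sum to 1. The NumPy sketch below shows this on a tiny hand-made example; `label_wise_metrics` and the toy arrays are assumptions made for illustration.

```python
import numpy as np


def label_wise_metrics(preds, targets):
    """Return (hamming_loss, label_wise_accuracy) for binary multi-label arrays."""
    preds = np.asarray(preds)
    targets = np.asarray(targets)
    # Fraction of individual label decisions that are wrong.
    hamming = float((preds != targets).mean())
    return hamming, 1.0 - hamming


# Two samples, four labels each; two of the eight decisions are wrong.
preds = np.array([[1, 0, 1, 0],
                  [0, 1, 0, 0]])
targets = np.array([[1, 0, 0, 0],
                    [0, 1, 0, 1]])
hamming, accuracy = label_wise_metrics(preds, targets)
print(hamming, accuracy)
```

The same relationship is visible in the training log of this post, for example 0.03928572 + 0.9607143 for the first epoch. Because most of the 20 labels are negative for any given photo, this accuracy is high even for a weak model, so it is best read together with the loss.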

4.2 Training the Model

# Define the training procedure
# Run on the GPU
use_gpu = True
paddle.set_device('gpu:0') if use_gpu else paddle.set_device('cpu')
print('start training ... ')
# Switch the model to training mode
model.train()
# Train the model
for epoch in range(epoch_num):
    for batch_id, data in enumerate(train_loader()):
        x_data, y_data = data
        img = paddle.to_tensor(x_data)
        label = paddle.to_tensor(y_data.numpy().astype('float32').reshape(-1, 20))
        # Forward pass to get the predictions
        logits = model(img)
        # Compute the loss
        loss = multi_label_loss(logits, label)
        if batch_id % 20 == 0:
            print("epoch: {}, batch_id: {}, loss is: {}".format(epoch, batch_id, loss.numpy()))
        # Backpropagate, update the weights, and clear the gradients
        loss.backward()
        optimizer.step()
        optimizer.clear_grad()
        # Current learning rate, then advance the schedule by one step
        lr_value = optimizer.get_lr()
        learning_rate.step()
    # Evaluate the model after each epoch
    # Switch the model to evaluation mode
    model.eval()
    accuracies = []
    losses = []
    for batch_id, data in enumerate(valid_loader()):
        x_data, y_data = data
        img = paddle.to_tensor(x_data)
        label = paddle.to_tensor(y_data.numpy().astype('float32').reshape(-1, 20))
        # Forward pass to get the predictions
        logits = model(img)
        # Compute the loss
        loss = multi_label_loss(logits, label)
        metric = create_metric(logits, label)
        losses.append(loss.numpy())
    # Note: loss and metric here hold the values of the last validation batch only
    print("[validation] loss: {} hamming_distance: {} multilabel_accuracy: {}".format(
        loss.numpy(), metric['hamming_distance'].numpy(), metric['multilabel_accuracy'].numpy()))
    # After evaluation, switch back to training mode and continue
    model.train()

start training ...

epoch: 0, batch_id: 0, loss is: [0.7192106]

epoch: 0, batch_id: 20, loss is: [0.24829534]

epoch: 0, batch_id: 40, loss is: [0.20408101]

epoch: 0, batch_id: 60, loss is: [0.19108313]

epoch: 0, batch_id: 80, loss is: [0.16176441]

epoch: 0, batch_id: 100, loss is: [0.14179903]

epoch: 0, batch_id: 120, loss is: [0.1749525]

epoch: 0, batch_id: 140, loss is: [0.13995571]

epoch: 0, batch_id: 160, loss is: [0.12687533]

epoch: 0, batch_id: 180, loss is: [0.14006634]

epoch: 0, batch_id: 200, loss is: [0.12756442]

epoch: 0, batch_id: 220, loss is: [0.14656875]

epoch: 0, batch_id: 240, loss is: [0.15922141]

epoch: 0, batch_id: 260, loss is: [0.11737509]

[validation] loss: [0.14424866] hamming_distance: [0.03928572] multilabel_accuracy: [0.9607143]

epoch: 1, batch_id: 0, loss is: [0.11617111]

epoch: 1, batch_id: 20, loss is: [0.12141372]

epoch: 1, batch_id: 40, loss is: [0.1454503]

epoch: 1, batch_id: 60, loss is: [0.147155]

epoch: 1, batch_id: 80, loss is: [0.1385282]

epoch: 1, batch_id: 100, loss is: [0.12295955]

epoch: 1, batch_id: 120, loss is: [0.14739689]

epoch: 1, batch_id: 140, loss is: [0.13650046]

epoch: 1, batch_id: 160, loss is: [0.11932946]

epoch: 1, batch_id: 180, loss is: [0.12400924]

epoch: 1, batch_id: 200, loss is: [0.10366694]

epoch: 1, batch_id: 220, loss is: [0.13126066]

epoch: 1, batch_id: 240, loss is: [0.1284022]

epoch: 1, batch_id: 260, loss is: [0.11365855]

[validation] loss: [0.13109535] hamming_distance: [0.03571429] multilabel_accuracy: [0.96428573]

epoch: 2, batch_id: 0, loss is: [0.111964]

epoch: 2, batch_id: 20, loss is: [0.11162999]

epoch: 2, batch_id: 40, loss is: [0.12943123]

epoch: 2, batch_id: 60, loss is: [0.1452783]

epoch: 2, batch_id: 80, loss is: [0.12580377]

epoch: 2, batch_id: 100, loss is: [0.11047614]

epoch: 2, batch_id: 120, loss is: [0.13516384]

epoch: 2, batch_id: 140, loss is: [0.11171752]

epoch: 2, batch_id: 160, loss is: [0.12167928]

epoch: 2, batch_id: 180, loss is: [0.12258511]

epoch: 2, batch_id: 200, loss is: [0.09977267]

epoch: 2, batch_id: 220, loss is: [0.11836746]

epoch: 2, batch_id: 240, loss is: [0.13338491]

epoch: 2, batch_id: 260, loss is: [0.11356007]

[validation] loss: [0.12784383] hamming_distance: [0.03571429] multilabel_accuracy: [0.96428573]

epoch: 3, batch_id: 0, loss is: [0.12162372]

epoch: 3, batch_id: 20, loss is: [0.11657746]

epoch: 3, batch_id: 40, loss is: [0.12935811]

epoch: 3, batch_id: 60, loss is: [0.13975267]

epoch: 3, batch_id: 80, loss is: [0.13158603]

epoch: 3, batch_id: 100, loss is: [0.1125318]

epoch: 3, batch_id: 120, loss is: [0.12533425]

epoch: 3, batch_id: 140, loss is: [0.10992051]

epoch: 3, batch_id: 160, loss is: [0.1161565]

epoch: 3, batch_id: 180, loss is: [0.10817074]

epoch: 3, batch_id: 200, loss is: [0.10297374]

epoch: 3, batch_id: 220, loss is: [0.12469847]

epoch: 3, batch_id: 240, loss is: [0.11957179]

epoch: 3, batch_id: 260, loss is: [0.11390025]

[validation] loss: [0.12930362] hamming_distance: [0.03214286] multilabel_accuracy: [0.9678571]

epoch: 4, batch_id: 0, loss is: [0.11296538]

epoch: 4, batch_id: 20, loss is: [0.10746139]

epoch: 4, batch_id: 40, loss is: [0.12223774]

epoch: 4, batch_id: 60, loss is: [0.12957215]

epoch: 4, batch_id: 80, loss is: [0.12120795]

epoch: 4, batch_id: 100, loss is: [0.10759816]

epoch: 4, batch_id: 120, loss is: [0.10706748]

epoch: 4, batch_id: 140, loss is: [0.10441463]

epoch: 4, batch_id: 160, loss is: [0.12018487]

epoch: 4, batch_id: 180, loss is: [0.1068358]

epoch: 4, batch_id: 200, loss is: [0.09596813]

epoch: 4, batch_id: 220, loss is: [0.10846743]

epoch: 4, batch_id: 240, loss is: [0.11947981]

epoch: 4, batch_id: 260, loss is: [0.10540524]

[validation] loss: [0.12597199] hamming_distance: [0.03482143] multilabel_accuracy: [0.96517855]

epoch: 5, batch_id: 0, loss is: [0.1053249]

epoch: 5, batch_id: 20, loss is: [0.09972227]

epoch: 5, batch_id: 40, loss is: [0.1135644]

epoch: 5, batch_id: 60, loss is: [0.12807007]

epoch: 5, batch_id: 80, loss is: [0.11112165]

epoch: 5, batch_id: 100, loss is: [0.10442357]

epoch: 5, batch_id: 120, loss is: [0.10723296]

epoch: 5, batch_id: 140, loss is: [0.09574118]

epoch: 5, batch_id: 160, loss is: [0.10538233]

epoch: 5, batch_id: 180, loss is: [0.09416173]

epoch: 5, batch_id: 200, loss is: [0.09502217]

epoch: 5, batch_id: 220, loss is: [0.11428049]

epoch: 5, batch_id: 240, loss is: [0.10403752]

epoch: 5, batch_id: 260, loss is: [0.1097267]

[validation] loss: [0.12121929] hamming_distance: [0.03303571] multilabel_accuracy: [0.9669643]

epoch: 6, batch_id: 0, loss is: [0.10973155]

epoch: 6, batch_id: 40, loss is: [0.10586993]

epoch: 6, batch_id: 60, loss is: [0.12340867]

epoch: 6, batch_id: 80, loss is: [0.10952523]

epoch: 6, batch_id: 100, loss is: [0.1043926]

epoch: 6, batch_id: 120, loss is: [0.10537393]

epoch: 6, batch_id: 140, loss is: [0.10136109]

epoch: 6, batch_id: 160, loss is: [0.10438278]

epoch: 6, batch_id: 180, loss is: [0.10190848]

epoch: 6, batch_id: 200, loss is: [0.08585998]

epoch: 6, batch_id: 220, loss is: [0.09801853]

epoch: 6, batch_id: 240, loss is: [0.1061273]

epoch: 6, batch_id: 260, loss is: [0.09472618]

[validation] loss: [0.11974805] hamming_distance: [0.02589286] multilabel_accuracy: [0.97410715]

epoch: 7, batch_id: 0, loss is: [0.10242014]

epoch: 7, batch_id: 20, loss is: [0.09664041]

epoch: 7, batch_id: 40, loss is: [0.11024401]

epoch: 7, batch_id: 60, loss is: [0.11703146]

epoch: 7, batch_id: 80, loss is: [0.10296616]

epoch: 7, batch_id: 100, loss is: [0.09473763]

epoch: 7, batch_id: 120, loss is: [0.11214614]

epoch: 7, batch_id: 140, loss is: [0.08823032]

epoch: 7, batch_id: 160, loss is: [0.10222819]

epoch: 7, batch_id: 180, loss is: [0.09796342]

epoch: 7, batch_id: 200, loss is: [0.09887124]

epoch: 7, batch_id: 220, loss is: [0.09719805]

epoch: 7, batch_id: 240, loss is: [0.09651403]

epoch: 7, batch_id: 260, loss is: [0.09195333]

[validation] loss: [0.11878593] hamming_distance: [0.02946429] multilabel_accuracy: [0.9705357]

epoch: 8, batch_id: 0, loss is: [0.09954296]

epoch: 8, batch_id: 20, loss is: [0.09171855]

epoch: 8, batch_id: 40, loss is: [0.09925689]

epoch: 8, batch_id: 60, loss is: [0.11354373]

epoch: 8, batch_id: 80, loss is: [0.10011038]

epoch: 8, batch_id: 100, loss is: [0.09674358]

epoch: 8, batch_id: 120, loss is: [0.10774907]

epoch: 8, batch_id: 140, loss is: [0.08995349]

epoch: 8, batch_id: 160, loss is: [0.10529572]

epoch: 8, batch_id: 180, loss is: [0.10399586]

epoch: 8, batch_id: 200, loss is: [0.08143127]

epoch: 8, batch_id: 220, loss is: [0.0980135]

epoch: 8, batch_id: 240, loss is: [0.1025499]

epoch: 8, batch_id: 260, loss is: [0.08864929]

[validation] loss: [0.11925511] hamming_distance: [0.02946429] multilabel_accuracy: [0.9705357]

epoch: 9, batch_id: 0, loss is: [0.10794401]

epoch: 9, batch_id: 20, loss is: [0.09459089]

epoch: 9, batch_id: 40, loss is: [0.10164409]

epoch: 9, batch_id: 60, loss is: [0.11481477]

epoch: 9, batch_id: 80, loss is: [0.0996296]

epoch: 9, batch_id: 100, loss is: [0.09542734]

epoch: 9, batch_id: 120, loss is: [0.10714017]

epoch: 9, batch_id: 140, loss is: [0.09121912]

epoch: 9, batch_id: 160, loss is: [0.10141321]

epoch: 9, batch_id: 180, loss is: [0.09824826]

epoch: 9, batch_id: 200, loss is: [0.08421607]

epoch: 9, batch_id: 220, loss is: [0.0978547]

epoch: 9, batch_id: 240, loss is: [0.10450318]

epoch: 9, batch_id: 260, loss is: [0.08492423]

[validation] loss: [0.119349] hamming_distance: [0.03035714] multilabel_accuracy: [0.9696429]

5. Saving the Model

# Save the model parameters
paddle.save(model.state_dict(), 'model.pdparams')

6. Model Evaluation

use_gpu = True
paddle.set_device('gpu:0') if use_gpu else paddle.set_device('cpu')
print('start evaluation .......')
# Rebuild the network and load the saved parameters
model = ResNet_vd()
params_file_path = "model.pdparams"
model_state_dict = paddle.load(params_file_path)
model.load_dict(model_state_dict)
model.eval()
accuracies = []
losses = []
for batch_id, data in enumerate(valid_loader()):
    x_data, y_data = data
    img = paddle.to_tensor(x_data)
    label = paddle.to_tensor(y_data.numpy().astype('float32').reshape(-1, 20))
    # Forward pass to get the predictions
    logits = model(img)
    # Compute the loss
    loss = multi_label_loss(logits, label)
    metric = create_metric(logits, label)
    losses.append(loss.numpy())
# Note: loss and metric here hold the values of the last validation batch only
print("[validation] loss: {} hamming_distance: {} multilabel_accuracy: {}".format(
    loss.numpy(), metric['hamming_distance'].numpy(), metric['multilabel_accuracy'].numpy()))
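Because the `print` call runs after the loop, the figures it reports come from the last mini-batch rather than from the whole validation set. With 2,168 validation images and a batch size of 64, that last batch holds 56 images, or 1,120 label decisions, which is consistent with the logged Hamming distances being multiples of 1/1120 (for example 0.03928572 = 44/1120). A minimal sketch of a dataset-level metric is shown below; `RunningLabelMetrics` is a hypothetical helper and the two toy batches are made up for illustration.

```python
import numpy as np


class RunningLabelMetrics:
    """Accumulate wrong and total label decisions across mini-batches."""

    def __init__(self):
        self.wrong = 0
        self.total = 0

    def update(self, preds, targets):
        preds = np.asarray(preds)
        targets = np.asarray(targets)
        self.wrong += int((preds != targets).sum())
        self.total += preds.size

    def hamming(self):
        return self.wrong / self.total

    def accuracy(self):
        return 1.0 - self.hamming()


metrics = RunningLabelMetrics()
# A full batch of 64 samples with 10 wrong decisions ...
targets_a = np.zeros((64, 20), dtype=int)
preds_a = targets_a.copy()
preds_a.flat[:10] = 1
# ... and a final, smaller batch of 56 samples with 44 wrong decisions.
targets_b = np.zeros((56, 20), dtype=int)
preds_b = targets_b.copy()
preds_b.flat[:44] = 1

metrics.update(preds_a, targets_a)
metrics.update(preds_b, targets_b)
print(metrics.hamming(), metrics.accuracy())
```

Counting decisions rather than averaging per-batch scores weights every sample equally, even when the last batch is smaller than the others. In the evaluation loop, `update` would be called once per batch with the binarized predictions and the labels.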

7. Model Prediction

# Read the class names, one per line
def read_label_file(label_file_path):
    with open(label_file_path, 'r') as label_file:
        label_list = [line.rstrip('\n') for line in label_file.readlines()]
    return label_list

# Pick a random sample from the test list and return its path and labels
def get_random_data(test_file_path):
    with open(test_file_path) as test_file:
        lines = test_file.readlines()
    img_name, y_data = random.choice(lines).strip().split('\t')
    labels = y_data.split(',')
    labels = [int(i) for i in labels]
    img_path = os.path.join('/home/aistudio/work/dataset/album/img', img_name)
    return img_path, labels

use_gpu = True
paddle.set_device('gpu:0') if use_gpu else paddle.set_device('cpu')
# Rebuild the network and load the saved parameters
model = ResNet_vd()
params_file_path = "model.pdparams"
model_state_dict = paddle.load(params_file_path)
model.load_dict(model_state_dict)
model.eval()
img_path, labels = get_random_data('/home/aistudio/work/dataset/album/onehot_test.txt')
with open(img_path, 'rb') as f:
    img = transform(f.read(), mode='test')
# Add a batch dimension: [3, 224, 224] -> [1, 3, 224, 224]
batch_img = np.expand_dims(img, 0)
input_tensor = paddle.to_tensor(batch_img)
print('start prediction .......')
logits = model(input_tensor)
# Per-label probabilities, thresholded at 0.5
outs = F.sigmoid(logits)
preds = binarize(outs.numpy(), threshold=0.5)[0]
label_list = read_label_file('/home/aistudio/work/dataset/album/album_labels.txt')
pred_labels = [label_list[i] for i in range(len(preds)) if preds[i]]
true_labels = [label_list[i] for i in range(len(labels)) if labels[i]]
print("The true category is {} and the predicted category is {}".format(true_labels, pred_labels))
img = Image.open(img_path)
img = np.array(img)
plt.figure(figsize=(5, 5))
plt.imshow(img)
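The decoding step at the end of the prediction code (sigmoid, threshold, look up names) can be tried in isolation. The NumPy sketch below applies it to a hand-written logit vector over four classes; `logits_to_labels`, the logit values, and the shortened class list are assumptions for illustration only.

```python
import numpy as np


def logits_to_labels(logits, label_names, threshold=0.5):
    """Return (name, probability) for every label whose sigmoid exceeds threshold."""
    probs = 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype="float64")))
    return [(name, float(p)) for name, p in zip(label_names, probs)
            if p > threshold]


names = ["Vehicle", "Sky", "Food", "Person"]
logits = [2.0, -1.0, 0.0, 0.5]
picked = logits_to_labels(logits, names)
print(picked)
```

A probability above 0.5 corresponds to a positive logit, so a logit of exactly 0 is not selected. Raising or lowering `threshold` trades missed labels against spurious ones, and any number of labels, including none, can be returned for one image.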