Offline deployment of KubeSphere with KubeKey

Versions used:

- Operating system: Ubuntu 22.04 LTS

- Kubernetes: v1.22.10

- Container runtime: containerd

- Image registry: Harbor, installed automatically by KubeKey

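The installation proceeds as follows:

- Deploy the Harbor registry offline

- Install the Kubernetes cluster offline

- Deploy NFS backend storage

- Deploy KubeSphere offline on the existing Kubernetes cluster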

Check the compatibility requirements carefully:

- Kubernetes versions supported by KubeKey: https://github.com/kubesphere/kubekey#kubernetes-versions

- Kubernetes versions supported by KubeSphere: https://kubesphere.io/docs/v3.3/installing-on-kubernetes/introduction/prerequisites/

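Note: Be sure to confirm the compatible version ranges before installing. This guide installs in stages. Until you have tested it thoroughly, do not try to install the registry, Kubernetes, backend storage and KubeSphere in a single step: if such an installation fails, the environment may be impossible to clean up completely, leaving you stuck.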

Node inventory:

| Hostname | IP address | Role |
|---|---|---|
| node1 | 192.168.72.40 | master |
| node2 | 192.168.72.41 | worker |
| node3 | 192.168.72.42 | worker |
| harbor | 192.168.72.43 | Harbor registry |
| nfs-server | 192.168.72.61 | NFS server |

1. Build the Kubernetes offline installation package

Note: The following steps are performed on a machine with Internet access.

Install KubeKey v2.2.2:

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v2.2.2 sh -

Create a directory to hold the offline package:

mkdir -p /data/kubekey

cd /data/kubekey

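Manually create the manifest-sample.yaml file, which defines the cluster version and the components to install. Be very careful when changing component versions: KubeSphere does not officially document which versions of the individual components can be combined.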

root@ubuntu:/data/kubekey# cat manifest-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Manifest
metadata:
  name: sample
spec:
  arches:
  - amd64
  operatingSystems:
  - arch: amd64
    type: linux
    id: ubuntu
    version: "22.04"
    osImage: Ubuntu 22.04 LTS
    repository:
      iso:
        localPath:
        url: https://github.com/kubesphere/kubekey/releases/download/v2.2.2/ubuntu-22.04-debs-amd64.iso
  kubernetesDistributions:
  - type: kubernetes
    version: v1.22.10
  components:
    helm:
      version: v3.6.3
    cni:
      version: v0.9.1
    etcd:
      version: v3.4.13
    containerRuntimes:
    - type: containerd
      version: 1.6.4
    crictl:
      version: v1.24.0
    harbor:
      version: v2.4.1
    docker-compose:
      version: v2.2.2
  images:
  - docker.io/calico/cni:v3.23.2
  - docker.io/calico/kube-controllers:v3.23.2
  - docker.io/calico/node:v3.23.2
  - docker.io/calico/pod2daemon-flexvol:v3.23.2
  - docker.io/coredns/coredns:1.8.0
  - docker.io/kubesphere/k8s-dns-node-cache:1.15.12
  - docker.io/kubesphere/kube-apiserver:v1.22.10
  - docker.io/kubesphere/kube-controller-manager:v1.22.10
  - docker.io/kubesphere/kube-proxy:v1.22.10
  - docker.io/kubesphere/kube-scheduler:v1.22.10
  - docker.io/kubesphere/pause:3.5
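Because KubeSphere does not document which component versions combine safely, a quick sanity check before exporting can at least catch a mistyped tag. The function below is a hypothetical helper (check_manifest_versions is not part of KubeKey). It assumes the manifest layout shown above with a single kubernetesDistributions entry, and it only checks that the kube-apiserver, kube-controller-manager, kube-proxy and kube-scheduler image tags equal that Kubernetes version; it says nothing about whether the calico, coredns or etcd versions are compatible.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of KubeKey: check that the control-plane image
# tags in a manifest match the Kubernetes version in kubernetesDistributions.
# Assumes the layout of manifest-sample.yaml shown above.
check_manifest_versions() {
  local manifest="$1" k8s_version img tag rc=0
  # Take the first "version:" line after the "- type: kubernetes" entry.
  k8s_version=$(awk '/- type: kubernetes/ { found = 1; next }
                     found && /version:/ { print $2; exit }' "$manifest")
  if [ -z "$k8s_version" ]; then
    echo "no kubernetes version found in ${manifest}" >&2
    return 1
  fi
  for img in kube-apiserver kube-controller-manager kube-proxy kube-scheduler; do
    # Image lines look like "- docker.io/kubesphere/kube-apiserver:v1.22.10".
    tag=$(grep -Eo "/${img}:[^[:space:]]+" "$manifest" | head -n1 | cut -d: -f2)
    if [ "$tag" != "$k8s_version" ]; then
      echo "MISMATCH ${img}: image tag '${tag}' vs kubernetes ${k8s_version}"
      rc=1
    fi
  done
  [ "$rc" -eq 0 ] && echo "OK: control-plane images match ${k8s_version}"
  return "$rc"
}
```

Run it as check_manifest_versions manifest-sample.yaml before kk artifact export. It prints OK, or one MISMATCH line per offending image, and returns non-zero on any mismatch.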

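Export the artifact. Downloading images and files from Docker Hub and GitHub can be slow or fail outright from some networks, so using a proxy is recommended: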

kk artifact export -m manifest-sample.yaml -o kubernetes-v1.22.10.tar.gz

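The resulting package contains only what the Kubernetes cluster needs (container images, component binaries and operating system dependency packages), not the KubeSphere images, and is about 1.5 GB. Copy the package and the kk binary to /data/kubekey in the offline environment.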

root@ubuntu:/data/kubekey# du -sh kubernetes-v1.22.10.tar.gz

1.5G kubernetes-v1.22.10.tar.gz

root@ubuntu:/data/kubekey# which kk

/usr/local/bin/kk
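Since the package has to cross into an air-gapped network, it is worth checking that the copy arrived intact before handing it to kk. A minimal sketch using GNU sha256sum; the helper names make_checksums and verify_checksums and the SHA256SUMS file name are assumptions chosen for illustration.

```bash
#!/usr/bin/env bash
# Illustrative helpers (hypothetical names) for checking that the offline
# package and the kk binary survive the transfer unchanged.

# On the Internet-connected machine: record checksums of the given files
# into DIR/SHA256SUMS (file names are relative to DIR).
make_checksums() {
  local dir="$1"; shift
  (cd "$dir" && sha256sum "$@" > SHA256SUMS)
}

# In the offline environment: verify the copies against DIR/SHA256SUMS.
# --quiet suppresses the per-file "OK" lines; failures are still reported.
verify_checksums() {
  local dir="$1"
  (cd "$dir" && sha256sum -c --quiet SHA256SUMS)
}
```

For example, copy kk next to the package on the Internet-connected machine and run make_checksums /data/kubekey kubernetes-v1.22.10.tar.gz kk, transfer SHA256SUMS along with the two files, then run verify_checksums /data/kubekey in the offline environment. A non-zero exit status means at least one file differs.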

2. Deploy the Harbor registry

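Note: The following steps run in the offline environment. The Harbor node is reused as the node from which kk is run, so all of them are executed on the Harbor node.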

cd /data/kubekey

mv kk /usr/local/bin/

Generate the cluster configuration file:

kk create config --with-kubernetes v1.22.10 -f config-sample.yaml

Edit the cluster configuration file:

root@ubuntu:/data/kubekey# cat config-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: node1, address: 192.168.72.40, internalAddress: 192.168.72.40, user: root, password: "123456"}
  - {name: node2, address: 192.168.72.41, internalAddress: 192.168.72.41, user: root, password: "123456"}
  - {name: node3, address: 192.168.72.42, internalAddress: 192.168.72.42, user: root, password: "123456"}
  - {name: harbor, address: 192.168.72.43, internalAddress: 192.168.72.43, user: root, password: "123456"}
  roleGroups:
    etcd:
    - node1
    control-plane:
    - node1
    worker:
    - node1
    - node2
    - node3
    registry:
    - harbor
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.22.10
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    multusCNI:
      enabled: false
  registry:
    type: harbor
    auths:
      "dockerhub.kubekey.local":
        username: admin
        password: Harbor12345
        certsPath: "/etc/docker/certs.d/dockerhub.kubekey.local"
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    registryMirrors: []
    insecureRegistries: []
  addons: []

Deploy the Harbor image registry:

kk init registry -f config-sample.yaml -a kubernetes-v1.22.10.tar.gz

Log in to the Harbor node and confirm that the deployment succeeded:

root@harbor:~# cd /opt/harbor

root@harbor:/opt/harbor# docker-compose ps -a

NAME COMMAND SERVICE STATUS PORTS

chartmuseum "./docker-entrypoint…" chartmuseum running (healthy)

harbor-core "/harbor/entrypoint.…" core running (healthy)

harbor-db "/docker-entrypoint.…" postgresql running (healthy)

harbor-jobservice "/harbor/entrypoint.…" jobservice running (healthy)

harbor-log "/bin/sh -c /usr/loc…" log running (healthy) 127.0.0.1:1514->10514/tcp

harbor-portal "nginx -g 'daemon of…" portal running (healthy)

nginx "nginx -g 'daemon of…" proxy running (healthy) 0.0.0.0:4443->4443/tcp, 0.0.0.0:80->8080/tcp, 0.0.0.0:443->8443/tcp, :::4443->4443/tcp, :::80->8080/tcp, :::443->8443/tcp

notary-server "/bin/sh -c 'migrate…" notary-server running

notary-signer "/bin/sh -c 'migrate…" notary-signer running

redis "redis-server /etc/r…" redis running (healthy)

registry "/home/harbor/entryp…" registry running (healthy)

registryctl "/home/harbor/start.…" registryctl running (healthy)

trivy-adapter "/home/scanner/entry…" trivy-adapter running (healthy)

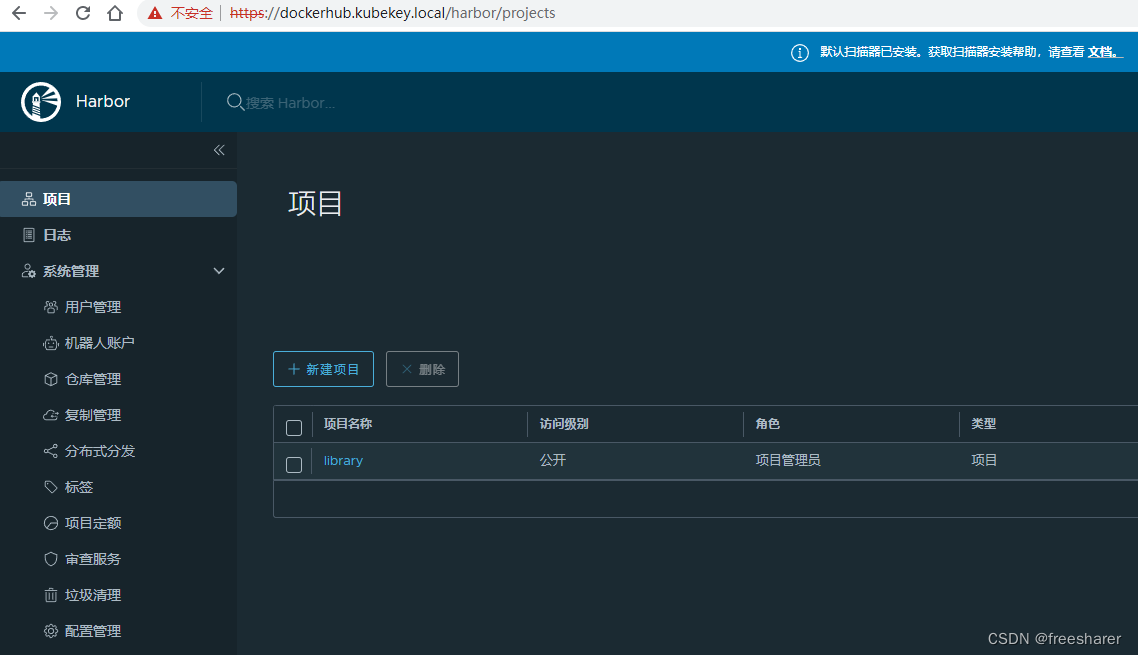

On your local machine, add a hosts entry for the registry domain, then log in to Harbor from a browser to verify it:

192.168.72.43 dockerhub.kubekey.local
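If several workstations or jump hosts need the same entry, appending it blindly each time leaves duplicates in the hosts file. A minimal sketch of an idempotent version, assuming a Linux host; add_hosts_entry is a hypothetical helper, and the hosts file path is a parameter so it can be tried on a scratch file first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless that
# hostname is already mapped (on any non-comment line).
add_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Compare whole fields so that e.g. "x.dockerhub.kubekey.local" is no match.
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    echo "${name} already present in ${file}"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added ${ip} ${name} to ${file}"
  fi
}
```

Run it as root, for example add_hosts_entry 192.168.72.43 dockerhub.kubekey.local. An existing entry is left untouched even if it points at a different address, so check the message it prints.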

A successful login is shown in the screenshot.

Logging in to the registry with docker login, however, fails with an x509 error:

root@ubuntu:~# docker login dockerhub.kubekey.local

Username: admin

Password:

Error response from daemon: Get "https://dockerhub.kubekey.local/v2/": x509: certificate signed by unknown authority

On the Harbor node, copy the certificates into /etc/docker/certs.d/dockerhub.kubekey.local/:

mkdir -p /etc/docker/certs.d/dockerhub.kubekey.local

cp /etc/ssl/registry/ssl/ca.pem /etc/docker/certs.d/dockerhub.kubekey.local/ca.crt

cp /etc/ssl/registry/ssl/dockerhub.kubekey.local-key.pem /etc/docker/certs.d/dockerhub.kubekey.local/dockerhub.kubekey.local.key

cp /etc/ssl/registry/ssl/dockerhub.kubekey.local.pem /etc/docker/certs.d/dockerhub.kubekey.local/dockerhub.kubekey.local.cert

Restart Docker so that the configuration takes effect:

systemctl daemon-reload

systemctl restart docker

Verify the Harbor login again:

root@harbor:~# docker login dockerhub.kubekey.local

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

To log in to the Harbor registry from other nodes, copy /etc/docker/certs.d/dockerhub.kubekey.local/ to the same path on those nodes as well.
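Copying the directory by hand to each additional host is easy to get wrong. The sketch below assumes root SSH access to every target (as configured in config-sample.yaml) and that the target uses the Docker CLI, since /etc/docker/certs.d is Docker's per-registry certificate directory; push_registry_certs is a hypothetical name, not a KubeKey command.

```bash
#!/usr/bin/env bash
# Sketch: copy the registry trust files to other hosts over SSH.
# Assumptions: root SSH access to each host, and Docker on the target
# reads per-registry certificates from /etc/docker/certs.d.
CERT_DIR="/etc/docker/certs.d/dockerhub.kubekey.local"

push_registry_certs() {
  local node rc=0
  for node in "$@"; do
    # Create the directory remotely, then copy ca.crt, .cert and .key into it.
    if ssh "root@${node}" "mkdir -p '${CERT_DIR}'" &&
       scp -q "${CERT_DIR}"/* "root@${node}:${CERT_DIR}/"; then
      echo "${node}: ok"
    else
      echo "${node}: failed" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example, push_registry_certs 192.168.72.40 192.168.72.41 192.168.72.42 prints one ok or failed line per host and returns non-zero if any copy failed.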

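Push the images in the offline package to the Harbor registry.

On the Internet-connected machine, download the script that creates the Harbor projects:

curl -O https://raw.githubusercontent.com/kubesphere/ks-installer/master/scripts/create_project_harbor.sh

Edit the script as shown below. Note the -k flag appended to the curl command: the registry certificate is signed by the CA that KubeKey generated, which curl does not trust by default.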

root@harbor:~# vim create_project_harbor.sh

#!/usr/bin/env bash
url="https://dockerhub.kubekey.local"
user="admin"
passwd="Harbor12345"
harbor_projects=(library
    kubesphereio
    kubesphere
    calico
    coredns
    openebs
    csiplugin
    minio
    mirrorgooglecontainers
    osixia
    prom
    thanosio
    jimmidyson
    grafana
    elastic
    istio
    jaegertracing
    jenkins
    weaveworks
    openpitrix
    joosthofman
    nginxdemos
    fluent
    kubeedge
)
for project in "${harbor_projects[@]}"; do
    echo "creating $project"
    curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k
done
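The script above prints Harbor's raw JSON responses, so an error other than the expected CONFLICT for library is easy to miss. Below is a sketch of a variant that classifies each request by HTTP status instead, assuming Harbor's v2.0 API answers 201 for a newly created project and 409 when the name already exists (the CONFLICT response in the output that follows). The function names create_project and create_projects are hypothetical; the URL and credentials are the same values as in the script.

```bash
#!/usr/bin/env bash
# Variant of create_project_harbor.sh (a sketch, not the upstream script):
# report each project as created / already exists / failed by HTTP status.
url="https://dockerhub.kubekey.local"
user="admin"
passwd="Harbor12345"

create_project() {
  local project="$1" code
  # -k: the registry certificate is signed by KubeKey's own CA.
  # -o /dev/null -w '%{http_code}': print only the HTTP status code.
  code=$(curl -sk -o /dev/null -w '%{http_code}' \
    -u "${user}:${passwd}" -X POST \
    -H "Content-Type: application/json" \
    "${url}/api/v2.0/projects" \
    -d "{\"project_name\": \"${project}\", \"public\": true}")
  case "$code" in
    201) echo "${project}: created" ;;
    409) echo "${project}: already exists" ;;
    *)   echo "${project}: failed (HTTP ${code})" >&2; return 1 ;;
  esac
}

# Create every project given as an argument; non-zero if any failed.
create_projects() {
  local project failed=0
  for project in "$@"; do
    create_project "$project" || failed=1
  done
  return "$failed"
}
```

After defining the harbor_projects array as above, call create_projects "${harbor_projects[@]}". A connection failure shows up as HTTP 000.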

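Run the script on the Harbor node in the offline environment to create the Harbor projects. The CONFLICT error for library is harmless, because Harbor creates the library project by default: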

root@harbor:~# chmod +x create_project_harbor.sh

root@harbor:~# ./create_project_harbor.sh

creating library

{"errors":[{"code":"CONFLICT","message":"The project named library already exists"}]}

creating kubesphereio

creating kubesphere

creating calico

creating coredns

creating openebs

creating csiplugin

creating minio

creating mirrorgooglecontainers

creating osixia

creating prom

creating thanosio

creating jimmidyson

creating grafana

creating elastic

creating istio

creating jaegertracing

creating jenkins

creating weaveworks

creating openpitrix

creating joosthofman

creating nginxdemos

creating fluent

creating kubeedge

Push the Kubernetes images to the Harbor registry:

kk artifact image push -f config-sample.yaml -a kubernetes-v1.22.10.tar.gz

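3. Deploy the Kubernetes cluster

Run the following command on the Harbor node to deploy the Kubernetes cluster offline (--with-packages installs the operating system dependencies from the ISO included in the package):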

kk create cluster -f config-sample.yaml -a kubernetes-v1.22.10.tar.gz --with-packages

Check the cluster status:

root@node1:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node1 Ready control-plane,master,worker 9h v1.22.10 192.168.72.40 <none> Ubuntu 22.04 LTS 5.15.0-27-generic containerd://1.6.4

node2 Ready worker 9h v1.22.10 192.168.72.41 <none> Ubuntu 22.04 LTS 5.15.0-39-generic containerd://1.6.4

node3 Ready worker 9h v1.22.10 192.168.72.42 <none> Ubuntu 22.04 LTS 5.15.0-27-generic containerd://1.6.4
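Reading the node table by eye works for three nodes; the sketch below automates the two checks that matter here, assuming the default column order of kubectl get nodes (NAME STATUS ROLES AGE VERSION). check_nodes is a hypothetical helper; it flags any node whose status is not exactly Ready (so Ready,SchedulingDisabled is reported too) or whose kubelet version differs from the expected one.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes --no-headers` output on stdin and report
# nodes that are not Ready or that run an unexpected Kubernetes version.
# Exit status is 1 if anything was reported, 0 otherwise.
check_nodes() {
  local expected="$1"
  awk -v want="$expected" '
    $2 != "Ready" { print "NOT READY: " $1 " (" $2 ")"; bad = 1 }
    $5 != want    { print "VERSION: " $1 " runs " $5 ", expected " want; bad = 1 }
    END { exit bad }'
}
```

On a healthy cluster, kubectl get nodes --no-headers | check_nodes v1.22.10 prints nothing and exits 0. To block until the nodes become Ready instead, kubectl wait --for=condition=Ready node --all --timeout=300s can be used.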

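4. Deploy NFS server storage

Run the following on the nfs-server node. In an offline environment, make the packages available yourself, for example through a local APT repository.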

root@nfs-server:~# apt install -y nfs-kernel-server

root@nfs-server:~# mkdir -p /data/nfs_share/k8s

Set the directory ownership and permissions:

sudo chown -R nobody:nogroup /data/nfs_share/k8s

sudo chmod 777 /data/nfs_share/k8s

Add the share to /etc/exports:

cat >>/etc/exports<<EOF
/data/nfs_share/k8s 192.168.72.0/24(rw,sync,no_subtree_check,no_root_squash)
EOF

Export the shared directory:

sudo exportfs -a

sudo systemctl restart nfs-kernel-server
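Running the heredoc step a second time would append a duplicate export line. A minimal sketch of an idempotent variant; add_nfs_export is a hypothetical helper, and it only checks whether the directory (the first field) is already listed, not whether its client options match.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add an export line only if DIR is not exported yet,
# so re-running the setup does not duplicate /etc/exports entries.
add_nfs_export() {
  local dir="$1" clients="$2" file="${3:-/etc/exports}"
  # Exit 0 from awk means some line already starts with exactly DIR.
  if awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    echo "${dir} already exported in ${file}"
    return 0
  fi
  printf '%s %s\n' "$dir" "$clients" >> "$file"
  echo "added export for ${dir}"
}
```

For example, run add_nfs_export /data/nfs_share/k8s '192.168.72.0/24(rw,sync,no_subtree_check,no_root_squash)' as root, then exportfs -ra to re-read /etc/exports and exportfs -v to list what the server actually exports.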

Install the NFS client on all Kubernetes nodes:

sudo apt install -y nfs-common

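Deploy nfs-subdir-external-provisioner in the Kubernetes cluster.

The Helm chart and the image have to be prepared manually. Run the following on the Internet-connected machine. The docker tag and docker push commands assume that this machine can reach dockerhub.kubekey.local; if it cannot, move the image into the offline environment with docker save and docker load and run those two commands on the Harbor node instead: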

helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner

helm pull nfs-subdir-external-provisioner/nfs-subdir-external-provisioner --version=4.0.17

docker pull kubesphere/nfs-subdir-external-provisioner:v4.0.2

docker tag kubesphere/nfs-subdir-external-provisioner:v4.0.2 dockerhub.kubekey.local/library/nfs-subdir-external-provisioner:v4.0.2

docker push dockerhub.kubekey.local/library/nfs-subdir-external-provisioner:v4.0.2

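In the offline environment, copy nfs-subdir-external-provisioner-4.0.17.tgz to the Kubernetes node node1 and run the following there: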

helm install nfs-subdir-external-provisioner ./nfs-subdir-external-provisioner-4.0.17.tgz \
  --namespace=nfs-provisioner --create-namespace \
  --set image.repository=dockerhub.kubekey.local/library/nfs-subdir-external-provisioner \
  --set image.tag=v4.0.2 \
  --set replicaCount=2 \
  --set storageClass.name=nfs-client \
  --set storageClass.defaultClass=true \
  --set nfs.server=192.168.72.61 \
  --set nfs.path=/data/nfs_share/k8s

Confirm that the default StorageClass is ready:

root@node1:~# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-subdir-external-provisioner Delete Immediate true 5h3m
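A StorageClass in the list does not yet prove that the provisioner can reach the NFS server. One way to check is to create a small PVC and wait for it to become Bound. The sketch assumes that nfs-client is the default StorageClass (so no storageClassName is set) and uses an arbitrary claim name, nfs-smoke-test, in the default namespace; both are assumptions to adapt.

```bash
#!/usr/bin/env bash
# Sketch: smoke-test the default StorageClass with a throwaway PVC.
# Assumptions: nfs-client is the default StorageClass, and the PVC name
# "nfs-smoke-test" in namespace "default" is free to use.
create_test_pvc() {
  # No storageClassName is set, so the cluster's default class is used.
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-smoke-test
  namespace: default
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 1Mi
EOF
}

# Poll the claim's phase every 2 seconds, at most TRIES times (default 30).
wait_pvc_bound() {
  local name="$1" tries="${2:-30}" phase=""
  while [ "$tries" -gt 0 ]; do
    phase=$(kubectl get pvc "$name" -n default -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "${name}: Bound"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "${name}: not Bound (last phase: ${phase:-unknown})" >&2
  return 1
}
```

Run create_test_pvc && wait_pvc_bound nfs-smoke-test, then remove the claim with kubectl delete pvc nfs-smoke-test -n default. While the claim exists, a subdirectory for its volume should appear under /data/nfs_share/k8s on the NFS server.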

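5. Deploy KubeSphere

Note: Unless stated otherwise, the following steps are performed on the Internet-connected machine.

Download the KubeSphere image list, images-list.txt: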

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/images-list.txt

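Fix the line endings: the downloaded file may contain Windows-style CRLF line endings, which cat -A shows as ^M at the end of each line. Strip the trailing carriage returns so that the image names are read correctly: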

cat -A images-list.txt

sed -i 's/\r$//' images-list.txt
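The same fix can be made conditional, so that the file is only rewritten when it actually contains carriage returns and the number of affected lines is reported. A minimal sketch assuming bash and GNU sed; strip_crlf is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: strip trailing CR characters from FILE only if any are present,
# and report how many lines were affected.
strip_crlf() {
  local file="$1" count
  # grep -c prints 0 (and exits 1) when nothing matches; keep the 0.
  count=$(grep -c $'\r$' "$file" || true)
  if [ "$count" -gt 0 ]; then
    sed -i 's/\r$//' "$file"
    echo "${file}: removed CR from ${count} line(s)"
  else
    echo "${file}: no CRLF line endings"
  fi
}
```

Usage: strip_crlf images-list.txt. Running it twice is harmless; the second run reports that no CRLF line endings remain.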

Download offline-installation-tool.sh:

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/offline-installation-tool.sh

chmod +x offline-installation-tool.sh

Run offline-installation-tool.sh to pull the images and save them locally:

./offline-installation-tool.sh -s -l images-list.txt -d ./kubesphere-images

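Copy the kubesphere-images/ directory (about 11 GB), together with images-list.txt and offline-installation-tool.sh, to the offline environment: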

root@ubuntu:/data/kubekey# ll kubesphere-images/

total 11225116

drwxr-xr-x 2 root root 4096 Sep 10 19:57 ./

drwxr-xr-x 4 root root 4096 Sep 10 21:36 ../

-rw-r--r-- 1 root root 1305886169 Sep 10 20:08 example-images.tar.gz

-rw-r--r-- 1 root root 24158869 Sep 10 19:16 gatekeeper-images.tar.gz

-rw-r--r-- 1 root root 504847253 Sep 10 20:02 istio-images.tar.gz

-rw-r--r-- 1 root root 751242548 Sep 10 19:06 k8s-images.tar.gz

-rw-r--r-- 1 root root 36864448 Sep 10 19:16 kubeedge-images.tar.gz

-rw-r--r-- 1 root root 6833486886 Sep 10 19:48 kubesphere-devops-images.tar.gz

-rw-r--r-- 1 root root 818288843 Sep 10 19:14 kubesphere-images.tar.gz

-rw-r--r-- 1 root root 800497114 Sep 10 19:59 kubesphere-logging-images.tar.gz

-rw-r--r-- 1 root root 354155978 Sep 10 19:53 kubesphere-monitoring-images.tar.gz

-rw-r--r-- 1 root root 34787481 Sep 10 19:16 openpitrix-images.tar.gz

-rw-r--r-- 1 root root 30274818 Sep 10 20:08 weave-scope-images.tar.gz

root@ubuntu:/data/kubekey# du -sh kubesphere-images/

11G kubesphere-images/

On the Harbor node in the offline environment, push the images to the Harbor registry:

./offline-installation-tool.sh -l images-list.txt -d ./kubesphere-images -r dockerhub.kubekey.local

Download the KubeSphere deployment files on the Internet-connected machine and copy them to the Kubernetes node node1:

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/cluster-configuration.yaml

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/kubesphere-installer.yaml

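Edit cluster-configuration.yaml to add the private image registry, and optionally enable the pluggable components you need. Leaving storageClass empty makes KubeSphere use the cluster's default StorageClass (nfs-client here):

spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  local_registry: dockerhub.kubekey.local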

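Edit kubesphere-installer.yaml and replace the ks-installer image with its address in the private registry. The tag has to match the KubeSphere release being installed (v3.3.0), since that is the version pushed to Harbor above:

sed -i "s#^\s*image: kubesphere.*/ks-installer:.*#        image: dockerhub.kubekey.local/kubesphere/ks-installer:v3.3.0#" kubesphere-installer.yaml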
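Before applying the manifest it is worth confirming that the substitution actually matched, because sed exits successfully even when nothing is replaced. The sketch below wraps the same expression in a hypothetical set_installer_image function that keeps the original indentation through a back-reference instead of hard-coding it, and prints the resulting image line; it assumes GNU sed (for \s and in-place editing).

```bash
#!/usr/bin/env bash
# Sketch: point the ks-installer container at the private registry and print
# the resulting image line. Assumes GNU sed (\s, -i).
set_installer_image() {
  local file="$1" image="$2"
  # \(\s*\) captures the existing indentation; \1 writes it back unchanged.
  sed -i "s#^\(\s*\)image: kubesphere.*/ks-installer:.*#\1image: ${image}#" "$file"
  # Show the line so a non-matching substitution is noticed immediately.
  grep -n 'ks-installer:' "$file"
}
```

For example, set_installer_image kubesphere-installer.yaml dockerhub.kubekey.local/kubesphere/ks-installer:v3.3.0 should print exactly one line containing the private registry address.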

Start the KubeSphere installation:

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml
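The installation runs inside the ks-installer pod and takes a while. A sketch for following its progress; the label selector is the one used in the KubeSphere installation documentation, while installer_pod and follow_installer_logs are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: find the ks-installer pod and stream its log until interrupted.
installer_pod() {
  # Prints the name of the first pod matching the installer labels.
  kubectl get pod -n kubesphere-system \
    -l 'app in (ks-install, ks-installer)' \
    -o jsonpath='{.items[0].metadata.name}'
}

follow_installer_logs() {
  local pod
  pod=$(installer_pod 2>/dev/null)
  if [ -z "$pod" ]; then
    echo "ks-installer pod not found in kubesphere-system" >&2
    return 1
  fi
  kubectl logs -n kubesphere-system "$pod" -f
}
```

Run follow_installer_logs after the two kubectl apply commands and stop it with Ctrl+C once the installer reports that it has finished.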

After KubeSphere has been deployed, confirm that all pods are running normally:

root@node1:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

argocd devops-argocd-application-controller-0 1/1 Running 0 40m

argocd devops-argocd-applicationset-controller-7db9699bf7-9z5xs 1/1 Running 0 40m

argocd devops-argocd-dex-server-7879dcc866-xqjct 1/1 Running 0 47s

argocd devops-argocd-notifications-controller-5c76d597bc-tvdnp 1/1 Running 0 40m

argocd devops-argocd-redis-57d6568bc5-f8gzc 1/1 Running 0 40m

argocd devops-argocd-repo-server-68d88f8c4d-tt592 1/1 Running 0 40m

argocd devops-argocd-server-8d48dcff5-4dcxc 1/1 Running 0 40m

kube-system calico-kube-controllers-846ddd49bc-mwdtp 1/1 Running 3 (133m ago) 31h

kube-system calico-node-6ksj2 1/1 Running 0 31h

kube-system calico-node-dms6q 1/1 Running 0 31h

kube-system calico-node-nv5xq 1/1 Running 0 31h

kube-system coredns-558b97598-6c98j 1/1 Running 0 31h

kube-system coredns-558b97598-l2jr7 1/1 Running 0 31h

kube-system kube-apiserver-node1 1/1 Running 1 (136m ago) 31h

kube-system kube-controller-manager-node1 1/1 Running 5 (134m ago) 31h

kube-system kube-proxy-4r54v 1/1 Running 0 31h

kube-system kube-proxy-cjtfh 1/1 Running 0 31h

kube-system kube-proxy-zsz6f 1/1 Running 0 31h

kube-system kube-scheduler-node1 1/1 Running 4 (140m ago) 31h

kube-system metrics-server-7fcfb4f77c-q8hlt 1/1 Running 4 (134m ago) 9h

kube-system nodelocaldns-k2jqj 1/1 Running 0 31h

kube-system nodelocaldns-rxf4j 1/1 Running 0 31h

kube-system nodelocaldns-xvnrl 1/1 Running 0 31h

kube-system snapshot-controller-0 1/1 Running 0 9h

kubesphere-controls-system default-http-backend-5c4485fdc-7zp75 1/1 Running 0 4h30m

kubesphere-controls-system kubectl-admin-67db7946fd-n6dmp 1/1 Running 0 4h22m

kubesphere-devops-system devops-27713550-lgfsc 0/1 Completed 0 72m

kubesphere-devops-system devops-27713580-fckls 0/1 Completed 0 42m

kubesphere-devops-system devops-27713610-z7p2k 0/1 Completed 0 12m

kubesphere-devops-system devops-apiserver-84497594bb-4cxn6 1/1 Running 0 4h28m

kubesphere-devops-system devops-controller-c895c456c-l8m6w 1/1 Running 0 4h28m

kubesphere-devops-system devops-jenkins-9569bc9d5-v4zh6 1/1 Running 0 4h28m

kubesphere-devops-system s2ioperator-0 1/1 Running 0 4h28m

kubesphere-logging-system elasticsearch-logging-data-0 1/1 Running 0 4h33m

kubesphere-logging-system elasticsearch-logging-data-1 1/1 Running 0 4h31m

kubesphere-logging-system elasticsearch-logging-discovery-0 1/1 Running 0 4h33m

kubesphere-logging-system fluent-bit-8g8lc 1/1 Running 0 4h32m

kubesphere-logging-system fluent-bit-k7j4z 1/1 Running 0 4h32m

kubesphere-logging-system fluent-bit-sntkq 1/1 Running 0 4h32m

kubesphere-logging-system fluentbit-operator-67587cc876-s2b79 1/1 Running 0 4h32m

kubesphere-logging-system logsidecar-injector-deploy-7967df78d-4wc5x 2/2 Running 0 4h28m

kubesphere-logging-system logsidecar-injector-deploy-7967df78d-wxvv9 2/2 Running 0 4h28m

kubesphere-monitoring-system alertmanager-main-0 2/2 Running 0 4h25m

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 0 4h25m

kubesphere-monitoring-system alertmanager-main-2 2/2 Running 0 4h25m

kubesphere-monitoring-system kube-state-metrics-9895669cb-xvc68 3/3 Running 0 4h25m

kubesphere-monitoring-system node-exporter-6w47d 2/2 Running 0 4h25m

kubesphere-monitoring-system node-exporter-82f8z 2/2 Running 0 4h25m

kubesphere-monitoring-system node-exporter-pbrjk 2/2 Running 0 4h25m

kubesphere-monitoring-system notification-manager-deployment-dc69cfcd8-d7t8d 2/2 Running 0 4h24m

kubesphere-monitoring-system notification-manager-deployment-dc69cfcd8-dmcvc 2/2 Running 0 4h24m

kubesphere-monitoring-system notification-manager-operator-547bf6dc98-bm6j2 2/2 Running 2 (139m ago) 4h24m

kubesphere-monitoring-system prometheus-k8s-0 2/2 Running 1 (137m ago) 4h25m

kubesphere-monitoring-system prometheus-k8s-1 2/2 Running 1 (136m ago) 4h25m

kubesphere-monitoring-system prometheus-operator-69f895bd45-qtnb7 2/2 Running 0 4h26m

kubesphere-system ks-apiserver-5c5df4d76f-2gz74 1/1 Running 0 4h30m

kubesphere-system ks-console-858b489f87-7zdrj 1/1 Running 0 4h30m

kubesphere-system ks-controller-manager-df69bd65f-747tz 1/1 Running 2 (139m ago) 4h30m

kubesphere-system ks-installer-8656f95dc8-pb4dx 1/1 Running 0 4h34m

kubesphere-system minio-84f5764688-vzwqf 1/1 Running 1 (138m ago) 9h

kubesphere-system openldap-0 1/1 Running 1 (4h35m ago) 9h

kubesphere-system openpitrix-import-job-gkcds 0/1 Completed 0 4h29m

nfs-provisioner nfs-subdir-external-provisioner-5fb45d6cf7-qrxwv 1/1 Running 2 4h35m

nfs-provisioner nfs-subdir-external-provisioner-5fb45d6cf7-t7bhg 1/1 Running 1 (142m ago) 4h35m
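With dozens of pods, a single non-ready one is easy to overlook in this listing. The sketch below filters kubectl get pods -A --no-headers output down to pods that are neither Completed nor Running with all containers ready; it assumes the default column order (NAMESPACE NAME READY STATUS ...), and unhealthy_pods is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get pods -A --no-headers` on stdin and print pods that
# are not Completed and not Running with every container ready.
unhealthy_pods() {
  awk '{
    ns = $1; name = $2; ready = $3; status = $4
    split(ready, r, "/")             # "1/2" -> r[1] = "1", r[2] = "2"
    if (status == "Completed") next  # finished Job/CronJob pods are fine
    if (status != "Running" || r[1] != r[2]) print ns "/" name " " ready " " status
  }'
}
```

kubectl get pods -A --no-headers | unhealthy_pods prints nothing when everything is healthy, as in the listing above.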

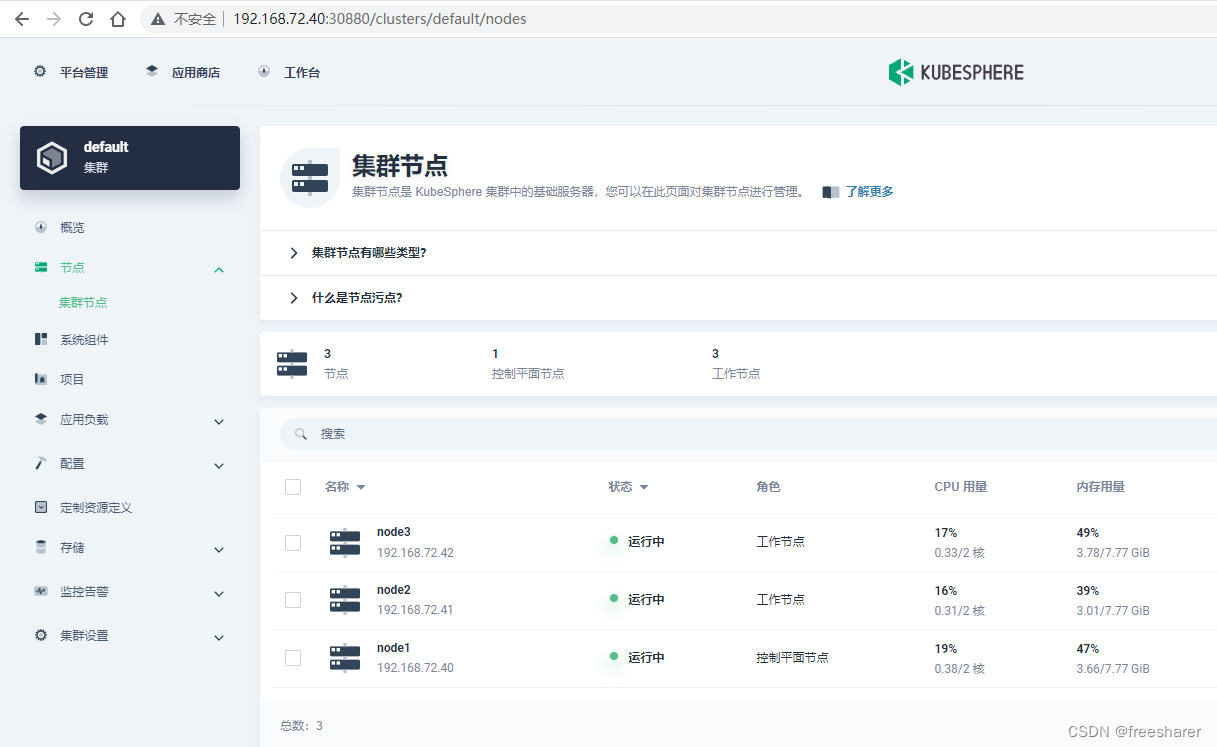

Access the KubeSphere web console at http://{IP}:30880 with the default account and password admin/P@88w0rd.

Confirm the node status

Confirm the system component status