Preface

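The previous two articles covered deploying KubeSphere on an existing Kubernetes cluster and on a single Linux node. In production, a single-node cluster has limited resources and computing power and cannot meet most requirements, so it is not recommended for large-scale data processing. It also has only one node, so it offers no high availability. For deploying and distributing applications, a multi-node architecture is therefore the most common choice.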

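Concepts

A multi-node cluster consists of at least one master node and one worker node. You can use any node as the taskbox that runs the installation. You can also add nodes before or after installation as needed (for example, for high availability).

Master: the master node. It usually hosts the control plane, which controls and manages the whole system.

Worker: a worker node. It runs the applications that are actually deployed on it.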

Official deployment documentation: https://kubesphere.com.cn/docs/installing-on-linux/introduction/multioverview/

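Environment

CentOS 7.9

Three machines: master (192.168.0.223), node1 (192.168.0.108), node2 (192.168.0.14)

Each machine: 4 vCPUs and 4 GB of memory

Servers: Huawei Cloud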

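Configure the prerequisites (run on all nodes)

1. Set the hostname. Run this on each node, using master, node1 and node2 respectively so that the names match the configuration file used later.

```shell
hostnamectl set-hostname master
```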
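This step is repeated on every node with a different name, so a small helper that maps each node's internal IP to its hostname can help avoid typos. This is only a sketch. The function names `node_name_for_ip` and `set_node_hostname` are hypothetical, and the IP-to-name mapping is taken from the configuration file used later in this article. By default the helper only prints the command; set `DRY_RUN=0` to actually run it.

```shell
#!/bin/sh
# Hypothetical helper: map a node's internal IP (as used in config-sample.yaml)
# to the hostname that node should get.
node_name_for_ip() {
  case "$1" in
    192.168.0.223) echo master ;;
    192.168.0.108) echo node1 ;;
    192.168.0.14)  echo node2 ;;
    *) return 1 ;;  # unknown IP: no hostname assigned
  esac
}

# Print the hostnamectl command for the given IP; only execute it when DRY_RUN=0.
set_node_hostname() {
  name=$(node_name_for_ip "$1") || { echo "unknown IP: $1" >&2; return 1; }
  if [ "${DRY_RUN:-1}" = "0" ]; then
    hostnamectl set-hostname "$name"
  else
    echo "hostnamectl set-hostname $name"
  fi
}
```

For example, after sourcing the snippet on node1, you could run `DRY_RUN=0 set_node_hostname 192.168.0.108`.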

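2. Disable the firewall and SELinux, and flush the iptables rules

```shell
systemctl stop firewalld      # stop the firewall now
systemctl disable firewalld   # keep it from starting again after a reboot
setenforce 0                  # switch SELinux to permissive mode for the current boot
sed -i s/SELINUX=enforcing/SELINUX=disabled/g /etc/selinux/config   # disable SELinux permanently
iptables -F                   # flush the existing iptables rules
```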
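`setenforce 0` only switches SELinux to permissive mode until the next reboot. The sed edit makes the `disabled` setting apply from the next boot onward. You can rehearse the substitution on a scratch copy to see exactly what it changes without touching the real /etc/selinux/config. In the sketch below, the sample file contents and the helper name `selinux_mode_after_reboot` are assumptions for illustration.

```shell
#!/bin/sh
# Rehearse the SELinux config edit on a temporary file instead of /etc/selinux/config.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
SELINUX=enforcing
SELINUXTYPE=targeted
EOF

# Same substitution as in the step above, applied to the scratch copy only.
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' "$tmp"

# Hypothetical check: print the SELINUX= value that will apply after the next reboot.
# '^SELINUX=' does not match SELINUXTYPE= lines or commented-out lines.
selinux_mode_after_reboot() {
  grep -E '^SELINUX=' "$1" | cut -d= -f2
}

selinux_mode_after_reboot "$tmp"   # prints: disabled
```

On a real node, `selinux_mode_after_reboot /etc/selinux/config` should print `disabled` after the sed command. Remove the scratch file with `rm -f "$tmp"` when done.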

Download KubeKey (run on the master node)

```shell
export KKZONE=cn   # download KubeKey and its dependencies from the China (cn) zone
curl -sfL https://get-kk.kubesphere.io | VERSION=v2.0.0 sh -   # download kk (KubeKey)
chmod +x kk        # make kk executable
```

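Create the cluster (run on the master node)

Note: unlike a single-node installation, a multi-node installation requires you to create the cluster from a configuration file.

First generate a sample configuration file, then edit it, and finally create the cluster from it.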

1. Generate a sample configuration file

```shell
./kk create config --with-kubernetes v1.21.5 --with-kubesphere v3.2.1   # specify the Kubernetes and KubeSphere versions
```

This command generates a file named config-sample.yaml, which is the cluster configuration file:

```
[root@master ~]# ll
total 68480
-rw-r--r-- 1 root root 4777 Apr 8 20:35 config-sample.yaml
-rwxr-xr-x 1 1001 121 53764096 Mar 8 13:05 kk
-rw-r--r-- 1 root root 16348932 Apr 8 20:24 kubekey-v2.0.0-linux-amd64.tar.gz
```

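You can also use the -f flag to give the generated configuration file a different name or folder. This article sticks with the default config-sample.yaml.

```shell
./kk create config [-f ~/myfolder/abc.yaml]
```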

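2. Edit the configuration file

Below is an example configuration file for a multi-node cluster with one master node.

Two sections must be modified: hosts and roleGroups. Adjust the rest as needed.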

The file after editing (`cat config-sample.yaml`):

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  # One entry per machine.
  # name: the node's hostname.
  # address: the IP the taskbox and other instances use to reach each other over SSH; here, the node's internal IP.
  # internalAddress: the node's internal IP.
  # user: the Linux user to log in as (root here).
  # password: that user's password.
  - {name: master, address: 192.168.0.223, internalAddress: 192.168.0.223, user: root, password: "Zbzr0823"}
  - {name: node1, address: 192.168.0.108, internalAddress: 192.168.0.108, user: root, password: "Zbzr0823"}
  - {name: node2, address: 192.168.0.14, internalAddress: 192.168.0.14, user: root, password: "Zbzr0823"}
  roleGroups:
    etcd:            # nodes that run etcd
    - master         # etcd is installed on the master node
    control-plane:   # control-plane nodes
    - master         # the master node is the control plane
    worker:          # worker nodes; to make the master a worker too, add it to this list in the same format
    - node1
    - node2
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy

    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.21.5
    clusterName: cluster.local
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    plainHTTP: false
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

---
# Everything below is optional: it controls KubeSphere's pluggable components.
# I explained these options in detail in the article on installing KubeSphere
# on Kubernetes. If your cluster has plenty of resources, you can enable
# components selectively by changing false to true. If you leave this part
# unchanged, KubeSphere is installed with the minimal configuration.
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.2.1
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  local_registry: ""
  namespace_override: ""
  # dev_tag: ""
  etcd:
    monitoring: false
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    # apiserver:
    #   resources: {}
    # controllerManager:
    #   resources: {}
    redis:
      enabled: false
      volumeSize: 2Gi
    openldap:
      enabled: false
      volumeSize: 2Gi
    minio:
      volumeSize: 20Gi
    monitoring:
      # type: external
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      GPUMonitoring:
        enabled: false
    gpu:
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
  alerting:
    enabled: false
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops:
    enabled: false
    jenkinsMemoryLim: 2Gi
    jenkinsMemoryReq: 1500Mi
    jenkinsVolumeSize: 8Gi
    jenkinsJavaOpts_Xms: 512m
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:
    enabled: false
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    # ruler:
    #   enabled: true
    #   replicas: 2
    #   resources: {}
  logging:
    enabled: false
    containerruntime: docker
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1
    #   volumeSize: 20Gi
    #   resources: {}
    #   operator:
    #     resources: {}
    #   adapter:
    #     resources: {}
    # node_exporter:
    #   resources: {}
    # alertmanager:
    #   replicas: 1
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
        # resources: {}
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
  kubeedge:
    enabled: false
    cloudCore:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      cloudhubPort: "10000"
      cloudhubQuicPort: "10001"
      cloudhubHttpsPort: "10002"
      cloudstreamPort: "10003"
      tunnelPort: "10004"
      cloudHub:
        advertiseAddress:
          - ""
        nodeLimit: "100"
      service:
        cloudhubNodePort: "30000"
        cloudhubQuicNodePort: "30001"
        cloudhubHttpsNodePort: "30002"
        cloudstreamNodePort: "30003"
        tunnelNodePort: "30004"
    edgeWatcher:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      edgeWatcherAgent:
        nodeSelector: {"node-role.kubernetes.io/worker": ""}
        tolerations: []
```
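With more nodes, writing each `hosts` entry by hand becomes error-prone. The following sketch prints one entry per "hostname IP" pair in the same one-line format as the configuration file. It assumes, as in this tutorial, that `address` and `internalAddress` are the same and that you log in as root. The function name `gen_hosts_entries` and the `CHANGE_ME` password placeholder are hypothetical.

```shell
#!/bin/sh
# Hypothetical generator for the spec.hosts entries of config-sample.yaml.
# Input: one "hostname internal-ip" pair per line on stdin.
# Optional first argument: the root password (defaults to a placeholder).
gen_hosts_entries() {
  password=${1:-CHANGE_ME}
  while read -r name ip; do
    [ -z "$name" ] && continue   # skip blank lines
    printf '  - {name: %s, address: %s, internalAddress: %s, user: root, password: "%s"}\n' \
      "$name" "$ip" "$ip" "$password"
  done
}

gen_hosts_entries <<'EOF'
master 192.168.0.223
node1 192.168.0.108
node2 192.168.0.14
EOF
```

Paste the printed lines under `hosts:` in config-sample.yaml and replace the placeholder with the real password.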

3. Create the cluster from the configuration file

Install the prerequisite package (on all nodes):

```shell
yum install -y conntrack
```
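conntrack must be installed on every node, so you can also drive this step from the master over SSH instead of logging in to each machine. This is a minimal sketch. It assumes root SSH access to the three internal IPs from config-sample.yaml, and the function name `install_prereqs` is hypothetical. By default it only prints the commands; set `DRY_RUN=0` to execute them. ssh prompts for the password unless key-based login is set up.

```shell
#!/bin/sh
# Sketch: install conntrack on every node from the master over SSH.
# Node IPs are the internal addresses from config-sample.yaml.
NODES="192.168.0.223 192.168.0.108 192.168.0.14"

install_prereqs() {
  for ip in $NODES; do   # unquoted on purpose: split the list on spaces
    if [ "${DRY_RUN:-1}" = "0" ]; then
      ssh "root@$ip" 'yum install -y conntrack'
    else
      echo "ssh root@$ip 'yum install -y conntrack'"
    fi
  done
}

install_prereqs
```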

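Then run the following command:

```shell
./kk create cluster -f config-sample.yaml
```

When prompted with `Continue this installation? [yes/no]:`, type yes and the installation proceeds automatically.

After that, wait for the installation to finish. It took about 15 minutes in my environment.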

4. Check the installation progress

```shell
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```
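`-f` keeps the log stream open, so it can be convenient to check non-interactively whether the installer has finished. The sketch below uses a hypothetical helper, `console_url_from_log`. It looks for the `Welcome to KubeSphere!` banner that ks-installer prints when it finishes and, if the banner is present, extracts the console URL. It assumes the banner and the `Console:` line look like the ones in this article's installation log.

```shell
#!/bin/sh
# Hypothetical helper: read ks-installer log text on stdin. If the
# "Welcome to KubeSphere!" banner is present, print the console URL;
# otherwise return 1 (installation not finished yet).
console_url_from_log() {
  _log=$(cat)
  printf '%s\n' "$_log" | grep -q 'Welcome to KubeSphere!' || return 1
  printf '%s\n' "$_log" | sed -n 's/^[[:space:]]*Console: \(http[^ ]*\).*/\1/p' | head -n 1
}

# Example usage (without -f, so kubectl prints the current log and exits):
# kubectl logs -n kubesphere-system \
#   $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') \
#   | console_url_from_log
```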

```
Collecting installation results ...
#####################################################
### Welcome to KubeSphere! ###
#####################################################

Console: http://192.168.0.223:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After you log into the console, please check the
     monitoring status of service components in
     "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are up and running.
  2. Please change the default password after login.

#####################################################
https://kubesphere.io 2022-04-08 21:25:49
#####################################################
```

Output like this means the installation has succeeded.

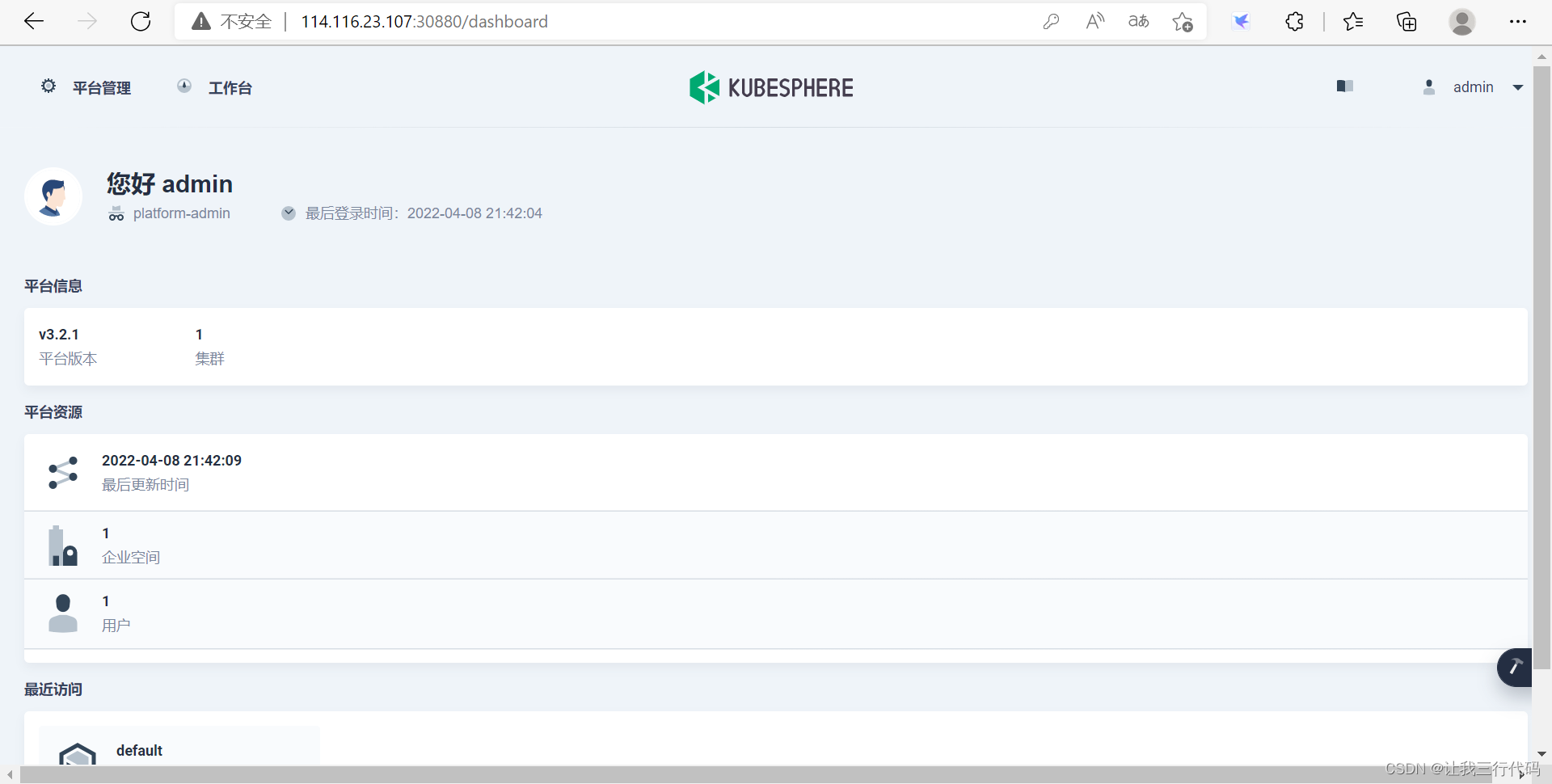

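Access KubeSphere

Address: node IP:30880 (for example, http://192.168.0.223:30880 as printed in the installation log). To reach it from outside the internal network, you may need to open port 30880 in the Huawei Cloud security group.

Account: admin

Initial password: P@88w0rd

After you log in for the first time, you are prompted to set a new password. You then enter the KubeSphere main console: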