Environment: Storm-1.2.2, Kafka_2.10-0.10.2.1, zookeeper-3.4.10, IntelliJ IDEA (Linux version)

All tests in this example were run on Linux.

1. Bolt implementation

package com.strorm.kafka;

import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.IRichBolt;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;

import java.util.Map;

/**
 * @Author zhang
 * @Date 18-6-11 9:12 PM
 */
public class SplitBolt implements IRichBolt {

    private TopologyContext context;
    private OutputCollector collector;

    @Override
    public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {
        this.context = context;
        this.collector = collector;
    }

    @Override
    public void execute(Tuple input) {
        // The Kafka spout delivers each message as a single string field
        String line = input.getString(0);
        System.out.println(line);
        // Ack the tuple so the spout does not replay it after the message timeout
        collector.ack(input);
    }

    @Override
    public void cleanup() {
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        // Declared for a possible downstream bolt; this test bolt does not emit any tuples yet
        declarer.declare(new Fields("word", "count"));
    }

    @Override
    public Map<String, Object> getComponentConfiguration() {
        return null;
    }
}
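
The bolt above only prints each line, although its name and the declared fields ("word", "count") suggest a word-splitting step. Below is a minimal sketch of that splitting logic in plain Java, kept separate from Storm so it can be run on its own. `LineSplitter` and `toWordCounts` are hypothetical names used for illustration and are not part of the original project.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class LineSplitter {

    // Splits a line on whitespace and pairs each word with an initial count of 1.
    // Hypothetical helper: the original bolt does not contain this logic.
    public static List<Map.Entry<String, Integer>> toWordCounts(String line) {
        List<Map.Entry<String, Integer>> result = new ArrayList<>();
        if (line == null) {
            return result;
        }
        for (String word : line.trim().split("\\s+")) {
            // An empty or blank line yields one empty token; skip it
            if (!word.isEmpty()) {
                result.add(new SimpleEntry<>(word, 1));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, Integer> e : toWordCounts("hello storm kafka")) {
            System.out.println(e.getKey() + " " + e.getValue());
        }
    }
}
```

Inside `execute`, each pair could then be passed on with `collector.emit(input, new Values(word, 1))` before the tuple is acked, which would match the declared fields.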

2. Submitting the topology

package com.strorm.kafka;

// SplitBolt lives in this same package (com.strorm.kafka), so it needs no import
import org.apache.storm.Config;
import org.apache.storm.LocalCluster;
import org.apache.storm.kafka.*;
import org.apache.storm.spout.SchemeAsMultiScheme;
import org.apache.storm.topology.TopologyBuilder;

import java.util.UUID;

/**
 * @Author zhang
 * @Date 18-6-11 9:15 PM
 */
public class kafkaApp {

    public static void main(String[] args) {
        TopologyBuilder builder = new TopologyBuilder();

        // ZooKeeper connection string used to discover the Kafka brokers
        String zkConnString = "Server1:2181";
        BrokerHosts hosts = new ZkHosts(zkConnString);

        // Arguments: broker hosts, topic name, ZooKeeper root path for offsets, spout id
        SpoutConfig spoutConfig = new SpoutConfig(hosts, "stormkafka", "/stormkafka", UUID.randomUUID().toString());
        // Deserialize each Kafka message as a plain string
        spoutConfig.scheme = new SchemeAsMultiScheme(new StringScheme());

        KafkaSpout kafkaSpout = new KafkaSpout(spoutConfig);
        builder.setSpout("kafkaspout", kafkaSpout);
        builder.setBolt("split-bolt", new SplitBolt()).shuffleGrouping("kafkaspout");

        Config config = new Config();
        config.setDebug(true);

        // Run the topology in-process for local testing
        LocalCluster cluster = new LocalCluster();
        cluster.submitTopology("wc", config, builder.createTopology());
    }
}
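
One consequence of passing `UUID.randomUUID().toString()` as the spout id is worth spelling out. To my understanding, storm-kafka records consumed offsets in ZooKeeper under a path built from the `zkRoot` argument, the spout id, and the partition, so a fresh id on every run means offsets from earlier runs are not picked up. The sketch below only illustrates that path layout in plain Java. The `zkRoot/id/partition_N` format is an assumption about the library's internals, and `OffsetPathSketch` and `offsetPath` are hypothetical names, not a storm-kafka API.

```java
import java.util.UUID;

public class OffsetPathSketch {

    // Assumed layout of the ZooKeeper node that holds a partition's committed offset.
    // Hypothetical helper for illustration only.
    static String offsetPath(String zkRoot, String spoutId, int partition) {
        return zkRoot + "/" + spoutId + "/partition_" + partition;
    }

    public static void main(String[] args) {
        // A fixed id maps to the same node on every run
        System.out.println(offsetPath("/stormkafka", "kafka-test-spout", 0));

        // A random id maps to a new node on every run, so old offsets are ignored
        System.out.println(offsetPath("/stormkafka", UUID.randomUUID().toString(), 0));
    }
}
```

If resuming from the last committed offset across restarts matters, a fixed id string (such as the made-up `"kafka-test-spout"` above) is the usual choice; a random id is convenient for throwaway tests like this one.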

3. pom.xml dependencies

<dependencies>
    <dependency>
        <groupId>log4j</groupId>
        <artifactId>log4j</artifactId>
        <version>1.2.17</version>
    </dependency>
    <dependency>
        <groupId>org.apache.storm</groupId>
        <artifactId>storm-core</artifactId>
        <version>1.2.2</version>
        <!-- Keep commented out for local testing; enable when deploying to a cluster -->
        <!--<scope>provided</scope>-->
    </dependency>
    <dependency>
        <groupId>org.apache.storm</groupId>
        <artifactId>storm-kafka</artifactId>
        <version>1.2.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka_2.10</artifactId>
        <version>0.10.2.1</version>
        <exclusions>
            <exclusion>
                <groupId>org.apache.zookeeper</groupId>
                <artifactId>zookeeper</artifactId>
            </exclusion>
            <exclusion>
                <groupId>log4j</groupId>
                <artifactId>log4j</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>0.10.2.1</version>
    </dependency>
</dependencies>

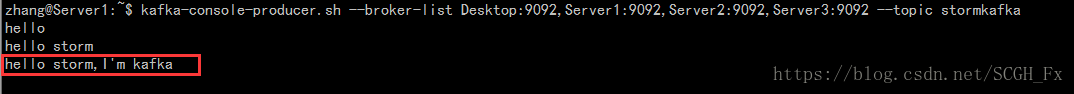

4. Start a message producer

kafka-console-producer.sh --broker-list Desktop:9092,Server1:9092,Server2:9092,Server3:9092 --topic stormkafka

In this setup, Kafka is the producer side and Storm is the consumer.

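5. Testing

Start the ZooKeeper server first, then Kafka, then Nimbus and the Supervisor. (Because the topology here runs in a LocalCluster inside the IDE, Nimbus and the Supervisor are only required when submitting to a real cluster.)

Send some messages from the Kafka producer, as shown below: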

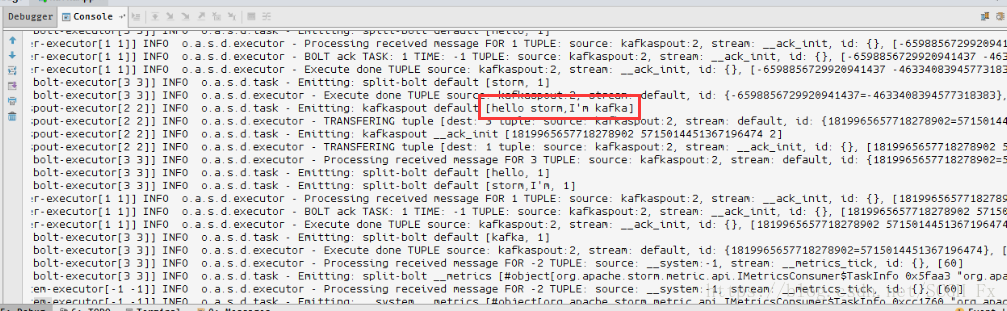

Check the output in IDEA:

The messages from Kafka have been received.

After unpacking the archive, simply import the pom.xml in the root directory as a Maven project.