BlendMask Training with Detectron2 & AdelaiDet: BlendMask Environment Setup and the COCO Dataset

Reference blog: https://blog.csdn.net/weixin_43796496/article/details/125179243

Reference blog: https://blog.csdn.net/weixin_43823854/article/details/108980188

Training data: a competition dataset

Environment: Windows, PyTorch 1.10.0, CUDA 11.3, a single GTX 1650S GPU

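I. Download Detectron2

Searching for BlendMask on GitHub brings up the AdelaiDet repository.

AdelaiDet is an open-source toolbox built on top of Detectron2 that covers several instance-level recognition tasks, so it can also be seen as an extension of Detectron2. BlendMask is one of the tasks included in AdelaiDet.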

II. Setup Steps

1. Basic environment setup

Run the following in a terminal:

```
conda create -n detectron2 python=3.8   # create a virtual environment
conda activate detectron2               # activate the virtual environment
nvcc --version                          # check the CUDA version
```

For detailed setup, see the PyTorch environment setup guide.

2. Install Detectron2

Follow the official installation steps.

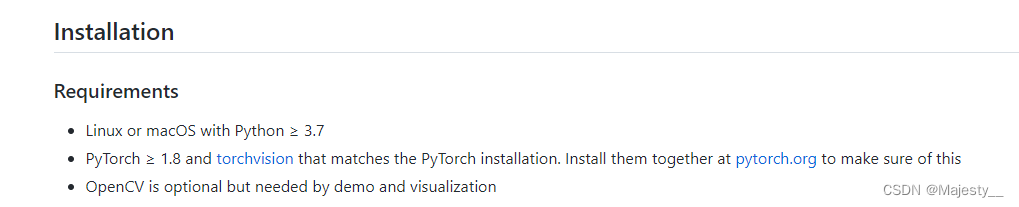

Requirements

Official documentation:

https://github.com/facebookresearch/detectron2/blob/main/INSTALL.md

Download the Detectron2 source code:

```
git clone https://github.com/facebookresearch/detectron2.git   # download the code with git
python -m pip install -e detectron2                               # install detectron2
```

If you run into problems, see this blog post: https://blog.csdn.net/iracer/article/details/125755029

3. Install AdelaiDet

On Windows:

```
git clone https://github.com/aim-uofa/AdelaiDet.git
cd AdelaiDet
python setup.py build develop
```

If you are using Docker, a prebuilt image is available:

```
docker pull tianzhi0549/adet:latest
```

If your torch version is 1.11.0 or later, the build fails with the following error, because newer PyTorch releases have removed THC/THC.h:

```
fatal error: THC/THC.h: No such file or directory
```
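You can check ahead of time whether your environment needs the ml_nms.cu patch by comparing the installed PyTorch version against 1.11. The following is a minimal sketch. The helper names `torch_version_tuple` and `needs_thc_patch` are hypothetical, and the code assumes, as described above, that THC/THC.h is missing from 1.11.0 onward.

```python
import re
from typing import Tuple


def torch_version_tuple(version: str) -> Tuple[int, int]:
    """Parse (major, minor) from a version string such as '1.10.0+cu113'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def needs_thc_patch(version: str) -> bool:
    """True for PyTorch 1.11 and later, where THC/THC.h is no longer shipped."""
    return torch_version_tuple(version) >= (1, 11)


# Usage inside the detectron2 environment:
#   import torch
#   print(needs_thc_patch(torch.__version__))
```

Only the major and minor numbers are compared, so local suffixes such as `+cu113` do not affect the result.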

Replace the contents of AdelaiDet/adet/layers/csrc/ml_nms/ml_nms.cu with the following:

For details, see: https://github.com/aim-uofa/AdelaiDet/issues/550 & https://github.com/aim-uofa/AdelaiDet/pull/518

```cpp
// Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
#include <ATen/ATen.h>
#include <ATen/cuda/CUDAContext.h>
// #include <THC/THC.h>  // removed in PyTorch >= 1.11
#include <ATen/cuda/DeviceUtils.cuh>
#include <ATen/ceil_div.h>
#include <ATen/cuda/ThrustAllocator.h>
#include <c10/cuda/CUDACachingAllocator.h>  // raw_alloc / raw_delete
#include <c10/cuda/CUDAException.h>         // C10_CUDA_CHECK

#include <vector>
#include <iostream>

int const threadsPerBlock = sizeof(unsigned long long) * 8;

// IoU of two boxes [x1, y1, x2, y2, score, label]; boxes of different labels never overlap.
__device__ inline float devIoU(float const * const a, float const * const b) {
  if (a[5] != b[5]) {
    return 0.0;
  }
  float left = max(a[0], b[0]), right = min(a[2], b[2]);
  float top = max(a[1], b[1]), bottom = min(a[3], b[3]);
  float width = max(right - left + 1, 0.f), height = max(bottom - top + 1, 0.f);
  float interS = width * height;
  float Sa = (a[2] - a[0] + 1) * (a[3] - a[1] + 1);
  float Sb = (b[2] - b[0] + 1) * (b[3] - b[1] + 1);
  return interS / (Sa + Sb - interS);
}

__global__ void ml_nms_kernel(const int n_boxes, const float nms_overlap_thresh,
                              const float *dev_boxes, unsigned long long *dev_mask) {
  const int row_start = blockIdx.y;
  const int col_start = blockIdx.x;

  // if (row_start > col_start) return;

  const int row_size =
      min(n_boxes - row_start * threadsPerBlock, threadsPerBlock);
  const int col_size =
      min(n_boxes - col_start * threadsPerBlock, threadsPerBlock);

  // Cache this column block's boxes in shared memory.
  __shared__ float block_boxes[threadsPerBlock * 6];
  if (threadIdx.x < col_size) {
    block_boxes[threadIdx.x * 6 + 0] =
        dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 6 + 0];
    block_boxes[threadIdx.x * 6 + 1] =
        dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 6 + 1];
    block_boxes[threadIdx.x * 6 + 2] =
        dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 6 + 2];
    block_boxes[threadIdx.x * 6 + 3] =
        dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 6 + 3];
    block_boxes[threadIdx.x * 6 + 4] =
        dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 6 + 4];
    block_boxes[threadIdx.x * 6 + 5] =
        dev_boxes[(threadsPerBlock * col_start + threadIdx.x) * 6 + 5];
  }
  __syncthreads();

  // Each thread builds a 64-bit mask of the boxes in this column block that it suppresses.
  if (threadIdx.x < row_size) {
    const int cur_box_idx = threadsPerBlock * row_start + threadIdx.x;
    const float *cur_box = dev_boxes + cur_box_idx * 6;
    int i = 0;
    unsigned long long t = 0;
    int start = 0;
    if (row_start == col_start) {
      start = threadIdx.x + 1;
    }
    for (i = start; i < col_size; i++) {
      if (devIoU(cur_box, block_boxes + i * 6) > nms_overlap_thresh) {
        t |= 1ULL << i;
      }
    }
    const int col_blocks = at::ceil_div(n_boxes, threadsPerBlock);
    dev_mask[cur_box_idx * col_blocks + col_start] = t;
  }
}

namespace adet {

// boxes is a N x 6 tensor
at::Tensor ml_nms_cuda(const at::Tensor boxes, const float nms_overlap_thresh) {
  using scalar_t = float;
  TORCH_CHECK(boxes.is_cuda(), "boxes must be a CUDA tensor");
  auto scores = boxes.select(1, 4);
  auto order_t = std::get<1>(scores.sort(0, /* descending=*/true));
  auto boxes_sorted = boxes.index_select(0, order_t);

  int boxes_num = boxes.size(0);
  const int col_blocks = at::ceil_div(boxes_num, threadsPerBlock);
  scalar_t* boxes_dev = boxes_sorted.data_ptr<scalar_t>();

  // THCState *state = at::globalContext().lazyInitCUDA(); // TODO replace with getTHCState
  unsigned long long* mask_dev = NULL;
  // THCudaCheck(THCudaMalloc(state, (void**) &mask_dev,
  //                          boxes_num * col_blocks * sizeof(unsigned long long)));
  mask_dev = (unsigned long long*) c10::cuda::CUDACachingAllocator::raw_alloc(
      boxes_num * col_blocks * sizeof(unsigned long long));

  dim3 blocks(at::ceil_div(boxes_num, threadsPerBlock),
              at::ceil_div(boxes_num, threadsPerBlock));
  dim3 threads(threadsPerBlock);
  ml_nms_kernel<<<blocks, threads>>>(boxes_num,
                                     nms_overlap_thresh,
                                     boxes_dev,
                                     mask_dev);

  // Copy the suppression masks to the host and check the CUDA error code.
  std::vector<unsigned long long> mask_host(boxes_num * col_blocks);
  C10_CUDA_CHECK(cudaMemcpy(&mask_host[0],
                            mask_dev,
                            sizeof(unsigned long long) * boxes_num * col_blocks,
                            cudaMemcpyDeviceToHost));

  std::vector<unsigned long long> remv(col_blocks);
  memset(&remv[0], 0, sizeof(unsigned long long) * col_blocks);

  at::Tensor keep = at::empty({boxes_num}, boxes.options().dtype(at::kLong).device(at::kCPU));
  int64_t* keep_out = keep.data_ptr<int64_t>();

  // Greedy pass in score order: keep a box unless an already-kept box suppressed it.
  int num_to_keep = 0;
  for (int i = 0; i < boxes_num; i++) {
    int nblock = i / threadsPerBlock;
    int inblock = i % threadsPerBlock;
    if (!(remv[nblock] & (1ULL << inblock))) {
      keep_out[num_to_keep++] = i;
      unsigned long long *p = &mask_host[0] + i * col_blocks;
      for (int j = nblock; j < col_blocks; j++) {
        remv[j] |= p[j];
      }
    }
  }

  c10::cuda::CUDACachingAllocator::raw_delete(mask_dev);
  // TODO improve this part
  return std::get<0>(order_t.index({
                         keep.narrow(/*dim=*/0, /*start=*/0, /*length=*/num_to_keep).to(
                             order_t.device(), keep.scalar_type())
                     }).sort(0, false));
}

}  // namespace adet
```

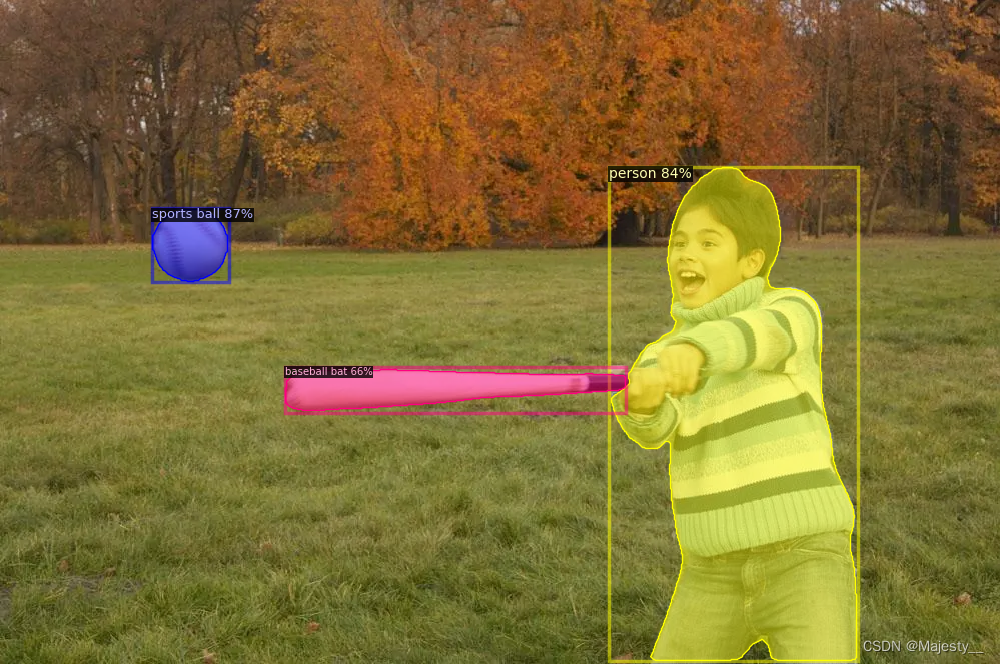
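The same logic as the kernel and host loop in ml_nms.cu can be written as a plain-Python sketch for reference. Each box is a row `[x1, y1, x2, y2, score, label]`, and boxes are visited in descending score order. A kept box suppresses later boxes of the *same* label whose IoU exceeds the threshold, using the same `+1` pixel convention as `devIoU`. The function names are hypothetical. The sketch is only meant for building intuition or checking small cases on the CPU, not as a replacement for the CUDA code, and tied scores may be ordered differently than by `torch.sort`.

```python
from typing import List, Sequence

Box = Sequence[float]  # [x1, y1, x2, y2, score, label]


def dev_iou(a: Box, b: Box) -> float:
    # Boxes with different labels never suppress each other.
    if a[5] != b[5]:
        return 0.0
    left, right = max(a[0], b[0]), min(a[2], b[2])
    top, bottom = max(a[1], b[1]), min(a[3], b[3])
    # "+1" pixel convention, as in the CUDA kernel.
    width = max(right - left + 1, 0.0)
    height = max(bottom - top + 1, 0.0)
    inter = width * height
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


def ml_nms_reference(boxes: List[Box], thresh: float) -> List[int]:
    # Visit boxes from highest to lowest score (column 4).
    order = sorted(range(len(boxes)), key=lambda i: boxes[i][4], reverse=True)
    suppressed = [False] * len(order)
    keep = []
    for pos, idx in enumerate(order):
        if suppressed[pos]:
            continue
        keep.append(idx)
        # Only kept boxes suppress lower-scored boxes.
        for later in range(pos + 1, len(order)):
            if dev_iou(boxes[idx], boxes[order[later]]) > thresh:
                suppressed[later] = True
    # Like the CUDA version, return the kept original indices in ascending order.
    return sorted(keep)


boxes = [
    [0, 0, 10, 10, 0.9, 0],
    [1, 1, 11, 11, 0.8, 0],    # overlaps box 0, same label -> suppressed
    [1, 1, 11, 11, 0.7, 1],    # same position, different label -> kept
    [50, 50, 60, 60, 0.6, 0],  # far away -> kept
]
print(ml_nms_reference(boxes, 0.5))  # [0, 2, 3]
```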

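4. Run the demo with the official weights

- Remember to install the dependencies listed in the requirements file (usually requirements.txt).
- Choose one of the officially released pretrained weights, e.g. blendmask_r101_dcni3_5x.pth.
- Put blendmask_r101_dcni3_5x.pth in the AdelaiDet directory.
- Run in a terminal: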

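```
python demo/demo.py \
    --config-file configs/BlendMask/R_101_dcni3_5x.yaml \
    --input input1.jpg \
    --confidence-threshold 0.35 \
    --opts MODEL.WEIGHTS blendmask_r101_dcni3_5x.pth
```

On Windows, cmd does not support the `\` line continuation, so put the command on one line:

```
python demo/demo.py --config-file configs/BlendMask/R_101_dcni3_5x.yaml --input ./datasets/00000.jpg --confidence-threshold 0.35 --opts MODEL.WEIGHTS ./blendmask_r101_dcni3_5x.pth
```

The input image (input1.jpg / 00000.jpg) is any picture of your choice. I put mine under datasets, so adjust the --input path to wherever yours is.

If an image with instance segmentation results appears, the setup works.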

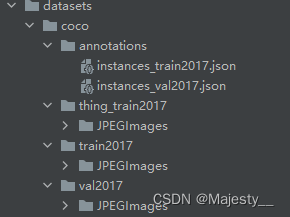

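5. Use the COCO 2017 dataset

- First, prepare your own dataset in COCO format. The directory layout is shown below, relative to the AdelaiDet root. prepare_thing_sem_from_instance.py looks for the dataset folder inside datasets/, next to itself.

```
datasets/coco2017/annotations
datasets/coco2017/train2017
datasets/coco2017/val2017
```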
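Before running the preparation script, a quick check that the expected files exist can save you a confusing traceback. This is a minimal sketch. `missing_coco_paths` is a hypothetical helper, and it assumes annotation files named `annotations/instances_<split>.json`, the pattern used by `annotation_name` in prepare_thing_sem_from_instance.py.

```python
import os
from typing import Iterable, List


def missing_coco_paths(root: str,
                       splits: Iterable[str] = ("train2017", "val2017")) -> List[str]:
    """Return the expected COCO-style paths under `root` that do not exist.

    Assumes one image folder per split and annotation files named
    annotations/instances_<split>.json.
    """
    expected = []
    for split in splits:
        expected.append(os.path.join(root, split))  # image folder
        expected.append(os.path.join(root, "annotations", f"instances_{split}.json"))
    return [path for path in expected if not os.path.exists(path)]


# Example: point root at your dataset folder; an empty list means nothing is missing.
# print(missing_coco_paths("datasets/coco2017"))
```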

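- cd into AdelaiDet/datasets and edit prepare_thing_sem_from_instance.py. Change coco in the file to the name of your dataset folder, and change split_name to the name of the folder that holds your training images.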

```python
# (imports and helper functions earlier in the file are unchanged)

def get_parser():
    parser = argparse.ArgumentParser(description="Keep only model in ckpt")
    parser.add_argument(
        "--dataset-name",
        default="coco2017",
        help="dataset to generate",
    )
    return parser


if __name__ == "__main__":
    args = get_parser().parse_args()
    dataset_dir = os.path.join(os.path.dirname(__file__), args.dataset_name)
    if args.dataset_name == "coco2017":
        thing_id_to_contiguous_id = _get_coco_instances_meta()["thing_dataset_id_to_contiguous_id"]
        split_name = 'train2017'
        annotation_name = "annotations/instances_{}.json"
    else:
        thing_id_to_contiguous_id = {1: 0}
        split_name = 'train'
        annotation_name = "annotations/{}_person.json"
    # split_name and annotation_name decide which annotation file is converted
    # and where the output folder thing_<split_name> is written.
    for s in [split_name]:
        create_coco_semantic_from_instance(
            os.path.join(dataset_dir, annotation_name.format(s)),
            os.path.join(dataset_dir, "thing_{}".format(s)),
            thing_id_to_contiguous_id
        )
```

- Run:

```
python datasets/prepare_thing_sem_from_instance.py   # extract semantic ("thing") maps from the instance annotations
```

Error: `No such file or directory`

If train2017 contains subfolders, create folders with the same names under thing_train2017.
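Creating the matching folders by hand gets tedious when there are many. The sketch below copies the folder structure (not the files) of the image folder into the output folder. `mirror_subdirs` is a hypothetical helper, and the paths in the usage note assume the datasets/coco2017 layout.

```python
import os
from typing import List


def mirror_subdirs(src_root: str, dst_root: str) -> List[str]:
    """Create under dst_root every subdirectory that exists under src_root.

    Files are not copied. Returns the directories that were newly created.
    """
    created = []
    for dirpath, dirnames, _filenames in os.walk(src_root):
        dirnames.sort()  # deterministic traversal order
        rel = os.path.relpath(dirpath, src_root)
        for name in dirnames:
            target = os.path.normpath(os.path.join(dst_root, rel, name))
            if not os.path.isdir(target):
                os.makedirs(target)
                created.append(target)
    return created
```

For example, `mirror_subdirs("datasets/coco2017/train2017", "datasets/coco2017/thing_train2017")`, then re-run the preparation script. Calling it again is harmless because existing folders are skipped.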

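6. Modify the relevant configuration

- Open AdelaiDet/adet/config/defaults.py and change SyncBN to BN. I train on a single GPU, and SyncBN synchronizes batch-norm statistics across multiple GPUs during distributed training. If you have multiple GPUs, you can skip this step.

```python
#_C.MODEL.BASIS_MODULE.NORM = "SyncBN"
_C.MODEL.BASIS_MODULE.NORM = "BN"
#_C.MODEL.FCPOSE.BASIS_MODULE.BN_TYPE = "SyncBN"
_C.MODEL.FCPOSE.BASIS_MODULE.BN_TYPE = "BN"
```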

- Register the dataset. The relevant functions in Detectron2 are:

```
data/datasets/builtin.py/register_all_coco(root="datasets")
data/datasets/builtin_meta.py/_get_builtin_metadata(dataset_name)
data/datasets/builtin_meta.py/_get_coco_instances_meta()
data/datasets/register_coco.py/register_coco_instances(name, metadata, json_file, image_root)
# The four functions above are all closely tied to dataset registration; make sure you understand them.
data/datasets/coco.py/load_coco_json(json_file, image_root, dataset_name=None, extra_annotation_keys=None)
```

- First, place the training set as the documentation requires. For details, see README.md in Detectron2's datasets directory.

```
# COCO format
coco/
  annotations/
    instances_{train,val}2017.json
    person_keypoints_{train,val}2017.json
  {train,val}2017/
    # image files that are mentioned in the corresponding json
```

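**The _PREDEFINED_SPLITS_COCO dictionary**

In the _PREDEFINED_SPLITS_COCO dictionary (in builtin.py), add an entry `your_dataset_name: (image_root, json_file)`. Both paths are relative to the root passed to register_all_coco (datasets by default).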

```python
_PREDEFINED_SPLITS_COCO = {}

_PREDEFINED_SPLITS_COCO["coco"] = {
    "coco_2014_train": ("coco/train2014", "coco/annotations/instances_train2014.json"),
    "coco_2014_val": ("coco/val2014", "coco/annotations/instances_val2014.json"),
    "coco_2014_minival": ("coco/val2014", "coco/annotations/instances_minival2014.json"),
    "coco_2014_minival_100": ("coco/val2014", "coco/annotations/instances_minival2014_100.json"),
    "coco_2014_valminusminival": (
        "coco/val2014",
        "coco/annotations/instances_valminusminival2014.json",
    ),
    "coco_2017_train": ("coco/train2017", "coco/annotations/instances_train2017.json"),
    "coco_2017_val": ("coco/val2017", "coco/annotations/instances_val2017.json"),
    "coco_2017_test": ("coco/test2017", "coco/annotations/image_info_test2017.json"),
    "coco_2017_test-dev": ("coco/test2017", "coco/annotations/image_info_test-dev2017.json"),
    "coco_2017_val_100": ("coco/val2017", "coco/annotations/instances_val2017_100.json"),
    # My newly registered datasets: double-check the paths and the registered
    # names (e.g. coco_raw_train).
    "coco_raw_train": ("coco_raw/train2017", "coco_raw/annotations/raw_train.json"),
    "coco_raw_val": ("coco_raw/val2017", "coco_raw/annotations/raw_val.json"),
    # "coco_raw_frcnn_train": ("coco_raw/train2017", "coco_raw/annotations/raw_train.json"),
    # "coco_raw_frcnn_val": ("coco_raw/val2017", "coco_raw/annotations/raw_val.json"),
}
```
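A wrong entry here usually only surfaces once training starts. This is because register_all_coco joins each (image_root, json_file) pair with its root argument, which defaults to datasets. The minimal sketch below checks the entries up front. `missing_split_files` is a hypothetical helper, and it assumes the default root `datasets`.

```python
import os
from typing import Dict, List, Tuple


def missing_split_files(splits: Dict[str, Tuple[str, str]],
                        root: str = "datasets") -> List[str]:
    """Report registered splits whose image folder or json file is missing under root."""
    problems = []
    for name, (image_root, json_file) in splits.items():
        image_dir = os.path.join(root, image_root)
        json_path = os.path.join(root, json_file)
        if not os.path.isdir(image_dir):
            problems.append(f"{name}: image folder not found: {image_dir}")
        if not os.path.isfile(json_path):
            problems.append(f"{name}: json file not found: {json_path}")
    return problems
```

For example, to check only the custom entries, run `missing_split_files({k: v for k, v in _PREDEFINED_SPLITS_COCO["coco"].items() if k.startswith("coco_raw")})` from the AdelaiDet root.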

The COCO_CATEGORIES list

```python
COCO_CATEGORIES = [
    {"color": [220, 20, 60], "isthing": 1, "id": 1, "name": "person"},
    {"color": [119, 11, 32], "isthing": 1, "id": 2, "name": "bicycle"},
    {"color": [0, 0, 142], "isthing": 1, "id": 3, "name": "car"},
    {"color": [0, 0, 230], "isthing": 1, "id": 4, "name": "motorcycle"},
    {"color": [106, 0, 228], "isthing": 1, "id": 5, "name": "airplane"},
    {"color": [0, 60, 100], "isthing": 1, "id": 6, "name": "bus"},
    {"color": [0, 80, 100], "isthing": 1, "id": 7, "name": "train"},
    {"color": [0, 0, 70], "isthing": 1, "id": 8, "name": "truck"},
    {"color": [0, 0, 192], "isthing": 1, "id": 9, "name": "boat"},
    # ... (omitted)
]

# Build your own list the same way. Note that the ids here start from 1
# (I have not tried starting from 0).
# Each name must match json_file, i.e. follow the same order as its
# categories; check this carefully.
COCO_CATEGORIES_raw = [
    {"color": [119, 11, 32], "isthing": 1, "id": 1, "name": "bicycle"},
    {"color": [0, 0, 142], "isthing": 1, "id": 2, "name": "car"},
    {"color": [220, 20, 60], "isthing": 1, "id": 3, "name": "person"},
]
```
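Writing COCO_CATEGORIES_raw by hand is where mismatched ids, names, or order tend to creep in. One alternative is to generate the list from the annotation file itself. This is a minimal sketch. The helper names and the color palette are made up (the colors only affect visualization). The code sorts categories by id because, as far as I know, Detectron2's load_coco_json reads category names in ascending id order and checks them against the registered metadata.

```python
import json
from typing import Dict, List

# Arbitrary RGB display colors, reused cyclically.
_PALETTE = [[119, 11, 32], [0, 0, 142], [220, 20, 60], [0, 0, 230], [106, 0, 228]]


def categories_from_coco(coco: Dict) -> List[Dict]:
    """Build a COCO_CATEGORIES_raw-style list from a loaded COCO annotation dict.

    Categories are sorted by "id", so the list order, ids and names all follow
    the json file.
    """
    cats = sorted(coco["categories"], key=lambda c: c["id"])
    return [
        {"color": _PALETTE[i % len(_PALETTE)], "isthing": 1, "id": c["id"], "name": c["name"]}
        for i, c in enumerate(cats)
    ]


def categories_from_json_file(json_path: str) -> List[Dict]:
    """Load a COCO annotation file and build the category list from it."""
    with open(json_path, encoding="utf-8") as f:
        return categories_from_coco(json.load(f))
```

For example, `categories_from_json_file("datasets/coco_raw/annotations/raw_train.json")` can be printed and pasted into builtin_meta.py, or compared against an existing hand-written list.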

The _get_builtin_metadata(dataset_name) function

Next, register the metadata for the dataset:

```python
def _get_builtin_metadata(dataset_name):
    if dataset_name == "coco":
        return _get_coco_instances_meta()  # the entry point is still "coco"
    if dataset_name == "coco_panoptic_separated":
        return _get_coco_panoptic_separated_meta()
    elif dataset_name == "coco_person":
        return {
            "thing_classes": ["person"],
            "keypoint_names": COCO_PERSON_KEYPOINT_NAMES,
            "keypoint_flip_map": COCO_PERSON_KEYPOINT_FLIP_MAP,
            "keypoint_connection_rules": KEYPOINT_CONNECTION_RULES,
        }
```

The _get_coco_instances_meta() function

```python
# Note: you can modify the original function in place. Here I replaced the original
# COCO_CATEGORIES with my own category list, COCO_CATEGORIES_raw; substitute your own.
def _get_coco_instances_meta():
    thing_ids = [k["id"] for k in COCO_CATEGORIES_raw if k["isthing"] == 1]
    thing_colors = [k["color"] for k in COCO_CATEGORIES_raw if k["isthing"] == 1]
    assert len(thing_ids) == 3, len(thing_ids)  # 3 = number of classes in my dataset
    # Mapping from the (possibly non-contiguous) dataset category id to an id in [0, num_classes - 1]
    thing_dataset_id_to_contiguous_id = {k: i for i, k in enumerate(thing_ids)}
    thing_classes = [k["name"] for k in COCO_CATEGORIES_raw if k["isthing"] == 1]
    # print("_get_coco_instances_meta thing_classes:", thing_classes)
    # -> _get_coco_instances_meta thing_classes: ['bicycle', 'car', 'person']
    ret = {
        "thing_dataset_id_to_contiguous_id": thing_dataset_id_to_contiguous_id,
        "thing_classes": thing_classes,
        "thing_colors": thing_colors,
    }
    print("ret:", ret)
    # -> ret: {'thing_dataset_id_to_contiguous_id': {1: 0, 2: 1, 3: 2},
    #          'thing_classes': ['bicycle', 'car', 'person'],
    #          'thing_colors': [[119, 11, 32], [0, 0, 142], [220, 20, 60]]}
    return ret
```
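The mapping printed above ({1: 0, 2: 1, 3: 2}) is what turns the category_id values in the json into the contiguous labels the model trains on. The sketch below redoes the same computation for an arbitrary category list, without the hard-coded class-count assert, and applies the mapping to a few annotations. The function names are hypothetical. The remapping step only approximates what load_coco_json does internally, as I understand it.

```python
from typing import Dict, List


def build_instances_meta(categories: List[Dict]) -> Dict:
    """Same computation as _get_coco_instances_meta, for any category list."""
    things = [c for c in categories if c["isthing"] == 1]
    thing_ids = [c["id"] for c in things]
    return {
        # dataset category id -> contiguous training label in [0, len(things) - 1]
        "thing_dataset_id_to_contiguous_id": {k: i for i, k in enumerate(thing_ids)},
        "thing_classes": [c["name"] for c in things],
        "thing_colors": [c["color"] for c in things],
    }


def to_contiguous_labels(annotations: List[Dict], id_map: Dict[int, int]) -> List[int]:
    """Translate each annotation's json category_id into its training label.

    A KeyError here means the json uses a category id missing from the list.
    """
    return [id_map[ann["category_id"]] for ann in annotations]


categories = [
    {"color": [119, 11, 32], "isthing": 1, "id": 1, "name": "bicycle"},
    {"color": [0, 0, 142], "isthing": 1, "id": 2, "name": "car"},
    {"color": [220, 20, 60], "isthing": 1, "id": 3, "name": "person"},
]
meta = build_instances_meta(categories)
print(meta["thing_dataset_id_to_contiguous_id"])  # {1: 0, 2: 1, 3: 2}
print(to_contiguous_labels([{"category_id": 3}, {"category_id": 1}],
                           meta["thing_dataset_id_to_contiguous_id"]))  # [2, 0]
```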

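After adding or modifying the items above, you can configure the yaml parameter file.

Open AdelaiDet/configs/BlendMask/Base-BlendMask.yaml. DATASETS.TRAIN and DATASETS.TEST take names registered in _PREDEFINED_SPLITS_COCO (for the custom dataset above, e.g. coco_raw_train and coco_raw_val):

```yaml
DATASETS:
  TRAIN: ("coco_2017_train",)
  TEST: ("coco_2017_val",)
SOLVER:
  IMS_PER_BATCH: 4
  BASE_LR: 0.01  # Note that RetinaNet uses a different default learning rate
  STEPS: (60000, 80000)
  MAX_ITER: 90000
```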
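If you lower IMS_PER_BATCH to fit a small GPU, as with 4 here, one commonly used heuristic is the linear scaling rule. It scales BASE_LR by the same factor as the batch size and stretches STEPS and MAX_ITER inversely. This post does not apply it, and the sketch below only shows the arithmetic. It assumes the reference schedule was tuned for 16 images per batch, so verify this against the config you started from. `scale_solver` is a hypothetical helper.

```python
from typing import Dict, Tuple


def scale_solver(ims_per_batch: int,
                 ref_ims_per_batch: int,
                 ref_base_lr: float,
                 ref_steps: Tuple[int, ...],
                 ref_max_iter: int) -> Dict:
    """Linear scaling rule: LR proportional to batch size, iteration counts inversely.

    This keeps the number of images seen in each LR phase (roughly, the number
    of epochs) the same as in the reference schedule.
    """
    factor = ims_per_batch / ref_ims_per_batch
    return {
        "IMS_PER_BATCH": ims_per_batch,
        "BASE_LR": ref_base_lr * factor,
        "STEPS": tuple(int(round(s / factor)) for s in ref_steps),
        "MAX_ITER": int(round(ref_max_iter / factor)),
    }


# Assumed reference: 16 images/batch, BASE_LR 0.01, STEPS (60000, 80000), MAX_ITER 90000.
print(scale_solver(4, 16, 0.01, (60000, 80000), 90000))
```

The resulting schedule is four times longer, which may be impractical on a single GTX 1650S. Many people instead keep the shorter schedule and accept fewer epochs.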

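7. Train

- From the AdelaiDet directory, run:

```
OMP_NUM_THREADS=1 python tools/train_net.py \
    --config-file configs/BlendMask/R_50_1x.yaml \
    --num-gpus 1 \
    OUTPUT_DIR training_dir/blendmask_R_50_1x
```

Here --config-file is the path to the config file, --num-gpus 1 trains on one GPU, and OUTPUT_DIR is where the training output is written.

On Windows (cmd), set the environment variable first and run the command on one line (in PowerShell, use `$env:OMP_NUM_THREADS=1` instead of `set`):

```
set OMP_NUM_THREADS=1
python tools/train_net.py --config-file configs/BlendMask/R_50_1x.yaml --num-gpus 1 OUTPUT_DIR training_dir/blendmask_R_50_1x
```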
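The same training command can also be launched from Python, which works identically in bash, cmd, and PowerShell because the environment variable is set programmatically. This is a minimal sketch. `build_train_command` is a hypothetical helper, and it only uses the flags shown in the commands above.

```python
import os
import sys
from typing import Dict, List, Tuple


def build_train_command(config_file: str, output_dir: str,
                        num_gpus: int = 1) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) for running tools/train_net.py with OMP_NUM_THREADS=1."""
    argv = [
        sys.executable, "tools/train_net.py",  # use the current (conda env) Python
        "--config-file", config_file,
        "--num-gpus", str(num_gpus),
        "OUTPUT_DIR", output_dir,  # trailing KEY VALUE pairs override config options
    ]
    env = dict(os.environ, OMP_NUM_THREADS="1")  # copy of the current env plus the override
    return argv, env
```

Run it from the AdelaiDet root, e.g. `argv, env = build_train_command("configs/BlendMask/R_50_1x.yaml", "training_dir/blendmask_R_50_1x")` followed by `subprocess.run(argv, env=env, check=True)`.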