GPT1开山之作:Improving language understanding by generative pre-training

本文提出了gpt1,即使用无标签的数据对模型先进行训练,让模型学习能够适应各个任务的通用表示;后使用小部分 task-aware的数据对模型进行微调,可以在各个task上实现更强大的功能。

设计框架

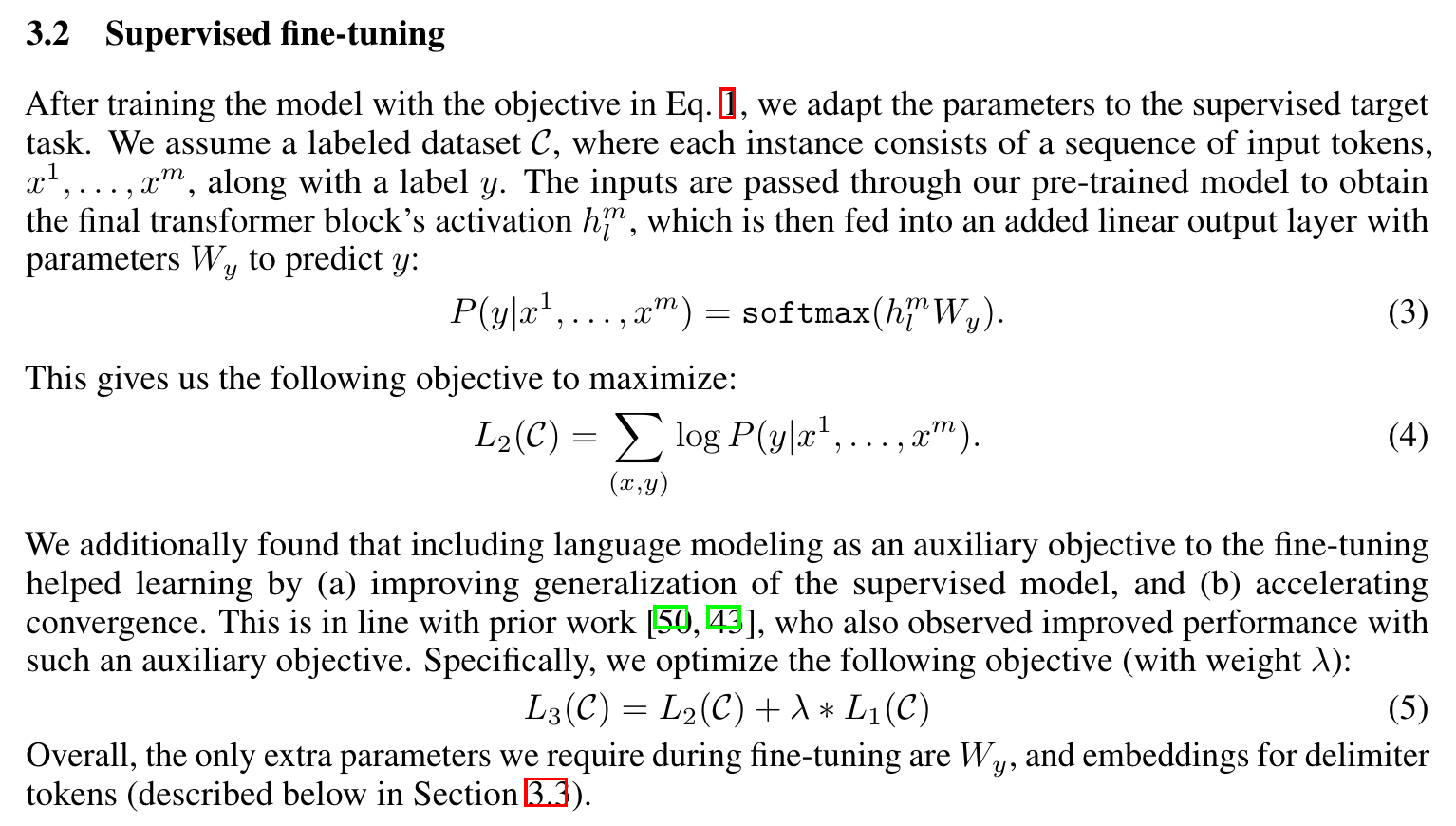

分为两块,pre-train和fine-tune,使用transformer模型的解码器部分。

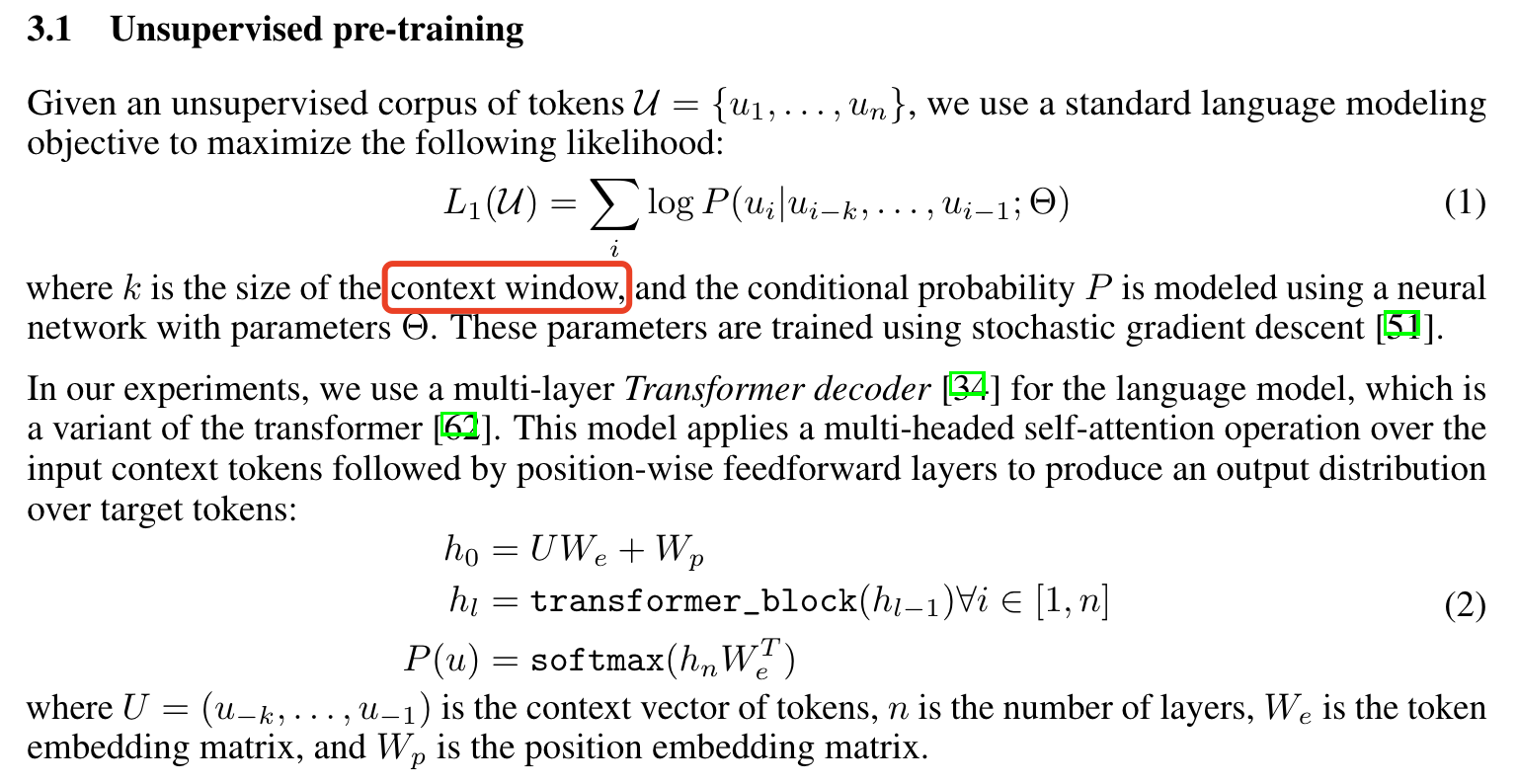

第一阶段:Unsupervised pre-training

预测连续的k个词的下一个词的概率,本质就是最大似然估计,让模型下一个输出的单词的最大概率的输出是真实样本的下一个单词的 u i u_i ui。后面的元素不会看,只看前k个元素,这就和transformer的解码器极为相似。

第二阶段:Supervised fine-tuning

训练下游的task的数据集拥有以下形式的数据:假设每句话中有m个单词,输入序列 { x 1 , x 2 , . . . , x m } \{x^1,x^2,...,x^m\} {

x1,x2,...,xm} 和一个标签 y y y(忧下游任务决定)。

在这个阶段,作者定义了两个优化函数,L1保证句子的连贯性,L2保证下游任务的准确率。

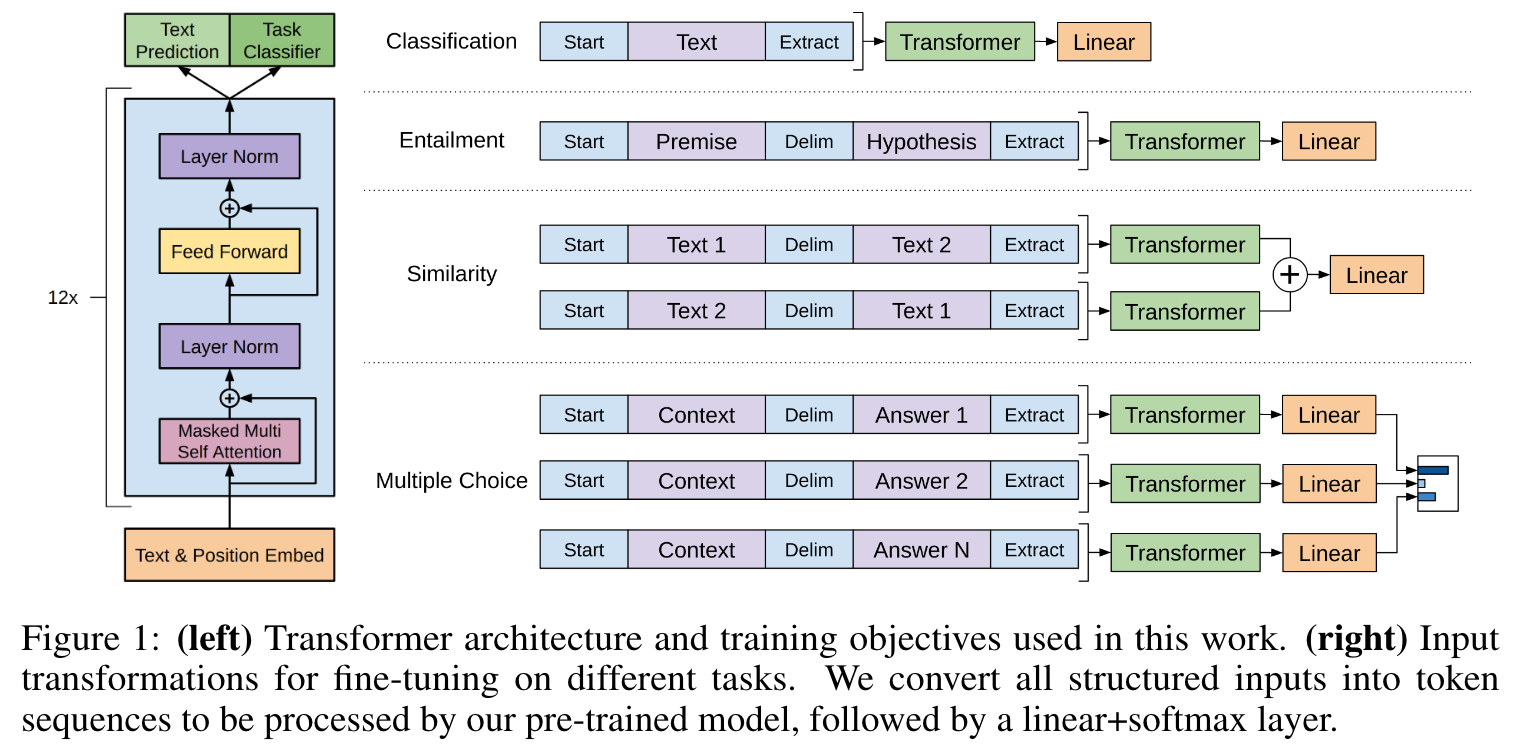

下游任务

针对不同的下游任务,制定了不同的训练方案,其完整的内部框架结构如下:

复现代码

一个十分简易的复现:

import torch

import torch.nn as nn

import torch.nn.functional as F

import math

import copy

import torch.nn.functional as F

# accepts input in [ batch x channels x shape ] format

class Attention(nn.Module):

def __init__(self, in_channels, heads, dropout=None):

assert in_channels % heads == 0

super().__init__()

self.in_channels = in_channels

self.heads = heads

self.dropout = dropout

def forward(self, queries, keys, values, mask=None):

attention = torch.bmm(queries, keys.permute(0,2,1)) / self.in_channels**0.5

if mask is not None:

attention = attention.masked_fill(mask, -1e9)

attention = F.softmax(attention, dim=-1)

if self.dropout is not None:

attention = F.dropout(attention, self.dropout)

output = torch.bmm(attention, values)

return output

# adds positional encodings

class PositionalEncoding(nn.Module):

def forward(self, input_):

_, channels, length = input_.shape

numerator = torch.arange(length, dtype=torch.float)

denominator = 1e-4 ** (2 * torch.arange(channels, dtype=torch.float) / channels)

positional_encodings = torch.sin(torch.ger(denominator, numerator))

return input_ + positional_encodings

class EncoderLayer(nn.Module):

def __init__(self, in_channels, heads, dropout=None):

super().__init__()

self.in_channels = in_channels

self.heads = heads

self.produce_qkv = nn.Linear(in_channels, 3*in_channels)

self.attention = Attention(in_channels, heads, dropout)

self.linear = nn.Linear(in_channels, in_channels)

def forward(self, inputs):

qkv = self.produce_qkv(inputs)

queries, keys, values = qkv.split(self.in_channels, -1)

attention = self.attention(queries, keys, values)

outputs = F.layer_norm(attention + inputs, (self.in_channels,))

outputs = F.layer_norm(self.linear(outputs) + outputs, (self.in_channels,))

return outputs

class DecoderLayer(nn.Module):

def __init__(self, in_channels, heads, dropout=None):

super().__init__()

self.in_channels = in_channels

self.heads = heads

self.produce_qkv = nn.Linear(in_channels, 3*in_channels)

self.produce_kv = nn.Linear(in_channels, 2*in_channels)

self.masked_attention = Attention(in_channels, heads, dropout)

self.attention = Attention(in_channels, heads, dropout)

self.linear = nn.Linear(in_channels, in_channels)

def forward(self, inputs, outputs):

qkv = self.produce_qkv(outputs)

queries, keys, values = qkv.split(self.in_channels, -1)

n = inputs.shape[1]

mask = torch.tril(torch.ones((n, n), dtype=torch.uint8))

attention = self.masked_attention(queries, keys, values, mask)

outputs = F.layer_norm(attention + outputs, (self.in_channels,))

kv = self.produce_kv(inputs)

keys, values = kv.split(self.in_channels, -1)

attention = self.attention(outputs, keys, values)

outputs = F.layer_norm(attention + outputs, (self.in_channels,))

outputs = F.layer_norm(self.linear(outputs) + outputs, (self.in_channels,))

return outputs

if __name__ == '__main__':

print("Running...")

test_in = torch.rand([3,4,5])

encoder = EncoderLayer(5, 1)

test_out = encoder(test_in) # torch.Size([3, 4, 5])

assert test_out.shape == (3, 4, 5)

print("encoder passed")

decoder = DecoderLayer(5, 1) # 这就是gpt模型

test_mask = torch.tril(torch.ones((4, 4), dtype=torch.uint8))

test_out = decoder(test_in, test_in)

assert test_out.shape == (3, 4, 5) # torch.Size([3, 4, 5])

print("decoder passed")

GPT2:Language Models are Unsupervised Multitask Learners

延续了gpt1的工作,本文提出了gpt2,相比初代gpt,用了更大的结构,并且可以实现 zero-shot task transfer,即下游任务不需要指定的数据集进行微调。

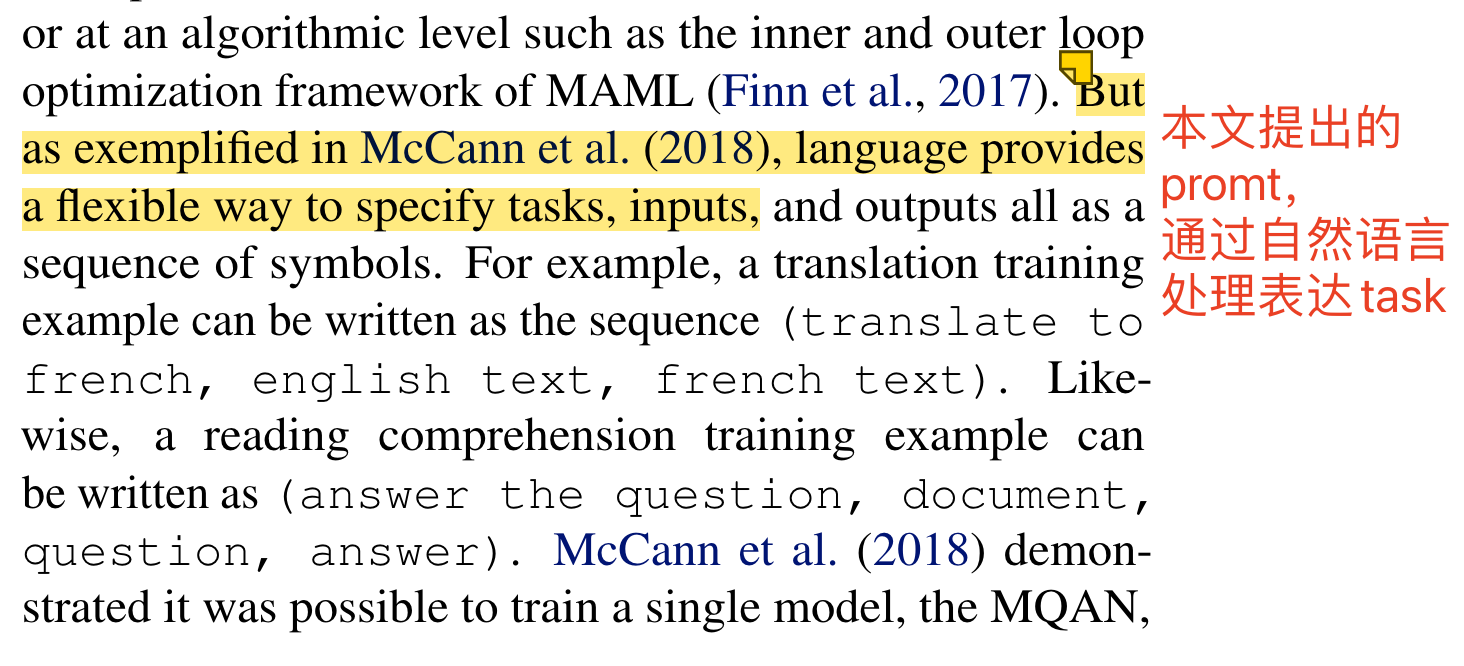

如何 prompt?

由于gpt1在对下游任务进行微调时引入了分隔符等符号,而在 zero-shot 场景中无法引入,因此如何让模型学习这种模式成为了新的问题。那么本文是怎么解决的?通过自然语言的prompt让模型执行指定命令:

训练数据

不同于前人采用的数据集,本文采用了更大的互联网爬虫数据集。