因为毕设的需求,项目中要用到Memcache服务,来降低对数据库的请求压力。虽然只有我一个人访问,看起来加不加缓存都没有必要;但是从设计上来讲,一个稳健的服务,没有缓存怎么能行呢?经过一些搜索,发现一致性哈希算法是目前较为流行的缓存服务选择方案。因此来整理总结下,以便于自己的应用。

本文代码都放到了gitee仓库,有兴趣的可以拿去测一测。

https://gitee.com/marksinoberg/consistent_hash

概念

百科释义

一致性哈希算法简单来说就是一种分布式哈希(DHT)实现算法,设计目标是为了解决因特网中的热点(Hot spot)问题,初衷和CARP十分类似。一致性哈希修正了CARP使用的简 单哈希算法带来的问题,使得分布式哈希(DHT)可以在P2P环境中真正得到应用。

哈希算法评价标准

在动态的缓存环境中,有下面这么几条标准,可以用来判断一个哈希算法的好坏。借用网上 一篇文章 对此的描述。

平衡性(Balance):平衡性是指哈希的结果能够尽可能分布到所有的缓冲中去,这样可以使得所有的缓冲空间都得到利用。很多哈希算法都能够满足这一条件。单调性(Monotonicity):单调性是指如果已经有一些内容通过哈希分派到了相应的缓冲中,又有新的缓冲加入到系统中。哈希的结果应能够保证原有已分配的内容可以被映射到原有的或者新的缓冲中去,而不会被映射到旧的缓冲集合中的其他缓冲区。分散性(Spread):在分布式环境中,终端有可能看不到所有的缓冲,而是只能看到其中的一部分。当终端希望通过哈希过程将内容映射到缓冲上时,由于不同终端所见的缓冲范围有可能不同,从而导致哈希的结果不一致,最终的结果是相同的内容被不同的终端映射到不同的缓冲区中。这种情况显然是应该避免的,因为它导致相同内容被存储到不同缓冲中去,降低了系统存储的效率。分散性的定义就是上述情况发生的严重程度。好的哈希算法应能够尽量避免不一致的情况发生,也就是尽量降低分散性。负载(Load):负载问题实际上是从另一个角度看待分散性问题。既然不同的终端可能将相同的内容映射到不同的缓冲区中,那么对于一个特定的缓冲区而言,也可能被不同的用户映射为不同 的内容。与分散性一样,这种情况也是应当避免的,因此好的哈希算法应能够尽量降低缓冲的负荷。

通用代码

在正式解释一致性哈希算法之前,先贴一下待会要用到的通用的代码。

constrants.py

#coding: utf8

servers = [

"192.168.0.1:11211",

"192.168.0.2:11211",

"192.168.0.3:11211",

"192.168.0.4:11211",

]

entries = [

"a",

"b",

"c",

"d",

"e",

"f",

"g",

"h",

"i",

"j",

"k",

"l",

"m",

"n",

"o",

"p",

"q",

"r",

"s",

"t",

"u",

"v",

"w",

"x",

"y",

"z",

]

func.py

#coding: utf8

from hashlib import md5

def hashcode(key=""):

if key == None or key == "":

return 0

return int(md5(str(key).encode('utf8')).hexdigest(), 16)

def print_pretty_list(ls=[]):

for item in ls:

print(item)

def print_pretty_dict(dc={}):

for key, value in dc.items():

print(f'{key}: {value}')

def compute_cache_percentage(oldcache, newcache):

result = {key: 0 for key in oldcache.keys()}

for key, value in oldcache.items():

if key in newcache.keys():

result[key] = len(list(set(value).intersection(set(newcache[key]))))

return result

def get_map_list_key(maplist):

result = []

for map in maplist:

result.extend(map.keys())

return result

def compute_cache_percentage_ring(oldcache, newcache):

result = {key: 0 for key in oldcache.keys()}

# 这里每一个value其实都是一个装了字典map的列表

for node, maplist in oldcache.items():

if node in newcache.keys():

oldkeys = get_map_list_key(maplist)

newkeys = get_map_list_key(newcache[node])

result[node] = len(list(set(oldkeys).intersection(set(newkeys))))

return result

def compute_cache_percentage_virtual_ring(oldcache, newcache):

result = {key.split("#")[0]: 0 for key in oldcache.keys()}

# 这里每一个value其实都是一个装了字典map的列表

for node, maplist in oldcache.items():

if node in newcache.keys():

oldkeys = get_map_list_key(maplist)

newkeys = get_map_list_key(newcache[node])

temp = str(node).split("#")[0]

result[temp] += len(list(set(oldkeys).intersection(set(newkeys))))

return result

硬哈希

硬哈希,一般又被称为普通哈希。其原理较为简单,当然实现方式也多种多样。比如取余数法等。单调性也能很好的满足。

硬哈希在缓存服务的适用场景一般是缓存环境不怎么发生变化,对于缓存服务器群的稳定性要求较高。一旦服务器群出现故障,就有可能导致井喷现象,出现缓存服务瞬间崩溃。这么说可能不太容易理解,下面举个通俗点的例子。

张大胖负责维护公司的缓存服务群,手里现在有10台机器,每台服务器的内存使用率都达到了95%, 而且老板还贼抠,就是不给配新机器。这天,实习生小李写了个日志分析的脚本,没有考虑到目前服务器内存已然吃紧的现状,就直接执行了。结果第三台服务器出现了严重的Out Of Memory问题,导致服务器直接死掉了。然后缓存数据直接全部丢失。这个时候原本的10台服务器,变成了9台。原本可以命中的缓存内容这下都失效了。导致了缓存服务被迫更新,瞬间服务器压力都上来了。不仅缓存服务器挂了,后台的MySQL服务器也撑不住这么大规模的请求啊,公司的整个业务线都陷入了瘫痪的状态。

简易实现硬哈希 hard-hash.py

# coding: utf8

from hashlib import *

from constraints import servers, entries

from func import *

length = len(servers)

details1 = {key:[] for key in servers}

for entry in entries:

# keyhash = sum([ord(c) for c in entry])

keyhash = hashcode(entry)

server = servers[keyhash%length]

details1[server].append(entry)

print_pretty_dict(details1)

print("---"*28)

del servers[0]

length = len(servers)

details2 = {key:[] for key in servers}

for entry in entries:

# keyhash = sum([ord(c) for c in entry])

keyhash = hashcode(entry)

server = servers[keyhash%length]

details2[server].append(entry)

print_pretty_dict(details2)

print("---"*28)

servers.insert(0, "192.168.0.1:11211")

servers.append("192.168.0.5:11211")

length = len(servers)

details3 = {key:[] for key in servers}

for entry in entries:

# keyhash = sum([ord(c) for c in entry])

keyhash = hashcode(entry)

server = servers[keyhash%length]

details3[server].append(entry)

print_pretty_dict(details3)

# -------计算缓存度

print("==="*7)

print_pretty_dict(compute_cache_percentage(details1, details2))

print("---"*20)

print_pretty_dict(compute_cache_percentage(details1, details3))硬哈希缓存效果

python hard-hash.py

192.168.0.1:11211: ['o', 's', 'u', 'w']

192.168.0.2:11211: ['a', 'd', 'g', 'h', 'i', 'j', 'n', 'q', 'r', 'y']

192.168.0.3:11211: ['e', 'p', 't', 'v', 'x']

192.168.0.4:11211: ['b', 'c', 'f', 'k', 'l', 'm', 'z']

------------------------------------------------------------------------------------

192.168.0.2:11211: ['d', 'k', 'l', 'm', 's', 't', 'v', 'x']

192.168.0.3:11211: ['a', 'b', 'c', 'e', 'h', 'i', 'n', 'o', 'p', 'r', 'u', 'w', 'y']

192.168.0.4:11211: ['f', 'g', 'j', 'q', 'z']

------------------------------------------------------------------------------------

192.168.0.1:11211: ['j', 'n', 't']

192.168.0.2:11211: ['e', 'm', 'p', 'q', 'w']

192.168.0.3:11211: ['a', 'i', 'l']

192.168.0.4:11211: ['b', 'c', 'd', 'g', 'h', 'k', 'o', 's', 'v', 'x', 'z']

192.168.0.5:11211: ['f', 'r', 'u', 'y']

=====================

192.168.0.1:11211: 0

192.168.0.2:11211: 1

192.168.0.3:11211: 2

192.168.0.4:11211: 2

------------------------------------------------------------

192.168.0.1:11211: 0

192.168.0.2:11211: 1

192.168.0.3:11211: 0

192.168.0.4:11211: 4可以看出,动态缓存环境下,硬哈希的命中率并不高。

一致哈希

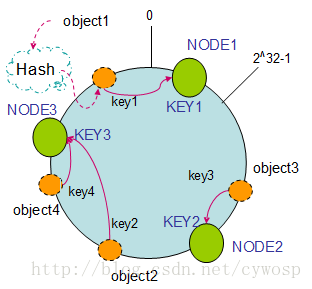

一致性哈希算法的基本实现原理是将机器节点和key值都按照一样的hash算法映射到一个0~2^32(也不一定非得是2^32, 理论上能让节点分布均匀的‘环’就够了)的圆环上。当有一个写入缓存的请求到来时,计算Key值k对应的哈希值Hash(k),如果该值正好对应之前某个机器节点的Hash值,则直接写入该机器节点,如果没有对应的机器节点,则顺时针查找下一个节点,进行写入,如果超过2^32还没找到对应节点,则从0开始查找(因为是环状结构)。比如下面盗的一张图。

简易代码实现consisthash.py

# coding: utf8

# 简单一致性hash实现

from constraints import servers, entries

from func import print_pretty_dict, hashcode, compute_cache_percentage, compute_cache_percentage_ring

class ConsistHash(object):

"""

简单一致性哈希算法实现

"""

def __init__(self, servers=[]):

self.servers = servers

# sorted list which contains server nodes.

self.ring = []

# node:[hashcode1, hashcode2, ...]

self.hashnodemap = {}

for server in self.servers:

self.addNode(server)

def addNode(self, node):

code = hashcode(node)

self.hashnodemap[code] = node

self.ring.append(code)

self.ring.sort()

def removeNode(self, node):

del self.hashnodemap[hashcode(node)]

self.ring.remove(hashcode(node))

def getNode(self, key):

code = hashcode(key)

for ringitem in self.ring[::-1]:

if ringitem <= code:

return self.hashnodemap[ringitem]

return self.hashnodemap[self.ring[0]]

class Cacher(object):

"""

普通一致性哈希算法的应用

"""

def __init__(self, servers):

self.c = ConsistHash(servers=servers)

self.container = {key:[] for key in servers}

def addServer(self, server):

self.c.addNode(server)

self.container[server] = []

def removeServer(self, server):

self.c.removeNode(server)

del self.container[server]

def cache(self, key, value):

server = self.c.getNode(key)

self.container[server].append({key: value})

def get(self, key):

server = self.c.getNode(key)

return self.container[server].items()[key]

if __name__ == "__main__":

# c = ConsistHash(servers=servers)

# print_pretty_list(c.ring)

# print_pretty_dict(c.hashnodemap)

cacher1 = Cacher(servers)

for entry in entries:

cacher1.cache(entry, entry)

print_pretty_dict(cacher1.container)

# 删除一个服务器

cacher3 = Cacher(servers)

cacher3.removeServer("192.168.0.1:11211")

for entry in entries:

cacher3.cache(entry, entry)

print_pretty_dict(cacher3.container)

# 添加一个服务器

cacher2 = Cacher(servers)

cacher2.addServer("192.168.0.5:11211")

for entry in entries:

cacher2.cache(entry, entry)

print_pretty_dict(cacher2.container)

# 计算缓存有效度

print_pretty_dict(compute_cache_percentage_ring(cacher1.container, cacher2.container))

print_pretty_dict(compute_cache_percentage_ring(cacher1.container, cacher3.container))

缓存效果

python consisthash.py

192.168.0.1:11211: [{'a': 'a'}, {'c': 'c'}, {'h': 'h'}, {'j': 'j'}, {'l': 'l'}, {'r': 'r'}, {'s': 's'}, {'y': 'y'}]

192.168.0.2:11211: [{'b': 'b'}, {'d': 'd'}, {'f': 'f'}, {'g': 'g'}, {'i': 'i'}, {'k': 'k'}, {'m': 'm'}, {'n': 'n'}, {'p': 'p'}, {'q': 'q'}, {'u': 'u'}, {'v': 'v'}, {'x': 'x'}]

192.168.0.3:11211: []

192.168.0.4:11211: [{'e': 'e'}, {'o': 'o'}, {'t': 't'}, {'w': 'w'}, {'z': 'z'}]

192.168.0.2:11211: [{'a': 'a'}, {'b': 'b'}, {'c': 'c'}, {'d': 'd'}, {'f': 'f'}, {'g': 'g'}, {'h': 'h'}, {'i': 'i'}, {'j': 'j'}, {'k': 'k'}, {'l': 'l'}, {'m': 'm'}, {'n': 'n'}, {'p': 'p'}, {'q': 'q'}, {'r': 'r'}, {'s': 's'}, {'u': 'u'}, {'v': 'v'}, {'x': 'x'}, {'y': 'y'}]

192.168.0.3:11211: []

192.168.0.4:11211: [{'e': 'e'}, {'o': 'o'}, {'t': 't'}, {'w': 'w'}, {'z': 'z'}]

192.168.0.1:11211: []

192.168.0.2:11211: [{'b': 'b'}, {'d': 'd'}, {'f': 'f'}, {'g': 'g'}, {'i': 'i'}, {'k': 'k'}, {'m': 'm'}, {'n': 'n'}, {'p': 'p'}, {'q': 'q'}, {'u': 'u'}, {'v': 'v'}, {'x': 'x'}]

192.168.0.3:11211: []

192.168.0.4:11211: [{'e': 'e'}, {'o': 'o'}, {'t': 't'}, {'w': 'w'}, {'z': 'z'}]

192.168.0.5:11211: [{'a': 'a'}, {'c': 'c'}, {'h': 'h'}, {'j': 'j'}, {'l': 'l'}, {'r': 'r'}, {'s': 's'}, {'y': 'y'}]

192.168.0.1:11211: 0

192.168.0.2:11211: 13

192.168.0.3:11211: 0

192.168.0.4:11211: 5

192.168.0.1:11211: 0

192.168.0.2:11211: 13

192.168.0.3:11211: 0

192.168.0.4:11211: 5结果表明: 与硬哈希缓存命中率相比,一致哈希的缓存命中率确实提高了不少。

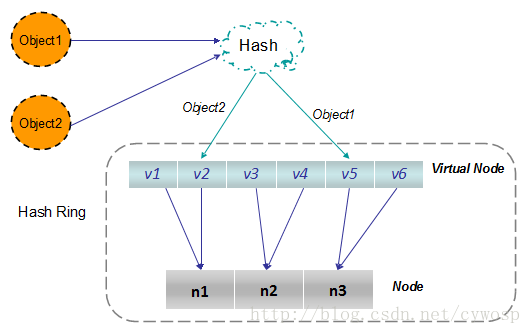

“虚拟”一致哈希

虽然缓存命中率得到了提高,但是仅仅这样还不能够真正的应用到实际生产环境中,因为目前的一致哈希还缺少了平衡性。

在此基础上,算法大佬们又引入了虚拟节点的概念。

“虚拟节点”( virtual node )是实际节点(机器)在 hash 空间的复制品( replica ),一实际个节点(机器)对应了若干个“虚拟节点”,这个对应个数也成为“复制个数”,“虚拟节点”在 hash 空间中以hash值排列

带有虚拟节点的一致哈希virtualconstisthash.py

# coding: utf8

# 带有虚拟节点的一致性哈希算法实现

from constraints import servers, entries

from func import print_pretty_dict, hashcode, print_pretty_list

from func import compute_cache_percentage

from func import compute_cache_percentage_ring

from func import compute_cache_percentage_virtual_ring

class VirtualConsistHash(object):

"""

带有虚拟节点的一致性哈希算法实现

"""

def __init__(self, servers=[], replicas=3):

self.servers = servers

# sorted list which contains server nodes.

self.ring = []

# node:[hashcode1, hashcode2, ...]

# 虚拟节点的个数,其实这个名字叫虚拟节点的个数不太合适,每个真实节点“虚拟化”后的节点个数比较好

self.replicas = replicas

self.hashnodemap = dict()

for server in self.servers:

self.addNode(server)

def addNode(self, node):

for i in range(0, self.replicas):

temp = "{}#{}".format(node, i)

code = hashcode(temp)

self.hashnodemap[code] = temp

self.ring.append(code)

self.ring.sort()

def removeNode(self, node):

for i in range(0, self.replicas):

temp = "{}#{}".format(node, i)

code = hashcode(temp)

self.ring.remove(code)

del self.hashnodemap[code]

def getNode(self, key):

code = hashcode(key)

for ringitem in self.ring[::-1]:

if ringitem <= code:

return self.hashnodemap[ringitem]

return self.hashnodemap[self.ring[0]]

class Cacher(object):

"""

带有虚拟节点的一致性哈希算法的应用

"""

def __init__(self, servers):

self.c = VirtualConsistHash(servers=servers)

self.container = {"{}#{}".format(server, index): [] for index in range(0, self.c.replicas) for server in self.c.servers}

def addServer(self, server):

self.c.addNode(server)

for i in range(0, self.c.replicas):

temp = "{}#{}".format(server, i)

self.container[temp] = []

def removeServer(self, server):

self.c.removeNode(server)

for i in range(0, self.c.replicas):

temp = "{}#{}".format(server, i)

del self.container[temp]

def cache(self, key, value):

server = self.c.getNode(key)

self.container[server].append({key: value})

def get(self, key):

server = self.c.getNode(key)

return self.container[server].items()[key]

if __name__ == "__main__":

c = VirtualConsistHash(servers=servers)

print_pretty_list(c.ring)

print_pretty_dict(c.hashnodemap)

cacher1 = Cacher(servers)

for entry in entries:

cacher1.cache(entry, entry)

print_pretty_dict(cacher1.container)

# 删除一个服务器

cacher3 = Cacher(servers)

cacher3.removeServer("192.168.0.1:11211")

for entry in entries:

cacher3.cache(entry, entry)

print_pretty_dict(cacher3.container)

# 添加一个服务器

cacher2 = Cacher(servers)

cacher2.addServer("192.168.0.5:11211")

for entry in entries:

cacher2.cache(entry, entry)

print_pretty_dict(cacher2.container)

# 计算缓存有效度

print("==="*19, "删除一个缓存服务器后~")

print_pretty_dict(

compute_cache_percentage_virtual_ring(cacher1.container, cacher2.container))

print("==="*19, "添加一个缓存服务器后~")

print_pretty_dict(

compute_cache_percentage_virtual_ring(cacher1.container, cacher3.container))实现效果

python virtualconsisthash.py

25929580212780940911456562527067013

12101104964982711566768785763136289074

74170601562041857353724622613970855161

77290231086376083997830514397772133017

108956197245253243279835718906668306846

119181851294818588345880953329601308254

148120148621525998622527044630882426909

156975434986591250703568213828815453515

166356565783230552968534833801964089480

200325646817984951237589036984080642913

314164546590207529500398448833042413158

322409963387938480044046299781174104628

74170601562041857353724622613970855161: 192.168.0.1:11211#0

148120148621525998622527044630882426909: 192.168.0.1:11211#1

77290231086376083997830514397772133017: 192.168.0.1:11211#2

12101104964982711566768785763136289074: 192.168.0.2:11211#0

166356565783230552968534833801964089480: 192.168.0.2:11211#1

25929580212780940911456562527067013: 192.168.0.2:11211#2

119181851294818588345880953329601308254: 192.168.0.3:11211#0

156975434986591250703568213828815453515: 192.168.0.3:11211#1

108956197245253243279835718906668306846: 192.168.0.3:11211#2

200325646817984951237589036984080642913: 192.168.0.4:11211#0

314164546590207529500398448833042413158: 192.168.0.4:11211#1

322409963387938480044046299781174104628: 192.168.0.4:11211#2

192.168.0.1:11211#0: []

192.168.0.2:11211#0: [{'a': 'a'}, {'h': 'h'}, {'j': 'j'}, {'l': 'l'}]

192.168.0.3:11211#0: []

192.168.0.4:11211#0: [{'e': 'e'}, {'g': 'g'}, {'o': 'o'}, {'t': 't'}, {'v': 'v'}, {'x': 'x'}]

192.168.0.1:11211#1: [{'m': 'm'}]

192.168.0.2:11211#1: [{'b': 'b'}, {'d': 'd'}, {'f': 'f'}, {'i': 'i'}, {'k': 'k'}, {'p': 'p'}]

192.168.0.3:11211#1: [{'n': 'n'}, {'q': 'q'}, {'u': 'u'}]

192.168.0.4:11211#1: [{'w': 'w'}]

192.168.0.1:11211#2: [{'c': 'c'}, {'r': 'r'}, {'y': 'y'}]

192.168.0.2:11211#2: [{'s': 's'}]

192.168.0.3:11211#2: []

192.168.0.4:11211#2: [{'z': 'z'}]

192.168.0.2:11211#0: [{'a': 'a'}, {'c': 'c'}, {'h': 'h'}, {'j': 'j'}, {'l': 'l'}, {'r': 'r'}, {'y': 'y'}]

192.168.0.3:11211#0: [{'m': 'm'}]

192.168.0.4:11211#0: [{'e': 'e'}, {'g': 'g'}, {'o': 'o'}, {'t': 't'}, {'v': 'v'}, {'x': 'x'}]

192.168.0.2:11211#1: [{'b': 'b'}, {'d': 'd'}, {'f': 'f'}, {'i': 'i'}, {'k': 'k'}, {'p': 'p'}]

192.168.0.3:11211#1: [{'n': 'n'}, {'q': 'q'}, {'u': 'u'}]

192.168.0.4:11211#1: [{'w': 'w'}]

192.168.0.2:11211#2: [{'s': 's'}]

192.168.0.3:11211#2: []

192.168.0.4:11211#2: [{'z': 'z'}]

192.168.0.1:11211#0: []

192.168.0.2:11211#0: [{'a': 'a'}]

192.168.0.3:11211#0: []

192.168.0.4:11211#0: [{'g': 'g'}, {'v': 'v'}, {'x': 'x'}]

192.168.0.1:11211#1: [{'m': 'm'}]

192.168.0.2:11211#1: [{'b': 'b'}, {'d': 'd'}, {'f': 'f'}, {'i': 'i'}, {'k': 'k'}, {'p': 'p'}]

192.168.0.3:11211#1: [{'n': 'n'}, {'q': 'q'}, {'u': 'u'}]

192.168.0.4:11211#1: [{'w': 'w'}]

192.168.0.1:11211#2: [{'c': 'c'}, {'r': 'r'}, {'y': 'y'}]

192.168.0.2:11211#2: [{'s': 's'}]

192.168.0.3:11211#2: []

192.168.0.4:11211#2: [{'z': 'z'}]

192.168.0.5:11211#0: [{'h': 'h'}, {'j': 'j'}, {'l': 'l'}]

192.168.0.5:11211#1: [{'e': 'e'}, {'o': 'o'}, {'t': 't'}]

192.168.0.5:11211#2: []

========================================================= 删除一个缓存服务器后~

192.168.0.1:11211: 4

192.168.0.2:11211: 8

192.168.0.3:11211: 3

192.168.0.4:11211: 5

========================================================= 添加一个缓存服务器后~

192.168.0.1:11211: 0

192.168.0.2:11211: 11

192.168.0.3:11211: 3

192.168.0.4:11211: 8结果表明: 带有了虚拟节点的一致哈希实现,使得缓存的命中率得到了进一步的提高。而且平衡性也更趋于平和。

总结

通过上述分析,不难发现。

硬哈希适用场景为“稳定”的缓存服务群,因此实际生产环境不怎么被用到。

简单一致哈希,缓存命中率还算可以,但是缺乏平衡性,容易导致某台节点压力过大而有些节点空闲的状况。

带有虚拟节点的一致哈希可以很好的解决上面的两个问题,但是具体的虚拟节点的设置replicas可能还需要根据实际的生产环境来进行设置。以此来达到一个最优的效果。

[参考文章]

[1]. https://blog.csdn.net/cywosp/article/details/23397179/

[2]. http://blog.huanghao.me/?p=14