Mask R-CNN是一个实例分割算法,可以用来做目标检测、目标实例分割、目标关键点检测。

下面测试它的onnx版本。

onnx的model和相关资料在此处下载。

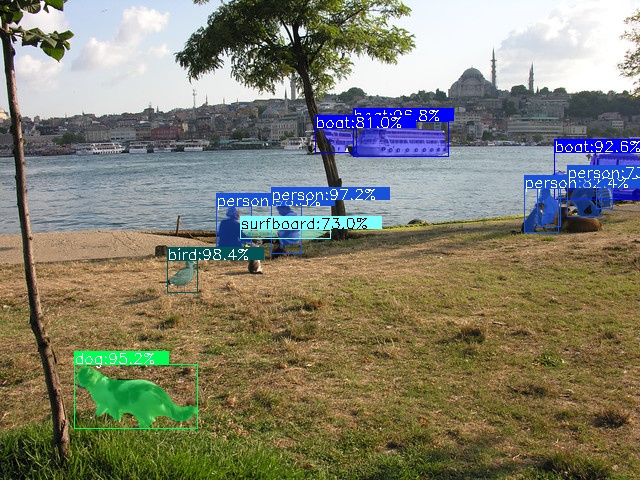

测试图片:

图片的预处理部分,参考中用了Image来读图片,这里改成了opencv版本。

import numpy as np

import cv2

import onnxruntime

from utils import _COLORS #自己定义一些mask颜色

#这里把Image版本改成了opencv版本

def preprocess(image):

# Resize

ratio = 800.0 / min(image.shape[0], image.shape[1])

image = cv2.resize(image, (int(ratio * image.shape[1]), int(ratio * image.shape[0])))

# Convert to BGR

image = np.array(image)[:, :, [2, 1, 0]].astype('float32')

# HWC -> CHW

image = np.transpose(image, [2, 0, 1])

# Normalize

mean_vec = np.array([102.9801, 115.9465, 122.7717])

for i in range(image.shape[0]):

image[i, :, :] = image[i, :, :] - mean_vec[i]

# Pad to be divisible of 32

import math

padded_h = int(math.ceil(image.shape[1] / 32) * 32)

padded_w = int(math.ceil(image.shape[2] / 32) * 32)

padded_image = np.zeros((3, padded_h, padded_w), dtype=np.float32)

padded_image[:, :image.shape[1], :image.shape[2]] = image

image = padded_image

return image

后处理部分。

原版是画轮廓,这里改成了画mask区域。

def display_objdetect_image(image, boxes, labels, scores, masks, score_threshold=0.7):

# Resize boxes

ratio = 800.0 / min(image.shape[0], image.shape[1])

boxes /= ratio

image = np.array(image)

for mask, box, label, score in zip(masks, boxes, labels, scores):

# Showing boxes with score > 0.7

if score <= score_threshold:

continue

# Finding contour based on mask

#mask:(1,28,28)

mask = mask[0, :, :, None] #(28,28,1)

int_box = [int(i) for i in box]

mask = cv2.resize(mask, (int_box[2]-int_box[0]+1,int_box[3]-int_box[1]+1)) #box区域大小

mask = mask > 0.5

im_mask = np.zeros((image.shape[0], image.shape[1]), dtype=np.uint8) #image大小的全0mask

x_0 = max(int_box[0], 0)

x_1 = min(int_box[2] + 1, image.shape[1])

y_0 = max(int_box[1], 0)

y_1 = min(int_box[3] + 1, image.shape[0])

mask_y_0 = max(y_0 - box[1], 0)

mask_y_1 = max(0, mask_y_0 + y_1 - y_0)

mask_x_0 = max(x_0 - box[0], 0)

mask_x_1 = max(0, mask_x_0 + x_1 - x_0)

im_mask[y_0:y_1, x_0:x_1] = mask[ #box区域填上mask,(480,640,1)

mask_y_0 : mask_y_1, mask_x_0 : mask_x_1

]

im_mask = im_mask[:, :, None] #(480,640,1)

mask3 = image.copy() #填上颜色的mask

color = (_COLORS[label]*255).astype(np.uint8).tolist()

mask3[im_mask[:,:,0]>0] = color

image = cv2.addWeighted(image, 0.5, mask3, 0.5, 0)

text = "{}:{:.1f}%".format(classes[label], score * 100)

txt_color = (0, 0, 0) if np.mean(_COLORS[label]) > 0.5 else (255, 255, 255)

font = cv2.FONT_HERSHEY_SIMPLEX

txt_size = cv2.getTextSize(text, font, 0.5, 2)[0]

#画box框

cv2.rectangle(image, (x_0, y_0), (x_1, y_1), color, 1)

#标签区域框

cv2.rectangle(

image,

(x_0, y_0 - txt_size[1] - 1),

(x_0 + txt_size[0] + txt_size[1], y_0 - 1),

color,

-1,

)

#填上标签

cv2.putText(image, text, (x_0, y_0 - 1), font, 0.5, txt_color, thickness=1)

cv2.imshow("image", image)

cv2.waitKey(0)

ONNX推理部分(main函数)

if __name__ == '__main__':

img = cv2.imread('./demo.jpg')

#前处理

img_data = preprocess(img)

#onnx process

session = onnxruntime.InferenceSession("./MaskRCNN-12.onnx",

providers=['CUDAExecutionProvider',

'CPUExecutionProvider'])

model_inputs = session.get_inputs()

input_names = [model_inputs[i].name for i in range(len(model_inputs))]

input_shape = model_inputs[0].shape

input_height = input_shape[1]

input_width = input_shape[2]

model_outputs = session.get_outputs()

output_names = [model_outputs[i].name for i in range(len(model_outputs))]

# time1 = time.time()

outputs = session.run(output_names, {

input_names[0]: img_data})

# time2 = time.time()

boxes = outputs[0]

labels = outputs[1]

scores = outputs[2]

masks = outputs[3]

#后处理

display_objdetect_image(img, boxes, labels, scores, masks)