1. Code download

GitHub - facebookresearch/mae: PyTorch implementation of MAE https://arxiv.org/abs/2111.06377

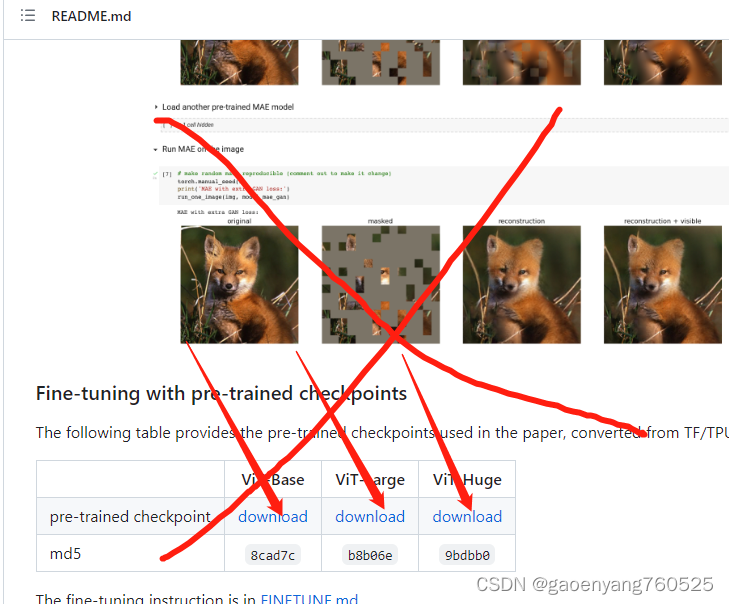

2. Pre-trained model download

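Note: the files you need are the visualization checkpoints, not the other pre-trained checkpoints offered in the repository. These are the two files the demo below loads, mae_visualize_vit_large.pth and mae_visualize_vit_large_ganloss.pth: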

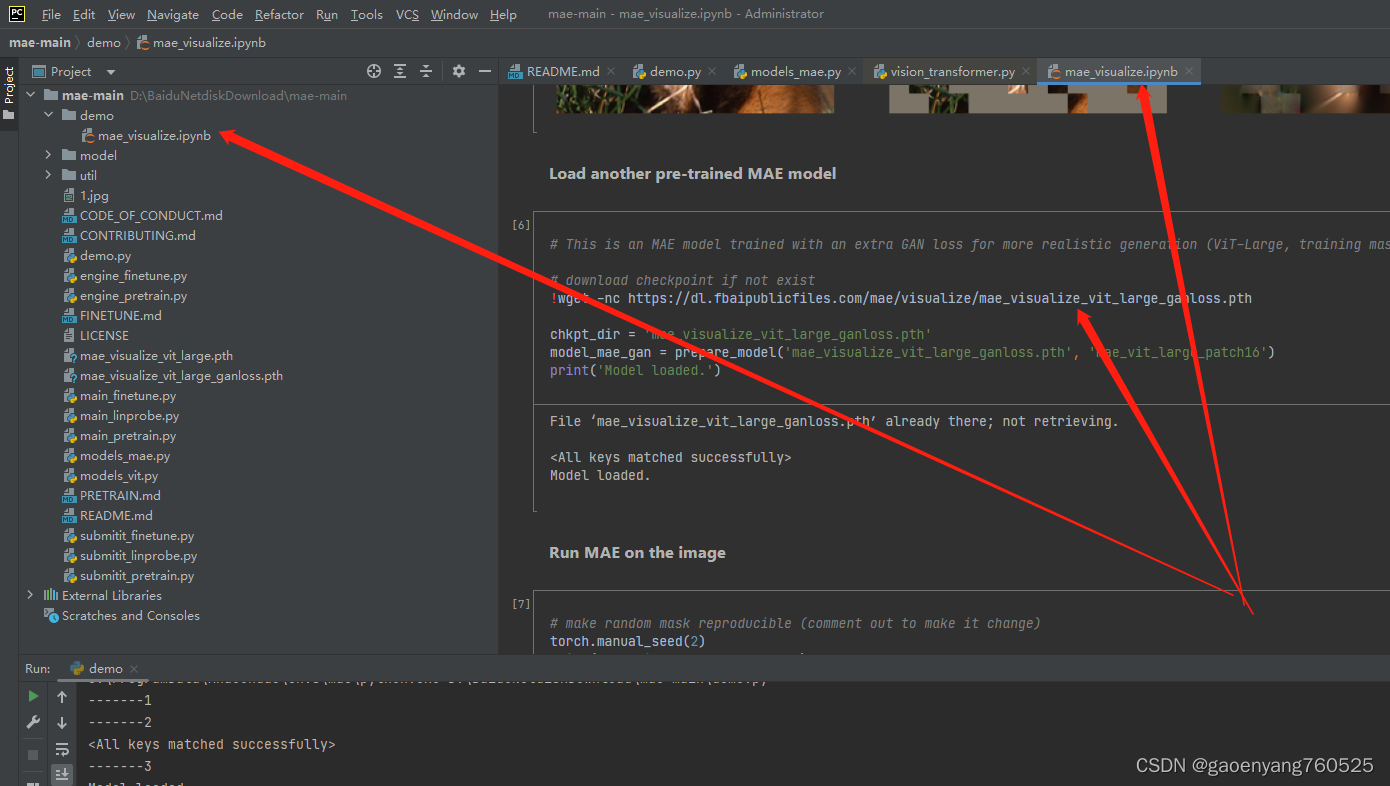

3. Test code

Create a file named demo.py in the root directory of the repository with the following content:

```python
import os

# Added by me to work around the OpenMP "multiple copies of the OpenMP runtime"
# error (see the note below); set it before torch/numpy are imported.
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

import numpy as np
import torch
import matplotlib
matplotlib.use('TkAgg')  # select the GUI backend before importing pyplot
import matplotlib.pyplot as plt
from PIL import Image

import models_mae  # models_mae.py from the repository root

# define the utils
imagenet_mean = np.array([0.485, 0.456, 0.406])
imagenet_std = np.array([0.229, 0.224, 0.225])


def show_image(image, title=''):
    # image is [H, W, 3], normalized with the ImageNet mean/std
    assert image.shape[2] == 3
    # undo the normalization, scale to [0, 255] and clip before displaying
    plt.imshow(torch.clip((image * imagenet_std + imagenet_mean) * 255, 0, 255).int())
    plt.title(title, fontsize=16)
    plt.axis('off')


def prepare_model(chkpt_dir, arch='mae_vit_large_patch16'):
    # build model
    model = getattr(models_mae, arch)()
    # load model
    checkpoint = torch.load(chkpt_dir, map_location='cpu')
    msg = model.load_state_dict(checkpoint['model'], strict=False)
    print(msg)
    return model


def run_one_image(img, model):
    x = torch.tensor(img)

    # make it a batch-like
    x = x.unsqueeze(dim=0)
    x = torch.einsum('nhwc->nchw', x)

    # run MAE
    loss, y, mask = model(x.float(), mask_ratio=0.75)  # y holds the reconstructed tokens
    y = model.unpatchify(y)
    y = torch.einsum('nchw->nhwc', y).detach().cpu()

    # visualize the mask
    mask = mask.detach()
    mask = mask.unsqueeze(-1).repeat(1, 1, model.patch_embed.patch_size[0] ** 2 * 3)  # (N, H*W, p*p*3)
    mask = model.unpatchify(mask)  # 1 is removing, 0 is keeping
    mask = torch.einsum('nchw->nhwc', mask).detach().cpu()

    x = torch.einsum('nchw->nhwc', x)

    # masked image
    im_masked = x * (1 - mask)  # original image with 75% of the patches masked out

    # MAE reconstruction pasted with visible patches
    im_paste = x * (1 - mask) + y * mask  # 25% original patches + 75% reconstructed patches

    # make the plt figure larger
    plt.rcParams['figure.figsize'] = [24, 24]

    plt.subplot(1, 4, 1)
    show_image(x[0], "original")

    plt.subplot(1, 4, 2)
    show_image(im_masked[0], "masked")

    plt.subplot(1, 4, 3)
    show_image(y[0], "reconstruction")

    plt.subplot(1, 4, 4)
    show_image(im_paste[0], "reconstruction + visible")

    plt.show()


# load an image
img_path = '1.jpg'
# To load from a URL instead (needs `import requests` and an img_url):
# img = Image.open(requests.get(img_url, stream=True).raw)
img = Image.open(img_path).convert('RGB')  # make sure there are exactly 3 channels
img = img.resize((224, 224))
img = np.array(img) / 255.

assert img.shape == (224, 224, 3)

# normalize by ImageNet mean and std
img = img - imagenet_mean
img = img / imagenet_std

plt.rcParams['figure.figsize'] = [5, 5]
show_image(torch.tensor(img))
plt.show()  # show the input in its own figure, not underneath the 4-panel plot

# --------------------------mae_model-----------------------------
chkpt_dir = 'mae_visualize_vit_large.pth'
model_mae = prepare_model(chkpt_dir, 'mae_vit_large_patch16')
print('Model loaded.')

torch.manual_seed(2)  # fixed seed -> the same random mask on every run
print('MAE with pixel reconstruction:')
run_one_image(img, model_mae)

# --------------------------mae_ganloss---------------------------
chkpt_dir = 'mae_visualize_vit_large_ganloss.pth'
model_mae_gan = prepare_model(chkpt_dir, 'mae_vit_large_patch16')
print('Model loaded.')

torch.manual_seed(2)
print('MAE with extra GAN loss:')
run_one_image(img, model_mae_gan)
```
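The following NumPy sketch makes the mask handling in `run_one_image` concrete. It shows the patchify/unpatchify round trip and the two composites that the demo draws. `patchify` and `unpatchify` here are my own re-implementations, written to follow the same einsum layout as the ones in models_mae.py as far as I can tell. The 8×8 random "image", the patch size p = 4 and the fixed mask are arbitrary stand-ins for the real 224×224 input with p = 16.

```python
import numpy as np


def patchify(imgs, p):
    # imgs: (N, 3, H, W) -> (N, L, p*p*3), with L = (H/p) * (W/p) patches
    n, c, h, w = imgs.shape
    gh, gw = h // p, w // p
    x = imgs.reshape(n, c, gh, p, gw, p)
    x = np.einsum('nchpwq->nhwpqc', x)
    return x.reshape(n, gh * gw, p * p * c)


def unpatchify(x, p, c=3):
    # x: (N, L, p*p*c) -> (N, c, H, W); assumes a square grid of patches
    n, l, _ = x.shape
    g = int(round(l ** 0.5))
    x = x.reshape(n, g, g, p, p, c)
    x = np.einsum('nhwpqc->nchpwq', x)
    return x.reshape(n, c, g * p, g * p)


rng = np.random.default_rng(0)
N, C, H, W, p = 1, 3, 8, 8, 4
x = rng.standard_normal((N, C, H, W))                          # stand-in input image
y = rng.standard_normal((N, (H // p) * (W // p), p * p * C))   # stand-in decoder output tokens

# patch-level mask: 1 = removed, 0 = kept (3 of the 4 patches removed, i.e. 75%)
mask = np.array([[1.0, 0.0, 1.0, 1.0]])                        # (N, L)

# copy each patch's mask value to all p*p*3 values of that patch, then back to image layout
mask_px = unpatchify(np.repeat(mask[..., None], p * p * C, axis=-1), p)  # (N, 3, H, W)
y_img = unpatchify(y, p)

im_masked = x * (1 - mask_px)                 # visible patches only
im_paste = x * (1 - mask_px) + y_img * mask_px  # visible patches + reconstructed patches
```

Each patch-level mask value is copied to every pixel of its patch. With 3 of 4 patches removed, exactly 75% of the pixels in `im_paste` therefore come from the reconstruction, which matches `mask_ratio=0.75` in the demo.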

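Note: I added these two lines in demo.py myself:

```python
import os
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
```

They work around the OpenMP error whose hint reads "OMP: Hint This means that multiple copies of the OpenMP runtime have been linked into the program." The same error message calls this variable an unsafe, unsupported workaround. It lets the program keep running but may cause crashes or silently produce incorrect results, so only set it if you actually hit that error.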

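Put both pre-trained checkpoints in the same folder as demo.py, and run the script from that folder, since the paths in it are relative.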
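The checkpoint names in demo.py are relative paths. Python therefore looks for them in the current working directory, not in the folder that contains demo.py. Below is a minimal sketch that resolves a file relative to the script instead. `resolve_next_to` is a hypothetical helper for illustration and is not part of the MAE repository.

```python
from pathlib import Path


def resolve_next_to(script_file, name):
    """Return the path of `name` located in the same folder as `script_file`.

    Hypothetical helper: raises FileNotFoundError with a clear message if the
    file is missing, instead of failing later inside torch.load.
    """
    path = Path(script_file).resolve().parent / name
    if not path.is_file():
        raise FileNotFoundError(f"expected {name} next to {Path(script_file).name}: {path}")
    return path
```

In demo.py one could then write `chkpt_dir = str(resolve_next_to(__file__, 'mae_visualize_vit_large.pth'))`. The explicit error also makes a missing download obvious before the model is built.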

4. Modify models_mae.py

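I removed the `qk_scale=None` argument from the `Block(...)` calls. Newer versions of timm's `Block` no longer accept a `qk_scale` parameter, so the original calls fail with a TypeError about an unexpected keyword argument.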
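If you would rather keep models_mae.py working with both older and newer timm versions instead of deleting `qk_scale=None` by hand, one option is to pass only the keyword arguments that the installed `Block.__init__` accepts. The sketch below does this with `inspect.signature`. `OldBlock`, `NewBlock` and `build_block` are hypothetical names for illustration, used as stand-ins so the example runs without timm. They are not part of MAE or timm.

```python
import inspect


class OldBlock:
    """Hypothetical stand-in for an older timm Block that still takes qk_scale."""
    def __init__(self, dim, num_heads, mlp_ratio=4.0, qkv_bias=False, qk_scale=None, norm_layer=None):
        self.dim = dim
        self.qk_scale = qk_scale


class NewBlock:
    """Hypothetical stand-in for a newer timm Block without qk_scale."""
    def __init__(self, dim, num_heads, mlp_ratio=4.0, qkv_bias=False, norm_layer=None):
        self.dim = dim


def build_block(block_cls, *args, **kwargs):
    """Instantiate block_cls, dropping keyword arguments its __init__ does not accept."""
    params = inspect.signature(block_cls.__init__).parameters
    if any(prm.kind is inspect.Parameter.VAR_KEYWORD for prm in params.values()):
        return block_cls(*args, **kwargs)  # __init__ takes **kwargs: nothing to filter
    accepted = {k: v for k, v in kwargs.items() if k in params}
    return block_cls(*args, **accepted)


# Calling NewBlock(...) directly with qk_scale=None raises TypeError;
# build_block drops the unsupported argument instead.
new_block = build_block(NewBlock, 1024, 16, 4.0, qkv_bias=True, qk_scale=None)
old_block = build_block(OldBlock, 1024, 16, 4.0, qkv_bias=True, qk_scale=None)
```

In models_mae.py this would roughly mean calling `build_block(Block, ...)` wherever `Block(..., qk_scale=None, ...)` appears. The trade-off is that misspelled keyword arguments are also dropped silently, so simply deleting the argument is the more transparent fix.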