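Other articles in this series:

A First Look at CrateDB (1): Deploying a CrateDB Cluster with Docker

A First Look at CrateDB (2): PARTITION, SHARDING AND REPLICATION

A First Look at CrateDB (4): Optimistic Concurrency Control

Official documentation on deploying a CrateDB cluster on k8s: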

statefulset

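A 3-node CrateDB cluster is deployed as a StatefulSet. The YAML is largely copied from the official documentation.

The storage used in this experiment is NFS with dynamic provisioning, so a StorageClass must be prepared in advance.

The namespace `cratedb` must also be created beforehand.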
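If you prefer to keep the namespace declarative alongside the other manifests, a minimal Namespace object could look like the fragment below. The file name `cratedb-ns.yaml` is only a suggestion. Running `kubectl create namespace cratedb` achieves the same thing imperatively.

```yaml
# cratedb-ns.yaml (hypothetical file name)
apiVersion: v1
kind: Namespace
metadata:
  name: cratedb
```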

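Note: the official manifest does not set the `POD_NAME` environment variable under `env`. Because `node.name` is taken from `${POD_NAME}`, the container then fails to start. The manifest below adds the variable via `fieldRef: metadata.name`.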

```yaml
# cratedb-sts.yaml
kind: StatefulSet
apiVersion: "apps/v1"
metadata:
  # This is the name used as a prefix for all pods in the set.
  name: crate
  namespace: cratedb
spec:
  serviceName: "crate-internal-service"
  # Our cluster has three nodes.
  replicas: 3
  selector:
    matchLabels:
      # The pods in this cluster have the `app:crate` app label.
      app: crate
  template:
    metadata:
      labels:
        app: crate
    spec:
      # InitContainers run before the main containers of a pod are
      # started, and they must terminate before the primary containers
      # are initialized. Here, we use one to set the correct memory
      # map limit.
      initContainers:
      - name: init-sysctl
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      # This final section is the core of the StatefulSet configuration.
      # It defines the container to run in each pod.
      containers:
      - name: crate
        # Use the CrateDB 4.2.4 Docker image.
        image: crate:4.2.4
        # Pass in configuration to CrateDB via command-line options.
        # We set the node names explicitly, which is needed to
        # determine the initial master nodes. They are set to the
        # name of the pod.
        # We use the SRV records provided by Kubernetes to discover
        # nodes within the cluster.
        args:
        - -Cnode.name=${POD_NAME}
        - -Ccluster.name=${CLUSTER_NAME}
        - -Ccluster.initial_master_nodes=crate-0,crate-1,crate-2
        - -Cdiscovery.seed_providers=srv
        - -Cdiscovery.srv.query=_crate-internal._tcp.crate-internal-service.${NAMESPACE}.svc.cluster.local
        - -Cgateway.recover_after_nodes=2
        - -Cgateway.expected_nodes=${EXPECTED_NODES}
        - -Cpath.data=/data
        volumeMounts:
        # Mount the `/data` directory as a volume named `data`.
        - mountPath: /data
          name: data
        resources:
          limits:
            # How much memory each pod gets.
            memory: 512Mi
        ports:
        # Port 4300 for inter-node communication.
        - containerPort: 4300
          name: crate-internal
        # Port 4200 for HTTP clients.
        - containerPort: 4200
          name: crate-web
        # Port 5432 for PostgreSQL wire protocol clients.
        - containerPort: 5432
          name: postgres
        # Environment variables passed through to the container.
        env:
        # This variable is detected by CrateDB.
        - name: CRATE_HEAP_SIZE
          value: "256m"
        # The rest of these variables are used in the command-line
        # options.
        - name: EXPECTED_NODES
          value: "3"
        - name: CLUSTER_NAME
          value: "my-crate"
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME # added: missing from the official manifest
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 300M
```

svc

`crate-internal-service` is used for communication between the cluster nodes.

`crate-external-service` is used to access the cluster from outside.

```yaml
# cratedb-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: crate-internal-service
  namespace: cratedb
  labels:
    app: crate
spec:
  # A static IP address is assigned to this service. This IP address is
  # only reachable from within the Kubernetes cluster.
  type: ClusterIP
  ports:
  # Port 4300 for inter-node communication.
  - port: 4300
    name: crate-internal
  selector:
    # Apply this to all nodes with the `app:crate` label.
    app: crate
---
kind: Service
apiVersion: v1
metadata:
  name: crate-external-service
  namespace: cratedb
  labels:
    app: crate
spec:
  # Expose the service on a port of every Kubernetes node (NodePort).
  type: NodePort
  ports:
  # Port 4200 for HTTP clients.
  - port: 4200
    name: crate-web
  # Port 5432 for PostgreSQL wire protocol clients.
  - port: 5432
    name: postgres
  selector:
    # Apply this to all nodes with the `app:crate` label.
    app: crate
```

Persistent volumes

NFS is used for the persistent volumes.

rbac

```yaml
# rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # must be the same namespace as the Role above
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

nfs provisioner

```yaml
# nfs-provisioner.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default # must match the namespace used in rbac.yaml
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: nfs-storage # provisioner name; must match `provisioner` in nfs-storageclass.yaml
            - name: NFS_SERVER
              value: 10.24.17.31 # NFS server IP address
            - name: NFS_PATH
              value: /home/nfs # exported NFS directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.24.17.31 # NFS server IP address
            path: /home/nfs # exported NFS directory
```

storageclass

```yaml
# nfs-storageclass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: nfs-storage # must match the PROVISIONER_NAME env variable in nfs-provisioner.yaml
reclaimPolicy: Retain # the default is Delete
parameters:
  # "false": when the PV is deleted, its directory on the NFS share is deleted too;
  # "true": the directory is kept (archived)
  archiveOnDelete: "true"
```

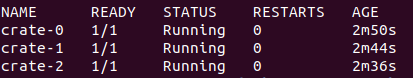
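Before deploying CrateDB, it can be worth checking that dynamic provisioning actually works. The fragment below is a minimal sketch and assumes the StorageClass above is in place. It defines a throwaway claim; the name `nfs-test-claim` is hypothetical. After `kubectl apply`, `kubectl get pvc nfs-test-claim` should show `Bound`, and a directory for the claim should appear under `/home/nfs` on the NFS server.

```yaml
# nfs-test-pvc.yaml (hypothetical test claim; delete it afterwards)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-test-claim
  namespace: default
spec:
  storageClassName: managed-nfs-storage  # the StorageClass defined above
  accessModes: [ "ReadWriteMany" ]        # NFS supports shared access
  resources:
    requests:
      storage: 1Mi
```

Because the StorageClass uses `reclaimPolicy: Retain`, deleting the claim does not remove the PV automatically. The PV then has to be cleaned up by hand.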

Starting the cluster

```shell
kubectl apply -f cratedb-sts.yaml
kubectl apply -f cratedb-svc.yaml
```

The StatefulSet starts the 3 pods one after another.
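The one-at-a-time start-up comes from the StatefulSet's default `podManagementPolicy: OrderedReady`. Under that policy, each pod is created only after the previous one is Running and Ready. If sequential start-up is not needed, the policy could be set to `Parallel`, as in the fragment below. Only the relevant fields are shown, and this is a variation rather than part of the official manifest. The field has to be set when the StatefulSet is created, because Kubernetes rejects changes to it on an existing StatefulSet.

```yaml
# Fragment of cratedb-sts.yaml; all other fields stay unchanged
spec:
  serviceName: "crate-internal-service"
  replicas: 3
  # Default is OrderedReady (crate-0, then crate-1, then crate-2).
  # Parallel creates all three pods at once.
  podManagementPolicy: Parallel
```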

```shell
# View the startup log of crate-1
sudo kubectl logs crate-1 -n cratedb --kubeconfig $KUBECONFIG
```

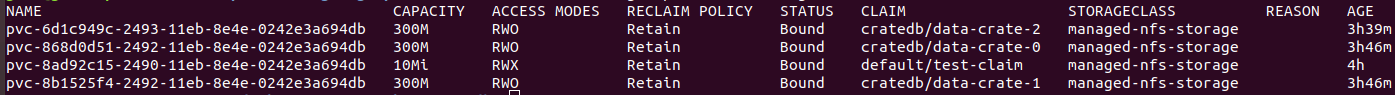

pv

pvc

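Access the CrateDB Admin UI at `nodeIP:nodePort`, using the node port that Kubernetes mapped to port 4200 of `crate-external-service`.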
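Unless specified otherwise, Kubernetes assigns node ports from its NodePort range (30000-32767 by default). `kubectl get svc crate-external-service -n cratedb` shows which ports were assigned. To get stable addresses, the ports could be pinned in `crate-external-service`. The values 30420 and 30432 below are arbitrary examples and must be free in your cluster, and only the relevant part of the spec is shown.

```yaml
# Fragment of crate-external-service in cratedb-svc.yaml (ports section only)
spec:
  type: NodePort
  ports:
  # Admin UI / HTTP: http://<node-ip>:30420
  - port: 4200
    name: crate-web
    nodePort: 30420
  # PostgreSQL wire protocol: <node-ip>:30432
  - port: 5432
    name: postgres
    nodePort: 30432
```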