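1. Install NFS

NFS (Network File System) is a distributed file system protocol developed by Sun Microsystems that lets users access files over a network. Here, the myid file that stores each node's ID in the ZooKeeper cluster can be kept in an NFS shared directory.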

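- Installation steps:

Step 1: Download the rpcbind and nfs-utils packages.

Link: https://pan.baidu.com/s/16zDbfXd_mjSMf1TjMmdcKQ

Extraction code: 123z

Step 2: Install the downloaded packages locally, then confirm that they are installed.

yum localinstall nfs-utils-1.3.0-0.68.el7.x86_64.rpm -y

rpm -qa nfs-utils rpcbind

Step 3: Start the rpcbind and nfs services.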

systemctl start rpcbind

systemctl start nfs

systemctl enable rpcbind nfs

Note the startup order: start rpcbind first, then nfs.

Step 4: Edit the NFS server configuration file to set the access permissions for the /var/nfs directory.

vi /etc/exports

/var/nfs 10.79.4.0/24(rw,sync,wdelay,hide,no_subtree_check,sec=sys,secure,no_all_squash,no_root_squash)

Finally, create the zk01, zk02 and zk03 subdirectories under /var/nfs/zk-cluster/ to hold the myid files.

mkdir -p /var/nfs/zk-cluster/{zk01,zk02,zk03}

2. Install flanneld

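Flanneld is a third-party plugin that provides a virtual network by assigning each host its own subnet, and it relies on etcd to track those allocations. In short, flanneld manages how IP address space is allocated across the hosts of the cluster.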

2.1 Write the cluster Pod network configuration to etcd

2.1.1 If the etcd cluster has TLS authentication enabled

Step 1: Prepare a JSON file (flanneld-csr.json) that holds the certificate request configuration.

{
  "CN": "flanneld",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "BeiJing",
    "L": "BeiJing",
    "O": "k8s",
    "OU": "System"
  }]
}

Step 2: Install the cfssl tool, then run the following command to generate the certificate files.

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

Step 3: Write the cluster Pod network configuration to etcd.

/etc/etcd/bin/etcdctl --endpoints="https://[ip]:2379" --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/flanneld/ssl/flanneld.pem --key-file=/etc/flanneld/ssl/flanneld-key.pem set /kubernetes/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

Replace [ip] above with the address of the host running etcd. Note that this step only needs to be run once, when the flannel network is first deployed; there is no need to write this information again when deploying flanneld on other nodes.
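As a rough illustration of what this network configuration implies: the subnet keys listed in the verification section of this article (for example 172.17.1.0-24) indicate that each host receives a /24 carved out of 172.17.0.0/16. The sketch below only does the arithmetic for that split. The function names are made up for this example, and the /24 per-host prefix length is an assumption read off those keys, not a value set in the command above.

```shell
#!/bin/sh
# Illustrative arithmetic only; it does not talk to etcd or flanneld.
# Assumption: each host is given a /24 out of the cluster network.

# subnet_count NETWORK_PREFIX HOST_PREFIX
# Prints how many HOST_PREFIX-sized subnets fit into one NETWORK_PREFIX-sized network.
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

# addresses_per_subnet HOST_PREFIX
# Prints the number of IPv4 addresses contained in one subnet of that prefix length.
addresses_per_subnet() {
  echo $(( 1 << (32 - $1) ))
}

echo "host subnets available: $(subnet_count 16 24)"
echo "addresses per host subnet: $(addresses_per_subnet 24)"
```

Under these assumptions the /16 network leaves room for 256 host subnets, which bounds the number of nodes that can join the overlay.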

Step 4: Add the following kube-controller-manager startup parameters.

vi /etc/kubernetes/controller-manager

--allocate-node-cidrs=true \
--service-cluster-ip-range=169.169.0.0/16 \
--cluster-cidr=172.17.0.0/16 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
--root-ca-file=/etc/kubernetes/ssl/ca.pem \

2.1.2 If the etcd cluster does not have TLS authentication enabled

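Step 1: Write the cluster Pod network configuration to etcd.

etcdctl --endpoints="http://[ip]:2379" set /kubernetes/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

Replace [ip] above with the address of the host running etcd. As before, this step only needs to be run once, when the flannel network is first deployed; there is no need to write this information again when deploying flanneld on other nodes.

Step 2: Add the following kube-controller-manager startup parameters.

vi /etc/kubernetes/controller-manager

--allocate-node-cidrs=true \
--service-cluster-ip-range=169.169.0.0/16 \
--cluster-cidr=172.17.0.0/16 \
--cluster-name=kubernetes \

2.2 Install and configure flanneld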

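Step 1: Download and extract the flannel release archive (the command below uses flannel-v0.10.0-linux-amd64.tar.gz), then move the flanneld and mk-docker-opts.sh files into the /etc/kubernetes/bin/ directory.

tar zvxf flannel-v0.10.0-linux-amd64.tar.gz

mkdir -p /etc/kubernetes/bin/

mv flanneld mk-docker-opts.sh /etc/kubernetes/bin/

Step 2: Create the flanneld service unit file.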

vi /lib/systemd/system/flanneld.service

[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
ExecStart=/etc/kubernetes/bin/flanneld --etcd-endpoints=http://[ip]:2379 --etcd-prefix=/kubernetes/network
ExecStartPost=/etc/kubernetes/bin/mk-docker-opts.sh -i
Restart=on-failure

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service

Step 3: Configure Docker to start with the subnet assigned by flanneld.

vi /lib/systemd/system/docker.service

[Service]
...
EnvironmentFile=/run/docker_opts.env
ExecStart=/usr/bin/dockerd \
  $DOCKER_OPT_BIP \
  $DOCKER_OPT_IPMASQ \
  $DOCKER_OPT_MTU

Step 4: Start the services.

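systemctl daemon-reload

systemctl enable flanneld

systemctl start flanneld

systemctl restart docker

Note: start flanneld before restarting Docker, because flanneld's ExecStartPost step generates the /run/docker_opts.env file that docker.service reads. Existing Docker containers must also be removed before Docker is started; otherwise the new network configuration does not take effect.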
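To make the link between flanneld and Docker more concrete: flanneld records the subnet it leased for the host in an environment file, and mk-docker-opts.sh turns those values into the DOCKER_OPT_* variables referenced in docker.service. The sketch below imitates that translation on sample data. It is not the real script, the subnet and MTU values are invented, the temporary file merely stands in for flanneld's subnet file, and the IP-masquerade option is left out for brevity.

```shell
#!/bin/sh
# Sketch only: mimics how flannel values could map onto dockerd options.
# The sample values below are hypothetical, not taken from a real host.

sample_env=$(mktemp)
cat > "$sample_env" <<'EOF'
FLANNEL_SUBNET=172.17.1.1/24
FLANNEL_MTU=1450
EOF

# docker_opts_from FILE
# Reads FLANNEL_SUBNET and FLANNEL_MTU from FILE and prints DOCKER_OPT_* lines.
# The subshell keeps the sourced variables out of the caller's environment.
docker_opts_from() {
  (
    . "$1"
    echo "DOCKER_OPT_BIP=\"--bip=${FLANNEL_SUBNET}\""
    echo "DOCKER_OPT_MTU=\"--mtu=${FLANNEL_MTU}\""
  )
}

docker_opts_from "$sample_env"
rm -f "$sample_env"
```

With values of this shape in place, dockerd creates its bridge inside the host's flannel subnet, which is what the checks in the next section look for.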

2.3 Verify the installation

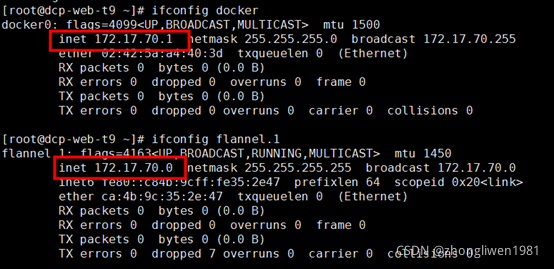

1) Check that docker0 and flannel.1 are on the same subnet:

ifconfig docker0

ifconfig flannel.1

Result:

2) View the cluster Pod network:

etcdctl --endpoints="http://[ip]:2379" get /kubernetes/network/config

Result:

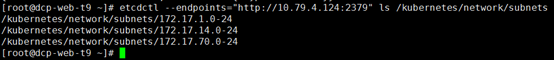

3) List the Pod subnets that have been allocated:

etcdctl --endpoints="http://10.79.4.124:2379" ls /kubernetes/network/subnets

Result:

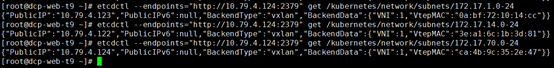

4) View the IP address and network parameters of the flanneld process that owns a given Pod subnet:

etcdctl --endpoints="http://[ip]:2379" get /kubernetes/network/subnets/172.17.1.0-24

etcdctl --endpoints="http://[ip]:2379" get /kubernetes/network/subnets/172.17.14.0-24

etcdctl --endpoints="http://[ip]:2379" get /kubernetes/network/subnets/172.17.70.0-24

Result:

3. Deploy the DNS service

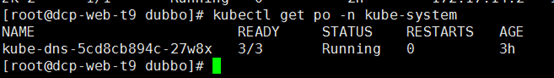

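In a Kubernetes cluster, the ZooKeeper Pods communicate with each other by domain name. The kubedns service can be started as an add-on to maintain the mapping between Service names and cluster IPs, so all that is needed is to deploy one DNS service in the kube-system namespace of the cluster.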

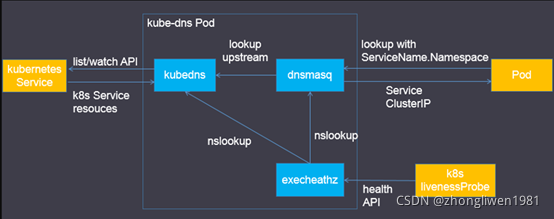

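3.1 Deploy kubedns

KubeDNS consists of three parts: kubedns, dnsmasq and sidecar. kubedns watches the cluster for Service changes and keeps the mapping between Service names and IPs in memory; dnsmasq provides a query cache for clients and forwards lookups for the cluster domain to kubedns; sidecar monitors whether kubedns and dnsmasq are working properly. Their relationship is shown in the figure below:

First prepare the k8s-dns-kube-dns-amd64, k8s-dns-dnsmasq-nanny-amd64 and k8s-dns-sidecar-amd64 images, then write the deployment file: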

apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      volumes:
      - name: kube-dns-config
        configMap:
          name: kube-dns
          optional: true
      containers:
      - name: kubedns
        image: k8s-dns-kube-dns-amd64:1.14.8
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        livenessProbe:
          httpGet:
            path: /healthcheck/kubedns
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 5
          timeoutSeconds: 60
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          initialDelaySeconds: 5
          timeoutSeconds: 60
        args:
        - --domain=cluster.local.
        - --dns-port=10053
        - --config-dir=/kube-dns-config
        - --v=2
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
        volumeMounts:
        - name: kube-dns-config
          mountPath: /kube-dns-config
      - name: dnsmasq
        image: k8s-dns-dnsmasq-nanny-amd64:1.14.8
        livenessProbe:
          httpGet:
            path: /healthcheck/dnsmasq
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 5
          timeoutSeconds: 60
          successThreshold: 1
          failureThreshold: 5
        args:
        - -v=2
        - -logtostderr
        - -configDir=/etc/k8s/dns/dnsmasq-nanny
        - -restartDnsmasq=true
        - --
        - -k
        - --cache-size=1000
        - --log-facility=-
        - --server=/cluster.local/127.0.0.1#10053
        - --server=/in-addr.arpa/127.0.0.1#10053
        - --server=/ip6.arpa/127.0.0.1#10053
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        resources:
          requests:
            cpu: 150m
            memory: 20Mi
        volumeMounts:
        - name: kube-dns-config
          mountPath: /etc/k8s/dns/dnsmasq-nanny
      - name: sidecar
        image: k8s-dns-sidecar-amd64:1.14.8
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 5
          timeoutSeconds: 60
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A
        - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 20Mi
            cpu: 10m
      dnsPolicy: Default
      serviceAccountName: kube-dns
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 169.169.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

The --domain=cluster.local. option above sets the cluster domain suffix that appears in the ZooKeeper Pods' domain names (for example, zk-0.zk-hs.default.svc.cluster.local).
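The example name above follows the pattern pod.service.namespace.svc.cluster-domain used for Pods of a StatefulSet behind a headless Service. As a small sketch of that composition, the helper below (pod_fqdn is a name invented for this example) builds the three names that the ZooKeeper StatefulSet zk and its zk-hs Service, defined later in this article, are expected to produce.

```shell
#!/bin/sh
# Sketch: compose the DNS name of a StatefulSet Pod behind a headless Service.
# Pattern: <pod>.<service>.<namespace>.svc.<cluster-domain>

# pod_fqdn POD SERVICE NAMESPACE DOMAIN
pod_fqdn() {
  # Strip a trailing dot so "cluster.local." and "cluster.local" give the same result.
  domain=${4%.}
  echo "$1.$2.$3.svc.$domain"
}

# Pod names zk-0..zk-2 and the Service name zk-hs come from the manifests in this article.
for i in 0 1 2; do
  pod_fqdn "zk-$i" zk-hs default cluster.local.
done
```

These are the same names that the nslookup check and the dubboadmin registry address rely on further down.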

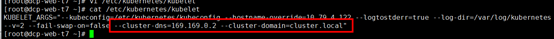

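Next, on each node, edit the kubelet configuration file and add two parameters, --cluster-dns and --cluster-domain. Their values must match the kubedns deployment file: the clusterIP of the kube-dns Service and the --domain value, respectively.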
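A minimal sketch of what those two parameters could look like, using the values from the deployment file above. The variable name KUBELET_DNS_ARGS is hypothetical (the kubelet options file in a given installation may use a different variable or list the flags directly), and the domain is written here without the trailing dot.

```shell
# Hypothetical excerpt of a kubelet options file; KUBELET_DNS_ARGS is an illustrative name.
# 169.169.0.2 is the clusterIP of the kube-dns Service defined in the deployment file,
# and cluster.local corresponds to the --domain argument passed to kubedns.
KUBELET_DNS_ARGS="--cluster-dns=169.169.0.2 --cluster-domain=cluster.local"
```

The kubelet has to be restarted for changed startup parameters to take effect.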

Finally, apply the deployment.

4. Deploy ZooKeeper

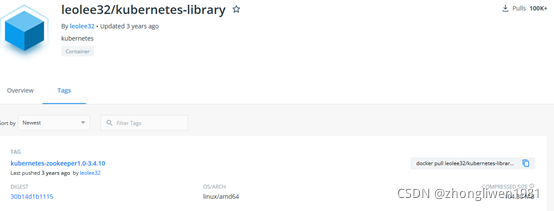

4.1 Download the ZooKeeper image

Look up the kubernetes-library image on Docker's official site.

4.2 Deploy the services

1) Configure the PVs:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-zk01
  labels:
    app: zk
  annotations:
    volume.beta.kubernetes.io/storage-class: "anything"
spec:
  nfs:
    path: /var/nfs/zk-cluster/zk01
    server: [nfs_ip_address]
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-zk02
  labels:
    app: zk
  annotations:
    volume.beta.kubernetes.io/storage-class: "anything"
spec:
  nfs:
    path: /var/nfs/zk-cluster/zk02
    server: [nfs_ip_address]
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-zk03
  labels:
    app: zk
  annotations:
    volume.beta.kubernetes.io/storage-class: "anything"
spec:
  nfs:
    path: /var/nfs/zk-cluster/zk03
    server: [nfs_ip_address]
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle

2) Configure the controller:

apiVersion: policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  selector:
    matchLabels:
      app: zk
  minAvailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: zk
    spec:
      containers:
      - name: zk
        imagePullPolicy: Always
        image: kubernetes-library:kubernetes-zookeeper1.0-3.4.10
        resources:
          requests:
            memory: "500Mi"
            cpu: "0.5"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=120000 \
          --min_session_timeout=30000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 30
          timeoutSeconds: 30
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 30
          timeoutSeconds: 30
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/zookeeper
  volumeClaimTemplates:
  - metadata:
      name: datadir
      annotations:
        volume.beta.kubernetes.io/storage-class: "anything"
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi

3) Configure the Services:

apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  labels:
    app: zk
spec:
  selector:
    app: zk
  clusterIP: None
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  labels:
    app: zk
spec:
  selector:
    app: zk
  ports:
  - port: 2181
    name: client

Apply the deployment:

kubectl apply -f zk-pv.yaml

kubectl apply -f zk.yaml

kubectl apply -f zk-svc.yaml

4.3 Verify the deployment

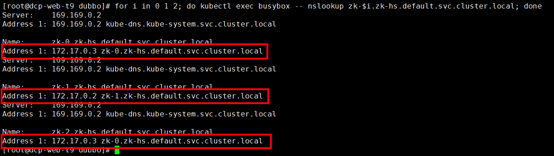

A busybox Pod can be used here to debug DNS.

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: busybox:1.27
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
  restartPolicy: Always

Once it is deployed, busybox can be used to check whether the corresponding domain names exist in DNS.

for i in 0 1 2; do kubectl exec busybox -- nslookup zk-$i.zk-hs.default.svc.cluster.local; done

Result:

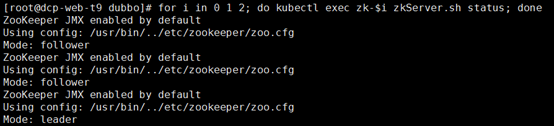

Check whether each ZooKeeper node is in a healthy state:

for i in 0 1 2; do kubectl exec zk-$i -- zkServer.sh status; done

Result:

5. Deploy dubbo

Step 1: Prepare the dubboadmin image.

Step 2: Restrict which node it is installed on:

kubectl label node [ip] isDubbo="true"

Replace [ip] above with the address of the target node.

Step 3: Prepare the deployment file:

apiVersion: v1
kind: ReplicationController
metadata:
  name: dubboadmin
spec:
  replicas: 1
  selector:
    app: dubboadmin
  template:
    metadata:
      labels:
        app: dubboadmin
    spec:
      nodeSelector:
        isDubbo: "true"
      containers:
      - name: dubboadmin
        image: dubboadmin:0.1.0
        imagePullPolicy: IfNotPresent
        command: [ "/bin/bash", "-ce", "java -Dadmin.registry.address=zookeeper://zk-0.zk-hs.default.svc.cluster.local:2181,zk-1.zk-hs.default.svc.cluster.local:2181,zk-2.zk-hs.default.svc.cluster.local:2181 -Dadmin.config-center=zookeeper://zk-0.zk-hs.default.svc.cluster.local:2181,zk-1.zk-hs.default.svc.cluster.local:2181,zk-2.zk-hs.default.svc.cluster.local:2181 -Dadmin.metadata-report.address=zookeeper://zk-0.zk-hs.default.svc.cluster.local:2181,zk-1.zk-hs.default.svc.cluster.local:2181,zk-2.zk-hs.default.svc.cluster.local:2181 -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap -Djava.security.egd=file:/dev/./urandom -jar /app.jar"]
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: dubboadmin
spec:
  selector:
    app: dubboadmin
  type: NodePort
  ports:
  - name: dubboadmin
    port: 8080
    targetPort: 8080
    nodePort: 20080

The spec.nodeSelector above pins the Pod to the node labelled in Step 2. Note that nodePort 20080 lies outside the default NodePort range (30000-32767), so the kube-apiserver --service-node-port-range setting must include it.
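The three -D options in the command above all carry the same ZooKeeper address list, one entry per StatefulSet replica. As a sketch of how that list is put together (zk_addresses is a helper name invented for this example, and the host pattern is the one used in this article), the string can be generated from the replica count instead of being typed out by hand:

```shell
#!/bin/sh
# Sketch: build the comma-separated ZooKeeper address list for N replicas.
# Host names follow the zk-<i>.zk-hs.default.svc.cluster.local pattern from this article.

# zk_addresses REPLICAS
zk_addresses() {
  list=""
  i=0
  while [ "$i" -lt "$1" ]; do
    host="zk-$i.zk-hs.default.svc.cluster.local:2181"
    if [ -z "$list" ]; then
      list="$host"
    else
      list="$list,$host"
    fi
    i=$((i + 1))
  done
  echo "zookeeper://$list"
}

zk_addresses 3
```

If the StatefulSet is later scaled, regenerating the string this way keeps the registry, config-center and metadata-report addresses in step with the replica count.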

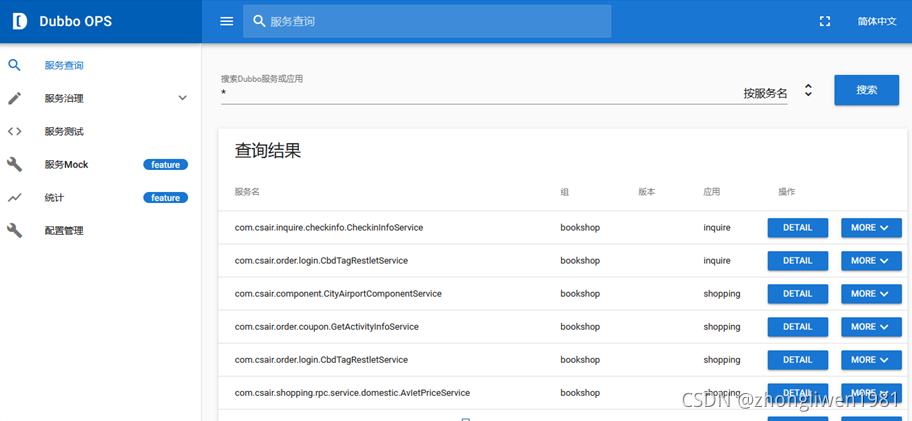

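After the deployment completes, open http://[ip]:20080 in a browser; the result is shown in the figure below:

At this point, the ZooKeeper and Dubbo services have been deployed on the k8s cluster.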