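Prometheus provides remote_write and remote_read, which let it store data in external storage. This helps with long-term retention and makes the data easier to query.

CrateDB provides a matching adapter (crate_adapter) that can be used for this directly.

The following demo sets up a simple CrateDB cluster. It uses remote write and remote read to store the Prometheus metrics that grok exporter derives from log files.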

Environment setup

- Data flow

input log -> grok exporter -> Prometheus -> CrateDB adapter -> CrateDB cluster

- docker-compose file

```yaml
version: "3"
services:
  grafana:
    image: grafana/grafana
    ports:
      - "3000:3000"
  prometheus:
    image: prom/prometheus
    volumes:
      - "./prometheus.yml:/etc/prometheus/prometheus.yml"
    ports:
      - "9090:9090"
  cratedb-adapter:
    image: crate/crate_adapter
    command: -config.file /opt/config/config.yml
    ports:
      - "9268:9268"
    volumes:
      - "./cratedb-adapter:/opt/config"
  grok:
    image: dalongrong/grok-exporter
    volumes:
      - "./grok/example:/opt/example"
      - "./grok/grok.yaml:/grok/config.yml"
    ports:
      - "9144:9144"
  crate1:
    image: crate
    volumes:
      - "./cratedb/data1:/data"
      - "./cratedb/1.yaml:/crate/config/crate.yml"
    ports:
      - "4200:4200"
      - "4300:4300"
      - "5432:5432"
  crate2:
    image: crate
    volumes:
      - "./cratedb/data2:/data"
      - "./cratedb/2.yaml:/crate/config/crate.yml"
    ports:
      - "4201:4200"
      - "4301:4300"
      - "5433:5432"
  crate3:
    image: crate
    volumes:
      - "./cratedb/data3:/data"
      - "./cratedb/3.yaml:/crate/config/crate.yml"
    ports:
      - "4202:4200"
      - "4302:4300"
      - "5434:5432"
```

- Prometheus configuration

Targets are added through static configuration. remote_write and remote_read are also configured, both pointing at the adapter.

```yaml
scrape_configs:
  - job_name: grok
    metrics_path: /metrics
    scrape_interval: 10s
    scrape_timeout: 10s
    static_configs:
      - targets: ['grok:9144']
  - job_name: cratedb-adapter
    metrics_path: /metrics
    scrape_interval: 10s
    scrape_timeout: 10s
    static_configs:
      - targets: ['cratedb-adapter:9268']
remote_write:
  - url: http://cratedb-adapter:9268/write
remote_read:
  - url: http://cratedb-adapter:9268/read
```

- CrateDB cluster configuration

This setup uses the Community Edition, which supports at most 3 nodes in cluster mode. That is enough for most scenarios.

- node1 configuration

```yaml
cluster.name: cratecluster
node.name: crate1
node.master: true
node.data: true
http.port: 4200
psql.port: 5432
transport.tcp.port: 4300
discovery.seed_hosts: ["crate1"]
cluster.initial_master_nodes: ["crate1"]
http.cors.enabled: true
http.cors.allow-origin: "*"
gateway.expected_nodes: 3
gateway.recover_after_nodes: 2
gateway.recover_after_time: 5m
network.host: _local_,_site_
path.logs: /data/log
path.data: /data/data
blobs.path: /data/blobs
```

- node2 configuration

```yaml
cluster.name: cratecluster
node.name: crate2
node.master: false
node.data: true
http.port: 4200
psql.port: 5432
transport.tcp.port: 4300
discovery.seed_hosts: ["crate1"]
cluster.initial_master_nodes: ["crate1"]
http.cors.enabled: true
http.cors.allow-origin: "*"
gateway.expected_nodes: 3
gateway.recover_after_nodes: 2
gateway.recover_after_time: 5m
network.host: _local_,_site_
path.logs: /data/log
path.data: /data/data
blobs.path: /data/blobs
```

- node3 configuration

```yaml
cluster.name: cratecluster
node.name: crate3
node.master: false
node.data: true
http.port: 4200
psql.port: 5432
transport.tcp.port: 4300
discovery.seed_hosts: ["crate1"]
cluster.initial_master_nodes: ["crate1"]
http.cors.enabled: true
http.cors.allow-origin: "*"
network.host: _local_,_site_
gateway.expected_nodes: 3
gateway.recover_after_nodes: 2
gateway.recover_after_time: 5m
path.logs: /data/log
path.data: /data/data
blobs.path: /data/blobs
```
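All three nodes share the same `gateway.*` settings. The sketch below is a simplified model of the recovery gate these settings describe. It is based on the CrateDB cluster settings documentation, not on CrateDB's code:

- Recovery starts immediately once `expected_nodes` have joined.
- Otherwise, it waits until at least `recover_after_nodes` are present and `recover_after_time` has elapsed.

`GatewaySettings` and `should_start_recovery` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class GatewaySettings:
    # Values copied from the node configs above.
    expected_nodes: int = 3
    recover_after_nodes: int = 2
    recover_after_time_s: float = 5 * 60  # gateway.recover_after_time: 5m


def should_start_recovery(settings: GatewaySettings, joined_nodes: int, waited_s: float) -> bool:
    """Simplified model of when cluster state recovery starts.

    waited_s is the time elapsed since recover_after_nodes was first reached.
    - expected_nodes joined: recover immediately (recover_after_time is ignored).
    - fewer than recover_after_nodes joined: keep waiting.
    - otherwise: recover once recover_after_time has elapsed.
    """
    if joined_nodes >= settings.expected_nodes:
        return True
    if joined_nodes < settings.recover_after_nodes:
        return False
    return waited_s >= settings.recover_after_time_s


if __name__ == "__main__":
    s = GatewaySettings()
    for nodes, waited in [(1, 600), (2, 60), (2, 300), (3, 0)]:
        print(f"nodes={nodes} waited={waited}s -> recover={should_start_recovery(s, nodes, waited)}")
```

With this three-node setup, a single surviving node never triggers recovery on its own. Two nodes wait the full 5 minutes, and all three recover right away.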

- grok exporter configuration

This defines the log matching pattern:

```yaml
global:
  config_version: 2
input:
  type: file
  path: /opt/example/examples.log
  readall: true
grok:
  patterns_dir: ./patterns
metrics:
  - type: counter
    name: grok_example_lines_total
    help: Counter metric example with labels.
    match: '%{DATE} %{TIME} %{USER:user} %{NUMBER}'
    labels:
      user: '{{.user}}'
server:
  port: 9144
```
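The following is a rough Python approximation of what this config does. Each log line that matches the pattern increments `grok_example_lines_total` for the captured `user` label. This is not grok_exporter's implementation:

- The regexes are simplified stand-ins for the grok `DATE`, `TIME`, `USER` and `NUMBER` patterns. This is an assumption, since grok's real definitions are broader.
- The sample log lines are made up.

```python
import re
from collections import Counter

# Simplified stand-ins for the grok patterns (assumption: real grok DATE also
# accepts other layouts, and TIME/NUMBER are more permissive).
DATE = r"\d{1,2}[./-]\d{1,2}[./-]\d{2,4}"
TIME = r"\d{2}:\d{2}:\d{2}"
USER = r"[a-zA-Z0-9._-]+"
NUMBER = r"[+-]?(?:\d+(?:\.\d+)?|\.\d+)"

# Rough equivalent of: '%{DATE} %{TIME} %{USER:user} %{NUMBER}'
LINE_RE = re.compile(rf"{DATE} {TIME} (?P<user>{USER}) {NUMBER}")


def count_lines_by_user(lines):
    """Mimic grok_example_lines_total{user="..."}: +1 per matching line."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.search(line)
        if m:
            counts[m.group("user")] += 1
    return counts


def to_exposition(counts):
    """Render the counts in Prometheus text exposition format."""
    out = [
        "# HELP grok_example_lines_total Counter metric example with labels.",
        "# TYPE grok_example_lines_total counter",
    ]
    for user, n in sorted(counts.items()):
        out.append(f'grok_example_lines_total{{user="{user}"}} {n}')
    return "\n".join(out)


if __name__ == "__main__":
    sample = [  # hypothetical log lines
        "30.07.2016 14:37:03 alice 1.5",
        "30.07.2016 14:37:33 alice 2.5",
        "30.07.2016 14:43:02 bob 2.5",
        "this line does not match",
    ]
    print(to_exposition(count_lines_by_user(sample)))
```

Lines that do not match are ignored, so only `alice` and `bob` appear as label values. This is the series Prometheus scrapes from `grok:9144` and then forwards through remote_write.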

- CrateDB adapter configuration

The adapter exposes the write and read endpoints. Since this is a cluster setup, I configured multiple endpoints, one per node.

```yaml
crate_endpoints:
  - host: "crate1"              # Host to connect to (default: "localhost").
    port: 5432                  # Port to connect to (default: 5432).
    user: "crate"               # Username to use (default: "crate")
    password: ""                # Password to use (default: "").
    schema: ""                  # Schema to use (default: "").
    max_connections: 5          # The maximum number of concurrent connections (default: 5).
    enable_tls: false           # Whether to connect using TLS (default: false).
    allow_insecure_tls: false   # Whether to allow insecure / invalid TLS certificates (default: false).
  - host: "crate2"              # Host to connect to (default: "localhost").
    port: 5432                  # Port to connect to (default: 5432).
    user: "crate"               # Username to use (default: "crate")
    password: ""                # Password to use (default: "").
    schema: ""                  # Schema to use (default: "").
    max_connections: 5          # The maximum number of concurrent connections (default: 5).
    enable_tls: false           # Whether to connect using TLS (default: false).
    allow_insecure_tls: false   # Whether to allow insecure / invalid TLS certificates (default: false).
  - host: "crate3"              # Host to connect to (default: "localhost").
    port: 5432                  # Port to connect to (default: 5432).
    user: "crate"               # Username to use (default: "crate")
    password: ""                # Password to use (default: "").
    schema: ""                  # Schema to use (default: "").
    max_connections: 5          # The maximum number of concurrent connections (default: 5).
    enable_tls: false           # Whether to connect using TLS (default: false).
    allow_insecure_tls: false   # Whether to allow insecure / invalid TLS certificates (default: false).
```
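The adapter config lists all three nodes. This post does not describe how crate_adapter actually uses multiple endpoints. The sketch below only illustrates one plausible strategy: try the endpoints in order and fail over on connection errors. `Endpoint`, `with_failover` and `fake_write` are hypothetical, and no real database connection is made.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical mirror of one crate_endpoints entry (host/port only)."""
    host: str
    port: int = 5432


def with_failover(endpoints: Sequence[Endpoint], op: Callable[[Endpoint], T]) -> T:
    """Run op against each endpoint in order and return the first success.

    A ConnectionError moves on to the next endpoint. If every endpoint fails,
    a ConnectionError listing all failures is raised.
    """
    errors: List[str] = []
    for ep in endpoints:
        try:
            return op(ep)
        except ConnectionError as exc:
            errors.append(f"{ep.host}:{ep.port}: {exc}")
    raise ConnectionError("all endpoints failed: " + "; ".join(errors))


# Same hosts as the adapter config above.
ENDPOINTS = [Endpoint("crate1"), Endpoint("crate2"), Endpoint("crate3")]


def fake_write(ep: Endpoint) -> str:
    """Stand-in for a real write; pretends crate1 is down."""
    if ep.host == "crate1":
        raise ConnectionError("connection refused")
    return f"written via {ep.host}"


if __name__ == "__main__":
    print(with_failover(ENDPOINTS, fake_write))  # prints "written via crate2"
```

Listing every node means a write can still succeed while one node is unavailable. Whether the real adapter fails over, load-balances, or does both should be checked against the crate_adapter README.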

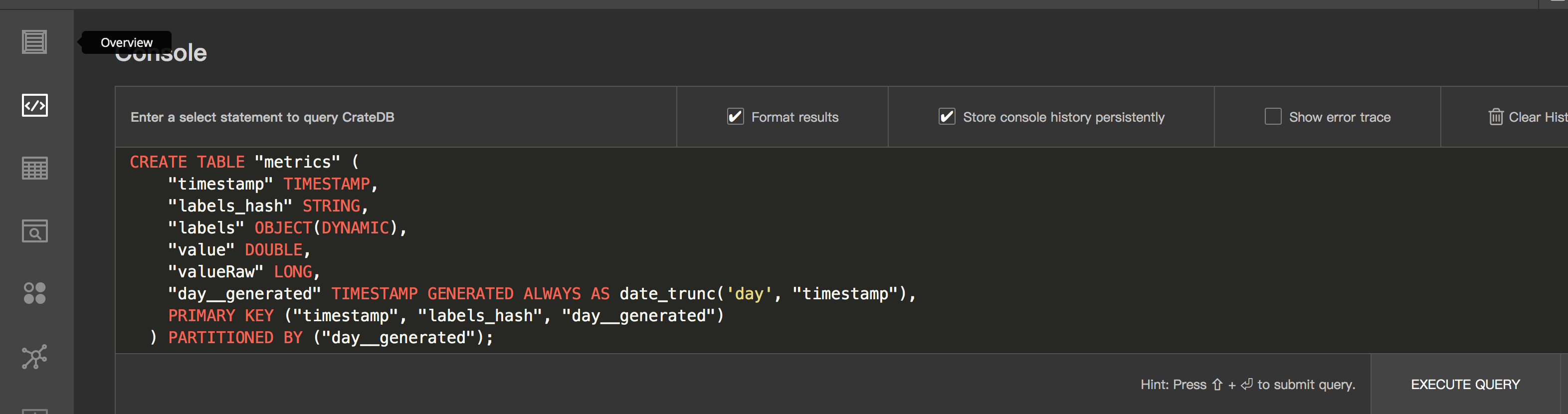

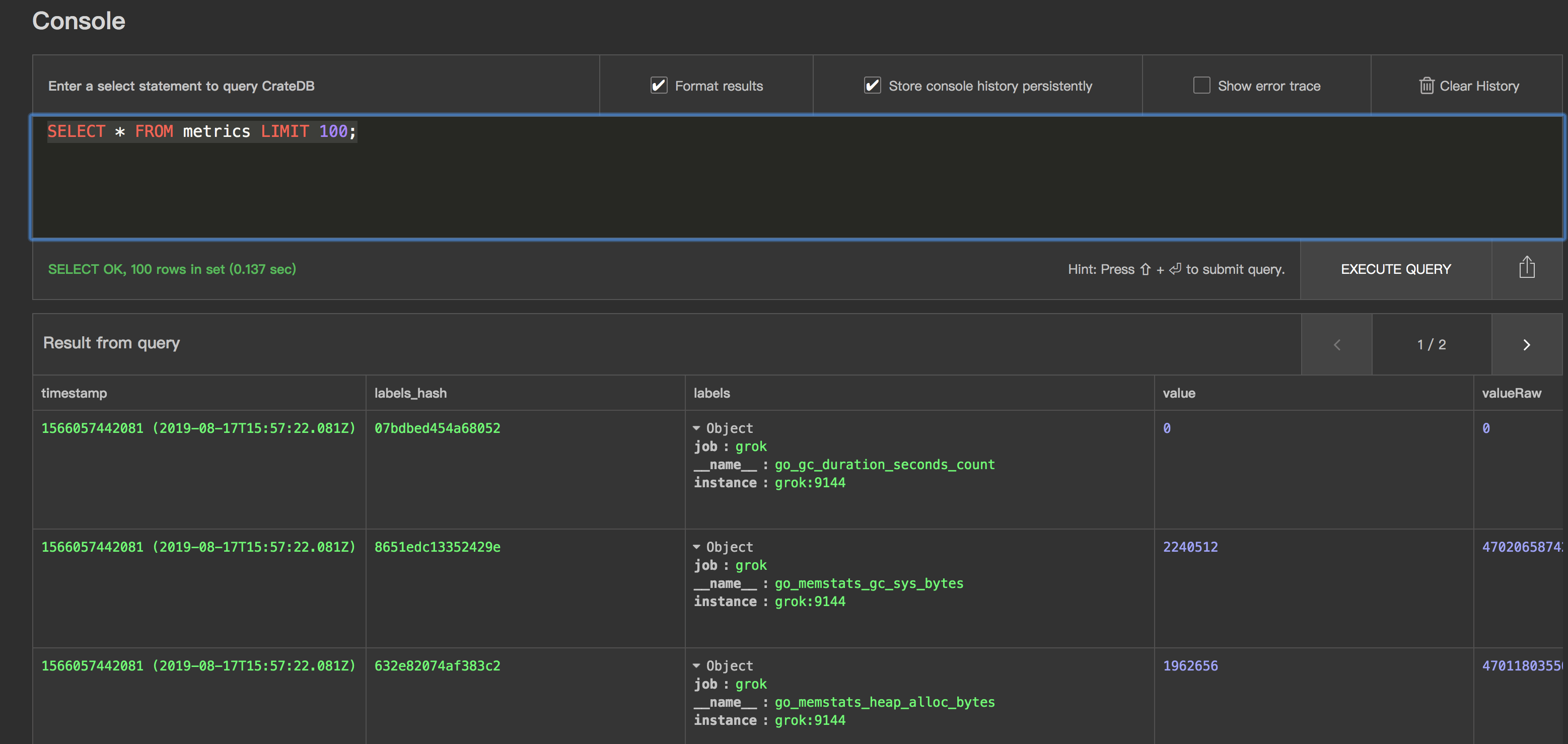

- The metrics table

To use CrateDB, we first need to create the table. The official project provides a template for the table schema:

```sql
CREATE TABLE "metrics" (
  "timestamp" TIMESTAMP,
  "labels_hash" STRING,
  "labels" OBJECT(DYNAMIC),
  "value" DOUBLE,
  "valueRaw" LONG,
  "day__generated" TIMESTAMP GENERATED ALWAYS AS date_trunc('day', "timestamp"),
  PRIMARY KEY ("timestamp", "labels_hash", "day__generated")
) PARTITIONED BY ("day__generated");
```
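The sketch below shows how a single Prometheus sample could map onto one row of this table, and which daily partition the row lands in. It rests on several assumptions:

- `labels` includes the metric name under `__name__`.
- `valueRaw` holds the float's IEEE 754 bit pattern as a signed 64-bit integer.
- `labels_hash` uses SHA-256 over the sorted labels. This is a hypothetical choice and not necessarily what crate_adapter does.

In the real table, CrateDB computes `day__generated` itself.

```python
import hashlib
import struct
from datetime import datetime, timezone
from typing import Dict

MS_PER_DAY = 24 * 60 * 60 * 1000


def day_partition(ts_ms: int) -> int:
    """Equivalent of date_trunc('day', "timestamp") for UTC epoch milliseconds."""
    return ts_ms - ts_ms % MS_PER_DAY


def labels_hash(labels: Dict[str, str]) -> str:
    """Hypothetical stable hash over the sorted label set (not the adapter's algorithm)."""
    canonical = "\x00".join(f"{k}\x00{v}" for k, v in sorted(labels.items()))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def value_raw(value: float) -> int:
    """IEEE 754 bits of the float, reinterpreted as a signed 64-bit integer."""
    return struct.unpack("<q", struct.pack("<d", value))[0]


def sample_to_row(name: str, labels: Dict[str, str], value: float, ts_ms: int) -> dict:
    """Build one row for the metrics table from a single sample."""
    all_labels = {"__name__": name, **labels}
    return {
        "timestamp": ts_ms,
        "labels_hash": labels_hash(all_labels),
        "labels": all_labels,
        "value": value,
        "valueRaw": value_raw(value),
        # Computed by CrateDB in the real table; shown here for illustration only.
        "day__generated": day_partition(ts_ms),
    }


if __name__ == "__main__":
    ts = int(datetime(2019, 11, 20, 13, 45, tzinfo=timezone.utc).timestamp() * 1000)
    row = sample_to_row("grok_example_lines_total", {"user": "alice"}, 2.0, ts)
    print(datetime.fromtimestamp(row["day__generated"] / 1000, tz=timezone.utc))
    # 2019-11-20 00:00:00+00:00
```

All samples from the same UTC day share a `day__generated` value and therefore one partition. The primary key (`timestamp`, `labels_hash`, `day__generated`) identifies a single series point.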

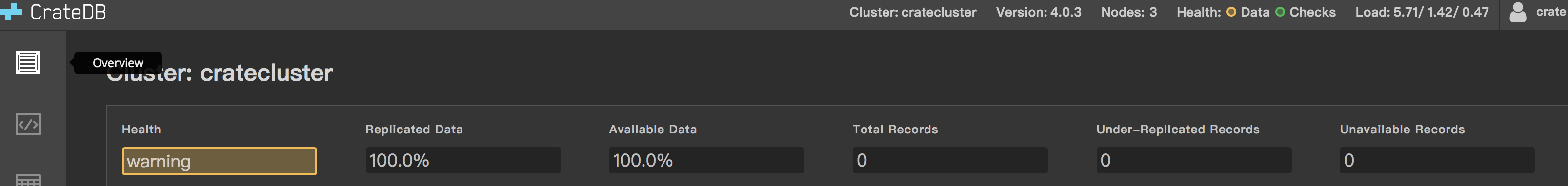

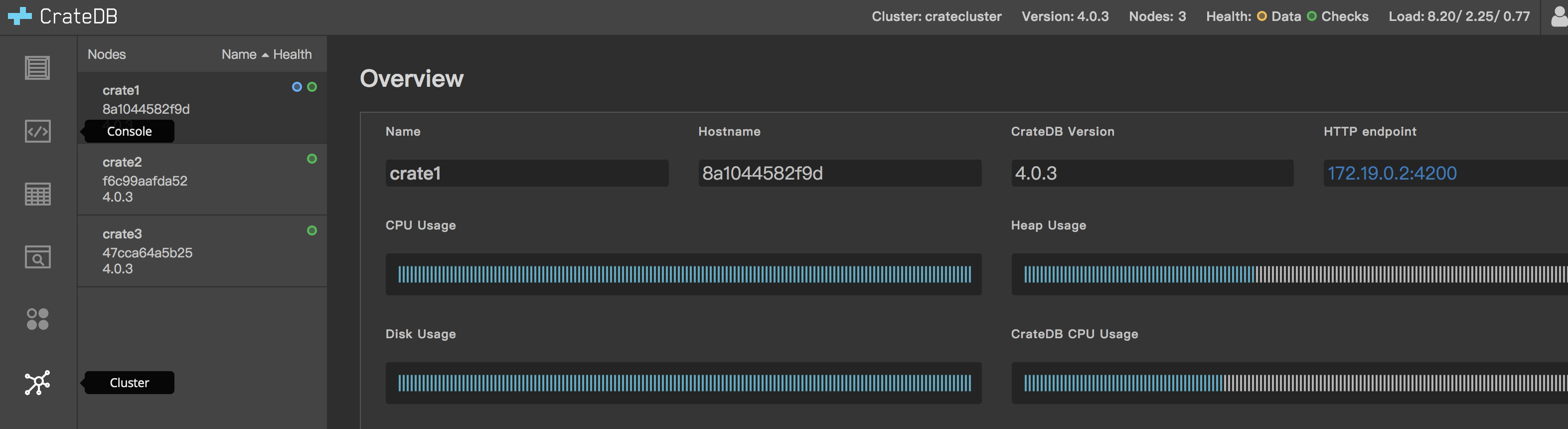

Startup and testing

- Start the cluster

```shell
docker-compose up -d
```

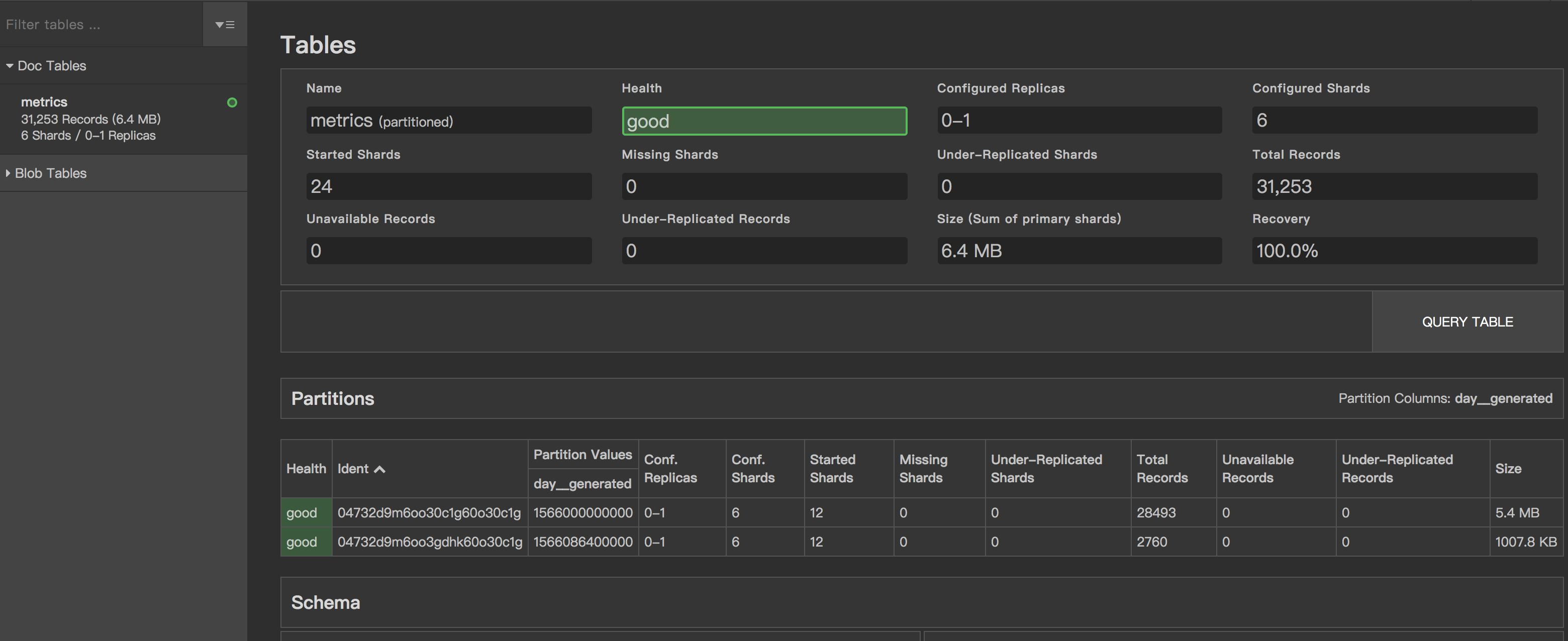

- Results

  - Creating the table through the admin UI
  - Write statistics
  - Query results
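Besides the admin UI, the stored data can also be checked through CrateDB's HTTP endpoint: `POST /_sql` with a JSON body containing `stmt`. Below is a minimal sketch using only the standard library. It assumes crate1's HTTP port is published on `localhost:4200`, as in the docker-compose file, and that the metric name is stored under `labels['__name__']`. `build_sql_request` and `run_sql` are hypothetical helper names.

```python
import json
import urllib.request

# crate1's HTTP port as published in docker-compose (assumption: run on the Docker host).
CRATE_URL = "http://localhost:4200/_sql"


def build_sql_request(stmt, args=None, url=CRATE_URL):
    """Build a POST request for CrateDB's /_sql endpoint."""
    body = {"stmt": stmt}
    if args:
        body["args"] = args  # positional parameters for '?' placeholders
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_sql(stmt, args=None, url=CRATE_URL, timeout=5):
    """Send the statement and return the decoded JSON response (cols, rows, ...)."""
    with urllib.request.urlopen(build_sql_request(stmt, args, url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Samples stored per metric name, assuming __name__ is kept inside the labels object.
COUNT_BY_METRIC = (
    "SELECT labels['__name__'] AS metric, count(*) AS samples "
    "FROM metrics GROUP BY labels['__name__'] ORDER BY samples DESC LIMIT 10"
)

if __name__ == "__main__":
    result = run_sql(COUNT_BY_METRIC)
    for metric, samples in result["rows"]:
        print(f"{metric}: {samples}")
```

If remote_write is working, `grok_example_lines_total` should appear in the output, with a sample count that grows over time.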

Notes

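Using crate adapter for persistent storage of metrics data is a good option. The demo above does not include the Grafana and Prometheus integration. To complete the setup, you can add it yourself based on the full configuration in the GitHub repository listed in the references. Also, because CrateDB exposes its data over the PostgreSQL wire protocol, Grafana can query and visualize the data directly.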

References

https://github.com/crate/crate_adapter

https://github.com/crate/crate

https://crate.io/docs/crate/reference/en/latest/config/cluster.html

https://github.com/fstab/grok_exporter

https://github.com/rongfengliang/prometheus-cratedb-cluster-docker-compose