I. Background

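Driven by rapid advances in sensor technology, hyperspectral (HS) remote sensing (RS) imaging provides a wealth of spatial and spectral information for observing and analyzing the Earth's surface from a distance with data acquisition platforms such as aircraft, spacecraft, and satellites. Recent advances, and even revolutions, in HS RS technology offer the opportunity to realize the full potential of various applications, while posing new challenges in efficiently processing and analyzing the massive amount of acquired HS data.

Filtering multidimensional images such as color images, color videos, multispectral images, and magnetic resonance images is challenging in terms of both effectiveness and efficiency. By exploiting the nonlocal self-similarity (NLSS) of images and sparse representation in the transform domain, methods based on block-matching and 3D filtering (BM3D) show powerful denoising performance. Recently, many new methods with different regularization terms, transforms, and advanced deep neural network (DNN) architectures have been proposed to improve denoising quality. In this paper, more than 60 methods are extensively compared on synthetic and real-world datasets. A new color image and video dataset is introduced for benchmarking, and the evaluation covers four perspectives: quantitative metrics, visual quality, human ratings, and computational cost. The comprehensive experiments demonstrate (i) the effectiveness and efficiency of the BM3D family for various denoising tasks, (ii) that simple matrix-based algorithms can produce results similar to those of their tensor counterparts, and (iii) that several DNN models trained with synthetic Gaussian noise show state-of-the-art performance on real-world color image and video datasets.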

Hyperspectral denoising

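Observed HS images are often corrupted by mixed noise, including Gaussian noise, salt-and-pepper noise, and dead-line noise. Several noise types of HS images are shown in Fig. 5. The rich spatial and spectral information of HS images can be extracted through different prior constraints such as the LR property, sparse representation, nonlocal similarity, and total variation. Different LR tensor decomposition models have been introduced for HS denoising, and one or two other prior constraints are then combined with these tensor decomposition models.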

Schematic of HS image restoration

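1) LR tensor decomposition:

LR tensor decomposition methods fall into two categories: 1) factorization-based approaches and 2) rank-minimization-based approaches. The former require predefined rank values and update the decomposition factors. The latter directly minimize the tensor rank and update the LR tensor.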

(1) Factorization-based approaches

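Two typical representatives are used in the HS image denoising literature, namely Tucker decomposition and CP decomposition. Considering Gaussian noise, a LR tensor approximation (LRTA) model was proposed for the HS image denoising task.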
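As a sketch of what such a model looks like, assume the additive observation $\mathcal{Y} = \mathcal{X} + \mathcal{N}$, where $\mathcal{Y}$ is the noisy HS tensor, $\mathcal{X}$ the clean tensor, and $\mathcal{N}$ Gaussian noise. These symbols, and the factor names $\mathbf{A}$, $\mathbf{B}$, $\mathbf{C}$, are notation assumed here for illustration. A Tucker-constrained least-squares problem can then be written as

$$\min_{\mathcal{X}} \; \|\mathcal{Y} - \mathcal{X}\|_F^2 \quad \text{s.t.} \quad \mathcal{X} = \mathcal{G} \times_1 \mathbf{A} \times_2 \mathbf{B} \times_3 \mathbf{C},$$

where the size $r_1 \times r_2 \times r_3$ of the core tensor $\mathcal{G}$ is the predefined Tucker rank.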

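However, Tucker-decomposition-based algorithms require the ranks along all modes to be predefined manually, which is intractable in practice. In this model, the Tucker decomposition constraint can easily be replaced by other tensor decompositions such as CP decomposition. A parallel factor analysis (PARAFAC) decomposition algorithm was used in this way, still assuming that HS images are corrupted by white Gaussian noise. An HS image denoising model based on rank-1 tensor decomposition was also proposed, which is able to extract signal-dominant features. However, the CP rank is defined as the minimum number of rank-1 factors, and computing it is expensive.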

(2) Rank minimization approaches

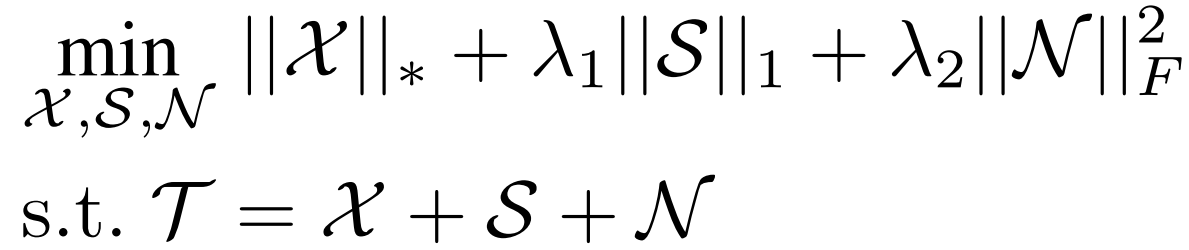

Directly minimizing the tensor rank can be formulated as follows:
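A generic form of this problem, assuming the observation model $\mathcal{Y} = \mathcal{X} + \mathcal{S} + \mathcal{N}$ with sparse noise $\mathcal{S}$ and Gaussian noise $\mathcal{N}$ (notation assumed here for illustration), is

$$\min_{\mathcal{X}} \; \operatorname{rank}(\mathcal{X}) \quad \text{s.t.} \quad \mathcal{Y} = \mathcal{X} + \mathcal{S} + \mathcal{N},$$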

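where rank(X) denotes the rank of the HS tensor X under one of several rank definitions, such as the Tucker rank, CP rank, TT rank, and tubal rank. Because these rank minimization problems are nonconvex, they are NP-hard to solve. The nuclear norm is generally used as a convex surrogate for the nonconvex rank function. A TNN associated with the tubal rank was proposed to characterize the 3-D structural complexity of multilinear data. Based on the TNN, a LR tensor recovery (LRTR) model was proposed to remove Gaussian noise and sparse noise.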
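One common way to write a TNN-based recovery model of this kind is shown below. It assumes the observation $\mathcal{Y} = \mathcal{X} + \mathcal{S} + \mathcal{N}$, and the trade-off weights $\lambda_1$, $\lambda_2$ are hypothetical names introduced here:

$$\min_{\mathcal{X},\,\mathcal{S},\,\mathcal{N}} \; \|\mathcal{X}\|_{*} + \lambda_1 \|\mathcal{S}\|_{1} + \lambda_2 \|\mathcal{N}\|_F^2 \quad \text{s.t.} \quad \mathcal{Y} = \mathcal{X} + \mathcal{S} + \mathcal{N}.$$

Here the TNN $\|\cdot\|_{*}$ stands in for the tensor rank, the $\ell_1$ norm promotes sparsity of $\mathcal{S}$, and the Frobenius term penalizes the Gaussian component.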

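[1] applied a nonconvex logarithmic surrogate function to the TNN for tensor completion and tensor robust principal component analysis (TRPCA) tasks. [2] explored the LR property of tensors along three directions and proposed two tensor models, a three-directional TNN (3DTNN) and a three-directional log-based TNN (3DLogTNN), as convex and nonconvex relaxations of the rank, respectively. Although these pure LR tensor decomposition methods exploit the LR prior of HS images, they can hardly suppress mixed noise effectively because they lack other useful information.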

[1] J. Xue, Y. Zhao, W. Liao, and J. C.-W. Chan, “Nonconvex tensor rank minimization and its applications to tensor recovery,” Inf. Sci., vol. 503, pp. 109–128, 2019.

[2] Y.-B. Zheng, T.-Z. Huang, X.-L. Zhao, T.-X. Jiang, T.-H. Ma, and T.-Y. Ji, “Mixed noise removal in hyperspectral image via low-fibered-rank regularization,” IEEE Trans. Geosci. Remote Sens., vol. 58, no. 1, pp. 734–749, 2020.

2) LR tensor decomposition regularized by other priors:

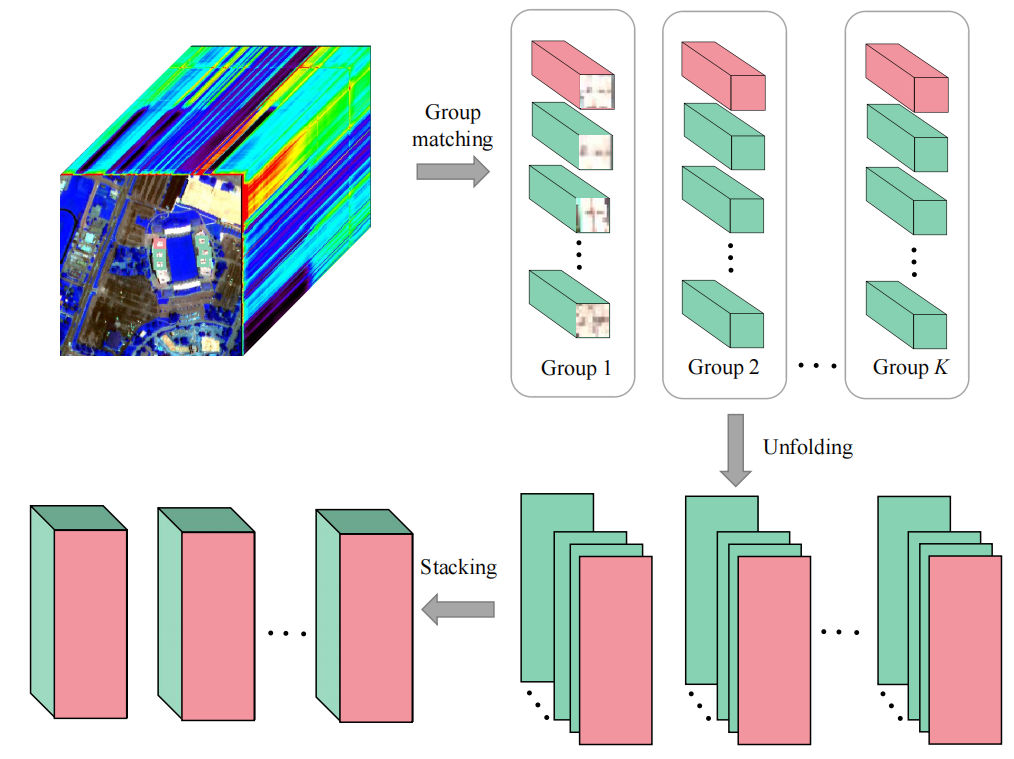

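Various types of priors are combined with LR tensor decomposition models to improve the model solution, including nonlocal similarity, spatial and spectral smoothness, spatial sparsity, and subspace learning.

Flowchart of nonlocal LR tensor-based methods

Spatial smoothness properties of the Washington DC image: (a) original band, (b) gradient image along the spatial horizontal direction, (c) gradient image along the spatial vertical direction, (d) gradient image along the spectral direction.

Schematic of the subspace representation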
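The three gradient images described in this caption are finite differences of the HS cube along its three modes. The sketch below assumes a cube stored as (rows, columns, bands), so axis 0 is the vertical direction, axis 1 the horizontal direction, and axis 2 the spectral direction. The function names are hypothetical, and the anisotropic total-variation sum is one common smoothness measure, not necessarily the one used in the surveyed methods.

```python
import numpy as np

def directional_gradients(cube):
    """Forward differences of an (h, v, z) cube along each mode."""
    d_horizontal = np.diff(cube, axis=1)  # difference between neighbouring columns
    d_vertical = np.diff(cube, axis=0)    # difference between neighbouring rows
    d_spectral = np.diff(cube, axis=2)    # difference between neighbouring bands
    return d_horizontal, d_vertical, d_spectral

def anisotropic_tv(cube):
    """Sum of absolute differences over all three directions."""
    return sum(np.abs(g).sum() for g in directional_gradients(cube))

# A cube that is constant in space and a linear ramp along the spectrum.
ramp = np.zeros((2, 3, 4)) + np.arange(4.0)
tv_ramp = anisotropic_tv(ramp)
tv_flat = anisotropic_tv(np.ones((2, 3, 4)))
```

A smooth cube yields sparse gradient images, which is why smoothness priors are usually imposed as a penalty on these differences.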

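II. Tensor Decomposition

Since the 1980s, HS RS imaging has gradually become one of the most important achievements in the RS field. Unlike the early single-band panchromatic images, three-band RGB color images, and multi-band multispectral (MS) images, HS images contain hundreds of narrow, contiguous spectral bands, driven by the development of spectral imaging devices and improvements in spectral resolution. The wide HS spectrum can be scanned from the ultraviolet, through the visible range, and finally into the near-infrared or short-wave infrared. Each pixel of an HS image corresponds to a spectral signature that reflects the electromagnetic properties of the observed object. This allows underlying objects to be identified and distinguished more accurately, especially objects that have similar properties in single-band or multi-band RS images (such as panchromatic, RGB, and MS). Consequently, the rich spatial and spectral information of HS images has greatly improved the perceptual capability of Earth observation, giving HS RS technology a vital role in fields such as precision agriculture (e.g., monitoring crop growth and health), space exploration (e.g., searching for signs of life on other planets), pollution monitoring (e.g., detecting ocean oil spills), and military applications (e.g., identifying military targets).

Over the past decade, great effort has gone into processing and analyzing HS RS data after acquisition. Early HS data processing considered either the grayscale image of each band or the spectral signature of each pixel. On the one hand, each HS spectral band is treated as a grayscale image, and traditional 2-D image processing algorithms are applied directly band by band [3], [4]. On the other hand, spectral signatures with similar visible properties (e.g., color, texture) can be used to identify materials. In addition, a wide range of low-rank (LR) matrix-based methods have been adopted to exploit the high correlation among spectral channels, under the assumption that the unfolded HS matrix is low-rank [5]-[7]. Given an HS image of size h×v×z, recovering the unfolded HS matrix (hv×z) usually requires a singular value decomposition (SVD), which incurs a high computational cost. Moreover, these traditional LR models reshape each spectral band into a vector, which destroys the intrinsic spatial-spectral integrity of HS images. Compared with the matrix form, tensor decomposition achieves excellent performance with a tolerable increase in computational complexity. To narrow the gap between HS tasks and advanced data processing techniques, it is necessary to determine a proper interpretation of HS images and an appropriate choice of intelligent models. When an HS image is modeled as a third-order tensor, the 2-D spatial information and the 1-D spectral information are considered simultaneously.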
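The matrix-based LR approach described above can be sketched in a few lines: unfold the h×v×z cube into an hv×z matrix, truncate its SVD, and fold the result back. This is a minimal illustration under stated assumptions, not the method of [5]-[7]. The function name, the synthetic two-material cube, and the choice `rank=2` are all hypothetical.

```python
import numpy as np

def lowrank_matrix_denoise(hs_cube, rank):
    """Unfold an (h, v, z) cube to (h*v, z), keep the top `rank` singular triplets, fold back."""
    h, v, z = hs_cube.shape
    unfolded = hs_cube.reshape(h * v, z)  # each column is one vectorized band
    u, s, vt = np.linalg.svd(unfolded, full_matrices=False)
    approx = (u[:, :rank] * s[:rank]) @ vt[:rank, :]
    return approx.reshape(h, v, z)

# Synthetic cube: every pixel is a mixture of two hypothetical material spectra,
# so the unfolded clean matrix has rank 2.
rng = np.random.default_rng(0)
abundances = rng.random((8, 8, 2))
spectra = rng.random((2, 20))
clean = abundances @ spectra              # shape (8, 8, 20)
noisy = clean + 0.05 * rng.standard_normal(clean.shape)
denoised = lowrank_matrix_denoise(noisy, rank=2)
```

Note that the reshape treats every band as a plain vector, so the truncated SVD sees no 2-D spatial structure. That is the loss of spatial-spectral integrity that motivates the tensor models.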

[3] M. Elad and M. Aharon, “Image denoising via sparse and redundant representations over learned dictionaries,” IEEE Trans. Image Process., vol. 15, no. 12, pp. 3736–3745, 2006.

[4] L. I. Rudin, S. Osher, and E. Fatemi, “Nonlinear total variation based noise removal algorithms,” Physica D: Nonlinear Phenom., vol. 60, no. 1, pp. 259–268, 1992.

[5] H. Zhang, W. He, L. Zhang, H. Shen, and Q. Yuan, “Hyperspectral image restoration using low-rank matrix recovery,” IEEE Trans. Geosci. Remote Sens., vol. 52, no. 8, pp. 4729–4743, Aug. 2014.

[6] J. Peng, W. Sun, H.-C. Li, W. Li, X. Meng, C. Ge, and Q. Du, “Low-rank and sparse representation for hyperspectral image processing: A review,” IEEE Geosci. Remote Sens. Mag., pp. 2–35, 2021.

[7] E. J. Candes and T. Tao, “The power of convex relaxation: Near-optimal matrix completion,” IEEE Trans. Inf. Theory, vol. 56, no. 5, pp. 2053–2080, 2010.

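Tensor decomposition originated from Hitchcock's work in 1927 [8] and touches numerous disciplines, but it has flourished over the last 10 years in fields such as signal processing, machine learning, and data mining and fusion [9]-[11]. Early reviews focused on two common decompositions: Tucker decomposition and CANDECOMP/PARAFAC (CP) decomposition. In 2008, these two decompositions were first introduced into HS restoration tasks to remove Gaussian noise [12], [13]. Mathematical models based on tensor decomposition avoid converting the original dimensions and also, to some degree, improve the interpretability and completeness of problem modeling. Different types of prior knowledge in HS RS (e.g., nonlocal similarity in the spatial domain, spatial and spectral smoothness) are considered and incorporated into the tensor decomposition framework.

[8] F. L. Hitchcock, “The expression of a tensor or a polyadic as a sum of products,” J. Math. Phys., vol. 6, no. 1-4, pp. 164–189, 1927.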

[9] T. G. Kolda and B. W. Bader, “Tensor decompositions and applications,” SIAM Rev., vol. 51, no. 3, pp. 455–500, 2009.

[10] E. E. Papalexakis, C. Faloutsos, and N. D. Sidiropoulos, “Tensors for data mining and data fusion: Models, applications, and scalable algorithms,” ACM Trans. Intell. Syst. Technol., vol. 8, no. 2, oct 2016.

[11] N. D. Sidiropoulos, L. De Lathauwer, X. Fu, K. Huang, E. E. Papalexakis, and C. Faloutsos, “Tensor decomposition for signal processing and machine learning,” IEEE Trans. Signal Process., vol. 65, no. 13, pp. 3551–3582, 2017.

[12] N. Renard, S. Bourennane, and J. Blanc-Talon, “Denoising and dimensionality reduction using multilinear tools for hyperspectral images,” IEEE Geosci. Remote Sens. Lett., vol. 5, no. 2, pp. 138–142, 2008.

[13] X. Liu, S. Bourennane, and C. Fossati, “Denoising of hyperspectral images using the parafac model and statistical performance analysis,” IEEE Trans. Geosci. Remote Sens., vol. 50, no. 10, pp. 3717–3724, Oct. 2012.

Taxonomy of the main tensor-decomposition-based methods for HS data processing

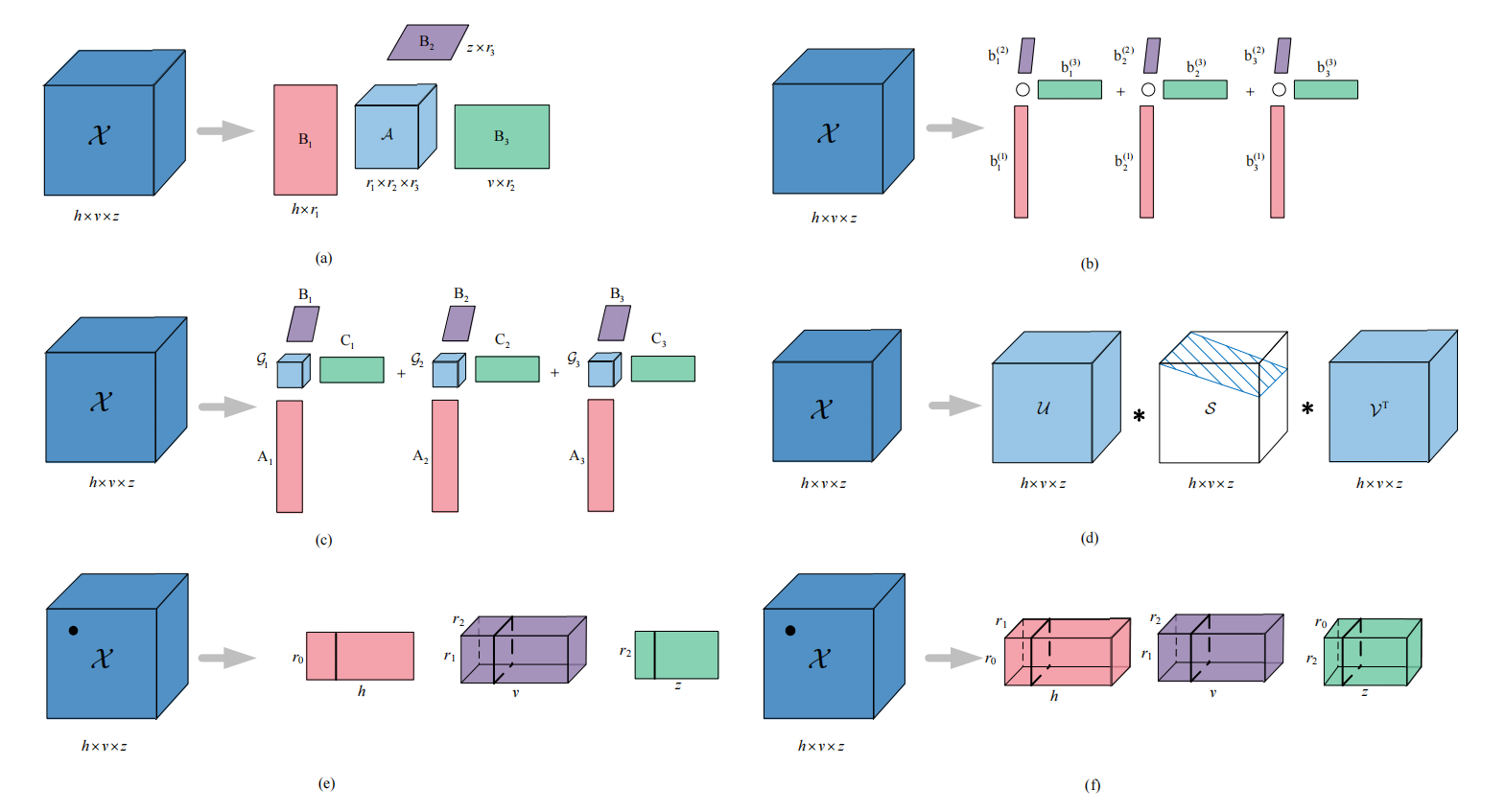

Six tensor decompositions of a third-order tensor: (a) Tucker decomposition, (b) CP decomposition, (c) BT decomposition, (d) t-SVD, (e) TT decomposition, (f) TR decomposition

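Tucker decomposition [14]

The Tucker decomposition of an N-order tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$ is defined as

$$\mathcal{X} = \mathcal{G} \times_1 \mathbf{U}_1 \times_2 \mathbf{U}_2 \cdots \times_N \mathbf{U}_N,$$

where $\mathcal{G} \in \mathbb{R}^{R_1 \times R_2 \times \cdots \times R_N}$ denotes the core tensor, $\mathbf{U}_n \in \mathbb{R}^{I_n \times R_n}$ denote the factor matrices, and the Tucker rank is given by $(R_1, R_2, \ldots, R_N)$.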

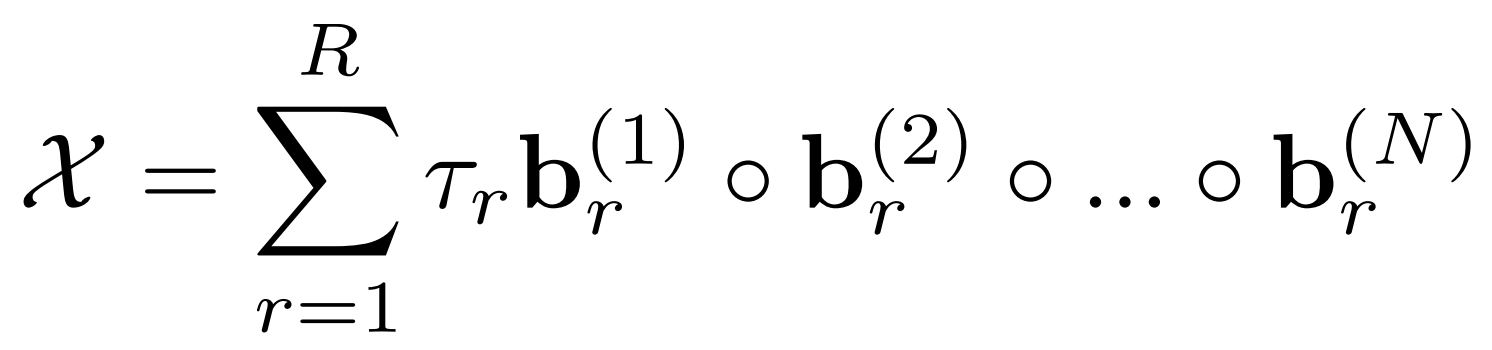
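A Tucker model can be computed in several ways. The sketch below uses the truncated higher-order SVD (HOSVD), which takes the leading left singular vectors of each mode unfolding as factor matrices and projects the tensor onto them to obtain the core. HOSVD is a choice made here for illustration, not necessarily the algorithm behind the figure, and all function names are hypothetical.

```python
import numpy as np

def unfold(t, mode):
    """Mode-n unfolding: move `mode` to the front and flatten the remaining axes."""
    return np.moveaxis(t, mode, 0).reshape(t.shape[mode], -1)

def mode_product(t, m, mode):
    """Mode-n product t x_n m, where m has shape (J, I_n)."""
    out = np.tensordot(m, t, axes=(1, mode))  # the new axis of size J comes first
    return np.moveaxis(out, 0, mode)

def truncated_hosvd(t, ranks):
    """Return (core, factors) with factors[n] of shape (I_n, R_n)."""
    factors = []
    for mode, r in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(t, mode), full_matrices=False)
        factors.append(u[:, :r])
    core = t
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)  # project onto each factor subspace
    return core, factors

def tucker_to_tensor(core, factors):
    t = core
    for mode, u in enumerate(factors):
        t = mode_product(t, u, mode)
    return t

# Build a tensor with exact Tucker rank (2, 3, 2) and recover it.
rng = np.random.default_rng(0)
g = rng.standard_normal((2, 3, 2))
us = [rng.standard_normal((6, 2)), rng.standard_normal((7, 3)), rng.standard_normal((5, 2))]
x = tucker_to_tensor(g, us)
core, factors = truncated_hosvd(x, (2, 3, 2))
x_hat = tucker_to_tensor(core, factors)
```

For a tensor whose multilinear rank matches `ranks`, the reconstruction is exact up to rounding. For noisy data, choosing smaller ranks gives a low-rank approximation, which is the denoising use case.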

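CP decomposition [14]

The CP decomposition of an N-order tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$ is defined as

$$\mathcal{X} = \sum_{r=1}^{R} \lambda_r \, \mathbf{a}_r^{(1)} \circ \mathbf{a}_r^{(2)} \circ \cdots \circ \mathbf{a}_r^{(N)},$$

where $\lambda_r$ are nonzero weight parameters and $\mathbf{a}_r^{(1)} \circ \mathbf{a}_r^{(2)} \circ \cdots \circ \mathbf{a}_r^{(N)}$ denotes a rank-one tensor with $\mathbf{a}_r^{(n)} \in \mathbb{R}^{I_n}$. The CP rank, denoted by $R$, is the number of rank-one tensors in the sum.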
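To make the CP form concrete, the following sketch rebuilds a tensor from its weights and factor vectors as a weighted sum of outer products. The function name and the storage convention, in which `factors[n]` has shape (I_n, R) with one column per rank-one term, are assumptions made for this example.

```python
import numpy as np

def cp_to_tensor(weights, factors):
    """Weighted sum of R rank-one tensors; factors[n] has shape (I_n, R)."""
    shape = tuple(f.shape[0] for f in factors)
    x = np.zeros(shape)
    for r in range(weights.shape[0]):
        component = weights[r]
        for f in factors:
            # the outer product appends one tensor mode per factor vector
            component = np.multiply.outer(component, f[:, r])
        x += component
    return x

rng = np.random.default_rng(0)
weights = np.array([2.0, -1.0, 0.5])
factors = [rng.standard_normal((4, 3)), rng.standard_normal((5, 3)), rng.standard_normal((6, 3))]
x = cp_to_tensor(weights, factors)
```

Because each term is rank one, every mode unfolding of `x` has matrix rank at most R.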

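BT decomposition [15]

The BT decomposition of a third-order tensor $\mathcal{X} \in \mathbb{R}^{h \times v \times z}$ is defined as

$$\mathcal{X} = \sum_{r=1}^{R} \mathcal{G}_r \times_1 \mathbf{A}_r \times_2 \mathbf{B}_r \times_3 \mathbf{C}_r,$$

where $\mathcal{G}_r \in \mathbb{R}^{L_h \times L_v \times L_z}$, $\mathbf{A}_r \in \mathbb{R}^{h \times L_h}$, $\mathbf{B}_r \in \mathbb{R}^{v \times L_v}$, and $\mathbf{C}_r \in \mathbb{R}^{z \times L_z}$. Each of the R component tensors can be expressed by a rank-(Lh, Lv, Lz) Tucker decomposition. BT decomposition can be viewed as a combination of Tucker and CP decompositions.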

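Tensor Nuclear Norm (TNN) [16]

Let $\mathcal{X} = \mathcal{U} * \mathcal{S} * \mathcal{V}^{T}$ be the t-SVD of $\mathcal{X} \in \mathbb{R}^{h \times v \times z}$. The TNN is the sum of the singular values of $\mathcal{X}$. It can also be expressed as the sum of the nuclear norms of all frontal slices of $\bar{\mathcal{X}}$, the tensor obtained by applying the FFT along the third mode of $\mathcal{X}$:

$$\|\mathcal{X}\|_{*} = \sum_{k=1}^{z} \big\|\bar{\mathcal{X}}^{(k)}\big\|_{*}.$$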
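The frontal-slice form of the TNN is straightforward to compute: transform the tensor along its third mode with an FFT, then add up the singular values of every frontal slice. This is a minimal sketch with a hypothetical function name. The optional `normalize` flag divides by the number of slices, since some formulations average rather than sum. Which convention a given paper uses should be checked against its definition.

```python
import numpy as np

def tensor_nuclear_norm(x, normalize=False):
    """Sum of the matrix nuclear norms of the Fourier-domain frontal slices of x."""
    x_f = np.fft.fft(x, axis=2)
    total = 0.0
    for k in range(x.shape[2]):
        # singular values only; no singular vectors are needed for the norm
        total += np.linalg.svd(x_f[:, :, k], compute_uv=False).sum()
    return total / x.shape[2] if normalize else total

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3, 5))
tnn = tensor_nuclear_norm(x)
```

For a tensor with a single frontal slice the FFT is the identity, so the TNN reduces to the ordinary matrix nuclear norm.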

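t-SVD [16]

A tensor $\mathcal{X} \in \mathbb{R}^{h \times v \times z}$ can be factorized as $\mathcal{X} = \mathcal{U} * \mathcal{S} * \mathcal{V}^{T}$, where $\mathcal{U} \in \mathbb{R}^{h \times h \times z}$ and $\mathcal{V} \in \mathbb{R}^{v \times v \times z}$ are orthogonal tensors, $\mathcal{S} \in \mathbb{R}^{h \times v \times z}$ is an f-diagonal tensor (each frontal slice is diagonal), and $*$ denotes the t-product.

t-SVD algorithm: the factorization is computed in the Fourier domain, by applying an FFT along the third mode, taking a matrix SVD of each frontal slice, and transforming the factors back with an inverse FFT.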
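The t-SVD procedure can be sketched as follows: take the FFT along the third mode, compute a matrix SVD of each frontal slice, and apply the inverse FFT to the factors. The sketch assumes a real-valued input and fills the second half of the Fourier slices by conjugate symmetry so that the factors come out real. All function names are hypothetical.

```python
import numpy as np

def t_product(a, b):
    """t-product: slice-wise matrix product in the Fourier domain."""
    c_f = np.einsum('ijk,jlk->ilk', np.fft.fft(a, axis=2), np.fft.fft(b, axis=2))
    return np.fft.ifft(c_f, axis=2).real

def t_transpose(a):
    """Transpose every frontal slice and reverse the order of slices 2..z."""
    at = np.transpose(a, (1, 0, 2))
    return np.concatenate([at[:, :, :1], at[:, :, :0:-1]], axis=2)

def t_svd(x):
    h, v, z = x.shape
    x_f = np.fft.fft(x, axis=2)
    u_f = np.zeros((h, h, z), dtype=complex)
    s_f = np.zeros((h, v, z), dtype=complex)
    v_f = np.zeros((v, v, z), dtype=complex)
    half = z // 2 + 1
    for k in range(half):
        slice_k = x_f[:, :, k]
        if k == 0 or 2 * k == z:
            slice_k = slice_k.real  # these Fourier slices are real for real input
        u, s, vh = np.linalg.svd(slice_k)
        u_f[:, :, k] = u
        s_f[:len(s), :len(s), k] = np.diag(s)
        v_f[:, :, k] = vh.conj().T
    for k in range(half, z):
        # conjugate symmetry keeps the inverse FFT real-valued
        u_f[:, :, k] = u_f[:, :, z - k].conj()
        s_f[:, :, k] = s_f[:, :, z - k]
        v_f[:, :, k] = v_f[:, :, z - k].conj()
    return tuple(np.fft.ifft(t, axis=2).real for t in (u_f, s_f, v_f))

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3, 5))
u, s, v = t_svd(x)
x_hat = t_product(t_product(u, s), t_transpose(v))
```

Truncating the slice-wise singular values before the inverse FFT gives a low-tubal-rank approximation, which is the step that TNN-based denoisers build on.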

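TT decomposition [17]

The TT decomposition of an N-order tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$ is represented by cores $\mathcal{G}^{(n)} \in \mathbb{R}^{R_{n-1} \times I_n \times R_n}$, $n = 1, 2, \ldots, N$, with $R_0 = R_N = 1$. The TT rank is defined as $(R_1, R_2, \ldots, R_{N-1})$, and each entry of the tensor is expressed as

$$\mathcal{X}(i_1, i_2, \ldots, i_N) = \mathcal{G}^{(1)}(:, i_1, :)\, \mathcal{G}^{(2)}(:, i_2, :) \cdots \mathcal{G}^{(N)}(:, i_N, :).$$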
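A TT representation can be obtained by a sequence of SVDs (the TT-SVD scheme), and any entry is then a product of small matrices, one slice per core. The sketch below is a simplified version with a single hypothetical `max_rank` cap instead of an error-based truncation. The function names are assumptions for illustration.

```python
import numpy as np

def tt_svd(x, max_rank):
    """Sequential-SVD TT decomposition; cores[n] has shape (R_{n-1}, I_n, R_n)."""
    shape = x.shape
    cores, r_prev, c = [], 1, x
    for n in range(len(shape) - 1):
        c = c.reshape(r_prev * shape[n], -1)
        u, s, vt = np.linalg.svd(c, full_matrices=False)
        r = min(max_rank, len(s))
        cores.append(u[:, :r].reshape(r_prev, shape[n], r))
        c = s[:r, None] * vt[:r, :]  # carry the remainder on to the next core
        r_prev = r
    cores.append(c.reshape(r_prev, shape[-1], 1))
    return cores

def tt_entry(cores, index):
    """One tensor entry as a product of core slices."""
    out = np.ones((1, 1))
    for core, i in zip(cores, index):
        out = out @ core[:, i, :]
    return out[0, 0]

def tt_to_tensor(cores):
    full = cores[0]
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=(-1, 0))
    return full[0, ..., 0]  # drop the boundary ranks R_0 = R_N = 1

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4, 5))
cores = tt_svd(x, max_rank=20)  # cap large enough that nothing is truncated
entry = tt_entry(cores, (1, 2, 3))
```

Lowering `max_rank` trades accuracy for storage, because each core holds only R_{n-1}·I_n·R_n numbers.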

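TR decomposition [18]

The purpose of TR decomposition is to represent a high-order tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_N}$ by multilinear products of a sequence of third-order tensors in circular form. These third-order tensors are called TR factors, $\mathcal{G}^{(n)} \in \mathbb{R}^{R_n \times I_n \times R_{n+1}}$, $n = 1, 2, \ldots, N$, with $R_{N+1} = R_1$. In this case, the element-wise relationship between the TR decomposition and its factors can be written as

$$\mathcal{X}(i_1, i_2, \ldots, i_N) = \operatorname{Tr}\!\left( \mathcal{G}^{(1)}(:, i_1, :)\, \mathcal{G}^{(2)}(:, i_2, :) \cdots \mathcal{G}^{(N)}(:, i_N, :) \right).$$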
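The element-wise TR relationship is a trace of a product of factor slices. It can be sketched as below, with hypothetical function names and randomly generated factors whose ranks close the ring (the last rank of the final factor equals the first rank of the first one).

```python
import numpy as np

def tr_entry(factors, index):
    """Entry X(i_1, ..., i_N) as the trace of a product of factor slices."""
    prod = np.eye(factors[0].shape[0])
    for g, i in zip(factors, index):
        prod = prod @ g[:, i, :]
    return np.trace(prod)

def tr_to_tensor(factors):
    full = factors[0]
    for g in factors[1:]:
        full = np.tensordot(full, g, axes=(-1, 0))
    # full has shape (R_1, I_1, ..., I_N, R_1); the trace closes the ring
    return np.trace(full, axis1=0, axis2=full.ndim - 1)

rng = np.random.default_rng(0)
# TR ranks (2, 4, 3): factor shapes are (R_n, I_n, R_{n+1}) with R_4 = R_1 = 2
factors = [rng.standard_normal((2, 3, 4)),
           rng.standard_normal((4, 5, 3)),
           rng.standard_normal((3, 6, 2))]
x = tr_to_tensor(factors)
entry = tr_entry(factors, (1, 2, 3))
```

Unlike TT, the boundary ranks are not fixed to 1, and the trace makes the representation invariant to a circular shift of the factors together with the tensor modes.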

[14] T. G. Kolda and B. W. Bader, “Tensor decompositions and applications,” SIAM Rev., vol. 51, no. 3, pp. 455–500, 2009.

[15] L. De Lathauwer, “Decompositions of a higher-order tensor in block terms—part ii: Definitions and uniqueness,” SIAM J. Matrix Anal. Appl., vol. 30, no. 3, pp. 1033–1066, 2008.

[16] C. Lu, J. Feng, Y. Chen, W. Liu, Z. Lin, and S. Yan, “Tensor robust principal component analysis: Exact recovery of corrupted low-rank tensors via convex optimization,” in Proc. CVPR, 2016, pp. 5249–5257.

[17] I. V. Oseledets, “Tensor-train decomposition,” SIAM J. Sci. Comput., vol. 33, no. 5, pp. 2295–2317, 2011.

[18] Q. Zhao, G. Zhou, S. Xie, L. Zhang, and A. Cichocki, “Tensor ring decomposition,” arXiv preprint arXiv:1606.05535, 2016.

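HS technology accomplishes the acquisition, utilization, and analysis of nearly continuous spectral bands, has permeated a broad range of practical applications, and is attracting growing attention from researchers worldwide. In HS data processing, the acquired data are often large-scale and high-order. The ever-growing volume of 3-D HS data places higher demands on processing algorithms to replace 2-D matrix-based methods. Tensor decomposition plays a crucial role in both problem modeling and methodology, making it possible to exploit the spectral information of each complete 1-D spectral signature and the spatial structure of each complete 2-D spatial image.

III. DNN Methods

Recent developments in image denoising have mainly been brought about by the application of deep neural networks (DNNs). DNN methods generally adopt a supervised training strategy, guided by external priors and datasets, to minimize the distance L between predicted and clean images: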

$$\min_{\theta} \; \sum_{i} L\big(F_{\theta}(Y_i),\, X_i\big) + \Psi(\cdot)$$

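where Xi and Yi are clean/noisy image (patch) pairs, Fθ with parameters θ is a nonlinear function that maps noisy patches to predicted clean patches, and Ψ(·) denotes some regularizer [19], [20]. Early methods [21], [22] used a known shift blur function and weighting factors, and [23] showed that a plain multilayer perceptron (MLP) network can compete with BM3D at certain noise levels. To extract latent features and exploit the NLSS prior, a more commonly used model is the convolutional neural network (CNN) [24], which suits multidimensional data processing [25]-[28] thanks to its flexibly sized convolution filters and local receptive fields. Convolution operations were first applied to image denoising in [29]; the figure below illustrates a simple CNN denoising framework with three convolutional layers.

Illustration of a simple CNN denoising framework with three convolutional layers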
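A three-convolution-layer denoiser of this kind can be sketched in plain NumPy as a forward pass plus a mean-squared-error distance to the clean image. This is an illustrative sketch only: the layer widths, the 3×3 kernels, the zero padding, and the random untrained weights are assumptions made here, and a real model would be trained with a deep learning framework.

```python
import numpy as np

def conv2d_same(x, w, b):
    """Zero-padded cross-correlation. x: (C_in, H, W), w: (C_out, C_in, k, k) with odd k, b: (C_out,)."""
    c_out, _, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    _, hgt, wid = x.shape
    out = np.zeros((c_out, hgt, wid))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + hgt, j:j + wid]  # input shifted by kernel offset (i, j)
            out += np.einsum('oc,chw->ohw', w[:, :, i, j], patch)
    return out + b[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def cnn_denoise(y, params):
    """Three convolutional layers with ReLU after the first two."""
    (w1, b1), (w2, b2), (w3, b3) = params
    f = relu(conv2d_same(y, w1, b1))
    f = relu(conv2d_same(f, w2, b2))
    return conv2d_same(f, w3, b3)

def mse_loss(pred, clean):
    return np.mean((pred - clean) ** 2)

rng = np.random.default_rng(0)
params = [(0.1 * rng.standard_normal((4, 1, 3, 3)), np.zeros(4)),
          (0.1 * rng.standard_normal((4, 4, 3, 3)), np.zeros(4)),
          (0.1 * rng.standard_normal((1, 4, 3, 3)), np.zeros(1))]
clean = rng.random((1, 8, 8))
noisy = clean + 0.1 * rng.standard_normal(clean.shape)
pred = cnn_denoise(noisy, params)
loss = mse_loss(pred, clean)
```

Training would adjust `params` to reduce `loss` over many clean/noisy pairs. Residual variants predict the noise rather than the clean image.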

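Owing to its simplicity and effectiveness, the CNN has been widely adopted by DNN denoising algorithms, and CNN-based networks vary widely. For color images, for example, the denoising CNN (DnCNN) [30] incorporates batch normalization (BN) [31], rectified linear units (ReLU) [32], and residual learning [33] into the CNN model. The generative adversarial network (GAN) based blind denoiser (GCBD) [34] introduces GANs [35] to handle unpaired noisy images. More recently, the graph-convolutional denoising network (GCDN) was proposed in [36] to capture self-similarity information. For color video, VNLNet [37] proposes a new way to combine video self-similarity with CNNs, and FastDVDNet [38] achieves real-time video denoising without a costly motion compensation stage. For MSI, HSID-CNN [39] and HSI-sDeCNN [40] feed the spatial and spectral information of MSI data to the CNN simultaneously. The 3D quasi-recurrent neural network (QRNN3D) [41] uses 3D convolutions to extract the structural spatial-spectral correlation of MSI data and a quasi-recurrent pooling function to capture global correlation along the spectrum. For MRI denoising, the multi-channel DnCNN (MCDnCNN) [42] extends DnCNN to handle 3D volumetric data, and the prefiltered rotationally invariant patch-based CNN (PRI-PB-CNN) [43] trains a CNN model on MRI data prefiltered by PRI nonlocal principal component analysis (PRI-NLPCA) [44].

Effective as they are, DNN methods can be a double-edged sword: they enjoy three major advantages and face three matching challenges. First, DNN methods can use external information to guide training, and thus may not be confined to the theoretical and practical bounds of traditional denoisers [45]. However, they rely heavily on the quality of the training dataset, as well as on certain prior information such as ISO, shutter speed, and camera brand, which is not always available in practice. Second, DNN methods can be accelerated on advanced GPU devices and achieve real-time denoising for certain tasks [46], [47]. But ordinary users and researchers may not have access to expensive computing resources. Third, the deep, flexible, and sophisticated structure of DNN methods can extract latent features of noisy images. But compared with an implementation of CBM3D, which only needs to store four small predefined transform matrices, a complex network with millions of parameters may greatly increase the storage cost.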

[19] S. Lefkimmiatis, “Non-local color image denoising with convolutional neural networks,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2017, pp. 3587–3596.

[20] D. Ulyanov, A. Vedaldi, and V. Lempitsky, “Deep image prior,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2018, pp. 9446–9454.

[21] Y. Zhou, R. Chellappa, and B. Jenkins, “A novel approach to image restoration based on a neural network,” in IEEE International Conference on Neural Networks, vol. 4, 1987, pp. 269–276.

[22] Y.-W. Chiang and B. Sullivan, “Multi-frame image restoration using a neural network,” in Proc. Midwest Symp. Circuits Syst. IEEE, 1989, pp. 744–747.

[23] H. C. Burger, C. J. Schuler, and S. Harmeling, “Image denoising: Can plain neural networks compete with bm3d?” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2012, pp. 2392–2399.

[24] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proc. IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

[25] S. Ji, W. Xu, M. Yang, and K. Yu, “3d convolutional neural networks for human action recognition,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 35, no. 1, pp. 221–231, 2012.

[26] P. Moeskops, M. A. Viergever, A. M. Mendrik, L. S. De Vries, M. J. Benders, and I. Išgum, “Automatic segmentation of mr brain images with a convolutional neural network,” IEEE Trans. Med. Imag., vol. 35, no. 5, pp. 1252–1261, 2016.

[27] W. Shi, J. Caballero, F. Huszár, J. Totz, A. P. Aitken, R. Bishop, D. Rueckert, and Z. Wang, “Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2016, pp. 1874–1883.

[28] L. Windrim, A. Melkumyan, R. J. Murphy, A. Chlingaryan, and R. Ramakrishnan, “Pretraining for hyperspectral convolutional neural network classification,” IEEE Trans. Geosci. Remote Sens., vol. 56, no. 5, pp. 2798–2810, 2018.

[29] V. Jain and S. Seung, “Natural image denoising with convolutional networks,” in Proc. Advances Neural Inf. Process. Syst., 2009, pp. 769–776.

[30] K. Zhang, W. Zuo, Y. Chen, D. Meng, and L. Zhang, “Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising,” IEEE Trans. Image Process., vol. 26, no. 7, pp. 3142–3155, 2017.

[31] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in Proc. Int. Conf. Mach. Learn., 2015, pp. 448–456.

[32] V. Nair and G. E. Hinton, “Rectified linear units improve restricted boltzmann machines,” in Proc. Int. Conf. Mach. Learn., 2010, pp. 807–814.

[33] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2016, pp. 770–778.

[34] J. Chen, J. Chen, H. Chao, and M. Yang, “Image blind denoising with generative adversarial network based noise modeling,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2018, pp. 3155–3164.

[35] A. Radford, L. Metz, and S. Chintala, “Unsupervised representation learning with deep convolutional generative adversarial networks,” arXiv preprint arXiv:1511.06434, 2015.

[36] D. Valsesia, G. Fracastoro, and E. Magli, “Deep graph-convolutional image denoising,” IEEE Trans. Image Process., vol. 29, pp. 8226–8237, 2020.

[37] A. Davy, T. Ehret, J.-M. Morel, P. Arias, and G. Facciolo, “Nonlocal video denoising by cnn,” arXiv preprint arXiv:1811.12758, 2018.

[38] M. Tassano, J. Delon, and T. Veit, “Fastdvdnet: Towards real-time deep video denoising without flow estimation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., June 2020.

[39] Q. Yuan, Q. Zhang, J. Li, H. Shen, and L. Zhang, “Hyperspectral image denoising employing a spatial–spectral deep residual convolutional neural network,” IEEE Trans. Geosci. Remote Sens., vol. 57, no. 2, pp. 1205–1218, 2018.

[40] A. Maffei, J. M. Haut, M. E. Paoletti, J. Plaza, L. Bruzzone, and A. Plaza, “A single model cnn for hyperspectral image denoising,” IEEE Trans. Geosci. Remote Sens., vol. 58, no. 4, pp. 2516–2529, 2019.

[41] K. Wei, Y. Fu, and H. Huang, “3-d quasi-recurrent neural network for hyperspectral image denoising,” IEEE Trans. Neural Netw. Learn. Syst., 2020.

[42] D. Jiang, W. Dou, L. Vosters, X. Xu, Y. Sun, and T. Tan, “Denoising of 3d magnetic resonance images with multi-channel residual learning of convolutional neural network,” Jpn. J. Radiol., vol. 36, no. 9, pp. 566–574, 2018.

[43] J. V. Manjón and P. Coupé, “Mri denoising using deep learning,” in Patch-Based Techniques in Medical Imaging, 2018, pp. 12–19.

[44] J. V. Manjón, P. Coupé, and A. Buades, “Mri noise estimation and denoising using non-local pca,” Med. Image Anal., vol. 22, no. 1, pp. 35–47, 2015.

[45] P. Chatterjee and P. Milanfar, “Is denoising dead?” IEEE Trans. Image Process., vol. 19, no. 4, pp. 895–911, 2009.

[46] M. Tassano, J. Delon, and T. Veit, “Fastdvdnet: Towards real-time deep video denoising without flow estimation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., June 2020.

[47] X. Zhang and R. Wu, “Fast depth image denoising and enhancement using a deep convolutional network,” in Proc. IEEE Int. Conf. Acoust., Speech Signal Process., 2016, pp. 2499–2503.

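Related traditional denoisers for multidimensional image data with different noise modeling techniques and applications. “CI”: color image, “CV”: color video, “MSI”: multispectral imaging, “HSI”: hyperspectral imaging, “MRI”: magnetic resonance imaging.

Related DNN denoising methods for multidimensional image data with different noise modeling techniques and applications. “CI”: color image, “CV”: color video, “MSI”: multispectral imaging, “HSI”: hyperspectral imaging, “MRI”: magnetic resonance imaging.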


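Average PSNR and SSIM values and computation time (in seconds) on real-world color image datasets. The average time is computed on the PolyU dataset, and “N/A” means that the model cannot handle images of a particular size. The best results (excluding the best CBM3D results) are shown in bold black.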