
1. Operating system

1.1. Download the Ubuntu installation image (this guide uses ubuntu-22.04-live-server-amd64.iso)

1.2. Configure the Hyper-V virtualization engine on a personal Windows 10 desktop

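- Enable Windows' built-in virtualization engine: open Start->Settings->Apps->Optional features->More Windows features and check Hyper-V. To install it on a Windows Home edition machine, save the following code as a .cmd file, then right-click it and choose Run as administrator:

pushd "%~dp0"
dir /b %SystemRoot%\servicing\Packages\*Hyper-V*.mum >hyper-v.txt
for /f %%i in ('findstr /i . hyper-v.txt 2^>nul') do dism /online /norestart /add-package:"%SystemRoot%\servicing\Packages\%%i"
del hyper-v.txt
Dism /online /enable-feature /featurename:Microsoft-Hyper-V-All /LimitAccess /ALL

- Create a virtual switch that can reach the external network (an internal virtual switch, Default Switch, exists by default): open Start->Windows Administrative Tools->Hyper-V Manager->Virtual Switch Manager->New virtual network switch, select External, click Create Virtual Switch and enter External Switch as the name

- Give the Windows host's Default Switch adapter a fixed IP: open Start->Settings->Network & Internet->Change adapter options, double-click vEthernet (Default Switch), open Properties->Internet Protocol Version 4 (TCP/IPv4), select Use the following IP address, set the IP address to 172.28.176.1 and the subnet mask to 255.255.240.0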
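The mask 255.255.240.0 is a /20, so the host (172.28.176.1) and the VMs configured later (172.28.176.4 and 172.28.176.5) all sit in 172.28.176.0/20, which spans 172.28.176.0 to 172.28.191.255. The bash sketch below only double-checks that arithmetic; the helper names are hypothetical and not part of any tool.

```shell
# Sketch (bash): IPv4 subnet arithmetic for the addressing plan above.
# ip_to_int, int_to_ip, prefix_to_mask and in_cidr are hypothetical helper names.

# Dotted quad -> 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted quad.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Prefix length (e.g. 20) -> netmask as a 32-bit integer.
prefix_to_mask() {
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Succeeds if address $1 lies inside CIDR block $2 (e.g. 172.28.176.0/20).
in_cidr() {
  local net=${2%/*} prefix=${2#*/} mask
  mask=$(prefix_to_mask "$prefix")
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `int_to_ip "$(prefix_to_mask 20)"` prints the mask entered above, and `in_cidr 172.28.176.5 172.28.176.0/20` succeeds for the node address used in 1.4.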

1.3. Install and configure the OS of the k8s cluster master node

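- Create the virtual machine on the Windows host

  - Open Start->Windows Administrative Tools->Hyper-V Manager, then choose the menu Action->New->Virtual Machine
  - Enter the name k-master-1, Next
  - Select Generation 1, Next
  - Memory: 4096MB, Next
  - Connection: External Switch, Next, Next
  - Select Install an operating system from a bootable CD/DVD-ROM->Image file (.iso), choose the previously downloaded ubuntu-22.04-live-server-amd64.iso, Next, Finish

- Add an internal network adapter to the VM and increase its CPU count

  - In Hyper-V Manager, select k-master-1, click Settings->Add Hardware->Network Adapter->Add, choose the virtual switch Default Switch, OK
  - Select Processor, change Number of virtual processors to 2, OK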

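- Install the VM's OS

  - In Hyper-V Manager, select k-master-1 and click Connect
  - In the VM window, click Start; press Enter on the GNU GRUB screen, wait, then press Enter twice
  - Select Ubuntu Server (minimized), select Done, press Enter
  - Select eth1->Edit IPv4, set IPv4 Method to Manual, enter 172.28.176.0/20 for Subnet and 172.28.176.4 for Address, enter 172.28.176.1 for both Gateway and Name servers, select Save, press Enter
  - Select Done, press Enter
  - Select Done, press Enter; enter http://mirrors.aliyun.com/ubuntu/ as the Mirror address, select Done, press Enter
  - Select update to the new installer, press Enter
  - Wait, then select Done, press Enter
  - Select Done, press Enter
  - Select Continue, press Enter; enter myname for Your name, k-master-1 for Your server's name and user for Pick a username, enter a password, select Done, press Enter
  - Check Install OpenSSH server, select Done, press Enter
  - Select Done again, press Enter
  - Wait; when the installation finishes, select Reboot Now, press Enter
  - Wait, then press Enter when prompted
  - Close the VM window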

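- Enable root login

  - Using a shell tool (such as PuTTY), log in to 172.28.176.4 with the username user and its password
  - Run the following commands:

# change the root password

$ sudo passwd root

# edit the ssh settings: uncomment PermitRootLogin and set it to yes

$ sudo vi /etc/ssh/sshd_config

# restart the ssh service

$ sudo service ssh restart

  - Log out of the user session, then log in as root with a shell tool (such as PuTTY)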
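If you prefer not to edit sshd_config interactively, the vi step above can be sketched as a sed call. `enable_root_login` is a hypothetical helper; it assumes the stock Ubuntu file, where the directive appears as a commented `#PermitRootLogin ...` line, and appends the directive if it is missing altogether.

```shell
# Sketch (bash, GNU sed): set PermitRootLogin to yes without opening an editor.
# enable_root_login is a hypothetical helper; run it as root on the VM.
enable_root_login() {
  local cfg=${1:-/etc/ssh/sshd_config}
  if grep -Eq '^[#[:space:]]*PermitRootLogin[[:space:]]' "$cfg"; then
    # Uncomment the existing directive (if commented) and force its value to yes.
    sed -i -E 's/^[#[:space:]]*PermitRootLogin[[:space:]].*/PermitRootLogin yes/' "$cfg"
  else
    # The directive is absent: append it.
    echo 'PermitRootLogin yes' >> "$cfg"
  fi
}
```

After calling it, `sshd -t` checks the configuration syntax before you run `service ssh restart`.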

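- Set the environment variable pointing to the k8s admin kubeconfig file

$ export KUBECONFIG=/etc/kubernetes/admin.conf

Open the .bashrc file and append the line above (only the command itself, without the $ prompt) to the end of the file:

$ vi .bashrc

- Turn off swap

$ swapoff -a

$ vi /etc/fstab

Comment out the following line:

/swap.img none swap sw 0 0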
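Both edits above can also be made idempotent, so re-running them does not duplicate the export line or double-comment the swap entry. A minimal sketch, assuming GNU sed; `append_once` and `disable_swap_entries` are hypothetical helper names.

```shell
# Sketch (bash, GNU sed): non-interactive versions of the .bashrc and fstab edits.

# Append a line to a file only if that exact line is not already present.
append_once() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Comment out every active fstab entry whose third field (the type) is swap.
disable_swap_entries() {
  local fstab=${1:-/etc/fstab}
  sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}
```

On k-master-1 this would be `append_once 'export KUBECONFIG=/etc/kubernetes/admin.conf' ~/.bashrc` followed by `swapoff -a && disable_swap_entries /etc/fstab`.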

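1.4. Install and configure the OS of the k8s cluster node

Repeat the steps in 1.3 to install and configure the OS of the k8s cluster node; all steps are identical except for the following 3 points:

- Both the VM name and Your server's name are k-node-1

- The static IPv4 Address of eth1 is 172.28.176.5

- Do not run the following command, and do not add it to .bashrc:

$ export KUBECONFIG=/etc/kubernetes/admin.conf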

2. Kubernetes

2.1. Install containerd (this installation does not use docker)

In the k-master-1 shell session:

$ cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

$ modprobe overlay

$ modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots

$ cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

$ sysctl --system

$ wget https://github.com/containerd/containerd/releases/download/v1.6.6/containerd-1.6.6-linux-amd64.tar.gz

$ tar Cxzvf /usr/local containerd-1.6.6-linux-amd64.tar.gz

$ mkdir -p /usr/local/lib/systemd/system/

$ wget https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

$ mv containerd.service /usr/local/lib/systemd/system/

$ systemctl daemon-reload

$ systemctl enable --now containerd

$ wget https://github.com/opencontainers/runc/releases/download/v1.1.3/runc.amd64

$ install -m 755 runc.amd64 /usr/local/sbin/runc

$ wget https://github.com/containernetworking/plugins/releases/download/v1.1.1/cni-plugins-linux-amd64-v1.1.1.tgz

$ mkdir -p /opt/cni/bin

$ tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.1.1.tgz

$ mkdir /etc/containerd/

$ containerd config default>/etc/containerd/config.toml

$ vi /etc/containerd/config.toml

#set SystemdCgroup to true and sandbox_image to "k8s.gcr.io/pause:3.7"

$ systemctl daemon-reload

$ systemctl restart containerd
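The two config.toml changes can also be applied with sed. This sketch assumes the file was produced by `containerd config default`, which (for the 1.6 line used here, as far as we know) contains one `SystemdCgroup = ...` line and one `sandbox_image = ...` line; `configure_containerd` is a hypothetical helper name.

```shell
# Sketch (bash, GNU sed): set SystemdCgroup = true and pin the sandbox (pause) image.
# configure_containerd is hypothetical; it keeps each line's original indentation.
configure_containerd() {
  local cfg=${1:-/etc/containerd/config.toml}
  local pause=${2:-k8s.gcr.io/pause:3.7}
  sed -i -E \
    -e 's/^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*).*/\1true/' \
    -e "s|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*|\1\"${pause}\"|" \
    "$cfg"
}
```

After `systemctl restart containerd`, `containerd config dump | grep -E 'SystemdCgroup|sandbox_image'` shows the values containerd actually loaded.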

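If your environment cannot reach Google directly, first use the following command to pull every image in the list below, one at a time (you will need to search, e.g. on Baidu, for a mirror site in China that hosts each image):

$ ctr -n k8s.io i pull <an image name from the list below>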

docker.io/calico/apiserver:v3.23.2

docker.io/calico/cni:v3.23.2

docker.io/calico/kube-controllers:v3.23.2

docker.io/calico/node:v3.23.2

docker.io/calico/pod2daemon-flexvol:v3.23.2

docker.io/calico/typha:v3.23.2

docker.io/grafana/grafana:9.0.2

docker.io/istio/examples-bookinfo-productpage-v1:1.16.4

docker.io/istio/examples-bookinfo-ratings-v1:1.16.4

docker.io/istio/examples-bookinfo-reviews-v2:1.16.4

docker.io/istio/examples-bookinfo-reviews-v3:1.16.4

docker.io/istio/pilot:1.14.1

docker.io/istio/proxyv2:1.14.1

docker.io/jimmidyson/configmap-reload:v0.5.0

docker.io/kubernetesui/dashboard:v2.6.0

docker.io/kubernetesui/metrics-scraper:v1.0.8

gcr.io/knative-releases/knative.dev/eventing/cmd/in_memory/channel_controller@sha256:97d7db62ea35f7f9199787722c352091987e8816d549c3193ee5683424fef8d0

gcr.io/knative-releases/knative.dev/eventing/cmd/in_memory/channel_dispatcher@sha256:3163f0a3b3ba5b81c36357df3dd2bff834056f2943c5b395adb497fb97476d20

gcr.io/knative-releases/knative.dev/net-istio/cmd/webhook@sha256:148b0ffa1f4bc9402daaac55f233581f7999b1562fa3079a8ab1d1a8213fd909

gcr.io/knative-releases/knative.dev/serving/cmd/activator@sha256:08315309da4b219ec74bb2017f569a98a7cfecee5e1285b03dfddc2410feb7d7

gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler@sha256:105bdd14ecaabad79d9bbcb8359bf2c317bd72382f80a7c4a335adfea53844f2

gcr.io/knative-releases/knative.dev/serving/cmd/controller@sha256:bac158dfb0c73d13ed42266ba287f1a86192c0ba581e23fbe012d30a1c34837c

gcr.io/knative-releases/knative.dev/serving/cmd/domain-mapping-webhook@sha256:15f1ce7f35b4765cc3b1c073423ab8d8bf2c8c2630eea3995c610f520fb68ca0

gcr.io/knative-releases/knative.dev/serving/cmd/domain-mapping@sha256:e384a295069b9e10e509fc3986cce4fe7be4ff5c73413d1c2234a813b1f4f99b

gcr.io/knative-releases/knative.dev/serving/cmd/webhook@sha256:1282a399cbb94f3b9de4f199239b39e795b87108efe7d8ba0380147160a97abb

k8s.gcr.io/coredns/coredns:v1.8.6

k8s.gcr.io/etcd:3.5.3-0

k8s.gcr.io/kube-apiserver:v1.24.3

k8s.gcr.io/kube-controller-manager:v1.24.3

k8s.gcr.io/kube-proxy:v1.24.3

k8s.gcr.io/kube-scheduler:v1.24.3

k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0

k8s.gcr.io/metrics-server/metrics-server:v0.6.1

k8s.gcr.io/pause:3.7

k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1

quay.io/brancz/kube-rbac-proxy:v0.13.0

quay.io/prometheus-operator/prometheus-config-reloader:v0.57.0

quay.io/prometheus-operator/prometheus-operator:v0.57.0

quay.io/prometheus/alertmanager:v0.24.0

quay.io/prometheus/blackbox-exporter:v0.21.1

quay.io/prometheus/node-exporter:v1.3.1

quay.io/prometheus/prometheus:v2.36.2

quay.io/tigera/operator:v1.27.7

registry.k8s.io/autoscaling/addon-resizer:1.8.14

registry.k8s.io/metrics-server/metrics-server:v0.5.2
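Pulling the list one image at a time can be scripted. The sketch below reads image names from a file (one per line), optionally rewrites them to a mirror, pulls them into the k8s.io namespace, and re-tags mirrored images with their original names so the manifests find them. `MIRROR_PREFIX`, `mirror_name`, `run_ctr` and `pull_images` are all hypothetical; real mirrors map names in different ways, so adapt `mirror_name` to the site you found, and treat the digest-pinned gcr.io/knative-releases entries as untested.

```shell
# Sketch (bash): bulk-pull images with ctr, optionally via a (hypothetical) mirror prefix.
# Set DRY_RUN=1 to print the ctr commands instead of running them.

# Map an original reference to a mirror reference by swapping the registry host.
mirror_name() {
  local img=$1
  if [ -n "${MIRROR_PREFIX:-}" ]; then
    echo "${MIRROR_PREFIX%/}/${img#*/}"
  else
    echo "$img"
  fi
}

# Run ctr, or only print the command line when DRY_RUN is set.
run_ctr() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "ctr $*"
  else
    ctr "$@"
  fi
}

# Read image references from file $1 (fd 3, so ctr cannot consume the list on stdin).
pull_images() {
  local list=$1 img src
  while IFS= read -r img <&3 || [ -n "$img" ]; do
    img=${img//[[:space:]]/}                  # strip spaces, tabs and CR characters
    case $img in ''|'#'*) continue ;; esac    # skip blank and comment lines
    src=$(mirror_name "$img")
    run_ctr -n k8s.io images pull "$src" || return 1
    if [ "$src" != "$img" ]; then
      # Re-tag so the image is also available under the name the manifests reference.
      run_ctr -n k8s.io images tag "$src" "$img" || return 1
    fi
  done 3< "$list"
}
```

Saving the list above as images.txt and running `DRY_RUN=1 pull_images images.txt` first lets you review the commands before pulling for real.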

2.2. Install cni

$ mkdir -p /root/go/src/github.com/containerd/

$ cd /root/go/src/github.com/containerd/

$ git clone https://github.com/containerd/containerd.git

$ apt install golang-go

$ cd containerd/script/setup

$ vi install-cni #replace "$GOPATH" with: /root/go

$ ./install-cni
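The install-cni edit above amounts to one substitution. A minimal sketch with a hypothetical helper name; note it only replaces the literal `$GOPATH` form, not `${GOPATH}`.

```shell
# Sketch (bash, GNU sed): replace every literal "$GOPATH" in a script with a fixed path.
# set_gopath_literal is hypothetical; the default path matches the one used above.
set_gopath_literal() {
  local script=$1 gopath=${2:-/root/go}
  # Inside double quotes "\\\$" becomes "\$", which sed reads as a literal dollar sign.
  sed -i "s|\\\$GOPATH|${gopath}|g" "$script"
}
```

Run `set_gopath_literal install-cni` from containerd/script/setup before `./install-cni`.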

2.3. Install kubelet/kubeadm/kubectl

$ cd ~

$ apt-get update

$ apt-get install -y apt-transport-https ca-certificates curl

$ curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg #if Google is unreachable, find a mirror of this file in China (e.g. via a Baidu search)

$ echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

$ apt-get update

$ apt-get install -y kubelet kubeadm kubectl

$ apt-mark hold kubelet kubeadm kubectl

2.4. Initialize the Kubernetes cluster

$ kubeadm init --pod-network-cidr=10.244.0.0/16

$ kubectl taint nodes --all node-role.kubernetes.io/control-plane- node-role.kubernetes.io/master- #remove the control-plane taints so that ordinary pods can also be scheduled on k-master-1

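From this point on, keep a separate shell session open, logged in as root to 172.28.176.4, and run the following command to watch the cluster's pods in real time (healthy pods should all be in the Running state):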

$ watch kubectl get pods -A -o wide
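Rather than scanning the whole watch output, you can filter for pods that are not fully ready. A minimal sketch: `unhealthy_pods` is a hypothetical helper that reads `kubectl get pods -A --no-headers` output on stdin (columns NAMESPACE NAME READY STATUS ...) and prints every pod whose STATUS is not Running or whose READY count is incomplete, such as 1/2. Pods of finished Jobs (Completed) would also be listed.

```shell
# Sketch (bash + awk): list pods that are not Running or not fully ready.
# Usage: kubectl get pods -A --no-headers | unhealthy_pods
unhealthy_pods() {
  awk '{
    split($3, r, "/")                      # READY column, e.g. "1/2"
    if ($4 != "Running" || r[1] != r[2])   # wrong status or missing containers
      print $1 "/" $2 " " $3 " " $4
  }'
}
```

`watch "kubectl get pods -A --no-headers | ..."` cannot see a shell function, so this filter is meant for one-off checks in the interactive session.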

2.5. Install the calico network plugin

$ kubectl create -f https://projectcalico.docs.tigera.io/manifests/tigera-operator.yaml

$ wget https://projectcalico.docs.tigera.io/manifests/custom-resources.yaml

$ vi custom-resources.yaml #change cidr to: 10.244.0.0/16

$ kubectl apply -f custom-resources.yaml
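The pod CIDR in custom-resources.yaml must match the `--pod-network-cidr=10.244.0.0/16` passed to kubeadm init. A sed sketch of the vi edit, assuming the manifest defines a single IP pool whose `cidr:` key sits on its own line (not on the `- ` list-item line); `set_calico_cidr` is a hypothetical helper name.

```shell
# Sketch (bash, GNU sed): rewrite the value of every "cidr:" key, keeping indentation.
set_calico_cidr() {
  local file=$1 cidr=${2:-10.244.0.0/16}
  sed -i -E "s|^([[:space:]]*cidr:[[:space:]]*).*|\1${cidr}|" "$file"
}
```

Call `set_calico_cidr custom-resources.yaml` before the `kubectl apply` step.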

2.6. Create and add the k8s node

- In the k-node-1 shell session, repeat 2.1, 2.2 and 2.3

- In the k-master-1 shell session, generate a token and the hash of the CA certificate's public key:

$ kubeadm token create

$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

- In the k-node-1 shell session:

# for --token, use the output of the first command above; after sha256:, use the output of the second command

$ kubeadm join 172.28.176.4:6443 --token y0xyix.ree0ap7zll8vicai --discovery-token-ca-cert-hash sha256:3a2d98d658f5bef44a629dc25bb96168ebd01ec203ac88d3854fd3985b3befec

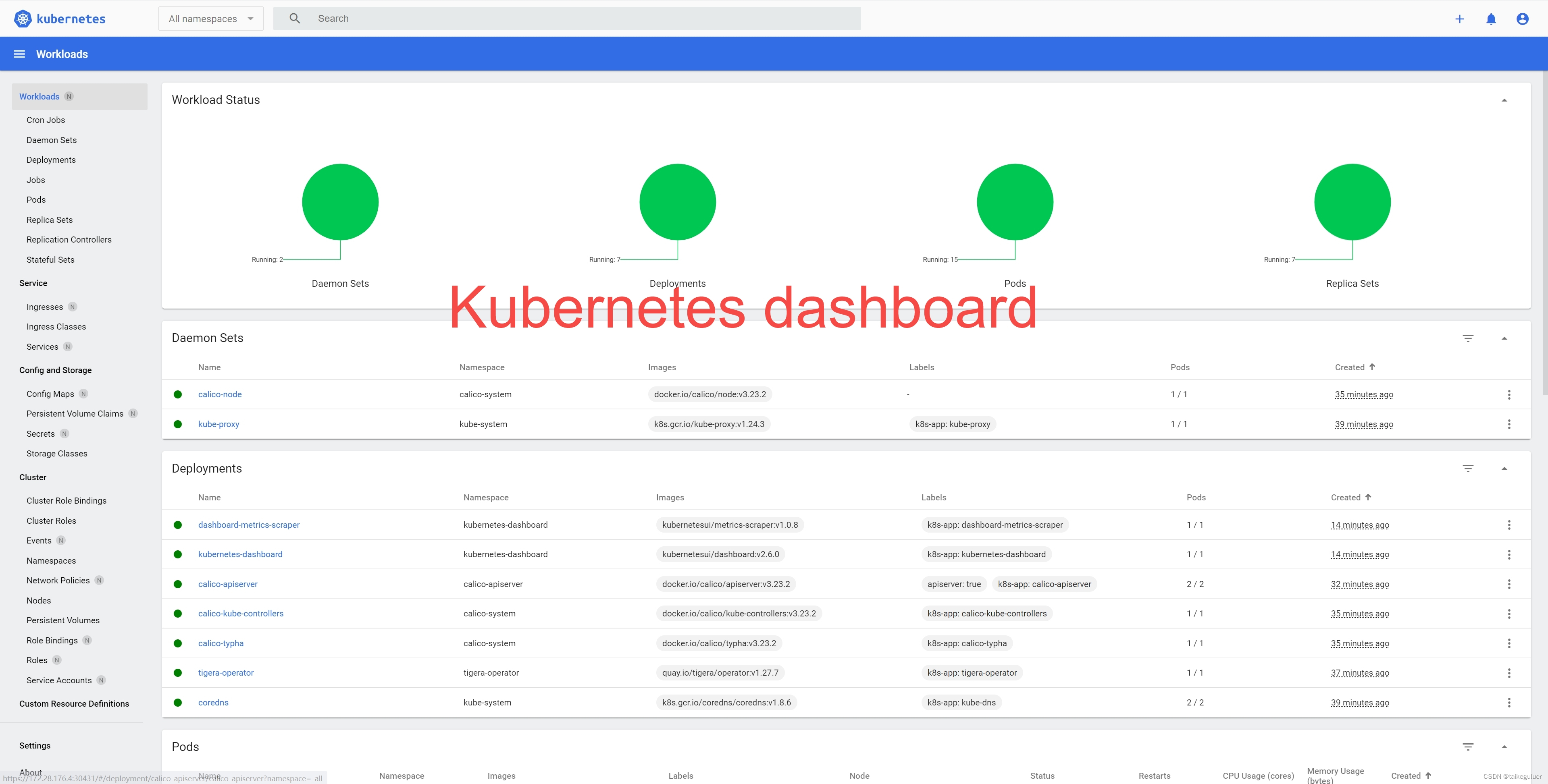
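The hash step can be wrapped so it prints the value already prefixed with `sha256:`, in the form kubeadm join expects. `ca_cert_hash` is a hypothetical helper; it runs essentially the same openssl pipeline as above, which assumes an RSA CA key (kubeadm's default), and uses pipefail so a missing certificate is reported instead of silently hashing empty input. Alternatively, `kubeadm token create --print-join-command` on the master prints a complete join command.

```shell
# Sketch (bash + openssl): compute the --discovery-token-ca-cert-hash value.
ca_cert_hash() {
  local crt=${1:-/etc/kubernetes/pki/ca.crt} hex
  # pipefail: any failing stage fails the substitution (and the function).
  hex=$(set -o pipefail
        openssl x509 -pubkey -noout -in "$crt" \
          | openssl rsa -pubin -outform der 2>/dev/null \
          | openssl dgst -sha256 -hex \
          | sed 's/^.* //') || return 1
  echo "sha256:${hex}"
}
```

On k-master-1, `ca_cert_hash` with no argument hashes /etc/kubernetes/pki/ca.crt.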

3. Install the Kubernetes dashboard

In the k-master-1 shell session:

- Install

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.0/aio/deploy/recommended.yaml

$ kubectl edit service kubernetes-dashboard -n kubernetes-dashboard #change type from ClusterIP to NodePort

$ kubectl get service kubernetes-dashboard -n kubernetes-dashboard #get the port mapped to 443, e.g. 30341

- Create a user

$ vi dashboard-adminuser.yaml #enter the following content and save

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

$ kubectl apply -f dashboard-adminuser.yaml

$ kubectl -n kubernetes-dashboard create token admin-user #note down the token that is printed

- On the Windows host, open a browser and go to https://172.28.176.4:30341 (use the port obtained in the install step), enter the token generated in the previous step in the Token field, and the following should be displayed:

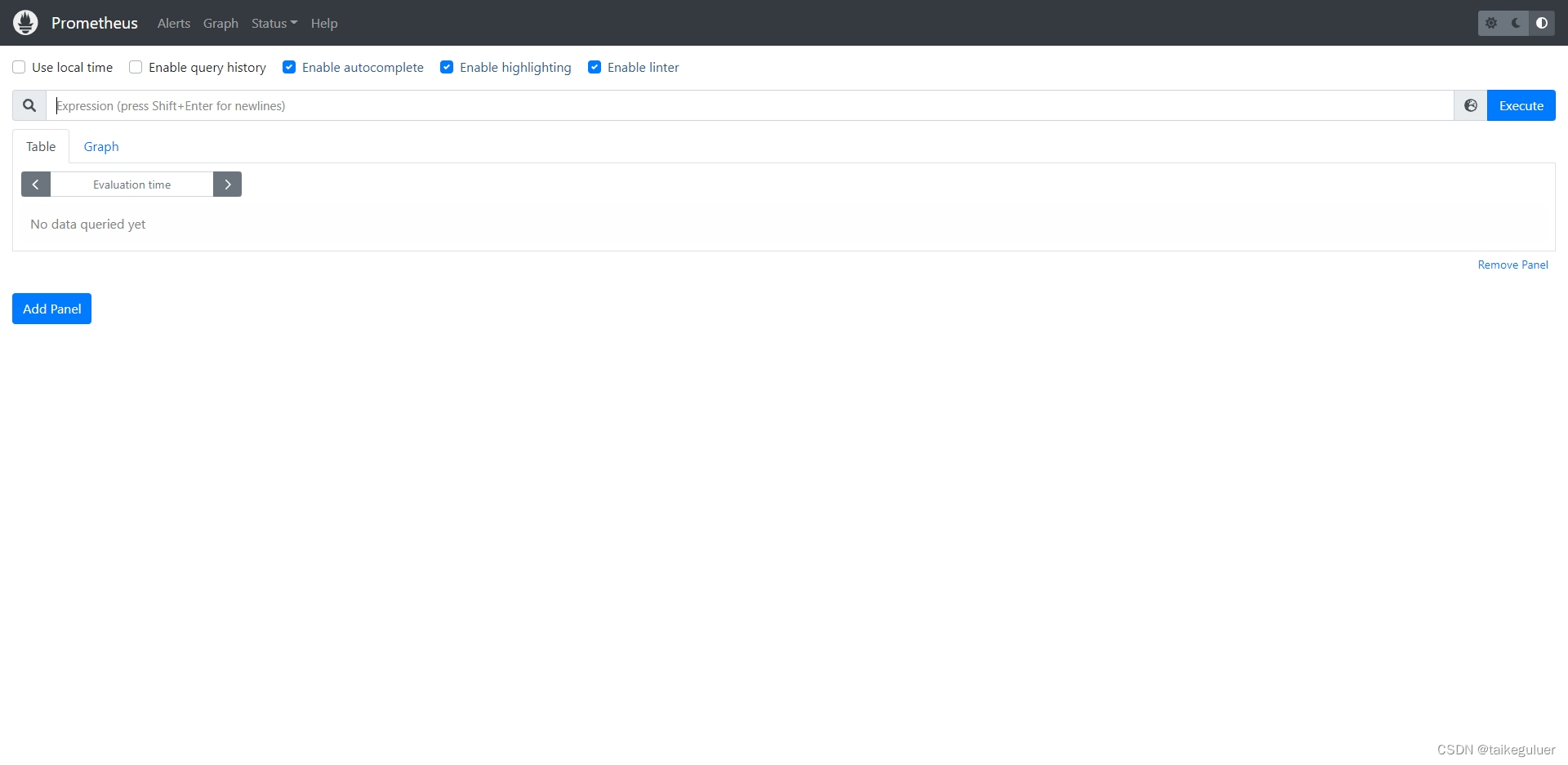

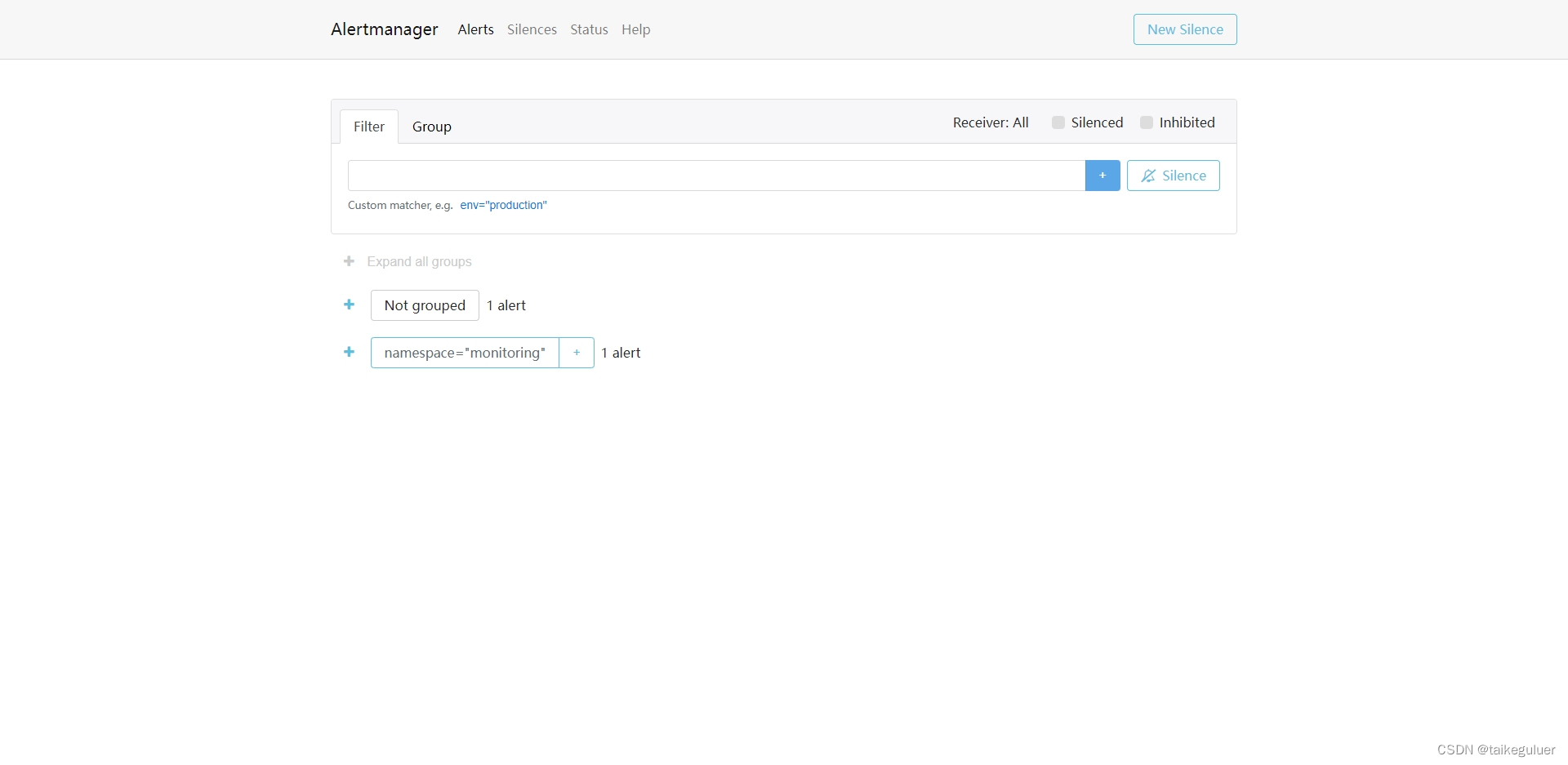
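The interactive `kubectl edit` plus the manual port lookup can be done in one non-interactive step with `kubectl patch` and a jsonpath query, both standard kubectl features. `expose_nodeport` is a hypothetical helper, and the `KUBECTL` override exists only so the function can be exercised with a stub.

```shell
# Sketch (bash): switch a Service to NodePort and print the node port behind port 443.
expose_nodeport() {
  local ns=$1 svc=$2 kc=${KUBECTL:-kubectl}
  # Change the Service type from ClusterIP to NodePort.
  $kc -n "$ns" patch service "$svc" -p '{"spec":{"type":"NodePort"}}' >/dev/null || return 1
  # Print the nodePort assigned to the Service port 443.
  $kc -n "$ns" get service "$svc" -o jsonpath='{.spec.ports[?(@.port==443)].nodePort}'
}
```

For the dashboard: `port=$(expose_nodeport kubernetes-dashboard kubernetes-dashboard) && echo "https://172.28.176.4:${port}"`.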

4. Install prometheus/grafana/alertmanager

$ go install -a github.com/jsonnet-bundler/jsonnet-bundler/cmd/jb@latest

$ ln -s /root/go/bin/jb /usr/local/bin/jb

$ go install github.com/google/go-jsonnet/cmd/jsonnet@latest

$ ln -s /root/go/bin/jsonnet /usr/local/bin/jsonnet

$ go install github.com/brancz/gojsontoyaml@latest

$ ln -s /root/go/bin/gojsontoyaml /usr/local/bin/gojsontoyaml

$ mkdir my-kube-prometheus

$ cd my-kube-prometheus/

$ jb init

$ jb install github.com/prometheus-operator/kube-prometheus/jsonnet/kube-prometheus@main

$ wget https://raw.githubusercontent.com/prometheus-operator/kube-prometheus/main/example.jsonnet -O example.jsonnet

$ wget https://raw.githubusercontent.com/prometheus-operator/kube-prometheus/main/build.sh -O build.sh

$ chmod +x build.sh

$ jb update

$ vi example.jsonnet #uncomment this line: (import 'kube-prometheus/addons/node-ports.libsonnet') +

$ ./build.sh example.jsonnet

$ kubectl apply --server-side -f manifests/setup

$ kubectl apply -f manifests/

$ cd ~

$ kubectl -n monitoring delete networkpolicies.networking.k8s.io --all

$ kubectl get service -n monitoring #get the ports of alertmanager-main, grafana and prometheus-k8s, e.g. 30903, 30902, 30900

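On the Windows host, open a browser and visit http://172.28.176.4:30903, http://172.28.176.4:30902 and http://172.28.176.4:30900 (use the ports obtained in the previous step); the Alertmanager, Grafana and Prometheus web UIs should be displayed respectively.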
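The example.jsonnet edit can be scripted too. This sketch assumes the node-ports import is commented out with `//` (as in the upstream example at the time of writing) and only strips that prefix, leaving the trailing `+` and the indentation intact; `enable_node_ports` is a hypothetical helper name.

```shell
# Sketch (bash, GNU sed): uncomment the node-ports addon import in example.jsonnet.
enable_node_ports() {
  local file=${1:-example.jsonnet}
  sed -i -E "s|^([[:space:]]*)//[[:space:]]*(\(import 'kube-prometheus/addons/node-ports\.libsonnet'\))|\1\2|" "$file"
}
```

Run it in my-kube-prometheus before `./build.sh example.jsonnet`; other commented addons stay commented.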

5. Install Istio

$ wget https://github.com/istio/istio/releases/download/1.14.1/istioctl-1.14.1-linux-amd64.tar.gz


$ tar Cxzvf . istioctl-1.14.1-linux-amd64.tar.gz

$ mv istioctl /usr/local/bin/

$ vi /etc/kubernetes/manifests/kube-apiserver.yaml #append ,MutatingAdmissionWebhook,ValidatingAdmissionWebhook to the line - --enable-admission-plugins=NodeRestriction

$ wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

$ vi components.yaml #add a new line after - --metric-resolution=15s: - --kubelet-insecure-tls

$ kubectl apply -f components.yaml

$ kubectl top pods -A #confirm that a list of pod resource usage is returned
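The two manifest edits above can be made with sed as well; both helpers below are hypothetical and skip the change if it is already present. Because kube-apiserver runs as a static pod, the kubelet recreates it after its manifest changes, so no explicit restart is needed for the first edit.

```shell
# Sketch (bash, GNU sed): the kube-apiserver and metrics-server edits, idempotently.

# Append the webhook admission plugins to the existing --enable-admission-plugins list.
enable_webhook_admission() {
  local manifest=${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}
  grep -q 'MutatingAdmissionWebhook' "$manifest" && return 0
  sed -i -E 's/^([[:space:]]*- --enable-admission-plugins=.*)$/\1,MutatingAdmissionWebhook,ValidatingAdmissionWebhook/' "$manifest"
}

# Insert "- --kubelet-insecure-tls" right after --metric-resolution, reusing its indentation.
add_kubelet_insecure_tls() {
  local file=${1:-components.yaml}
  grep -q -- '--kubelet-insecure-tls' "$file" && return 0
  sed -i -E 's/^([[:space:]]*)- --metric-resolution=.*$/&\n\1- --kubelet-insecure-tls/' "$file"
}
```

`--kubelet-insecure-tls` turns off verification of the kubelet serving certificates, which is acceptable for this lab cluster but not for production.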

$ curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.14.1 sh - #pin the version so that the directory name matches istio-1.14.1 below

$ cd istio-1.14.1/

$ istioctl install --set profile=default -y

$ kubectl label namespace default istio-injection=enabled

$ kubectl edit service istio-ingressgateway -n istio-system #add the following two lines before the line externalTrafficPolicy: Cluster

  externalIPs:
  - 172.28.176.4

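$ kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml #if the deployment fails, the next command switches containerd to a different proxy; once it succeeds, use the command after that to restore the original proxy (after each cp, run systemctl daemon-reload and systemctl restart containerd so the change takes effect)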

$ cp ~/http_proxy.conf.ml /etc/systemd/system/containerd.service.d/http_proxy.conf

$ cp ~/http_proxy.conf.www /etc/systemd/system/containerd.service.d/http_proxy.conf

$ cd ~

$ kubectl exec "$(kubectl get pod -l app=ratings -o jsonpath='{.items[0].metadata.name}')" -c ratings -- curl -sS productpage:9080/productpage | grep -o "<title>.*</title>" #should produce the following output

<title>Simple Bookstore App</title>
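The two `~/http_proxy.conf.*` files swapped in above are not shown in this guide. As an assumption about what such a file typically contains, the sketch below writes a systemd drop-in that sets proxy variables for containerd. The proxy URL is yours to supply, and the default NO_PROXY list (the host network from 1.2, the pod CIDR, and kubeadm's default service CIDR 10.96.0.0/12) is a guess you should adjust; `write_containerd_proxy` is a hypothetical helper.

```shell
# Sketch (bash): write a containerd proxy drop-in; all values are placeholders/assumptions.
write_containerd_proxy() {
  local dir=${1:-/etc/systemd/system/containerd.service.d} proxy=$2
  local no_proxy=${3:-localhost,127.0.0.1,172.28.176.0/20,10.244.0.0/16,10.96.0.0/12}
  [ -n "$proxy" ] || { echo "usage: write_containerd_proxy DIR PROXY_URL [NO_PROXY]" >&2; return 1; }
  mkdir -p "$dir"
  cat > "$dir/http_proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=${proxy}"
Environment="HTTPS_PROXY=${proxy}"
Environment="NO_PROXY=${no_proxy}"
EOF
}
```

The drop-in only takes effect after `systemctl daemon-reload && systemctl restart containerd`; `systemctl show --property=Environment containerd` then shows the variables containerd was started with.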

6. Install Knative

$ wget https://github.com/knative/client/releases/download/knative-v1.6.0/kn-linux-amd64

$ chmod +x kn-linux-amd64

$ mv kn-linux-amd64 /usr/local/bin/kn

$ kubectl apply -f https://github.com/knative/operator/releases/download/knative-v1.6.0/operator.yaml

$ vi knative-serving.yaml #write the following content and save

apiVersion: v1
kind: Namespace
metadata:
  name: knative-serving
---
apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving

$ kubectl apply -f knative-serving.yaml

$ vi knative-eventing.yaml #write the following content and save

apiVersion: v1
kind: Namespace
metadata:
  name: knative-eventing
---
apiVersion: operator.knative.dev/v1beta1
kind: KnativeEventing
metadata:
  name: knative-eventing
  namespace: knative-eventing

$ kubectl apply -f knative-eventing.yaml
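The serving and eventing manifests differ only in the component name and the custom resource kind, so one small generator can emit either. `knative_cr` is a hypothetical helper that prints YAML equivalent to the two files above; its output can be piped into `kubectl apply -f -` instead of saving a file.

```shell
# Sketch (bash): print the Namespace + Knative operator CR for "serving" or "eventing".
knative_cr() {
  local component=$1 kind
  case $component in
    serving)  kind=KnativeServing ;;
    eventing) kind=KnativeEventing ;;
    *) echo "usage: knative_cr serving|eventing" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: knative-${component}
---
apiVersion: operator.knative.dev/v1beta1
kind: ${kind}
metadata:
  name: knative-${component}
  namespace: knative-${component}
EOF
}
```

Example: `knative_cr serving | kubectl apply -f -`.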

$ vi hello.yaml #write the following content and save

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
spec:
  template:
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          ports:
            - containerPort: 8080
          env:
            - name: TARGET
              value: "World"

$ kubectl apply -f hello.yaml

On the Windows host, edit C:\Windows\System32\drivers\etc\hosts and add:

172.28.176.4 hello.default.example.com

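Open the following address in a browser; it should return Hello World, and the pod list should show the hello pod being started as requests arrive and terminated after they stop: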

http://hello.default.example.com


7. Other notes

- The installation steps are only guaranteed to work with the following versions (for the versions of the other components, see the image list in 2.1); if a component has been updated, adjust the steps according to the errors you encounter

$ kubeadm version -o short
v1.24.3

$ kubectl version --short
Flag --short has been deprecated, and will be removed in the future. The --short output will become the default.
Client Version: v1.24.3
Kustomize Version: v4.5.4
Server Version: v1.24.3

$ ctr version
Client:
  Version: v1.6.6
  Revision: 10c12954828e7c7c9b6e0ea9b0c02b01407d3ae1
  Go version: go1.17.11
Server:
  Version: v1.6.6
  Revision: 10c12954828e7c7c9b6e0ea9b0c02b01407d3ae1
  UUID: 272b3b50-f95d-42aa-9d36-6558b245afb0

$ istioctl version
client version: 1.14.1
control plane version: 1.14.1
data plane version: 1.14.1 (7 proxies)

$ kn version
Version: v20220713-local-bfdc0a21
Build Date: 2022-07-13 09:04:48
Git Revision: bfdc0a21
Supported APIs:
* Serving
  - serving.knative.dev/v1 (knative-serving v0.33.0)
* Eventing
  - sources.knative.dev/v1 (knative-eventing v0.33.0)
  - eventing.knative.dev/v1 (knative-eventing v0.33.0)

- The pod list after everything has been installed is as follows:

$ watch kubectl get pods -A -o wide #the output below is the final pod list after everything has been installed

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
calico-apiserver calico-apiserver-bddc5fc5c-2hlct 1/1 Running 3 (24h ago) 38h 10.244.150.133 k-master-1 <none> <none>
calico-apiserver calico-apiserver-bddc5fc5c-q6kls 1/1 Running 3 (24h ago) 38h 10.244.150.132 k-master-1 <none> <none>
calico-system calico-kube-controllers-86dff98c45-64r9z 1/1 Running 0 38h 10.244.150.131 k-master-1 <none> <none>
calico-system calico-node-6n4wm 1/1 Running 5 (15h ago) 25h 172.28.176.5 k-node-1 <none> <none>
calico-system calico-node-zx8nn 1/1 Running 0 38h 172.28.176.4 k-master-1 <none> <none>
calico-system calico-typha-77d7886577-lccxl 1/1 Running 0 38h 172.28.176.4 k-master-1 <none> <none>
default details-v1-b48c969c5-2m8c2 2/2 Running 0 15h 10.244.161.197 k-node-1 <none> <none>
default knative-operator-85547459f-m98hf 1/1 Running 0 15h 10.244.161.194 k-node-1 <none> <none>
default productpage-v1-74fdfbd7c7-6vw7n 2/2 Running 0 21h 10.244.150.165 k-master-1 <none> <none>
default ratings-v1-b74b895c5-krqv4 2/2 Running 0 21h 10.244.150.164 k-master-1 <none> <none>
default reviews-v1-68b4dcbdb9-vtgzb 2/2 Running 0 15h 10.244.161.198 k-node-1 <none> <none>
default reviews-v2-565bcd7987-47jzb 2/2 Running 0 21h 10.244.150.162 k-master-1 <none> <none>
default reviews-v3-d88774f9c-whkcf 2/2 Running 0 21h 10.244.150.163 k-master-1 <none> <none>
istio-system istio-ingressgateway-545d46d996-cf7xw 1/1 Running 0 23h 10.244.150.153 k-master-1 <none> <none>
istio-system istiod-59876b79cd-wqz64 1/1 Running 0 15h 10.244.161.252 k-node-1 <none> <none>
knative-eventing eventing-controller-5c8967885c-4r78h 1/1 Running 0 15h 10.244.161.248 k-node-1 <none> <none>
knative-eventing eventing-webhook-7f9b5f7d9-mwxrc 1/1 Running 0 15h 10.244.161.246 k-node-1 <none> <none>
knative-eventing imc-controller-7d9b5756cb-fzr5x 1/1 Running 0 15h 10.244.150.174 k-master-1 <none> <none>
knative-eventing imc-dispatcher-76665c67df-cvz77 1/1 Running 0 15h 10.244.150.173 k-master-1 <none> <none>
knative-eventing mt-broker-controller-b74d7487c-gc2rb 1/1 Running 0 15h 10.244.161.251 k-node-1 <none> <none>
knative-eventing mt-broker-filter-545d9f864f-jjrf2 1/1 Running 0 15h 10.244.161.249 k-node-1 <none> <none>
knative-eventing mt-broker-ingress-7655d545f5-hn86f 1/1 Running 0 15h 10.244.161.245 k-node-1 <none> <none>
knative-serving activator-c7d578d94-2jlwd 1/1 Running 0 20h 10.244.150.166 k-master-1 <none> <none>
knative-serving autoscaler-6488988457-gth42 1/1 Running 0 20h 10.244.150.167 k-master-1 <none> <none>
knative-serving autoscaler-hpa-7d89d96584-pdzj8 1/1 Running 0 15h 10.244.161.250 k-node-1 <none> <none>
knative-serving controller-768d64b7b4-ftdhk 1/1 Running 0 15h 10.244.161.253 k-node-1 <none> <none>
knative-serving domain-mapping-7598c5f659-f6vbh 1/1 Running 0 20h 10.244.150.169 k-master-1 <none> <none>
knative-serving domainmapping-webhook-8c4c9fdc4-46cjq 1/1 Running 0 15h 10.244.161.254 k-node-1 <none> <none>
knative-serving net-istio-controller-7d44658469-n5lg7 1/1 Running 0 15h 10.244.161.247 k-node-1 <none> <none>
knative-serving net-istio-webhook-f5ccb98cf-jggpk 1/1 Running 0 20h 10.244.150.172 k-master-1 <none> <none>
knative-serving webhook-df8844f6-47zh5 1/1 Running 0 20h 10.244.150.171 k-master-1 <none> <none>
kube-system coredns-6d4b75cb6d-2tp79 1/1 Running 0 38h 10.244.150.129 k-master-1 <none> <none>
kube-system coredns-6d4b75cb6d-bddjm 1/1 Running 0 38h 10.244.150.130 k-master-1 <none> <none>
kube-system etcd-k-master-1 1/1 Running 0 38h 172.28.176.4 k-master-1 <none> <none>
kube-system kube-apiserver-k-master-1 1/1 Running 0 24h 172.28.176.4 k-master-1 <none> <none>
kube-system kube-controller-manager-k-master-1 1/1 Running 1 (24h ago) 38h 172.28.176.4 k-master-1 <none> <none>
kube-system kube-proxy-g8n8v 1/1 Running 5 (15h ago) 25h 172.28.176.5 k-node-1 <none> <none>
kube-system kube-proxy-zg5l8 1/1 Running 0 38h 172.28.176.4 k-master-1 <none> <none>
kube-system kube-scheduler-k-master-1 1/1 Running 1 (24h ago) 38h 172.28.176.4 k-master-1 <none> <none>
kube-system metrics-server-658867cdb7-999wn 1/1 Running 0 15h 10.244.150.175 k-master-1 <none> <none>
kubernetes-dashboard dashboard-metrics-scraper-8c47d4b5d-8jmlv 1/1 Running 0 38h 10.244.150.137 k-master-1 <none> <none>
kubernetes-dashboard kubernetes-dashboard-5676d8b865-lgtgn 1/1 Running 0 38h 10.244.150.136 k-master-1 <none> <none>
monitoring alertmanager-main-0 2/2 Running 0 25h 10.244.150.140 k-master-1 <none> <none>
monitoring alertmanager-main-1 2/2 Running 0 25h 10.244.150.141 k-master-1 <none> <none>
monitoring alertmanager-main-2 2/2 Running 0 25h 10.244.150.139 k-master-1 <none> <none>
monitoring blackbox-exporter-5fb779998c-q7dkx 3/3 Running 0 25h 10.244.150.142 k-master-1 <none> <none>
monitoring grafana-66bbbd4f57-v4wtp 1/1 Running 0 25h 10.244.150.144 k-master-1 <none> <none>
monitoring kube-state-metrics-98bdf47b9-h28kd 3/3 Running 0 25h 10.244.150.143 k-master-1 <none> <none>
monitoring node-exporter-cw2mc 2/2 Running 0 25h 172.28.176.4 k-master-1 <none> <none>
monitoring node-exporter-nmhf5 2/2 Running 10 (15h ago) 25h 172.28.176.5 k-node-1 <none> <none>
monitoring prometheus-adapter-5f68766c85-clhh7 1/1 Running 0 25h 10.244.150.145 k-master-1 <none> <none>
monitoring prometheus-adapter-5f68766c85-hh5d2 1/1 Running 0 25h 10.244.150.146 k-master-1 <none> <none>
monitoring prometheus-k8s-0 2/2 Running 0 25h 10.244.150.148 k-master-1 <none> <none>
monitoring prometheus-k8s-1 2/2 Running 0 25h 10.244.150.147 k-master-1 <none> <none>
monitoring prometheus-operator-6486d45dc7-jjzw9 2/2 Running 0 26h 10.244.150.138 k-master-1 <none> <none>
tigera-operator tigera-operator-5dc8b759d9-lwmb7 1/1 Running 1 (24h ago) 38h 172.28.176.4 k-master-1 <none> <none>