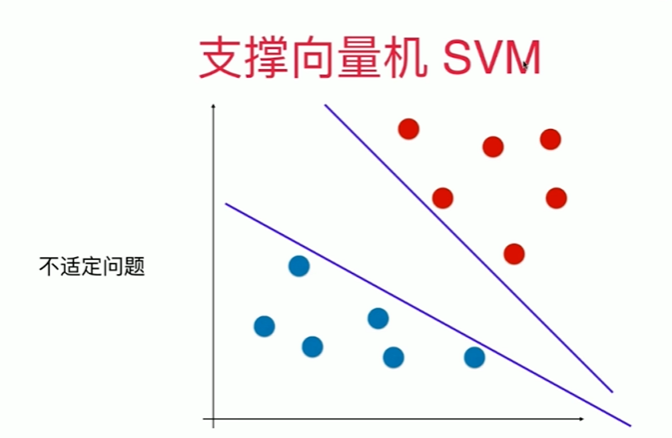

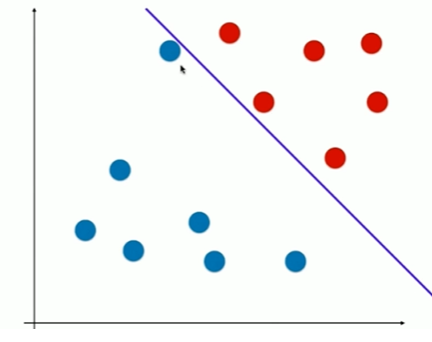

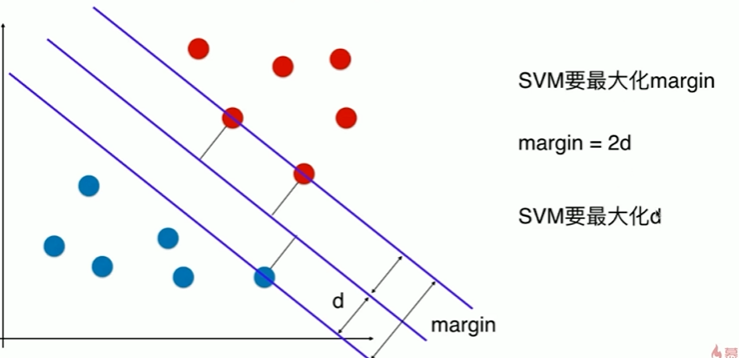

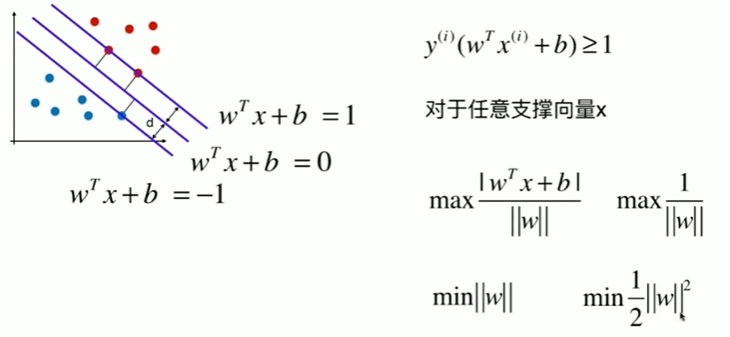

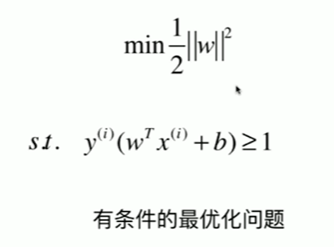

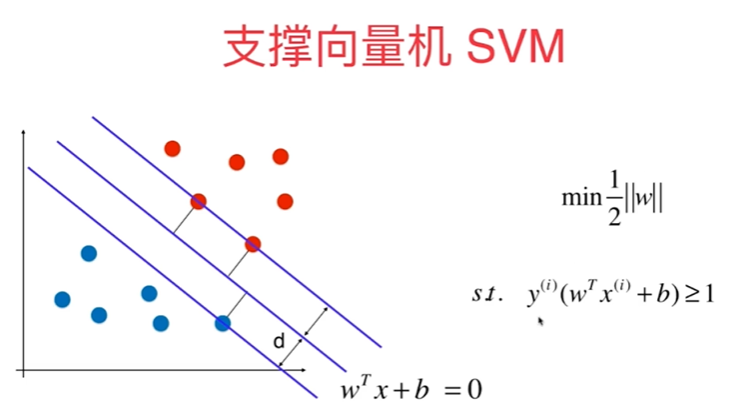

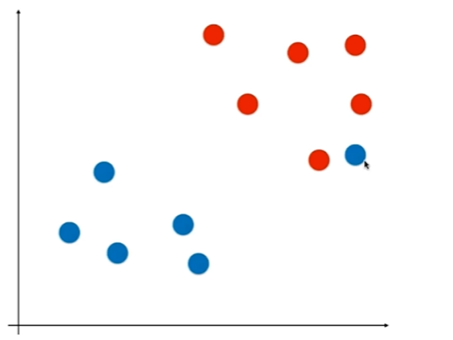

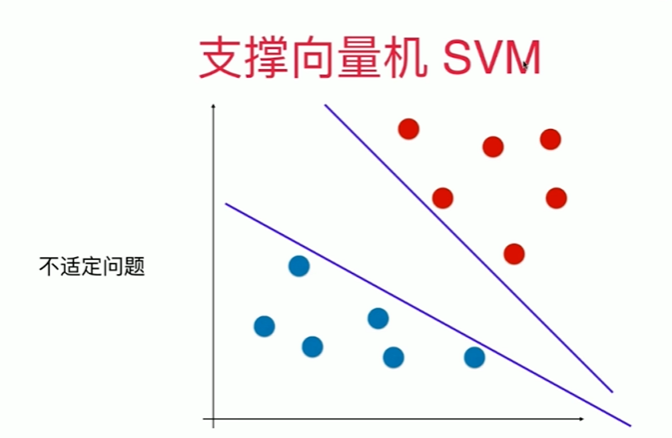

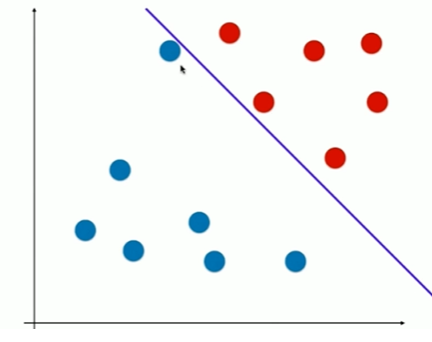

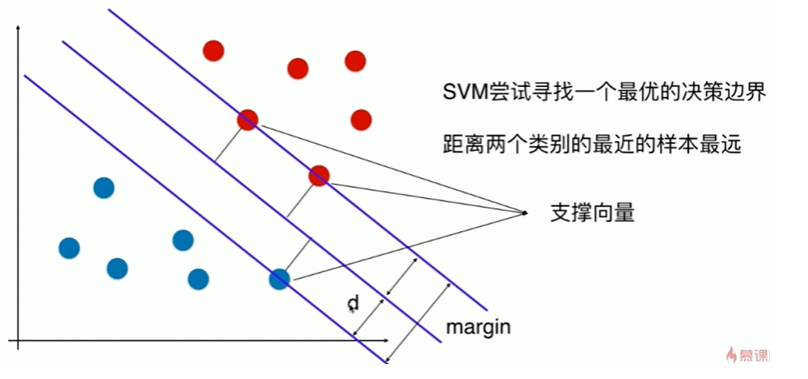

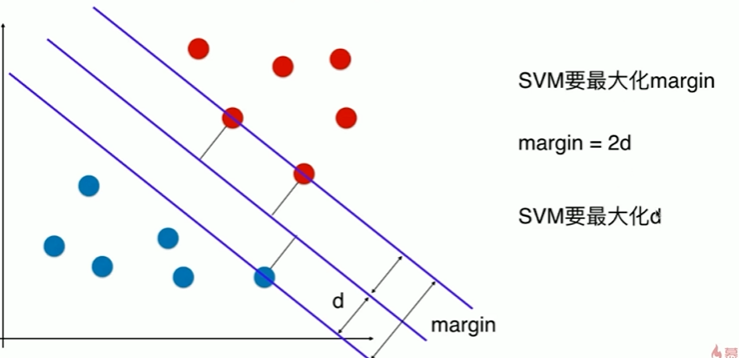

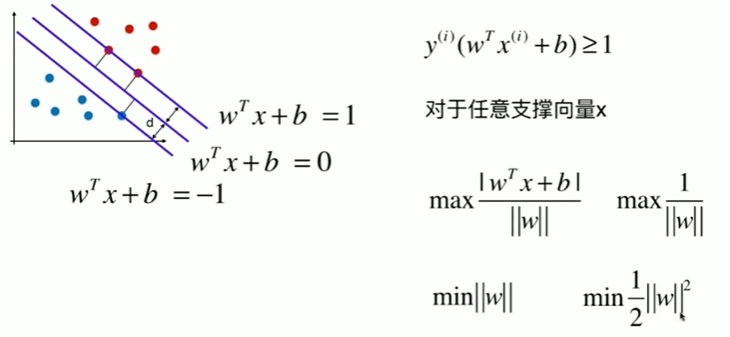

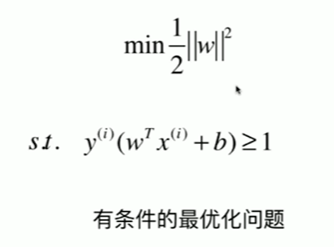

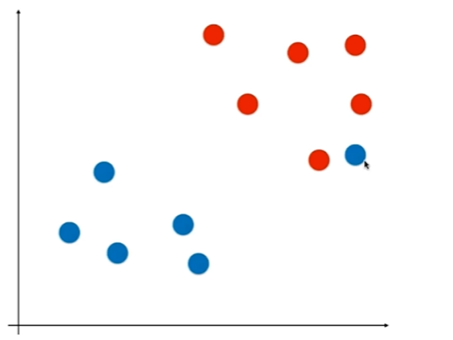

11-1 What Is a Support Vector Machine

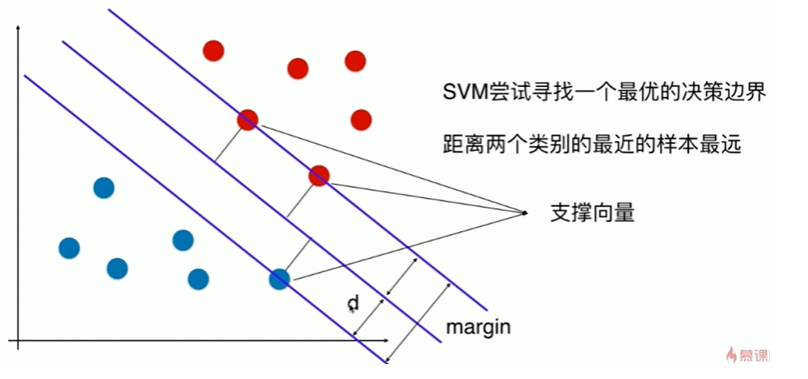

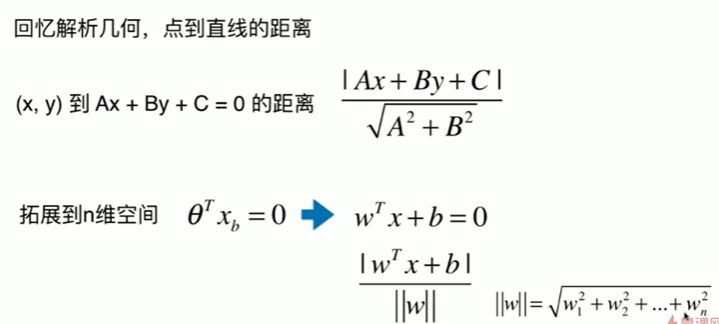

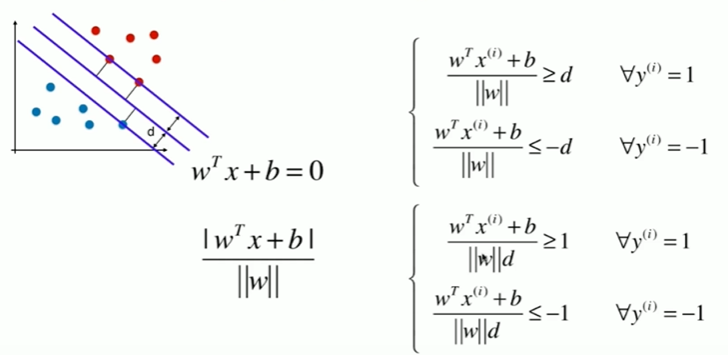

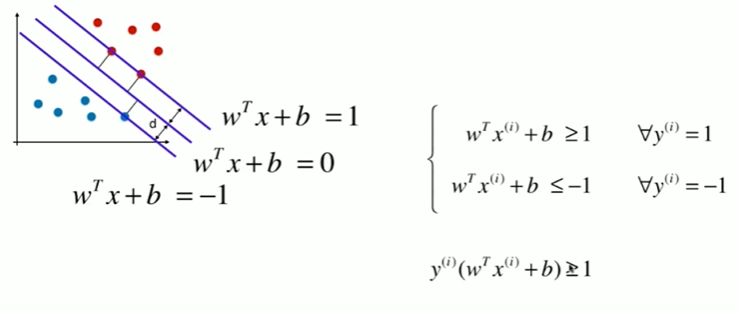

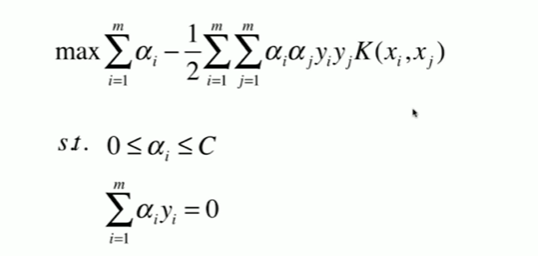

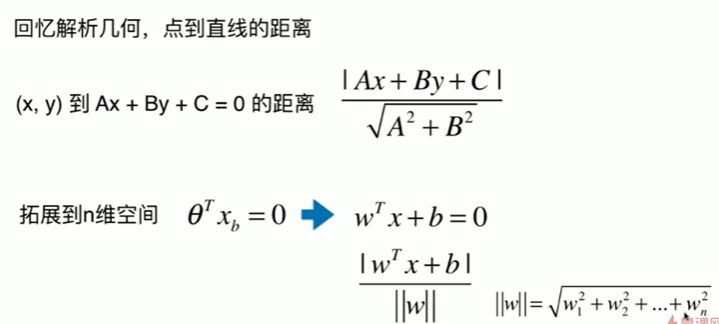

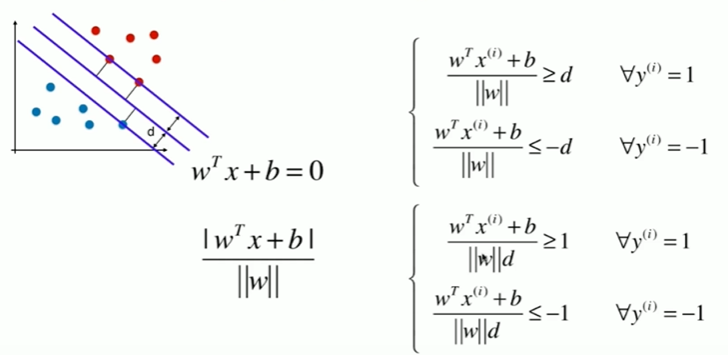

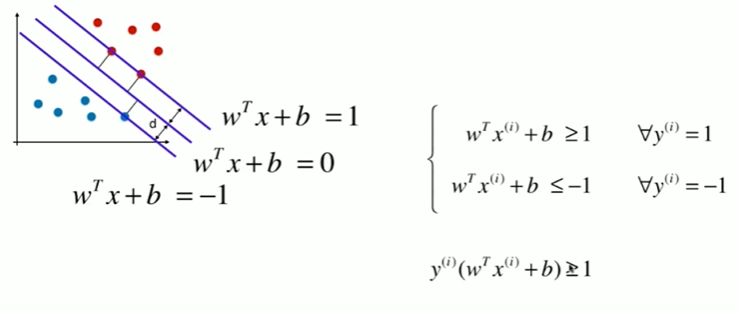

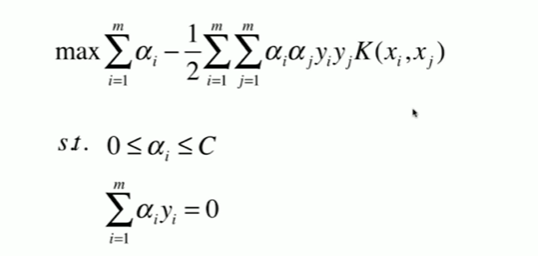

11-2 Deriving the SVM Objective Function

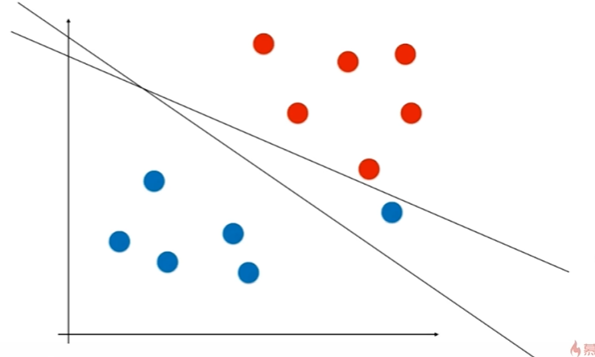

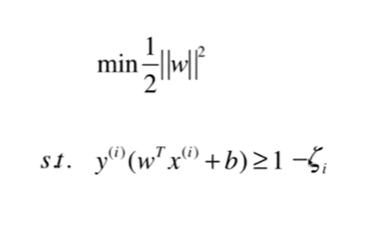

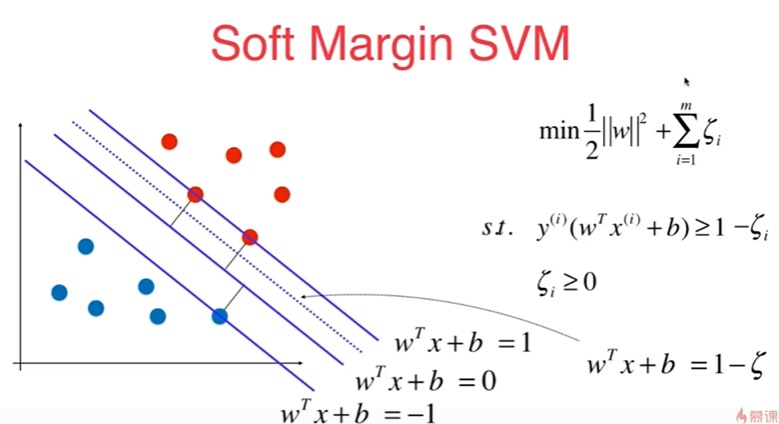

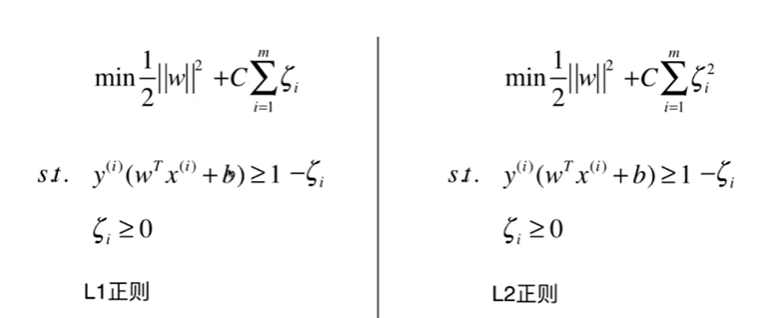

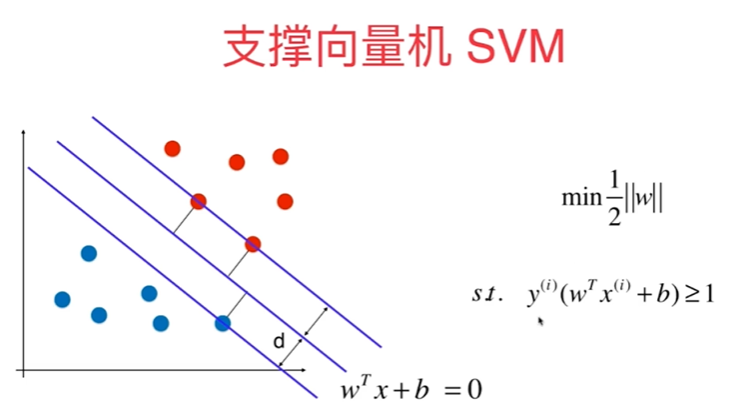

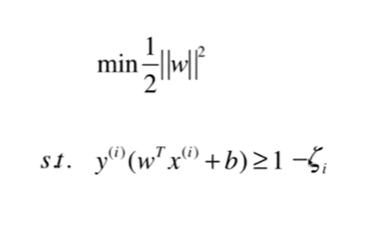

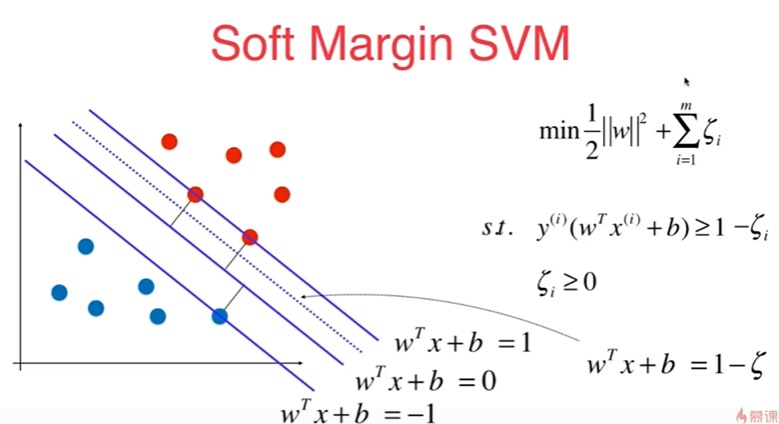

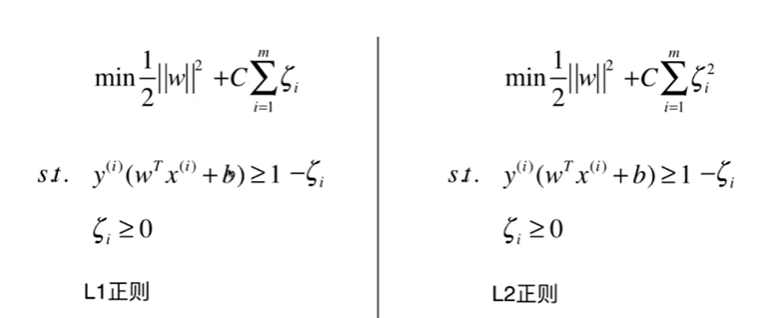
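The images above walk through the derivation. As a compact summary, assuming the usual conventions of labels $y^{(i)} \in \{-1, +1\}$ and a decision boundary $w^T x + b = 0$ (the notation is this summary's and may differ slightly from the slides):

```latex
\begin{aligned}
&\text{distance from } x \text{ to the hyperplane } w^T x + b = 0: && d = \frac{|w^T x + b|}{\|w\|} \\
&\text{rescale } w, b \text{ so that the support vectors satisfy } |w^T x + b| = 1: && y^{(i)}\,(w^T x^{(i)} + b) \ge 1 \quad \forall i \\
&\text{margin between the lines } w^T x + b = \pm 1: && \frac{2}{\|w\|} \\
&\text{maximizing the margin is equivalent to}: && \min_{w,\,b}\ \tfrac{1}{2}\|w\|^2 \quad \text{s.t. } y^{(i)}\,(w^T x^{(i)} + b) \ge 1
\end{aligned}
```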

11-3 Soft Margin and SVM Regularization

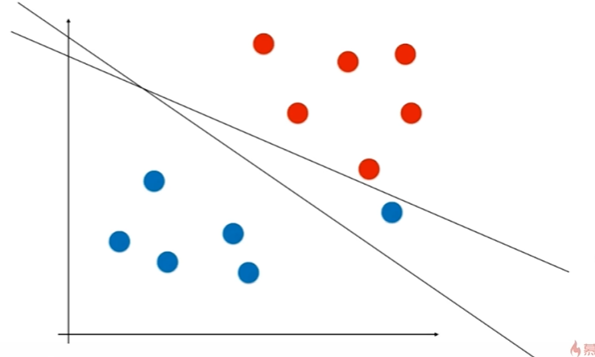
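Soft margin relaxes the hard-margin constraint with slack variables ζᵢ ≥ 0 and a hyperparameter C: minimize ½‖w‖² + C Σ ζᵢ subject to yᵢ(wᵀxᵢ + b) ≥ 1 − ζᵢ. The smallest valid slack is the hinge loss max(0, 1 − yᵢ(wᵀxᵢ + b)), so the objective can be evaluated directly. The NumPy sketch below (function name and toy data are hypothetical) shows that a larger C makes the same violation more expensive, which is why a very large C such as `LinearSVC(C=1e9)` behaves almost like a hard-margin SVM. Note that scikit-learn's `LinearSVC` uses the squared hinge loss by default, so its exact objective differs slightly from this L1 form.

```python
import numpy as np

def soft_margin_objective(w, b, X, y, C):
    """Soft-margin SVM objective (sketch): 0.5*||w||^2 + C * sum(zeta_i),
    where zeta_i = max(0, 1 - y_i * (w . x_i + b)) is the slack (hinge loss).
    Labels y are assumed to be in {-1, +1}."""
    margins = y * (X @ w + b)                 # functional margin of each sample
    zeta = np.maximum(0.0, 1.0 - margins)     # slack needed so that y_i(w.x_i + b) >= 1 - zeta_i
    return 0.5 * np.dot(w, w) + C * zeta.sum()

# hypothetical toy data: the last point lies inside the margin
X = np.array([[2.0, 0.0], [-2.0, 0.0], [0.5, 0.0]])
y = np.array([1, -1, 1])
w = np.array([1.0, 0.0])
b = 0.0

for C in (0.01, 1.0, 1e9):
    # the margin term is fixed at 0.5; only the penalty on the violation grows with C
    print(C, soft_margin_objective(w, b, X, y, C))
```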

11-4 SVM in scikit-learn

Notebook example

Notebook source

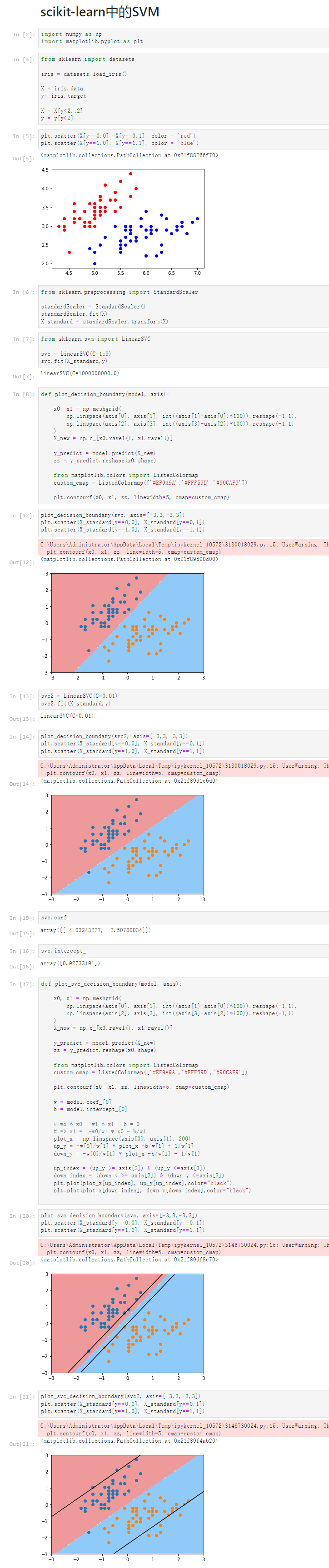

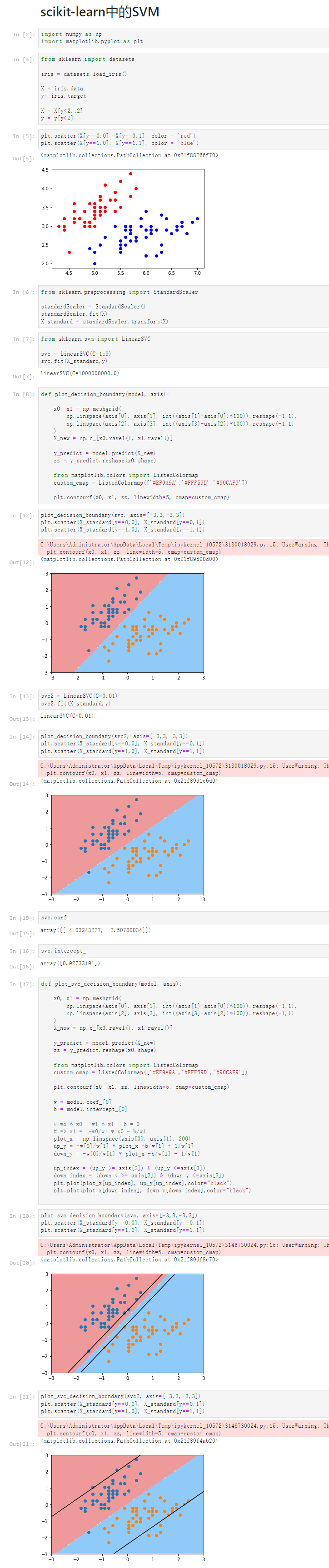

SVM in scikit-learn

[2]

import numpy as np

import matplotlib.pyplot as plt

[4]

from sklearn import datasets

iris = datasets.load_iris()

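X = iris.data
y = iris.target
# keep only classes 0 and 1 (a binary problem) and the first two features, so the data can be plotted in 2-D
X = X[y<2,:2]
y = y[y<2]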

[5]

plt.scatter(X[y==0,0], X[y==0,1], color = 'red')

plt.scatter(X[y==1,0], X[y==1,1], color = 'blue')


[6]

from sklearn.preprocessing import StandardScaler

standardScaler = StandardScaler()

standardScaler.fit(X)

X_standard = standardScaler.transform(X)
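Standardizing matters for SVM because the margin is a Euclidean distance: a feature with a much larger numeric range would dominate it. `StandardScaler` rescales each column to zero mean and unit variance. A minimal NumPy sketch of the same computation (the `standardize` helper and the data are hypothetical; like `StandardScaler`, it uses the population standard deviation):

```python
import numpy as np

def standardize(X):
    """Same transform StandardScaler applies: (X - column mean) / column std (ddof=0)."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    return (X - mean) / std

# hypothetical 2-feature data on very different scales
X = np.array([[1.0, 100.0], [2.0, 300.0], [3.0, 200.0]])
Z = standardize(X)
print(Z.mean(axis=0), Z.std(axis=0))   # approximately [0, 0] and [1, 1]
```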

[7]

from sklearn.svm import LinearSVC

svc = LinearSVC(C=1e9)  # very large C: margin violations are heavily penalized, approximating a hard-margin SVM

svc.fit(X_standard,y)

LinearSVC(C=1000000000.0)

[8]

def plot_decision_boundary(model, axis):
    # build a dense grid covering axis = [x0_min, x0_max, x1_min, x1_max]
    x0, x1 = np.meshgrid(
        np.linspace(axis[0], axis[1], int((axis[1]-axis[0])*100)).reshape(-1,1),
        np.linspace(axis[2], axis[3], int((axis[3]-axis[2])*100)).reshape(-1,1)
    )
    X_new = np.c_[x0.ravel(), x1.ravel()]
    # predict a class for every grid point and color the resulting regions
    y_predict = model.predict(X_new)
    zz = y_predict.reshape(x0.shape)
    from matplotlib.colors import ListedColormap
    custom_cmap = ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])
    plt.contourf(x0, x1, zz, cmap=custom_cmap)  # contourf ignores linewidth, so it is omitted

[12]

plot_decision_boundary(svc, axis=[-3,3,-3,3])

plt.scatter(X_standard[y==0,0], X_standard[y==0,1])

plt.scatter(X_standard[y==1,0], X_standard[y==1,1])


[13]

svc2 = LinearSVC(C=0.01)  # small C: more margin violations are tolerated, i.e. a softer margin

svc2.fit(X_standard,y)

LinearSVC(C=0.01)

[14]

plot_decision_boundary(svc2, axis=[-3,3,-3,3])

plt.scatter(X_standard[y==0,0], X_standard[y==0,1])

plt.scatter(X_standard[y==1,0], X_standard[y==1,1])


[15]

svc.coef_

array([[ 4.03243277, -2.50700034]])

[16]

svc.intercept_

array([0.92733191])
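`coef_` holds w and `intercept_` holds b of the decision function w·x + b in the standardized feature space. The sketch below reuses the two printed values to show how the boundary and the two margin lines drawn by `plot_svc_decision_boundary` follow from them, and that the distance between the margin lines is 2/‖w‖. The helper names are hypothetical.

```python
import numpy as np

# values copied from the svc.coef_ / svc.intercept_ outputs above (C=1e9 model, standardized features)
w = np.array([4.03243277, -2.50700034])
b = 0.92733191

def decision_value(x):
    """Signed score w.x + b; a binary LinearSVC predicts the second class when it is > 0."""
    return np.dot(w, x) + b

def boundary_x1(x0, offset=0.0):
    """Solve w0*x0 + w1*x1 + b = offset for x1 (offset 0: boundary, +1/-1: margin lines)."""
    return (offset - b - w[0] * x0) / w[1]

margin_width = 2 / np.linalg.norm(w)   # distance between the two margin lines
print(margin_width)

x0 = 0.5
for offset in (-1.0, 0.0, 1.0):
    point = np.array([x0, boundary_x1(x0, offset)])
    print(offset, decision_value(point))   # recovers the offset, up to rounding
```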

[26]

def plot_svc_decision_boundary(model, axis):
    x0, x1 = np.meshgrid(
        np.linspace(axis[0], axis[1], int((axis[1]-axis[0])*100)).reshape(-1,1),
        np.linspace(axis[2], axis[3], int((axis[3]-axis[2])*100)).reshape(-1,1)
    )
    X_new = np.c_[x0.ravel(), x1.ravel()]
    y_predict = model.predict(X_new)
    zz = y_predict.reshape(x0.shape)
    from matplotlib.colors import ListedColormap
    custom_cmap = ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])
    plt.contourf(x0, x1, zz, cmap=custom_cmap)

    w = model.coef_[0]
    b = model.intercept_[0]
    # decision boundary: w0 * x0 + w1 * x1 + b = 0
    # => x1 = -w0/w1 * x0 - b/w1
    # margin lines: w0 * x0 + w1 * x1 + b = +1 or -1, i.e. shifted by +1/w1 or -1/w1
    plot_x = np.linspace(axis[0], axis[1], 200)
    up_y = -w[0]/w[1] * plot_x - b/w[1] + 1/w[1]
    down_y = -w[0]/w[1] * plot_x - b/w[1] - 1/w[1]
    # keep only the parts of the margin lines that fall inside the plotting window
    up_index = (up_y >= axis[2]) & (up_y <= axis[3])
    down_index = (down_y >= axis[2]) & (down_y <= axis[3])
    plt.plot(plot_x[up_index], up_y[up_index], color="black")
    plt.plot(plot_x[down_index], down_y[down_index], color="black")

[27]

plot_svc_decision_boundary(svc, axis=[-3,3,-3,3])

plt.scatter(X_standard[y==0,0], X_standard[y==0,1])

plt.scatter(X_standard[y==1,0], X_standard[y==1,1])


[21]

plot_svc_decision_boundary(svc2, axis=[-3,3,-3,3])

plt.scatter(X_standard[y==0,0], X_standard[y==0,1])

plt.scatter(X_standard[y==1,0], X_standard[y==1,1])


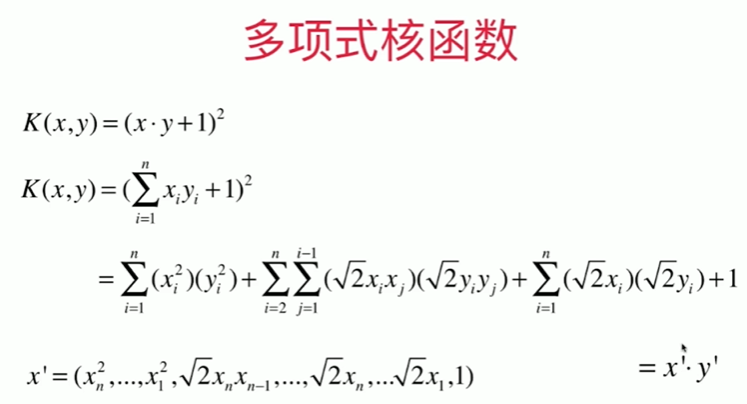

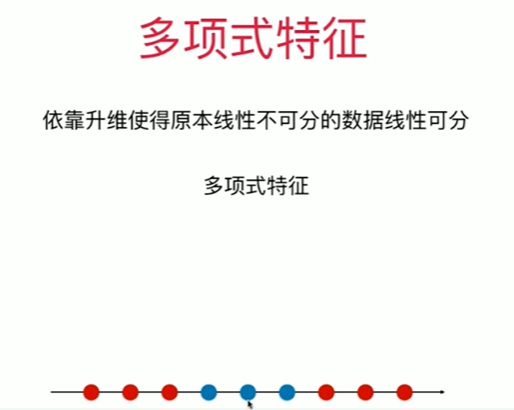

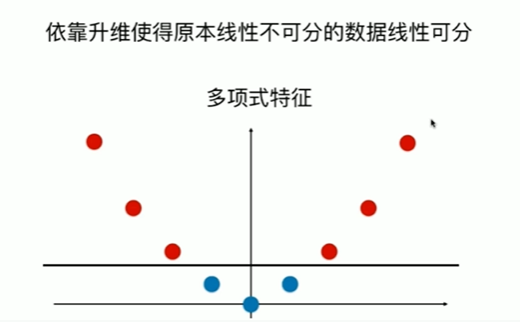

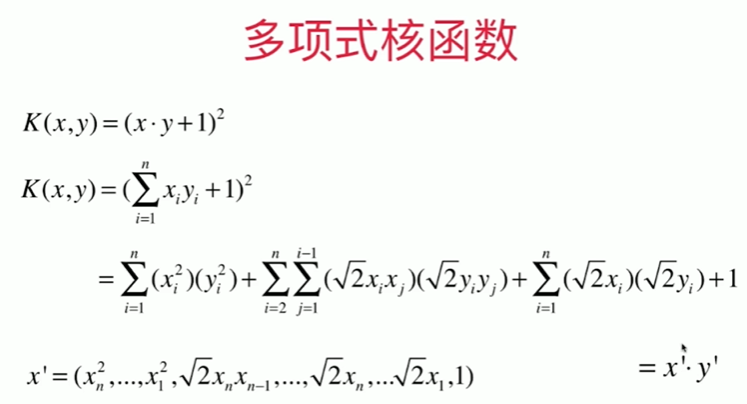

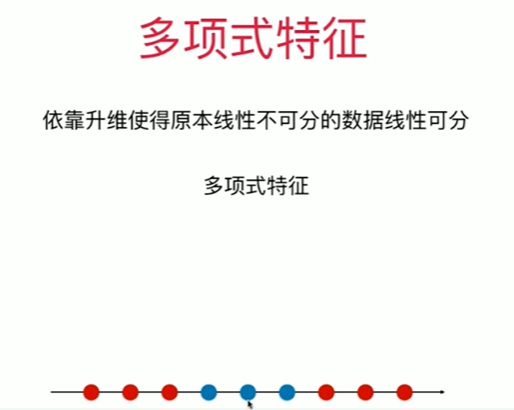

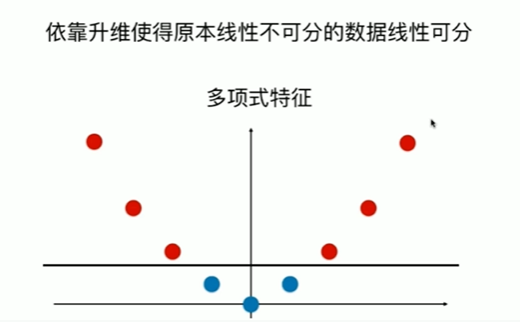

11-5 Using Polynomial Features in SVM

Notebook example

Notebook source

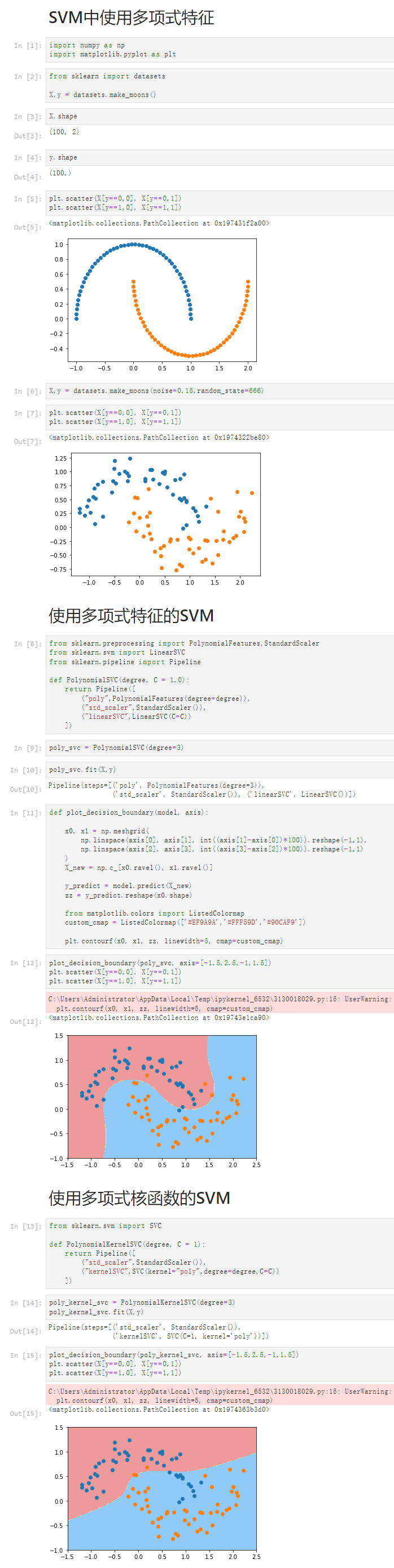

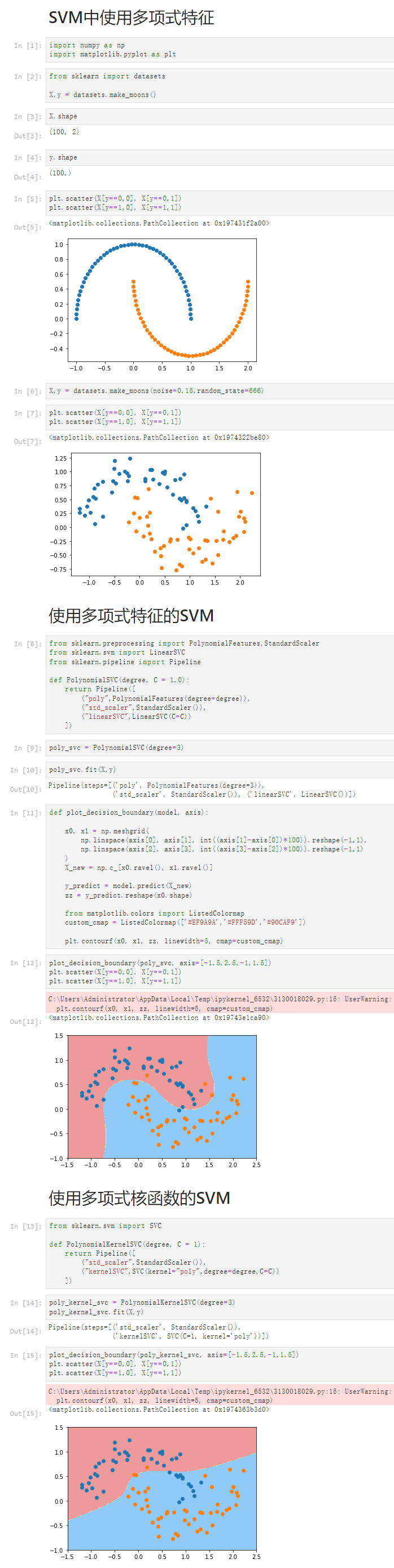

Using Polynomial Features in SVM

[1]

import numpy as np

import matplotlib.pyplot as plt

[2]

from sklearn import datasets

X,y = datasets.make_moons()

[3]

X.shape

(100, 2)

[4]

y.shape

(100,)

[5]

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


[6]

X,y = datasets.make_moons(noise=0.15,random_state=666)

[7]

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


SVM with polynomial features

[8]

from sklearn.preprocessing import PolynomialFeatures,StandardScaler

from sklearn.svm import LinearSVC

from sklearn.pipeline import Pipeline

def PolynomialSVC(degree, C = 1.0):
    # expand to polynomial features, standardize them, then fit a linear SVM in the expanded space
    return Pipeline([
        ("poly", PolynomialFeatures(degree=degree)),
        ("std_scaler", StandardScaler()),
        ("linearSVC", LinearSVC(C=C))
    ])

[9]

poly_svc = PolynomialSVC(degree=3)

[10]

poly_svc.fit(X,y)

Pipeline(steps=[('poly', PolynomialFeatures(degree=3)),

('std_scaler', StandardScaler()), ('linearSVC', LinearSVC())])
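`PolynomialFeatures(degree=3)` adds every monomial of total degree 0 to 3, and `LinearSVC` then fits a linear boundary in that expanded space; mapped back to the original two features the boundary is curved. A minimal pure-Python sketch of the expansion (the helper `polynomial_terms` is hypothetical, and its column order is not guaranteed to match scikit-learn's), whose term count matches the 10 columns `PolynomialFeatures(degree=3)` produces for two inputs with its default bias column:

```python
from itertools import combinations_with_replacement
from math import comb, prod

def polynomial_terms(x, degree):
    """Every monomial of total degree 0..degree built from the inputs in x.
    For 2 inputs and degree 3: 1, x0, x1, x0^2, x0*x1, x1^2, x0^3, x0^2*x1, x0*x1^2, x1^3."""
    terms = []
    for d in range(degree + 1):
        for idx in combinations_with_replacement(range(len(x)), d):
            terms.append(prod(x[i] for i in idx))   # the empty product (d = 0) is 1, the bias term
    return terms

features = polynomial_terms([2.0, 3.0], degree=3)
print(len(features))     # 10
print(comb(2 + 3, 3))    # 10: there are C(n + d, d) monomials for n inputs up to degree d
```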

[11]

def plot_decision_boundary(model, axis):
    # build a dense grid covering axis = [x0_min, x0_max, x1_min, x1_max]
    x0, x1 = np.meshgrid(
        np.linspace(axis[0], axis[1], int((axis[1]-axis[0])*100)).reshape(-1,1),
        np.linspace(axis[2], axis[3], int((axis[3]-axis[2])*100)).reshape(-1,1)
    )
    X_new = np.c_[x0.ravel(), x1.ravel()]
    # predict a class for every grid point and color the resulting regions
    y_predict = model.predict(X_new)
    zz = y_predict.reshape(x0.shape)
    from matplotlib.colors import ListedColormap
    custom_cmap = ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])
    plt.contourf(x0, x1, zz, cmap=custom_cmap)  # contourf ignores linewidth, so it is omitted

[12]

plot_decision_boundary(poly_svc, axis=[-1.5,2.5,-1,1.5])

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


SVM with a polynomial kernel

[13]

from sklearn.svm import SVC

def PolynomialKernelSVC(degree, C = 1):
    # no explicit feature expansion: the polynomial kernel handles it inside SVC
    return Pipeline([
        ("std_scaler", StandardScaler()),
        ("kernelSVC", SVC(kernel="poly", degree=degree, C=C))
    ])

[14]

poly_kernel_svc = PolynomialKernelSVC(degree=3)

poly_kernel_svc.fit(X,y)

Pipeline(steps=[('std_scaler', StandardScaler()),

('kernelSVC', SVC(C=1, kernel='poly'))])
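`SVC(kernel="poly")` does not build polynomial features explicitly; it evaluates a polynomial kernel. Per the scikit-learn documentation the kernel is K(x, x') = (γ⟨x, x'⟩ + r)^d, with d = `degree` (default 3), r = `coef0` (default 0.0), and the default `gamma='scale'` meaning γ = 1 / (n_features · X.var()). A NumPy sketch of that formula (helper names and data are hypothetical). Because `coef0` defaults to 0, this kernel contains only degree-3 terms, unlike the `PolynomialFeatures` pipeline above, which also keeps the lower-degree ones.

```python
import numpy as np

def poly_kernel(X1, X2, degree=3, gamma=1.0, coef0=0.0):
    """K(x, x') = (gamma * <x, x'> + coef0) ** degree for every pair of rows."""
    return (gamma * X1 @ X2.T + coef0) ** degree

def scale_gamma(X):
    """gamma='scale' as documented for SVC: 1 / (n_features * X.var())."""
    return 1.0 / (X.shape[1] * X.var())

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 2))          # hypothetical data standing in for the moons samples
gamma = scale_gamma(X)
K = poly_kernel(X, X, degree=3, gamma=gamma)   # SVC defaults: degree=3, coef0=0.0
print(K.shape)   # (5, 5)

# after StandardScaler every column has variance 1, so gamma='scale' gives 1 / n_features
X_std = (X - X.mean(axis=0)) / X.std(axis=0)
print(scale_gamma(X_std))   # 0.5 for two features, up to rounding
```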

[15]

plot_decision_boundary(poly_kernel_svc, axis=[-1.5,2.5,-1,1.5])

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


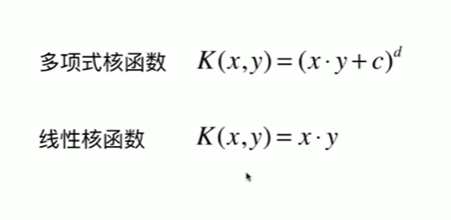

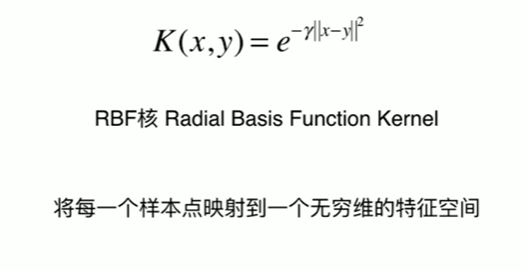

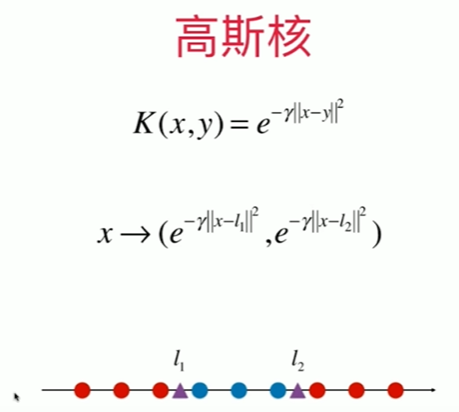

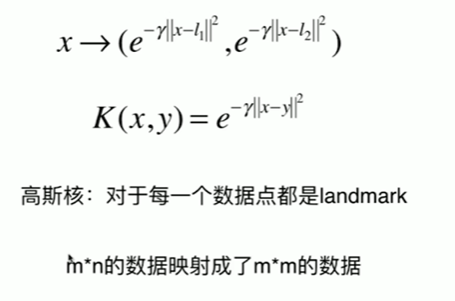

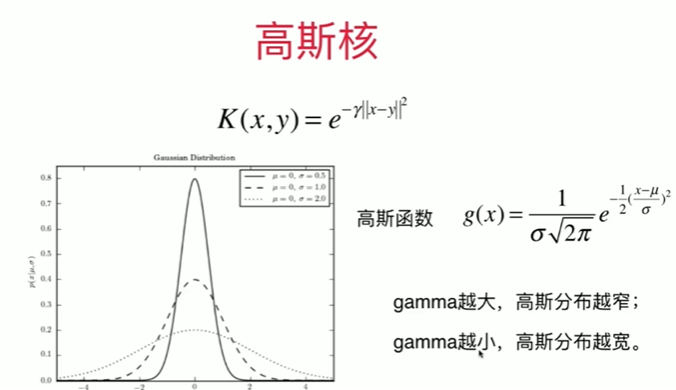

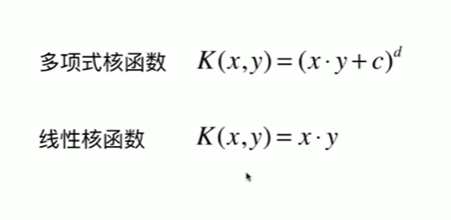

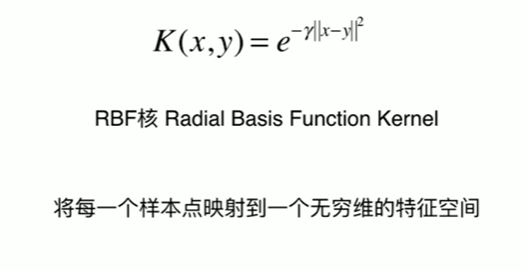

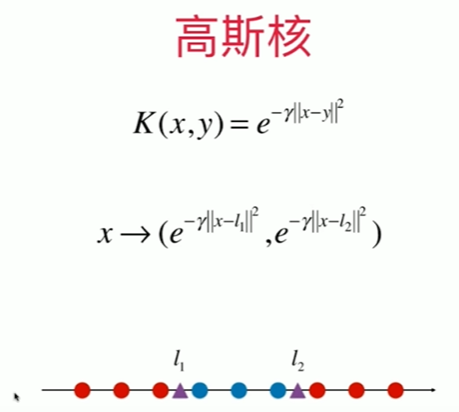

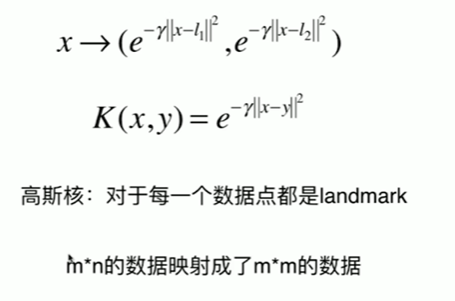

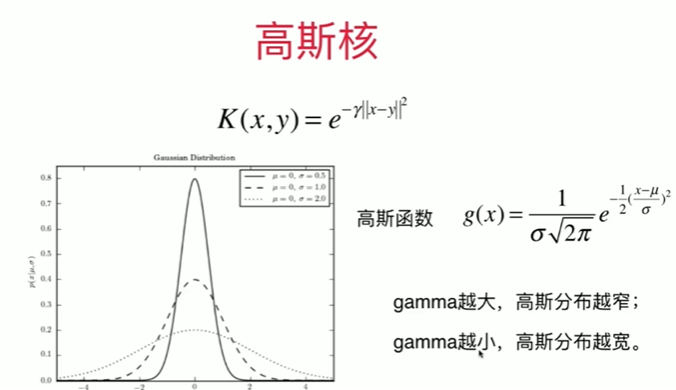

11-6 Kernel Functions
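The key idea behind kernel functions: in the SVM's dual problem the samples appear only through dot products xᵢ·xⱼ, so a kernel K(x, y) can return the dot product of transformed features φ(x)·φ(y) without ever constructing φ. A small numeric check for the degree-2 polynomial kernel (x·y + 1)² on 2-D inputs, whose explicit feature map is written out below (the function names and sample points are hypothetical):

```python
import numpy as np

def phi(x):
    """Explicit feature map whose dot product equals (x . y + 1)^2 for 2-D inputs."""
    x1, x2 = x
    s = np.sqrt(2.0)
    return np.array([x1**2, x2**2, s * x1 * x2, s * x1, s * x2, 1.0])

def kernel(x, y):
    """Degree-2 polynomial kernel: the same value, computed without building phi."""
    return (np.dot(x, y) + 1.0) ** 2

x = np.array([1.0, 2.0])
y = np.array([3.0, -1.0])
print(np.dot(phi(x), phi(y)), kernel(x, y))   # equal up to floating-point rounding
```

The kernel needs one 2-D dot product, while the explicit route needs a 6-D map per sample; for higher degrees or the Gaussian kernel (whose feature space is infinite-dimensional) only the kernel route is practical.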

11-7 The Gaussian Kernel

Notebook example

Notebook source

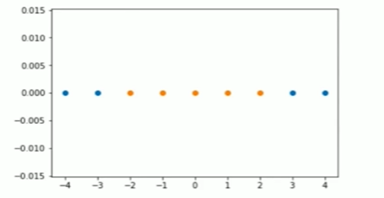

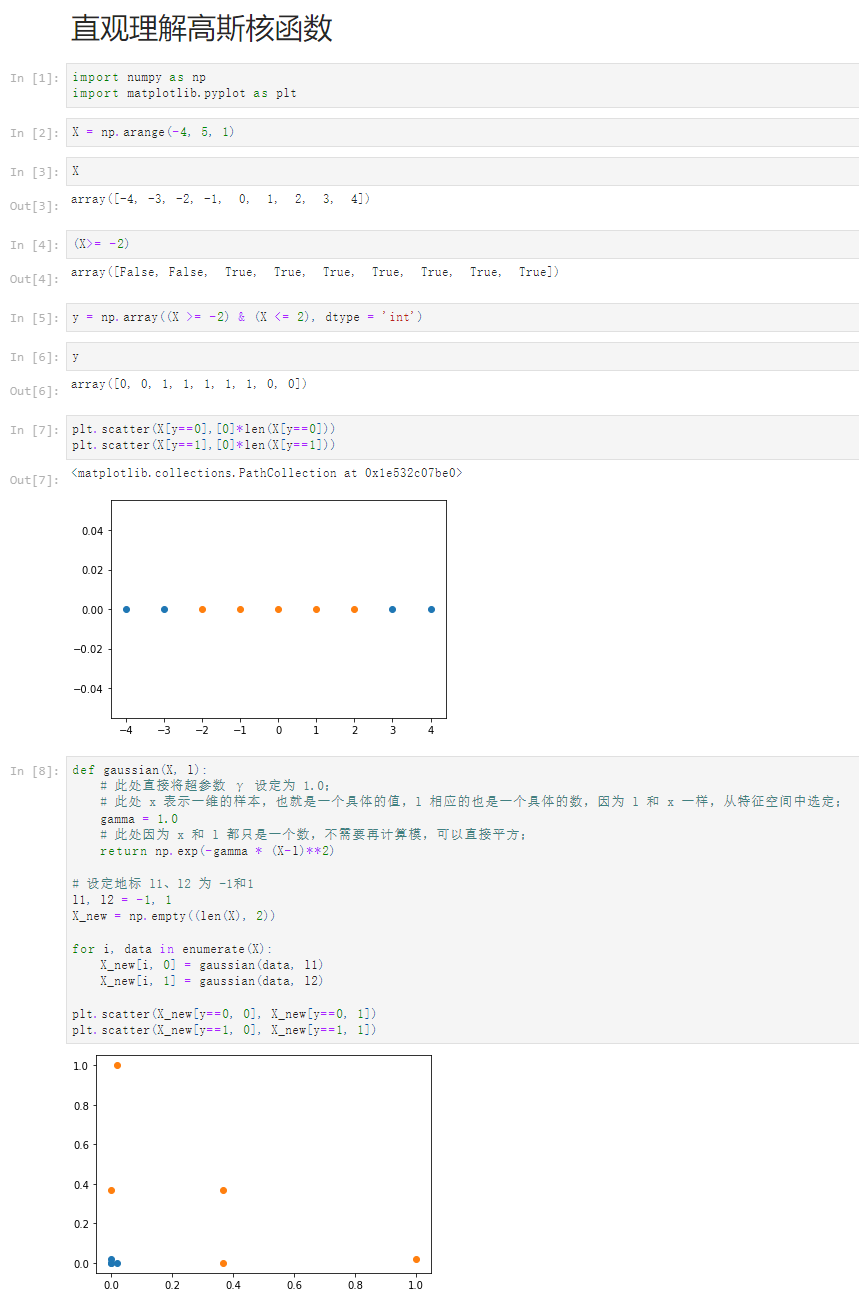

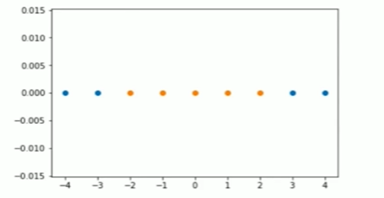

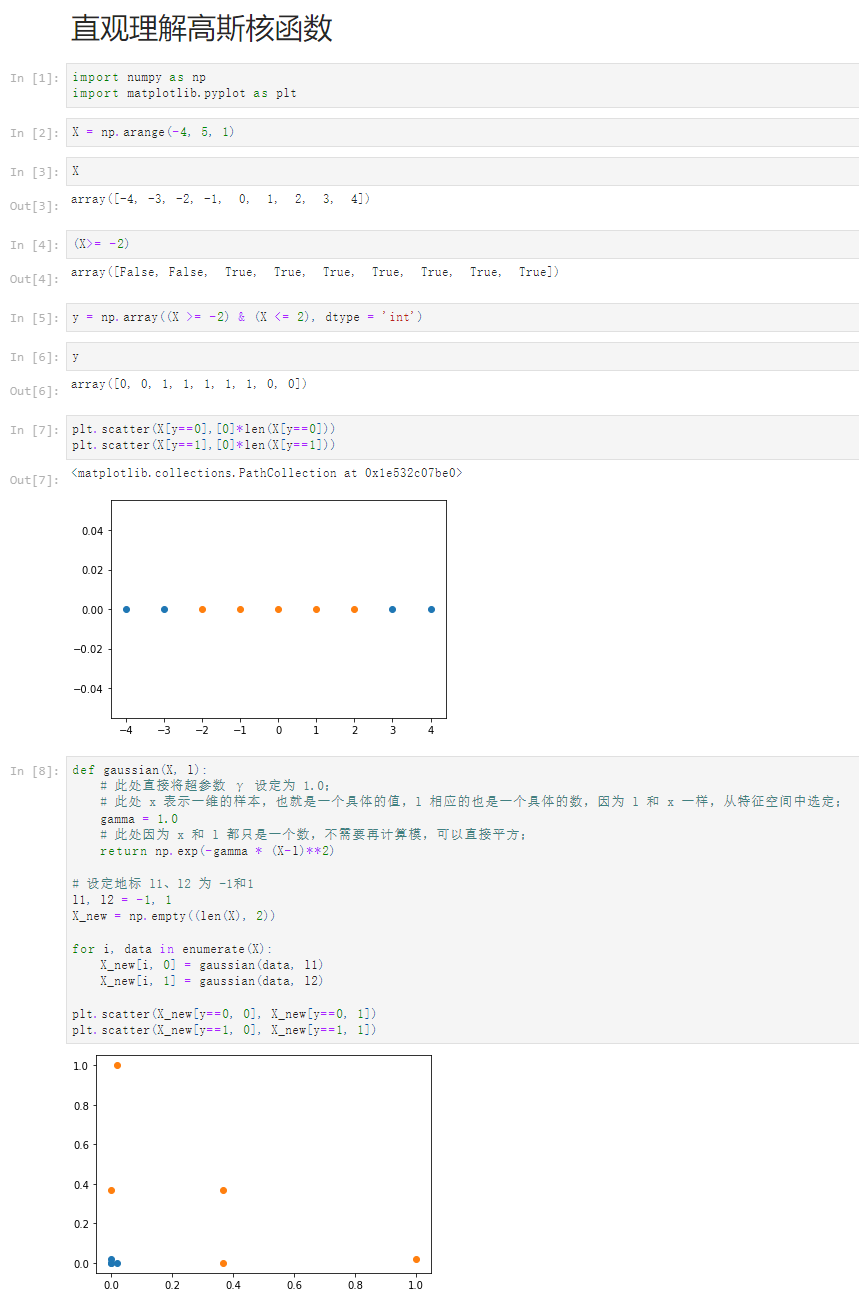

An intuitive look at the Gaussian kernel

[1]

import numpy as np

import matplotlib.pyplot as plt

[2]

X = np.arange(-4, 5, 1)

[3]

X

array([-4, -3, -2, -1, 0, 1, 2, 3, 4])

[4]

(X>= -2)

array([False, False, True, True, True, True, True, True, True])

[5]

y = np.array((X >= -2) & (X <= 2), dtype = 'int')

[6]

y

array([0, 0, 1, 1, 1, 1, 1, 0, 0])

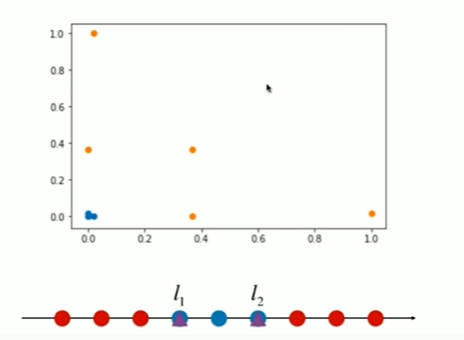

[7]

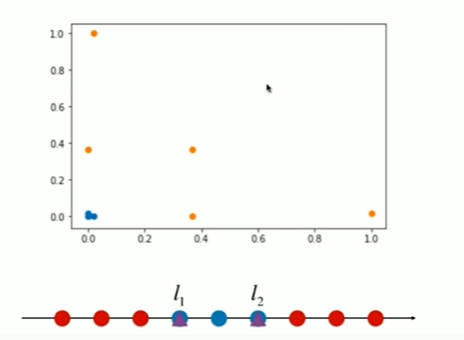

plt.scatter(X[y==0],[0]*len(X[y==0]))

plt.scatter(X[y==1],[0]*len(X[y==1]))


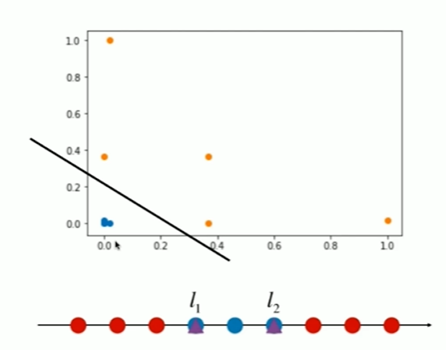

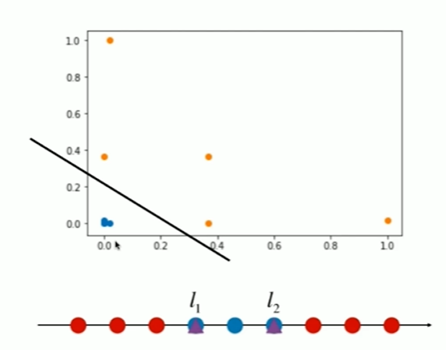

[8]

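def gaussian(x, l):
    # Here the hyperparameter γ is simply fixed at 1.0.
    # x is a one-dimensional sample, i.e. a single number; the landmark l is likewise a single
    # number, because, like x, it is chosen from the feature space.
    gamma = 1.0
    # Since x and l are both scalars, there is no norm to compute; the difference is squared directly.
    return np.exp(-gamma * (x-l)**2)

# Set the landmarks l1 and l2 to -1 and 1
l1, l2 = -1, 1
# Map each 1-D sample to 2-D: (similarity to l1, similarity to l2)
X_new = np.empty((len(X), 2))
for i, data in enumerate(X):
    X_new[i, 0] = gaussian(data, l1)
    X_new[i, 1] = gaussian(data, l2)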

plt.scatter(X_new[y==0, 0], X_new[y==0, 1])

plt.scatter(X_new[y==1, 0], X_new[y==1, 1])
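The cell above applies the Gaussian kernel K(x, y) = exp(−γ‖x − y‖²) with two landmarks, turning each 1-D sample into a 2-D point. A vectorized sketch of the same mapping (the helper `gaussian_features` is hypothetical, and the threshold 0.2 is hand-picked for this toy data, not a fitted value) confirms that the two classes, which no single threshold on x can separate, become separable by the straight line f1 + f2 = 0.2 in the new space:

```python
import numpy as np

def gaussian_features(x, landmarks, gamma=1.0):
    """Map each scalar sample to [exp(-gamma * (x - l)^2) for each landmark l]."""
    x = np.asarray(x, dtype=float).reshape(-1, 1)
    l = np.asarray(landmarks, dtype=float).reshape(1, -1)
    return np.exp(-gamma * (x - l) ** 2)   # broadcasting replaces the explicit loop

X = np.arange(-4, 5, 1)
y = np.array((X >= -2) & (X <= 2), dtype=int)
F = gaussian_features(X, landmarks=[-1, 1])

# a linear rule in the new 2-D space: predict 1 when f1 + f2 > 0.2
score = F[:, 0] + F[:, 1]
print((score > 0.2).astype(int))   # matches y
```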

11-8 The Gaussian Kernel in scikit-learn

Notebook example

Notebook source

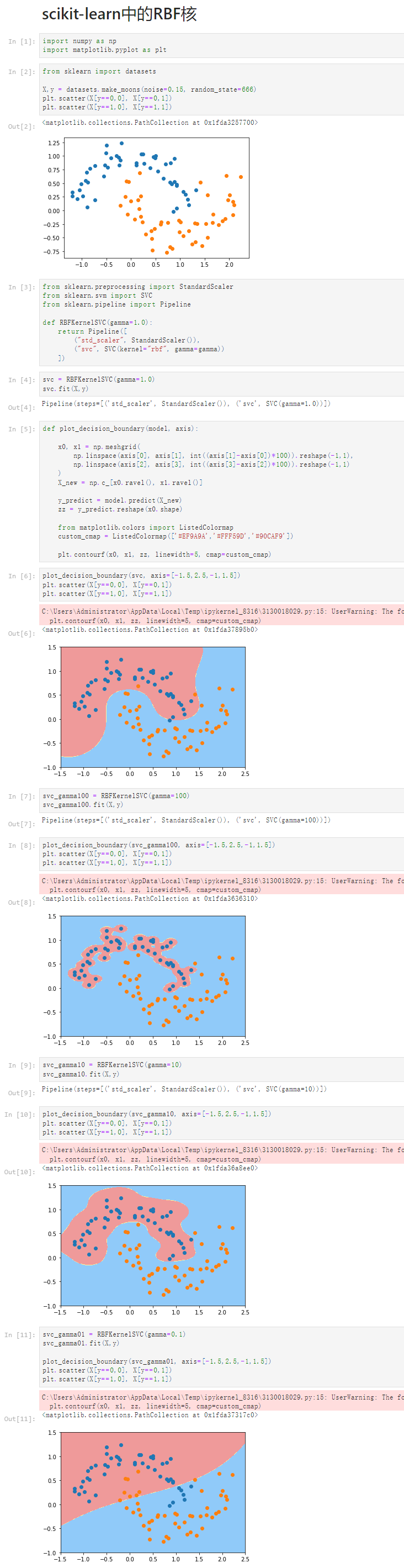

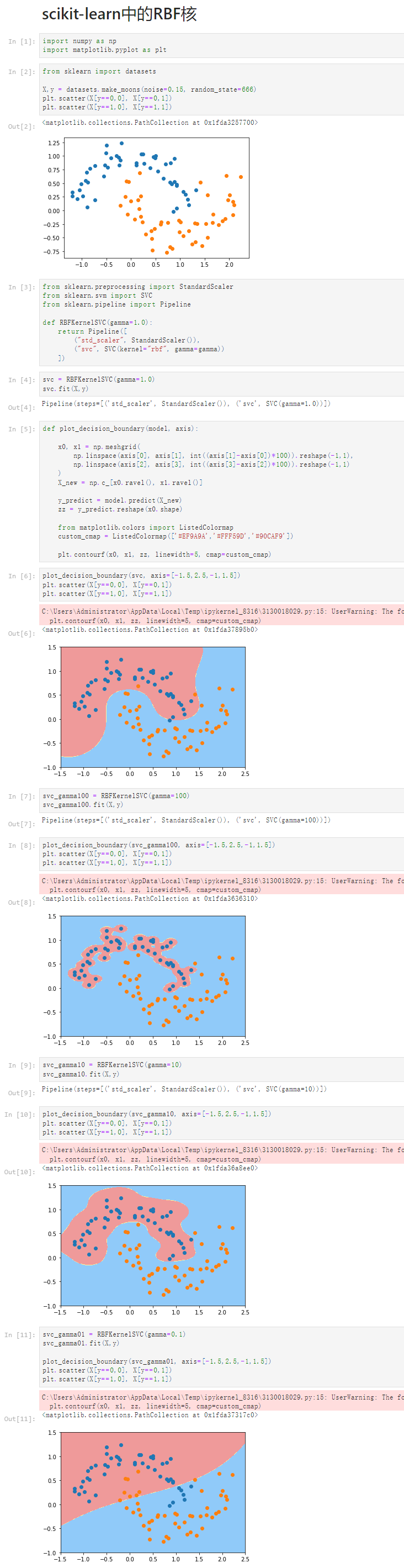

The RBF Kernel in scikit-learn

[1]

import numpy as np

import matplotlib.pyplot as plt

[2]

from sklearn import datasets

X,y = datasets.make_moons(noise=0.15, random_state=666)

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


[3]

from sklearn.preprocessing import StandardScaler

from sklearn.svm import SVC

from sklearn.pipeline import Pipeline

def RBFKernelSVC(gamma=1.0):
    # standardize, then fit an SVC with the Gaussian (RBF) kernel exp(-gamma * ||x - y||^2)
    return Pipeline([
        ("std_scaler", StandardScaler()),
        ("svc", SVC(kernel="rbf", gamma=gamma))
    ])

[4]

svc = RBFKernelSVC(gamma=1.0)

svc.fit(X,y)

Pipeline(steps=[('std_scaler', StandardScaler()), ('svc', SVC(gamma=1.0))])

[5]

def plot_decision_boundary(model, axis):
    # build a dense grid covering axis = [x0_min, x0_max, x1_min, x1_max]
    x0, x1 = np.meshgrid(
        np.linspace(axis[0], axis[1], int((axis[1]-axis[0])*100)).reshape(-1,1),
        np.linspace(axis[2], axis[3], int((axis[3]-axis[2])*100)).reshape(-1,1)
    )
    X_new = np.c_[x0.ravel(), x1.ravel()]
    # predict a class for every grid point and color the resulting regions
    y_predict = model.predict(X_new)
    zz = y_predict.reshape(x0.shape)
    from matplotlib.colors import ListedColormap
    custom_cmap = ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])
    plt.contourf(x0, x1, zz, cmap=custom_cmap)  # contourf ignores linewidth, so it is omitted

[6]

plot_decision_boundary(svc, axis=[-1.5,2.5,-1,1.5])

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


[7]

svc_gamma100 = RBFKernelSVC(gamma=100)

svc_gamma100.fit(X,y)

Pipeline(steps=[('std_scaler', StandardScaler()), ('svc', SVC(gamma=100))])

[8]

plot_decision_boundary(svc_gamma100, axis=[-1.5,2.5,-1,1.5])

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


[9]

svc_gamma10 = RBFKernelSVC(gamma=10)

svc_gamma10.fit(X,y)

Pipeline(steps=[('std_scaler', StandardScaler()), ('svc', SVC(gamma=10))])

[10]

plot_decision_boundary(svc_gamma10, axis=[-1.5,2.5,-1,1.5])

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


[11]

svc_gamma01 = RBFKernelSVC(gamma=0.1)

svc_gamma01.fit(X,y)

plot_decision_boundary(svc_gamma01, axis=[-1.5,2.5,-1,1.5])

plt.scatter(X[y==0,0], X[y==0,1])

plt.scatter(X[y==1,0], X[y==1,1])


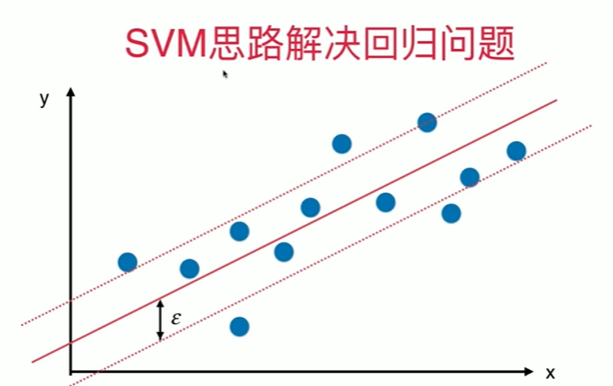

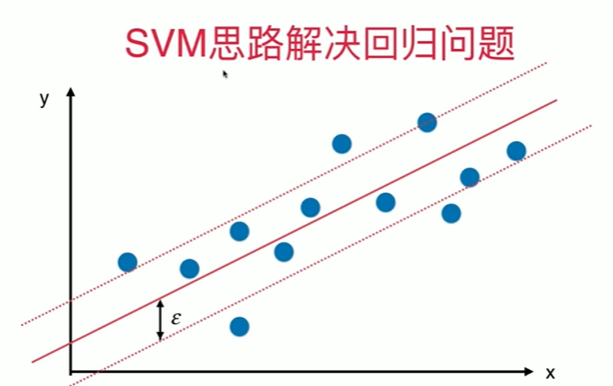
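The experiments vary γ, which controls how far each training sample's influence reaches: the similarity exp(−γ‖x − y‖²) between two fixed points collapses as γ grows. With γ = 100, points only 0.5 apart are treated as almost unrelated, so the boundary tends to wrap tightly around individual samples (risk of overfitting); with γ = 0.1 distant points still look similar and the boundary tends to be smooth and nearly linear (risk of underfitting). A small NumPy sketch with two hypothetical points:

```python
import numpy as np

def rbf(x, y, gamma):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - y||^2)."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return np.exp(-gamma * np.dot(diff, diff))

a = np.array([0.0, 0.0])
b = np.array([0.5, 0.0])   # two hypothetical points 0.5 apart in the standardized space
for gamma in (0.1, 1.0, 10.0, 100.0):
    print(gamma, rbf(a, b, gamma))   # similarity shrinks rapidly as gamma grows
```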

11-9 Solving Regression Problems with the SVM Approach

Notebook example

Notebook source

Solving regression problems with the SVM approach

[1]

import numpy as np

import matplotlib.pyplot as plt

[2]

from sklearn import datasets

boston = datasets.load_boston()

X = boston.data

y = boston.target

Running this cell printed a `FutureWarning`: `load_boston` is deprecated in scikit-learn 1.0 and removed in 1.2, because the Boston housing prices dataset has an ethical problem; the scikit-learn maintainers strongly discourage using it except to study and teach about ethical issues in data science and machine learning. On scikit-learn 1.2 or later this cell fails. The warning suggests fetching the data from the original source (http://lib.stat.cmu.edu/datasets/boston) or switching to the California housing dataset (`sklearn.datasets.fetch_california_housing`) or the Ames housing dataset (`fetch_openml(name="house_prices", as_frame=True)`). The score below was obtained on the Boston data with a version that still includes `load_boston`.

[3]

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

[4]

from sklearn.svm import LinearSVR

from sklearn.svm import SVR

from sklearn.preprocessing import StandardScaler

from sklearn.pipeline import Pipeline

def StandardLinearSVR(epsilon=0.1):
    # standardize the features, then fit a linear SVR with an epsilon-wide tolerance band
    return Pipeline([
        ("std_scaler", StandardScaler()),
        ("linearSVR", LinearSVR(epsilon=epsilon))
    ])

[5]

svr = StandardLinearSVR()

svr.fit(X_train, y_train)

Pipeline(steps=[('std_scaler', StandardScaler()),

('linearSVR', LinearSVR(epsilon=0.1))])
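SVR turns the classification idea around: instead of keeping samples outside the margin, it tries to fit as many samples as possible inside a band of half-width ε around the prediction, and only samples outside the band are penalized. The `epsilon` argument of `LinearSVR` sets that half-width (0.1 here, in the units of the target). A NumPy sketch of the ε-insensitive loss, which is `LinearSVR`'s default `loss='epsilon_insensitive'`; the sketch omits the regularization term, and the function name and values are hypothetical:

```python
import numpy as np

def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Errors within +/- epsilon cost nothing; larger errors cost |error| - epsilon."""
    return np.maximum(0.0, np.abs(y_true - y_pred) - epsilon)

y_true = np.array([3.0, 3.0, 3.0])
y_pred = np.array([3.05, 3.3, 2.0])   # inside the band, slightly outside, far outside
print(epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1))
```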

[6]

svr.score(X_test,y_test)

0.6356218812016852
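For regressors, `score` returns the coefficient of determination R² = 1 − SS_res / SS_tot, so 0.6356 means the linear SVR removes roughly 64% of the squared error that always predicting the mean of the test targets would leave. A NumPy sketch of that formula on hypothetical values:

```python
import numpy as np

def r2_score_manual(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot, the quantity a scikit-learn regressor's .score() reports."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# hypothetical values for illustration
print(r2_score_manual([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 4.0]))   # 1.0: perfect fit
print(r2_score_manual([1.0, 2.0, 3.0, 4.0], [2.5, 2.5, 2.5, 2.5]))   # 0.0: no better than the mean
```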