一、前言:

yolov5模型训练需要训练后使用pytorch训练好了模型,训练可以借鉴如下,或者上网搜索本人建议环境为 pytorch==1.13.0 opencv==3.4.1 libtorch包==1.13.0 cmake==随便

2021-1-12YOLO数据集加载_torch_distributed_zero_first_该醒醒了~的博客-CSDN博客

本篇文章主要是通过 C++ 进行模型的部署。

最终效果:

二、安装Visual Studio :

官网地址:

https://visualstudio.microsoft.com/zh-hans/vs/older-downloads/

上诉链接可能为2017推荐安装 Visual Studio Installer 2019或者2022

三、下载Opencv:

官网地址:

Home - OpenCV建议安装3.4.1

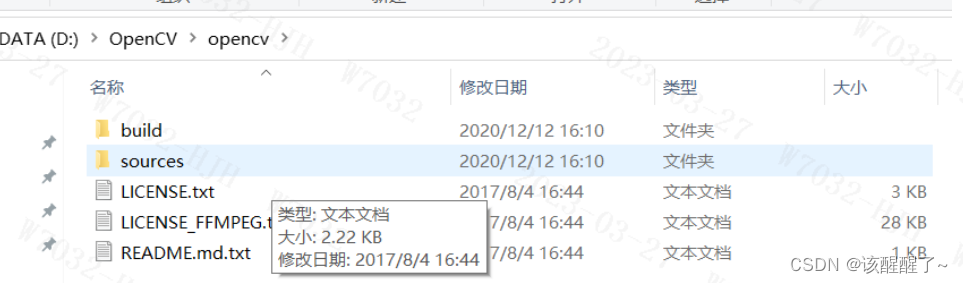

解压后有这几个东西

接着进行系统变量添加

系统变量的path 双击点开

添加如下路径 opencv 配置教程网络上都有可以查到

...\opencv\build\x64\vc15\bin

....\opencv\build\include

(省略部分为你opencv文件夹所在路径)

四、下载Libtorch:

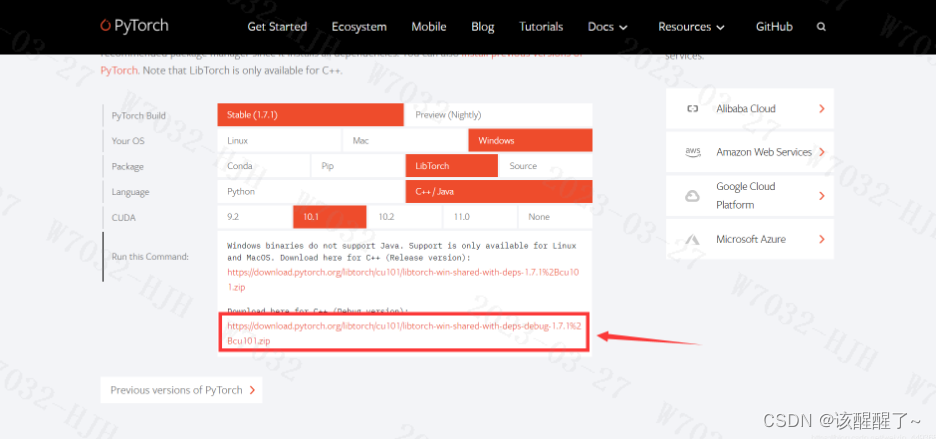

官网地址:可以在直接pytorch官网 在里面选择相对应的libtorch 如下图

下载相应版本:

解压如下

五、配置VS项目属性:

新建一个项目:

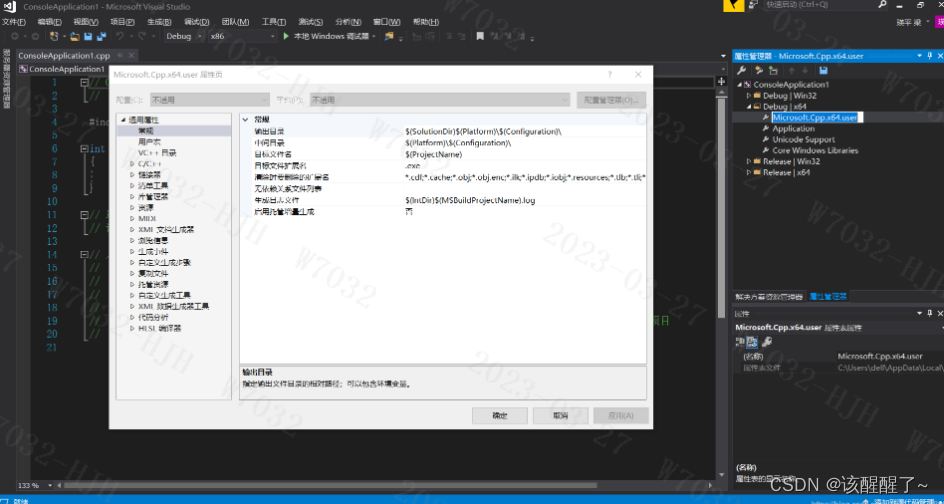

上方列表中找到(项目)点击下方的(某某属性)如下

选择 VC++目录-包含目录,添加以下路径:

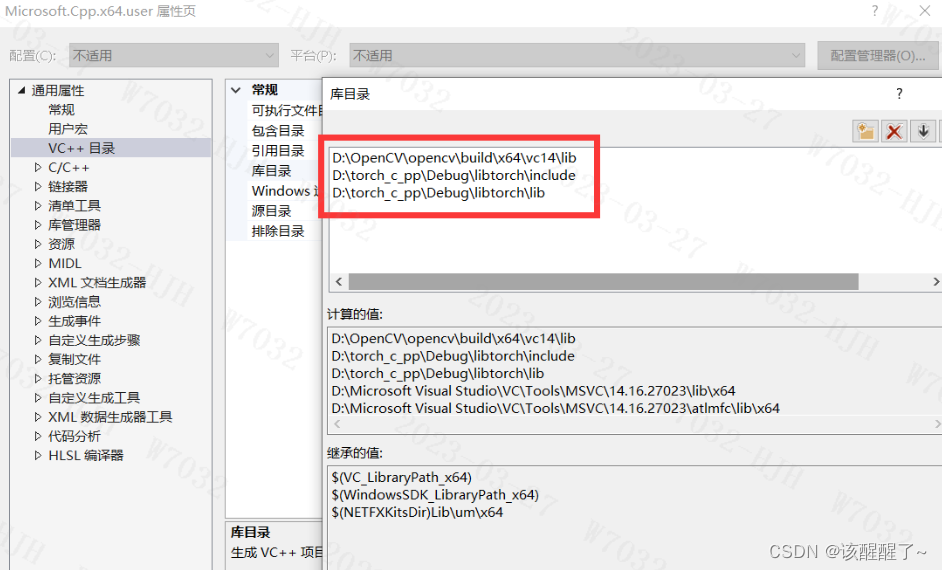

选择 VC++目录-库目录,添加以下路径:

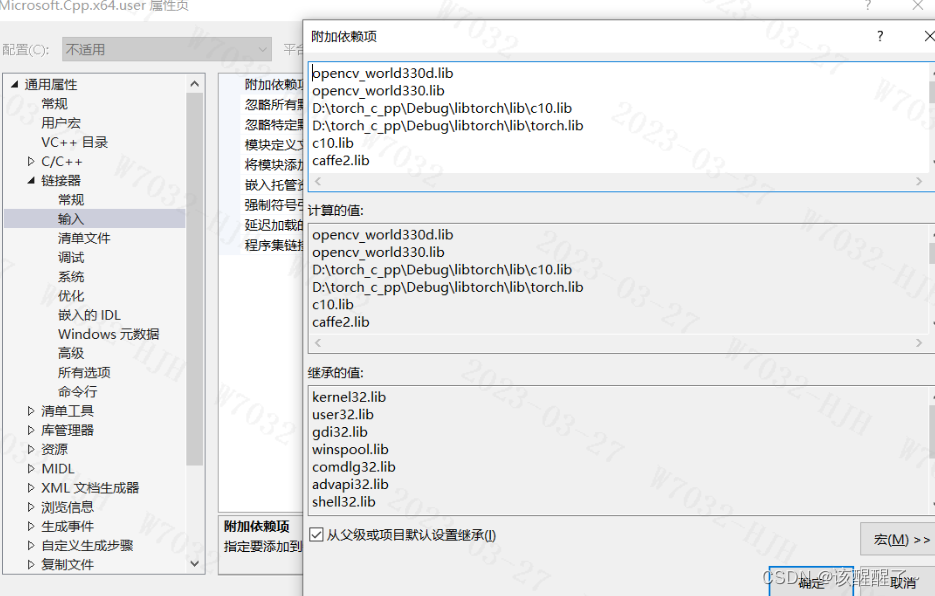

选择 链接器-输入-附加依赖项,添加以下路径:

......\opencv\build\x64\vc15\bin

........\opencv\build\x64\vc15\lib

如下文件路径如上 如果看不明白,直接上网搜,一大堆

注意如果你用的是debug项 只加入opencv_world330.lib 就是330后面没有d

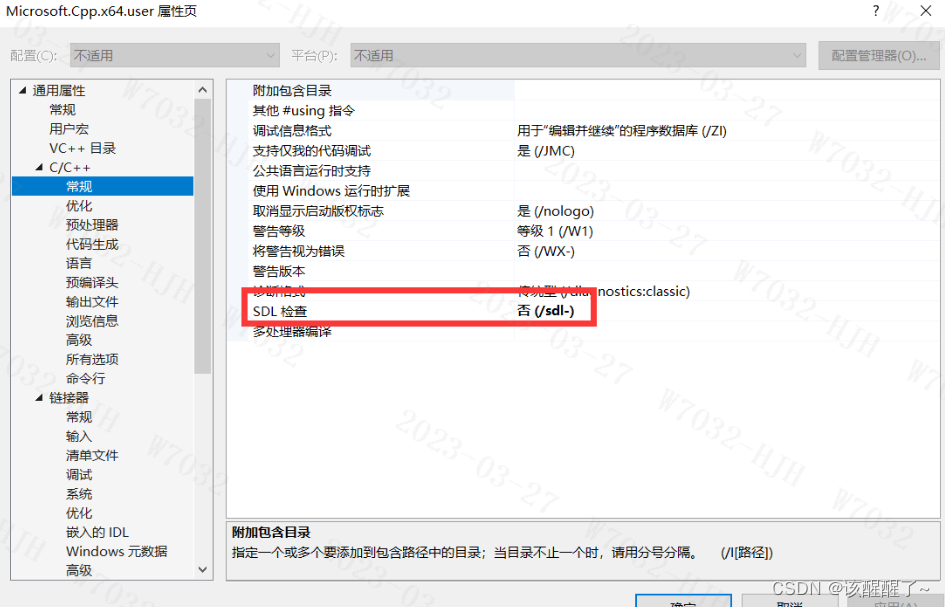

选择C/C+±常规-SDL检查,修改为”否“:

六、测试环境:

使用如下代码:

#include <torch/script.h>

#include <torch/torch.h>

#include <iostream>

#include <memory>

int main(int argc, const char* argv[]) {

std::cout << "cuda::is_available():" << torch::cuda::is_available() << std::endl;

torch::DeviceType device_type = at::kCPU; // 定义设备类型

if (torch::cuda::is_available())

device_type = at::kCUDA;

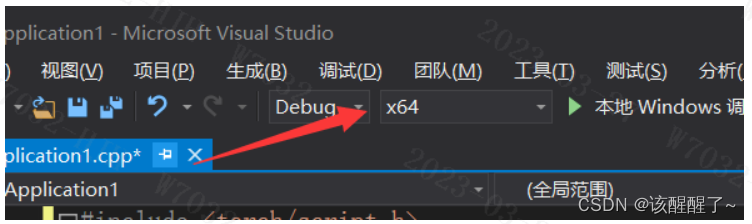

}注意如果你在debug中配置的环境就需要选择此项

结果

七、YOLOv5推理:

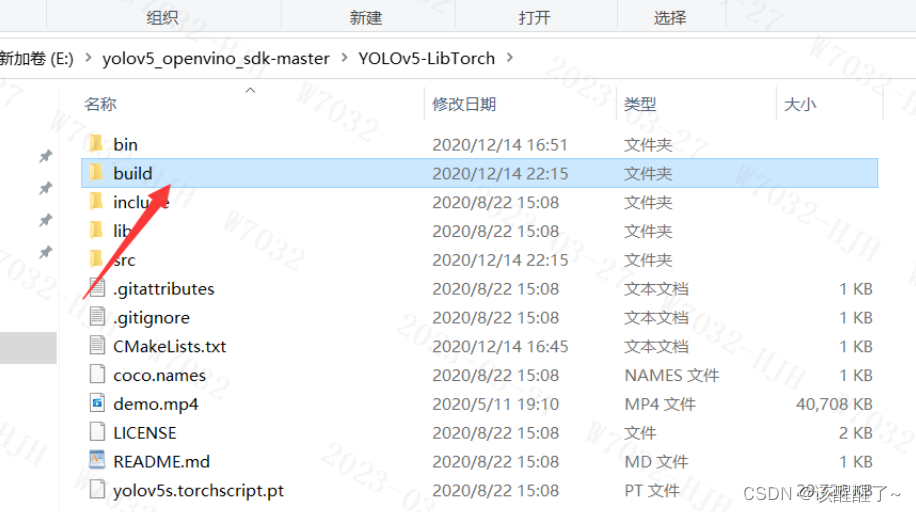

这里要注意,因为版本的原因,你下载的libtorch-yolov5可能和图中的文件存在差异

下载后解压,并创建build文件夹:

修改CMakeLists.txt:

在build文件夹中打开cmd,运行:

cmake ..注意cmake .. 中间有空格 并且此项目需要安装cmake可在浏览器上搜索下载,建议下载后缀名为.zip因为不需要安装,直接可以用,只是需要把里面的bin项添加到系统变量,方法看上面

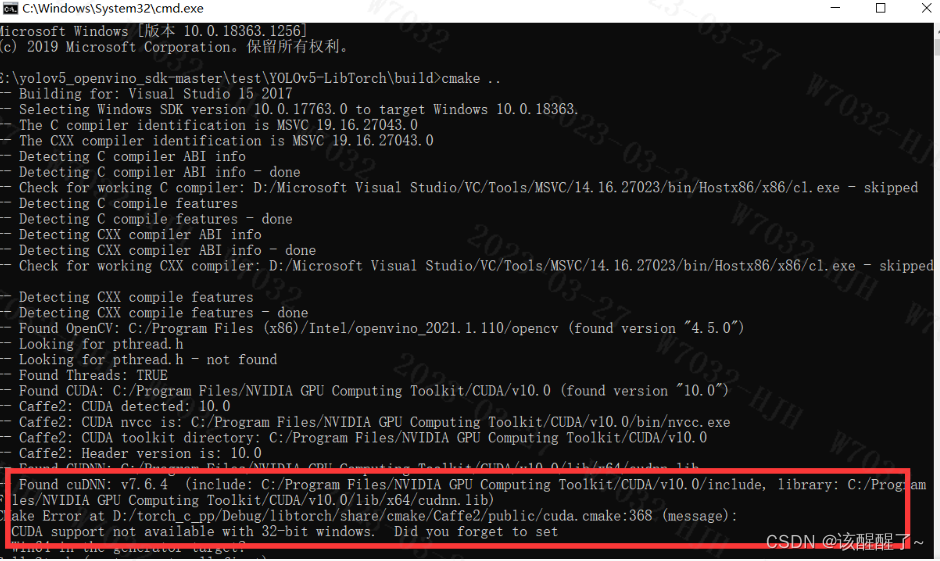

如果报如下错

修改一下cmake命令:

cmake .. -DCMAKE_GENERATOR_PLATFORM=x64成功

可以看到build文件夹下生成了VS项目:

八、运行预测:

打开项目,将YOLOv5LibTorch.cpp修改为:

#include <opencv2/opencv.hpp>

#include <torch/script.h>

#include <torch/torch.h>

#include <algorithm>

#include <iostream>

#include <time.h>

std::vector<torch::Tensor> non_max_suppression(torch::Tensor preds, float score_thresh=0.5, float iou_thresh=0.5)

{

std::vector<torch::Tensor> output;

for (size_t i=0; i < preds.sizes()[0]; ++i)

{

torch::Tensor pred = preds.select(0, i);

// Filter by scores

torch::Tensor scores = pred.select(1, 4) * std::get<0>( torch::max(pred.slice(1, 5, pred.sizes()[1]), 1));

pred = torch::index_select(pred, 0, torch::nonzero(scores > score_thresh).select(1, 0));

if (pred.sizes()[0] == 0) continue;

// (center_x, center_y, w, h) to (left, top, right, bottom)

pred.select(1, 0) = pred.select(1, 0) - pred.select(1, 2) / 2;

pred.select(1, 1) = pred.select(1, 1) - pred.select(1, 3) / 2;

pred.select(1, 2) = pred.select(1, 0) + pred.select(1, 2);

pred.select(1, 3) = pred.select(1, 1) + pred.select(1, 3);

// Computing scores and classes

std::tuple<torch::Tensor, torch::Tensor> max_tuple = torch::max(pred.slice(1, 5, pred.sizes()[1]), 1);

pred.select(1, 4) = pred.select(1, 4) * std::get<0>(max_tuple);

pred.select(1, 5) = std::get<1>(max_tuple);

torch::Tensor dets = pred.slice(1, 0, 6);

torch::Tensor keep = torch::empty({

dets.sizes()[0]});

torch::Tensor areas = (dets.select(1, 3) - dets.select(1, 1)) * (dets.select(1, 2) - dets.select(1, 0));

std::tuple<torch::Tensor, torch::Tensor> indexes_tuple = torch::sort(dets.select(1, 4), 0, 1);

torch::Tensor v = std::get<0>(indexes_tuple);

torch::Tensor indexes = std::get<1>(indexes_tuple);

int count = 0;

while (indexes.sizes()[0] > 0)

{

keep[count] = (indexes[0].item().toInt());

count += 1;

// Computing overlaps

torch::Tensor lefts = torch::empty(indexes.sizes()[0] - 1);

torch::Tensor tops = torch::empty(indexes.sizes()[0] - 1);

torch::Tensor rights = torch::empty(indexes.sizes()[0] - 1);

torch::Tensor bottoms = torch::empty(indexes.sizes()[0] - 1);

torch::Tensor widths = torch::empty(indexes.sizes()[0] - 1);

torch::Tensor heights = torch::empty(indexes.sizes()[0] - 1);

for (size_t i=0; i<indexes.sizes()[0] - 1; ++i)

{

lefts[i] = std::max(dets[indexes[0]][0].item().toFloat(), dets[indexes[i + 1]][0].item().toFloat());

tops[i] = std::max(dets[indexes[0]][1].item().toFloat(), dets[indexes[i + 1]][1].item().toFloat());

rights[i] = std::min(dets[indexes[0]][2].item().toFloat(), dets[indexes[i + 1]][2].item().toFloat());

bottoms[i] = std::min(dets[indexes[0]][3].item().toFloat(), dets[indexes[i + 1]][3].item().toFloat());

widths[i] = std::max(float(0), rights[i].item().toFloat() - lefts[i].item().toFloat());

heights[i] = std::max(float(0), bottoms[i].item().toFloat() - tops[i].item().toFloat());

}

torch::Tensor overlaps = widths * heights;

// FIlter by IOUs

torch::Tensor ious = overlaps / (areas.select(0, indexes[0].item().toInt()) + torch::index_select(areas, 0, indexes.slice(0, 1, indexes.sizes()[0])) - overlaps);

indexes = torch::index_select(indexes, 0, torch::nonzero(ious <= iou_thresh).select(1, 0) + 1);

}

keep = keep.toType(torch::kInt64);

output.push_back(torch::index_select(dets, 0, keep.slice(0, 0, count)));

}

return output;

}

#include <torch/script.h>

#include <iostream>

#include <memory>

int main(int argc, const char* argv[]) {

std::cout << "cuda::is_available():" << torch::cuda::is_available() << std::endl;

torch::DeviceType device_type = at::kCPU; // 定义设备类型

if (torch::cuda::is_available())

device_type = at::kCUDA;

}

int a(int argc, char* argv[])

{

std::cout << "cuda::is_available():" << torch::cuda::is_available() << std::endl;

torch::DeviceType device_type = at::kCPU; // 定义设备类型

if (torch::cuda::is_available())

device_type = at::kCUDA;

// Loading Module

torch::jit::script::Module module = torch::jit::load("../yolov5s.torchscript.pt");

module.to(device_type); // 模型加载至GPU

std::vector<std::string> classnames;

std::ifstream f("../coco.names");

std::string name = "";

while (std::getline(f, name))

{

classnames.push_back(name);

}

if(argc < 2)

{

std::cout << "Please run with test video." << std::endl;

return -1;

}

std::string video = argv[1];

cv:: VideoCapture cap = cv::VideoCapture(video);

// cap.set(cv::CAP_PROP_FRAME_WIDTH, 1920);

// cap.set(cv::CAP_PROP_FRAME_HEIGHT, 1080);

cv::Mat frame, img;

cap.read(frame);

int width = frame.size().width;

int height = frame.size().height;

int count = 0;

while(cap.isOpened())

{

count++;

clock_t start = clock();

cap.read(frame);

if(frame.empty())

{

std::cout << "Read frame failed!" << std::endl;

break;

}

// Preparing input tensor

cv::resize(frame, img, cv::Size(640, 384));

// cv::cvtColor(img, img, cv::COLOR_BGR2RGB);

// torch::Tensor imgTensor = torch::from_blob(img.data, {img.rows, img.cols,3},torch::kByte);

// imgTensor = imgTensor.permute({2,0,1});

// imgTensor = imgTensor.toType(torch::kFloat);

// imgTensor = imgTensor.div(255);

// imgTensor = imgTensor.unsqueeze(0);

// imgTensor = imgTensor.to(device_type);

cv::cvtColor(img, img, cv::COLOR_BGR2RGB); // BGR -> RGB

img.convertTo(img, CV_32FC3, 1.0f / 255.0f); // normalization 1/255

auto imgTensor = torch::from_blob(img.data, {

1, img.rows, img.cols, img.channels() }).to(device_type);

imgTensor = imgTensor.permute({

0, 3, 1, 2 }).contiguous(); // BHWC -> BCHW (Batch, Channel, Height, Width)

std::vector<torch::jit::IValue> inputs;

inputs.emplace_back(imgTensor);

// preds: [?, 15120, 9]

torch::jit::IValue output = module.forward(inputs);

auto preds = output.toTuple()->elements()[0].toTensor();

// torch::Tensor preds = module.forward({ imgTensor }).toTensor();

std::vector<torch::Tensor> dets = non_max_suppression(preds, 0.4, 0.5);

if (dets.size() > 0)

{

// Visualize result

for (size_t i=0; i < dets[0].sizes()[0]; ++ i)

{

float left = dets[0][i][0].item().toFloat() * frame.cols / 640;

float top = dets[0][i][1].item().toFloat() * frame.rows / 384;

float right = dets[0][i][2].item().toFloat() * frame.cols / 640;

float bottom = dets[0][i][3].item().toFloat() * frame.rows / 384;

float score = dets[0][i][4].item().toFloat();

int classID = dets[0][i][5].item().toInt();

cv::rectangle(frame, cv::Rect(left, top, (right - left), (bottom - top)), cv::Scalar(0, 255, 0), 2);

cv::putText(frame,

classnames[classID] + ": " + cv::format("%.2f", score),

cv::Point(left, top),

cv::FONT_HERSHEY_SIMPLEX, (right - left) / 200, cv::Scalar(0, 255, 0), 2);

}

}

// std::cout << "-[INFO] Frame:" << std::to_string(count) << " FPS: " + std::to_string(float(1e7 / (clock() - start))) << std::endl;

std::cout << "-[INFO] Frame:" << std::to_string(count) << std::endl;

// cv::putText(frame, "FPS: " + std::to_string(int(1e7 / (clock() - start))),

// cv::Point(50, 50),

// cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(0, 255, 0), 2);

cv::imshow("", frame);

// cv::imwrite("../images/"+cv::format("%06d", count)+".jpg", frame);

cv::resize(frame, frame, cv::Size(width, height));

if(cv::waitKey(1)== 27) break;

}

cap.release();

return 0;

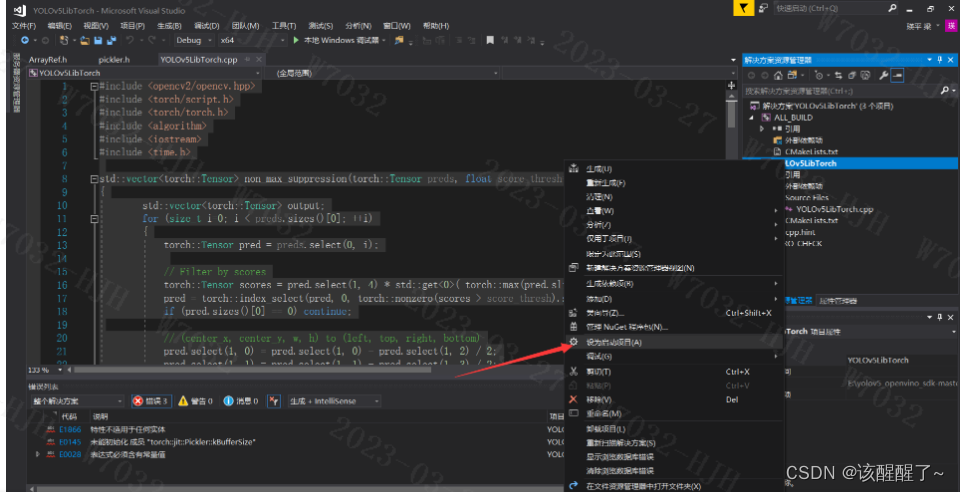

}然后右侧资源管理器,将其设置为启动项目:

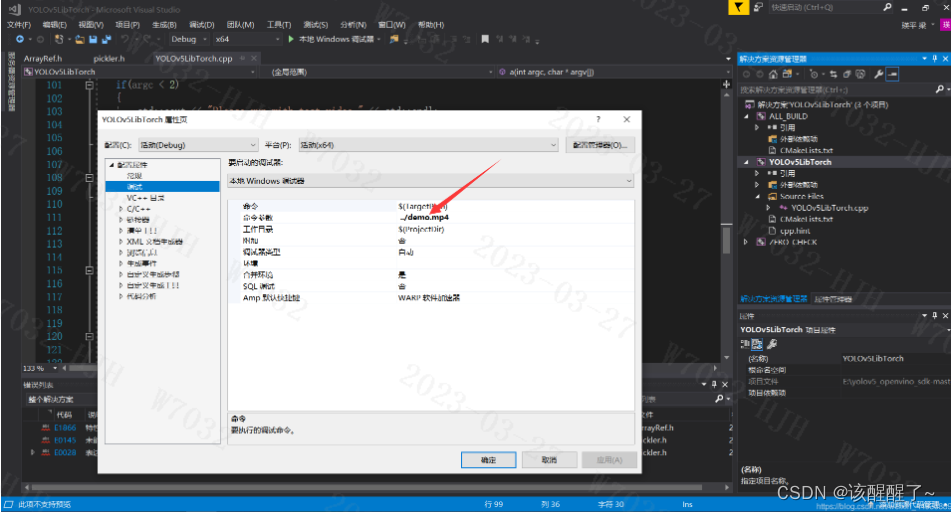

设置项目属性,即命令行第一个参数(测试视频):

运行即可:

九、修改为自己的模型:

注意了 yolov5模型不能直接被调用,需要在转化一下

转到 yolov5 训练的路径,运行 export.py 导出模型:

在--weights-- default=‘ 修改你要转化的模型文件路径‘

下方device default=’cpu‘ 改为 default=’0‘ 如果你不是gpu就改成cpu

修改后后然后运行就可以了

不用担心转化后找不到,因为转化后直接保存到模型文件路径下

然后修改项目中的权重就好

总结一下,本文不代表所有,重点是需要安装cmake 部分可能bug会很多,过了cmake和cmake转化,其他的就很容易,过了皆大欢喜,过不了,我也咩办法!!