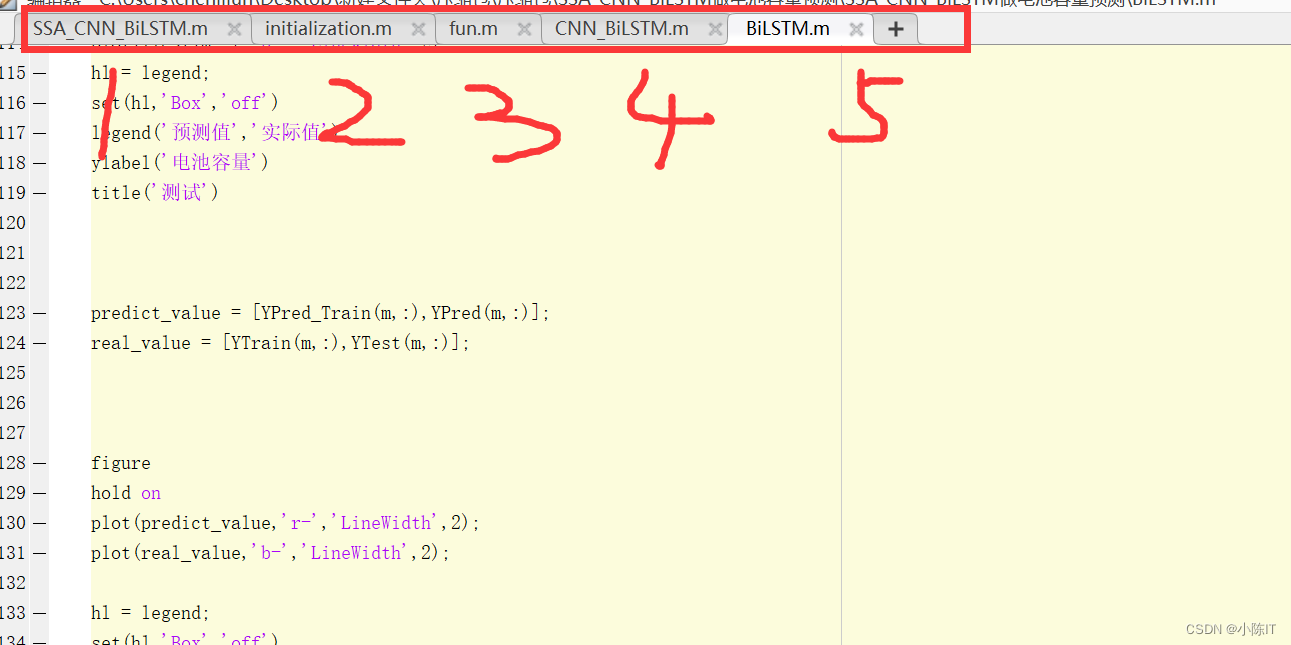

代码

代码按照如下顺序:

1、

clc

close all

clear all

data=xlsread('B05.xlsx',1,'A2:A169');

numTimeStepsTrain = floor(0.7*numel(data));

dataTrain = data(1:numTimeStepsTrain,:);%选择训练集

dataTest = data(numTimeStepsTrain+1:end,:); % 选择测试集

k =9; %滑动窗口设置为9 具体设多少需要衡量

m = 1 ; %预测步长

for i = 1:size(dataTrain)-k-m+1

XTrain(:,i) = dataTrain(i:i+k-1,:);

YTrain(:,i) = dataTrain(i+k:i+k+m-1,:);

end

for i = 1:size(dataTest)-k-m+1

XTest(:,i) = dataTest(i:i+k-1,:);

YTest(:,i) = dataTest(i+k:i+k+m-1,:);

end

x = XTrain;

y = YTrain;

[xnorm,xopt] = mapminmax(x,0,1);

[ynorm,yopt] = mapminmax(y,0,1);

x = x';

% 转换成2-D image

for i = 1:length(ynorm)

Train_xNorm{

i} = reshape(xnorm(:,i),k,1,1);

Train_yNorm(:,i) = ynorm(:,i);

Train_y(i,:) = y(:,i);

end

Train_yNorm= Train_yNorm';

xtest = XTest;

ytest = YTest;

[xtestnorm] = mapminmax('apply', xtest,xopt);

[ytestnorm] = mapminmax('apply',ytest,yopt);

xtest = xtest';

for i = 1:length(ytestnorm)

Test_xNorm{

i} = reshape(xtestnorm(:,i),k,1,1);

Test_yNorm(:,i) = ytestnorm(:,i);

Test_y(i,:) = ytest(:,i);

end

Test_yNorm = Test_yNorm';

%% SSA优化参数设置

SearchAgents = 10; % 种群数量 50

Max_iterations = 30 ; % 迭代次数 10

lowerbound = [1e-10 0.001 10 ];%三个参数的下限

upperbound = [1e-2 0.01 200 ];%三个参数的上限

dimension = 3;%数量,即要优化的LSTM参数个数

%% SSA优化LSTM

% [Best_pos,Best_score,Convergence_curve]=SSA(SearchAgents,Max_iterations,lowerbound,upperbound,dimension,ObjFcn)

%% 参数设置

ST = 0.8; % 预警值

PD = 0.2; % 发现者的比列,剩下的是加入者

PDNumber = SearchAgents * PD; % 发现者数量

SDNumber = SearchAgents - SearchAgents * PD; % 意识到有危险麻雀数量

%% 判断优化参数个数

if(max(size(upperbound)) == 1)

upperbound = upperbound .* ones(1, dimension);

lowerbound = lowerbound .* ones(1, dimension);

end

%% 种群初始化

pop_lsat = initialization(SearchAgents, dimension, upperbound, lowerbound);

pop_new = pop_lsat;

%% 计算初始适应度值

fitness = zeros(1, SearchAgents);

for i = 1 : SearchAgents

fitness(i) = fun(pop_new(i,:),Train_xNorm,Train_yNorm,Test_xNorm,Test_y,yopt,k);

end

%% 得到全局最优适应度值

[fitness, index]= sort(fitness);

GBestF = fitness(1);

%% 得到全局最优种群

for i = 1 : SearchAgents

pop_new(i, :) = pop_lsat(index(i), :);

end

GBestX = pop_new(1, :);

X_new = pop_new;

%% 优化算法

for i = 1: Max_iterations

BestF = fitness(1);

R2 = rand(1);

for j = 1 : PDNumber

if(R2 < ST)

X_new(j, :) = pop_new(j, :) .* exp(-j / (rand(1) * Max_iterations));

else

X_new(j, :) = pop_new(j, :) + randn() * ones(1, dimension);

end

end

for j = PDNumber + 1 : SearchAgents

if(j > (SearchAgents - PDNumber) / 2 + PDNumber)

X_new(j, :) = randn() .* exp((pop_new(end, :) - pop_new(j, :)) / j^2);

else

A = ones(1, dimension);

for a = 1 : dimension

if(rand() > 0.5)

A(a) = -1;

end

end

AA = A' / (A * A');

X_new(j, :) = pop_new(1, :) + abs(pop_new(j, :) - pop_new(1, :)) .* AA';

end

end

Temp = randperm(SearchAgents);

SDchooseIndex = Temp(1 : SDNumber);

for j = 1 : SDNumber

if(fitness(SDchooseIndex(j)) > BestF)

X_new(SDchooseIndex(j), :) = pop_new(1, :) + randn() .* abs(pop_new(SDchooseIndex(j), :) - pop_new(1, :));

elseif(fitness(SDchooseIndex(j)) == BestF)

K = 2 * rand() -1;

X_new(SDchooseIndex(j), :) = pop_new(SDchooseIndex(j), :) + K .* (abs(pop_new(SDchooseIndex(j), :) - ...

pop_new(end, :)) ./ (fitness(SDchooseIndex(j)) - fitness(end) + 10^-8));

end

end

%% 边界控制

for j = 1 : SearchAgents

for a = 1 : dimension

if(X_new(j, a) > upperbound(a))

X_new(j, a) = upperbound(a);

end

if(X_new(j, a) < lowerbound(a))

X_new(j, a) = lowerbound(a);

end

end

end

%% 获取适应度值

for j = 1 : SearchAgents

fitness_new(j) = fun(X_new(j, :),Train_xNorm,Train_yNorm,Test_xNorm,Test_y,yopt,k);

end

%% 获取最优种群

for j = 1 : SearchAgents

if(fitness_new(j) < GBestF)

GBestF = fitness_new(j);

GBestX = X_new(j, :);

end

end

%% 更新种群和适应度值

pop_new = X_new;

fitness = fitness_new;

%% 更新种群

[fitness, index] = sort(fitness);

for j = 1 : SearchAgents

pop_new(j, :) = pop_new(index(j), :);

end

%% 得到优化曲线

curve(i) = GBestF;

avcurve(i) = sum(curve) / length(curve);

end

%% 得到最优值

Best_pos = GBestX;

Best_score = curve(end);

%% 得到最优参数

NumOfUnits =abs(round( Best_pos(1,3))); % 最佳神经元个数

InitialLearnRate = Best_pos(1,2) ;% 最佳初始学习率

L2Regularization = Best_pos(1,1); % 最佳L2正则化系数

%

inputSize = k;

outputSize = 1; %数据输出y的维度

layers = [ ...

sequenceInputLayer([inputSize,1,1],'name','input') %输入层设置

sequenceFoldingLayer('name','fold')

convolution2dLayer([2,1],10,'Stride',[1,1],'name','conv1')

batchNormalizationLayer('name','batchnorm1')

reluLayer('name','relu1')

convolution2dLayer([1,1],10,'Stride',[1,1],'name','conv2')

batchNormalizationLayer('name','batchnorm2')

reluLayer('name','relu2')

maxPooling2dLayer([1,3],'Stride',1,'Padding','same','name','maxpool')

sequenceUnfoldingLayer('name','unfold')

flattenLayer('name','flatten')

bilstmLayer(NumOfUnits ,'Outputmode','sequence','name','hidden1')

dropoutLayer(0.3,'name','dropout_1')

bilstmLayer(NumOfUnits ,'Outputmode','sequence','name','hidden3')

dropoutLayer(0.3,'name','dropout_3')

bilstmLayer(NumOfUnits ,'Outputmode','last','name','hidden2')

dropoutLayer(0.3,'name','drdiopout_2')

fullyConnectedLayer(outputSize,'name','fullconnect') % 全连接层设置(影响输出维度)(cell层出来的输出层) %

tanhLayer('name','softmax')

regressionLayer('name','output')];

lgraph = layerGraph(layers)

lgraph = connectLayers(lgraph,'fold/miniBatchSize','unfold/miniBatchSize');

% 参数设置

% options = trainingOptions('adam', ... % 优化算法Adam

% 'MaxEpochs', 2000, ... % 最大训练次数

% 'GradientThreshold', 1, ... % 梯度阈值

% 'InitialLearnRate', InitialLearnRate, ... % 初始学习率

% 'LearnRateSchedule', 'piecewise', ... % 学习率调整

% 'LearnRateDropPeriod', 850, ... % 训练850次后开始调整学习率

% 'LearnRateDropFactor',0.2, ... % 学习率调整因子

% 'L2Regularization', L2Regularization, ... % 正则化参数

% 'ExecutionEnvironment', 'cpu',... % 训练环境

% 'Verbose', 0, ... % 关闭优化过程

% 'Plots', 'training-progress'); % 画出曲线

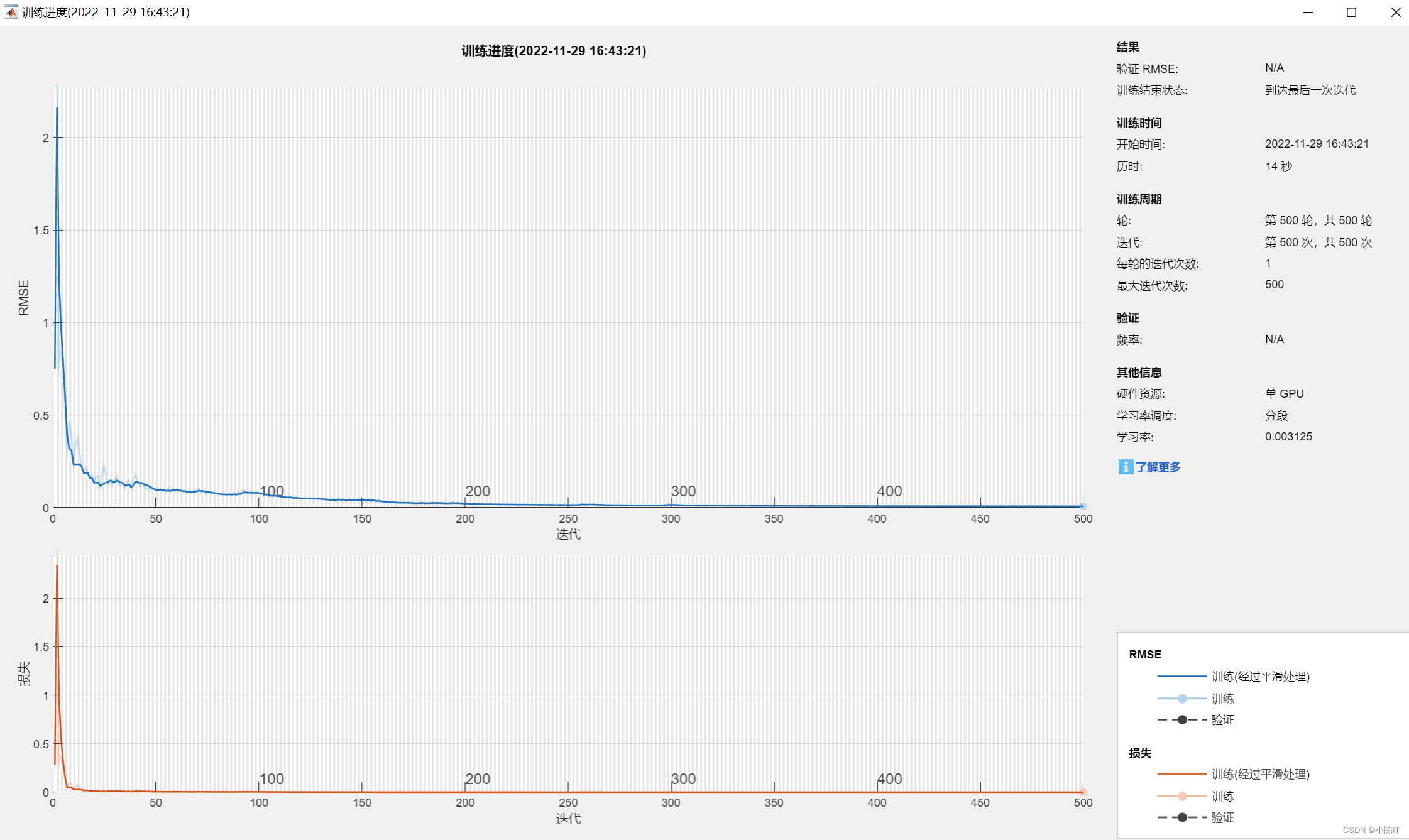

opts = trainingOptions('adam', ...

'MaxEpochs',500, ...

'GradientThreshold',1,...

'ExecutionEnvironment','cpu',...

'InitialLearnRate',InitialLearnRate, ...

'LearnRateSchedule','piecewise', ...

'LearnRateDropPeriod',100, ... %2个epoch后学习率更新

'LearnRateDropFactor',0.5, ...

'Shuffle','once',... % 时间序列长度

'SequenceLength',1,...

'MiniBatchSize',128,...

'Verbose',1,...

'Plots','training-progress');

% 网络训练

tic

net = trainNetwork(Train_xNorm,Train_yNorm,lgraph,opts );

Predict_Ynorm_Train = net.predict(Train_xNorm);

Predict_Y_Train = mapminmax('reverse',Predict_Ynorm_Train',yopt);

Predict_Y_Train = Predict_Y_Train';

figure

hold on

plot(Predict_Y_Train,'r-','LineWidth',2.0)

plot(Train_y,'b-','LineWidth',2.0);

ylabel('容量')

legend('预测值','实际值')

title('训练')

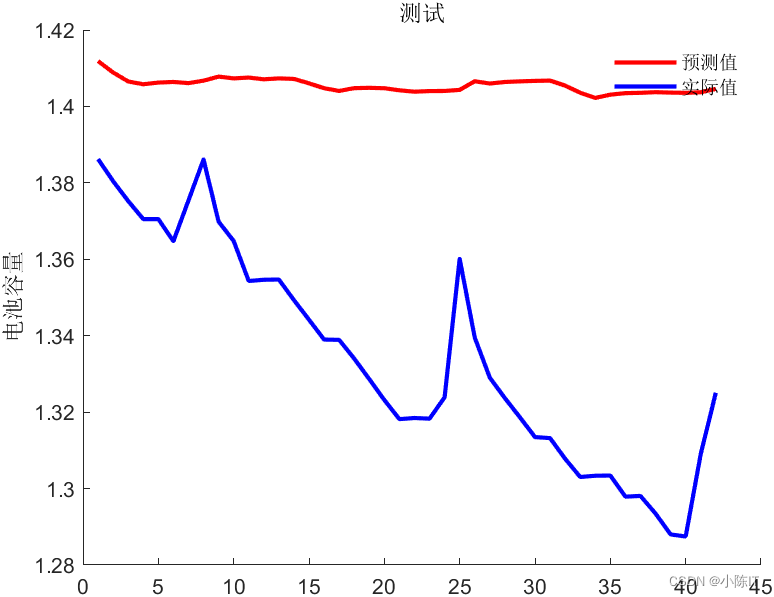

Predict_Ynorm = net.predict(Test_xNorm);

Predict_Y = mapminmax('reverse',Predict_Ynorm',yopt);

Predict_Y = Predict_Y';

figure

hold on

plot(Predict_Y,'r-','LineWidth',2.0)

plot(Test_y,'b-','LineWidth',2.0)

legend('预测值','实际值')

ylabel('容量')

title('测试')

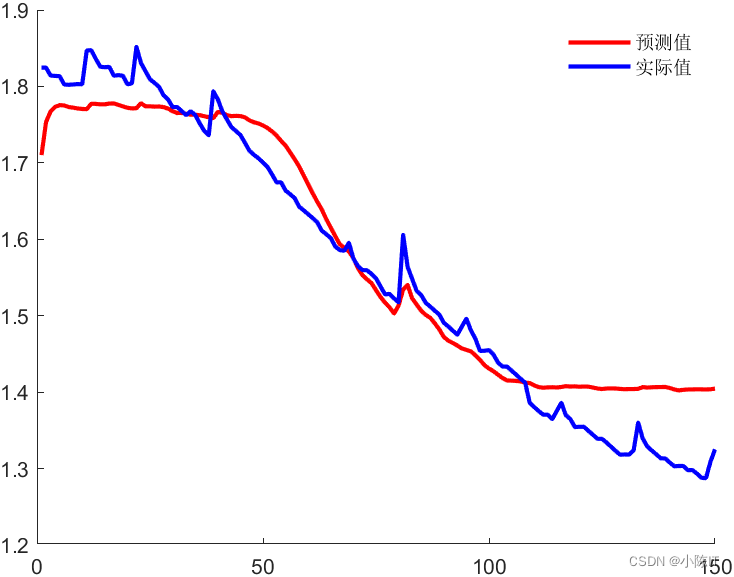

predict_value = [Predict_Y_Train' Predict_Y'];

real_value = [Train_y' Test_y'];

figure

hold on

plot(predict_value,'r-','LineWidth',2.0)

plot(real_value,'b-','LineWidth',2.0)

legend('预测值','实际值')

ylabel('容量')

title('测试')

% 预测结果评价

ae= abs(Predict_Y - Test_y);

rmse = (mean(ae.^2)).^0.5;

mse = mean(ae.^2);

mae = mean(ae);

mape = mean(ae./Predict_Y);

disp('预测结果评价指标:')

disp(['RMSE = ', num2str(rmse)])

disp(['MSE = ', num2str(mse)])

disp(['MAE = ', num2str(mae)])

disp(['MAPE = ', num2str(mape)])

disp(['最佳神经元个数为:', num2str(NumOfUnits)])

disp(['最佳初始学习率为:', num2str(InitialLearnRate)])

disp(['最佳L2正则化系数为:', num2str(L2Regularization)])

2、

function Positions = initialization(SearchAgents_no, dim, ub, lb)

%% 初始化

%% 待优化参数个数

Boundary_no = size(ub, 2);

%% 若待优化参数个数为1

if Boundary_no == 1

Positions = rand(SearchAgents_no, dim) .* (ub - lb) + lb;

end

%% 如果存在多个输入边界个数

if Boundary_no > 1

for i = 1 : dim

ub_i = ub(i);

lb_i = lb(i);

Positions(:, i) = rand(SearchAgents_no, 1) .* (ub_i - lb_i) + lb_i;

end

end

3、

function y = fun(x,Train_xNorm,Train_yNorm,Test_xNorm,Test_y,yopt,k)

%函数用于计算粒子适应度值

%rng default;%固定随机数

numhidden_units1 = fix(x(3))+1; % 隐含层神经元数量 round为四舍五入函数;

numhidden_units2= fix(x(3))+1;

% 层设置,参数设置

inputSize = k;

outputSize = 1; %数据输出y的维度

options = trainingOptions('adam', ...

'MaxEpochs',500, ...

'GradientThreshold',1,...

'ExecutionEnvironment','cpu',...

'InitialLearnRate',x(2), ...

'LearnRateSchedule','piecewise', ...

'LearnRateDropPeriod',100, ... %100个epoch后学习率更新

'LearnRateDropFactor',0.5, ...

'Shuffle','once',... % 时间序列长度

'SequenceLength',1,...

'MiniBatchSize',128,...

'L2Regularization', x(1), ...

'Verbose',1);

%% lstm

layers = [ ...

sequenceInputLayer([inputSize,1,1],'name','input') %输入层设置

sequenceFoldingLayer('name','fold')

convolution2dLayer([2,1],10,'Stride',[1,1],'name','conv1')

batchNormalizationLayer('name','batchnorm1')

reluLayer('name','relu1')

convolution2dLayer([1,1],10,'Stride',[1,1],'name','conv2')

batchNormalizationLayer('name','batchnorm2')

reluLayer('name','relu2')

maxPooling2dLayer([1,3],'Stride',1,'Padding','same','name','maxpool')

sequenceUnfoldingLayer('name','unfold')

flattenLayer('name','flatten')

bilstmLayer(numhidden_units1,'Outputmode','sequence','name','hidden1')

dropoutLayer(0.3,'name','dropout_1')

bilstmLayer(numhidden_units1,'Outputmode','sequence','name','hidden3')

dropoutLayer(0.3,'name','dropout_3')

bilstmLayer(numhidden_units2,'Outputmode','last','name','hidden2')

dropoutLayer(0.3,'name','drdiopout_2')

fullyConnectedLayer(outputSize,'name','fullconnect') % 全连接层设置(影响输出维度)(cell层出来的输出层) %

tanhLayer('name','softmax')

regressionLayer('name','output')];

lgraph = layerGraph(layers)

lgraph = connectLayers(lgraph,'fold/miniBatchSize','unfold/miniBatchSize');

%

% 网络训练

net = trainNetwork(Train_xNorm,Train_yNorm,lgraph,options);

Predict_Ynorm = net.predict(Test_xNorm);

Predict_Y = mapminmax('reverse',Predict_Ynorm',yopt);

Predict_Y = Predict_Y';

% rmse_without_update1 = sqrt(mean((Predict_Y(1,:)-Test_y(1,:))).^2,'ALL')

rmse_without_update1 = sqrt(mean((Predict_Y(1,:)-(Test_y(1,:))).^2,'ALL'))

y = rmse_without_update1 ;% cost为目标函数 ,目标函数为rmse

end

4、

clc

close all

clear all

data=xlsread('B05.xlsx',1,'A2:A169');

numTimeStepsTrain = floor(0.7*numel(data));

dataTrain = data(1:numTimeStepsTrain,:);%选择训练集

dataTest = data(numTimeStepsTrain+1:end,:); % 选择测试集

k =9; %滑动窗口设置为9 具体设多少需要衡量

m = 1 ; %预测步长

for i = 1:size(dataTrain)-k-m+1

XTrain(:,i) = dataTrain(i:i+k-1,:);

YTrain(:,i) = dataTrain(i+k:i+k+m-1,:);

end

for i = 1:size(dataTest)-k-m+1

XTest(:,i) = dataTest(i:i+k-1,:);

YTest(:,i) = dataTest(i+k:i+k+m-1,:);

end

x = XTrain;

y = YTrain;

[xnorm,xopt] = mapminmax(x,0,1);

[ynorm,yopt] = mapminmax(y,0,1);

x = x';

% 转换成2-D image

for i = 1:length(ynorm)

Train_xNorm{

i} = reshape(xnorm(:,i),k,1,1);

Train_yNorm(:,i) = ynorm(:,i);

Train_y(i,:) = y(:,i);

end

Train_yNorm= Train_yNorm';

xtest = XTest;

ytest = YTest;

[xtestnorm] = mapminmax('apply', xtest,xopt);

[ytestnorm] = mapminmax('apply',ytest,yopt);

xtest = xtest';

for i = 1:length(ytestnorm)

Test_xNorm{

i} = reshape(xtestnorm(:,i),k,1,1);

Test_yNorm(:,i) = ytestnorm(:,i);

Test_y(i,:) = ytest(:,i);

end

Test_yNorm = Test_yNorm';

%

inputSize = k;

outputSize = m; %数据输出y的维度

NumOfUnits = 100;

layers = [ ...

sequenceInputLayer([inputSize,1,1],'name','input') %输入层设置

sequenceFoldingLayer('name','fold')

convolution2dLayer([2,1],10,'Stride',[1,1],'name','conv1')

batchNormalizationLayer('name','batchnorm1')

reluLayer('name','relu1')

convolution2dLayer([1,1],10,'Stride',[1,1],'name','conv2')

batchNormalizationLayer('name','batchnorm2')

reluLayer('name','relu2')

maxPooling2dLayer([1,3],'Stride',1,'Padding','same','name','maxpool')

sequenceUnfoldingLayer('name','unfold')

flattenLayer('name','flatten')

bilstmLayer(NumOfUnits ,'Outputmode','sequence','name','hidden1')

dropoutLayer(0.3,'name','dropout_1')

bilstmLayer(NumOfUnits ,'Outputmode','sequence','name','hidden3')

dropoutLayer(0.3,'name','dropout_3')

bilstmLayer(NumOfUnits ,'Outputmode','last','name','hidden2')

dropoutLayer(0.3,'name','drdiopout_2')

fullyConnectedLayer(outputSize,'name','fullconnect') % 全连接层设置(影响输出维度)(cell层出来的输出层) %

tanhLayer('name','softmax')

regressionLayer('name','output')];

lgraph = layerGraph(layers)

lgraph = connectLayers(lgraph,'fold/miniBatchSize','unfold/miniBatchSize');

opts = trainingOptions('adam', ...

'MaxEpochs',500, ...

'GradientThreshold',1,...

'ExecutionEnvironment','cpu',...

'InitialLearnRate',0.001, ...

'LearnRateSchedule','piecewise', ...

'LearnRateDropPeriod',100, ... %2个epoch后学习率更新

'LearnRateDropFactor',0.5, ...

'Shuffle','once',... % 时间序列长度

'SequenceLength',1,...

'MiniBatchSize',28,...

'Verbose',1,...

'Plots','training-progress');

% 网络训练

tic

net = trainNetwork(Train_xNorm,Train_yNorm,lgraph,opts );

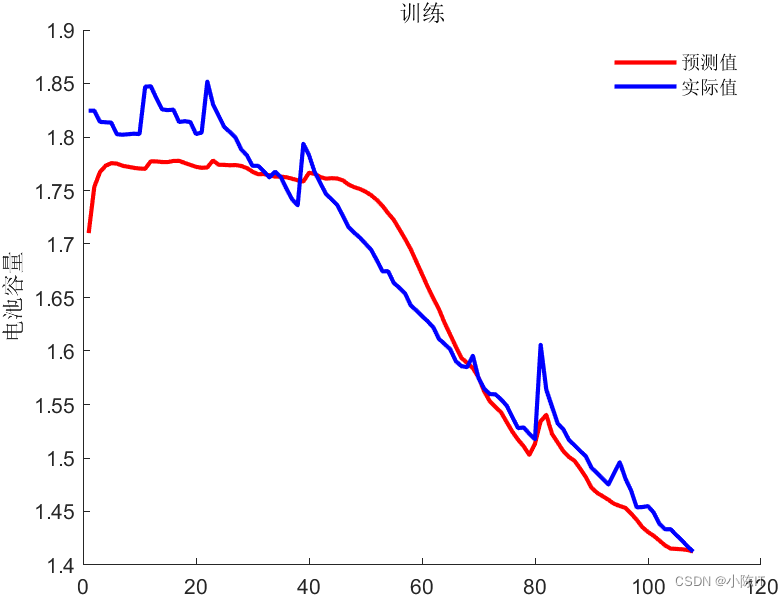

Predict_Ynorm_Train = net.predict(Train_xNorm);

Predict_Y_Train = mapminmax('reverse',Predict_Ynorm_Train',yopt);

Predict_Y_Train = Predict_Y_Train';

figure

hold on

plot(Predict_Y_Train,'r-','LineWidth',2.0)

plot(Train_y,'b-','LineWidth',2.0);

ylabel('容量')

legend('预测值','实际值')

title('训练')

Predict_Ynorm = net.predict(Test_xNorm);

Predict_Y = mapminmax('reverse',Predict_Ynorm',yopt);

Predict_Y = Predict_Y';

figure

hold on

plot(Predict_Y,'r-','LineWidth',2.0)

plot(Test_y,'b-','LineWidth',2.0)

legend('预测值','实际值')

ylabel('容量')

title('测试')

predict_value = [Predict_Y_Train' Predict_Y'];

real_value = [Train_y' Test_y'];

figure

hold on

plot(predict_value,'r-','LineWidth',2.0)

plot(real_value,'b-','LineWidth',2.0)

legend('预测值','实际值')

ylabel('容量')

title('测试')

% 预测结果评价

ae= abs(Predict_Y - Test_y);

rmse = (mean(ae.^2)).^0.5;

mse = mean(ae.^2);

mae = mean(ae);

mape = mean(ae./Predict_Y);

disp('预测结果评价指标:')

disp(['RMSE = ', num2str(rmse)])

disp(['MSE = ', num2str(mse)])

disp(['MAE = ', num2str(mae)])

disp(['MAPE = ', num2str(mape)])

5、

clc

close all

clear all

data=xlsread('B05.xlsx',1,'A2:A169');

numTimeStepsTrain = floor(0.7*numel(data));

dataTrain = data(1:numTimeStepsTrain,:);%选择训练集

dataTest = data(numTimeStepsTrain+1:end,:); % 选择测试集

%标准化

mu = mean(dataTrain,'ALL');

sig = std(dataTrain,0,'ALL');

dataTrainStandardized = (dataTrain - mu) / sig;

k =9; %滑动窗口设置为9 具体设多少需要衡量

m = 1 ; %预测步长

for i = 1:size(dataTrainStandardized)-k-m+1

XTrain(:,i) = dataTrainStandardized(i:i+k-1,:);

YTrain(:,i) = dataTrainStandardized(i+k:i+k+m-1,:);

end

% XTrain = XTrain';

% YTrain = YTrain';

dataTestStandardized = (dataTest - mu) / sig;

for i = 1:size(dataTestStandardized)-k-m+1

XTest(:,i) = dataTestStandardized(i:i+k-1,:);

YTest(:,i) = dataTestStandardized(i+k:i+k+m-1,:);

end

% XTest = XTest';

% YTest = YTest';

%% define the Deeper LSTM networks

%创建LSTM回归网络,指定LSTM层的隐含单元个数125

%序列预测,因此,输入一维,输出一维

numFeatures= k;%输入特征的维度

numResponses = m;%响应特征的维度

numHiddenUnits = 30;%隐藏单元个数

layers = [sequenceInputLayer(numFeatures)

bilstmLayer(numHiddenUnits)

fullyConnectedLayer(numResponses)

regressionLayer];

MaxEpochs=500;%最大迭代次数

InitialLearnRate=0.05;%初始学习率

%% back up to LSTM model

options = trainingOptions('adam', ...

'MaxEpochs',MaxEpochs,...%30用于训练的最大轮次数,迭代是梯度下降算法中使用小批处理来最小化损失函数的一个步骤。一个轮次是训练算法在整个训练集上的一次全部遍历。

'MiniBatchSize',30, ... %128小批量大小,用于每个训练迭代的小批处理的大小,小批处理是用于评估损失函数梯度和更新权重的训练集的子集。

'GradientThreshold',1, ...%梯度下降阈值

'InitialLearnRate',InitialLearnRate, ...%全局学习率。默认率是0.01,但是如果网络训练不收敛,你可能希望选择一个更小的值。默认情况下,trainNetwork在整个训练过程中都会使用这个值,除非选择在每个特定的时间段内通过乘以一个因子来更改这个值。而不是使用一个小的固定学习速率在整个训练, 在训练开始时选择较大的学习率,并在优化过程中逐步降低学习率,可以帮助缩短训练时间,同时随着训练的进行,可以使更小的步骤达到最优值,从而在训练结束时进行更精细的搜索。

'LearnRateSchedule','piecewise', ...%在训练期间降低学习率的选项,默认学习率不变。'piecewise’表示在学习过程中降低学习率

'LearnRateDropPeriod',100, ...% 10降低学习率的轮次数量,每次通过指定数量的epoch时,将全局学习率与学习率降低因子相乘

'LearnRateDropFactor',0.5, ...%学习下降因子

'Verbose',1, ...%1指示符,用于在命令窗口中显示训练进度信息,显示的信息包括轮次数、迭代数、时间、小批量的损失、小批量的准确性和基本学习率。训练一个回归网络时,显示的是均方根误差(RMSE),而不是精度。如果在训练期间验证网络。

'Plots','training-progress');

%% Train LSTM Network

net = trainNetwork(XTrain,YTrain,layers,options);

net = predictAndUpdateState(net,XTrain);

numTimeStepsTrain = numel(XTrain(1,:));

for i = 1:numTimeStepsTrain

[net,YPred_Train(:,i)] = predictAndUpdateState(net,XTrain(:,i),'ExecutionEnvironment','cpu');

end

YPred_Train = sig*YPred_Train + mu;

YTrain = sig*YTrain + mu;

numTimeStepsTest = numel(XTest(1,:));

for i = 1:numTimeStepsTest

[net,YPred(:,i)] = predictAndUpdateState(net,XTest(:,i),'ExecutionEnvironment','cpu');

end

YPred = sig*YPred + mu;

YTest = sig*YTest + mu;

% % 评价

ae= abs(YPred(m,:) - YTest(m,:));

rmse = (mean(ae.^2)).^0.5;

mse = mean(ae.^2);

mae = mean(ae);

mape = mean(ae./YTest(m,:));

disp('预测结果评价指标:')

disp(['RMSE = ', num2str(rmse)])

disp(['MSE = ', num2str(mse)])

disp(['MAE = ', num2str(mae)])

disp(['MAPE = ', num2str(mape)])

figure(1)

hold on

plot(YPred_Train(m,:),'r-','LineWidth',2);

plot(YTrain(m,:),'b-','LineWidth',2);

hl = legend;

set(hl,'Box','off')

legend('预测值','实际值')

ylabel('电池容量')

title('训练')

figure(2)

hold on

plot(YPred(m,:),'r-','LineWidth',2);

plot(YTest(m,:),'b-','LineWidth',2);

hl = legend;

set(hl,'Box','off')

legend('预测值','实际值')

ylabel('电池容量')

title('测试')

predict_value = [YPred_Train(m,:),YPred(m,:)];

real_value = [YTrain(m,:),YTest(m,:)];

figure

hold on

plot(predict_value,'r-','LineWidth',2);

plot(real_value,'b-','LineWidth',2);

hl = legend;

set(hl,'Box','off')

legend('预测值','实际值')

%

%

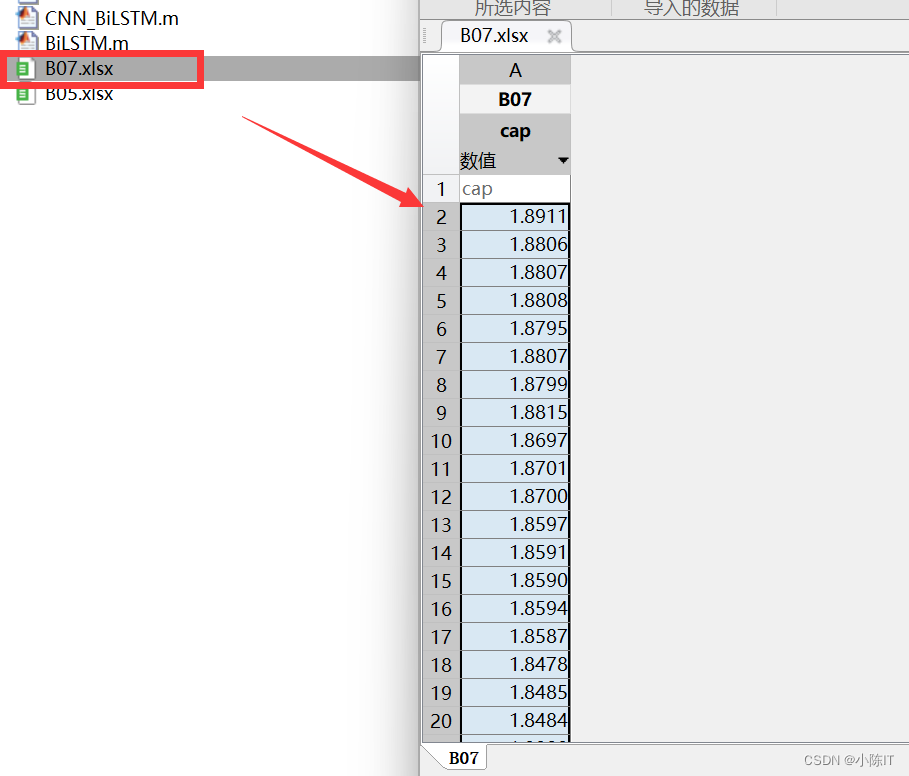

数据

两个数据集都类似

结果