Multivariate Time Series | CNN-BiLSTM-Attention Multivariate Time Series Forecasting in MATLAB

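Prediction results

Overview

This post implements a CNN-BiLSTM-Attention model in MATLAB for multivariate time series forecasting. The model combines a CNN, a bidirectional LSTM (BiLSTM), and an attention mechanism.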

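Model description

MATLAB implementation of CNN-BiLSTM-Attention multivariate time series forecasting:

1. data is the dataset, in Excel format, with 4 input features and 1 output feature. The forecast is multivariate and takes the influence of historical feature values into account;

2. CNN_BiLSTM_AttentionNTS.m is the main program file; run it directly;

3. The command window prints R2, MAE, MAPE, MSE, and MBE. The data and program can be obtained from the download area;

Note: keep the program and the data in the same folder. The runtime environment is MATLAB 2020b or later.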

4. Attention mechanism module:

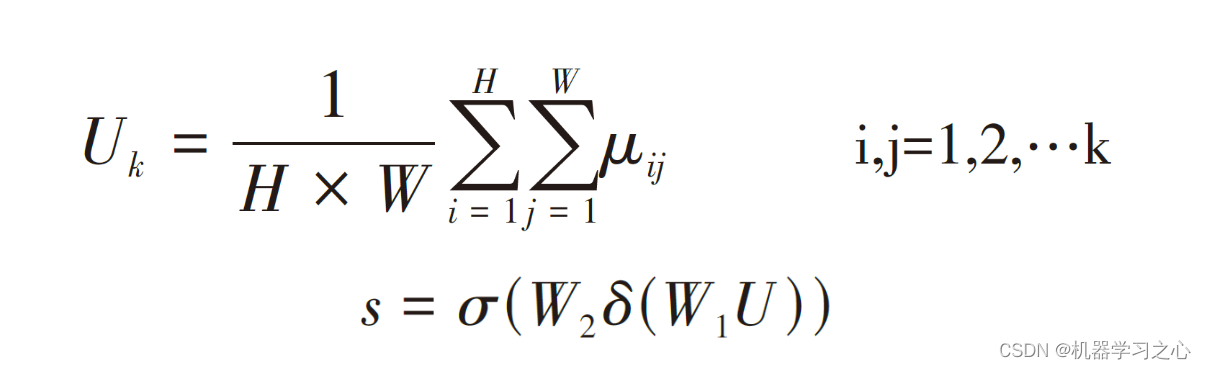

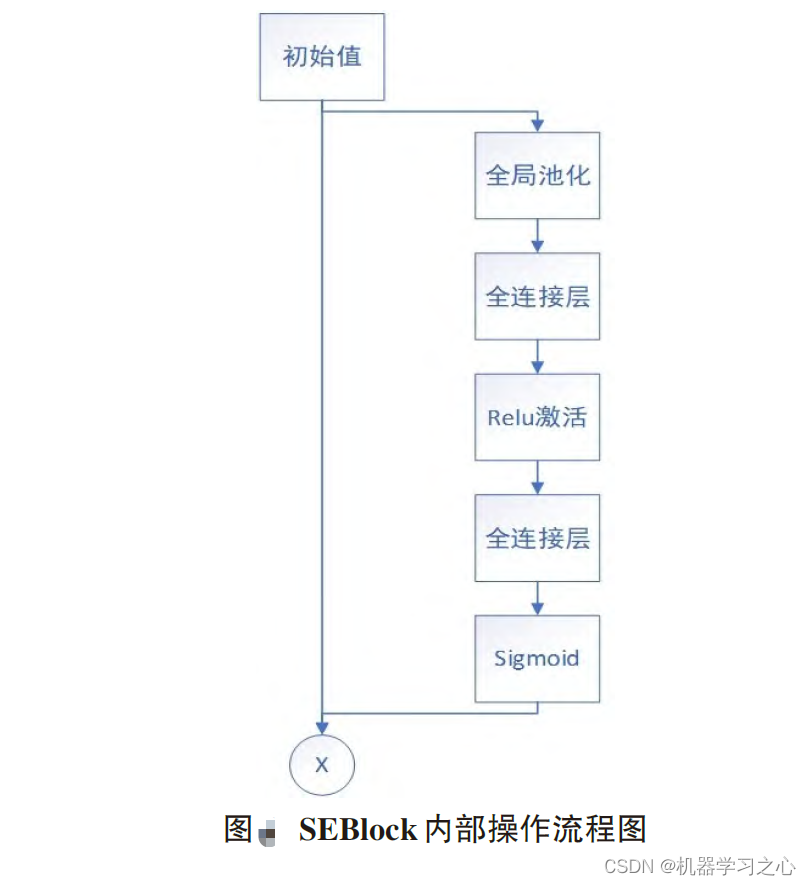

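The SE block (Squeeze-and-Excitation block) is a structural unit that focuses on the channel dimension and adds a channel attention mechanism to the model. It learns a weight for the importance of each feature channel and uses these weights to enhance or suppress channels depending on the task, so that useful features are emphasized. The internal workflow, shown in the figure above, has three steps.

First, the Squeeze operation compresses the features along the spatial dimensions while keeping the number of channels unchanged. It aggregates global information through global pooling, turning each two-dimensional feature channel into a single real number: for channel k, the sum of all feature values in that channel divided by the spatial size H×W.

Second, the Excitation operation consists of two fully connected layers and a sigmoid function. Its output s is obtained by applying the first fully connected layer (parameters W1), the ReLU activation δ, the second fully connected layer (parameters W2), and finally the sigmoid activation σ. The first layer reduces the channel dimension and the second restores it.

Finally, the Reweight operation multiplies the input features by these weights channel by channel, redistributing the original features across the channels.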
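
The formulas referred to in the description are not reproduced in the text. The following is a reconstruction based on the standard SE formulation, using the symbols defined above. The names $u_k$ (feature map of channel $k$), $z_k$, $\tilde{x}_k$, the channel count $C$, and the reduction ratio $r$ are notation assumed here, not taken from the original post.

$$
z_k = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_k(i, j)
$$

$$
s = \sigma\big(W_2\, \delta(W_1 z)\big), \qquad W_1 \in \mathbb{R}^{\frac{C}{r} \times C}, \quad W_2 \in \mathbb{R}^{C \times \frac{C}{r}}
$$

$$
\tilde{x}_k = s_k \cdot u_k
$$

The same three steps can be sketched numerically in MATLAB. This is only an illustration with random weights, made-up sizes, and no bias terms; it is not the attention layer used in the author's program.

```matlab
rng(0);                          % reproducible random numbers
H = 4; W = 5; C = 8; r = 4;      % hypothetical spatial size, channels, reduction ratio
U  = rand(H, W, C);              % input feature map, one H-by-W slice per channel
W1 = randn(C/r, C);              % first fully connected layer: C -> C/r
W2 = randn(C, C/r);              % second fully connected layer: C/r -> C

% Squeeze: global average pooling over the spatial dimensions, C-by-1 result
z = squeeze(mean(U, [1 2]));

% Excitation: FC -> ReLU -> FC -> sigmoid, one weight per channel
relu    = @(x) max(x, 0);
sigmoid = @(x) 1 ./ (1 + exp(-x));
s = sigmoid(W2 * relu(W1 * z));

% Reweight: scale every channel of U by its weight
Xtilde = U .* reshape(s, 1, 1, C);

disp(size(Xtilde));              % same size as the input feature map
```

Because `s` comes out of a sigmoid, each channel is scaled by a factor between 0 and 1, which is what lets the block damp uninformative channels relative to the others.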

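Program design

- How to get the complete program and data, option 1: exchange for a program of equal value;

- How to get the complete program and data, option 2: send the blogger a private message.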
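
The model description states that the data has 4 input features and 1 output feature and that historical values are taken into account. One common way to prepare such data is a sliding window over past time steps. The sketch below assumes a history length `lag` and uses random numbers in place of the Excel data. The variable names and the exact windowing scheme are illustrative assumptions, since the full program is not shown in this post.

```matlab
rng(0);
numSteps  = 100;                         % hypothetical number of time steps
numInputs = 4;                           % 4 input features, as in the model description
% Synthetic stand-in for the Excel data: columns 1-4 are inputs, column 5 is the output.
% In practice the matrix would be read from the spreadsheet, e.g. with readmatrix.
data = rand(numSteps, numInputs + 1);

lag = 6;                                 % assumed history length (number of past steps)
numSamples = numSteps - lag;

X = cell(numSamples, 1);                 % one numInputs-by-lag sequence per sample
Y = zeros(numSamples, 1);                % one scalar target per sample
for i = 1:numSamples
    X{i} = data(i:i+lag-1, 1:numInputs)';   % past `lag` steps of the input features
    Y(i) = data(i+lag, end);                % output value at the following step
end

fprintf('%d samples, each of size %d x %d\n', numSamples, size(X{1}, 1), size(X{1}, 2));
```

A cell array of features-by-time matrices with a numeric response vector is one of the formats that `trainNetwork` accepts for sequence-to-one regression.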

% CNN-BiLSTM layer stack: fold the image sequence, apply 2-D convolutions to
% each time step, unfold, then feed the flattened features to a BiLSTM.
% inputSize, numHiddenUnits1 and numClasses must be defined beforehand.
% Note: this excerpt ends in a softmax/classification head; a regression task
% would end in a fully connected layer followed by regressionLayer instead.
layers = [
    % input matrix of spectrogram values
    sequenceInputLayer(inputSize,"Name","sequence")
    sequenceFoldingLayer("Name","fold")

    % convolutional layers
    convolution2dLayer([5 5],10,"Name","conv1","Stride",[2 1])
    reluLayer("Name","relu1")
    maxPooling2dLayer([5 5],"Name","maxpool1","Padding","same","Stride",[2 1])
    convolution2dLayer([5 5],10,"Name","conv2","Stride",[2 1])
    reluLayer("Name","relu2")
    maxPooling2dLayer([5 5],"Name","maxpool2","Padding","same","Stride",[2 1])
    convolution2dLayer([3 3],1,"Name","conv3","Padding",[1 1 1 1])
    reluLayer("Name","relu3")
    maxPooling2dLayer([2 2],"Name","maxpool3","Padding","same","Stride",[2 1])

    % unfold and feed into the BiLSTM
    sequenceUnfoldingLayer("Name","unfold")
    flattenLayer("Name","flatten")
    bilstmLayer(numHiddenUnits1,"Name","bilstm","OutputMode","last")
    dropoutLayer(0.4,"Name","dropout")
    fullyConnectedLayer(numClasses,"Name","fc")
    softmaxLayer("Name","softmax")
    classificationLayer("Name","classoutput")
];

% The unfolding layer needs the mini-batch size reported by the folding layer
lgraph = layerGraph(layers);
lgraph = connectLayers(lgraph,'fold/miniBatchSize','unfold/miniBatchSize');

% Training
maxEpochs = 200;
learningRate = 0.001;
miniBatchSize = 15;   % passed to trainingOptions below

% xVal, yVal, xTrain and yTrain must be prepared beforehand
options = trainingOptions('sgdm', ...
    'ExecutionEnvironment','gpu', ...      % requires a supported GPU
    'GradientThreshold',1, ...
    'MaxEpochs',maxEpochs, ...
    'MiniBatchSize',miniBatchSize, ...
    'SequenceLength','longest', ...
    'Verbose',0, ...
    'ValidationData',{xVal, yVal}, ...
    'ValidationFrequency',30, ...
    'InitialLearnRate',learningRate, ...
    'Plots','training-progress', ...
    'Shuffle','every-epoch');

net = trainNetwork(xTrain, yTrain, lgraph, options);
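
The listing above ends in a softmax and a classification layer, whereas the task described in this post is forecasting a single continuous output. A minimal sketch of how the tail of such a network could look for regression is shown below. The hidden unit count and the layer names are placeholders, and this is an assumption about a suitable head, not the author's actual program.

```matlab
numHiddenUnits = 64;      % hypothetical BiLSTM size
numResponses   = 1;       % one output feature, as in the model description

% Tail of the network: BiLSTM summary of the sequence, then a scalar prediction
regressionTail = [
    bilstmLayer(numHiddenUnits,"Name","bilstm","OutputMode","last")
    dropoutLayer(0.4,"Name","dropout")
    fullyConnectedLayer(numResponses,"Name","fc")
    regressionLayer("Name","regressionoutput")];

disp(class(regressionTail(end)));   % shows the class of the output layer
```

With a regression output layer, the responses passed to `trainNetwork` are numeric values rather than categorical labels, and the training loss is the half mean squared error.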

% 1-D CNN + LSTM classifier for sequences with 12 features and 9 classes.
% convolution1dLayer and the 1-D pooling layers require R2021b or later.
layers = [
    sequenceInputLayer(12,'Normalization','none','MinLength',9)
    convolution1dLayer(3,16)
    batchNormalizationLayer()
    reluLayer()
    maxPooling1dLayer(2)
    convolution1dLayer(5,32)
    batchNormalizationLayer()
    reluLayer()
    averagePooling1dLayer(2)
    lstmLayer(100,'OutputMode','last')
    fullyConnectedLayer(9)
    softmaxLayer()
    classificationLayer()];

options = trainingOptions('adam', ...
    'MaxEpochs',10, ...
    'MiniBatchSize',27, ...
    'SequenceLength','longest');

% Train network (XTrain and YTrain must be prepared beforehand)
net = trainNetwork(XTrain,YTrain,layers,options);
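
The model description lists R2, MAE, MAPE, MSE, and MBE as the metrics printed to the command window. A minimal sketch of how these are commonly computed is given below, using made-up target and prediction vectors. The definitions in the author's program may differ, for example in the sign convention of MBE or in expressing MAPE as a percentage.

```matlab
yTrue = [2 4 6 8];            % hypothetical measured values
yPred = [2.5 3.5 6.5 7.5];    % hypothetical model predictions

err  = yPred - yTrue;                                   % prediction error
R2   = 1 - sum(err.^2) / sum((yTrue - mean(yTrue)).^2); % coefficient of determination
MAE  = mean(abs(err));                                  % mean absolute error
MAPE = mean(abs(err ./ yTrue));                         % mean absolute percentage error (as a fraction)
MSE  = mean(err.^2);                                    % mean squared error
MBE  = mean(err);                                       % mean bias error (positive = over-prediction)

fprintf('R2 = %.4f, MAE = %.4f, MAPE = %.4f, MSE = %.4f, MBE = %.4f\n', ...
    R2, MAE, MAPE, MSE, MBE);
```

MAPE divides by the true values, so it is undefined when any target is zero. MBE can be near zero even for a poor model, because positive and negative errors cancel, which is why it is usually read together with MAE or MSE.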

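---

Copyright notice: this is an original article by CSDN blogger 「机器学习之心」, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.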
