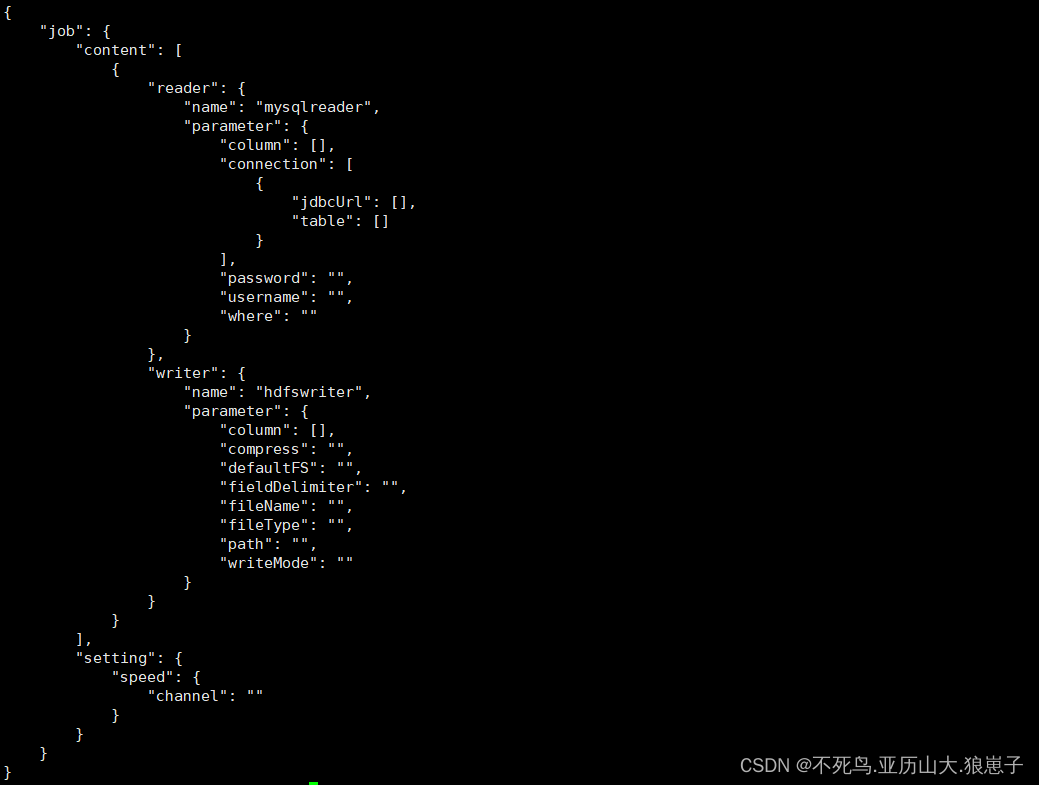

1 View the official template

python /opt/module/datax/bin/datax.py -r mysqlreader -w hdfswriter

mysqlreader parameter reference:

hdfswriter parameter reference:

2 Prepare the data

Create the handsome table

mysql> create database datax;

mysql> use datax;

mysql> create table handsome(id int,name varchar(20));

Insert data

mysql> insert into handsome values(1001,'zhangsan'),(1002,'lisi'),(1003,'wangwu');

3 Write the configuration file

vim /opt/module/datax/job/mysql2hdfs.json

The content is as follows:

{
    "job": {
        "content": [{
            "reader": {
                "name": "mysqlreader",
                "parameter": {
                    "column": [
                        "id",
                        "name"
                    ],
                    "connection": [{
                        "jdbcUrl": [
                            "jdbc:mysql://192.168.222.132:3306/datax"
                        ],
                        "table": [
                            "handsome"
                        ]
                    }],
                    "username": "root",
                    "password": "123456"
                }
            },
            "writer": {
                "name": "hdfswriter",
                "parameter": {
                    "column": [{
                            "name": "id",
                            "type": "int"
                        },
                        {
                            "name": "name",
                            "type": "string"
                        }
                    ],
                    "defaultFS": "hdfs://192.168.222.138:9000",
                    "fieldDelimiter": "\t",
                    "fileName": "handsome.txt",
                    "fileType": "text",
                    "path": "/",
                    "writeMode": "append"
                }
            }
        }],
        "setting": {
            "speed": {
                "channel": "1"
            }
        }
    }
}

4 Run the job
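
Before launching, it can help to sanity-check the job file from step 3. The following is a minimal sketch, not part of DataX: `check_job` and `WRITER_KEYS` are hypothetical names, and the rules it applies (every hdfswriter key used in this walkthrough must be present, and reader and writer must list the same number of columns) are assumptions drawn from the configuration above rather than an authoritative validation.

```python
import json

# Keys the hdfswriter block in this walkthrough sets. Treating every one of
# them as required is an assumption of this sketch.
WRITER_KEYS = ("column", "defaultFS", "fieldDelimiter", "fileName",
               "fileType", "path", "writeMode")


def check_job(job_text):
    """Return a list of human-readable problems found in a DataX job JSON string."""
    job = json.loads(job_text)  # raises json.JSONDecodeError on malformed JSON
    problems = []
    for i, item in enumerate(job["job"]["content"]):
        reader = item["reader"]["parameter"]
        writer = item["writer"]["parameter"]
        # Report writer keys that are absent.
        missing = [k for k in WRITER_KEYS if k not in writer]
        if missing:
            problems.append(f"content[{i}]: writer lacks {', '.join(missing)}")
        # Compare the number of columns read with the number written.
        n_read = len(reader.get("column", []))
        n_write = len(writer.get("column", []))
        if n_read != n_write:
            problems.append(
                f"content[{i}]: reader has {n_read} columns, writer has {n_write}")
    return problems


# A trimmed-down copy of the job from step 3, used only to exercise the check.
sample = {
    "job": {
        "content": [{
            "reader": {"name": "mysqlreader",
                       "parameter": {"column": ["id", "name"]}},
            "writer": {"name": "hdfswriter",
                       "parameter": {
                           "column": [{"name": "id", "type": "int"},
                                      {"name": "name", "type": "string"}],
                           "defaultFS": "hdfs://192.168.222.138:9000",
                           "fieldDelimiter": "\t",
                           "fileName": "handsome.txt",
                           "fileType": "text",
                           "path": "/",
                           "writeMode": "append"}},
        }],
        "setting": {"speed": {"channel": "1"}},
    }
}
print(check_job(json.dumps(sample)))  # an empty list means no problems were found
```

To check the real file, read /opt/module/datax/job/mysql2hdfs.json and pass its text to `check_job` instead of the sample.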

bin/datax.py job/mysql2hdfs.json

The result is as follows:

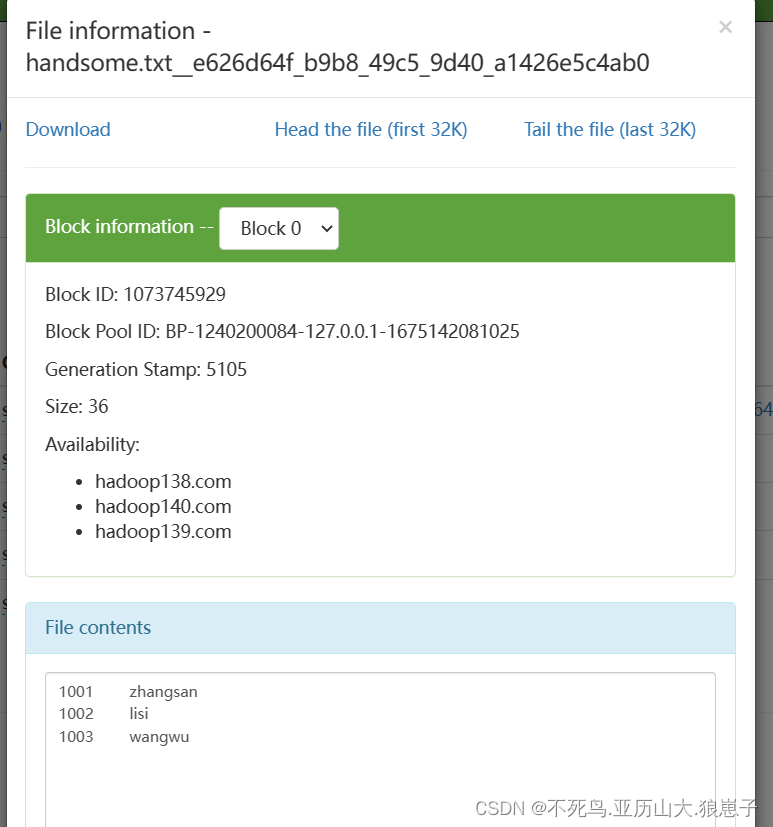

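5 Check HDFS

Note: at run time, HdfsWriter appends a random suffix to the configured file name, and the result is the actual name of the file written by each thread.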
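
Because of the suffix, looking for a file named exactly handsome.txt will find nothing; matching on the prefix is more reliable. A small sketch under stated assumptions: `job_outputs` is a hypothetical helper, and the names in `listing` are made up for illustration (the real suffix format may differ). In practice the listing would come from something like `hdfs dfs -ls /`.

```python
def job_outputs(file_name, listing):
    """Pick the entries of a directory listing that start with the configured fileName."""
    return [name for name in listing
            if name.startswith(file_name) and len(name) > len(file_name)]


# Hypothetical file names; only the "fileName + suffix" shape is taken from the note above.
listing = ["handsome.txt__0a1b2c", "handsome.txt__9f8e7d", "other.txt__123"]
print(job_outputs("handsome.txt", listing))
```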

6 HA support

"hadoopConfig": {
    "dfs.nameservices": "ns",
    "dfs.ha.namenodes.ns": "nn1,nn2",
    "dfs.namenode.rpc-address.ns.nn1": "hostname:port",
    "dfs.namenode.rpc-address.ns.nn2": "hostname:port",
    "dfs.client.failover.proxy.provider.ns": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
}
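
Every key in this fragment repeats the nameservice name and the NameNode IDs, so it is easy to mistype by hand. The sketch below generates the same structure from those inputs. `ha_hadoop_config` is a hypothetical helper, and the host names and port in the example call are placeholders, not values from this setup. As far as I know, the fragment belongs inside the hdfswriter "parameter" block, with "defaultFS" pointing at the nameservice (for example hdfs://ns) rather than at a single NameNode; treat both points as assumptions to verify against your cluster.

```python
import json

PROVIDER = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"


def ha_hadoop_config(nameservice, namenodes):
    """Build the hadoopConfig dict for one nameservice.

    namenodes maps a NameNode ID (e.g. "nn1") to its "hostname:port" RPC address.
    """
    config = {
        "dfs.nameservices": nameservice,
        f"dfs.ha.namenodes.{nameservice}": ",".join(namenodes),
    }
    for nn_id, address in namenodes.items():
        config[f"dfs.namenode.rpc-address.{nameservice}.{nn_id}"] = address
    config[f"dfs.client.failover.proxy.provider.{nameservice}"] = PROVIDER
    return config


# Placeholder addresses; substitute the real NameNode hosts and RPC ports.
cfg = ha_hadoop_config("ns", {"nn1": "namenode1.example.com:8020",
                              "nn2": "namenode2.example.com:8020"})
print(json.dumps({"hadoopConfig": cfg}, indent=4))
```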