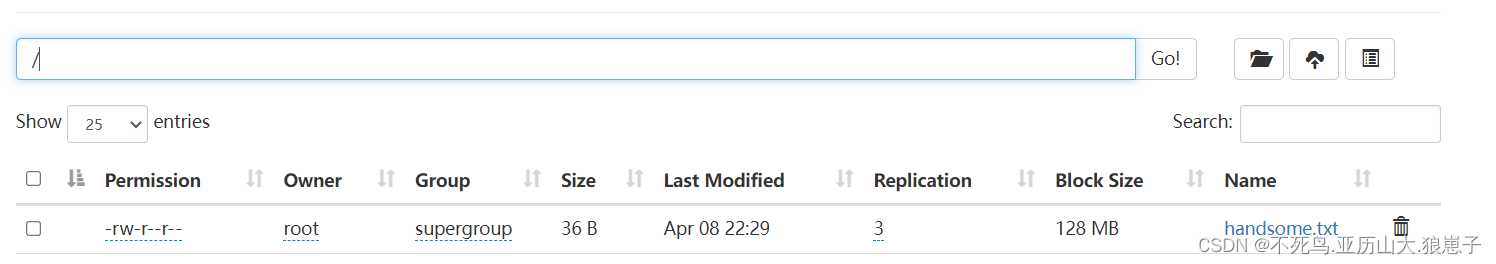

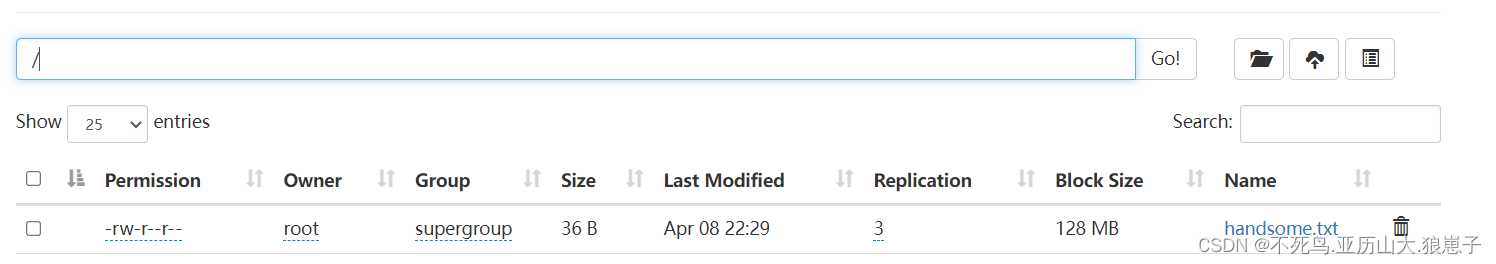

1 Rename the file uploaded in the previous example

hadoop fs -mv /handsome.txt* /handsome.txt

2 View the official template

python /opt/module/datax/bin/datax.py -r hdfsreader -w mysqlwriter

3 Create the configuration file

{
    "job": {
        "content": [{
            "reader": {
                "name": "hdfsreader",
                "parameter": {
                    "column": ["*"],
                    "defaultFS": "hdfs://hadoop138.com:9000",
                    "encoding": "UTF-8",
                    "fieldDelimiter": "\t",
                    "fileType": "text",
                    "path": "/handsome.txt"
                }
            },
            "writer": {
                "name": "mysqlwriter",
                "parameter": {
                    "column": [
                        "id",
                        "name"
                    ],
                    "connection": [{
                        "jdbcUrl": "jdbc:mysql://192.168.222.132:3306/datax",
                        "table": ["handsome2"]
                    }],
                    "password": "123456",
                    "username": "root",
                    "writeMode": "insert"
                }
            }
        }],
        "setting": {
            "speed": {
                "channel": "1"
            }
        }
    }
}

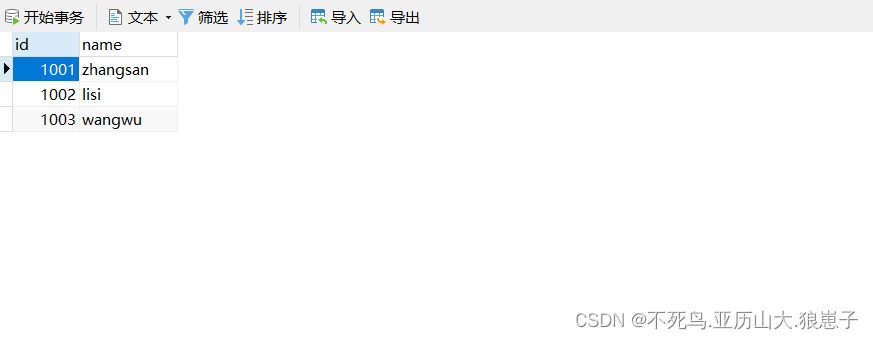
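Before running the job, it can help to confirm that the configuration file is valid JSON and has the basic fields filled in. The following is a minimal sketch, not part of DataX: `check_job` is a hypothetical helper, and the checks it performs (plugin names, writer columns, `jdbcUrl`, `table`) are only the fields used in the configuration above, not the full set DataX validates.

```python
import json
import sys


def check_job(job):
    """Return a list of problems found in a DataX-style job dict.

    Hypothetical helper for illustration; it only checks the fields
    used in this example, not everything DataX itself requires.
    """
    problems = []
    content = job.get("job", {}).get("content")
    if not content:
        return ["job.content is missing or empty"]

    for i, item in enumerate(content):
        # Both plugins must be named, e.g. hdfsreader / mysqlwriter.
        for role in ("reader", "writer"):
            if not item.get(role, {}).get("name"):
                problems.append(f"content[{i}].{role}: missing name")

        params = item.get("writer", {}).get("parameter", {})
        if not params.get("column"):
            problems.append(f"content[{i}].writer: column list is empty")

        connections = params.get("connection", [])
        if not connections:
            problems.append(f"content[{i}].writer: no connection entry")
        for conn in connections:
            if not conn.get("jdbcUrl") or not conn.get("table"):
                problems.append(f"content[{i}].writer: connection needs jdbcUrl and table")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # json.load raises an error here if the file is not valid JSON.
    with open(sys.argv[1], encoding="utf-8") as f:
        found = check_job(json.load(f))
    print("\n".join(found) if found else "no problems found")
```

Assuming the script is saved as `check_job.py` (a name chosen here for illustration), it could be run as `python check_job.py job/hdfs2mysql.json`.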

4 Create the handsome2 table in the MySQL datax database

mysql> use datax;

mysql> create table handsome2(id int,name varchar(20));

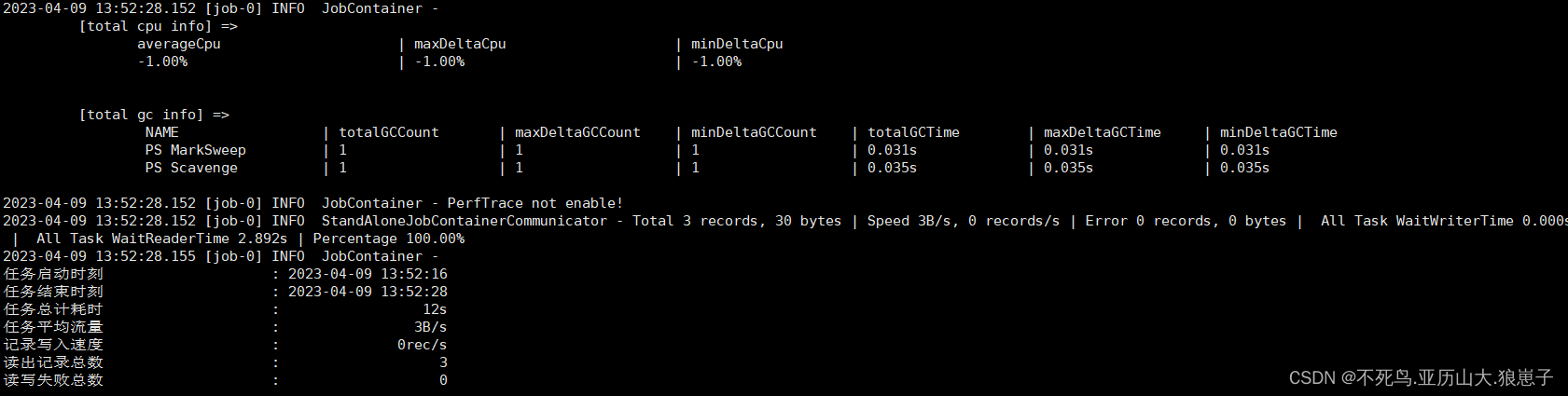

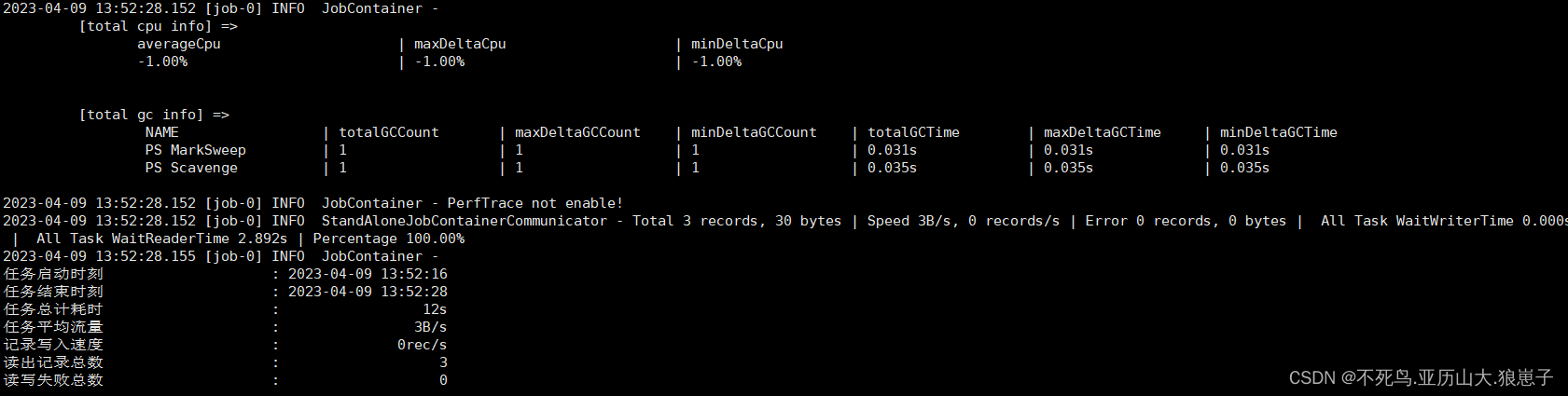

5 Run the job

bin/datax.py job/hdfs2mysql.json

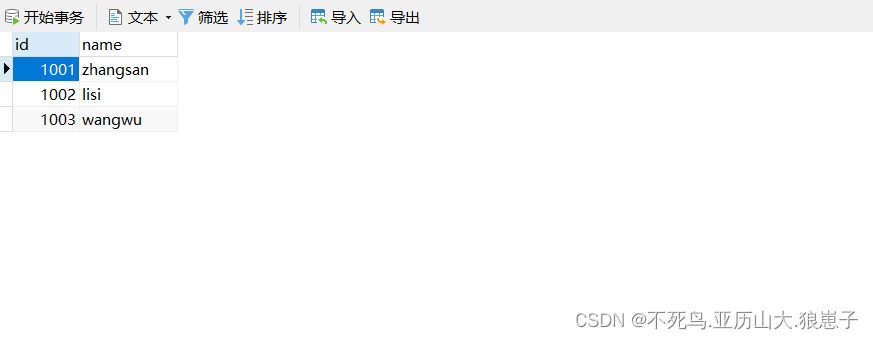

6 View the handsome2 table