If you deploy with Docker containers, you can skip the prerequisites below.

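Prerequisites

This article uses kafka_2.13-2.8.1.tgz (Kafka 2.8.1 built for Scala 2.13).

❀ Tip: this archive bundles ZooKeeper, so there is no need to download ZooKeeper separately.

Requirements

❀ Make sure a JDK 8 environment is available.

1. Check the version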

java -version

2. Install JDK 8 (with internet access)

#Ubuntu

apt install -y openjdk-8-jdk-headless

#CentOS

yum install -y java-1.8.0-openjdk-devel

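3. Install JDK 8 (offline)

Package: jdk-8u221

Link: https://pan.baidu.com/s/1uhrtDj-pG3BsTq8tMiZAlg

Extraction code: 26k2

# Extract the JDK into /usr/local so that the path matches JAVA_HOME below

tar -zxvf jdk-8u221-linux-x64.tar.gz -C /usr/local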

# Edit the system profile to configure the environment variables

vi /etc/profile

# Append the following lines to the end of /etc/profile (in vi/vim, press "i" to enter insert mode):

export JAVA_HOME=/usr/local/jdk1.8.0_221

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib:$CLASSPATH

export JAVA_PATH=${JAVA_HOME}/bin:${JRE_HOME}/bin

export PATH=$PATH:${JAVA_PATH}

# Reload the profile so the variables take effect

source /etc/profile

# Verify the installation

java -version
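If you want a script to stop when the wrong Java is active, the version check can be automated. A minimal sketch, assuming bash; `java_major_version` and `installed_java_version` are hypothetical helpers. The first turns a version string such as `1.8.0_221` (JDK 8, legacy numbering) or `11.0.12` (JDK 11) into its major version; the second reads the string that `java -version` prints to stderr.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Java major version for a version string.
# Legacy scheme "1.8.0_221" -> 8; modern scheme "11.0.12" -> 11.
java_major_version() {
  local v=$1
  if [[ $v == 1.* ]]; then
    v=${v#1.}                    # drop the leading "1." of the legacy scheme
  fi
  printf '%s\n' "${v%%[._]*}"    # keep everything before the first "." or "_"
}

# Hypothetical helper: read the version reported by the java on PATH.
# java -version writes to stderr, e.g.: openjdk version "1.8.0_292"
installed_java_version() {
  java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}'
}
```

Usage: `[ "$(java_major_version "$(installed_java_version)")" = 8 ] || echo "JDK 8 is not the active java" >&2`.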

Kafka server deployment

Single node

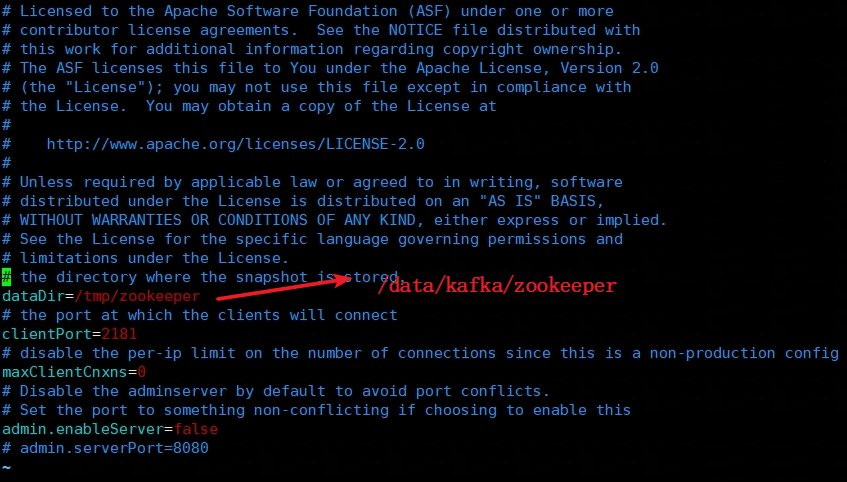

1. Edit the ZooKeeper configuration

❀ Change the data storage path

Tip: create the directory before switching dataDir to your own path!

vim config/zookeeper.properties

dataDir=/data/kafka/zookeeper

# the port at which the clients will connect

clientPort=2181

# disable the per-ip limit on the number of connections since this is a non-production config

maxClientCnxns=0

# Disable the adminserver by default to avoid port conflicts.

# Set the port to something non-conflicting if choosing to enable this

admin.enableServer=false

# admin.serverPort=8080
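Because the data directory has to exist first, creating it and writing the settings above can be done in one step. A minimal sketch, assuming bash; `write_zk_standalone_config` is a hypothetical helper that writes only the settings shown above and overwrites the target file, so back up the original first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the ZooKeeper data directory, then write a
# standalone zookeeper.properties with the settings shown above.
# The target file is overwritten.
# Usage: write_zk_standalone_config <data_dir> <properties_file>
write_zk_standalone_config() {
  local data_dir=$1 conf=$2
  mkdir -p "$data_dir" || return 1   # the directory must exist before startup
  cat > "$conf" <<EOF
dataDir=${data_dir}
# the port at which the clients will connect
clientPort=2181
# disable the per-ip limit on the number of connections since this is a non-production config
maxClientCnxns=0
# Disable the adminserver by default to avoid port conflicts.
admin.enableServer=false
EOF
}
```

Usage: `write_zk_standalone_config /data/kafka/zookeeper config/zookeeper.properties`.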

2. Start ZooKeeper

./bin/zookeeper-server-start.sh config/zookeeper.properties

# Start in the background

nohup ./bin/zookeeper-server-start.sh config/zookeeper.properties > zookeeper_nohup.log 2>&1 &
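Since `nohup ... &` returns immediately, a script that starts ZooKeeper and then Kafka may need to wait until port 2181 accepts connections. A minimal sketch, assuming bash built with `/dev/tcp/<host>/<port>` support (the default on common distributions) and the `timeout` command from GNU coreutils; `wait_for_port` is a hypothetical helper that only checks that the TCP port is open, not that ZooKeeper is healthy.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll until host:port accepts TCP connections.
# Returns 0 once connected, 1 if max_wait seconds pass first.
# Usage: wait_for_port <host> <port> [max_wait_seconds]
wait_for_port() {
  local host=$1 port=$2 max_wait=${3:-30} waited=0
  while (( waited < max_wait )); do
    # Redirecting to /dev/tcp/host/port makes bash open a TCP connection;
    # timeout keeps a single attempt from hanging on an unreachable host.
    if timeout 1 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}
```

Usage: `wait_for_port 127.0.0.1 2181 60 || echo "ZooKeeper did not come up" >&2`, placed between the ZooKeeper and Kafka start commands.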

3. Edit the Kafka configuration file config/server.properties

# Set the advertised address to this host's IP so clients can reach the broker:

advertised.listeners=PLAINTEXT://192.168.88.89:9092

# Set the directory where Kafka stores its log (data) files:

log.dirs=/data/kafka/kafka-logs

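# ZooKeeper connection string: ZooKeeper IP:port, optionally followed by a chroot path (e.g. /kafka) that becomes the root of all Kafka znodes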

zookeeper.connect=192.168.88.89:2181
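When several machines need the same edits, they can be applied without an editor. A minimal sketch, assuming GNU `sed` and `grep`; `set_prop` is a hypothetical helper that replaces an existing `key=value` line (uncommenting it if needed, since the stock server.properties ships `advertised.listeners` commented out) and appends the key if it is missing. Values containing `|` or `&` are not escaped.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set key=value in a .properties file (GNU sed/grep).
# An existing "key=..." or "#key=..." line is replaced; otherwise the pair
# is appended. Values containing "|" or "&" are not escaped.
# Usage: set_prop <file> <key> <value>
set_prop() {
  local file=$1 key=$2 value=$3 key_re
  # Escape dots so that "log.dirs" is matched literally by the regex.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -q "^#\?${key_re}=" "$file"; then
    sed -i "s|^#\?${key_re}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

Usage: `set_prop config/server.properties advertised.listeners PLAINTEXT://192.168.88.89:9092`, and likewise for `log.dirs` and `zookeeper.connect`.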

4. Start Kafka

./bin/kafka-server-start.sh config/server.properties

# Start in the background

nohup bin/kafka-server-start.sh config/server.properties > kafka_server_nohup.log 2>&1 &

Cluster

1. Bind host names in /etc/hosts

vim /etc/hosts

# Map each IP to its host names (on every node)

192.168.5.11 kafka1 zoo1

192.168.5.12 kafka2 zoo2

192.168.5.13 kafka3 zoo3
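The same three lines must exist on every node, and re-running a setup script should not duplicate them. A minimal sketch, assuming bash; `add_host_entry` is a hypothetical helper that appends an `IP names...` line only if that IP is not already mapped. The file is a parameter so it can be tried on a scratch copy before touching /etc/hosts, which requires root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "ip name..." to a hosts file unless the IP
# already appears as the first field of some line.
# Usage: add_host_entry <hosts_file> <ip> <name>...
add_host_entry() {
  local file=$1 ip=$2
  shift 2
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
    return 0   # already mapped: leave the existing line untouched
  fi
  printf '%s %s\n' "$ip" "$*" >> "$file"
}
```

Usage: `add_host_entry /etc/hosts 192.168.5.11 kafka1 zoo1`, repeated for the other two nodes.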

2. Edit the ZooKeeper configuration

vim config/zookeeper.properties

dataDir=/data/kafka/zkdata

# the port at which the clients will connect

clientPort=2181

# disable the per-ip limit on the number of connections since this is a non-production config

maxClientCnxns=0

# Disable the adminserver by default to avoid port conflicts.

# Set the port to something non-conflicting if choosing to enable this

admin.enableServer=false

# admin.serverPort=8080

tickTime=2000

initLimit=5

syncLimit=2

server.1=zoo1:2888:3888

server.2=zoo2:2888:3888

server.3=zoo3:2888:3888
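In `server.N=host:2888:3888`, N is the server's ID (the number in its myid file), port 2888 is used by followers to connect to the leader, and port 3888 is used for leader election. `initLimit` and `syncLimit` are counted in ticks, so with `tickTime=2000` followers get 5 × 2000 ms = 10 s to connect and sync to the leader, and may lag at most 2 × 2000 ms = 4 s before being dropped. A minimal sketch, assuming bash, that generates the server lines from an ordered host list; `zk_server_lines` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print one server.N line per host, numbering from 1.
# The Nth host must then get N in its myid file.
# Usage: zk_server_lines <host1> <host2> ...
zk_server_lines() {
  local id=1 host
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$id" "$host"
    id=$((id + 1))
  done
}
```

Usage: `zk_server_lines zoo1 zoo2 zoo3 >> config/zookeeper.properties` prints exactly the three lines above.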

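3. Create the data directory and the node ID (myid)

myid must be unique within the cluster: give each machine a different value, matching its server.N line above (1 on zoo1, 2 on zoo2, 3 on zoo3).

mkdir -p /data/kafka/zkdata && echo 1 > /data/kafka/zkdata/myid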
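The myid file must sit directly in the `dataDir` directory (here /data/kafka/zkdata) and contain only the server's number, which the ZooKeeper documentation restricts to 1 through 255. A minimal sketch, assuming bash; `write_myid` is a hypothetical helper that validates the ID before writing it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write <id> to <data_dir>/myid after checking 1..255.
# Usage: write_myid <data_dir> <id>
write_myid() {
  local data_dir=$1 id=$2
  # Reject non-numbers, leading zeros, 0, and anything above 255.
  if ! [[ $id =~ ^[1-9][0-9]*$ ]] || (( id > 255 )); then
    echo "myid must be an integer from 1 to 255, got: $id" >&2
    return 1
  fi
  mkdir -p "$data_dir" && printf '%s\n' "$id" > "$data_dir/myid"
}
```

Usage on zoo2: `write_myid /data/kafka/zkdata 2`.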

4. Start ZooKeeper

./bin/zookeeper-server-start.sh config/zookeeper.properties

5. Edit the Kafka configuration

vim config/server.properties

# Must be different on each node

broker.id=1

listeners=PLAINTEXT://kafka1:9092

zookeeper.connect=zoo1:2181,zoo2:2181,zoo3:2181
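Only `broker.id` and `listeners` change from node to node, while `zookeeper.connect` lists the whole ensemble everywhere. A minimal sketch, assuming bash and the kafkaN/zooN host names bound in /etc/hosts above; `kafka_node_props` is a hypothetical helper that prints the three settings for node N so they can be merged into that node's server.properties.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the per-node Kafka settings for node <n>,
# assuming hosts kafka1..kafkaN and the ZooKeeper ensemble zoo1..zoo3.
# Usage: kafka_node_props <n>
kafka_node_props() {
  local n=$1
  printf 'broker.id=%d\n' "$n"
  printf 'listeners=PLAINTEXT://kafka%d:9092\n' "$n"
  printf 'zookeeper.connect=zoo1:2181,zoo2:2181,zoo3:2181\n'
}
```

For example, `kafka_node_props 2` prints the values for kafka2.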

6. Start Kafka

./bin/kafka-server-start.sh config/server.properties

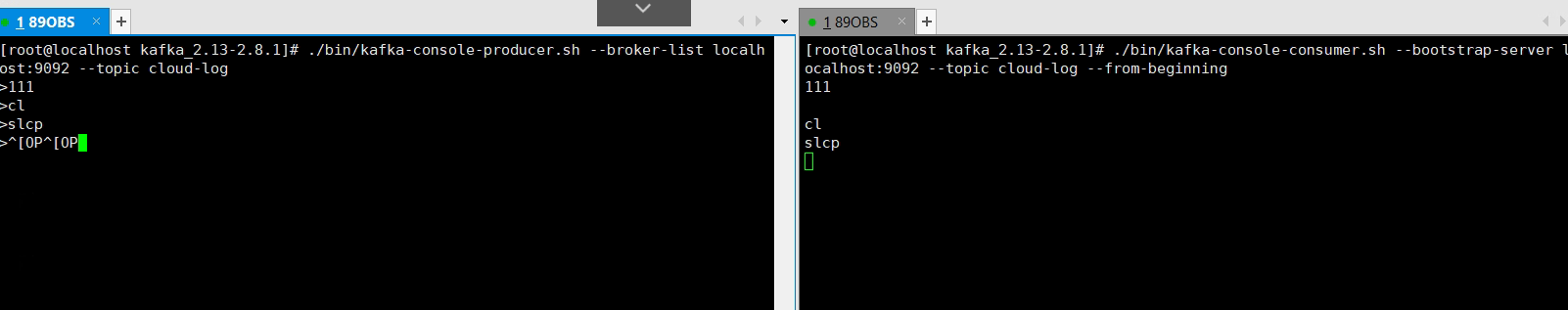

Testing

# Create a topic

./bin/kafka-topics.sh --create --zookeeper 192.168.88.89:2181 --partitions 1 --replication-factor 1 --topic cloud-log

# List topics

bin/kafka-topics.sh --zookeeper localhost:2181 --list

# Send messages

./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic cloud-log

# Receive messages

./bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic cloud-log --from-beginning
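The producer and consumer above are interactive. For a scripted check, one message can be piped into the producer and read back using the consumer's `--timeout-ms` option, which makes it exit after a period with no new messages. A minimal sketch, assuming bash, that the topic already exists, and that the current directory (or `KAFKA_HOME`) is the Kafka installation; `kafka_smoke_test` is a hypothetical helper. It passes `--bootstrap-server` to the producer, which Kafka 2.8.1 accepts in place of `--broker-list`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: send one uniquely tagged message and check that the
# console consumer reads it back. Assumes the topic already exists.
# Returns 0 on success, 1 on failure, 2 if the Kafka scripts are missing.
# Usage: kafka_smoke_test <bootstrap_server> <topic>
kafka_smoke_test() {
  local bootstrap=$1 topic=$2 bin="${KAFKA_HOME:-.}/bin"
  local msg="smoke-test-$(date +%s)-$$"
  if [ ! -x "$bin/kafka-console-producer.sh" ] || [ ! -x "$bin/kafka-console-consumer.sh" ]; then
    echo "Kafka scripts not found under $bin" >&2
    return 2
  fi
  # Produce one message from stdin; the producer exits at end of input.
  printf '%s\n' "$msg" | "$bin/kafka-console-producer.sh" \
    --bootstrap-server "$bootstrap" --topic "$topic" || return 1
  # Read from the beginning; stop after 10 s without new messages.
  "$bin/kafka-console-consumer.sh" --bootstrap-server "$bootstrap" \
    --topic "$topic" --from-beginning --timeout-ms 10000 2>/dev/null \
    | grep -qxF "$msg"
}
```

Usage: `KAFKA_HOME=/opt/kafka_2.13-2.8.1 kafka_smoke_test localhost:9092 cloud-log && echo OK`.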

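Kafka containerized deployment

❀ Tip:

/docker-entrypoint.sh: line 43: /conf/zoo.cfg: Permission denied

This error usually means a directory lacks permissions; grant permissions on the corresponding directory:

chmod 777 xxx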
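The permission error above is easiest to avoid by creating the mounted host directories before running the container. A minimal sketch, assuming bash; `prepare_zk_dirs` is a hypothetical helper that creates conf, data and datalog under a base directory (e.g. /data/elk/zookeeper, as mounted in the command below) and applies the same permissive mode as the tip unless another mode is given.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the host directories mounted into the
# ZooKeeper container and set their mode.
# Usage: prepare_zk_dirs <base_dir> [mode]   (mode defaults to 777, as in the tip)
prepare_zk_dirs() {
  local base=$1 mode=${2:-777} sub
  for sub in conf data datalog; do
    mkdir -p "$base/$sub" || return 1
    chmod "$mode" "$base/$sub" || return 1
  done
}
```

Usage: `prepare_zk_dirs /data/elk/zookeeper`.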

1. Start the ZooKeeper container

docker run -d -p 2181:2181 -p 2888:2888 -p 3888:3888 --privileged=true \
--restart=always --name=zkNode-1 \
-v /data/elk/zookeeper/conf:/conf \
-v /data/elk/zookeeper/data:/data \
-v /data/elk/zookeeper/datalog:/datalog wurstmeister/zookeeper

2. Start the Kafka container

docker run -d --restart=always --name kafka \
-p 9092:9092 \
-v /data/elk/kafka/logs:/opt/kafka/logs \
-v /data/elk/kafka/data:/kafka/kafka-logs \
-v /data/elk/kafka/conf:/opt/kafka/config \
-e KAFKA_BROKER_ID=1 \
-e KAFKA_LOG_DIRS="/kafka/kafka-logs" \
-e KAFKA_ZOOKEEPER_CONNECT=10.84.77.10:2181 \
-e KAFKA_DEFAULT_REPLICATION_FACTOR=1 \
-e KAFKA_LOG_RETENTION_HOURS=72 \
-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://10.84.77.10:9092 \
-e ALLOW_PLAINTEXT_LISTENER=yes \
-e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 -t wurstmeister/kafka

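docker run options:

-d: run the container in the background (detached).

--restart=always: always restart the container if it exits.

--name: set the container name.

-p: map a host port to a container port. For example, 9902:9092 maps port 9092 in the container to port 9902 on the host, so requests to port 9902 on the host are forwarded to port 9092 inside the container.

-v: map a host path or file into the container. For example, /home/kafka/logs:/opt/kafka/logs maps the container directory /opt/kafka/logs to the host directory /home/kafka/logs, so the container's files can be read directly from /home/kafka/logs on the host. Data directories and configuration files are usually mounted this way for easier management and data persistence.

-e: set an environment variable. For example, -e KAFKA_BROKER_ID=1 adds that variable to the container's environment; on startup the container reads it and uses it to override the corresponding default in its configuration file (here, broker.id=1 in server.properties).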
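The wurstmeister/kafka image derives each property name from the variable name: it drops the `KAFKA_` prefix, lowercases the rest and turns underscores into dots, so `KAFKA_LOG_RETENTION_HOURS` becomes `log.retention.hours`. A minimal sketch of that naming rule, assuming bash; `env_to_prop` is a hypothetical helper that only mirrors the convention (the image's own start script also skips a few non-configuration variables such as `KAFKA_HOME`), handy for checking which setting an `-e` flag changes.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the naming rule described above:
# KAFKA_LOG_RETENTION_HOURS -> log.retention.hours
# Usage: env_to_prop <VARIABLE_NAME>
env_to_prop() {
  printf '%s\n' "${1#KAFKA_}" | tr '[:upper:]' '[:lower:]' | tr '_' '.'
}
```

For example, `env_to_prop KAFKA_ADVERTISED_LISTENERS` prints `advertised.listeners`.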

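3. Test Kafka

# Open a shell inside the Kafka container

docker exec -it kafka /bin/bash

# Go to the Kafka installation directory

cd /opt/kafka_2.13-2.8.1/

# If it is not there, locate it with this command

find / -name kafka-topics.sh

# Create a topic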

./bin/kafka-topics.sh --create --zookeeper 10.84.77.10:2181 --partitions 1 --replication-factor 1 --topic cloud-log

# Send messages

./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic cloud-log

# Receive messages

./bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic cloud-log --from-beginning

All done!

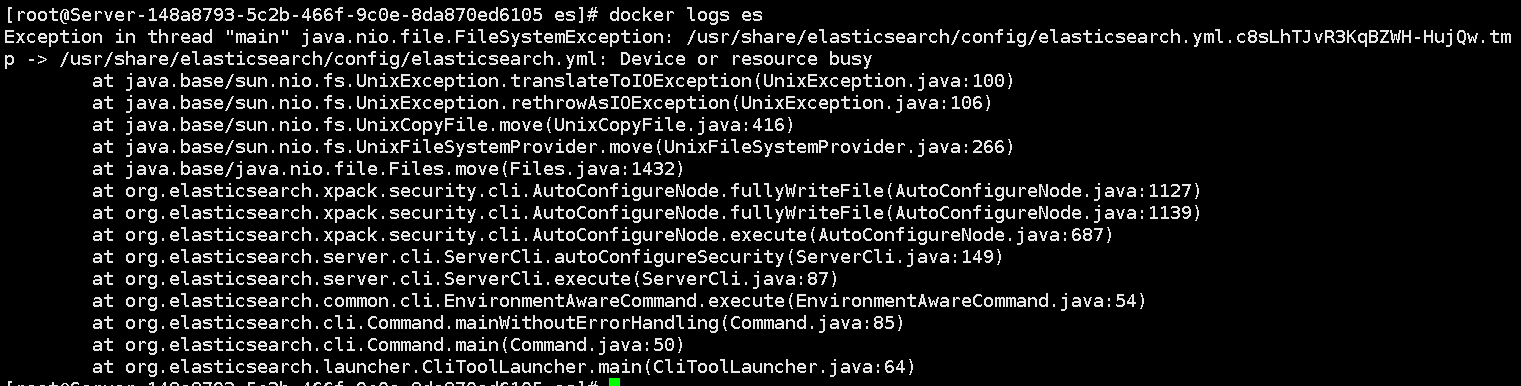

Common issues

- FileSystemException: Device or resource busy

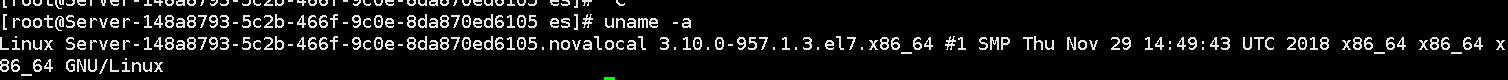

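❀ The mount point leak is a bug in kernel 3.10 that was fixed in later kernel versions, so upgrading the kernel would solve it. This was a production environment, though, so I took a step back instead: version 8 tormented me for a long time, but after switching to version 7 it worked in no time.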

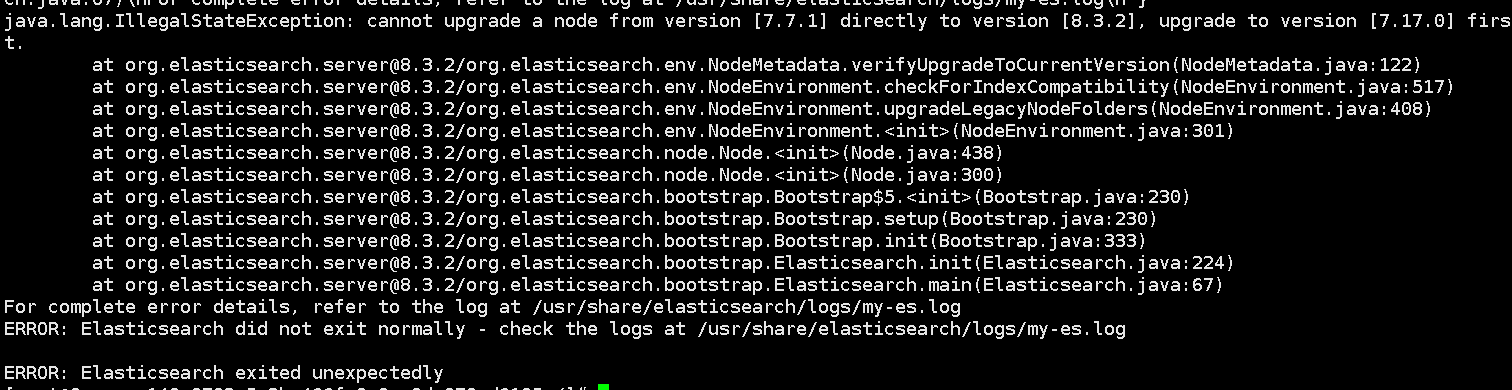

- cannot upgrade a node from version [7.7.1] directly to version [8.3.2], upgrade to version [7.17.0] first.

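❀ Just as the message says: version 7.7.1 had been installed earlier, and 8.3.2 was then installed on top of the files mounted for it. Deleting the old files fixes the problem.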

- /docker-entrypoint.sh: line 43: /conf/zoo.cfg: Permission denied

❀ This error usually means a directory lacks permissions; grant permissions on the corresponding directory:

chmod 777 xxx

- FileNotFoundException: /opt/kafka_2.13-2.8.1/bin/../config/tools-log4j.properties (No such file or directory)

![FileNotFoundException screenshot](https://img.slcp.top/QQ%E5%9B%BE%E7%89%8720220730183800.png)

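❀ As the message says, create the missing file at the indicated path. This likely happens when the host directory mounted over /opt/kafka/config in the Kafka container command is empty, which hides the configuration files shipped in the image.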

- kafka.common.InconsistentClusterIdException: The Cluster ID C4wRULTzSGqNoEAInvubIw doesn't match stored clusterId Some(eA5rD8rZSUm3EXr2glib2w) in meta.properties. The broker is trying to join the wrong cluster. Configured zookeeper.connect may be wrong.

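❀ In this case, delete Kafka's log directory (the log.dirs path, which holds meta.properties) so that the broker regenerates it. Note that this also deletes the topic data stored on that broker.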

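- Connection timeout between Kafka and ZooKeeper when creating a topic

❀ First check whether the ZooKeeper container started successfully:

docker logs zkNode-1

Next, check that the IP address is correct. Finally, check the command itself: there must be a space after --zookeeper, and --bootstrap-server takes a Kafka broker address (port 9092), not the ZooKeeper port 2181:

./bin/kafka-topics.sh --create --zookeeper 192.168.88.89:2181 --partitions 1 --replication-factor 1 --topic cloud-log

or

./bin/kafka-topics.sh --create --bootstrap-server 10.84.77.10:9092 --partitions 1 --replication-factor 1 --topic cloud-log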

- For any other issues not covered here, leave a comment and I will reply as soon as I can.
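For the InconsistentClusterIdException above, the stored cluster ID lives in a meta.properties file inside each directory listed in `log.dirs`. A minimal sketch, assuming bash; `kafka_meta_files` is a hypothetical helper that reads `log.dirs` (or the older `log.dir`, then Kafka's default /tmp/kafka-logs) from server.properties and prints where those files are, so you can see exactly which directories are affected before deleting anything.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the meta.properties path for every directory in
# log.dirs (falling back to log.dir, then Kafka's default /tmp/kafka-logs).
# Usage: kafka_meta_files <server.properties>
kafka_meta_files() {
  local conf=$1 dirs dir
  local -a dir_list
  dirs=$(sed -n 's/^log\.dirs=//p' "$conf" | tail -n 1)
  [ -n "$dirs" ] || dirs=$(sed -n 's/^log\.dir=//p' "$conf" | tail -n 1)
  [ -n "$dirs" ] || dirs=/tmp/kafka-logs
  # log.dirs is a comma-separated list of directories.
  IFS=',' read -ra dir_list <<< "$dirs"
  for dir in "${dir_list[@]}"; do
    printf '%s/meta.properties\n' "$dir"
  done
}
```

Usage: `kafka_meta_files config/server.properties`.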
