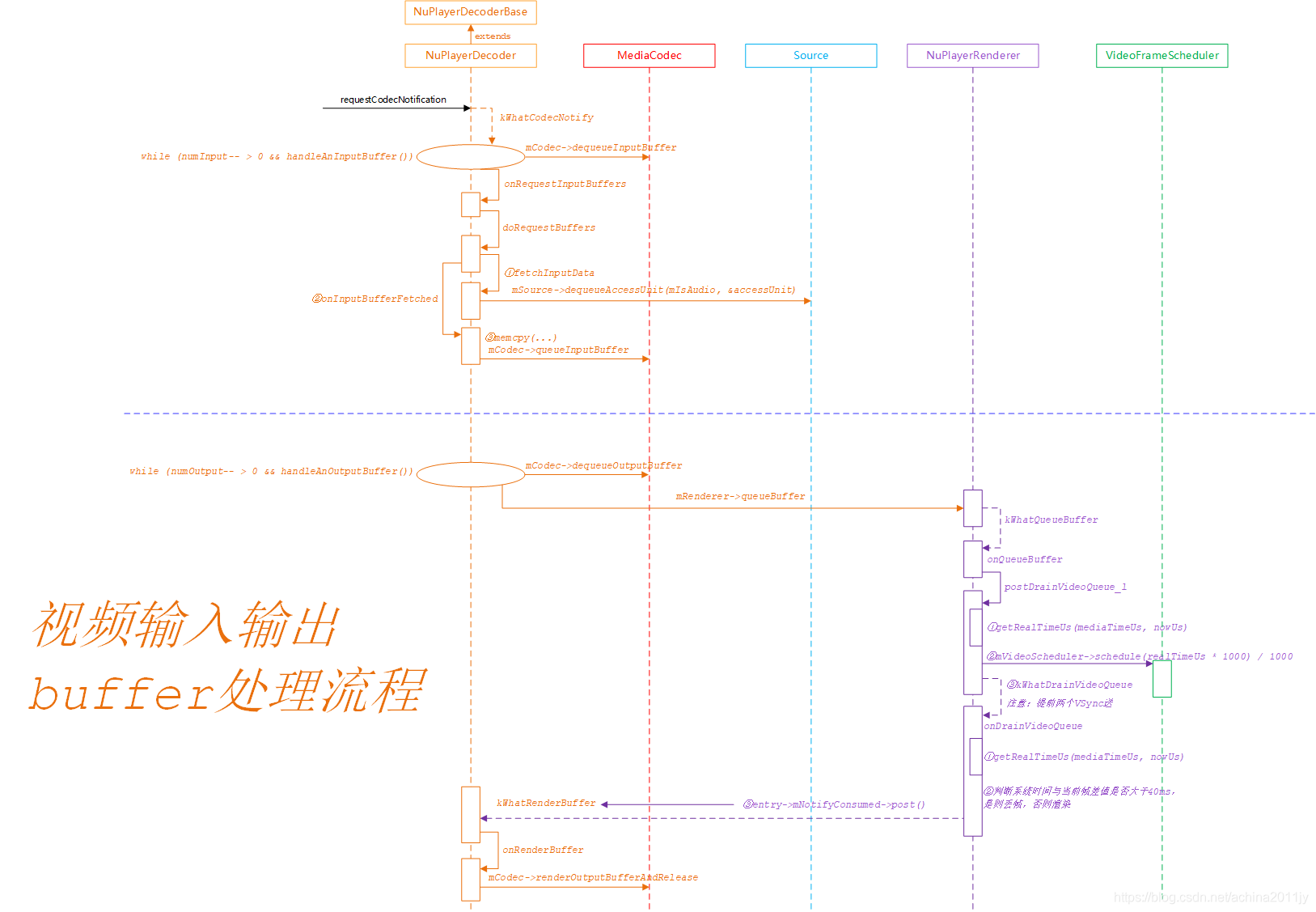

I. Introduction:

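In the previous post I analyzed how audio buffers are handled. NuPlayer shares much of its buffer-handling code between audio and video, so this post starts directly from the differences. Overall, NuPlayer's synchronization mechanism is much like ExoPlayer's: both estimate a display time from the pts in the stream and the system time, then combine it with the vsync timing to decide the final display time. The difference is that NuPlayer's calibration of the display time is very complicated, and I could not follow much of it. If you set the calibration aside, though, the rest is easy to understand.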

II. Determining the display time of a video frame:

Many of the buffer-handling functions are shared between audio and video, so we go straight to postDrainVideoQueue_l in NuPlayerRenderer.cpp:

void NuPlayer::Renderer::postDrainVideoQueue_l() {

if (mDrainVideoQueuePending

|| mSyncQueues

|| (mPaused && mVideoSampleReceived)) {

return;

}

if (mVideoQueue.empty()) {

return;

}

QueueEntry &entry = *mVideoQueue.begin();

/* 1. Create the kWhatDrainVideoQueue message that will be posted later */

sp<AMessage> msg = new AMessage(kWhatDrainVideoQueue, id());

msg->setInt32("generation", mVideoQueueGeneration);

if (entry.mBuffer == NULL) {

// EOS doesn't carry a timestamp.

msg->post();

mDrainVideoQueuePending = true;

return;

}

int64_t delayUs;

int64_t nowUs = ALooper::GetNowUs();

int64_t realTimeUs;

if (mFlags & FLAG_REAL_TIME) {

int64_t mediaTimeUs;

CHECK(entry.mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

realTimeUs = mediaTimeUs;

} else {

int64_t mediaTimeUs;

CHECK(entry.mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

if (mAnchorTimeMediaUs < 0) {

setAnchorTime(mediaTimeUs, nowUs);

mPausePositionMediaTimeUs = mediaTimeUs;

mAnchorMaxMediaUs = mediaTimeUs;

realTimeUs = nowUs;

} else {

/* 2. Get the display time of the current frame */

realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);

}

if (!mHasAudio) {

mAnchorMaxMediaUs = mediaTimeUs + 100000; // smooth out videos >= 10fps

}

// Heuristics to handle situation when media time changed without a

// discontinuity. If we have not drained an audio buffer that was

// received after this buffer, repost in 10 msec. Otherwise repost

// in 500 msec.

/* Delay until the current frame is due = display time of the current frame - system time */

delayUs = realTimeUs - nowUs;

if (delayUs > 500000) {

int64_t postDelayUs = 500000;

if (mHasAudio && (mLastAudioBufferDrained - entry.mBufferOrdinal) <= 0) {

postDelayUs = 10000;

}

msg->setWhat(kWhatPostDrainVideoQueue);

msg->post(postDelayUs);

mVideoScheduler->restart();

ALOGI("possible video time jump of %dms, retrying in %dms",

(int)(delayUs / 1000), (int)(postDelayUs / 1000));

mDrainVideoQueuePending = true;

return;

}

}

/* 3. Calibrate the display time */

realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;

/* 4. Compute the duration of two vsync periods */

int64_t twoVsyncsUs = 2 * (mVideoScheduler->getVsyncPeriod() / 1000);

/* Recompute the delay = calibrated display time - system time */

delayUs = realTimeUs - nowUs;

/* 5. Post the message two vsync periods ahead of the display time */

ALOGW_IF(delayUs > 500000, "unusually high delayUs: %" PRId64, delayUs);

// post 2 display refreshes before rendering is due

msg->post(delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0);

mDrainVideoQueuePending = true;

}

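Note 1:

Once the display time has been determined, this message is posted, and its handler performs the rendering.

Note 2:

This yields a preliminary display time. mediaTimeUs is the pts from the stream and nowUs is the current system time. Let's follow getRealTimeUs to see how the preliminary display time is computed: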

int64_t NuPlayer::Renderer::getRealTimeUs(int64_t mediaTimeUs, int64_t nowUs) {

int64_t currentPositionUs;

/* Get the current playback position */

if (mPaused || getCurrentPositionOnLooper(

&currentPositionUs, nowUs, true /* allowPastQueuedVideo */) != OK) {

// If failed to get current position, e.g. due to audio clock is not ready, then just

// play out video immediately without delay.

return nowUs;

}

/* frame timestamp - current playback position + system time */

return (mediaTimeUs - currentPositionUs) + nowUs;

}

The return value depends on the current playback position, which comes from getCurrentPositionOnLooper:

status_t NuPlayer::Renderer::getCurrentPositionOnLooper(

int64_t *mediaUs, int64_t nowUs, bool allowPastQueuedVideo) {

int64_t currentPositionUs;

/* This condition is false unless the renderer is paused */

if (getCurrentPositionIfPaused_l(&currentPositionUs)) {

*mediaUs = currentPositionUs;

return OK;

}

return getCurrentPositionFromAnchor(mediaUs, nowUs, allowPastQueuedVideo);

}

Continue into getCurrentPositionFromAnchor:

// Called on any threads.

status_t NuPlayer::Renderer::getCurrentPositionFromAnchor(

int64_t *mediaUs, int64_t nowUs, bool allowPastQueuedVideo) {

Mutex::Autolock autoLock(mTimeLock);

if (!mHasAudio && !mHasVideo) {

return NO_INIT;

}

if (mAnchorTimeMediaUs < 0) {

return NO_INIT;

}

/* Current playback position = (system time - system time of the anchor) + audio timestamp of the anchor */

int64_t positionUs = (nowUs - mAnchorTimeRealUs) + mAnchorTimeMediaUs;

if (mPauseStartedTimeRealUs != -1) {

positionUs -= (nowUs - mPauseStartedTimeRealUs);

}

// limit position to the last queued media time (for video only stream

// position will be discrete as we don't know how long each frame lasts)

if (mAnchorMaxMediaUs >= 0 && !allowPastQueuedVideo) {

if (positionUs > mAnchorMaxMediaUs) {

positionUs = mAnchorMaxMediaUs;

}

}

if (positionUs < mAudioFirstAnchorTimeMediaUs) {

positionUs = mAudioFirstAnchorTimeMediaUs;

}

*mediaUs = (positionUs <= 0) ? 0 : positionUs;

return OK;

}

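Note that NuPlayer still synchronizes by adjusting video frames against the audio timestamps, so here it needs the position of the audio that has already been played. The key is the commented line in the code. Let's see where mAnchorTimeRealUs is updated: it holds the system time that corresponds to the audio anchor position, and it is updated through setAnchorTime: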

void NuPlayer::Renderer::setAnchorTime(

int64_t mediaUs, int64_t realUs, int64_t numFramesWritten, bool resume) {

Mutex::Autolock autoLock(mTimeLock);

/* Update the audio timestamp taken from the stream */

mAnchorTimeMediaUs = mediaUs;

/* Update the system time of the anchor, derived from AudioTrack's actual playback time */

mAnchorTimeRealUs = realUs;

/* Update the number of frames actually written to AudioTrack */

mAnchorNumFramesWritten = numFramesWritten;

if (resume) {

mPauseStartedTimeRealUs = -1;

}

}

setAnchorTime is invoked from fillAudioBuffer, the callback through which the AudioSink pulls data from the player:

size_t NuPlayer::Renderer::fillAudioBuffer(void *buffer, size_t size) {

...

if (mAudioFirstAnchorTimeMediaUs >= 0) {

int64_t nowUs = ALooper::GetNowUs();

setAnchorTime(mAudioFirstAnchorTimeMediaUs, nowUs - getPlayedOutAudioDurationUs(nowUs));

}

...

}

The key is how getPlayedOutAudioDurationUs obtains the duration that has actually been played:

int64_t NuPlayer::Renderer::getPlayedOutAudioDurationUs(int64_t nowUs) {

uint32_t numFramesPlayed;

int64_t numFramesPlayedAt;

AudioTimestamp ts;

static const int64_t kStaleTimestamp100ms = 100000;

/* Call getTimestamp to obtain an accurate playback time */

status_t res = mAudioSink->getTimestamp(ts);

if (res == OK) {

// case 1: mixing audio tracks and offloaded tracks.

/* Number of audio frames already played */

numFramesPlayed = ts.mPosition;

/* System time at which the lower layer updated that value */

numFramesPlayedAt =

ts.mTime.tv_sec * 1000000LL + ts.mTime.tv_nsec / 1000;

/* Age of the timestamp: current system time minus the time of that update */

const int64_t timestampAge = nowUs - numFramesPlayedAt;

/* If the timestamp is more than 100 ms old, treat the update time as now - 100 ms */

if (timestampAge > kStaleTimestamp100ms) {

// This is an audio FIXME.

// getTimestamp returns a timestamp which may come from audio mixing threads.

// After pausing, the MixerThread may go idle, thus the mTime estimate may

// become stale. Assuming that the MixerThread runs 20ms, with FastMixer at 5ms,

// the max latency should be about 25ms with an average around 12ms (to be verified).

// For safety we use 100ms.

ALOGV("getTimestamp: returned stale timestamp nowUs(%lld) numFramesPlayedAt(%lld)",

(long long)nowUs, (long long)numFramesPlayedAt);

numFramesPlayedAt = nowUs - kStaleTimestamp100ms;

}

//ALOGD("getTimestamp: OK %d %lld", numFramesPlayed, (long long)numFramesPlayedAt);

} else if (res == WOULD_BLOCK) {

// case 2: transitory state on start of a new track

numFramesPlayed = 0;

numFramesPlayedAt = nowUs;

//ALOGD("getTimestamp: WOULD_BLOCK %d %lld",

// numFramesPlayed, (long long)numFramesPlayedAt);

} else {

// case 3: transitory at new track or audio fast tracks.

/* Get the number of frames played so far (the Java-level counterpart is getPlaybackHeadPosition) */

res = mAudioSink->getPosition(&numFramesPlayed);

CHECK_EQ(res, (status_t)OK);

numFramesPlayedAt = nowUs;

numFramesPlayedAt += 1000LL * mAudioSink->latency() / 2; /* XXX */

//ALOGD("getPosition: %d %lld", numFramesPlayed, numFramesPlayedAt);

}

// TODO: remove the (int32_t) casting below as it may overflow at 12.4 hours.

//CHECK_EQ(numFramesPlayed & (1 << 31), 0); // can't be negative until 12.4 hrs, test

/* Played-out duration (us) = frames played by AudioTrack * 1000 * milliseconds per frame + (current time - system time at which the frame count was sampled) */

int64_t durationUs = (int64_t)((int32_t)numFramesPlayed * 1000LL * mAudioSink->msecsPerFrame())

+ nowUs - numFramesPlayedAt;

if (durationUs < 0) {

// Occurs when numFramesPlayed position is very small and the following:

// (1) In case 1, the time nowUs is computed before getTimestamp() is called and

// numFramesPlayedAt is greater than nowUs by time more than numFramesPlayed.

// (2) In case 3, using getPosition and adding mAudioSink->latency() to

// numFramesPlayedAt, by a time amount greater than numFramesPlayed.

//

// Both of these are transitory conditions.

ALOGV("getPlayedOutAudioDurationUs: negative duration %lld set to zero", (long long)durationUs);

durationUs = 0;

}

ALOGV("getPlayedOutAudioDurationUs(%lld) nowUs(%lld) frames(%u) framesAt(%lld)",

(long long)durationUs, (long long)nowUs, numFramesPlayed, (long long)numFramesPlayedAt);

return durationUs;

}

Understanding this function requires knowing AudioTrack's getTimestamp and getPlaybackHeadPosition. Here are the two Java-level interfaces:

/* 1 */

public boolean getTimestamp(AudioTimestamp timestamp);

/* 2 */

public int getPlaybackHeadPosition();

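The former needs support from the underlying device, and can be thought of as a way to get an accurate pts. However, its value is not refreshed often, so it should not be called frequently; the official documentation suggests calling it about once every 10 to 60 seconds. Here are the fields of the parameter class:

public long framePosition; /* frame position within the stream */

public long nanoTime; /* system time at which the frame position was updated */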

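The latter can be called frequently. It returns the playback head position, that is, how much data AudioTrack has handed to the HAL since playback started. With these two functions in mind, we know there are two ways to obtain the audio pts from AudioTrack. Note that AudioSink wraps AudioTrack, and the Java and native layers differ, so in NuPlayer's code the functions that query the playback position from AudioTrack have different names and return values.

Looking at the code, it is essentially a calibration of the current playback time: it compares the system time at which the lower layer last updated its frame count with the current time, and uses that difference to compute the duration actually played.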
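The calibration described above can be illustrated with a minimal sketch. It is not NuPlayer's code: `AudioClockSample`, `framesToUs` and `playedOutDurationUs` are hypothetical names, and the sample rate is passed in directly instead of going through `mAudioSink->msecsPerFrame()`. Only the 100 ms staleness limit and the clamp to zero are taken from the listing.

```cpp
#include <cstdint>

// Hypothetical stand-in for what the audio sink reports (not the real AudioSink API).
struct AudioClockSample {
    uint32_t framesPlayed;  // frames played out so far
    int64_t sampledAtUs;    // system time at which framesPlayed was captured
};

// Convert a frame count into microseconds for the given sample rate.
inline int64_t framesToUs(uint32_t frames, uint32_t sampleRateHz) {
    return static_cast<int64_t>(frames) * 1000000LL / sampleRateHz;
}

// Estimate how much audio has been played out at time nowUs.
inline int64_t playedOutDurationUs(AudioClockSample s, uint32_t sampleRateHz, int64_t nowUs) {
    const int64_t kStaleUs = 100000;  // same 100 ms limit as in the listing
    if (nowUs - s.sampledAtUs > kStaleUs) {
        // A stale timestamp is only trusted for the last 100 ms.
        s.sampledAtUs = nowUs - kStaleUs;
    }
    // Frames played, plus the time elapsed since the frame count was sampled.
    const int64_t durationUs = framesToUs(s.framesPlayed, sampleRateHz) + (nowUs - s.sampledAtUs);
    return durationUs < 0 ? 0 : durationUs;  // transitory negative values become zero
}
```

For example, with 48000 frames played at 48 kHz and a sample taken 20 ms ago, the estimate is one second plus 20 ms.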

Back to getCurrentPositionFromAnchor above:

/* Current playback position = (system time - system time of the anchor) + audio timestamp of the anchor */

int64_t positionUs = (nowUs - mAnchorTimeRealUs) + mAnchorTimeMediaUs;

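With this computed playback position, Note 2 makes sense: by calibrating against the low-level audio data, the renderer infers when the video frame should be displayed to stay in sync.

Note 3:

Here the display time is calibrated, but NuPlayer's calibration code is too complicated and I did not manage to understand it.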
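To make the arithmetic of Note 2 concrete, here is a simplified sketch of the anchor-based clock. The names `Anchor`, `makeAnchor`, `currentPositionUs` and `realTimeForFrameUs` are hypothetical, and the sketch leaves out the pause handling, the mAnchorMaxMediaUs clamp and the locking that the real functions perform.

```cpp
#include <cstdint>

// Hypothetical simplified anchor: a media time paired with the system time at which it plays.
struct Anchor {
    int64_t mediaUs;  // audio timestamp from the stream
    int64_t realUs;   // system time that corresponds to mediaUs
};

// Build the anchor as fillAudioBuffer does: now minus the audio already played out.
inline Anchor makeAnchor(int64_t firstAudioMediaUs, int64_t nowUs, int64_t playedOutUs) {
    return Anchor{firstAudioMediaUs, nowUs - playedOutUs};
}

// Current playback position = time elapsed since the anchor + anchor media time.
inline int64_t currentPositionUs(const Anchor &a, int64_t nowUs) {
    const int64_t pos = (nowUs - a.realUs) + a.mediaUs;
    return pos < 0 ? 0 : pos;
}

// Preliminary display time = distance of the frame from the current position + now.
inline int64_t realTimeForFrameUs(const Anchor &a, int64_t frameMediaUs, int64_t nowUs) {
    return (frameMediaUs - currentPositionUs(a, nowUs)) + nowUs;
}
```

As long as the anchor does not change, the result for a given frame is the same whenever it is computed, because a later nowUs is offset by an equally later playback position.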
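I have not verified what schedule actually does, so the following is only an illustration of the general idea of aligning a target time to a vsync grid, not the VideoFrameScheduler algorithm. It assumes the time of one vsync and the refresh period are known; `snapToVsyncNs` and its parameters are hypothetical names. It works in nanoseconds because schedule is called with realTimeUs * 1000.

```cpp
#include <cstdint>

// Snap a target render time to the nearest vsync tick.
// vsyncBaseNs: time of any known vsync; vsyncPeriodNs: refresh period, must be > 0.
inline int64_t snapToVsyncNs(int64_t targetNs, int64_t vsyncBaseNs, int64_t vsyncPeriodNs) {
    const int64_t offset = targetNs - vsyncBaseNs;
    // Floor division, so that targets before the base are handled as well.
    int64_t ticks = offset / vsyncPeriodNs;
    if (offset % vsyncPeriodNs < 0) {
        --ticks;
    }
    const int64_t rem = offset - ticks * vsyncPeriodNs;  // 0 <= rem < period
    if (rem * 2 >= vsyncPeriodNs) {
        ++ticks;  // closer to the next tick than to the previous one
    }
    return vsyncBaseNs + ticks * vsyncPeriodNs;
}
```

With a 10 ms period and a vsync at time 0, a target of 14 ms snaps to 10 ms and a target of 16 ms snaps to 20 ms.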

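Note 4:

The official MediaCodec documentation recommends submitting a video frame about two vsync periods ahead of its display time, so NuPlayer computes the duration of two vsync periods here.

Note 5:

This makes sure the message is posted for rendering two vsync periods before the display time. That message is the kWhatDrainVideoQueue message from Note 1.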
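The delay computation from Notes 4 and 5 can be isolated into a small sketch. `drainPostDelayUs` is a hypothetical helper name; the arithmetic mirrors the last lines of postDrainVideoQueue_l, with the vsync period passed in instead of being read from mVideoScheduler.

```cpp
#include <cstdint>

// How long to wait before posting the drain message, so that it is handled
// two vsync periods before the frame is due. Frames that are due sooner
// than that (or are already late) are posted immediately.
inline int64_t drainPostDelayUs(int64_t realTimeUs, int64_t nowUs, int64_t vsyncPeriodNs) {
    const int64_t twoVsyncsUs = 2 * (vsyncPeriodNs / 1000);
    const int64_t delayUs = realTimeUs - nowUs;
    return delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0;
}
```

On a 60 Hz display (period 16666667 ns), a frame due in 100 ms is posted after 66668 us, while a frame due in 20 ms is posted right away.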

III. Video rendering:

Let's see how the kWhatDrainVideoQueue message handles rendering:

case kWhatDrainVideoQueue:

{

int32_t generation;

CHECK(msg->findInt32("generation", &generation));

if (generation != mVideoQueueGeneration) {

break;

}

mDrainVideoQueuePending = false;

onDrainVideoQueue();

Mutex::Autolock autoLock(mLock);

postDrainVideoQueue_l();

break;

}

Continue into onDrainVideoQueue():

void NuPlayer::Renderer::onDrainVideoQueue() {

if (mVideoQueue.empty()) {

return;

}

QueueEntry *entry = &*mVideoQueue.begin();

if (entry->mBuffer == NULL) {

// EOS

notifyEOS(false /* audio */, entry->mFinalResult);

mVideoQueue.erase(mVideoQueue.begin());

entry = NULL;

setVideoLateByUs(0);

return;

}

int64_t nowUs = -1;

int64_t realTimeUs;

if (mFlags & FLAG_REAL_TIME) {

CHECK(entry->mBuffer->meta()->findInt64("timeUs", &realTimeUs));

} else {

int64_t mediaTimeUs;

CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

nowUs = ALooper::GetNowUs();

/* Recompute the actual display time */

realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);

}

bool tooLate = false;

if (!mPaused) {

if (nowUs == -1) {

nowUs = ALooper::GetNowUs();

}

/* Record how late the current frame is */

setVideoLateByUs(nowUs - realTimeUs);

/* More than 40 ms late: the frame arrived too late and will be dropped */

tooLate = (mVideoLateByUs > 40000);

if (tooLate) {

ALOGV("video late by %lld us (%.2f secs)",

mVideoLateByUs, mVideoLateByUs / 1E6);

} else {

ALOGV("rendering video at media time %.2f secs",

(mFlags & FLAG_REAL_TIME ? realTimeUs :

(realTimeUs + mAnchorTimeMediaUs - mAnchorTimeRealUs)) / 1E6);

}

} else {

setVideoLateByUs(0);

if (!mVideoSampleReceived && !mHasAudio) {

// This will ensure that the first frame after a flush won't be used as anchor

// when renderer is in paused state, because resume can happen any time after seek.

setAnchorTime(-1, -1);

}

}

/* Note: these two values decide whether the frame is displayed or dropped */

entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000ll);

entry->mNotifyConsumed->setInt32("render", !tooLate);

entry->mNotifyConsumed->post();

mVideoQueue.erase(mVideoQueue.begin());

entry = NULL;

mVideoSampleReceived = true;

if (!mPaused) {

if (!mVideoRenderingStarted) {

mVideoRenderingStarted = true;

notifyVideoRenderingStart();

}

notifyIfMediaRenderingStarted();

}

}

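This function is not complicated. It recomputes the display time to exclude the time spent posting and handling the message. If the frame is more than 40 ms late, tooLate is set to true and the frame is dropped. There is one thing to note here: the code recomputes the display time but does not call schedule again to recalibrate it. I am not sure whether that is a bug. I did not work out how schedule works, but it surely adjusts the theoretical display time to some vsync point. The message posted by mNotifyConsumed->post() is kWhatRenderBuffer, which was covered in the audio post, so here is its handler without further comment: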

void NuPlayer::Decoder::onRenderBuffer(const sp<AMessage> &msg) {

status_t err;

int32_t render;

size_t bufferIx;

CHECK(msg->findSize("buffer-ix", &bufferIx));

if (!mIsAudio) {

int64_t timeUs;

sp<ABuffer> buffer = mOutputBuffers[bufferIx];

buffer->meta()->findInt64("timeUs", &timeUs);

if (mCCDecoder != NULL && mCCDecoder->isSelected()) {

mCCDecoder->display(timeUs);

}

}

/* If render is false, drop the frame instead of rendering it */

if (msg->findInt32("render", &render) && render) {

int64_t timestampNs;

CHECK(msg->findInt64("timestampNs", &timestampNs));

err = mCodec->renderOutputBufferAndRelease(bufferIx, timestampNs);

} else {

err = mCodec->releaseOutputBuffer(bufferIx);

}

if (err != OK) {

ALOGE("failed to release output buffer for %s (err=%d)",

mComponentName.c_str(), err);

handleError(err);

}

}
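The render-or-drop decision that onDrainVideoQueue passes to onRenderBuffer can be summarized in a short sketch. `RenderDecision` and `decideRender` are hypothetical names; the 40 ms threshold, the zero lateness while paused and the conversion to nanoseconds are taken from the listings above.

```cpp
#include <cstdint>

// Hypothetical summary of the values carried by the mNotifyConsumed message.
struct RenderDecision {
    bool render;          // false means the buffer is released without being displayed
    int64_t timestampNs;  // display time handed to the codec
    int64_t lateByUs;     // how late the frame is; 0 while paused
};

inline RenderDecision decideRender(int64_t realTimeUs, int64_t nowUs, bool paused) {
    const int64_t kLateThresholdUs = 40000;  // 40 ms, as in onDrainVideoQueue
    const int64_t lateByUs = paused ? 0 : nowUs - realTimeUs;
    const bool tooLate = lateByUs > kLateThresholdUs;
    return RenderDecision{!tooLate, realTimeUs * 1000LL, lateByUs};
}
```

A frame that is 30 ms late is still rendered, a frame that is 50 ms late is dropped, and nothing is dropped while the renderer is paused.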

IV. Summary:

The logic diagram of video frame handling and synchronization is shown below: