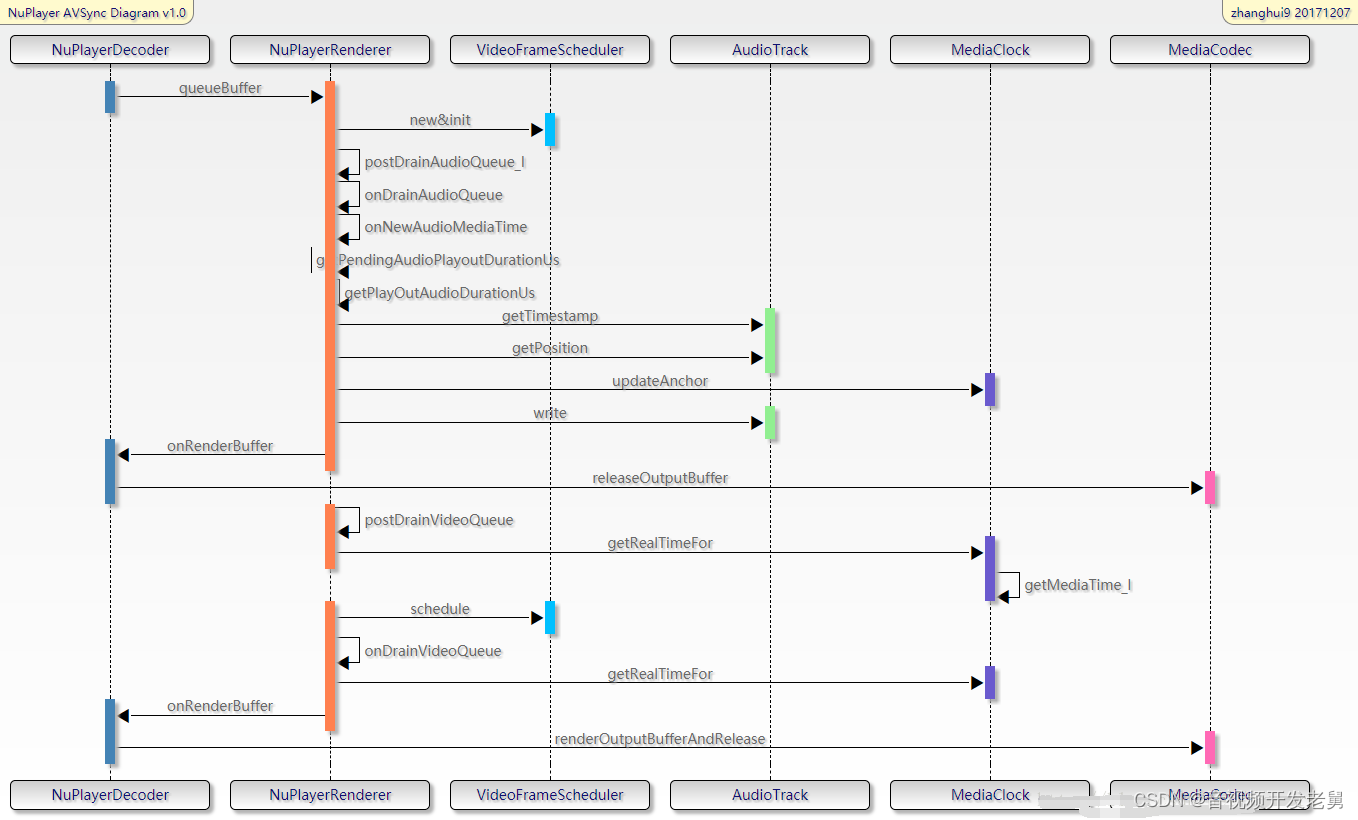

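For readers who have not looked at NuPlayer before, the sequence diagram above gives a brief overview of NuPlayer's basic audio/video sync (avsync) flow.

In the diagram, once NuPlayerDecoder has the decoded audio and video data, it hands them to NuPlayerRenderer through queueBuffer. For audio, NuPlayerRenderer uses postDrainAudioQueue_l to write into AudioTrack and to obtain the current audio playback time; as in ExoPlayer, this involves AudioTrack's getTimestamp and getPosition, and the MediaClock class records several anchor timestamps along the way. For video, NuPlayerRenderer uses postDrainVideoQueue to compute the actual display time and to adjust it against the vsync signal; the adjustment itself is done by the VideoFrameScheduler class. Note that NuPlayerRenderer only performs the avsync adjustment. The actual rendering goes back through onRenderBuffer into NuPlayerDecoder, which then calls MediaCodec's release methods (renderOutputBufferAndRelease or releaseOutputBuffer).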

I will first outline the key points of NuPlayer's avsync logic and then walk through the code in detail.

Video

1. Compute realTimeUs (the time at which the video frame should be displayed) from the PTS and the system time

NuPlayer::Renderer::postDrainVideoQueue

int64_t nowUs = ALooper::GetNowUs();

BufferItem *bufferItem = &*mBufferItems.begin();

int64_t itemMediaUs = bufferItem->mTimestamp / 1000;

// Calls MediaClock::getRealTimeFor to obtain the time at which the video frame should be displayed

int64_t itemRealUs = getRealTime(itemMediaUs, nowUs);

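realTimeUs = PTS - nowMediaUs + nowUs

= PTS - (mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs)) + nowUs

(This form assumes a playback rate of 1.0.)

mAnchorTimeMediaUs is the anchor media timestamp; think of it as the first media timestamp recorded when playback started.

mAnchorTimeRealUs is the anchor real (system) timestamp.

nowUs - mAnchorTimeRealUs is how much system time has elapsed since the anchor was taken.

Adding mAnchorTimeMediaUs gives the media timestamp that corresponds to the current system time.

Subtracting that from the PTS gives how long it is until this frame should be shown.

Finally, adding the current system time gives the time at which the frame should be displayed.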
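The formula above can be sketched as a small standalone function. This is only an illustration at a playback rate of 1.0; the name `realTimeForFrameUs` and its parameter list are hypothetical and do not appear in NuPlayer.

```cpp
#include <cstdint>

// Hypothetical helper (not NuPlayer code): expected display time of a frame,
// assuming a playback rate of 1.0, following the formula in the text.
int64_t realTimeForFrameUs(int64_t ptsUs,
                           int64_t anchorTimeMediaUs,
                           int64_t anchorTimeRealUs,
                           int64_t nowUs) {
    // Media time that corresponds to the current system time.
    const int64_t nowMediaUs = anchorTimeMediaUs + (nowUs - anchorTimeRealUs);
    // Time left until the frame is due, shifted onto the system clock.
    return (ptsUs - nowMediaUs) + nowUs;
}
```

With an anchor of 1,000,000 us media time at 5,000,000 us system time, a frame with PTS 1,040,000 us evaluated at nowUs = 5,020,000 is due at 5,040,000 us. Because nowUs cancels out, the result depends only on the anchor pair.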

2. Adjust realTimeUs using the vsync signal

NuPlayer::Renderer::postDrainVideoQueue

realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;

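The schedule method is very complex and hard to follow in full, but it does contain code that computes the video frame interval at nanosecond precision, which matches what ExoPlayer does.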
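To make the idea of aligning a render time with vsync concrete, here is a deliberately naive sketch that rounds a render time to the nearest vsync boundary. It is not the VideoFrameScheduler algorithm, which does considerably more; `snapToNearestVsyncNs`, `vsyncBaseNs`, and `vsyncPeriodNs` are hypothetical names chosen for illustration.

```cpp
#include <cstdint>

// Naive stand-in, NOT VideoFrameScheduler::schedule: round a render time to
// the nearest vsync boundary. Assumes renderTimeNs >= vsyncBaseNs and
// vsyncPeriodNs > 0.
int64_t snapToNearestVsyncNs(int64_t renderTimeNs,
                             int64_t vsyncBaseNs,
                             int64_t vsyncPeriodNs) {
    const int64_t offsetNs = renderTimeNs - vsyncBaseNs;
    // Adding half a period before the integer division rounds to nearest.
    const int64_t periods = (offsetNs + vsyncPeriodNs / 2) / vsyncPeriodNs;
    return vsyncBaseNs + periods * vsyncPeriodNs;
}
```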

3. Start rendering two vsync periods ahead of time

NuPlayer::Renderer::postDrainVideoQueue

// Two vsync periods

int64_t twoVsyncsUs = 2 * (mVideoScheduler->getVsyncPeriod() / 1000);

// Recompute how long it is until this frame should be shown, using the adjusted realTimeUs

delayUs = realTimeUs - nowUs;

// post 2 display refreshes before rendering is due

// If delayUs exceeds two vsync periods, delay the message until two vsync periods before the display time; otherwise continue immediately

msg->post(delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0);
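The post-delay rule in this step can be isolated as a pure function. This is a sketch under the assumption that the vsync period is given in nanoseconds, as the division by 1000 in the excerpt suggests; `drainPostDelayUs` is a hypothetical name.

```cpp
#include <cstdint>

// Hypothetical helper mirroring the excerpt: how long to wait before posting
// the drain message so that it fires two vsync periods before the frame is due.
int64_t drainPostDelayUs(int64_t realTimeUs, int64_t nowUs, int64_t vsyncPeriodNs) {
    const int64_t twoVsyncsUs = 2 * (vsyncPeriodNs / 1000);
    const int64_t delayUs = realTimeUs - nowUs;
    // Frames due sooner than two vsync periods from now are posted immediately.
    return delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0;
}
```

For a 60 Hz display (period 16,666,667 ns), a frame due 100 ms from now is posted after 66,668 us, while a frame due 10 ms from now is posted with no delay.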

4. Dropping frames and sending frames for display

NuPlayer::Renderer::onDrainVideoQueue

// Read the PTS

CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

nowUs = ALooper::GetNowUs();

// Message passing and other work in between takes time, so realTimeUs is recomputed from the PTS here

realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);

// If nowUs > realTimeUs, the video frame is late

setVideoLateByUs(nowUs - realTimeUs);

// A frame that is more than 40 ms late exceeds the threshold

tooLate = (mVideoLateByUs > 40000);

// Store realTimeUs (converted to ns) as timestampNs and send it with the message

entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000ll);

Note: I suspect there is a bug here. realTimeUs is recomputed at this point, but it is not adjusted again with mVideoScheduler->schedule, so the earlier adjustment appears to be wasted.
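The late-frame decision in step 4 reduces to a single comparison. The sketch below restates it outside NuPlayer; `RenderDecision` and `decideRender` are hypothetical names, and only the 40,000 us threshold is taken from the excerpt.

```cpp
#include <cstdint>

// 40 ms threshold, the value used in the excerpt above.
constexpr int64_t kLateThresholdUs = 40000;

// Hypothetical result type: how late the frame is and whether to render it.
struct RenderDecision {
    int64_t lateByUs;  // negative when the frame is early
    bool render;       // false means the frame is dropped
};

RenderDecision decideRender(int64_t nowUs, int64_t realTimeUs) {
    const int64_t lateByUs = nowUs - realTimeUs;
    // Only frames strictly more than 40 ms late are dropped.
    return RenderDecision{lateByUs, !(lateByUs > kLateThresholdUs)};
}
```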

Summary

1. The core formula for computing the video display time is:

realTimeUs = PTS - nowMediaUs + nowUs

= PTS - (mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs)) + nowUs

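2. Comparing ExoPlayer and NuPlayer, the similarities are:

a. Both compare the system time with the frame's display time to decide whether to drop the frame, with a fixed drop threshold of 40 ms;

b. Both account for the cost of function calls and message passing when computing the display time;

c. Both use the vsync signal to calibrate the display time.

Beyond these similarities, there are also differences:

a. NuPlayer makes sure at the very start that audio and video are roughly in sync;

b. NuPlayer starts the releaseOutputBuffer path two vsync periods ahead of the display time, which matches the description in the API documentation.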

Audio

1. Correcting the initial PTS

NuPlayer::Renderer::onQueueBuffer

int64_t diff = firstVideoTimeUs - firstAudioTimeUs;

...

if (diff > 100000ll) {

// Audio data starts More than 0.1 secs before video.

// Drop some audio.

// Corrects the first audio and video PTS so that the two start in sync; note that it only handles audio starting early, not video starting early

(*mAudioQueue.begin()).mNotifyConsumed->post();

mAudioQueue.erase(mAudioQueue.begin());

return;

}
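The effect of this correction can be shown on a plain queue of audio timestamps. In the excerpt each call drops at most one audio buffer and returns; the sketch below loops instead, which is a simplification of my own. `dropLeadingAudio` and `kMaxAudioLeadUs` are hypothetical names; the 100,000 us limit comes from the excerpt.

```cpp
#include <cstddef>
#include <cstdint>
#include <deque>

// 0.1 s, the limit used in the excerpt above.
constexpr int64_t kMaxAudioLeadUs = 100000;

// Hypothetical helper: drop queued audio timestamps that start more than
// 0.1 s before the first video frame. Returns how many entries were dropped.
std::size_t dropLeadingAudio(std::deque<int64_t>& audioPtsUs, int64_t firstVideoPtsUs) {
    std::size_t dropped = 0;
    while (!audioPtsUs.empty() &&
           firstVideoPtsUs - audioPtsUs.front() > kMaxAudioLeadUs) {
        audioPtsUs.pop_front();
        ++dropped;
    }
    return dropped;
}
```

As in the original, nothing happens when video starts before audio: the difference is then negative and no entry is dropped.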

2. Update the anchor variables from the PTS

NuPlayer::Renderer::onDrainAudioQueue

// The PTS minus the duration not yet played out is the position already played, i.e. playedDuration; it is stored as nowMediaUs

int64_t nowMediaUs = mediaTimeUs - getPendingAudioPlayoutDurationUs(nowUs);

// Compute the duration not yet played out

// First compute the duration corresponding to writtenFrames

// writtenDuration = writtenFrames / sampleRate

int64_t writtenAudioDurationUs =

getDurationUsIfPlayedAtSampleRate(mNumFramesWritten);

// writtenDuration - playedDuration is the duration not yet played out (pendingPlayDuration)

return writtenAudioDurationUs - getPlayedOutAudioDurationUs(nowUs);

// Compute playedDuration using getTimestamp

status_t res = mAudioSink->getTimestamp(ts);

// The frame position currently being played

numFramesPlayed = ts.mPosition;

// The system time corresponding to that frame position

numFramesPlayedAt =

ts.mTime.tv_sec * 1000000LL + ts.mTime.tv_nsec / 1000;

int64_t durationUs = getDurationUsIfPlayedAtSampleRate(numFramesPlayed)

+ nowUs - numFramesPlayedAt;

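This is arguably the core of the avsync logic.

A few words on these variables: numFramesPlayed is the number of played frames reported by the lower layer, which is not necessarily the real-time count at the current system time, and numFramesPlayedAt is the system time at which numFramesPlayed was sampled. Therefore

durationUs = numFramesPlayed / sampleRate + nowUs - numFramesPlayedAt (with the first term converted to microseconds) is the audio duration played out as of the current system time.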

// Compute playedDuration using getPosition

// Same logic as in ExoPlayer: if getTimestamp is unavailable, fall back to getPosition

res = mAudioSink->getPosition(&numFramesPlayed);

numFramesPlayedAt = nowUs;

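// The current system time plus the latency is when the frames are actually played out; latency / 2 is used here, which can be seen as an average because the value returned by latency() may be inaccurate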

numFramesPlayedAt += 1000LL * mAudioSink->latency() / 2;

int64_t durationUs = getDurationUsIfPlayedAtSampleRate(numFramesPlayed)

+ nowUs - numFramesPlayedAt;

// Update the anchor using the current system time, the media timestamp currently playing, and the PTS

mMediaClock->updateAnchor(nowMediaUs, nowUs, mediaTimeUs);

void MediaClock::updateAnchor(

int64_t anchorTimeMediaUs,

int64_t anchorTimeRealUs,

int64_t maxTimeMediaUs) {

…

int64_t nowUs = ALooper::GetNowUs();

int64_t nowMediaUs =

anchorTimeMediaUs + (nowUs - anchorTimeRealUs) * (double)mPlaybackRate;

...

mAnchorTimeRealUs = nowUs;

mAnchorTimeMediaUs = nowMediaUs;

}

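Summary

The core formula of the whole logic is how the played-out audio duration is computed:

durationUs = numFramesPlayed/sampleRate +nowUs - numFramesPlayedAt

As in ExoPlayer, the inputs can be obtained through getTimestamp or getPosition.

There are a few differences. First, the interval between getTimestamp calls differs: ExoPlayer uses a 500 ms interval, while NuPlayer uses pendingPlayedOutDuration / 2 rather than a fixed value.

Second, when getPosition is used, the value added is latency / 2.

As for the anchor variables, their computation looks complicated, but the central idea is much the same.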
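The played-out duration formula from this summary can be written as a standalone function. This is a sketch rather than the NuPlayer source: `playedOutDurationUs` is a hypothetical name, the sample rate is passed in explicitly, and the clamp to zero mirrors the negative-duration guard in getPlayedOutAudioDurationUs shown later in the article.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: audio duration played out as of nowUs, given a frame
// position and the system time at which that position was sampled.
int64_t playedOutDurationUs(uint32_t numFramesPlayed, int32_t sampleRate,
                            int64_t nowUs, int64_t numFramesPlayedAtUs) {
    assert(sampleRate > 0);
    // Frames converted to microseconds at the nominal sample rate.
    const int64_t framesUs =
            static_cast<int64_t>(numFramesPlayed) * 1000000LL / sampleRate;
    // Extrapolate from the sample time to the current system time.
    const int64_t durationUs = framesUs + (nowUs - numFramesPlayedAtUs);
    return durationUs < 0 ? 0 : durationUs;
}
```

For example, 48,000 frames at 48 kHz sampled 5 ms ago correspond to 1,005,000 us of played audio.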

A close reading of the NuPlayer avsync code

Video first

–

The method below handles an output buffer

bool NuPlayer::Decoder::handleAnOutputBuffer(

size_t index,

size_t offset,

size_t size,

int64_t timeUs,//pts

int32_t flags) {

sp<ABuffer> buffer;

mCodec->getOutputBuffer(index, &buffer);

...

buffer->setRange(offset, size);

buffer->meta()->clear();

buffer->meta()->setInt64("timeUs", timeUs);

…

sp<AMessage> reply = new AMessage(kWhatRenderBuffer, this);

...

if (mRenderer != NULL) {

// send the buffer to renderer.

mRenderer->queueBuffer(mIsAudio, buffer, reply);

...

}

return true;

}

The PTS is stored in the ABuffer, the ABuffer is passed to the Renderer as an argument of queueBuffer, and the kWhatRenderBuffer message is passed to the Renderer as the reply

void NuPlayer::Renderer::onQueueBuffer(const sp<AMessage> &msg) {

...

if (mHasVideo) {

if (mVideoScheduler == NULL) {

mVideoScheduler = new VideoFrameScheduler();

// Fetches the vsync period and vsync time; NuPlayer's avsync also adjusts against vsync timing

mVideoScheduler->init();

}

}

sp<ABuffer> buffer;

CHECK(msg->findBuffer("buffer", &buffer));

...

QueueEntry entry;

entry.mBuffer = buffer;

...

if (audio) {

Mutex::Autolock autoLock(mLock);

mAudioQueue.push_back(entry);

// Where the audio queue is processed

postDrainAudioQueue_l();

} else {

mVideoQueue.push_back(entry);

// Where the video queue is processed

postDrainVideoQueue();

}

Mutex::Autolock autoLock(mLock);

...

sp<ABuffer> firstAudioBuffer = (*mAudioQueue.begin()).mBuffer;

sp<ABuffer> firstVideoBuffer = (*mVideoQueue.begin()).mBuffer;

...

int64_t firstAudioTimeUs;

int64_t firstVideoTimeUs;

CHECK(firstAudioBuffer->meta()

->findInt64("timeUs", &firstAudioTimeUs));

CHECK(firstVideoBuffer->meta()

->findInt64("timeUs", &firstVideoTimeUs));

int64_t diff = firstVideoTimeUs - firstAudioTimeUs;

...

if (diff > 100000ll) {

// Audio data starts More than 0.1 secs before video.

// Drop some audio.

// Corrects the first audio and video PTS so that the two start in sync; note that it only handles audio starting early, not video starting early

(*mAudioQueue.begin()).mNotifyConsumed->post();

mAudioQueue.erase(mAudioQueue.begin());

return;

}

...

}

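The method above fetches the vsync time and period, which indicates that the later sync steps adjust against the vsync signal, and it also makes sure audio and video start in sync. Now for the main part.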

void NuPlayer::Renderer::postDrainVideoQueue() {

...

QueueEntry &entry = *mVideoQueue.begin();

sp<AMessage> msg = new AMessage(kWhatDrainVideoQueue, this);

...

int64_t delayUs;

int64_t nowUs = ALooper::GetNowUs();

int64_t realTimeUs;

if (mFlags & FLAG_REAL_TIME) {

...

} else {

int64_t mediaTimeUs;

// Read the PTS of the video frame

CHECK(entry.mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

{

Mutex::Autolock autoLock(mLock);

// realTimeUs is the time at which the video frame should be displayed

if (mAnchorTimeMediaUs < 0) {

mMediaClock->updateAnchor(mediaTimeUs, nowUs, mediaTimeUs);

mAnchorTimeMediaUs = mediaTimeUs;

realTimeUs = nowUs;

} else {

// Compute realTimeUs from the PTS and the system time; see 1.1

realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);

}

}

...

// Heuristics to handle situation when media time changed without a

// discontinuity. If we have not drained an audio buffer that was

// received after this buffer, repost in 10 msec. Otherwise repost

// in 500 msec.

// delayUs is how long it is until this frame should be shown

delayUs = realTimeUs - nowUs;

if (delayUs > 500000) {

// The frame arrived more than 500 ms early, so repost and try again, similar to ExoPlayer waiting for another 10 ms loop when a frame is too early

int64_t postDelayUs = 500000;

if (mHasAudio && (mLastAudioBufferDrained - entry.mBufferOrdinal) <= 0) {

postDelayUs = 10000;

}

msg->setWhat(kWhatPostDrainVideoQueue);

msg->post(postDelayUs);

mVideoScheduler->restart();

ALOGI("possible video time jump of %dms, retrying in %dms",

(int)(delayUs / 1000), (int)(postDelayUs / 1000));

mDrainVideoQueuePending = true;

return;

}

}

// Adjust realTimeUs using the vsync signal; see 1.2 for details

realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;

// Two vsync periods

int64_t twoVsyncsUs = 2 * (mVideoScheduler->getVsyncPeriod() / 1000);

// Recompute how long it is until this frame should be shown, using the adjusted realTimeUs

delayUs = realTimeUs - nowUs;

ALOGW_IF(delayUs > 500000, "unusually high delayUs: %" PRId64, delayUs);

// post 2 display refreshes before rendering is due

// If delayUs exceeds two vsync periods, delay the message until two vsync periods before the display time; otherwise continue immediately

msg->post(delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0);

mDrainVideoQueuePending = true;

}

1.1

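The method below computes the time at which the video frame should be displayed from the frame's PTS and the system time, depending on the state of the audio clock. In practice only the PTS is used; if the computation fails, it returns the system time that was passed in, which means the frame is displayed immediately.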

int64_t NuPlayer::Renderer::getRealTimeUs(int64_t mediaTimeUs, int64_t nowUs) {

int64_t realUs;

// This actually calls into MediaClock; see the analysis below

if (mMediaClock->getRealTimeFor(mediaTimeUs, &realUs) != OK) {

// If failed to get current position, e.g. due to audio clock is

// not ready, then just play out video immediately without delay.

return nowUs;

}

return realUs;

}

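Combining this method with the MediaClock methods shown below gives the following formula:

realTimeUs = PTS - nowMediaUs + nowUs

= PTS - (mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs)) + nowUs

mAnchorTimeMediaUs is the anchor media timestamp; think of it as the first media timestamp recorded when playback started.

mAnchorTimeRealUs is the anchor real (system) timestamp.

nowUs - mAnchorTimeRealUs is how much system time has elapsed since the anchor was taken.

Adding mAnchorTimeMediaUs to that gives the media timestamp that corresponds to the current system time.

Subtracting that from the PTS gives how long it is until this frame should be shown.

Finally, adding the current system time gives the time at which the frame should be displayed.

The update and computation of the two anchor times is also fairly involved; see the analysis in the audio part.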

status_t MediaClock::getRealTimeFor(

int64_t targetMediaUs, int64_t *outRealUs) const {

...

int64_t nowUs = ALooper::GetNowUs();

int64_t nowMediaUs;

status_t status =

getMediaTime_l(nowUs, &nowMediaUs, true /* allowPastMaxTime */);

...

*outRealUs = (targetMediaUs - nowMediaUs) / (double)mPlaybackRate + nowUs;

return OK;

}

status_t MediaClock::getMediaTime_l(

int64_t realUs, int64_t *outMediaUs, bool allowPastMaxTime) const {

...

int64_t mediaUs = mAnchorTimeMediaUs

+ (realUs - mAnchorTimeRealUs) * (double)mPlaybackRate;

// What follows is a series of range checks; the key computation is the statement above

if (mediaUs > mMaxTimeMediaUs && !allowPastMaxTime) {

mediaUs = mMaxTimeMediaUs;

}

if (mediaUs < mStartingTimeMediaUs) {

mediaUs = mStartingTimeMediaUs;

}

if (mediaUs < 0) {

mediaUs = 0;

}

*outMediaUs = mediaUs;

return OK;

}
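The two MediaClock methods above differ from the simplified formula only in that they scale by the playback rate. A minimal sketch of that relationship follows, leaving out the range checks and locking; `ClockSketch` and its member names are hypothetical and are not the MediaClock API.

```cpp
#include <cstdint>

// Hypothetical stand-in for the anchor-based clock; not the MediaClock class.
struct ClockSketch {
    int64_t anchorTimeMediaUs;
    int64_t anchorTimeRealUs;
    double playbackRate;  // assumed to be > 0

    // Media time that corresponds to a given system time.
    int64_t mediaTimeAt(int64_t realUs) const {
        return anchorTimeMediaUs +
               static_cast<int64_t>((realUs - anchorTimeRealUs) * playbackRate);
    }

    // System time at which a given media time is due.
    int64_t realTimeFor(int64_t targetMediaUs, int64_t nowUs) const {
        const int64_t nowMediaUs = mediaTimeAt(nowUs);
        return static_cast<int64_t>((targetMediaUs - nowMediaUs) / playbackRate) + nowUs;
    }
};
```

At a rate of 2.0, 100 ms of system time advances the media clock by 200 ms, so a frame 200 ms ahead in media time is due 100 ms from now.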

1.2

The method below adjusts the time at which the video frame should be displayed using the vsync signal

nsecs_t VideoFrameScheduler::schedule(nsecs_t renderTime) {

…

// TODO: very complex, not yet fully understood

}

1.3

The message posted at the end of postDrainVideoQueue lands in the function below

void NuPlayer::Renderer::onDrainVideoQueue() {

...

QueueEntry *entry = &*mVideoQueue.begin();

...

int64_t nowUs = -1;

int64_t realTimeUs;

if (mFlags & FLAG_REAL_TIME) {

...

} else {

int64_t mediaTimeUs;

// Read the PTS

CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

nowUs = ALooper::GetNowUs();

// Message passing and other work in between takes time, so realTimeUs is recomputed from the PTS here

realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);

}

bool tooLate = false;

if (!mPaused) {

if (nowUs == -1) {

nowUs = ALooper::GetNowUs();

}

// If nowUs > realTimeUs, the video frame is late

setVideoLateByUs(nowUs - realTimeUs);

// A frame that is more than 40 ms late exceeds the threshold

tooLate = (mVideoLateByUs > 40000);

if (tooLate) {

ALOGV("video late by %lld us (%.2f secs)",

(long long)mVideoLateByUs, mVideoLateByUs / 1E6);

} else {

int64_t mediaUs = 0;

// realTimeUs is a system timestamp; it is converted to a media timestamp only for the log line, and mediaUs is not used for anything else

mMediaClock->getMediaTime(realTimeUs, &mediaUs);

ALOGV("rendering video at media time %.2f secs",

(mFlags & FLAG_REAL_TIME ? realTimeUs :

mediaUs) / 1E6);

}

} else {

...

}

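// Store realTimeUs (converted to ns) as timestampNs and send it with the message

// TODO: why is this not adjusted again with VideoFrameScheduler? Doesn't that waste the earlier adjustment?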

entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000ll);

entry->mNotifyConsumed->setInt32("render", !tooLate);

entry->mNotifyConsumed->post();

mVideoQueue.erase(mVideoQueue.begin());

entry = NULL;

mVideoSampleReceived = true;

if (!mPaused) {

if (!mVideoRenderingStarted) {

mVideoRenderingStarted = true;

notifyVideoRenderingStart();

}

Mutex::Autolock autoLock(mLock);

notifyIfMediaRenderingStarted_l();

}

}

Back in the Decoder, the kWhatRenderBuffer message is received and the method below is called

void NuPlayer::Decoder::onRenderBuffer(const sp<AMessage> &msg) {

status_t err;

int32_t render;

size_t bufferIx;

int32_t eos;

CHECK(msg->findSize("buffer-ix", &bufferIx));

if (!mIsAudio) {

int64_t timeUs;

sp<ABuffer> buffer = mOutputBuffers[bufferIx];

// This is the PTS

buffer->meta()->findInt64("timeUs", &timeUs);

...

}

if (msg->findInt32("render", &render) && render) {

int64_t timestampNs;

// Read realTimeUs (in ns)

CHECK(msg->findInt64("timestampNs", &timestampNs));

// This corresponds to MediaCodec's releaseOutputBuffer method

err = mCodec->renderOutputBufferAndRelease(bufferIx, timestampNs);

} else {

mNumOutputFramesDropped += !mIsAudio;

err = mCodec->releaseOutputBuffer(bufferIx);

}

...

}

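Next comes the audio part of NuPlayer's avsync logic. The player does not adjust the audio playback time; what matters is how the current playback position is obtained, i.e. getCurrentPosition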

–

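As with video, the entry point for audio data is postDrainAudioQueue_l

The difference is that the method takes a delayUs parameter, which means the audio loop runs at intervals rather than spinning continuously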

void NuPlayer::Renderer::postDrainAudioQueue_l(int64_t delayUs) {

...

mDrainAudioQueuePending = true;

sp<AMessage> msg = new AMessage(kWhatDrainAudioQueue, this);

msg->setInt32("drainGeneration", mAudioDrainGeneration);

msg->post(delayUs);

}

This delayUs is passed in from the code below

case kWhatDrainAudioQueue:

{

…

// onDrainAudioQueue returns true if audioQueue still has data at the end, meaning more data needs to be written; see the detailed analysis below

if (onDrainAudioQueue()) {

// Get the played frame position via getPosition

uint32_t numFramesPlayed;

CHECK_EQ(mAudioSink->getPosition(&numFramesPlayed),

(status_t)OK);

// Frames written (writtenFrames) minus frames played is the number of frames not yet played

uint32_t numFramesPendingPlayout =

mNumFramesWritten - numFramesPlayed;

// Convert numFramesPendingPlayout to a duration and use it as delayUs

// This is how long the audio sink will have data to

// play back.

int64_t delayUs =

mAudioSink->msecsPerFrame()

* numFramesPendingPlayout * 1000ll;

if (mPlaybackRate > 1.0f) {

delayUs /= mPlaybackRate;

}

// onDrainAudioQueue is therefore called at intervals of delayUs / 2

// This resembles ExoPlayer, which writes audio data every 10 ms and calls getTimestamp every 500 ms; in NuPlayer delayUs / 2 plays the same role

// Let's give it more data after about half that time

// has elapsed.

Mutex::Autolock autoLock(mLock);

postDrainAudioQueue_l(delayUs / 2);

}

break;

}
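The interval computed in this handler can be restated as a pure function. This is a sketch with the AudioSink queries replaced by plain parameters; `nextAudioDrainDelayUs` is a hypothetical name, and using `double` for `msecsPerFrame` is my own choice to keep the arithmetic easy to check.

```cpp
#include <cstdint>

// Hypothetical helper: delay before the next audio drain, i.e. half of the
// time the sink can keep playing from what has already been written.
int64_t nextAudioDrainDelayUs(uint32_t numFramesWritten, uint32_t numFramesPlayed,
                              double msecsPerFrame, float playbackRate) {
    const uint32_t numFramesPendingPlayout = numFramesWritten - numFramesPlayed;
    // Pending frames converted from milliseconds to microseconds.
    int64_t delayUs =
            static_cast<int64_t>(msecsPerFrame * numFramesPendingPlayout * 1000.0);
    if (playbackRate > 1.0f) {
        // Faster playback drains the sink sooner.
        delayUs = static_cast<int64_t>(delayUs / static_cast<double>(playbackRate));
    }
    return delayUs / 2;
}
```

At 8 kHz (0.125 ms per frame) with 4,000 frames pending, the sink holds 500 ms of audio, so the next drain is scheduled after 250 ms, or after 125 ms at a playback rate of 2.0.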

The audio counterpart of onDrainVideoQueue, onDrainAudioQueue, is as follows

bool NuPlayer::Renderer::onDrainAudioQueue() {

// TODO: This call to getPosition checks if AudioTrack has been created

// in AudioSink before draining audio. If AudioTrack doesn't exist, then

// CHECKs on getPosition will fail.

uint32_t numFramesPlayed;

if (mAudioSink->getPosition(&numFramesPlayed) != OK) {

...

ALOGW("onDrainAudioQueue(): audio sink is not ready");

return false;

}

uint32_t prevFramesWritten = mNumFramesWritten;

// Loop over the audio data in audioQueue

while (!mAudioQueue.empty()) {

QueueEntry *entry = &*mAudioQueue.begin();

mLastAudioBufferDrained = entry->mBufferOrdinal;

if (entry->mBuffer == NULL) {

// EOS

...

return false;

}

// ignore 0-sized buffer which could be EOS marker with no data

// First drain of this audio buffer

if (entry->mOffset == 0 && entry->mBuffer->size() > 0) {

int64_t mediaTimeUs;

// Read the PTS

CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

ALOGV("onDrainAudioQueue: rendering audio at media time %.2f secs",

mediaTimeUs / 1E6);

// Initialize the anchor variables from the PTS; see 2.1

onNewAudioMediaTime(mediaTimeUs);

}

// copy is the amount of audio data remaining in the buffer

size_t copy = entry->mBuffer->size() - entry->mOffset;

// written is the amount actually written; copy is the amount requested

ssize_t written = mAudioSink->write(entry->mBuffer->data() + entry->mOffset,

copy, false /* blocking */);

if (written < 0) {

// An error in AudioSink write. Perhaps the AudioSink was not properly opened.

...

break;

}

// Update the offset

entry->mOffset += written;

if (entry->mOffset == entry->mBuffer->size()) {

// The buffer has been fully written; notify the decoder's onRenderBuffer

entry->mNotifyConsumed->post();

mAudioQueue.erase(mAudioQueue.begin());

entry = NULL;

}

// Update mNumFramesWritten

size_t copiedFrames = written / mAudioSink->frameSize();

mNumFramesWritten += copiedFrames;

...

if (written != (ssize_t)copy) {

// A short count was received from AudioSink::write()

//

// AudioSink write is called in non-blocking mode.

// It may return with a short count when:

//

// 1) Size to be copied is not a multiple of the frame size. We consider this fatal.

// 2) The data to be copied exceeds the available buffer in AudioSink.

// 3) An error occurs and data has been partially copied to the buffer in AudioSink.

// 4) AudioSink is an AudioCache for data retrieval, and the AudioCache is exceeded.

// (Case 1)

// Must be a multiple of the frame size. If it is not a multiple of a frame size, it

// needs to fail, as we should not carry over fractional frames between calls.

CHECK_EQ(copy % mAudioSink->frameSize(), 0);

// (Case 2, 3, 4)

// Return early to the caller.

// Beware of calling immediately again as this may busy-loop if you are not careful.

ALOGV("AudioSink write short frame count %zd < %zu", written, copy);

break;

}

}

int64_t maxTimeMedia;

{

Mutex::Autolock autoLock(mLock);

// The anchor media timestamp plus the duration of the frames written since the anchor is the maximum media timestamp

maxTimeMedia =

mAnchorTimeMediaUs +

(int64_t)(max((long long)mNumFramesWritten - mAnchorNumFramesWritten, 0LL)

* 1000LL * mAudioSink->msecsPerFrame());

}

// Update maxTimeMedia in MediaClock

mMediaClock->updateMaxTimeMedia(maxTimeMedia);

// calculate whether we need to reschedule another write.

// There is still data, so loop again for the next write

bool reschedule = !mAudioQueue.empty()

&& (!mPaused

|| prevFramesWritten != mNumFramesWritten); // permit pause to fill buffers

//ALOGD("reschedule:%d empty:%d mPaused:%d prevFramesWritten:%u mNumFramesWritten:%u",

// reschedule, mAudioQueue.empty(), mPaused, prevFramesWritten, mNumFramesWritten);

return reschedule;

}
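The maxTimeMedia computation near the end of onDrainAudioQueue can be checked in isolation. The sketch below takes the sink's milliseconds-per-frame value as a parameter; `maxTimeMediaUs` and its parameter names are hypothetical.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical helper: the furthest media time covered by audio that has been
// written since the anchor was taken.
int64_t maxTimeMediaUs(int64_t anchorTimeMediaUs, uint32_t numFramesWritten,
                       int64_t anchorNumFramesWritten, double msecsPerFrame) {
    // Frames written since the anchor, never negative.
    const int64_t framesSinceAnchor = std::max<int64_t>(
            static_cast<int64_t>(numFramesWritten) - anchorNumFramesWritten, 0);
    // Convert frames to microseconds and offset from the anchor media time.
    return anchorTimeMediaUs +
           static_cast<int64_t>(framesSinceAnchor * 1000.0 * msecsPerFrame);
}
```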

2.1

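The method below initializes the anchor variables; its argument is the PTS

To recap, mAnchorTimeMediaUs is the anchor media timestamp, which can be thought of as the first media timestamp recorded when playback started, and mAnchorTimeRealUs is the anchor real (system) timestamp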

void NuPlayer::Renderer::onNewAudioMediaTime(int64_t mediaTimeUs) {

Mutex::Autolock autoLock(mLock);

…

// Set the initial anchor media timestamp from the initial audio PTS; see 2.1.1

setAudioFirstAnchorTimeIfNeeded_l(mediaTimeUs);

int64_t nowUs = ALooper::GetNowUs();

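// The PTS minus the duration not yet played out is the position already played, i.e. playedDuration; it is stored as nowMediaUs. For how the not-yet-played duration is computed, see 2.1.2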

int64_t nowMediaUs = mediaTimeUs - getPendingAudioPlayoutDurationUs(nowUs);

// Update the anchor using the current system time, the media timestamp currently playing, and the PTS; see 2.1.3

mMediaClock->updateAnchor(nowMediaUs, nowUs, mediaTimeUs);

// Record the number of frames written so far as the anchor frame count (0 when nothing has been written yet)

mAnchorNumFramesWritten = mNumFramesWritten;

// Set the anchor media timestamp to this audio PTS

mAnchorTimeMediaUs = mediaTimeUs;

}

2.1.1

The two methods below set the initial anchor media timestamp from the initial audio PTS

void NuPlayer::Renderer::setAudioFirstAnchorTimeIfNeeded_l(int64_t mediaUs) {

if (mAudioFirstAnchorTimeMediaUs == -1) {

mAudioFirstAnchorTimeMediaUs = mediaUs;

mMediaClock->setStartingTimeMedia(mediaUs);

}

}

void MediaClock::setStartingTimeMedia(int64_t startingTimeMediaUs) {

Mutex::Autolock autoLock(mLock);

mStartingTimeMediaUs = startingTimeMediaUs;

}

2.1.2

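First, note that audio has two core variables: writtenFrames, the number of frames written to AudioTrack, and playedFrames, the number of frames already played out

The method below computes the audio duration that has not yet been played out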

// Calculate duration of pending samples if played at normal rate (i.e., 1.0).

int64_t NuPlayer::Renderer::getPendingAudioPlayoutDurationUs(int64_t nowUs) {

// Compute the duration corresponding to writtenFrames

// writtenDuration = writtenFrames / sampleRate

int64_t writtenAudioDurationUs =

getDurationUsIfPlayedAtSampleRate(mNumFramesWritten);

// writtenDuration - playedDuration is the duration not yet played out (pendingPlayDuration); for how playedDuration is computed, see 2.1.2.1

return writtenAudioDurationUs - getPlayedOutAudioDurationUs(nowUs);

}

int64_t NuPlayer::Renderer::getDurationUsIfPlayedAtSampleRate(uint32_t numFrames) {

int32_t sampleRate = offloadingAudio() ?

mCurrentOffloadInfo.sample_rate : mCurrentPcmInfo.mSampleRate;

return (int64_t)((int32_t)numFrames * 1000000LL / sampleRate);

}
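The two helpers above, together with the nowMediaUs line in 2.1, amount to the following arithmetic. This is a sketch with the played-out duration passed in as a number instead of being queried from the sink; `framesToUs`, `pendingPlayoutUs`, and `nowMediaUsFor` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Frames converted to microseconds at the nominal sample rate.
int64_t framesToUs(uint32_t numFrames, int32_t sampleRate) {
    assert(sampleRate > 0);
    return static_cast<int64_t>(numFrames) * 1000000LL / sampleRate;
}

// Duration written to the sink but not yet played out.
int64_t pendingPlayoutUs(uint32_t numFramesWritten, int32_t sampleRate,
                         int64_t playedOutUs) {
    return framesToUs(numFramesWritten, sampleRate) - playedOutUs;
}

// Media time being heard right now: the PTS of the buffer about to be written
// minus everything still queued in front of it.
int64_t nowMediaUsFor(int64_t ptsUs, int64_t pendingUs) {
    return ptsUs - pendingUs;
}
```

With 96,000 frames written at 48 kHz and 1.5 s already played, 0.5 s is still pending, so a buffer with PTS 3,000,000 us means the listener is currently at 2,500,000 us.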

2.1.2.1

The method below computes playedDuration; its argument is the system time

// Calculate duration of played samples if played at normal rate (i.e., 1.0).

int64_t NuPlayer::Renderer::getPlayedOutAudioDurationUs(int64_t nowUs) {

uint32_t numFramesPlayed;

int64_t numFramesPlayedAt;

AudioTimestamp ts;

static const int64_t kStaleTimestamp100ms = 100000;

// Corresponds to AudioTrack.getTimestamp in the Java API

status_t res = mAudioSink->getTimestamp(ts);

if (res == OK) { // case 1: mixing audio tracks and offloaded tracks.

// The frame position currently being played

numFramesPlayed = ts.mPosition;

// The system time corresponding to that frame position

numFramesPlayedAt =

ts.mTime.tv_sec * 1000000LL + ts.mTime.tv_nsec / 1000;

// This time should not lag the system time by too much; the threshold is 100 ms

const int64_t timestampAge = nowUs - numFramesPlayedAt;

if (timestampAge > kStaleTimestamp100ms) {

ALOGV("getTimestamp: returned stale timestamp nowUs(%lld) numFramesPlayedAt(%lld)",

(long long)nowUs, (long long)numFramesPlayedAt);

numFramesPlayedAt = nowUs - kStaleTimestamp100ms;

}

//ALOGD("getTimestamp: OK %d %lld", numFramesPlayed, (long long)numFramesPlayedAt);

} else if (res == WOULD_BLOCK) { // case 2: transitory state on start of a new track

numFramesPlayed = 0;

numFramesPlayedAt = nowUs;

//ALOGD("getTimestamp: WOULD_BLOCK %d %lld",

// numFramesPlayed, (long long)numFramesPlayedAt);

} else { // case 3: transitory at new track or audio fast tracks.

// Same logic as in ExoPlayer: if getTimestamp is unavailable, fall back to getPosition

res = mAudioSink->getPosition(&numFramesPlayed);

CHECK_EQ(res, (status_t)OK);

numFramesPlayedAt = nowUs;

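// The current system time plus the latency is when the frames are actually played out; latency / 2 is used here, which can be seen as an average because the value returned by latency() may be inaccurate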

numFramesPlayedAt += 1000LL * mAudioSink->latency() / 2; /* XXX */

//ALOGD("getPosition: %u %lld", numFramesPlayed, (long long)numFramesPlayedAt);

}

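// A few words on these variables: numFramesPlayed is the number of played frames reported by the lower layer, which is not necessarily the real-time count at the current system time, and numFramesPlayedAt is the system time at which numFramesPlayed was sampled. Therefore

// durationUs = numFramesPlayed / sampleRate + nowUs - numFramesPlayedAt is the audio duration played out as of the current system time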

int64_t durationUs = getDurationUsIfPlayedAtSampleRate(numFramesPlayed)

+ nowUs - numFramesPlayedAt;

if (durationUs < 0) {

// Occurs when numFramesPlayed position is very small and the following:

// (1) In case 1, the time nowUs is computed before getTimestamp() is called and

// numFramesPlayedAt is greater than nowUs by time more than numFramesPlayed.

// (2) In case 3, using getPosition and adding mAudioSink->latency() to

// numFramesPlayedAt, by a time amount greater than numFramesPlayed.

//

// Both of these are transitory conditions.

ALOGV("getPlayedOutAudioDurationUs: negative duration %lld set to zero", (long long)durationUs);

durationUs = 0;

}

ALOGV("getPlayedOutAudioDurationUs(%lld) nowUs(%lld) frames(%u) framesAt(%lld)",

(long long)durationUs, (long long)nowUs, numFramesPlayed, (long long)numFramesPlayedAt);

return durationUs;

}

2.1.3

The method below updates the anchor; the logic is the same as in 1.1, and the third argument is the PTS

void MediaClock::updateAnchor(

int64_t anchorTimeMediaUs,

int64_t anchorTimeRealUs,

int64_t maxTimeMediaUs) {

…

int64_t nowUs = ALooper::GetNowUs();

int64_t nowMediaUs =

anchorTimeMediaUs + (nowUs - anchorTimeRealUs) * (double)mPlaybackRate;

...

mAnchorTimeRealUs = nowUs;

mAnchorTimeMediaUs = nowMediaUs;

mMaxTimeMediaUs = maxTimeMediaUs;

}
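What updateAnchor does with its first two arguments is re-base the reported anchor onto the current system time. A minimal sketch of that step, leaving out locking and the maxTimeMediaUs bookkeeping; `Anchor` and `rebaseAnchor` are hypothetical names.

```cpp
#include <cstdint>

// Hypothetical pair of anchor values: a media time and the system time at
// which that media time was (or is) being played.
struct Anchor {
    int64_t mediaUs;
    int64_t realUs;
};

// Move a reported anchor forward to nowUs, advancing the media time by the
// elapsed system time scaled by the playback rate.
Anchor rebaseAnchor(Anchor reported, int64_t nowUs, double playbackRate) {
    const int64_t nowMediaUs = reported.mediaUs +
            static_cast<int64_t>((nowUs - reported.realUs) * playbackRate);
    return Anchor{nowMediaUs, nowUs};
}
```

Both anchor pairs describe the same line on the media-versus-system-time plot, so re-basing does not change the result of the display-time formula.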

2.2

The method below calls releaseOutputBuffer for the audio buffer (the audio data itself has already been written to the AudioSink)

void NuPlayer::Decoder::onRenderBuffer(const sp<AMessage> &msg) {

status_t err;

int32_t render;

size_t bufferIx;

int32_t eos;

CHECK(msg->findSize("buffer-ix", &bufferIx));

...

if (msg->findInt32("render", &render) && render) {

// Audio does not take this branch

} else {

mNumOutputFramesDropped += !mIsAudio;

// Corresponds to MediaCodec's releaseOutputBuffer method

err = mCodec->releaseOutputBuffer(bufferIx);

}

...

}
