Using the iOSReplayKit Plugin

What iOSReplayKit Is For

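ReplayKit is Apple's framework for live streaming and screen recording on iOS, tvOS, and macOS ("Record or stream video from the screen, and audio from the app and microphone."). It is simple to use, largely because Apple locks down most of the functionality. Call a StartXxxxWithHandler method to begin recording, passing a callback that receives the result of the call and the sample buffers; call the matching StopXxxxWithHandler method to end recording, passing a callback that receives the result.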

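The iOSReplayKit (ReplayKit for iOS) plugin is a bridge that lets UE use the ReplayKit framework on iOS. It contains a Blueprint class, UIOSReplayKitControl, which exposes the iOS ReplayKit API functions to Blueprints, and an implementation class, ReplayKitRecorder, written in Objective-C.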

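Getting Started

I needed video recording in an iOS app built with Unreal. Some research showed that Apple's ReplayKit supports recording both video and the microphone. Searching further for an Unreal plugin, I found a ReplayKit for iOS project on GitHub: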

PushkinStudio/PsReplayKit

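However, that project was last updated in 2018.

Later, while reading the Unreal Engine source, I found that the engine already ships with a ReplayKit plugin.

Enable the plugin in the project and restart the editor.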

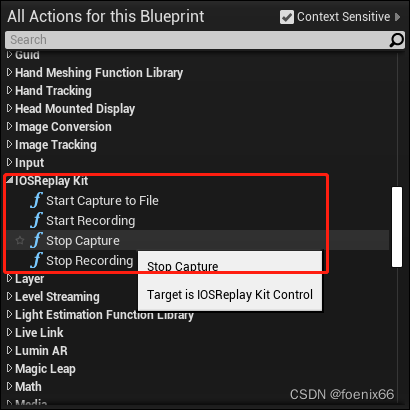

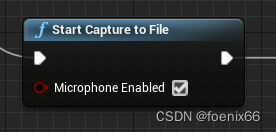

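The plugin is simple to use; it exposes four functions in total.

Unreal seems to work on the principle that since the source code is available there is no need for documentation: the help pages tell you nothing more than the function names already do.

As for the difference between StartRecording and StartCaptureToFile, the source code shows that this one really is Apple's doing. ReplayKit provides two methods, startRecordingWithHandler and startCaptureWithHandler. startCaptureWithHandler takes a callback that receives each sample buffer, so you can process the recording yourself. startRecordingWithHandler has no per-sample callback; it only offers a preview (the system preview sheet) when recording stops, and the developer cannot intervene at any point in between.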

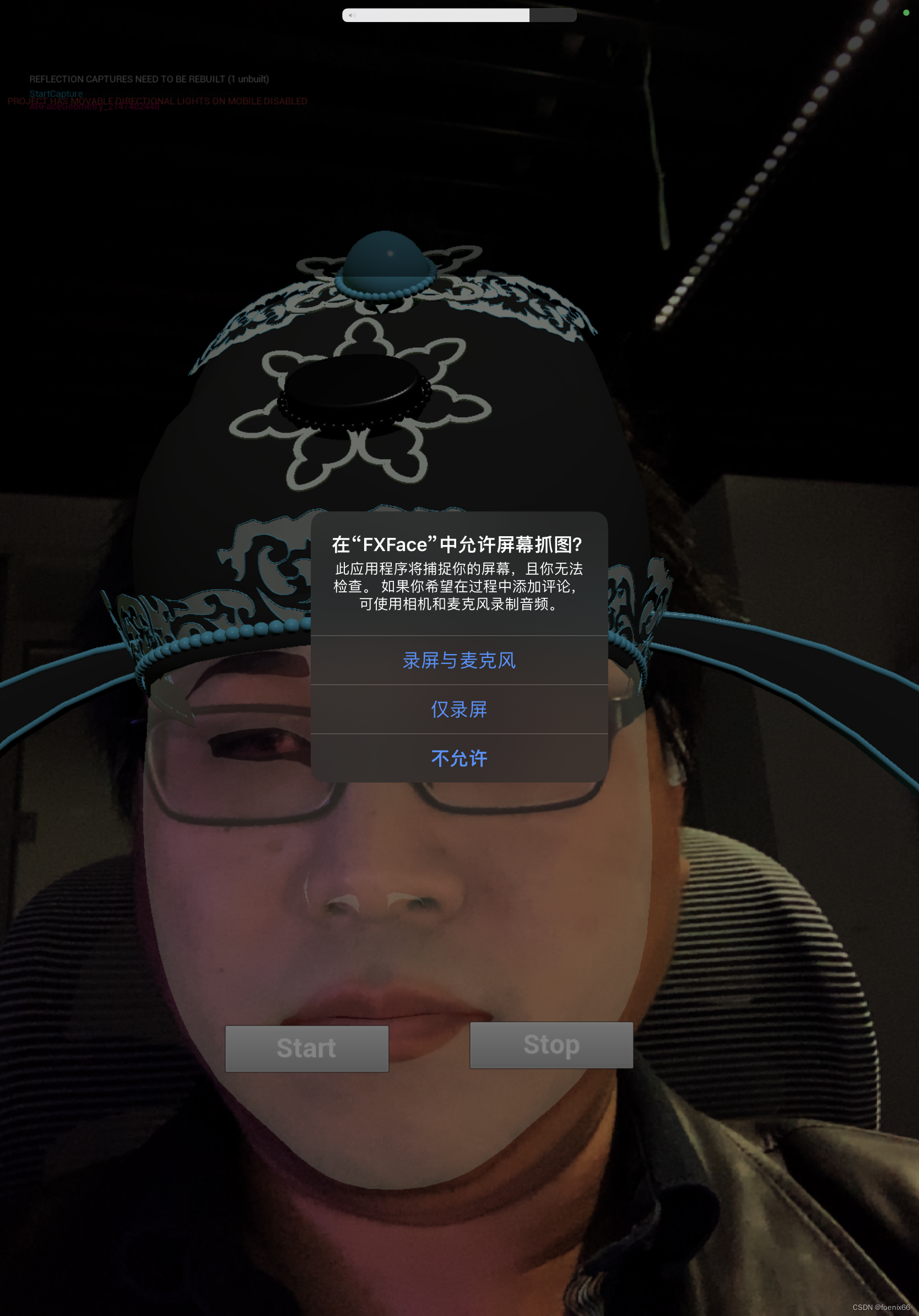

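I created two buttons, bound their click events to StartCaptureToFile and StopCapture, packaged the app, and ran it on a device. Tapping Start brought up the permission confirmation dialog as expected.

Tapping Stop ended the recording and showed the prompt asking for permission to save to the photo library. Everything looked fine.

After testing a few more times, a problem showed up: apart from the first successful run, almost every later attempt failed, and no file was saved to the photo library.

I then switched to the StartRecording and StopRecording functions and saw the same behavior: occasional success, mostly failure.

Reading the source, I found that StartCaptureToFile calls startCapture, whose implementation writes the sample buffers from the callback into an mp4 file itself. It includes this file-handling code: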

// create the asset writer

[_assetWriter release] ;

// todo: do we care about the file name? support cleaning up old captures?

auto fileName = FString::Printf(TEXT("%s.mp4"), *FGuid::NewGuid().ToString());

_captureFilePath = [captureDir stringByAppendingFormat : @"/%@", fileName.GetNSString()];

[_captureFilePath retain] ;

_assetWriter = [[AVAssetWriter alloc]initWithURL: [NSURL fileURLWithPath : _captureFilePath] fileType : AVFileTypeMPEG4 error : nil];

// _assetWriter = [AVAssetWriter assetWriterWithURL : [NSURL fileURLWithPath : _captureFilePath] fileType : AVFileTypeMPEG4 error : nil];

[_assetWriter retain] ;

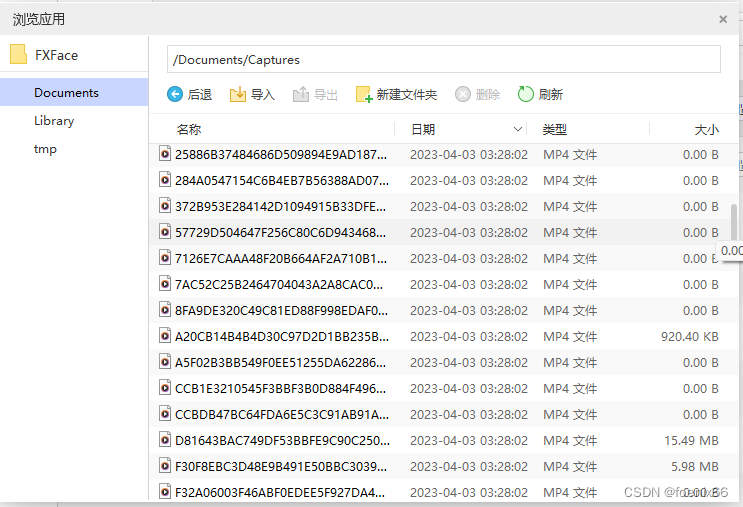

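Connecting the iPad over USB and browsing its files showed that most of the recorded mp4 files in the Captures directory were 0 bytes, so something was going wrong at the writing stage.

I looked at the writing code and added debug output using Unreal's on-screen messages: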

if (_assetWriter.status == AVAssetWriterStatusUnknown)

{

[_assetWriter startWriting] ;

[_assetWriter startSessionAtSourceTime : CMSampleBufferGetPresentationTimeStamp(sampleBuffer)] ;

if (GEngine) {

CMTime time = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Blue, FString::Printf(TEXT("_assetWriter startWriting: %d"), (int)CMTimeGetSeconds(time)));

}

}

if (_assetWriter.status == AVAssetWriterStatusFailed)

{

NSLog(@"%d: %@", (int)_assetWriter.error.code, _assetWriter.error.localizedDescription);

if (GEngine) {

const char* dest = [_assetWriter.error.localizedDescription UTF8String];

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Green, FString::Printf(TEXT("_assetWriter failed: %d %s"), (int)_assetWriter.error.code, UTF8_TO_TCHAR(dest)));

}

}

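The on-screen debug output showed that the callback itself was fine. The problem was that the AVAssetWriter status was AVAssetWriterStatusFailed, with error code -11823 (AVErrorFileAlreadyExists in AVFoundation) and the description "Cannot Save".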

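After a long cycle of compiling, packaging, installing, and debugging, I noticed that the blue message "_assetWriter startWriting: 16600", printed by this line:

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Blue, FString::Printf(TEXT("_assetWriter startWriting: %d"), (int)CMTimeGetSeconds(time)));

appeared twice, interleaved with the green -11823 "Cannot Save" error messages. The blue message is printed right after [_assetWriter startWriting] is called, so startWriting was evidently being called twice, which put the asset writer into the failed state.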

if (_assetWriter.status == AVAssetWriterStatusUnknown)

{

[_assetWriter startWriting] ;

// ...

}

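The code checks that the writer's status is still AVAssetWriterStatusUnknown before calling startWriting, and the status should change once startWriting has been called. Sequential calls therefore cannot trigger it twice; only concurrent calls can. Two startWriting calls in a row mean that a second callback ran before the status had changed. My guess is that video and audio sample buffers are delivered on different threads and hit the callback at almost the same moment. Because the callback uses no locking of any kind, startWriting was called twice and the writer ended up in AVAssetWriterStatusFailed. The occasional successful runs were presumably cases where the threads happened not to call back at the same time.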
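The check-then-act pattern described above becomes safe once the status check and the start call happen under one lock. The following is a minimal C++ sketch of that idea, not the plugin's code: FakeWriter, GuardedCapture, and RunConcurrentCallbacks are hypothetical names, and FakeWriter only imitates the behavior observed in this article (a second start call puts the writer into a failed state).

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for AVAssetWriter: starting twice is an error.
enum class WriterStatus { Unknown, Writing, Failed };

class FakeWriter {
public:
    void Start() {
        // First call moves Unknown -> Writing; any later call marks failure.
        status_ = (status_ == WriterStatus::Unknown) ? WriterStatus::Writing
                                                     : WriterStatus::Failed;
        ++startCalls_;
    }
    WriterStatus Status() const { return status_; }
    int StartCalls() const { return startCalls_; }

private:
    WriterStatus status_ = WriterStatus::Unknown;
    int startCalls_ = 0;
};

class GuardedCapture {
public:
    // Called once per sample buffer, possibly from several threads.
    void OnSample() {
        // The check and the start are one critical section, so no second
        // thread can observe Unknown while the first is still starting.
        std::lock_guard<std::mutex> lock(mutex_);
        if (writer_.Status() == WriterStatus::Unknown) {
            writer_.Start();
        }
    }
    int StartCalls() const { return writer_.StartCalls(); }

private:
    std::mutex mutex_;
    FakeWriter writer_;
};

// Simulates video and audio callbacks arriving concurrently and returns
// how many times the writer was started.
int RunConcurrentCallbacks(int threadCount, int samplesPerThread) {
    GuardedCapture capture;
    std::vector<std::thread> threads;
    for (int i = 0; i < threadCount; ++i) {
        threads.emplace_back([&capture, samplesPerThread] {
            for (int s = 0; s < samplesPerThread; ++s) {
                capture.OnSample();
            }
        });
    }
    for (auto& t : threads) {
        t.join();
    }
    return capture.StartCalls();
}
```

With the lock in place, RunConcurrentCallbacks(8, 1000) starts the writer exactly once regardless of how the threads interleave. In the Objective-C code the same effect could be obtained with @synchronized or a serial dispatch queue.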

Modifying the Code

Add an incrementing counter, _frames, and call startWriting only when it is 0:

if (0 == _frames++) {

[_assetWriter startWriting] ;

[_assetWriter startSessionAtSourceTime : CMSampleBufferGetPresentationTimeStamp(sampleBuffer)] ;

if (GEngine) {

CMTime time = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Blue, FString::Printf(TEXT("_assetWriter startWriting: %d"), (int)CMTimeGetSeconds(time)));

}

}

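No lock is used here, only the ++ increment. Note that ++ on a plain int is a read-modify-write sequence, not an atomic operation, so this does not strictly eliminate the race. It does shrink the window considerably, because the increment completes far sooner than the writer's status changes, so in practice a double call becomes very unlikely. An atomic counter (for example std::atomic<int>) or a lock would make the guarantee strict.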
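A strict "first caller only" guard can be sketched in C++ with std::atomic. This is an illustration under assumptions rather than the plugin's code: OnceGate and CountWinners are hypothetical names, and the gate would take the place of the plain _frames counter.

```cpp
#include <atomic>
#include <thread>
#include <vector>

class OnceGate {
public:
    // exchange() atomically stores true and returns the previous value,
    // so exactly one caller sees false and wins the race.
    bool TryEnter() {
        return !entered_.exchange(true, std::memory_order_acq_rel);
    }

    // Re-arms the gate, e.g. before the next capture session starts.
    void Reset() { entered_.store(false, std::memory_order_release); }

private:
    std::atomic<bool> entered_{false};
};

// Lets threadCount threads race for the gate and returns how many won.
int CountWinners(int threadCount) {
    OnceGate gate;
    std::atomic<int> winners{0};
    std::vector<std::thread> threads;
    for (int i = 0; i < threadCount; ++i) {
        threads.emplace_back([&gate, &winners] {
            if (gate.TryEnter()) {
                winners.fetch_add(1);
            }
        });
    }
    for (auto& t : threads) {
        t.join();
    }
    return winners.load();
}
```

CountWinners(16) always yields one winner. The gate only guarantees a single start call; a thread that loses the race may still run ahead and try to append a sample before the winner has finished starting the writer, so a lock or a serial queue around the whole callback is the more complete fix.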

Adding Microphone Recording

AVAssetWriterInput* input = nullptr;

// ...

else if (bufferType == RPSampleBufferTypeAudioMic)

{

// todo?

}

// ...

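bufferType == RPSampleBufferTypeAudioMic means the callback is delivering microphone data, but the code contains nothing except a "todo?" comment. In other words, the stock plugin cannot record microphone audio. (As of version 5.1, iOSReplayKit still does not implement microphone capture.)

Following the pattern used for RPSampleBufferTypeAudioApp (in-app audio), create a writer input for the microphone: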

// create the audio input

[_audioInput release] ;

AudioChannelLayout acl;

bzero(&acl, sizeof(acl));

acl.mChannelLayoutTag = kAudioChannelLayoutTag_Stereo;

auto audioSettings = @{

AVFormatIDKey: @(kAudioFormatMPEG4AAC),

AVSampleRateKey: @(44100),

AVChannelLayoutKey: [NSData dataWithBytes : &acl length : sizeof(acl)] ,

};

_audioInput = [AVAssetWriterInput assetWriterInputWithMediaType : AVMediaTypeAudio outputSettings : audioSettings];

_audioInput.expectsMediaDataInRealTime = YES;

[_audioInput retain] ;

// create the microphone input

[_microInput release] ;

AudioChannelLayout mic;

bzero(&mic, sizeof(mic));

mic.mChannelLayoutTag = kAudioChannelLayoutTag_Mono;

auto micSettings = @{

AVFormatIDKey: @(kAudioFormatMPEG4AAC),

AVSampleRateKey: @(44100),

AVChannelLayoutKey: [NSData dataWithBytes : &mic length : sizeof(mic)] ,

};

_microInput = [AVAssetWriterInput assetWriterInputWithMediaType : AVMediaTypeAudio outputSettings : micSettings];

_microInput.expectsMediaDataInRealTime = YES;

[_microInput retain] ;

// add the input to the writer

// ...

if ([_assetWriter canAddInput : _audioInput])

{

[_assetWriter addInput : _audioInput] ;

}

if ([_assetWriter canAddInput : _microInput])

{

[_assetWriter addInput : _microInput] ;

}

Then handle the microphone input where the "todo?" comment is:

AVAssetWriterInput* input = nullptr;

if (bufferType == RPSampleBufferTypeVideo)

{

input = _videoInput;

}

else if (bufferType == RPSampleBufferTypeAudioApp)

{

input = _audioInput;

}

else if (bufferType == RPSampleBufferTypeAudioMic)

{

// route microphone audio to its own writer input

input = _microInput;

}

if (input && input.isReadyForMoreMediaData)

{

[input appendSampleBuffer : sampleBuffer] ;

}

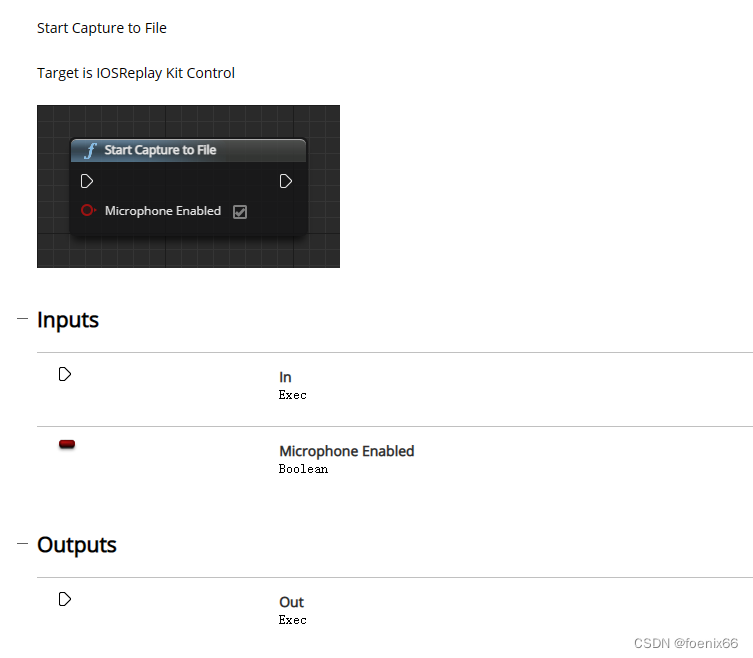

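With the code above in place, enabling the microphone when starting the capture records microphone audio as well. (I don't know why the official plugin leaves out such a simple feature.)

Complete Code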

// Copyright Epic Games, Inc. All Rights Reserved.

#include "ReplayKitRecorder.h"

#if PLATFORM_IOS

#include "IOSAppDelegate.h"

#include "IOS/IOSView.h"

#include "Misc/Paths.h"

#include "Engine/Engine.h"

#include "Engine/GameViewportClient.h"

@implementation ReplayKitRecorder

RPScreenRecorder* _Nullable _screenRecorder;

RPBroadcastActivityViewController* _Nullable _broadcastActivityController;

RPBroadcastController* _Nullable _broadcastController;

// stuff used when capturing to file

AVAssetWriter* _Nullable _assetWriter;

AVAssetWriterInput* _Nullable _videoInput;

AVAssetWriterInput* _Nullable _audioInput;

AVAssetWriterInput* _Nullable _microInput;

NSString* _Nullable _captureFilePath;

int _frames;

- (void) initializeWithMicrophoneEnabled:(BOOL)bMicrophoneEnabled withCameraEnabled:(BOOL)bCameraEnabled {

_screenRecorder = [RPScreenRecorder sharedRecorder];

[_screenRecorder setDelegate:self];

#if !PLATFORM_TVOS

[_screenRecorder setMicrophoneEnabled:bMicrophoneEnabled];

[_screenRecorder setCameraEnabled:bCameraEnabled];

#endif

}

- (void)startRecording {

// NOTE(omar): stop any live broadcasts before starting a local recording

if( _broadcastController != nil ) {

[self stopBroadcast];

}

if( [_screenRecorder isAvailable] ) {

[_screenRecorder startRecordingWithHandler:^(NSError * _Nullable error) {

if( error ) {

NSLog( @"error starting screen recording");

}

}];

}

}

- (void)stopRecording {

if( [_screenRecorder isAvailable] && [_screenRecorder isRecording] ) {

[_screenRecorder stopRecordingWithHandler:^(RPPreviewViewController * _Nullable previewViewController, NSError * _Nullable error) {

[previewViewController setPreviewControllerDelegate:self];

// automatically show the video preview when recording is stopped

previewViewController.popoverPresentationController.sourceView = (UIView* _Nullable)[IOSAppDelegate GetDelegate].IOSView;

[[IOSAppDelegate GetDelegate].IOSController presentViewController:previewViewController animated:YES completion:nil];

}];

}

}

- (void)createCaptureContext

{

auto fileManager = [NSFileManager defaultManager];

auto docDir = [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) objectAtIndex:0];

auto captureDir = [docDir stringByAppendingFormat : @"/Captures"];

[fileManager createDirectoryAtPath : captureDir withIntermediateDirectories : YES attributes : nil error : nil] ;

[_captureFilePath release] ;

// create the asset writer

[_assetWriter release] ;

// todo: do we care about the file name? support cleaning up old captures?

auto fileName = FString::Printf(TEXT("%s.mp4"), *FGuid::NewGuid().ToString());

_captureFilePath = [captureDir stringByAppendingFormat : @"/%@", fileName.GetNSString()];

[_captureFilePath retain] ;

_assetWriter = [[AVAssetWriter alloc]initWithURL: [NSURL fileURLWithPath : _captureFilePath] fileType : AVFileTypeMPEG4 error : nil];

// _assetWriter = [AVAssetWriter assetWriterWithURL : [NSURL fileURLWithPath : _captureFilePath] fileType : AVFileTypeMPEG4 error : nil];

[_assetWriter retain] ;

// create the video input

[_videoInput release] ;

auto view = [IOSAppDelegate GetDelegate].IOSView;

auto width = [NSNumber numberWithFloat : view.frame.size.width];

auto height = [NSNumber numberWithFloat : view.frame.size.height];

if (GEngine && GEngine->GameViewport && GEngine->GameViewport->Viewport)

{

auto viewportSize = GEngine->GameViewport->Viewport->GetSizeXY();

width = [NSNumber numberWithInt : viewportSize.X];

height = [NSNumber numberWithInt : viewportSize.Y];

}

auto videoSettings = @{

AVVideoCodecKey: AVVideoCodecTypeH264,

AVVideoWidthKey : width,

AVVideoHeightKey : height

};

_videoInput = [AVAssetWriterInput assetWriterInputWithMediaType : AVMediaTypeVideo outputSettings : videoSettings];

_videoInput.expectsMediaDataInRealTime = YES;

[_videoInput retain] ;

// create the audio input

[_audioInput release] ;

AudioChannelLayout acl;

bzero(&acl, sizeof(acl));

acl.mChannelLayoutTag = kAudioChannelLayoutTag_Stereo;

auto audioSettings = @{

AVFormatIDKey: @(kAudioFormatMPEG4AAC),

AVSampleRateKey: @(44100),

AVChannelLayoutKey: [NSData dataWithBytes : &acl length : sizeof(acl)] ,

};

_audioInput = [AVAssetWriterInput assetWriterInputWithMediaType : AVMediaTypeAudio outputSettings : audioSettings];

_audioInput.expectsMediaDataInRealTime = YES;

[_audioInput retain] ;

// create the microphone input

[_microInput release] ;

AudioChannelLayout mic;

bzero(&mic, sizeof(mic));

mic.mChannelLayoutTag = kAudioChannelLayoutTag_Mono;

auto micSettings = @{

AVFormatIDKey: @(kAudioFormatMPEG4AAC),

AVSampleRateKey: @(44100),

AVChannelLayoutKey: [NSData dataWithBytes : &mic length : sizeof(mic)] ,

};

_microInput = [AVAssetWriterInput assetWriterInputWithMediaType : AVMediaTypeAudio outputSettings : micSettings];

_microInput.expectsMediaDataInRealTime = YES;

[_microInput retain] ;

// add the input to the writer

if ([_assetWriter canAddInput : _videoInput])

{

[_assetWriter addInput : _videoInput] ;

}

if ([_assetWriter canAddInput : _audioInput])

{

[_assetWriter addInput : _audioInput] ;

}

if ([_assetWriter canAddInput : _microInput])

{

[_assetWriter addInput : _microInput] ;

}

}

- (void)startCapture

{

if (_broadcastController)

{

[self stopBroadcast] ;

}

if ([_screenRecorder isAvailable])

{

_frames = 0;

[self createCaptureContext] ;

[_screenRecorder startCaptureWithHandler : ^ (CMSampleBufferRef sampleBuffer, RPSampleBufferType bufferType, NSError * error)

{

if (CMSampleBufferDataIsReady(sampleBuffer))

{

if (0 == _frames++) {

[_assetWriter startWriting] ;

[_assetWriter startSessionAtSourceTime : CMSampleBufferGetPresentationTimeStamp(sampleBuffer)] ;

if (GEngine) {

CMTime time = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Blue, FString::Printf(TEXT("_assetWriter startWriting: %d"), (int)CMTimeGetSeconds(time)));

}

}

/*

if (_assetWriter.status == AVAssetWriterStatusUnknown)

{

[_assetWriter startWriting] ;

[_assetWriter startSessionAtSourceTime : CMSampleBufferGetPresentationTimeStamp(sampleBuffer)] ;

if (GEngine) {

CMTime time = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Blue, FString::Printf(TEXT("_assetWriter startWriting: %d"), (int)CMTimeGetSeconds(time)));

}

}

*/

if (_assetWriter.status == AVAssetWriterStatusFailed)

{

// NSLog(@"%d: %@", (int)_assetWriter.error.code, _assetWriter.error.localizedDescription);

if (GEngine) {

const char* dest = [_assetWriter.error.localizedDescription UTF8String];

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Green, FString::Printf(TEXT("_assetWriter failed: %d %s"), (int)_assetWriter.error.code, UTF8_TO_TCHAR(dest)));

}

}

else {

AVAssetWriterInput* input = nullptr;

if (bufferType == RPSampleBufferTypeVideo)

{

input = _videoInput;

}

else if (bufferType == RPSampleBufferTypeAudioApp)

{

input = _audioInput;

}

else if (bufferType == RPSampleBufferTypeAudioMic)

{

// route microphone audio to its own writer input

input = _microInput;

}

if (input && input.isReadyForMoreMediaData)

{

[input appendSampleBuffer : sampleBuffer] ;

}

}

}

}

completionHandler: ^ (NSError * error)

{

if (error)

{

NSLog(@"completionHandler: %@", error);

if (GEngine) {

GEngine->AddOnScreenDebugMessage(-1, 5.f, FColor::Red, FString::Printf(TEXT("error at startCapture")));

}

}

}];

}

}

- (void)stopCapture

{

if ([_screenRecorder isAvailable])

{

[_screenRecorder stopCaptureWithHandler:^(NSError *error)

{

if (error)

{

NSLog(@"stopCaptureWithHandler: %@", error);

}

if (_assetWriter)

{

[_assetWriter finishWritingWithCompletionHandler:^()

{

NSLog(@"finishWritingWithCompletionHandler");

#if !PLATFORM_TVOS

if (UIVideoAtPathIsCompatibleWithSavedPhotosAlbum(_captureFilePath))

{

UISaveVideoAtPathToSavedPhotosAlbum(_captureFilePath, nil, nil, nil);

NSLog(@"capture saved to album");

}

#endif

[_captureFilePath release];

_captureFilePath = nullptr;

[_videoInput release];

_videoInput = nullptr;

[_audioInput release];

_audioInput = nullptr;

[_microInput release];

_microInput = nullptr;

[_assetWriter release];

_assetWriter = nullptr;

}];

}

}];

}

}

//

// livestreaming functionality

//

- (void)startBroadcast {

// NOTE(omar): ending any local recordings that might be active before starting a broadcast

if( [_screenRecorder isRecording] ) {

[self stopRecording];

}

[RPBroadcastActivityViewController loadBroadcastActivityViewControllerWithHandler:^(RPBroadcastActivityViewController * _Nullable broadcastActivityViewController, NSError * _Nullable error) {

_broadcastActivityController = broadcastActivityViewController;

[_broadcastActivityController setDelegate:self];

[[IOSAppDelegate GetDelegate].IOSController presentViewController:_broadcastActivityController animated:YES completion:nil];

}];

}

- (void)pauseBroadcast {

if( [_broadcastController isBroadcasting] ) {

[_broadcastController pauseBroadcast];

}

}

- (void)resumeBroadcast {

if( [_broadcastController isPaused] ) {

[_broadcastController resumeBroadcast];

}

}

- (void)stopBroadcast {

if( [_broadcastController isBroadcasting ] ) {

[_broadcastController finishBroadcastWithHandler:^(NSError * _Nullable error) {

if( error ) {

NSLog( @"error finishing broadcast" );

}

[_broadcastController release];

_broadcastController = nil;

}];

}

}

//

// delegates

//

// screen recorder delegate

- (void)screenRecorder:(RPScreenRecorder* _Nullable)screenRecorder didStopRecordingWithError:(NSError* _Nullable)error previewViewController:(RPPreviewViewController* _Nullable)previewViewController {

NSLog(@"RTRScreenRecorderDelegate::didStopRecrodingWithError");

[previewViewController dismissViewControllerAnimated:YES completion:nil];

}

- (void)screenRecorderDidChangeAvailability:(RPScreenRecorder* _Nullable)screenRecorder {

NSLog(@"RTRScreenRecorderDelegate::screenRecorderDidChangeAvailability");

}

// screen recorder preview view controller delegate

- (void)previewControllerDidFinish:(RPPreviewViewController* _Nullable)previewController {

NSLog( @"RTRPreviewViewControllerDelegate::previewControllerDidFinish" );

[previewController dismissViewControllerAnimated:YES completion:nil];

}

- (void)previewController:(RPPreviewViewController* _Nullable)previewController didFinishWithActivityTypes:(NSSet <NSString*> * _Nullable)activityTypes __TVOS_PROHIBITED {

NSLog( @"RTRPreviewViewControllerDelegate::didFinishWithActivityTypes" );

[previewController dismissViewControllerAnimated:YES completion:nil];

}

// broadcast activity view controller delegate

- (void)broadcastActivityViewController:(RPBroadcastActivityViewController* _Nullable)broadcastActivityViewController didFinishWithBroadcastController:(RPBroadcastController* _Nullable)broadcastController error:(NSError* _Nullable)error {

NSLog( @"RPBroadcastActivityViewControllerDelegate::didFinishWithBroadcastController" );

[broadcastActivityViewController dismissViewControllerAnimated:YES completion:^{

_broadcastController = [broadcastController retain];

[_broadcastController setDelegate:self];

[_broadcastController startBroadcastWithHandler:^(NSError* _Nullable _error) {

if( _error ) {

NSLog( @"error starting broadcast" );

}

}];

}];

}

// broadcast controller delegate

- (void)broadcastController:(RPBroadcastController* _Nullable)broadcastController didFinishWithError:(NSError* _Nullable)error {

NSLog( @"RPBroadcastControllerDelegate::didFinishWithError" );

}

- (void)broadcastController:(RPBroadcastController* _Nullable)broadcastController didUpdateServiceInfo:(NSDictionary <NSString*, NSObject <NSCoding>*> *_Nullable)serviceInfo {

NSLog( @"RPBroadcastControllerDelegate::didUpdateServiceInfo" );

}

@end

#endif

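Mixing Objective-C and C++

Objective-C and C++ both extend C, but their syntax differs considerably. Mixing them in an Unreal iOS project is nonetheless easy: Objective-C code can go straight into the .h and .cpp files alongside C++ code with no special handling, because the build compiles these files as Objective-C++ on Apple platforms, as ReplayKitRecorder.cpp above shows. (In a standalone Xcode project, mixed source files would normally use the .mm extension.)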
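A source file can tell which way it is being compiled through the predefined __OBJC__ macro, which the compiler defines only in Objective-C and Objective-C++ mode. The sketch below is a minimal illustration; DescribeCompilationMode is a hypothetical name and is not part of the plugin.

```cpp
#include <string>

#ifdef __OBJC__
#import <Foundation/Foundation.h>
#endif

// Returns a label for the mode this translation unit was compiled in.
std::string DescribeCompilationMode() {
#ifdef __OBJC__
    // Objective-C syntax is only valid when compiling as Objective-C++.
    NSString* text = @"Objective-C++";
    return std::string([text UTF8String]);
#else
    return std::string("plain C++");
#endif
}
```

Compiled as ordinary C++, the function takes the second branch; compiled as Objective-C++, the NSString literal and the message send become available in the same file. A guard like this is useful in headers that are shared with non-Apple platforms.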