Reference video: "05.用PyTorch实现线性回归" (Implementing Linear Regression with PyTorch), Bilibili

Once again we use $y = 3x + 2$ as the example. The model does not know this relationship in advance and must learn it from the data.

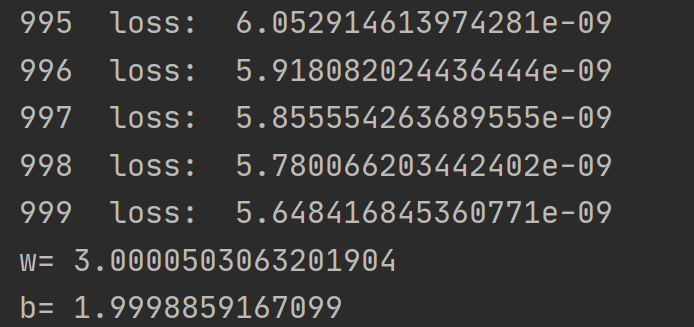
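The three training points in the code, (1, 5), (2, 8) and (3, 11), lie exactly on this line, so ordinary least squares recovers the target parameters in closed form: w = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and b = ȳ − w·x̄. The plain-Python sketch below (the helper name `fit_line` is hypothetical) is only a way to check what gradient descent should converge to; it is not part of the video's method.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = w*x + b (hypothetical helper, illustration only)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / sxx
    # Intercept makes the line pass through the mean point
    b = mean_y - w * mean_x
    return w, b


w, b = fit_line([1.0, 2.0, 3.0], [5.0, 8.0, 11.0])
print(w, b)  # 3.0 2.0
```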

The learning rate is set to 0.01 and the model is trained for 1000 epochs.
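To see what `loss.backward()` and `optimizer.step()` compute for this one-weight, one-bias model, here is a hand-written gradient descent sketch in plain Python. It uses the same data, learning rate, epoch count, and summed squared-error loss as the PyTorch code, and like that code it uses all three samples in every step. Two assumptions differ from PyTorch: the parameters start at zero (`nn.Linear` starts from random values), and the helper name `train` is hypothetical.

```python
def train(xs, ys, lr=0.01, epochs=1000):
    """Gradient descent on L = sum((w*x + b - y)^2); a sketch, not PyTorch internals."""
    w, b = 0.0, 0.0  # assumption: zero init (nn.Linear uses random init)
    losses = []
    for _ in range(epochs):
        preds = [w * x + b for x in xs]  # forward pass
        residuals = [p - y for p, y in zip(preds, ys)]
        losses.append(sum(r * r for r in residuals))  # summed loss, like reduction='sum'
        # Analytic gradients: dL/dw = sum(2*r*x), dL/db = sum(2*r)
        grad_w = sum(2 * r * x for r, x in zip(residuals, xs))
        grad_b = sum(2 * r for r in residuals)
        # Plain SGD update with the full batch
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


w, b, losses = train([1.0, 2.0, 3.0], [5.0, 8.0, 11.0])
print(f"w={w:.4f}, b={b:.4f}, final loss={losses[-1]:.2e}")
```

With lr = 0.01 the updates stay stable for this data, and after 1000 steps w and b end up close to 3 and 2. The exact digits depend on the starting point.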

Note that the argument `size_average=False` used in the video when defining the loss function is deprecated. Replace it with `reduction='sum'`.
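`nn.MSELoss` accepts `reduction='none'`, `'mean'` (the default) or `'sum'`. The old `size_average=False` corresponds to `'sum'`. The plain-Python sketch below mimics what each mode returns. The function `mse` is a hypothetical illustration, not PyTorch's implementation.

```python
def mse(preds, targets, reduction="mean"):
    """Mimics nn.MSELoss reduction modes (illustrative sketch only)."""
    sq = [(p - t) ** 2 for p, t in zip(preds, targets)]  # per-element squared error
    if reduction == "none":
        return sq
    if reduction == "sum":
        return sum(sq)
    if reduction == "mean":
        return sum(sq) / len(sq)
    raise ValueError(f"unknown reduction: {reduction}")


preds, targets = [4.0, 8.0, 12.0], [5.0, 8.0, 11.0]
print(mse(preds, targets, "none"))  # [1.0, 0.0, 1.0]
print(mse(preds, targets, "sum"))   # 2.0
print(mse(preds, targets, "mean"))  # 0.6666666666666666
```

Switching to `'mean'` divides the loss, and therefore the gradients, by the number of samples. With the same lr = 0.01, training would then take smaller steps than in the video.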

Full code:

```python
import torch
import torch.nn as nn
import matplotlib.pyplot as plt
import numpy as np

# Training data sampled from y = 3x + 2 (3 samples, 1 feature each)
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[5.0], [8.0], [11.0]])


class LinearModel(nn.Module):
    def __init__(self):
        super(LinearModel, self).__init__()
        self.linear = nn.Linear(1, 1)  # input and output dimensions are both 1

    def forward(self, x):  # override the forward pass
        y_pred = self.linear(x)  # computes w*x + b
        return y_pred


model = LinearModel()  # instantiate the model

# criterion = nn.MSELoss(size_average=False)  # deprecated form used in the video
criterion = nn.MSELoss(reduction='sum')  # loss function: summed squared error
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)  # SGD optimizer, learning rate 0.01

loss_list = []

# Training loop
for epoch in range(1000):
    y_pred = model(x_data)  # forward pass
    loss = criterion(y_pred, y_data)  # compute the loss
    print(epoch, " loss: ", loss.item())
    loss_list.append(loss.item())

    optimizer.zero_grad()  # clear old gradients so they do not accumulate
    loss.backward()  # backpropagation: compute gradients
    optimizer.step()  # update the parameters using the gradients

print('w=', model.linear.weight.item())
print('b=', model.linear.bias.item())

# Plot the epoch-loss curve
plt.figure()
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.plot(np.arange(0, 1000, 1), np.array(loss_list))
plt.show()
```

After 1000 epochs of training, the printed parameters are very close to w = 3 and b = 2.