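Operating on HDFS with the Java API

Haha, here comes a late-night round of practical tips!

To work with HDFS through the Java API, we first create a Maven project. The main topics are: printing detailed HDFS information from Java code, uploading and downloading files, and creating and deleting files.

First, how Java code connects to HDFS. HDFS is a distributed file system; put simply, it is where Hadoop stores its data. It is one of Hadoop's core components, alongside MapReduce and YARN. Our Java code accesses this file system and then operates on it, and to do that we add the Hadoop jars to the Java project.

Here we pull in the dependencies through pom.xml. The other way is a lib folder (plainly put, importing the jar files directly).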

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>hu-hadoop1</groupId>
    <artifactId>k</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <packaging>jar</packaging>
    <name>k</name>
    <url>http://maven.apache.org</url>
    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    </properties>
    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>3.8.1</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.6.0</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.6.0</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-app</artifactId>
            <version>2.6.0</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-common</artifactId>
            <version>2.6.0</version>
            <scope>provided</scope>
        </dependency>
    </dependencies>
</project>

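Now we can start coding.

First, create a class HDFSDemo1.

On how to access HDFS: I offer three methods, listed one by one below, as a token of my sincerity.

Method 1: reading from HDFS through java.net.URL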

package com.bw.day01;

import java.io.InputStream;
import java.net.URL;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
import org.apache.hadoop.io.IOUtils;

/**
 * A relatively old HDFS API: plain Java APIs can also access HDFS.
 *
 * @author huhu_k
 */
public class HDFSDemo1 {
    static {
        // Teach java.net.URL how to open hdfs:// URLs
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
    }

    public static void main(String[] args) throws Exception {
        URL url = new URL("hdfs://hu-hadoop1:8020/1708as1/f1.txt");
        InputStream openStream = url.openStream();
        try {
            // Print the file content to the console
            IOUtils.copyBytes(openStream, System.out, 4096);
        } finally {
            IOUtils.closeStream(openStream);
        }
    }
}
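To see why the static block matters: java.net.URL can only open a scheme once a handler for it has been registered, and URL.setURLStreamHandlerFactory may be called at most once per JVM (a second call throws an Error). The sketch below illustrates that mechanism with JDK classes only. The `demo` scheme, the `UrlHandlerSketch` class and the in-memory payload are all made up for illustration; Hadoop's FsUrlStreamHandlerFactory plays the corresponding role for `hdfs://` URLs.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLStreamHandler;
import java.nio.charset.StandardCharsets;

public class UrlHandlerSketch {
    private static boolean installed = false;

    // Install the factory only once; the JDK rejects a second registration.
    public static synchronized void install() {
        if (installed) {
            return;
        }
        // Returning null for other schemes keeps the JDK's default handlers.
        URL.setURLStreamHandlerFactory(protocol ->
                "demo".equals(protocol) ? new DemoHandler() : null);
        installed = true;
    }

    // Hypothetical handler: serves a fixed in-memory payload for demo:// URLs.
    static class DemoHandler extends URLStreamHandler {
        @Override
        protected URLConnection openConnection(URL u) {
            return new URLConnection(u) {
                @Override
                public void connect() {
                    // Nothing to connect to: the data lives in memory.
                }

                @Override
                public InputStream getInputStream() {
                    String body = "hello from " + u.getHost();
                    return new ByteArrayInputStream(body.getBytes(StandardCharsets.UTF_8));
                }
            };
        }
    }

    // Open the URL and return its content as text.
    public static String read(String spec) {
        install();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (InputStream in = new URL(spec).openStream()) {
            byte[] buf = new byte[4096];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(read("demo://greeting/f1.txt"));
    }
}
```

Because the factory slot is per JVM, this approach does not combine well with other libraries that also want to register one, which is one reason the FileSystem API is usually more convenient.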

Method 2: accessing HDFS through the FileSystem API

package com.bw.day01;

import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Map.Entry;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.hdfs.DistributedFileSystem;
import org.apache.hadoop.hdfs.protocol.DatanodeInfo;

/**
 * Operating on Hadoop with the HDFS API.
 *
 * @author huhu_k
 */
public class HDFSDemo2 {
    public static void main(String[] args) throws Exception {
        // Step 1: the configuration container; properties can be set on it
        Configuration conf = new Configuration();
        // fs.defaultFS is the current name of the deprecated fs.default.name key
        conf.set("fs.defaultFS", "hdfs://hu-hadoop1:8020");
        // Step 2: get the file system; for an hdfs:// address it is a DistributedFileSystem
        FileSystem fs = FileSystem.get(conf);
        DistributedFileSystem dfs = (DistributedFileSystem) fs;
        // Pattern for dates in the Date.toString() layout, as used by "Last contact"
        SimpleDateFormat sdf = new SimpleDateFormat("EEE MMM dd HH:mm:ss z yyyy", Locale.US);
        DatanodeInfo[] di = dfs.getDataNodeStats();
        for (DatanodeInfo d : di) {
            // Some information about the datanode
            System.out.println(d.getBlockPoolUsed());
            System.out.println(d.getCacheCapacity());
            System.out.println(d.getCapacity());
            System.out.println(d.getDfsUsed());
            // Address
            System.out.println(d.getInfoAddr());
            // State
            System.out.println(d.getAdminState());
            System.out.println("----------------------------");
            // System.out.println(d.getDatanodeReport());
            String value = d.getDatanodeReport();
            Map<String, String> map = new LinkedHashMap<>();
            for (String s : value.split("\\n")) {
                // Split once at the first ": " and skip lines that are not "key: value"
                String[] kv = s.split(": ", 2);
                if (kv.length < 2) {
                    continue;
                }
                if ("Last contact".equals(kv[0])) {
                    Date date = sdf.parse(kv[1]);
                    // Gap between the datanode's last contact and now, i.e. the heartbeat lag
                    System.out.println(System.currentTimeMillis() - date.getTime());
                    System.out.println(System.currentTimeMillis());
                    System.out.println(date.getTime());
                }
                map.put(kv[0], kv[1]);
            }
            System.out.println("----------------------------");
            for (Entry<String, String> m : map.entrySet()) {
                System.out.println(m.getKey() + "---" + m.getValue());
            }
        }
        fs.close();
    }
}

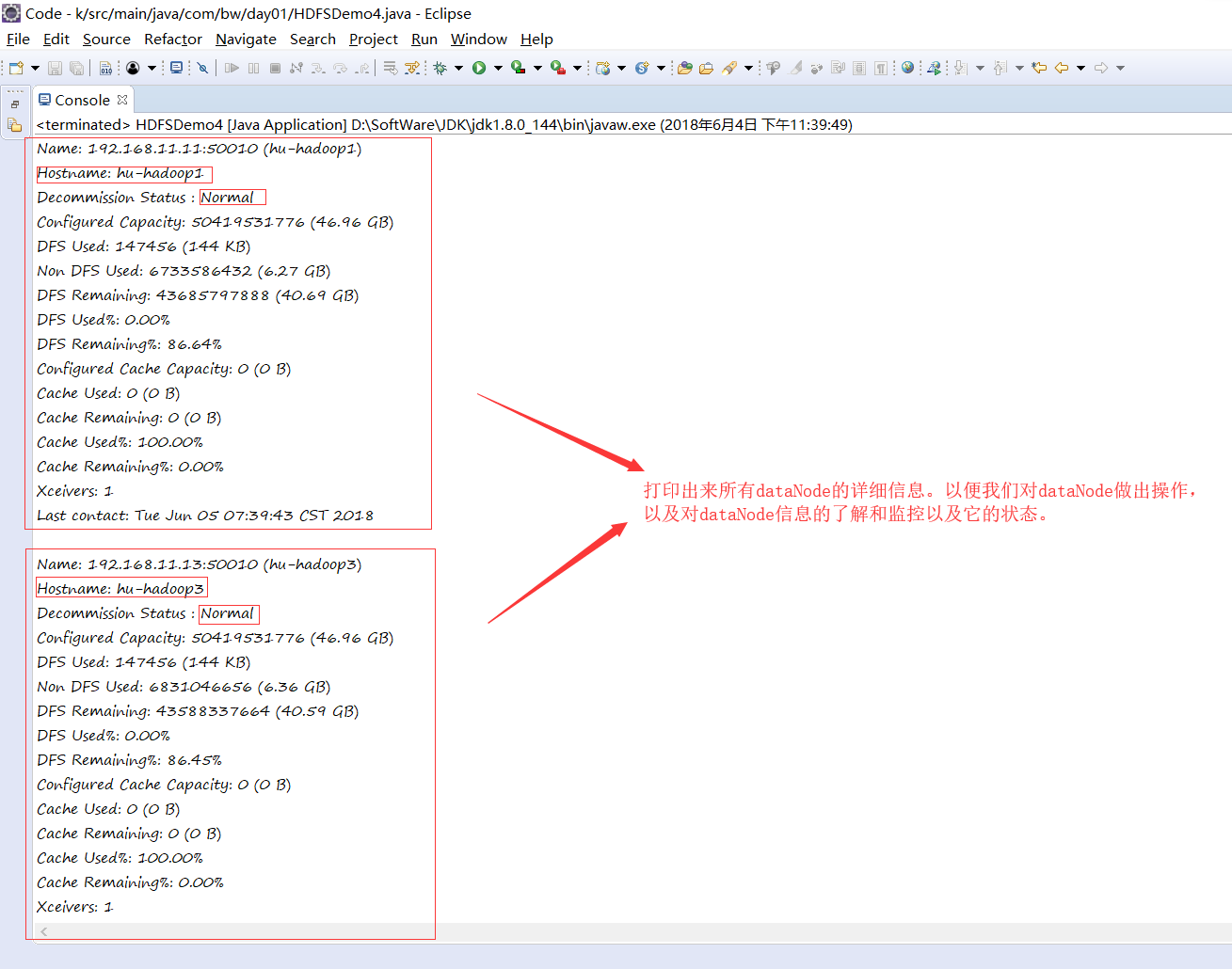

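Note: a lot of the code above is not really needed. I think the most useful call is getDatanodeReport(), so that is the only one I will go into. The result looks like this:

Its usefulness should be clear at a glance.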
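Since getDatanodeReport() returns one block of text, the useful step is turning it into key/value pairs. Below is a minimal sketch of just that parsing step, using JDK classes only. The `ReportParser` class and the sample text are made up for illustration; a real report comes from `DatanodeInfo.getDatanodeReport()` and has more fields.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class ReportParser {
    // Split each "key: value" line once, at the first ": ", and skip lines without one.
    public static Map<String, String> parse(String report) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String line : report.split("\\n")) {
            String[] kv = line.split(": ", 2);
            if (kv.length == 2) {
                fields.put(kv[0].trim(), kv[1].trim());
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        // Made-up sample in the same "key: value" shape as a datanode report.
        String sample = "Name: 192.168.1.10:50010 (hu-hadoop1)\n"
                + "Hostname: hu-hadoop1\n"
                + "\n"
                + "Last contact: Thu Jun 07 23:15:42 CST 2018\n";
        for (Map.Entry<String, String> e : parse(sample).entrySet()) {
            System.out.println(e.getKey() + "---" + e.getValue());
        }
    }
}
```

Splitting with a limit of 2 keeps values that themselves contain a colon (addresses, timestamps) in one piece, and the length check avoids an ArrayIndexOutOfBoundsException on blank lines.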

Method 3: configuration files plus FileSystem.newInstance(conf):

The configuration files come from hadoop/etc on the virtual machine: core-site.xml and hdfs-site.xml.

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hu-hadoop1:8020</value>
    </property>
    <property>
        <name>io.file.buffer.size</name>
        <value>4096</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/bigdata/tmp</value>
    </property>
</configuration>

hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>3</value>
    </property>
    <property>
        <name>dfs.block.size</name>
        <value>134217728</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///home/hadoopdata/dfs/name</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///home/hadoopdata/dfs/data</value>
    </property>
    <property>
        <name>fs.checkpoint.dir</name>
        <value>file:///home/hadoopdata/checkpoint/dfs/cname</value>
    </property>
    <property>
        <name>fs.checkpoint.edits.dir</name>
        <value>file:///home/hadoopdata/checkpoint/dfs/cname</value>
    </property>
    <property>
        <name>dfs.http.address</name>
        <value>hu-hadoop1:50070</value>
    </property>
    <property>
        <name>dfs.secondary.http.address</name>
        <value>hu-hadoop2:50090</value>
    </property>
    <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>dfs.permissions</name>
        <value>false</value>
    </property>
</configuration>

Put these two files under src, create a class HDFSDemo3, and start coding:

Deleting a file:

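import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocalFileSystem;
import org.apache.hadoop.fs.Path;

public class HDFSDemo3 {
    public static void main(String[] args) throws Exception {
        // Picks up the configuration files placed under src (on the classpath)
        Configuration conf = new Configuration();
        // The HDFS file system
        FileSystem fs = FileSystem.newInstance(conf);
        // The local file system
        LocalFileSystem localFileSystem = FileSystem.getLocal(conf);
        Path path = new Path("/1708as1/hehe.txt");
        if (fs.exists(path)) {
            // The one-argument fs.delete(path) is deprecated and deletes recursively
            fs.delete(path, true); // false: not recursive, true: recursive
            System.out.println("------------------");
            // mkdirs creates a directory, even when the name looks like a file name
            fs.mkdirs(new Path("/1708a1/f1.txt"));
        } else {
            fs.mkdirs(path);
            System.out.println("------------------****");
        }
        fs.close();
    }
}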

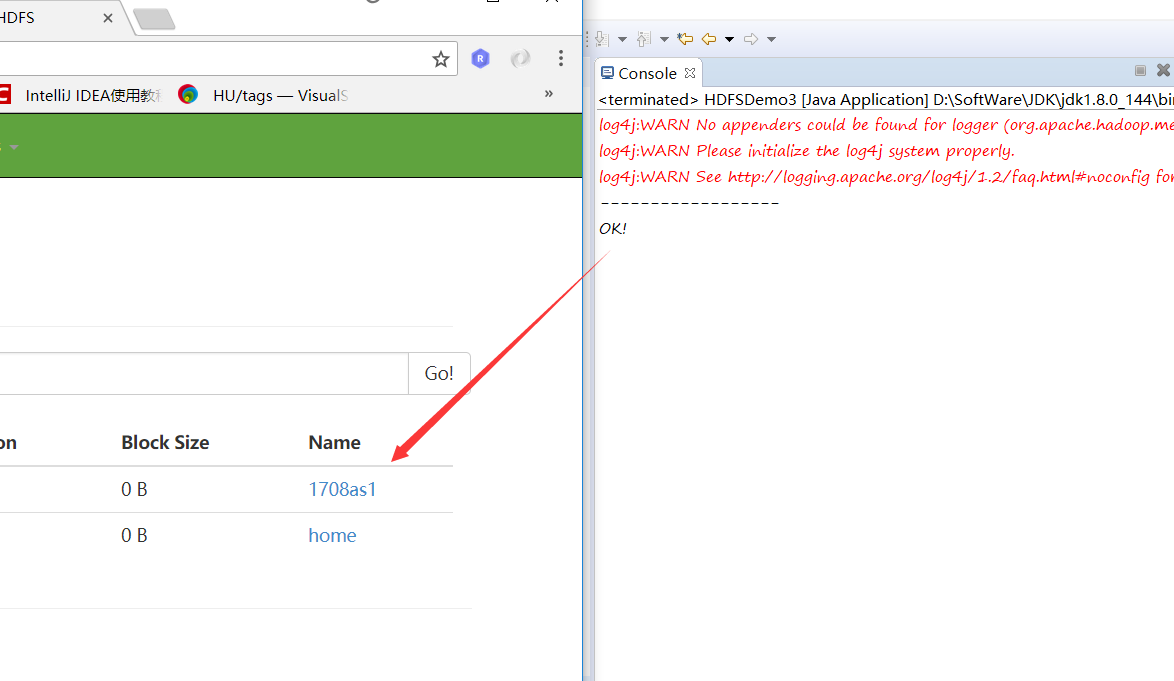

Creating a file and writing content to it:

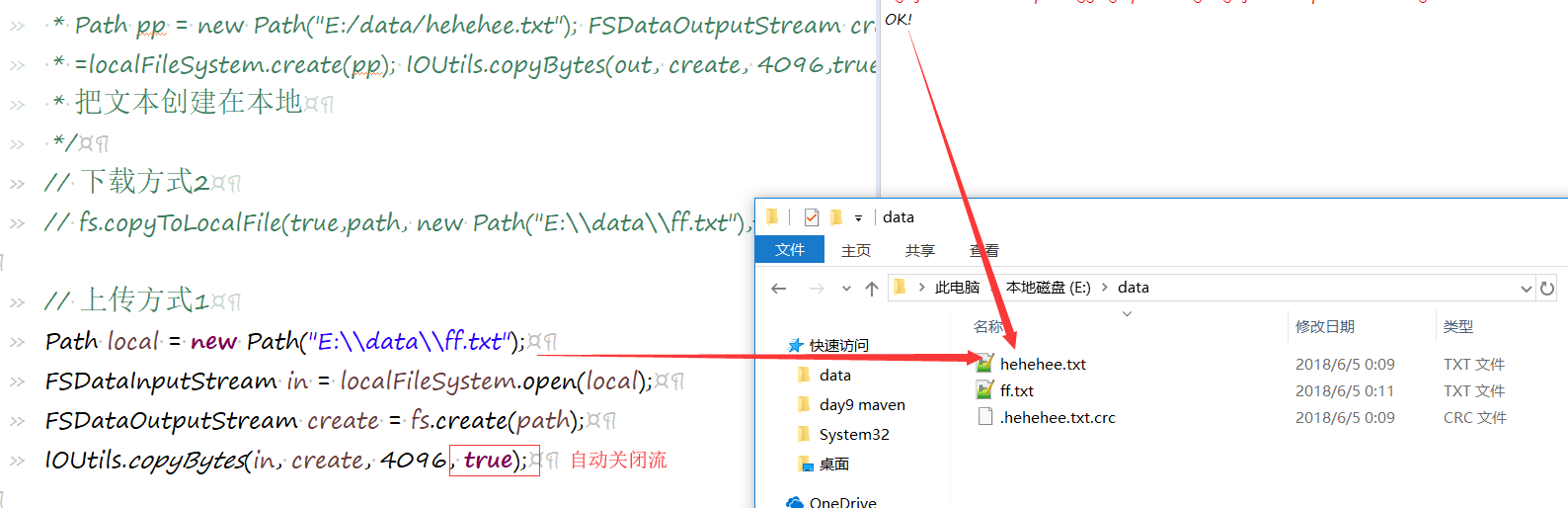

Downloading a file:

Method 1:

Method 2:

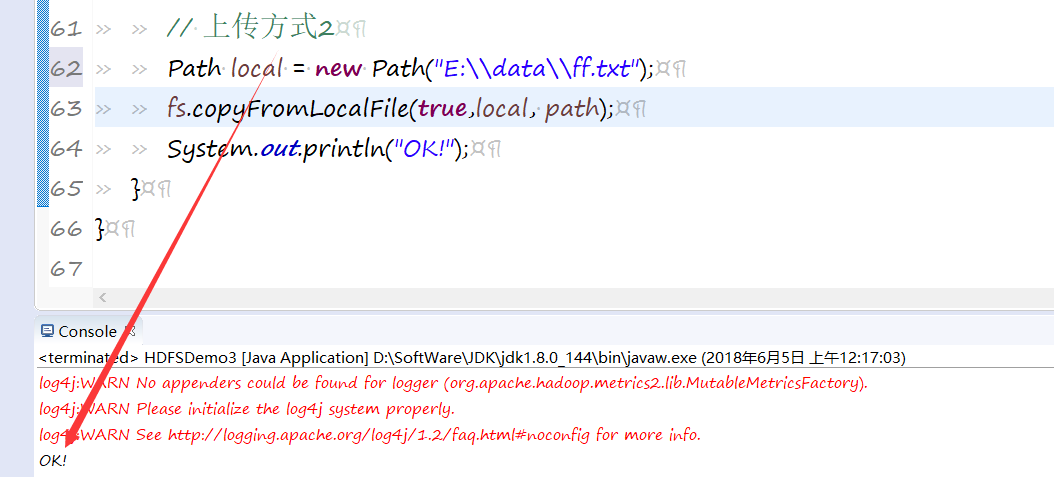

Uploading a file:

Method 1:

Method 2:

Downloading a file:

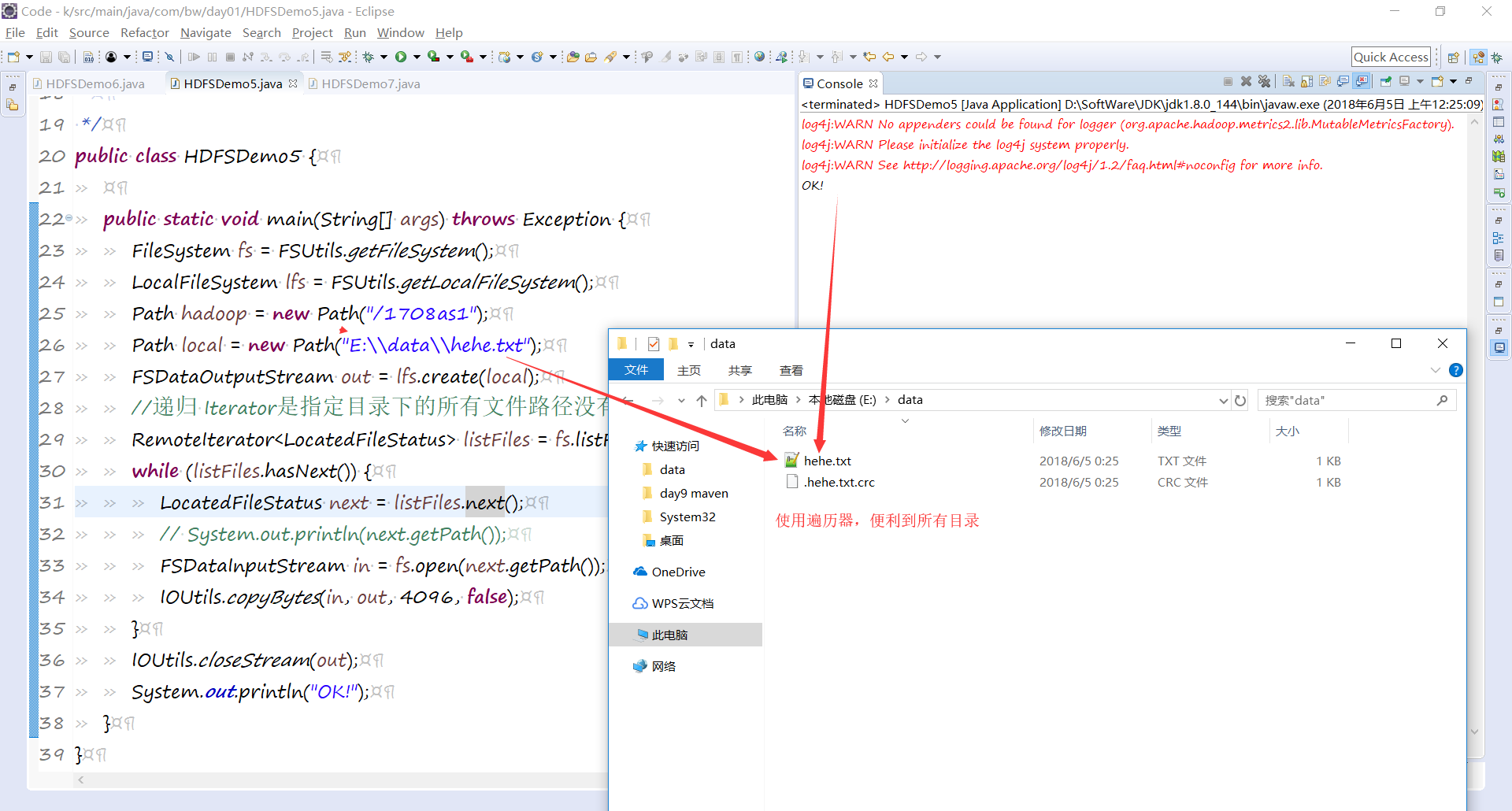

Merged download:

Method 1:

package com.bw.day01;

import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocalFileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.apache.hadoop.io.IOUtils;

import com.bw.day01.util.FSUtils;

/**
 * Merged download.
 *
 * @author huhu_k
 */
public class HDFSDemo5 {
    public static void main(String[] args) throws Exception {
        // FSUtils is the author's own helper class; its source is not shown in this post
        FileSystem fs = FSUtils.getFileSystem();
        LocalFileSystem lfs = FSUtils.getLocalFileSystem();
        Path hadoop = new Path("/1708as1");
        Path local = new Path("E:\\data\\hehe.txt");
        FSDataOutputStream out = lfs.create(local);
        // Recursive listing: the iterator yields every file under the directory, never the folders
        RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(hadoop, true);
        while (listFiles.hasNext()) {
            LocatedFileStatus next = listFiles.next();
            // System.out.println(next.getPath());
            FSDataInputStream in = fs.open(next.getPath());
            // Append this file to the local output; false keeps out open for the next file
            IOUtils.copyBytes(in, out, 4096, false);
            IOUtils.closeStream(in);
        }
        IOUtils.closeStream(out);
        System.out.println("OK!");
    }
}

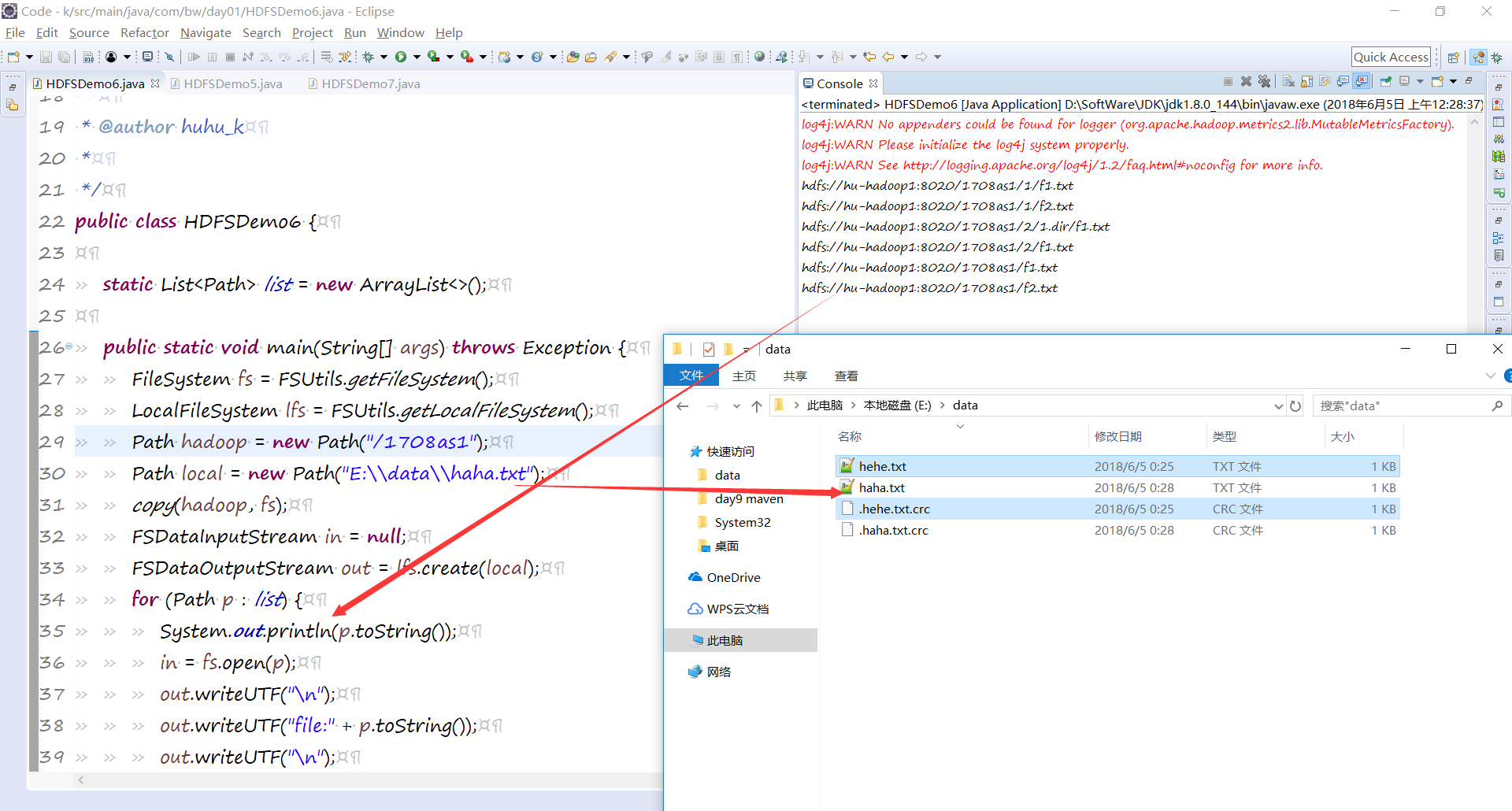
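The core of a merged download is copying several input streams into one output stream, closing each input once it has been copied and closing the output only at the very end. Here is a small sketch of that pattern with in-memory JDK streams standing in for HDFS files; the `MergeSketch` class and its sample data are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;

public class MergeSketch {
    // Append every source to out, in order. Each source is closed after it is copied;
    // out stays open so the caller decides when the merged result is complete.
    public static void mergeInto(List<? extends InputStream> sources, OutputStream out) {
        byte[] buf = new byte[4096];
        for (InputStream source : sources) {
            try (InputStream in = source) {
                int n;
                while ((n = in.read(buf)) != -1) {
                    out.write(buf, 0, n);
                }
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
    }

    public static void main(String[] args) {
        // Two made-up "files" held in memory.
        List<InputStream> files = Arrays.<InputStream>asList(
                new ByteArrayInputStream("first file\n".getBytes(StandardCharsets.UTF_8)),
                new ByteArrayInputStream("second file\n".getBytes(StandardCharsets.UTF_8)));
        ByteArrayOutputStream merged = new ByteArrayOutputStream();
        mergeInto(files, merged);
        System.out.print(new String(merged.toByteArray(), StandardCharsets.UTF_8));
    }
}
```

With Hadoop, `fs.open(path)` would supply the inputs and `lfs.create(local)` the output, as in the listing above.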

Method 2:

package com.bw.day01;

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocalFileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

import com.bw.day01.util.FSUtils;

/**
 * Merged download.
 *
 * @author huhu_k
 */
public class HDFSDemo6 {
    static List<Path> list = new ArrayList<>();

    public static void main(String[] args) throws Exception {
        // FSUtils is the author's own helper class; its source is not shown in this post
        FileSystem fs = FSUtils.getFileSystem();
        LocalFileSystem lfs = FSUtils.getLocalFileSystem();
        Path hadoop = new Path("/1708as1");
        Path local = new Path("E:\\data\\haha.txt");
        copy(hadoop, fs);
        FSDataInputStream in = null;
        FSDataOutputStream out = lfs.create(local);
        for (Path p : list) {
            System.out.println(p.toString());
            in = fs.open(p);
            // Header line naming the source file. Plain bytes are written here because
            // writeUTF would put a two-byte length in front of each string.
            out.write(("\nfile:" + p.toString() + "\n").getBytes(StandardCharsets.UTF_8));
            IOUtils.copyBytes(in, out, 4096, false);
            IOUtils.closeStream(in);
        }
        IOUtils.closeStream(out);
    }

    /**
     * Recursively collect the paths of all files under a directory.
     *
     * @param hadoop the HDFS directory to walk
     * @param fs     the HDFS file system
     * @throws Exception
     */
    public static void copy(Path hadoop, FileSystem fs) throws Exception {
        FileStatus[] lFileStatus = fs.listStatus(hadoop);
        for (FileStatus f : lFileStatus) {
            if (fs.isDirectory(f.getPath())) {
                // System.out.println("directory:" + f.getPath());
                copy(f.getPath(), fs);
            } else if (fs.isFile(f.getPath())) {
                // System.out.println("file:" + f.getPath());
                list.add(f.getPath());
            }
        }
    }
}

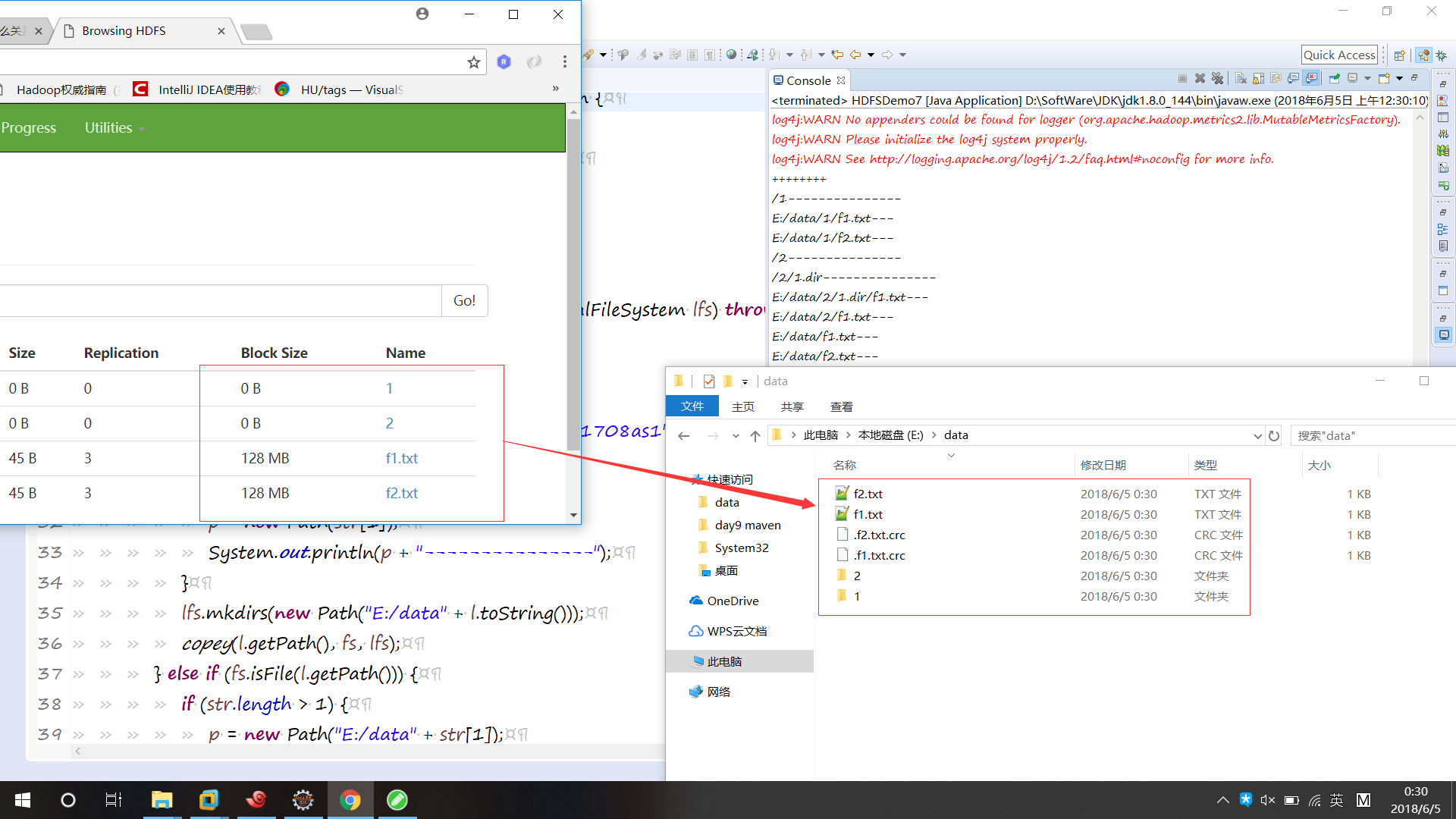
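One detail worth knowing when writing separator lines into a merged file: `writeUTF` is inherited from java.io.DataOutputStream (which FSDataOutputStream extends) and puts a two-byte length in front of the text, so those extra bytes end up in the output file. Writing the encoded bytes directly avoids that. The sketch below shows the difference with JDK classes only; the `WriteUtfSketch` class name is made up.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

public class WriteUtfSketch {
    // Bytes produced by writeUTF: a 2-byte length, then the text in modified UTF-8.
    public static byte[] viaWriteUtf(String text) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeUTF(text);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Bytes produced by writing the UTF-8 encoding directly: the text and nothing else.
    public static byte[] viaWrite(String text) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.write(text.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) {
        String header = "file:/a.txt\n";
        System.out.println("writeUTF bytes: " + viaWriteUtf(header).length);
        System.out.println("write bytes:    " + viaWrite(header).length);
    }
}
```

For a text file meant to be opened in an editor, the plain `write` form is usually what you want; `writeUTF` only makes sense when the reader uses `DataInputStream.readUTF`.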

Downloading with the original directory structure:

package com.bw.day01;

import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocalFileSystem;
import org.apache.hadoop.fs.Path;

import com.bw.day01.util.FSUtils;

/**
 * Download with the original directory structure.
 *
 * @author huhu_k
 */
public class HDFSDemo7 {
    public static void main(String[] args) throws Exception {
        // FSUtils is the author's own helper class; its source is not shown in this post
        FileSystem fs = FSUtils.getFileSystem();
        LocalFileSystem lfs = FSUtils.getLocalFileSystem();
        System.out.println("++++++++");
        copey(new Path("/1708as1"), fs, lfs);
    }

    public static void copey(Path path, FileSystem fs, LocalFileSystem lfs) throws Exception {
        Path p = new Path("/");
        FileStatus[] listStatus = fs.listStatus(path);
        for (FileStatus l : listStatus) {
            // Everything after "8020/1708as1" is the path relative to the download root
            String[] str = l.getPath().toString().split("8020/1708as1");
            if (fs.isDirectory(l.getPath())) {
                if (str.length > 1) {
                    // Create the matching local directory under E:/data
                    p = new Path("E:/data" + str[1]);
                    System.out.println(p + "---------------");
                    lfs.mkdirs(p);
                }
                // Descend into the HDFS directory
                copey(l.getPath(), fs, lfs);
            } else if (fs.isFile(l.getPath())) {
                if (str.length > 1) {
                    p = new Path("E:/data" + str[1]);
                    System.out.println(p.toString() + "---");
                }
                // Copy the HDFS file to the same relative location locally
                fs.copyToLocalFile(l.getPath(), p);
            }
        }
    }
}
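Recreating the directory tree locally comes down to one computation: the path of each HDFS entry relative to the download root, appended to the local target directory. As an alternative to splitting on a hard-coded string, that step could be sketched with java.net.URI.relativize. The `RelativePathSketch` class and its method names are hypothetical, and the URIs are handled as plain strings, so no cluster is needed to try it.

```java
import java.net.URI;

public class RelativePathSketch {
    // Path of entry relative to root, e.g. "sub/f1.txt".
    // If entry is not under root (or scheme/host differ), relativize returns entry
    // unchanged, so the result is then entry's full path.
    public static String relative(String root, String entry) {
        URI rootUri = URI.create(root);
        URI entryUri = URI.create(entry);
        return rootUri.relativize(entryUri).getPath();
    }

    // Local target: the local directory plus the relative part.
    public static String localTarget(String localDir, String root, String entry) {
        return localDir + "/" + relative(root, entry);
    }

    public static void main(String[] args) {
        String root = "hdfs://hu-hadoop1:8020/1708as1";
        String entry = "hdfs://hu-hadoop1:8020/1708as1/sub/f1.txt";
        System.out.println(relative(root, entry));
        System.out.println(localTarget("E:/data", root, entry));
    }
}
```

In the Hadoop code, `path.toUri()` on the root and on each `FileStatus.getPath()` would provide the two URIs, which removes the dependency on the port number and directory name appearing in the split string.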

Note:

You may hit a NullPointerException at run time!

The following link may help you fix it: https://www.cnblogs.com/meiLinYa/p/9136771.html

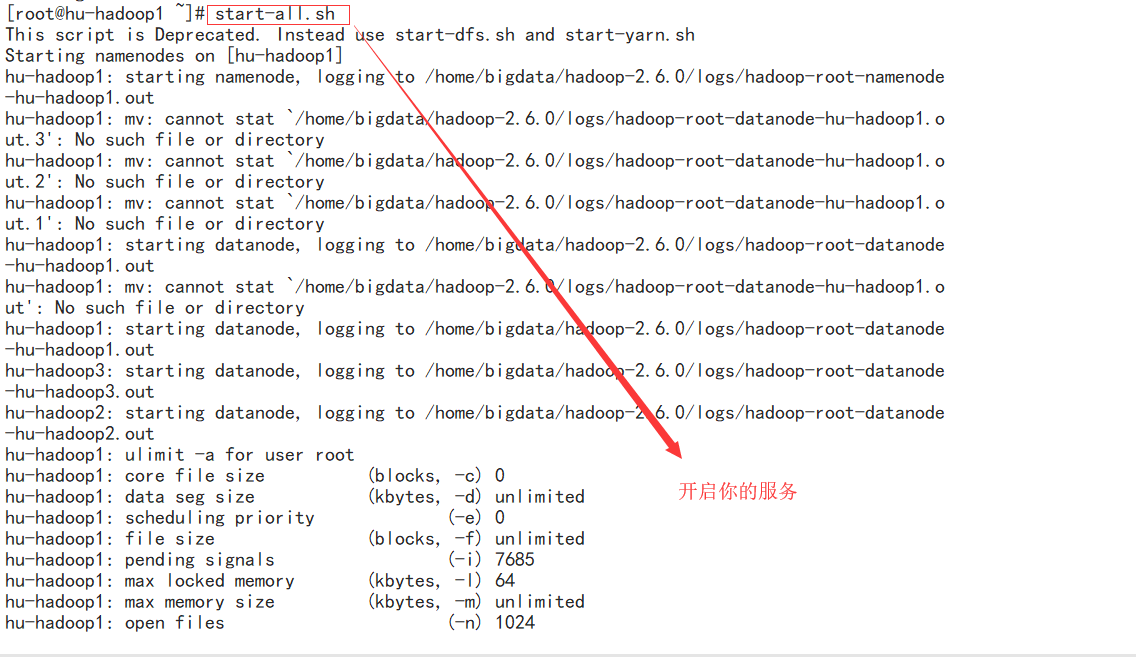

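Also, the Hadoop services must be running before you operate on HDFS through the Java API; start them with start-all.sh.

That covers the main points! How about it, quite a treat, right?

huhu_L: Late-night treats are the most filling!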