API Usage

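1. Preparation

1.1 Extract the archive

Extract the Hadoop installation package to a path that contains no Chinese characters (for example: D:\users\hadoop-2.6.0-cdh5.14.2).

1.2 Environment variables

On Windows, set the HADOOP_HOME environment variable to the extracted directory (the procedure is the same as for configuring the JDK environment variables).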
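Before running the examples, it can help to confirm that the JVM actually sees the variable, because a process started before the variable was set (an IDE, for instance) does not pick it up until it is restarted. The following is a minimal sketch using only the JDK; the class name HadoopHomeCheck and its messages are hypothetical and not part of Hadoop.

```java
import java.io.File;

public class HadoopHomeCheck {

    /** Describes whether the given HADOOP_HOME value points at an existing directory. */
    public static String describe(String hadoopHome) {
        if (hadoopHome == null || hadoopHome.trim().isEmpty()) {
            return "HADOOP_HOME is not set";
        }
        File dir = new File(hadoopHome);
        if (!dir.isDirectory()) {
            return "HADOOP_HOME does not point to a directory: " + hadoopHome;
        }
        return "HADOOP_HOME = " + dir.getAbsolutePath();
    }

    public static void main(String[] args) {
        // System.getenv returns null when the variable is not visible to this JVM
        System.out.println(describe(System.getenv("HADOOP_HOME")));
    }
}
```

Running the class from the same IDE that will run the tests shows what that IDE's JVM sees, which may differ from a freshly opened command prompt.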

1.3 Create a project

Create a Maven project in your IDE.

1.4 Dependencies

Add the following dependencies to pom.xml:

<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <!-- Pinned version: the RELEASE meta-version is deprecated and makes builds non-reproducible -->
        <version>4.12</version>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-core</artifactId>
        <version>2.8.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.6.0-cdh5.14.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.6.0-cdh5.14.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.6.0-cdh5.14.2</version>
    </dependency>
</dependencies>

Note: Maven Central does not host the CDH artifacts. Cloudera publishes them in its own repository, which must be added to the pom separately:

<repositories>
    <repository>
        <id>cloudera</id>
        <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
    </repository>
</repositories>

1.5 Test

Create a package cn.big.data with a class HdfsClient in it, and run its methods as JUnit tests.

Create a directory:

package cn.big.data;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

public class HdfsClient {

    @Test
    public void testMkdirs() throws IOException, InterruptedException, URISyntaxException {
        // 1. Get the file system: NameNode address, configuration, and the user to act as
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), conf, "root");

        // 2. Create the directory
        fs.mkdirs(new Path("/myApi"));

        // 3. Release resources
        fs.close();
    }
}
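The first argument of FileSystem.get is an ordinary java.net.URI: the scheme selects the file system implementation, and the host and port identify the NameNode. The JDK-only sketch below shows how the address used in this tutorial breaks down. The helper class NameNodeUri is hypothetical, and port 9000 is simply the value this tutorial's cluster is assumed to be configured with.

```java
import java.net.URI;

public class NameNodeUri {

    /** Returns "scheme|host|port" for a NameNode address, or throws if a part is missing. */
    public static String parts(String address) {
        URI uri = URI.create(address);
        if (uri.getScheme() == null || uri.getHost() == null || uri.getPort() == -1) {
            throw new IllegalArgumentException("Expected scheme://host:port but got: " + address);
        }
        return uri.getScheme() + "|" + uri.getHost() + "|" + uri.getPort();
    }

    public static void main(String[] args) {
        System.out.println(parts("hdfs://192.168.247.130:9000"));
    }
}
```

A check like this fails fast on a mistyped address instead of surfacing later as a connection error.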

1.6 Notes

If IDEA prints no logs and the console shows only the following messages:

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).
log4j:WARN Please initialize the log4j system properly.
log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

create a file named "log4j.properties" in the project's src/main/resources directory with the following content:

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
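In this file, only appenders named on the log4j.rootLogger line receive output from the root logger; an appender that is defined but not listed there stays inactive. The sketch below, which uses only java.util.Properties and a hypothetical helper class RootAppenders, lists the appenders attached to the root logger. Applied to the configuration above it reports only stdout, so the logfile appender writes nothing unless it is added to the rootLogger line.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

public class RootAppenders {

    /** Returns the appender names listed after the level in log4j.rootLogger. */
    public static List<String> attached(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new IllegalStateException(e); // not expected for an in-memory reader
        }
        List<String> names = new ArrayList<>();
        String root = props.getProperty("log4j.rootLogger", "");
        String[] tokens = root.split(",");
        // tokens[0] is the level (e.g. INFO); the remaining tokens are appender names
        for (int i = 1; i < tokens.length; i++) {
            String name = tokens[i].trim();
            if (!name.isEmpty()) {
                names.add(name);
            }
        }
        return names;
    }

    public static void main(String[] args) {
        String text = "log4j.rootLogger=INFO, stdout\n"
                + "log4j.appender.stdout=org.apache.log4j.ConsoleAppender\n"
                + "log4j.appender.logfile=org.apache.log4j.FileAppender\n";
        System.out.println(attached(text));
    }
}
```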

2. Usage

2.1 HDFS file upload

@Test
public void upLoad() throws URISyntaxException, IOException, InterruptedException {
    Configuration configuration = new Configuration();
    // Store 1 replica per block instead of the default 3
    configuration.set("dfs.replication", "1");
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // Upload the local file into the /myApi directory
    fs.copyFromLocalFile(new Path("D:\\study\\codes\\hadoop\\HdfsClientDemo\\data\\hdfsDemo\\test.txt"), new Path("/myApi/"));

    // Release resources
    fs.close();
    System.out.println("ok");
}

2.2 HDFS file download

@Test
public void downLoad() throws URISyntaxException, IOException, InterruptedException {
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // Download the file. Parameters of copyToLocalFile:
    //   boolean delSrc                - whether to delete the source file after copying
    //   Path src                      - HDFS path of the file to download
    //   Path dst                      - local path to download to
    //   boolean useRawLocalFileSystem - whether to write through the raw local file system;
    //                                   true skips creating the local .crc checksum file
    fs.copyToLocalFile(false, new Path("/myApi/test.txt"), new Path("D:\\study\\codes\\hadoop\\HdfsClientDemo\\HdfsTest"), true);

    fs.close();
}
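When the last argument of copyToLocalFile is false, the copy goes through Hadoop's checksummed local file system, which keeps checksums in a hidden sidecar file named "." + file name + ".crc" next to the data file. The helper below is a hypothetical JDK-only sketch of that naming rule, useful for checking whether a download left such a file behind; it does not call Hadoop itself, and the relative path in main is a made-up example.

```java
import java.io.File;

public class CrcSidecar {

    /** Returns the checksum sidecar file that would sit beside the given local file. */
    public static File sidecarOf(File dataFile) {
        return new File(dataFile.getParentFile(), "." + dataFile.getName() + ".crc");
    }

    public static void main(String[] args) {
        File downloaded = new File("HdfsTest", "test.txt");
        File crc = sidecarOf(downloaded);
        System.out.println(crc.getName() + " exists: " + crc.exists());
    }
}
```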

2.3 HDFS directory deletion

@Test
public void dRemove() throws URISyntaxException, IOException, InterruptedException {
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // Delete the directory; true means recursive, so its contents are deleted as well
    fs.delete(new Path("/myApi/remove"), true);

    fs.close();
}

2.4 HDFS file rename

@Test
public void fRename() throws URISyntaxException, IOException, InterruptedException {
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // Rename the file
    fs.rename(new Path("/myApi/test.txt"), new Path("/myApi/testRename.txt"));

    fs.close();
}

2.5 Viewing HDFS file details

@Test
public void testListFiles() throws IOException, URISyntaxException, InterruptedException {
    // Also requires imports of org.apache.hadoop.fs.RemoteIterator, LocatedFileStatus and BlockLocation
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // List file details recursively, starting from the root directory
    RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);
    while (listFiles.hasNext()) {
        LocatedFileStatus status = listFiles.next();

        // File name
        System.out.println(status.getPath().getName());
        // Length in bytes
        System.out.println(status.getLen());
        // Permissions
        System.out.println(status.getPermission());
        // Group
        System.out.println(status.getGroup());

        // Block locations of the file
        BlockLocation[] blockLocations = status.getBlockLocations();
        for (BlockLocation blockLocation : blockLocations) {
            // Hosts that store this block
            String[] hosts = blockLocation.getHosts();
            for (String host : hosts) {
                System.out.println(host);
            }
        }
        System.out.println("-------------------------------");
    }

    fs.close();
}

2.6 Distinguishing files from directories in HDFS

@Test
public void testListStatus() throws URISyntaxException, IOException, InterruptedException {
    // Also requires an import of org.apache.hadoop.fs.FileStatus
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // Check whether each entry directly under / is a file or a directory
    FileStatus[] listStatus = fs.listStatus(new Path("/"));
    for (FileStatus fileStatus : listStatus) {
        if (fileStatus.isFile()) {
            System.out.println("f:" + fileStatus.getPath().getName());
        } else {
            System.out.println("d:" + fileStatus.getPath().getName());
        }
    }

    fs.close();
}
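The two listing calls differ in scope: listFiles(path, true) walks the tree recursively and yields only files, while listStatus(path) returns the direct children of one directory, files and directories alike. As a local analogy only (java.nio.file on a temporary directory, not the Hadoop API), the sketch below contrasts a recursive files-only walk with a one-level listing. The class LocalListingDemo and the file names are made up for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class LocalListingDemo {

    /** Recursive, files only: the local counterpart of listFiles(path, true). */
    public static List<String> filesRecursive(Path root) {
        try (Stream<Path> walk = Files.walk(root)) {
            return walk.filter(Files::isRegularFile)
                    .map(p -> p.getFileName().toString())
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** One level, files and directories: the local counterpart of listStatus(path). */
    public static List<String> childrenWithType(Path dir) {
        try (Stream<Path> list = Files.list(dir)) {
            return list.map(p -> (Files.isDirectory(p) ? "d:" : "f:") + p.getFileName())
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Builds root/a.txt and root/sub/b.txt in a fresh temporary directory. */
    public static Path sampleTree() {
        try {
            Path root = Files.createTempDirectory("listing-demo");
            Files.createFile(root.resolve("a.txt"));
            Path sub = Files.createDirectory(root.resolve("sub"));
            Files.createFile(sub.resolve("b.txt"));
            return root;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path root = sampleTree();
        System.out.println(filesRecursive(root));   // files at every depth
        System.out.println(childrenWithType(root)); // direct children only
    }
}
```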

2.7 HDFS I/O stream operations

2.7.1 File upload

@Test
public void putFileToHDFS() throws URISyntaxException, IOException, InterruptedException {
    // Also requires imports of java.io.File, java.io.FileInputStream,
    // org.apache.hadoop.fs.FSDataOutputStream and org.apache.hadoop.io.IOUtils
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // Open an input stream on the local file
    FileInputStream fis = new FileInputStream(new File("D:\\study\\codes\\hadoop\\HdfsClientDemo\\HdfsTest\\test.txt"));

    // Open an output stream on the HDFS target file
    FSDataOutputStream fos = fs.create(new Path("/myApi/testIO.txt"));

    // Copy the stream contents
    IOUtils.copyBytes(fis, fos, configuration);

    // Release resources
    IOUtils.closeStream(fis);
    IOUtils.closeStream(fos);
    fs.close();
}

2.7.2 File download

@Test
public void getFileFromHDFS() throws URISyntaxException, IOException, InterruptedException {
    // Also requires imports of java.io.File, java.io.FileOutputStream,
    // org.apache.hadoop.fs.FSDataInputStream and org.apache.hadoop.io.IOUtils
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");

    // Open an input stream on the HDFS file
    FSDataInputStream fis = fs.open(new Path("/myApi/testIO.txt"));

    // Open an output stream on the local target file
    FileOutputStream fos = new FileOutputStream(new File("D:\\study\\codes\\hadoop\\HdfsClientDemo\\HdfsTest\\IODownload.txt"));

    // Copy from one stream to the other
    IOUtils.copyBytes(fis, fos, configuration);

    // Release resources
    IOUtils.closeStream(fis);
    IOUtils.closeStream(fos);
    fs.close();
}
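IOUtils.copyBytes(in, out, conf) is essentially a buffered read/write loop whose buffer size comes from the configuration. A JDK-only sketch of the same loop follows, assuming a fixed 4096-byte buffer. The class StreamCopy is hypothetical, and this version leaves closing the streams to the caller.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;

public class StreamCopy {

    /** Copies all bytes from in to out and returns how many were copied. */
    public static long copy(InputStream in, OutputStream out) {
        byte[] buffer = new byte[4096];
        long total = 0;
        try {
            int n;
            // read returns -1 at end of stream, otherwise the number of bytes placed in buffer
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) {
        byte[] data = new byte[10_000];
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long copied = copy(new ByteArrayInputStream(data), out);
        System.out.println(copied + " bytes copied");
    }
}
```

The important detail is that each write uses the count returned by read, not the buffer length.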

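2.8 Reading a file from a given position

The point of this section is that an HDFS file can be read starting from any offset, which helps in understanding MapReduce input splits (InputSplit) and Spark partitions.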
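With a fixed block size, the byte range of each block follows directly from its index: block i starts at i × blockSize and runs for blockSize bytes, except that the last block ends at the file length. The sketch below computes these ranges in plain Java. The class BlockRanges is hypothetical, and the 128 MB block size and 300 MB file length are assumed example values.

```java
import java.util.ArrayList;
import java.util.List;

public class BlockRanges {

    /** Returns {offset, length} pairs covering a file of fileLength bytes. */
    public static List<long[]> ranges(long fileLength, long blockSize) {
        if (fileLength < 0 || blockSize <= 0) {
            throw new IllegalArgumentException("fileLength must be >= 0 and blockSize > 0");
        }
        List<long[]> result = new ArrayList<>();
        for (long offset = 0; offset < fileLength; offset += blockSize) {
            // The last range is shorter when the length is not a multiple of blockSize
            long length = Math.min(blockSize, fileLength - offset);
            result.add(new long[] {offset, length});
        }
        return result;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024;
        for (long[] r : ranges(300 * mb, 128 * mb)) {
            System.out.println("offset=" + r[0] + " length=" + r[1]);
        }
    }
}
```

A reader that seeks to a range's offset and copies exactly its length retrieves that block and nothing else.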

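First upload the Hadoop installation package to HDFS.

Download the first block:

@Test
public void readFileSeek1() throws URISyntaxException, IOException, InterruptedException {
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");
    FSDataInputStream fis = fs.open(new Path("/myApi/hadoop-2.6.0-cdh5.14.2.tar.gz"));
    FileOutputStream fos = new FileOutputStream(new File("C:\\Users\\Dongue\\Desktop\\seek\\hadoop-2.6.0-cdh5.14.2.tar.gz.part1"));

    // Copy exactly the first 128 MB (one block). read() may return fewer bytes
    // than requested, so write only the bytes that were actually read.
    byte[] buf = new byte[1024];
    long remaining = 1024L * 1024 * 128;
    while (remaining > 0) {
        int n = fis.read(buf, 0, (int) Math.min(buf.length, remaining));
        if (n < 0) {
            break; // end of file reached before 128 MB
        }
        fos.write(buf, 0, n);
        remaining -= n;
    }

    IOUtils.closeStream(fis);
    IOUtils.closeStream(fos);
    fs.close();
}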

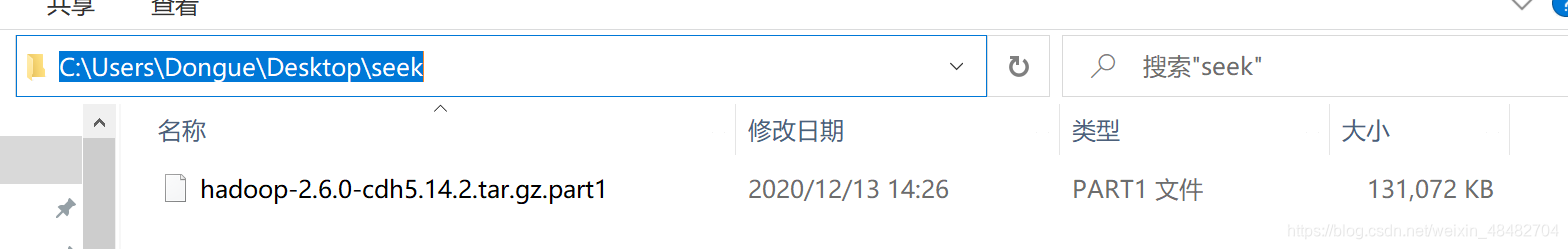

The download succeeds.
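InputStream.read(byte[]) is allowed to return fewer bytes than the buffer holds, so a loop that writes the whole buffer on every iteration can emit stale bytes and produce a part of the wrong size. The sketch below isolates a bounded copy that honors the returned count and exercises it against a deliberately short-reading stream. BoundedCopy and its trickle helper are hypothetical names, and only JDK classes are used.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;

public class BoundedCopy {

    /** Copies at most limit bytes from in to out; returns the number actually copied. */
    public static long copyAtMost(InputStream in, OutputStream out, long limit) {
        byte[] buf = new byte[1024];
        long copied = 0;
        try {
            while (copied < limit) {
                int want = (int) Math.min(buf.length, limit - copied);
                int n = in.read(buf, 0, want);
                if (n < 0) {
                    break; // source ended before the limit was reached
                }
                out.write(buf, 0, n); // write only what was read
                copied += n;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return copied;
    }

    /** Wraps a stream so that each read returns at most 7 bytes, simulating short reads. */
    public static InputStream trickle(InputStream in) {
        return new FilterInputStream(in) {
            @Override
            public int read(byte[] b, int off, int len) throws IOException {
                return super.read(b, off, Math.min(len, 7));
            }
        };
    }

    public static void main(String[] args) {
        byte[] data = new byte[5000];
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long n = copyAtMost(trickle(new ByteArrayInputStream(data)), out, 3000);
        System.out.println(n + " bytes copied, output size " + out.size());
    }
}
```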

Download the second block:

@Test
public void readFileSeek2() throws URISyntaxException, IOException, InterruptedException {
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://192.168.247.130:9000"), configuration, "root");
    FSDataInputStream fis = fs.open(new Path("/myApi/hadoop-2.6.0-cdh5.14.2.tar.gz"));

    // Position the input stream at the 128 MB offset, where the second block starts
    fis.seek(1024L * 1024 * 128);

    FileOutputStream fos = new FileOutputStream(new File("C:\\Users\\Dongue\\Desktop\\seek\\hadoop-2.6.0-cdh5.14.2.tar.gz.part2"));

    // Copy everything from that offset to the end of the file
    IOUtils.copyBytes(fis, fos, configuration);

    IOUtils.closeStream(fis);
    IOUtils.closeStream(fos);
    fs.close();
}

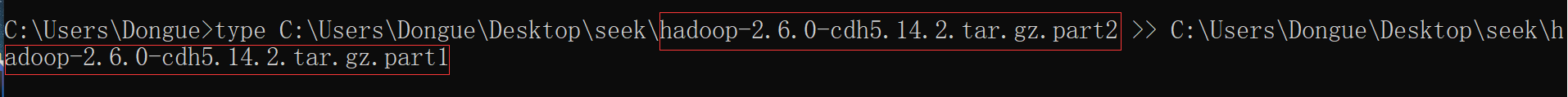

Merge the files

In a Windows command prompt, run:

type hadoop-2.6.0-cdh5.14.2.tar.gz.part2 >> hadoop-2.6.0-cdh5.14.2.tar.gz.part1

After the merge, the part1 file contains the complete Hadoop installation package.
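The same merge can also be done from Java. The following sketch appends one part to another with java.nio.file. The class PartMerger and its roundTrip demo helper are illustrative assumptions, and the part file paths are expected as command-line arguments.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;

public class PartMerger {

    /** Appends the bytes of second to the end of first, like "type second >> first". */
    public static long append(Path first, Path second) {
        // APPEND without CREATE: first must already exist
        try (OutputStream out = Files.newOutputStream(first, StandardOpenOption.APPEND)) {
            return Files.copy(second, out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Writes both arrays to temporary files, merges them, and returns the merged bytes. */
    public static byte[] roundTrip(byte[] part1, byte[] part2) {
        try {
            Path first = Files.createTempFile("demo", ".part1");
            Path second = Files.createTempFile("demo", ".part2");
            Files.write(first, part1);
            Files.write(second, part2);
            append(first, second);
            return Files.readAllBytes(first);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        if (args.length != 2) {
            System.out.println("usage: PartMerger <part1> <part2>");
            return;
        }
        long appended = append(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println(appended + " bytes appended to " + args[0]);
    }
}
```

As with the type command, the order matters: the second part is appended to the first, never the other way round.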