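1. Istio Basics

1.1 Service Mesh

The part of a service mesh that manages network traffic between service instances is called the data plane.

The control plane generates and deploys the configuration that controls the behavior of the data plane.

The control plane typically includes an API, a command-line interface, and a graphical user interface for managing the application.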

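1.2 Why Use a Service Mesh

For a typical application deployed on Kubernetes, traffic falls into two main categories:

- North-south traffic, which enters the mesh through the Ingress Gateway and leaves it through the Egress Gateway

- East-west traffic inside the mesh

Without a service mesh, the following problems may arise:

- Communication between services cannot be guaranteed to be secure

- Tracing communication latency is very difficult

- Load-balancing capabilities are limited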

1.3 Service Mesh Features

- Traffic management

  - Protocol: layer 7 (HTTP, gRPC)

  - Dynamic routing: conditional, weighting, mirroring

  - Resiliency: timeouts, retries, circuit breakers

  - Policy: access control, rate limits, quotas

  - Testing: fault injection

- Security

  - Encryption: mTLS, certificate management, external CA

  - Strong identity: SPIFFE or similar

  - Auth: authentication, authorisation

- Observability

  - Metrics: golden metrics, Prometheus, Grafana

  - Tracing: trace across workloads

  - Traffic: cluster, ingress/egress

- Mesh

  - Supported compute: Kubernetes, virtual machines

  - Multi-cluster: gateways, federation

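1.4 Istio Architecture

An Istio service mesh is logically split into a data plane and a control plane.

- Istiod: the control plane, which combines several components to implement the control mechanisms.

- Envoy: the data plane, an intelligent proxy that runs as a sidecar alongside the service process.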

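1.5 Istio Modules

Since Istio 1.5 these modules are bundled into the single istiod binary, which is why only an istiod pod appears in the installation in section 4.

- Pilot

  - Service discovery

  - Sidecar configuration

  - Traffic management

    - A/B testing

    - Failover

    - Fault injection

    - Canary rollout

    - Circuit breaker

    - Retries

    - Timeouts

- Citadel (security)

  - Certificate issuance, rotation, and revocation

- Galley

  A middle layer that decouples Pilot from the underlying platform and translates configuration between the two.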

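1.6 Data Plane Components

Their configuration is pushed down by the control plane.

- Ingress Gateway (required)

- Egress Gateway (optional)

- Sidecar proxy (communication inside the mesh)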

2. Kiali

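2.1 Istio Visualization Components

- Grafana

- Kiali

  - Service topology graph

  - Distributed tracing

  - Metrics collection and charts

  - Configuration validation

  - Health checks and health display

2.2 Kiali

Kiali is written in Go and consists of two components:

- Kiali front-end: a web UI that queries the back-end and presents the results to the user

- Kiali back-end: the back-end application, which communicates with Istio components, retrieves and processes data, and exposes it to the front-end

Kiali depends on external services and components provided by Kubernetes and Istio:

- Prometheus: Kiali queries the metrics stored in Prometheus to build the network topology, display metrics, and compute health status and possible problems

- Cluster API: used to fetch and interpret the service mesh configuration

- Jaeger: used to query and display tracing data, provided that tracing is enabled in Istio

- Grafana: Kiali calls Grafana to display metric data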

3. Kubernetes CRD

All of Istio's routing rules and control policies are implemented as Kubernetes CRDs, so the definitions of these configurations are stored in etcd, the backing store of kube-apiserver.

- This means that kube-apiserver also serves as Istio's API server

- Galley loads the configuration from kube-apiserver and distributes it

Istio provides many CRDs, which belong to different functional groups:

- Networking (networking.istio.io): traffic management, 8 CRs

  - VirtualService, DestinationRule, Gateway

  - ServiceEntry, Sidecar, EnvoyFilter

  - WorkloadEntry, WorkloadGroup

- Security (security.istio.io): mesh security, 3 CRs

  - AuthorizationPolicy, PeerAuthentication, RequestAuthentication

- Telemetry (telemetry.istio.io): mesh telemetry, 1 CR

  - Telemetry

- Extensions (extensions.istio.io): extension mechanism, 1 CR

  - WasmPlugin

- IstioOperator (install.istio.io): manages the Istio installation, 1 CR

  - IstioOperator
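The grouping above can be checked against a real cluster by splitting each CRD name into its plural and its API group. The sketch below is a minimal illustration, not an Istio tool: it uses the CRD names printed by the uninstall log in section 8.2 of this document, and the helper name `group_crds` is made up for the example. Note that this log also lists `proxyconfigs.networking.istio.io`, so the per-group counts for a given release may differ from the figures in the list above.

```python
from collections import defaultdict

# CRD names copied from the uninstall log in section 8.2 of this document.
CRD_NAMES = [
    "authorizationpolicies.security.istio.io",
    "destinationrules.networking.istio.io",
    "envoyfilters.networking.istio.io",
    "gateways.networking.istio.io",
    "istiooperators.install.istio.io",
    "peerauthentications.security.istio.io",
    "proxyconfigs.networking.istio.io",
    "requestauthentications.security.istio.io",
    "serviceentries.networking.istio.io",
    "sidecars.networking.istio.io",
    "telemetries.telemetry.istio.io",
    "virtualservices.networking.istio.io",
    "wasmplugins.extensions.istio.io",
    "workloadentries.networking.istio.io",
    "workloadgroups.networking.istio.io",
]


def group_crds(names):
    """Split each '<plural>.<group>' CRD name and bucket the plural by group."""
    groups = defaultdict(list)
    for name in names:
        plural, group = name.split(".", 1)
        groups[group].append(plural)
    return dict(groups)


GROUPS = group_crds(CRD_NAMES)
for group, plurals in sorted(GROUPS.items()):
    print(f"{group}: {len(plurals)} -> {', '.join(plurals)}")
```

On a live cluster the same list could be fed from the names returned by `kubectl get crd`, filtered to those ending in `istio.io`.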

4. Deploy Istio

4.1 Deployment Methods

- istioctl

- Istio Operator

- Helm

4.2 Deploy with istioctl

# mkdir /apps

# cd /apps

# curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.13.3 TARGET_ARCH=x86_64 sh -

# ln -sf /apps/istio-1.13.3 /apps/istio

# ln -sf /apps/istio/bin/istioctl /usr/bin/

4.3 List Istio's Built-in Profiles

# istioctl profile list

Istio configuration profiles:

default

demo

empty

external

minimal

openshift

preview

remote

4.4 Install Istio

# istioctl install -s profile=demo -y

✔ Istio core installed

✔ Istiod installed

✔ Ingress gateways installed

✔ Egress gateways installed

✔ Installation complete

Making this installation the default for injection and validation.

Thank you for installing Istio 1.13. Please take a few minutes to tell us about your install/upgrade experience! https://forms.gle/pzWZpAvMVBecaQ9h9

# kubectl get pods -n istio-system

NAME READY STATUS RESTARTS AGE

istio-egressgateway-5c597cdb77-48bj8 1/1 Running 0 95s

istio-ingressgateway-8d7d49b55-bmbx5 1/1 Running 0 95s

istiod-54c54679d7-78f2d 1/1 Running 0 119s

4.5 Install the Add-ons

# kubectl apply -f samples/addons/

serviceaccount/grafana created

configmap/grafana created

service/grafana created

deployment.apps/grafana created

configmap/istio-grafana-dashboards created

configmap/istio-services-grafana-dashboards created

deployment.apps/jaeger created

service/tracing created

service/zipkin created

service/jaeger-collector created

serviceaccount/kiali created

configmap/kiali created

clusterrole.rbac.authorization.k8s.io/kiali-viewer created

clusterrole.rbac.authorization.k8s.io/kiali created

clusterrolebinding.rbac.authorization.k8s.io/kiali created

role.rbac.authorization.k8s.io/kiali-controlplane created

rolebinding.rbac.authorization.k8s.io/kiali-controlplane created

service/kiali created

deployment.apps/kiali created

serviceaccount/prometheus created

configmap/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus configured

clusterrolebinding.rbac.authorization.k8s.io/prometheus configured

service/prometheus created

deployment.apps/prometheus created

# kubectl get svc -n istio-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana ClusterIP 10.200.62.191 <none> 3000/TCP 70s

istio-egressgateway ClusterIP 10.200.29.127 <none> 80/TCP,443/TCP 8m58s

istio-ingressgateway LoadBalancer 10.200.239.76 <pending> 15021:49708/TCP,80:42116/TCP,443:61970/TCP,31400:56204/TCP,15443:45720/TCP 8m58s

istiod ClusterIP 10.200.249.97 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 9m23s

jaeger-collector ClusterIP 10.200.4.90 <none> 14268/TCP,14250/TCP,9411/TCP 70s

kiali ClusterIP 10.200.132.85 <none> 20001/TCP,9090/TCP 69s

prometheus ClusterIP 10.200.99.200 <none> 9090/TCP 69s

tracing ClusterIP 10.200.160.255 <none> 80/TCP,16685/TCP 70s

zipkin ClusterIP 10.200.92.91 <none> 9411/TCP 70s

# kubectl get pods -n istio-system

NAME READY STATUS RESTARTS AGE

grafana-6c5dc6df7c-96cwc 1/1 Running 0 2m31s

istio-egressgateway-5c597cdb77-48bj8 1/1 Running 0 10m

istio-ingressgateway-8d7d49b55-bmbx5 1/1 Running 0 10m

istiod-54c54679d7-78f2d 1/1 Running 0 10m

jaeger-9dd685668-hmg9c 1/1 Running 0 2m31s

kiali-699f98c497-wjtwl 1/1 Running 0 2m30s

prometheus-699b7cc575-5d7gk 2/2 Running 0 2m30s

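4.6 Create a Namespace

Create the namespace and label it with istio-injection=enabled so that pods created in it get a sidecar injected automatically.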

root@k8s-master-01:/apps/istio# kubectl create ns hr

namespace/hr created

root@k8s-master-01:/apps/istio# kubectl label ns hr istio-injection=enabled

namespace/hr labeled

4.7 Deploy a Test Workload

Start a test pod. A sidecar container is injected into it automatically.

root@k8s-master-01:/apps/istio# kubectl apply -f samples/sleep/sleep.yaml -n hr

serviceaccount/sleep created

service/sleep created

deployment.apps/sleep created

root@k8s-master-01:/apps/istio# kubectl get pods -n hr

NAME READY STATUS RESTARTS AGE

sleep-557747455f-k8qzc 2/2 Running 0 32s

root@k8s-master-01:/apps/istio# kubectl get pods -n hr sleep-557747455f-k8qzc -o yaml

... (omitted)

  containerStatuses:
  - containerID: docker://4f376791fe667e23910a512710839e82913df2191fc8fc288e9bfd441e9f1b23
    image: istio/proxyv2:1.13.3
    imageID: docker-pullable://istio/proxyv2@sha256:e8986efce46a7e1fcaf837134f453ea2b5e0750a464d0f2405502f8ddf0e2cd2
    lastState: {}
    name: istio-proxy
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2022-10-11T09:05:13Z"

root@k8s-master-01:/apps/istio# istioctl ps

NAME CLUSTER CDS LDS EDS RDS ISTIOD VERSION

istio-egressgateway-5c597cdb77-48bj8.istio-system Kubernetes SYNCED SYNCED SYNCED NOT SENT istiod-54c54679d7-78f2d 1.13.3

istio-ingressgateway-8d7d49b55-bmbx5.istio-system Kubernetes SYNCED SYNCED SYNCED NOT SENT istiod-54c54679d7-78f2d 1.13.3

sleep-557747455f-k8qzc.hr Kubernetes SYNCED SYNCED SYNCED SYNCED istiod-54c54679d7-78f2d 1.13.3
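When the mesh has many proxies it helps to filter this table for anything that is not fully synced. The following is a rough sketch that parses the text shown above; the function names are invented for the example and it assumes the column layout of this 1.13 output. `NOT SENT` on the RDS column of the gateways is expected here, since istiod has no routes to send them yet.

```python
import re

# Sample `istioctl ps` output copied from the transcript above.
PS_OUTPUT = """\
NAME CLUSTER CDS LDS EDS RDS ISTIOD VERSION
istio-egressgateway-5c597cdb77-48bj8.istio-system Kubernetes SYNCED SYNCED SYNCED NOT SENT istiod-54c54679d7-78f2d 1.13.3
istio-ingressgateway-8d7d49b55-bmbx5.istio-system Kubernetes SYNCED SYNCED SYNCED NOT SENT istiod-54c54679d7-78f2d 1.13.3
sleep-557747455f-k8qzc.hr Kubernetes SYNCED SYNCED SYNCED SYNCED istiod-54c54679d7-78f2d 1.13.3
"""

XDS_COLUMNS = ("CDS", "LDS", "EDS", "RDS")


def parse_proxy_status(text):
    """Return {proxy name: {xDS type: status}} from `istioctl ps` text."""
    lines = text.strip().splitlines()
    header = lines[0].split()
    result = {}
    for line in lines[1:]:
        # "NOT SENT" contains a space; join it so whitespace splitting works.
        fields = re.sub(r"\bNOT SENT\b", "NOT_SENT", line).split()
        row = dict(zip(header, fields))
        result[row["NAME"]] = {
            col: row[col].replace("_", " ") for col in XDS_COLUMNS
        }
    return result


def not_fully_synced(status):
    """List proxies where at least one xDS type is not SYNCED."""
    return sorted(
        name
        for name, xds in status.items()
        if any(state != "SYNCED" for state in xds.values())
    )


STATUS = parse_proxy_status(PS_OUTPUT)
print(not_fully_synced(STATUS))
```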

View the listeners on the sidecar:

root@k8s-master-01:/apps/istio# istioctl pc listener sleep-557747455f-k8qzc.hr

ADDRESS PORT MATCH DESTINATION

10.200.0.2 53 ALL Cluster: outbound|53||kube-dns.kube-system.svc.cluster.local

0.0.0.0 80 Trans: raw_buffer; App: http/1.1,h2c Route: 80

0.0.0.0 80 ALL PassthroughCluster

10.200.103.146 80 Trans: raw_buffer; App: http/1.1,h2c Route: tomcat-service.default.svc.cluster.local:80

10.200.103.146 80 ALL Cluster: outbound|80||tomcat-service.default.svc.cluster.local

10.200.0.1 443 ALL Cluster: outbound|443||kubernetes.default.svc.cluster.local

10.200.133.113 443 Trans: raw_buffer; App: http/1.1,h2c Route: kubernetes-dashboard.kubernetes-dashboard.svc.cluster.local:443

10.200.133.113 443 ALL Cluster: outbound|443||kubernetes-dashboard.kubernetes-dashboard.svc.cluster.local

10.200.176.11 443 ALL Cluster: outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

10.200.239.76 443 ALL Cluster: outbound|443||istio-ingressgateway.istio-system.svc.cluster.local

10.200.249.97 443 ALL Cluster: outbound|443||istiod.istio-system.svc.cluster.local

10.200.29.127 443 ALL Cluster: outbound|443||istio-egressgateway.istio-system.svc.cluster.local

10.200.91.80 443 ALL Cluster: outbound|443||metrics-server.kube-system.svc.cluster.local

10.200.62.191 3000 Trans: raw_buffer; App: http/1.1,h2c Route: grafana.istio-system.svc.cluster.local:3000

10.200.62.191 3000 ALL Cluster: outbound|3000||grafana.istio-system.svc.cluster.local

192.168.31.101 4194 Trans: raw_buffer; App: http/1.1,h2c Route: kubelet.kube-system.svc.cluster.local:4194

192.168.31.101 4194 ALL Cluster: outbound|4194||kubelet.kube-system.svc.cluster.local

192.168.31.102 4194 Trans: raw_buffer; App: http/1.1,h2c Route: kubelet.kube-system.svc.cluster.local:4194

192.168.31.102 4194 ALL Cluster: outbound|4194||kubelet.kube-system.svc.cluster.local

192.168.31.103 4194 Trans: raw_buffer; App: http/1.1,h2c Route: kubelet.kube-system.svc.cluster.local:4194

192.168.31.103 4194 ALL Cluster: outbound|4194||kubelet.kube-system.svc.cluster.local

192.168.31.111 4194 Trans: raw_buffer; App: http/1.1,h2c Route: kubelet.kube-system.svc.cluster.local:4194

192.168.31.111 4194 ALL Cluster: outbound|4194||kubelet.kube-system.svc.cluster.local

192.168.31.112 4194 Trans: raw_buffer; App: http/1.1,h2c Route: kubelet.kube-system.svc.cluster.local:4194

192.168.31.112 4194 ALL Cluster: outbound|4194||kubelet.kube-system.svc.cluster.local

192.168.31.113 4194 Trans: raw_buffer; App: http/1.1,h2c Route: kubelet.kube-system.svc.cluster.local:4194

192.168.31.113 4194 ALL Cluster: outbound|4194||kubelet.kube-system.svc.cluster.local

192.168.31.114 4194 Trans: raw_buffer; App: http/1.1,h2c Route: kubelet.kube-system.svc.cluster.local:4194

192.168.31.114 4194 ALL Cluster: outbound|4194||kubelet.kube-system.svc.cluster.local

10.200.41.11 8000 Trans: raw_buffer; App: http/1.1,h2c Route: dashboard-metrics-scraper.kubernetes-dashboard.svc.cluster.local:8000

10.200.41.11 8000 ALL Cluster: outbound|8000||dashboard-metrics-scraper.kubernetes-dashboard.svc.cluster.local

0.0.0.0 8080 Trans: raw_buffer; App: http/1.1,h2c Route: 8080

0.0.0.0 8080 ALL PassthroughCluster

10.200.98.90 8080 Trans: raw_buffer; App: http/1.1,h2c Route: kube-state-metrics.kube-system.svc.cluster.local:8080

10.200.98.90 8080 ALL Cluster: outbound|8080||kube-state-metrics.kube-system.svc.cluster.local

0.0.0.0 9090 Trans: raw_buffer; App: http/1.1,h2c Route: 9090

0.0.0.0 9090 ALL PassthroughCluster

10.200.241.145 9090 Trans: raw_buffer; App: http/1.1,h2c Route: prometheus.monitoring.svc.cluster.local:9090

10.200.241.145 9090 ALL Cluster: outbound|9090||prometheus.monitoring.svc.cluster.local

0.0.0.0 9100 Trans: raw_buffer; App: http/1.1,h2c Route: 9100

0.0.0.0 9100 ALL PassthroughCluster

10.200.0.2 9153 Trans: raw_buffer; App: http/1.1,h2c Route: kube-dns.kube-system.svc.cluster.local:9153

10.200.0.2 9153 ALL Cluster: outbound|9153||kube-dns.kube-system.svc.cluster.local

0.0.0.0 9411 Trans: raw_buffer; App: http/1.1,h2c Route: 9411

0.0.0.0 9411 ALL PassthroughCluster

192.168.31.101 10250 ALL Cluster: outbound|10250||kubelet.kube-system.svc.cluster.local

192.168.31.102 10250 ALL Cluster: outbound|10250||kubelet.kube-system.svc.cluster.local

192.168.31.103 10250 ALL Cluster: outbound|10250||kubelet.kube-system.svc.cluster.local

192.168.31.111 10250 ALL Cluster: outbound|10250||kubelet.kube-system.svc.cluster.local

192.168.31.112 10250 ALL Cluster: outbound|10250||kubelet.kube-system.svc.cluster.local

192.168.31.113 10250 ALL Cluster: outbound|10250||kubelet.kube-system.svc.cluster.local

192.168.31.114 10250 ALL Cluster: outbound|10250||kubelet.kube-system.svc.cluster.local

0.0.0.0 10255 Trans: raw_buffer; App: http/1.1,h2c Route: 10255

0.0.0.0 10255 ALL PassthroughCluster

10.200.4.90 14250 Trans: raw_buffer; App: http/1.1,h2c Route: jaeger-collector.istio-system.svc.cluster.local:14250

10.200.4.90 14250 ALL Cluster: outbound|14250||jaeger-collector.istio-system.svc.cluster.local

10.200.4.90 14268 Trans: raw_buffer; App: http/1.1,h2c Route: jaeger-collector.istio-system.svc.cluster.local:14268

10.200.4.90 14268 ALL Cluster: outbound|14268||jaeger-collector.istio-system.svc.cluster.local

0.0.0.0 15001 ALL PassthroughCluster

0.0.0.0 15001 Addr: *:15001 Non-HTTP/Non-TCP

0.0.0.0 15006 Addr: *:15006 Non-HTTP/Non-TCP

0.0.0.0 15006 Trans: tls; App: istio-http/1.0,istio-http/1.1,istio-h2; Addr: 0.0.0.0/0 InboundPassthroughClusterIpv4

0.0.0.0 15006 Trans: raw_buffer; App: http/1.1,h2c; Addr: 0.0.0.0/0 InboundPassthroughClusterIpv4

0.0.0.0 15006 Trans: tls; App: TCP TLS; Addr: 0.0.0.0/0 InboundPassthroughClusterIpv4

0.0.0.0 15006 Trans: raw_buffer; Addr: 0.0.0.0/0 InboundPassthroughClusterIpv4

0.0.0.0 15006 Trans: tls; Addr: 0.0.0.0/0 InboundPassthroughClusterIpv4

0.0.0.0 15006 Trans: tls; App: istio,istio-peer-exchange,istio-http/1.0,istio-http/1.1,istio-h2; Addr: *:80 Cluster: inbound|80||

0.0.0.0 15006 Trans: raw_buffer; Addr: *:80 Cluster: inbound|80||

0.0.0.0 15010 Trans: raw_buffer; App: http/1.1,h2c Route: 15010

0.0.0.0 15010 ALL PassthroughCluster

10.200.249.97 15012 ALL Cluster: outbound|15012||istiod.istio-system.svc.cluster.local

0.0.0.0 15014 Trans: raw_buffer; App: http/1.1,h2c Route: 15014

0.0.0.0 15014 ALL PassthroughCluster

0.0.0.0 15021 ALL Inline Route: /healthz/ready*

10.200.239.76 15021 Trans: raw_buffer; App: http/1.1,h2c Route: istio-ingressgateway.istio-system.svc.cluster.local:15021

10.200.239.76 15021 ALL Cluster: outbound|15021||istio-ingressgateway.istio-system.svc.cluster.local

0.0.0.0 15090 ALL Inline Route: /stats/prometheus*

10.200.239.76 15443 ALL Cluster: outbound|15443||istio-ingressgateway.istio-system.svc.cluster.local

0.0.0.0 16685 Trans: raw_buffer; App: http/1.1,h2c Route: 16685

0.0.0.0 16685 ALL PassthroughCluster

0.0.0.0 20001 Trans: raw_buffer; App: http/1.1,h2c Route: 20001

0.0.0.0 20001 ALL PassthroughCluster

10.200.239.76 31400 ALL Cluster: outbound|31400||istio-ingressgateway.istio-system.svc.cluster.local

View the endpoints:

root@k8s-master-01:~# istioctl pc ep sleep-557747455f-k8qzc.hr

ENDPOINT STATUS OUTLIER CHECK CLUSTER

10.200.92.91:9411 HEALTHY OK zipkin

127.0.0.1:15000 HEALTHY OK prometheus_stats

127.0.0.1:15020 HEALTHY OK agent

172.100.109.65:8443 HEALTHY OK outbound|443||kubernetes-dashboard.kubernetes-dashboard.svc.cluster.local

172.100.109.66:8080 HEALTHY OK outbound|8080||kube-state-metrics.kube-system.svc.cluster.local

172.100.109.73:9090 HEALTHY OK outbound|9090||prometheus.istio-system.svc.cluster.local

172.100.109.74:9411 HEALTHY OK outbound|9411||jaeger-collector.istio-system.svc.cluster.local

172.100.109.74:9411 HEALTHY OK outbound|9411||zipkin.istio-system.svc.cluster.local

172.100.109.74:14250 HEALTHY OK outbound|14250||jaeger-collector.istio-system.svc.cluster.local

172.100.109.74:14268 HEALTHY OK outbound|14268||jaeger-collector.istio-system.svc.cluster.local

172.100.109.74:16685 HEALTHY OK outbound|16685||tracing.istio-system.svc.cluster.local

172.100.109.74:16686 HEALTHY OK outbound|80||tracing.istio-system.svc.cluster.local

172.100.109.82:8080 HEALTHY OK outbound|8080||ehelp-tomcat-service.ehelp.svc.cluster.local

172.100.109.87:4443 HEALTHY OK outbound|443||metrics-server.kube-system.svc.cluster.local

172.100.140.74:8080 HEALTHY OK outbound|8080||ehelp-tomcat-service.ehelp.svc.cluster.local

172.100.183.184:15010 HEALTHY OK outbound|15010||istiod.istio-system.svc.cluster.local

172.100.183.184:15012 HEALTHY OK outbound|15012||istiod.istio-system.svc.cluster.local

172.100.183.184:15014 HEALTHY OK outbound|15014||istiod.istio-system.svc.cluster.local

172.100.183.184:15017 HEALTHY OK outbound|443||istiod.istio-system.svc.cluster.local

172.100.183.185:8080 HEALTHY OK outbound|80||istio-ingressgateway.istio-system.svc.cluster.local

172.100.183.185:8443 HEALTHY OK outbound|443||istio-ingressgateway.istio-system.svc.cluster.local

172.100.183.185:15021 HEALTHY OK outbound|15021||istio-ingressgateway.istio-system.svc.cluster.local

172.100.183.185:15443 HEALTHY OK outbound|15443||istio-ingressgateway.istio-system.svc.cluster.local

172.100.183.185:31400 HEALTHY OK outbound|31400||istio-ingressgateway.istio-system.svc.cluster.local

172.100.183.186:8080 HEALTHY OK outbound|80||istio-egressgateway.istio-system.svc.cluster.local

172.100.183.186:8443 HEALTHY OK outbound|443||istio-egressgateway.istio-system.svc.cluster.local

172.100.183.187:3000 HEALTHY OK outbound|3000||grafana.istio-system.svc.cluster.local

172.100.183.188:9090 HEALTHY OK outbound|9090||kiali.istio-system.svc.cluster.local

172.100.183.188:20001 HEALTHY OK outbound|20001||kiali.istio-system.svc.cluster.local

172.100.183.189:80 HEALTHY OK outbound|80||sleep.hr.svc.cluster.local

172.100.76.163:53 HEALTHY OK outbound|53||kube-dns.kube-system.svc.cluster.local

172.100.76.163:9153 HEALTHY OK outbound|9153||kube-dns.kube-system.svc.cluster.local

172.100.76.165:8000 HEALTHY OK outbound|8000||dashboard-metrics-scraper.kubernetes-dashboard.svc.cluster.local

172.100.76.167:9090 HEALTHY OK outbound|9090||prometheus.monitoring.svc.cluster.local

192.168.31.101:6443 HEALTHY OK outbound|443||kubernetes.default.svc.cluster.local

192.168.31.101:8443 HEALTHY OK outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

192.168.31.101:9100 HEALTHY OK outbound|9100||node-exporter.monitoring.svc.cluster.local

192.168.31.102:6443 HEALTHY OK outbound|443||kubernetes.default.svc.cluster.local

192.168.31.102:8443 HEALTHY OK outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

192.168.31.102:9100 HEALTHY OK outbound|9100||node-exporter.monitoring.svc.cluster.local

192.168.31.103:6443 HEALTHY OK outbound|443||kubernetes.default.svc.cluster.local

192.168.31.103:8443 HEALTHY OK outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

192.168.31.103:9100 HEALTHY OK outbound|9100||node-exporter.monitoring.svc.cluster.local

192.168.31.111:8443 HEALTHY OK outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

192.168.31.111:9100 HEALTHY OK outbound|9100||node-exporter.monitoring.svc.cluster.local

192.168.31.112:8443 HEALTHY OK outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

192.168.31.112:9100 HEALTHY OK outbound|9100||node-exporter.monitoring.svc.cluster.local

192.168.31.113:8443 HEALTHY OK outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

192.168.31.113:9100 HEALTHY OK outbound|9100||node-exporter.monitoring.svc.cluster.local

192.168.31.114:8443 HEALTHY OK outbound|443||ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

192.168.31.114:9100 HEALTHY OK outbound|9100||node-exporter.monitoring.svc.cluster.local

unix://./etc/istio/proxy/SDS HEALTHY OK sds-grpc

unix://./etc/istio/proxy/XDS HEALTHY OK xds-grpc

root@k8s-master-01:~# kubectl get pods -o wide -n hr

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

sleep-557747455f-k8qzc 2/2 Running 0 15h 172.100.183.189 192.168.31.103 <none> <none>

4.8 Access Services Through the Mesh

Enter the pod and access Kiali and Grafana through the mesh.

root@k8s-master-01:~# kubectl exec -it sleep-557747455f-k8qzc -n hr -- sh

/ $ curl kiali.istio-system:20001

<a href="/kiali/">Found</a>.

## Access Grafana

/ $ curl grafana.istio-system:3000

<!doctype html><html lang="en"><head><meta charset="utf-8"/><meta http-equiv="X-UA-Compatible" content="IE=edge,chrome=1"/><meta name="viewport" content="width=device-width"/><meta name="theme-color" content="#000"/><title>Grafana</title><base href="/"/><link rel="preload" href="public/fonts/roboto/RxZJdnzeo3R5zSexge8UUVtXRa8TVwTICgirnJhmVJw.woff2" as="font" crossorigin/><link rel="icon" type="image/png" href="public/img/fav32.png"/><link rel="apple-touch-icon" sizes="180x180" href="public/img/apple-touch-icon.png"/><link rel="mask-icon" href="public/img/grafana_mask_icon.svg" color="#F05A28"/><link rel="stylesheet" href="public/build/grafana.dark.fab5d6bbd438adca1160.css"/><script nonce="">performance.mark('frontend_boot_css_time_seconds');</script><meta name="apple-mobile-web-app-capable" content="yes"/><meta name="apple-mobile-web-app-status-bar-style" content="black"/><meta name="msapplication-TileColor" content="#2b5797"/><

(remaining output omitted)

5. Service Discovery Inside the Mesh

5.1 deploy-demoapp.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: demoappv10
    version: v1.0
  name: demoappv10
spec:
  progressDeadlineSeconds: 600
  replicas: 3
  selector:
    matchLabels:
      app: demoapp
      version: v1.0
  template:
    metadata:
      labels:
        app: demoapp
        version: v1.0
    spec:
      containers:
      - image: ikubernetes/demoapp:v1.0
        imagePullPolicy: IfNotPresent
        name: demoapp
        env:
        - name: "PORT"
          value: "8080"
        ports:
        - containerPort: 8080
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: demoappv10
spec:
  ports:
  - name: http
    port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: demoapp
    version: v1.0
  type: ClusterIP
---

5.2 Deploy and Check the Service Status

Once the deployment completes, each pod has an Envoy sidecar injected automatically.

# kubectl apply -f deploy-demoapp.yaml -n hr

deployment.apps/demoappv10 created

service/demoappv10 created

# kubectl get pod -n hr -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoappv10-6ff964cbff-bnlgx 2/2 Running 0 4m25s 172.100.183.190 192.168.31.103 <none> <none>

demoappv10-6ff964cbff-ccsnc 2/2 Running 0 4m25s 172.100.76.166 192.168.31.113 <none> <none>

demoappv10-6ff964cbff-vp7gw 2/2 Running 0 4m25s 172.100.109.77 192.168.31.111 <none> <none>

sleep-557747455f-k8qzc 2/2 Running 0 15h 172.100.183.189 192.168.31.103 <none> <none>

# kubectl get svc -n hr -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

demoappv10 ClusterIP 10.200.156.253 <none> 8080/TCP 2m55s app=demoapp,version=v1.0

sleep ClusterIP 10.200.210.162 <none> 80/TCP 15h app=sleep

The sleep pod's route table now shows port 8080 for demoapp.

# istioctl pc route sleep-557747455f-k8qzc.hr|grep demo

8080 demoappv10, demoappv10.hr + 1 more... /*

# istioctl pc cluster sleep-557747455f-k8qzc.hr|grep demo

demoappv10.hr.svc.cluster.local 8080 - outbound EDS

## The back-end endpoints have been discovered as well

# istioctl pc ep sleep-557747455f-k8qzc.hr|grep demo

172.100.109.77:8080 HEALTHY OK outbound|8080||demoappv10.hr.svc.cluster.local

172.100.183.190:8080 HEALTHY OK outbound|8080||demoappv10.hr.svc.cluster.local

172.100.76.166:8080 HEALTHY OK outbound|8080||demoappv10.hr.svc.cluster.local
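The cluster names in this output follow the pattern `direction|port|subset|host`, with an empty subset when no DestinationRule subset applies. As a small sketch of how to read them, the code below parses the three lines above and groups the healthy endpoints by service host. The helper names are made up for the example, and it only handles pipe-delimited cluster names such as those in the `grep demo` output, not entries like `agent` or `zipkin` from the full listing.

```python
from collections import defaultdict

# Lines copied from the `istioctl pc ep ... | grep demo` output above.
EP_OUTPUT = """\
172.100.109.77:8080 HEALTHY OK outbound|8080||demoappv10.hr.svc.cluster.local
172.100.183.190:8080 HEALTHY OK outbound|8080||demoappv10.hr.svc.cluster.local
172.100.76.166:8080 HEALTHY OK outbound|8080||demoappv10.hr.svc.cluster.local
"""


def parse_cluster_name(cluster):
    """Split 'direction|port|subset|host' into its four parts."""
    direction, port, subset, host = cluster.split("|")
    return {
        "direction": direction,
        "port": int(port),
        "subset": subset,
        "host": host,
    }


def endpoints_by_host(text):
    """Map each service host to the healthy endpoint addresses behind it."""
    table = defaultdict(list)
    for line in text.strip().splitlines():
        endpoint, status, _outlier, cluster = line.split()
        if status == "HEALTHY":
            table[parse_cluster_name(cluster)["host"]].append(endpoint)
    return dict(table)


ENDPOINTS = endpoints_by_host(EP_OUTPUT)
print(ENDPOINTS)
```

The three addresses match the pod IPs reported by `kubectl get pod -n hr -o wide`, which confirms that the sidecar sends traffic straight to the pods.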

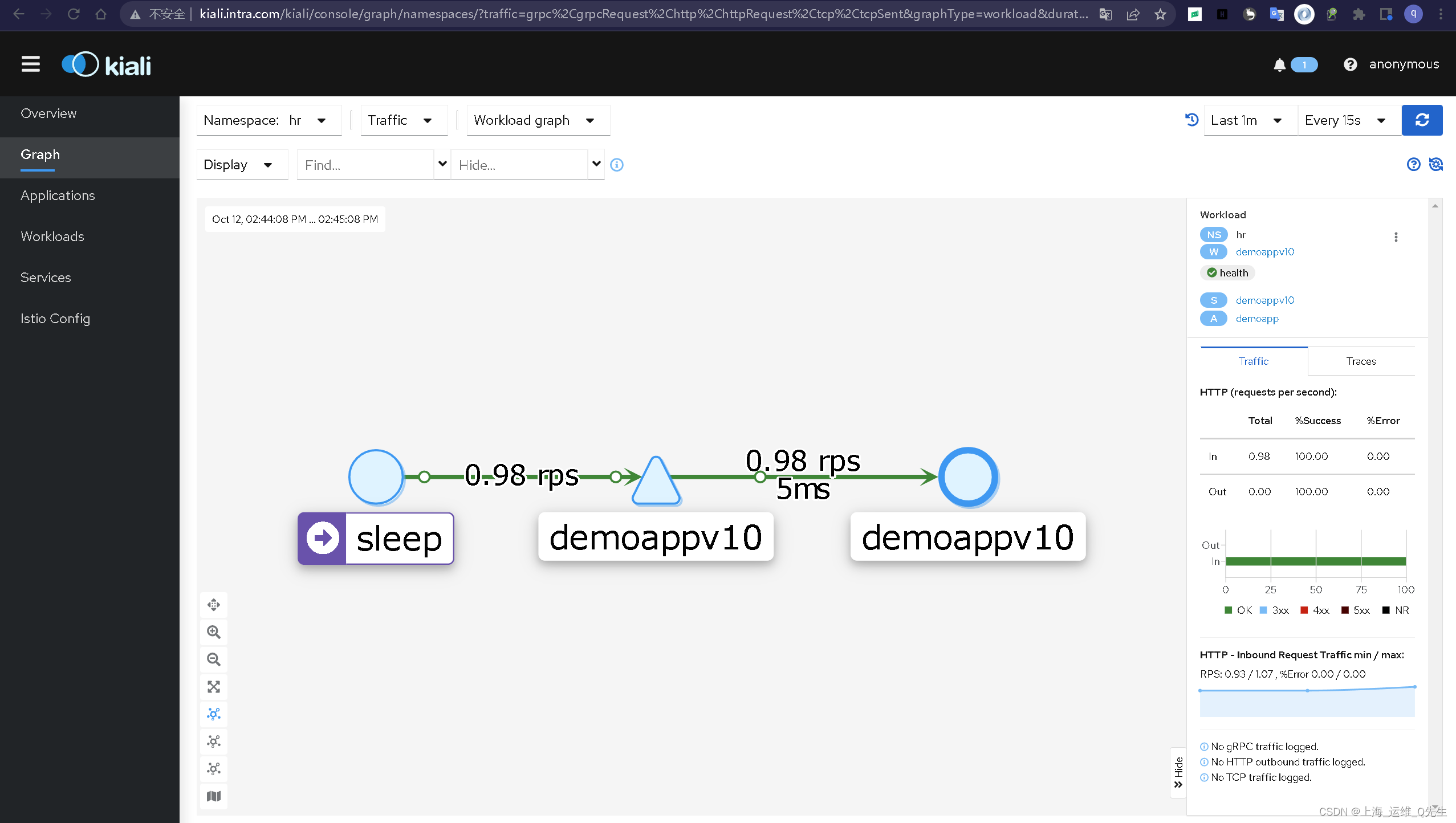

5.3 Access demoappv10 Through the Mesh from the sleep Pod

/ $ curl demoappv10:8080

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-bnlgx, ServerIP: 172.100.183.190!

/ $ curl demoappv10:8080

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-vp7gw, ServerIP: 172.100.109.77!

/ $ curl demoappv10:8080

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-vp7gw, ServerIP: 172.100.109.77!

/ $ curl demoappv10:8080

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-ccsnc, ServerIP: 172.100.76.166!

/ $ curl demoappv10:8080

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-bnlgx, ServerIP: 172.100.183.190!
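The responses come from different pods, which shows the sidecar spreading requests across the three endpoints. A quick way to quantify this over many requests is to tally the `ServerName` field. This is a minimal sketch that works on the five responses above; the regular expression assumes the demoapp response format shown in this transcript.

```python
import re
from collections import Counter

# The five responses copied from the curl session above.
RESPONSES = """\
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-bnlgx, ServerIP: 172.100.183.190!
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-vp7gw, ServerIP: 172.100.109.77!
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-vp7gw, ServerIP: 172.100.109.77!
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-ccsnc, ServerIP: 172.100.76.166!
iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-6ff964cbff-bnlgx, ServerIP: 172.100.183.190!
"""

# Captures the pod name and pod IP from one response line.
SERVER_RE = re.compile(r"ServerName: ([^,]+), ServerIP: ([0-9.]+)!")


def tally_servers(text):
    """Count how many responses each back-end pod produced."""
    return Counter(match.group(1) for match in SERVER_RE.finditer(text))


HITS = tally_servers(RESPONSES)
for pod, count in HITS.most_common():
    print(pod, count)
```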

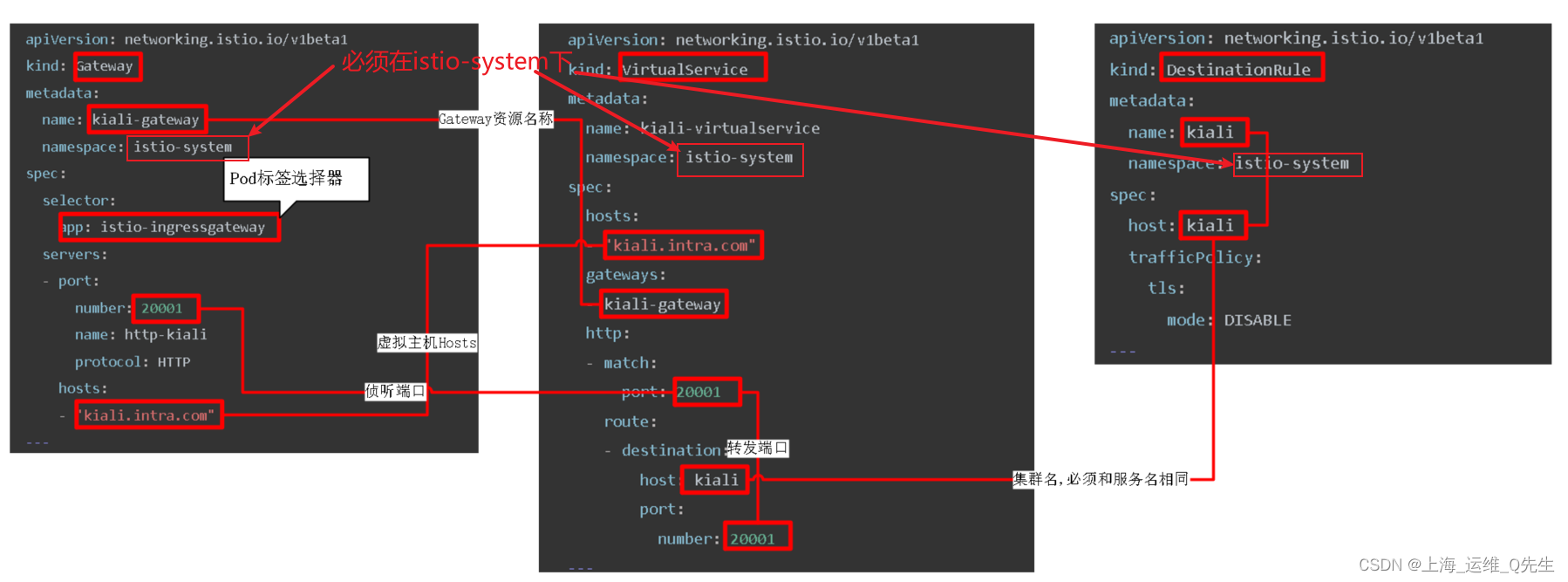

6. Expose Kiali Externally by Adding a Port to the Ingress Gateway

To expose a service inside the mesh to external clients, configure the following:

- Gateway

- VirtualService

- DestinationRule

6.1 Gateway

apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: kiali-gateway
  namespace: istio-system
spec:
  selector:
    app: istio-ingressgateway
  servers:
  - port:
      number: 20001
      name: http-kiali
      protocol: HTTP
    hosts:
    - "kiali.intra.com"
---

6.2 VirtualService

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: kiali-virtualservice
  namespace: istio-system
spec:
  hosts:
  - "kiali.intra.com"
  gateways:
  - kiali-gateway
  http:
  - match:
    - port: 20001
    route:
    - destination:
        host: kiali
        port:
          number: 20001
---

6.3 DestinationRule

apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: kiali
  namespace: istio-system
spec:
  host: kiali
  trafficPolicy:
    tls:
      mode: DISABLE
---

6.4 Deploy kiali-gateway

# kubectl apply -f .

destinationrule.networking.istio.io/kiali created

gateway.networking.istio.io/kiali-gateway created

virtualservice.networking.istio.io/kiali-virtualservice created

root@k8s-master-01:/apps/istio-in-practise/Traffic-Management-Basics/kiali# kubectl get svc -n istio-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana ClusterIP 10.200.62.191 <none> 3000/TCP 17h

istio-egressgateway ClusterIP 10.200.29.127 <none> 80/TCP,443/TCP 17h

istio-ingressgateway LoadBalancer 10.200.239.76 <pending> 15021:49708/TCP,80:42116/TCP,443:61970/TCP,31400:56204/TCP,15443:45720/TCP 17h

istiod ClusterIP 10.200.249.97 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 17h

jaeger-collector ClusterIP 10.200.4.90 <none> 14268/TCP,14250/TCP,9411/TCP 17h

kiali ClusterIP 10.200.132.85 <none> 20001/TCP,9090/TCP 17h

prometheus ClusterIP 10.200.99.200 <none> 9090/TCP 17h

tracing ClusterIP 10.200.160.255 <none> 80/TCP,16685/TCP 17h

zipkin ClusterIP 10.200.92.91 <none> 9411/TCP 17h

6.5 View the Listeners

A listener for Kiali has now been created on the ingress gateway.

# kubectl get pods -n istio-system

NAME READY STATUS RESTARTS AGE

grafana-6c5dc6df7c-96cwc 1/1 Running 0 20h

istio-egressgateway-5c597cdb77-48bj8 1/1 Running 0 21h

istio-ingressgateway-8d7d49b55-bmbx5 1/1 Running 0 21h

istiod-54c54679d7-78f2d 1/1 Running 0 21h

jaeger-9dd685668-hmg9c 1/1 Running 0 20h

kiali-699f98c497-wjtwl 1/1 Running 0 20h

prometheus-699b7cc575-5d7gk 2/2 Running 0 20h

# istioctl pc listener istio-ingressgateway-8d7d49b55-bmbx5.istio-system

ADDRESS PORT MATCH DESTINATION

0.0.0.0 15021 ALL Inline Route: /healthz/ready*

0.0.0.0 15090 ALL Inline Route: /stats/prometheus*

0.0.0.0 20001 ALL Route: http.20001

6.6 Add an External IP to the Service

# kubectl edit svc istio-ingressgateway -n istio-system

### Add the following under spec

spec:
  allocateLoadBalancerNodePorts: true
  clusterIP: 10.200.239.76
  clusterIPs:
  - 10.200.239.76
  ## Add the following two lines
  externalIPs:
  - 192.168.31.163
  ## Under ports:, add the following entry
  - name: kiali
    nodePort: 43230
    port: 20001
    protocol: TCP
    targetPort: 20001

# kubectl get svc -n istio-system |grep ingress

istio-ingressgateway LoadBalancer 10.200.239.76 192.168.31.163 15021:49708/TCP,80:42116/TCP,443:61970/TCP,31400:56204/TCP,15443:45720/TCP,20001:43230/TCP 21h

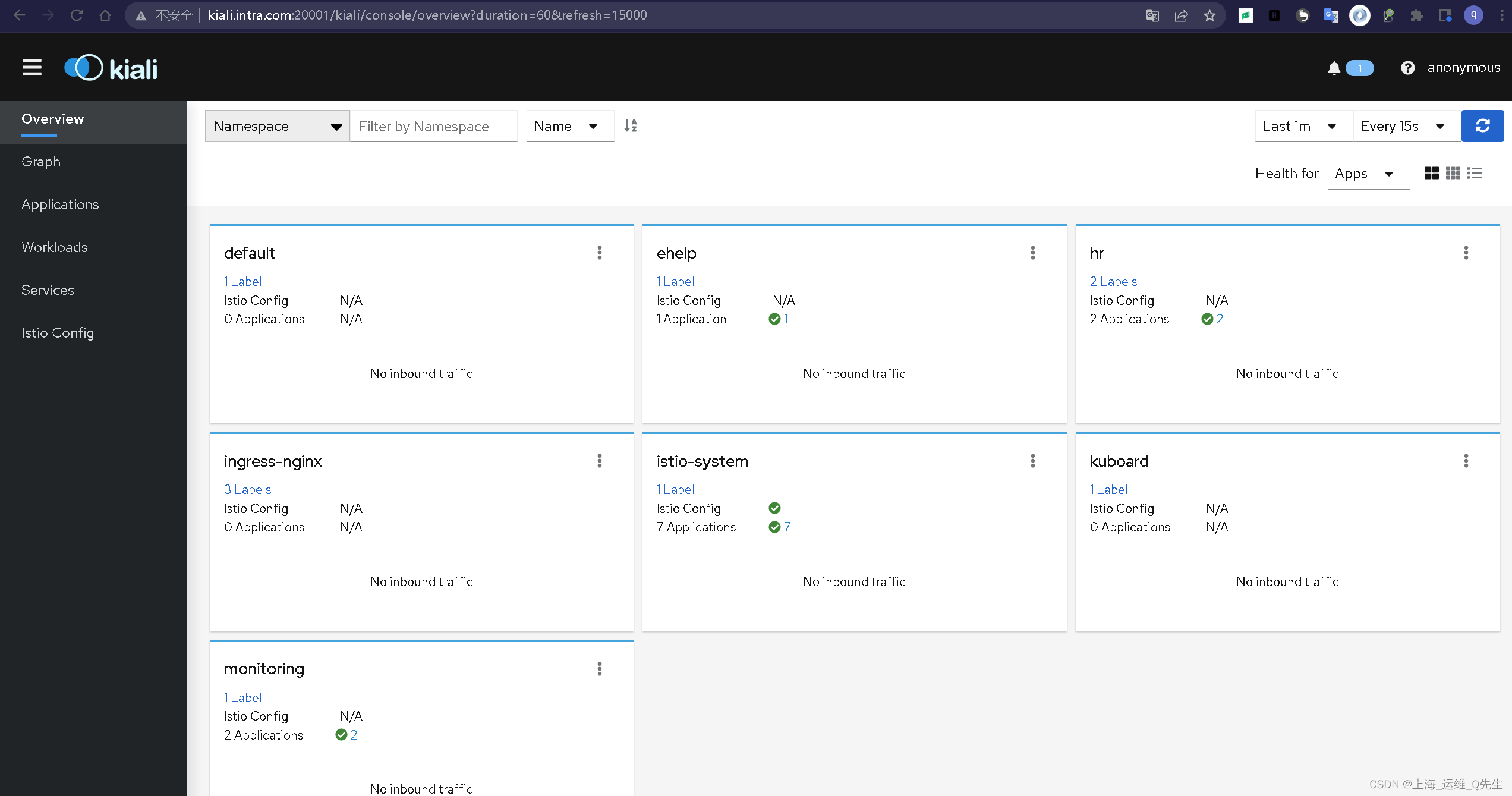

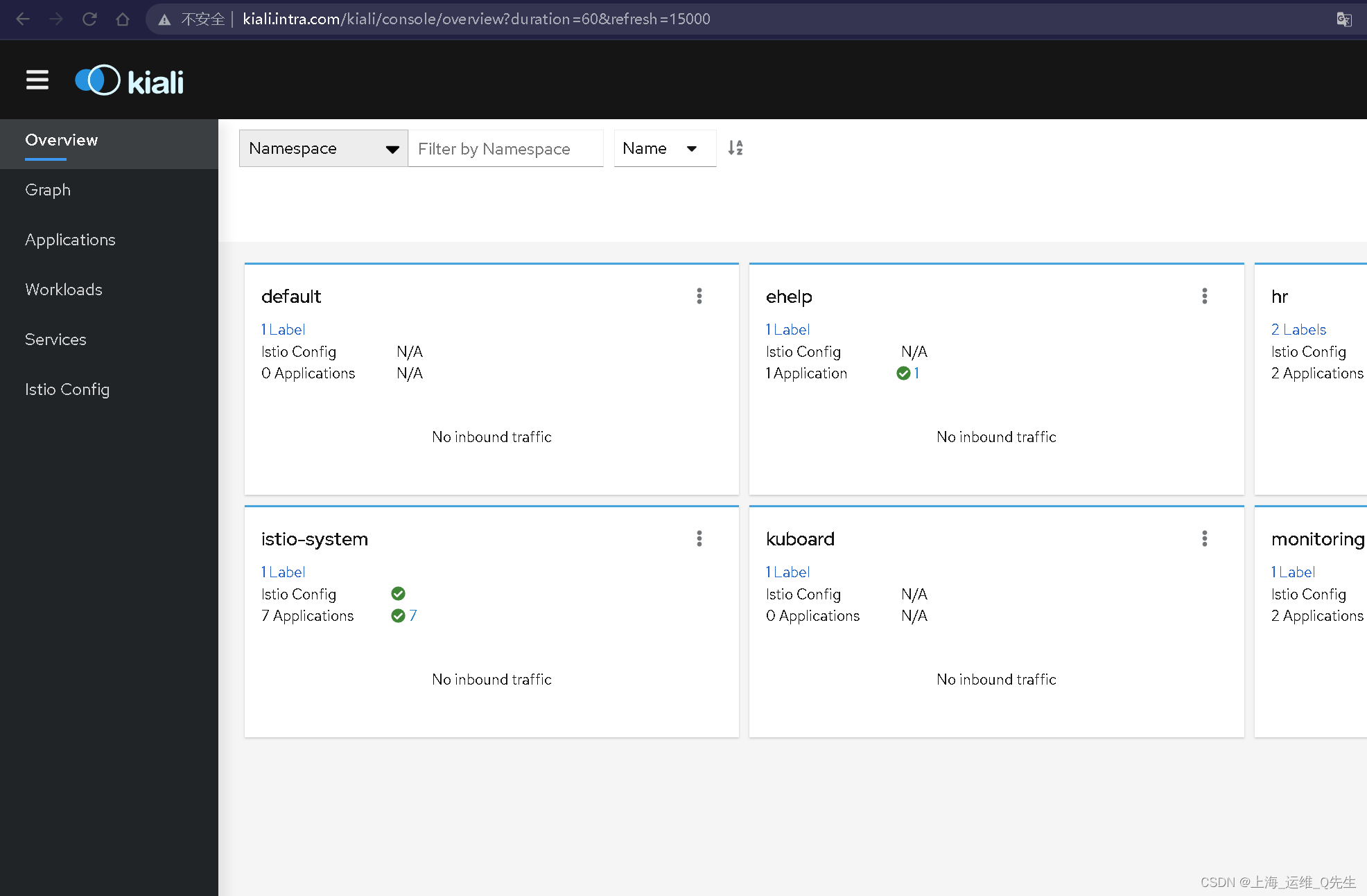

Now map the Kiali domain name to the external IP in the client machine's hosts file and test access.

6.7 Walk Through the Request Flow

- The browser connects to port 20001 of the ingress gateway Service.

# kubectl get svc -n istio-system |grep istio-ingressgateway

istio-ingressgateway LoadBalancer 10.200.239.76 192.168.31.163 15021:49708/TCP,80:42116/TCP,443:61970/TCP,31400:56204/TCP,15443:45720/TCP,20001:43230/TCP 22h

- The Service forwards the traffic to port 20001 on the ingress gateway pod.

# istioctl pc listener istio-ingressgateway-8d7d49b55-bmbx5.istio-system|grep 20001

0.0.0.0 20001 ALL Route: http.20001

- The ingress gateway forwards the traffic to the kiali Service according to kiali-virtualservice.yaml.

# cat kiali-virtualservice.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: kiali-virtualservice
  namespace: istio-system
spec:
  hosts:
  - "kiali.intra.com"
  gateways:
  - kiali-gateway
  http:
  - match:
    - port: 20001
    route:
    - destination:
        host: kiali
        port:
          number: 20001
---

# kubectl get svc -n istio-system |grep kiali

kiali ClusterIP 10.200.132.85 <none> 20001/TCP,9090/TCP 22h

- The kiali Service forwards the traffic to its back-end endpoints, and the pod behind the endpoint sends the response.

# kubectl get ep kiali -n istio-system

NAME ENDPOINTS AGE

kiali 172.100.183.188:9090,172.100.183.188:20001 22h

# kubectl get pods -n istio-system -o wide|grep kiali

kiali-699f98c497-wjtwl 1/1 Running 0 22h 172.100.183.188 192.168.31.103 <none> <none>
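The decision the ingress gateway makes in this flow can be sketched as a toy model. This is only an illustration of the lookup order (Gateway server, then VirtualService host, then HTTP route match), not how Envoy or istiod implement it: it supports exact host matches only, and every class and function name below is invented for the example. The values mirror kiali-gateway and kiali-virtualservice from section 6.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class GatewayServer:
    port: int
    hosts: Tuple[str, ...]


@dataclass
class HttpRoute:
    match_port: Optional[int]  # None means "any port"
    dest_host: str
    dest_port: int


@dataclass
class VirtualService:
    hosts: Tuple[str, ...]
    routes: Tuple[HttpRoute, ...]


# Values mirror kiali-gateway and kiali-virtualservice from section 6.
GATEWAY = (GatewayServer(port=20001, hosts=("kiali.intra.com",)),)
VS = VirtualService(
    hosts=("kiali.intra.com",),
    routes=(HttpRoute(match_port=20001, dest_host="kiali", dest_port=20001),),
)


def resolve(port: int, host_header: str) -> Optional[Tuple[str, int]]:
    """Return the (service, port) this toy gateway would forward to, else None."""
    host = host_header.split(":")[0]  # drop an optional ":port" suffix
    # Step 1: a Gateway server must listen on this port for this host.
    if not any(s.port == port and host in s.hosts for s in GATEWAY):
        return None
    # Step 2: the VirtualService bound to the gateway must claim the host.
    if host not in VS.hosts:
        return None
    # Step 3: the first HTTP route whose match is satisfied wins.
    for route in VS.routes:
        if route.match_port in (None, port):
            return (route.dest_host, route.dest_port)
    return None


print(resolve(20001, "kiali.intra.com:20001"))
print(resolve(20001, "other.example.com"))
```

The second call returns `None` because no Gateway server lists that host, which is why the hosts-file entry for kiali.intra.com matters: a request sent to the bare IP carries the wrong Host header.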

7. Expose Kiali Externally on Port 80

7.1 Gateway

apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: kiali-gateway
  namespace: istio-system
spec:
  selector:
    app: istio-ingressgateway
  servers:
  - port:
      number: 80
      name: http-kiali
      protocol: HTTP
    hosts:
    - "kiali.intra.com"
---

7.2 VirtualService

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: kiali-virtualservice
  namespace: istio-system
spec:
  hosts:
  - "kiali.intra.com"
  gateways:
  - kiali-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: kiali
        port:
          number: 20001
---

7.3 DestinationRule

apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: kiali
  namespace: istio-system
spec:
  host: kiali
  trafficPolicy:
    tls:
      mode: DISABLE
---

Kiali can now be reached directly at http://kiali.intra.com/.
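If editing the hosts file is inconvenient, the same check can be made by sending the request to the ingress IP while setting the Host header by hand. The sketch below only builds the request, so it runs without a cluster. It assumes the external IP 192.168.31.163 configured in section 6.6, and `build_request` is a helper name made up for this example.

```python
import urllib.request


def build_request(ingress_ip: str, host: str, port: int = 80) -> urllib.request.Request:
    """Build a GET request aimed at the ingress IP but carrying the virtual host."""
    url = f"http://{ingress_ip}:{port}/"
    # An explicit Host header replaces the one urllib would derive from the URL.
    return urllib.request.Request(url, headers={"Host": host})


REQ = build_request("192.168.31.163", "kiali.intra.com")
print(REQ.full_url, REQ.get_header("Host"))

# To actually send it from a machine that can reach the external IP:
#     with urllib.request.urlopen(REQ, timeout=5) as resp:
#         print(resp.status)
```

Because the Gateway in 7.1 matches on the host name, a request without this header would not match the kiali-virtualservice route.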

8. Uninstall Istio

8.1 Remove the Add-ons

# kubectl delete -f samples/addons/

serviceaccount "grafana" deleted

configmap "grafana" deleted

service "grafana" deleted

deployment.apps "grafana" deleted

configmap "istio-grafana-dashboards" deleted

configmap "istio-services-grafana-dashboards" deleted

deployment.apps "jaeger" deleted

service "tracing" deleted

service "zipkin" deleted

service "jaeger-collector" deleted

serviceaccount "kiali" deleted

configmap "kiali" deleted

clusterrole.rbac.authorization.k8s.io "kiali-viewer" deleted

clusterrole.rbac.authorization.k8s.io "kiali" deleted

clusterrolebinding.rbac.authorization.k8s.io "kiali" deleted

role.rbac.authorization.k8s.io "kiali-controlplane" deleted

rolebinding.rbac.authorization.k8s.io "kiali-controlplane" deleted

service "kiali" deleted

deployment.apps "kiali" deleted

serviceaccount "prometheus" deleted

configmap "prometheus" deleted

clusterrole.rbac.authorization.k8s.io "prometheus" deleted

clusterrolebinding.rbac.authorization.k8s.io "prometheus" deleted

service "prometheus" deleted

deployment.apps "prometheus" deleted

Only the two data-plane gateways and the control-plane istiod remain.

# kubectl get pods -n istio-system

NAME READY STATUS RESTARTS AGE

istio-egressgateway-5c597cdb77-48bj8 1/1 Running 0 40h

istio-ingressgateway-8d7d49b55-bmbx5 1/1 Running 0 40h

istiod-54c54679d7-78f2d 1/1 Running 0 40h

8.2 Uninstall the Service Mesh

# istioctl x uninstall --purge -y

All Istio resources will be pruned from the cluster

Removed IstioOperator:istio-system:installed-state.

Removed PodDisruptionBudget:istio-system:istio-egressgateway.

Removed PodDisruptionBudget:istio-system:istio-ingressgateway.

Removed PodDisruptionBudget:istio-system:istiod.

Removed Deployment:istio-system:istio-egressgateway.

Removed Deployment:istio-system:istio-ingressgateway.

Removed Deployment:istio-system:istiod.

Removed Service:istio-system:istio-egressgateway.

Removed Service:istio-system:istio-ingressgateway.

Removed Service:istio-system:istiod.

Removed ConfigMap:istio-system:istio.

Removed ConfigMap:istio-system:istio-sidecar-injector.

Removed Pod:istio-system:istio-egressgateway-5c597cdb77-48bj8.

Removed Pod:istio-system:istio-ingressgateway-8d7d49b55-bmbx5.

Removed Pod:istio-system:istiod-54c54679d7-78f2d.

Removed ServiceAccount:istio-system:istio-egressgateway-service-account.

Removed ServiceAccount:istio-system:istio-ingressgateway-service-account.

Removed ServiceAccount:istio-system:istio-reader-service-account.

Removed ServiceAccount:istio-system:istiod.

Removed ServiceAccount:istio-system:istiod-service-account.

Removed RoleBinding:istio-system:istio-egressgateway-sds.

Removed RoleBinding:istio-system:istio-ingressgateway-sds.

Removed RoleBinding:istio-system:istiod.

Removed RoleBinding:istio-system:istiod-istio-system.

Removed Role:istio-system:istio-egressgateway-sds.

Removed Role:istio-system:istio-ingressgateway-sds.

Removed Role:istio-system:istiod.

Removed Role:istio-system:istiod-istio-system.

Removed EnvoyFilter:istio-system:stats-filter-1.11.

Removed EnvoyFilter:istio-system:stats-filter-1.12.

Removed EnvoyFilter:istio-system:stats-filter-1.13.

Removed EnvoyFilter:istio-system:tcp-stats-filter-1.11.

Removed EnvoyFilter:istio-system:tcp-stats-filter-1.12.

Removed EnvoyFilter:istio-system:tcp-stats-filter-1.13.

Removed MutatingWebhookConfiguration::istio-revision-tag-default.

Removed MutatingWebhookConfiguration::istio-sidecar-injector.

Removed ValidatingWebhookConfiguration::istio-validator-istio-system.

Removed ValidatingWebhookConfiguration::istiod-default-validator.

Removed ClusterRole::istio-reader-clusterrole-istio-system.

Removed ClusterRole::istio-reader-istio-system.

Removed ClusterRole::istiod-clusterrole-istio-system.

Removed ClusterRole::istiod-gateway-controller-istio-system.

Removed ClusterRole::istiod-istio-system.

Removed ClusterRoleBinding::istio-reader-clusterrole-istio-system.

Removed ClusterRoleBinding::istio-reader-istio-system.

Removed ClusterRoleBinding::istiod-clusterrole-istio-system.

Removed ClusterRoleBinding::istiod-gateway-controller-istio-system.

Removed ClusterRoleBinding::istiod-istio-system.

Removed CustomResourceDefinition::authorizationpolicies.security.istio.io.

Removed CustomResourceDefinition::destinationrules.networking.istio.io.

Removed CustomResourceDefinition::envoyfilters.networking.istio.io.

Removed CustomResourceDefinition::gateways.networking.istio.io.

Removed CustomResourceDefinition::istiooperators.install.istio.io.

Removed CustomResourceDefinition::peerauthentications.security.istio.io.

Removed CustomResourceDefinition::proxyconfigs.networking.istio.io.

Removed CustomResourceDefinition::requestauthentications.security.istio.io.

Removed CustomResourceDefinition::serviceentries.networking.istio.io.

Removed CustomResourceDefinition::sidecars.networking.istio.io.

Removed CustomResourceDefinition::telemetries.telemetry.istio.io.

Removed CustomResourceDefinition::virtualservices.networking.istio.io.

Removed CustomResourceDefinition::wasmplugins.extensions.istio.io.

Removed CustomResourceDefinition::workloadentries.networking.istio.io.

Removed CustomResourceDefinition::workloadgroups.networking.istio.io.

✔ Uninstall complete

All resources in the istio-system namespace have now been deleted.

# kubectl get pods -n istio-system

No resources found in istio-system namespace.

# kubectl get svc -n istio-system

No resources found in istio-system namespace.

8.3 Delete the istio-system Namespace

# kubectl delete ns istio-system

namespace "istio-system" deleted

# kubectl get ns istio-system

Error from server (NotFound): namespaces "istio-system" not found

Other namespaces may still contain leftover sidecars that the mesh injected. They can be removed separately; those steps are not shown here.
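One way to find those leftovers is to look for pods that still carry a container named istio-proxy, the sidecar name seen in section 4.7. The sketch below is a minimal illustration: it works on a dictionary shaped like the output of `kubectl get pods -A -o json`, the sample data is hypothetical, and `pods_with_sidecar` is a name invented for the example.

```python
def pods_with_sidecar(pod_list: dict, sidecar_name: str = "istio-proxy"):
    """Return sorted (namespace, pod) pairs that still run the sidecar container."""
    found = []
    for pod in pod_list.get("items", []):
        containers = pod.get("spec", {}).get("containers", [])
        if any(c.get("name") == sidecar_name for c in containers):
            meta = pod["metadata"]
            found.append((meta["namespace"], meta["name"]))
    return sorted(found)


# Hypothetical sample in the shape of `kubectl get pods -A -o json`.
SAMPLE = {
    "items": [
        {
            "metadata": {"namespace": "hr", "name": "sleep-557747455f-k8qzc"},
            "spec": {"containers": [{"name": "sleep"}, {"name": "istio-proxy"}]},
        },
        {
            "metadata": {"namespace": "default", "name": "plain-app"},
            "spec": {"containers": [{"name": "app"}]},
        },
    ]
}

LEFTOVERS = pods_with_sidecar(SAMPLE)
print(LEFTOVERS)
```

For each namespace reported, removing the istio-injection label and recreating the pods (for example with a rollout restart of the owning Deployment) should bring them back without the sidecar.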