本文来自公众号:机器人视觉

完成双目相机标定以后,获得双目相机的参数矩阵

包括左右相机的内参数矩阵、左右相机的畸变系数矩阵、右相机相对于左相机的旋转矩阵与平移矩阵

已知左右相机图像中的对应点坐标,获取目标在双目视觉传感器下三维坐标的流程如下:

1、将双目相机标定参数整理如下:

//左相机内参数矩阵

float leftIntrinsic[3][3] = { 3061.6936, -0.8869, 641.3042,

0, 3058.8751, 508.9555,

0, 0, 1 };

//左相机畸变系数

float leftDistortion[1][5] = { -0.0133, 0.6503, 0.0029, -0.0049, -16.8704 };

//左相机旋转矩阵

float leftRotation[3][3] = { 1, 0, 0,

0, 1, 0,

0, 0, 1 };

//左相机平移向量

float leftTranslation[1][3] = { 0, 0, 0 };

//右相机内参数矩阵

float rightIntrinsic[3][3] = { 3069.2482, -0.8951, 620.5357,

0, 3069.2450, 532.7122,

0, 0, 1 };

//右相机畸变系数

float rightDistortion[1][5] = { -0.0593, 3.4501, 0.0003, -8.5614, -58.3116 };

//右相机旋转矩阵

float rightRotation[3][3] = { 0.9989, 0.0131, -0.0439,

-0.0121, 0.9996, 0.0233,

0.0441, -0.0228, 0.9987};

//右相机平移向量

float rightTranslation[1][3] = {-73.8389, 2.6712, 3.3792};

2、二维像素坐标与相机坐标系下三维坐标转换

//************************************

// Description: 根据左右相机中成像坐标求解空间坐标

// Method: uv2xyz

// FullName: uv2xyz

// Parameter: Point2f uvLeft

// Parameter: Point2f uvRight

// Returns: cv::Point3f

//************************************

Point3f uv2xyz(Point2f uvLeft, Point2f uvRight)

{

// [u1] |X| [u2] |X|

//Z*|v1| = Ml*|Y| Z*|v2| = Mr*|Y|

// [ 1] |Z| [ 1] |Z|

// |1| |1|

Mat mLeftRotation = Mat(3, 3, CV_32F, leftRotation);

Mat mLeftTranslation = Mat(3, 1, CV_32F, leftTranslation);

Mat mLeftRT = Mat(3, 4, CV_32F);//左相机M矩阵

hconcat(mLeftRotation, mLeftTranslation, mLeftRT);

Mat mLeftIntrinsic = Mat(3, 3, CV_32F, leftIntrinsic);

Mat mLeftM = mLeftIntrinsic * mLeftRT;

//cout<<"左相机M矩阵 = "<<endl<<mLeftM<<endl;

Mat mRightRotation = Mat(3, 3, CV_32F, rightRotation);

Mat mRightTranslation = Mat(3, 1, CV_32F, rightTranslation);

Mat mRightRT = Mat(3, 4, CV_32F);//右相机M矩阵

hconcat(mRightRotation, mRightTranslation, mRightRT);

Mat mRightIntrinsic = Mat(3, 3, CV_32F, rightIntrinsic);

Mat mRightM = mRightIntrinsic * mRightRT;

//cout<<"右相机M矩阵 = "<<endl<<mRightM<<endl;

//最小二乘法A矩阵

Mat A = Mat(4, 3, CV_32F);

A.at<float>(0, 0) = uvLeft.x * mLeftM.at<float>(2, 0) - mLeftM.at<float>(0, 0);

A.at<float>(0, 1) = uvLeft.x * mLeftM.at<float>(2, 1) - mLeftM.at<float>(0, 1);

A.at<float>(0, 2) = uvLeft.x * mLeftM.at<float>(2, 2) - mLeftM.at<float>(0, 2);

A.at<float>(1, 0) = uvLeft.y * mLeftM.at<float>(2, 0) - mLeftM.at<float>(1, 0);

A.at<float>(1, 1) = uvLeft.y * mLeftM.at<float>(2, 1) - mLeftM.at<float>(1, 1);

A.at<float>(1, 2) = uvLeft.y * mLeftM.at<float>(2, 2) - mLeftM.at<float>(1, 2);

A.at<float>(2, 0) = uvRight.x * mRightM.at<float>(2, 0) - mRightM.at<float>(0, 0);

A.at<float>(2, 1) = uvRight.x * mRightM.at<float>(2, 1) - mRightM.at<float>(0, 1);

A.at<float>(2, 2) = uvRight.x * mRightM.at<float>(2, 2) - mRightM.at<float>(0, 2);

A.at<float>(3, 0) = uvRight.y * mRightM.at<float>(2, 0) - mRightM.at<float>(1, 0);

A.at<float>(3, 1) = uvRight.y * mRightM.at<float>(2, 1) - mRightM.at<float>(1, 1);

A.at<float>(3, 2) = uvRight.y * mRightM.at<float>(2, 2) - mRightM.at<float>(1, 2);

//最小二乘法B矩阵

Mat B = Mat(4, 1, CV_32F);

B.at<float>(0, 0) = mLeftM.at<float>(0, 3) - uvLeft.x * mLeftM.at<float>(2, 3);

B.at<float>(1, 0) = mLeftM.at<float>(1, 3) - uvLeft.y * mLeftM.at<float>(2, 3);

B.at<float>(2, 0) = mRightM.at<float>(0, 3) - uvRight.x * mRightM.at<float>(2, 3);

B.at<float>(3, 0) = mRightM.at<float>(1, 3) - uvRight.y * mRightM.at<float>(2, 3);

Mat XYZ = Mat(3, 1, CV_32F);

//采用SVD最小二乘法求解XYZ

solve(A, B, XYZ, DECOMP_SVD);

//cout<<"空间坐标为 = "<<endl<<XYZ<<endl;

//世界坐标系中坐标

Point3f world;

world.x = XYZ.at<float>(0, 0);

world.y = XYZ.at<float>(1, 0);

world.z = XYZ.at<float>(2, 0);

return world;

}

//************************************

// Description: 将世界坐标系中的点投影到左右相机成像坐标系中

// Method: xyz2uv

// FullName: xyz2uv

// Parameter: Point3f worldPoint

// Parameter: float intrinsic[3][3]

// Parameter: float translation[1][3]

// Parameter: float rotation[3][3]

// Returns: cv::Point2f

//************************************

Point2f xyz2uv(Point3f worldPoint, float intrinsic[3][3], float translation[1][3], float rotation[3][3])

{

// [fx s x0] [Xc] [Xw] [u] 1 [Xc]

//K = |0 fy y0| TEMP = [R T] |Yc| = TEMP*|Yw| | | = —*K *|Yc|

// [ 0 0 1 ] [Zc] |Zw| [v] Zc [Zc]

// [1 ]

Point3f c;

c.x = rotation[0][0] * worldPoint.x + rotation[0][1] * worldPoint.y + rotation[0][2] * worldPoint.z + translation[0][0] * 1;

c.y = rotation[1][0] * worldPoint.x + rotation[1][1] * worldPoint.y + rotation[1][2] * worldPoint.z + translation[0][1] * 1;

c.z = rotation[2][0] * worldPoint.x + rotation[2][1] * worldPoint.y + rotation[2][2] * worldPoint.z + translation[0][2] * 1;

Point2f uv;

uv.x = (intrinsic[0][0] * c.x + intrinsic[0][1] * c.y + intrinsic[0][2] * c.z) / c.z;

uv.y = (intrinsic[1][0] * c.x + intrinsic[1][1] * c.y + intrinsic[1][2] * c.z) / c.z;

return uv;

}

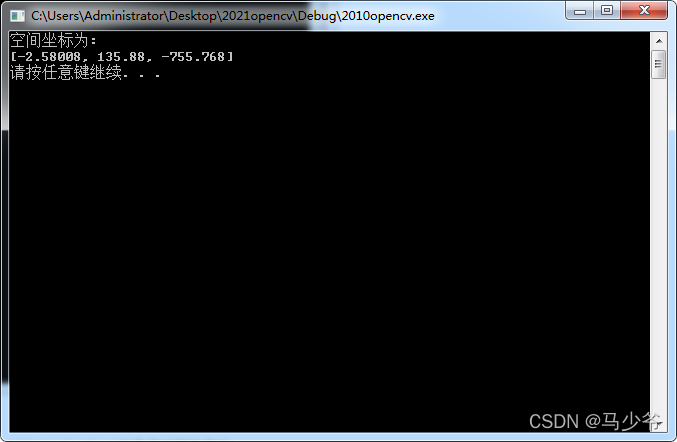

3、由像素坐标获取三维坐标

Point2f l = (638, 393);

Point2f r = (85, 502);

Point3f worldPoint;

worldPoint = uv2xyz(l, r);

cout << "空间坐标为:" << endl << uv2xyz(l, r) << endl;

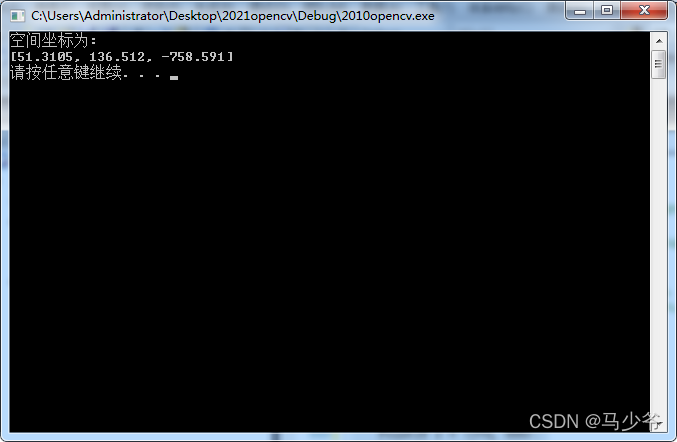

更换点对测试

Point2f l = (857, 666);

Point2f r = (303, 775);

//Point2f l = (1014, 445);

//Point2f r = (523, 387);

Point3f worldPoint;

worldPoint = uv2xyz(l, r);

cout << "空间坐标为:" << endl << uv2xyz(l, r) << endl;

system("pause");

更换点对测试:

Point2f l = (931, 449);

Point2f r = (370, 555);

Point3f worldPoint;

worldPoint = uv2xyz(l, r);

cout << "空间坐标为:" << endl << uv2xyz(l, r) << endl;

system("pause");

线结构光传感器标定(相机标定+结构光标定)完整流程(一)

https://blog.csdn.net/qq_27353621/article/details/120787942

UR机器人手眼标定(二)

https://blog.csdn.net/qq_27353621/article/details/121603215

双目相机标定(三)

https://blog.csdn.net/qq_27353621/article/details/121031972

公众号:机器人视觉