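Summary of the 3rd Alibaba Cloud Intelligent O&M Algorithm Competition

Problem Description

Participants receive system log data covering a period of time and must propose a solution that diagnoses which type of fault a server has experienced. They mine features related to each fault type from the data provided by the organizers, train a suitable machine-learning algorithm on those features, and obtain the best model for distinguishing fault types. Any data-processing method or algorithm may be used, but participants should weigh both the effectiveness and the complexity of the algorithm to build a reasonably efficient solution.

In the preliminary round, a training set is provided for training models and validating their performance. A test set is also provided: participants diagnose the fault type of each entry in the test set and upload the predictions to the competition platform, which scores the submitted diagnoses.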

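Data Overview

Table 1: SEL log data, file name: preliminary_sel_log_dataset.csv

Table 2: training label data, file names: preliminary_train_label_dataset.csv, preliminary_train_label_dataset_s.csv

Classes 0 and 1 are CPU-related faults, class 2 is memory-related faults, and class 3 covers all other fault types.

Note: together, the labels in these two files cover all the logs in "preliminary_sel_log_dataset.csv". At the start of the competition, the organizers released part of the log data in "preliminary_sel_log_dataset.csv" without labels, so that participants could submit predictions for evaluation. That log data is unchanged, and its labels (preliminary_train_label_dataset_s.csv) have now been released as well, so participants have more data for training.

Table 3: participant submission data, file name: preliminary_submit_dataset_a.csv; corresponding log file: preliminary_sel_log_dataset_a.csv

Participants use the log entries in preliminary_sel_log_dataset_a.csv to predict the diagnoses and fill them into preliminary_submit_dataset_a.csv, which is the final result file submitted to the system.

Table 4: SEL log corpus, file name: additional_sel_log_dataset.csv

Note: this dataset is mainly intended for pre-training. It has no labels and no server_model field, so use it at your discretion.

Table 5: SEL log data, file names: final_sel_log_dataset_*.csv

Table 6: training label data, file names: final_train_label_dataset_*.csv

Table 7: participant submission data, file names: final_submit_dataset_*.csv

The competition uses a weighted multi-class Macro F1-score as the evaluation metric.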
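The weighted Macro F1 is built from the per-class F1 scores. The pure-Python sketch below assumes that the caller supplies the per-class weights. The `WEIGHTS` values are hypothetical placeholders, not the official competition weights, so check the competition rules for the real ones. With equal weights of 1/4, the result equals the ordinary macro F1 (`sklearn.metrics.f1_score(..., average="macro")`).

```python
def per_class_f1(y_true, y_pred, cls):
    """F1 score of one class, treating it as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
    if tp == 0:  # no true positives: precision or recall is 0, so F1 is 0
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def weighted_macro_f1(y_true, y_pred, weights):
    """Weighted sum of per-class F1 scores; weights[i] is the weight of class i."""
    return sum(w * per_class_f1(y_true, y_pred, cls) for cls, w in enumerate(weights))


# Hypothetical weights for classes 0-3 (placeholders, not the official values)
WEIGHTS = [3 / 7, 2 / 7, 1 / 7, 1 / 7]
y_true = [0, 0, 1, 2, 3]
y_pred = [0, 1, 1, 2, 3]
print(weighted_macro_f1(y_true, y_pred, WEIGHTS))
```

Here classes 0 and 1 each score F1 = 2/3 and classes 2 and 3 score 1. Because the weights favour classes 0 and 1, a mistake on those classes costs more than it would under the plain macro average.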

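Approach 1

Template Matching

Based on the open-source log template miner IBM/Drain3 (GitHub).

Given unstructured log lines, Drain3 matches each line to a template.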

For the input:

connected to 10.0.0.1

connected to 192.168.0.1

Hex number 0xDEADBEAF

user davidoh logged in

user eranr logged in

Drain3 extracts the following templates:

ID=1 : size=2 : connected to <:IP:>

ID=2 : size=1 : Hex number <:HEX:>

ID=3 : size=2 : user <:*:> logged in
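The sketch below is a toy and heavily simplified version of the clustering idea. It is not Drain3's algorithm: there is no parse tree and no masking. Logs with the same number of tokens are compared position by position. If the share of identical tokens reaches a similarity threshold (`sim_th`, named after the Drain3 parameter), the log joins that cluster and the differing positions become the wildcard `<*>`. Because nothing is masked, the IP becomes `<*>` and the hex value stays literal, instead of `<:IP:>` and `<:HEX:>`.

```python
def toy_template_mining(lines, sim_th=0.4):
    """Greedy toy clustering of log lines into templates (NOT the real Drain algorithm)."""
    clusters = []  # each cluster: {"template": list of tokens, "size": int}
    for line in lines:
        tokens = line.split()
        if not tokens:
            continue
        best, best_sim = None, -1.0
        for cluster in clusters:
            template = cluster["template"]
            if len(template) != len(tokens):  # only compare logs with the same token count
                continue
            same = sum(1 for a, b in zip(template, tokens) if a == b)
            sim = same / len(tokens)
            if sim > best_sim:
                best, best_sim = cluster, sim
        if best is not None and best_sim >= sim_th:
            # Merge: positions that differ become the wildcard <*>
            best["template"] = [a if a == b else "<*>" for a, b in zip(best["template"], tokens)]
            best["size"] += 1
        else:
            clusters.append({"template": tokens, "size": 1})
    return [(" ".join(c["template"]), c["size"]) for c in clusters]


logs = [
    "connected to 10.0.0.1",
    "connected to 192.168.0.1",
    "Hex number 0xDEADBEAF",
    "user davidoh logged in",
    "user eranr logged in",
]
for template, size in toy_template_mining(logs):
    print(f"size={size} : {template}")
```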

Template-mining parameters can be tuned in the configuration file drain3.ini.

The config file is drain3.ini in the working directory. Drain3 can also be configured by passing a TemplateMinerConfig object to the TemplateMiner constructor.

Primary configuration parameters:

[DRAIN]/sim_th - similarity threshold. If the percentage of similar tokens for a log message is below this number, a new log cluster (template) is created (default 0.4).

[DRAIN]/depth - max depth levels of log clusters, i.e. the depth of the parse tree used for matching. Minimum is 2. (default 4)

[DRAIN]/max_children - max number of children of an internal node (default 100)

[DRAIN]/max_clusters - max number of tracked clusters (unlimited by default). When this number is reached, the model starts replacing old clusters with new ones according to the LRU cache eviction policy.

[DRAIN]/extra_delimiters - delimiters to apply when splitting a log message into words, in addition to whitespace (default none). The format is a Python list, e.g. ['_', ':'].

[MASKING]/masking - parameter masking rules, in JSON format (default "")

[MASKING]/mask_prefix & [MASKING]/mask_suffix - the wrapping of identified parameters in templates. By default, it is < and > respectively.

[SNAPSHOT]/snapshot_interval_minutes - time interval for new snapshots (default 1)

[SNAPSHOT]/compress_state - whether to compress the state before saving it. This can be useful when using Kafka persistence.

You can also define regular expressions that mask specific values such as IP addresses, numbers and hexadecimal numbers:

```ini
[MASKING]
masking = [
    {"regex_pattern":"((?<=[^A-Za-z0-9])|^)(([0-9a-f]{2,}:){3,}([0-9a-f]{2,}))((?=[^A-Za-z0-9])|$)", "mask_with": "ID"},
    {"regex_pattern":"((?<=[^A-Za-z0-9])|^)(\\d{1,3}\\.\\d{1,3}\\.\\d{1,3}\\.\\d{1,3})((?=[^A-Za-z0-9])|$)", "mask_with": "IP"},
    {"regex_pattern":"((?<=[^A-Za-z0-9])|^)([0-9a-f]{6,} ?){3,}((?=[^A-Za-z0-9])|$)", "mask_with": "SEQ"},
    {"regex_pattern":"((?<=[^A-Za-z0-9])|^)([0-9A-F]{4} ?){4,}((?=[^A-Za-z0-9])|$)", "mask_with": "SEQ"},
    {"regex_pattern":"((?<=[^A-Za-z0-9])|^)(0x[a-f0-9A-F]+)((?=[^A-Za-z0-9])|$)", "mask_with": "HEX"},
    {"regex_pattern":"((?<=[^A-Za-z0-9])|^)([\\-\\+]?\\d+)((?=[^A-Za-z0-9])|$)", "mask_with": "NUM"},
    {"regex_pattern":"(?<=executed cmd )(\".+?\")", "mask_with": "CMD"}
    ]
```
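Each masking rule is a regular-expression substitution applied to the raw line before clustering. The sketch below uses Python's `re` module with three of the patterns above, with the JSON escaping (`\\d`) removed. It assumes the `<:` / `:>` prefix and suffix that appear in the template example. The rules are applied in list order, and order matters: if bare numbers were masked first, an IP address would be broken into four `<:NUM:>` pieces.

```python
import re

# (pattern, mask name) pairs, applied in list order; patterns taken from the config above
MASKING = [
    (r"((?<=[^A-Za-z0-9])|^)(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})((?=[^A-Za-z0-9])|$)", "IP"),
    (r"((?<=[^A-Za-z0-9])|^)(0x[a-f0-9A-F]+)((?=[^A-Za-z0-9])|$)", "HEX"),
    (r"((?<=[^A-Za-z0-9])|^)([\-\+]?\d+)((?=[^A-Za-z0-9])|$)", "NUM"),
]


def mask(line, rules=MASKING, prefix="<:", suffix=":>"):
    """Replace every match of each rule with prefix + name + suffix."""
    for pattern, name in rules:
        line = re.sub(pattern, prefix + name + suffix, line)
    return line


print(mask("connected to 10.0.0.1"))  # connected to <:IP:>
print(mask("Hex number 0xDEADBEAF"))  # Hex number <:HEX:>
```

The lookbehind and lookahead groups only match at non-alphanumeric boundaries, so digits embedded in a word (for example `abc123`) are left untouched.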

```python
import pandas as pd
from drain3 import TemplateMiner  # open-source online log-parsing framework
from drain3.file_persistence import FilePersistence
from drain3.template_miner_config import TemplateMinerConfig

# Load the Drain3 configuration file (a copy of drain3.ini with the masking rules above)
config = TemplateMinerConfig()
config.load('./lib/drain3 - 副本.ini')
config.profiling_enabled = False

# Read the training and test SEL logs and mine templates over both
data_train = pd.read_csv('./data/preliminary_sel_log_dataset.csv')
data_test = pd.read_csv('./data/preliminary_sel_log_dataset_a.csv')
data = pd.concat([data_train, data_test])
data = data.reset_index()

# File in which Drain3 persists its mined state (this is not the config file)
drain_file = 'comp_a_sellog_smi'
persistence = FilePersistence(drain_file + '.bin')
template_miner = TemplateMiner(persistence, config=config)

# Template extraction
for msg in data.msg.tolist():
    template_miner.add_log_message(msg)
temp_count = len(template_miner.drain.clusters)
print('templates mined:', temp_count)

# Template filtering: keep the clusters whose size ranks in the top 206.
# This is only the simplest possible filter; ties at min_size can keep a few more.
size_list = sorted((cluster.size for cluster in template_miner.drain.clusters), reverse=True)[:206]
min_size = size_list[-1]
template_dic = {}
for cluster in template_miner.drain.clusters:  # store the templates that pass the filter
    if cluster.size >= min_size:
        template_dic[cluster.cluster_id] = cluster.size

# server_model feature: map each server model to an integer
sm_hash = {sm: i for i, sm in enumerate(data['server_model'].unique().tolist())}


def match_template(row, template_miner, template_dic):
    cluster = template_miner.match(row.msg)  # template matching provided by Drain3
    if cluster and cluster.cluster_id in template_dic:
        row['template_id'] = cluster.cluster_id   # template ID
        row['template'] = cluster.get_template()  # template text
    else:
        # Logs that match no kept template are recorded as well; 'None' later becomes a feature of its own
        row['template_id'] = 'None'
        row['template'] = 'None'
    row['sm'] = sm_hash[row['server_model']]
    return row


data = data.apply(match_template, template_miner=template_miner, template_dic=template_dic, axis=1)
data.to_csv('./lib/' + drain_file + '_result_match_data_sm.csv')
```
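The filtering step keeps every cluster whose size is at least the k-th largest size. Because of this `>= min_size` rule, ties can keep more than k templates. The pure-Python sketch below isolates that rule on a plain `{cluster_id: size}` dictionary. `select_templates` is a hypothetical helper written for illustration, not part of Drain3.

```python
def select_templates(cluster_sizes, k):
    """Keep every cluster whose size is at least the k-th largest size (ties are kept)."""
    if not cluster_sizes or k <= 0:
        return {}
    top_sizes = sorted(cluster_sizes.values(), reverse=True)[:k]
    min_size = top_sizes[-1]
    return {cid: size for cid, size in cluster_sizes.items() if size >= min_size}


sizes = {1: 50, 2: 30, 3: 30, 4: 5}
print(select_templates(sizes, 2))  # {1: 50, 2: 30, 3: 30}: three templates for k=2
```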

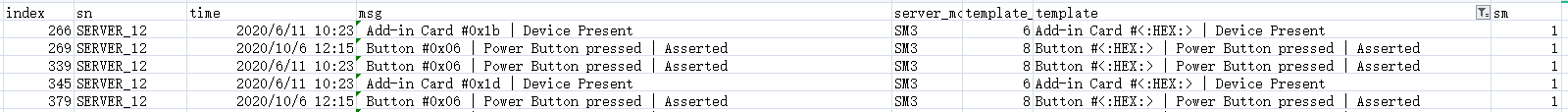

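Example of the matching output

Training Data Generation

After reading the training labels, we look up each label's sn in the log data, sort that server's logs by time, and take the last few (7) entries at or before the fault time as the sample. An LSTM needs sequences of equal length, so shorter samples are padded by repeating their last entry. The template IDs are one-hot encoded, and finally each sample's rows are grouped and summed. The result counts how often each log template appears in the window.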
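The windowing and counting steps can be sketched in pure Python for a single server. The sketch assumes that logs arrive as `(time, template_id)` pairs. `build_window` and `count_templates` are hypothetical helpers, not functions from the original code. Summing the one-hot vectors of a window is the same as counting template occurrences, which is what the pandas `groupby(...).sum()` in the code below computes.

```python
def build_window(logs, fault_time, n=7):
    """Template IDs of the last n logs at or before fault_time, padded to length n.

    logs: list of (time, template_id) pairs for ONE server, in any order.
    """
    before = sorted((entry for entry in logs if entry[0] <= fault_time), key=lambda e: e[0])
    window = [tid for _, tid in before[-n:]]
    if window:  # pad by repeating the last entry; a server with no earlier logs stays empty
        window += [window[-1]] * (n - len(window))
    return window


def count_templates(window, vocab):
    """Sum of the window's one-hot vectors, i.e. how often each template occurs."""
    index = {tid: i for i, tid in enumerate(vocab)}
    counts = [0] * len(vocab)
    for tid in window:
        counts[index[tid]] += 1
    return counts


logs = [(1, 'A'), (2, 'B'), (3, 'A'), (10, 'C')]
window = build_window(logs, fault_time=5, n=4)
print(window)                                               # ['A', 'B', 'A', 'A']
print(count_templates(window, ['A', 'B', 'C', 'None']))     # [3, 1, 0, 0]
```

Timestamps can also be zero-padded strings in a single format (for example `"2020-08-01 12:00:00"`). Such strings sort and compare correctly as text, which is what the `collect_time <= fault_time` filter in the original code relies on.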

```python
import threading
from threading import Thread

import numpy as np
import pandas as pd
from tqdm.notebook import tqdm

WINDOW = 7  # number of log entries kept before each fault_time


def read_train_data():
    # Read the SEL logs with matched templates (written by the template-matching step)
    sel_data = pd.read_csv('./lib/comp_a_sellog_smi_result_match_data_sm.csv')
    # Other versions that were tried:
    # sel_data = pd.read_pickle('./lib/comp_a_sellog_result_match_data.pkl')
    # sel_data = pd.read_csv('./lib/comp_a_sellog_smi_result_match_data35.csv')
    # sel_data = sel_data.drop(columns=['msg', 'template_id'])
    # sel_data = sel_data.rename(columns={'template': 'msg'})
    # Sort and reset the index
    sel_data.sort_values(by=['sn', 'time'], inplace=True)
    sel_data.reset_index(drop=True, inplace=True)
    # Drop the index column written by to_csv
    return sel_data.drop(columns=['Unnamed: 0'], errors='ignore')


def get_label_data():
    # Original labels, from the two official label files:
    # df_train_label = pd.read_csv('./data/preliminary_train_label_dataset.csv')
    # df_train_label_s = pd.read_csv('./data/preliminary_train_label_dataset_s.csv')
    # df_train_label = pd.concat([df_train_label, df_train_label_s])
    df_train_label = pd.read_csv('./lib/df_train_label_one.csv')  # preprocessed label file
    df_train_label = df_train_label.drop_duplicates(['sn', 'fault_time', 'label'])
    df_train_label.sort_values(by=['sn', 'fault_time'], inplace=True)
    df_train_label.reset_index(drop=True, inplace=True)
    return df_train_label


def read_submit_data():
    # Read the test set (the rows to predict)
    submit = pd.read_csv('./data/submit/preliminary_submit_dataset_a.csv')
    submit.sort_values(by=['sn', 'fault_time'], inplace=True)
    submit.reset_index(drop=True, inplace=True)
    return submit


def feature_generation(df_data):
    # Build the template one-hot features
    df_data = df_data.rename(columns={'time': 'collect_time'})
    df_data = template_dummy(df_data)
    return df_data.reset_index(drop=True)


def template_dummy(df):
    # One-hot encode template_id, keeping sn, collect_time and sm alongside the dummies.
    # Depending on the pandas version, read_csv may parse the string 'None' as NaN, which
    # get_dummies then drops; use dummy_na=True (or keep_default_na=False) to keep it.
    df_dummy = pd.get_dummies(df['template_id'], prefix='template_id', dtype=int)
    return pd.concat([df[['sn', 'collect_time', 'sm']], df_dummy], axis=1)


def build_train_data(df_data, label):
    print(threading.current_thread().name + ' thread started!')
    # Build the training set: attach each label to the last WINDOW logs of its server
    parts = []
    for _, row in tqdm(label.iterrows(), total=len(label)):
        filter_data = df_data[(df_data['sn'] == row['sn']) &
                              (df_data['collect_time'] <= row['fault_time'])].tail(WINDOW)
        if filter_data.empty:  # no log before fault_time: this label yields no sample
            continue
        # Pad short windows by repeating the last entry (equal sequence lengths for the LSTM)
        while filter_data.shape[0] < WINDOW:
            filter_data = pd.concat([filter_data, filter_data.tail(1)], axis=0)
        filter_data = filter_data.copy()
        filter_data['fault_time'] = row['fault_time']
        filter_data['label'] = row['label']
        parts.append(filter_data)
    # Concatenate once instead of inside the loop (avoids quadratic copying)
    return pd.concat(parts).drop(['collect_time'], axis=1)


def build_submit_data(df_data, submit):
    print(threading.current_thread().name + ' thread started!')
    # Build the test set the same way (a variant that also used up to 4 logs after fault_time was tried)
    parts = []
    for _, row in tqdm(submit.iterrows(), total=len(submit)):
        filter_data = df_data[(df_data['sn'] == row['sn']) &
                              (df_data['collect_time'] <= row['fault_time'])].tail(WINDOW)
        if filter_data.empty:
            continue
        while filter_data.shape[0] < WINDOW:
            filter_data = pd.concat([filter_data, filter_data.tail(1)], axis=0)
        filter_data = filter_data.copy()
        filter_data['fault_time'] = row['fault_time']
        parts.append(filter_data)
    return pd.concat(parts).drop(['collect_time'], axis=1)


class MyThread(Thread):
    """Thread that builds the training data for one slice of the labels."""
    def __init__(self, name, df_data, label_data):
        Thread.__init__(self, name=name)
        self.df_data = df_data
        self.label_data = label_data

    def run(self):
        self.result = build_train_data(self.df_data, self.label_data)

    def get_result(self):
        return self.result


class MyThread2(Thread):
    """Thread that builds the test data for one slice of the submission rows."""
    def __init__(self, name, df_data, submit_data):
        Thread.__init__(self, name=name)
        self.df_data = df_data
        self.submit_data = submit_data

    def run(self):
        self.result = build_submit_data(self.df_data, self.submit_data)

    def get_result(self):
        return self.result


def split_into(df, n):
    # Split a DataFrame into n contiguous, nearly equal slices
    # (replaces the hard-coded slice sizes 2836 and 750)
    size = -(-len(df) // n)  # ceiling division
    return [df.iloc[k * size:(k + 1) * size] for k in range(n)]


sel_data = read_train_data()            # log data
df_data = feature_generation(sel_data)  # template one-hot features
label = get_label_data()                # training labels
submit_data = read_submit_data()        # test rows
print('labels', label.shape, 'logs', df_data.shape, 'test rows', submit_data.shape)

# Build the test set in 4 threads (because of the GIL, the speed-up is limited)
test_threads = [MyThread2(f'T{k + 1}', df_data, part) for k, part in enumerate(split_into(submit_data, 4))]
for t in test_threads:
    t.start()
for t in test_threads:
    t.join()
print('All Done\n')
for t in test_threads:
    print(t.name, 'thread', t.get_result().shape)
test_data = pd.concat([t.get_result() for t in test_threads])  # merge

# Build the training set the same way
train_threads = [MyThread(f'T{k + 1}', df_data, part) for k, part in enumerate(split_into(label, 4))]
for t in train_threads:
    t.start()
for t in train_threads:
    t.join()
print('All Done\n')
for t in train_threads:
    print(t.name, 'thread', t.get_result().shape)
train_data = pd.concat([t.get_result() for t in train_threads])  # merge
print('total training rows', train_data.shape)

# Sum the one-hot columns per sample: how often each template occurs in the window.
# Rows without any earlier log produce no sample, so check len(test_data) against the submission.
train_data = train_data.groupby(['sn', 'fault_time', 'label', 'sm']).sum().reset_index()
test_data = test_data.groupby(['sn', 'fault_time', 'sm']).sum().reset_index()

# Training features / labels and test features
train_label = train_data['label'].to_numpy()
train_feature = train_data.drop(['sn', 'fault_time', 'label'], axis=1).to_numpy(dtype=float)
test_feature = test_data.drop(['sn', 'fault_time'], axis=1).to_numpy(dtype=float)

# Save (file names match the ones loaded in the model step)
np.savetxt('./lib/train_feature.csv', train_feature)
np.savetxt('./lib/train_label.csv', train_label)
np.savetxt('./lib/test_featureone.csv', test_feature)
```
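The `MyThread` / `MyThread2` classes plus manual slicing can be written more compactly with `concurrent.futures.ThreadPoolExecutor`. The sketch below works on plain lists. With DataFrames, you would slice with `df.iloc[...]` and join the results with `pd.concat`. Like the original threads, it is still limited by the GIL. For CPU-heavy pandas work, a `ProcessPoolExecutor` or a vectorized rewrite may help more. `split_into_chunks` and `parallel_map_chunks` are hypothetical helper names.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n):
    """Split a list into at most n contiguous chunks of nearly equal size."""
    if not items:
        return []
    size = -(-len(items) // n)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def parallel_map_chunks(func, items, n_workers=4):
    """Apply func to each chunk in a thread pool and concatenate the results in order."""
    chunks = split_into_chunks(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(func, chunks))  # map() returns results in chunk order
    return [x for part in results for x in part]


doubled = parallel_map_chunks(lambda chunk: [x * 2 for x in chunk], list(range(10)))
print(doubled)
```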

Model Training

LightGBM is used as the model. The tuning runs are logged with neptune.ai, and GridSearchCV is used for the parameter search.

```python
import os

import numpy as np
import pandas as pd
import lightgbm as lgb
import neptune.new as neptune  # neptune-client 0.x; newer releases use `import neptune` and neptune.init_run
from sklearn.metrics import f1_score
from sklearn.model_selection import KFold, StratifiedKFold

# Read the submission rows (same order as the test features)
submit = pd.read_csv('./data/submit/preliminary_submit_dataset_a.csv')
submit.sort_values(by=['sn', 'fault_time'], inplace=True)
submit.reset_index(drop=True, inplace=True)

# Load the training data. Other experiments used files with the same pattern,
# train_feature_<tag>.csv / train_label_<tag>.csv / test_feature_<tag>.csv, for the tags
# sm_alla, sm_onel, emb_alla, emb_one and sm_onel_h (train files only),
# as well as train_featureone7.csv / train_labelone7.csv / test_featureone7.csv.
train_feature = np.loadtxt('./lib/train_feature.csv')
train_label = np.loadtxt('./lib/train_label.csv')
test_feature = np.loadtxt('./lib/test_featureone.csv')


def f1__score(y_true, y_pred):
    return f1_score(y_true, y_pred, average="macro")


def feval_metrics1(y_pred, y_true):
    # y_pred holds the 4 class probabilities per row; take the most likely class
    y_pred = np.argmax(y_pred, axis=1)
    return f1__score(y_true, y_pred)


# Parameters
params = {
    'num_class': 4,
    'objective': 'multiclass',
    'is_unbalance': True,  # note: LightGBM only uses this for binary / multiclassova objectives
    'learning_rate': 0.001,
    # 'num_leaves': 28,
    # 'max_depth': 5,
    # 'min_data_in_leaf': 50,
    # 'bagging_fraction': 0.6,
    # 'feature_fraction': 0.9,
    'subsample': 0.8,      # note: bagging only takes effect when bagging_freq > 0
    'lambda_l1': 0.1,
    'lambda_l2': 0.2,
    'n_jobs': -1,          # 'nthread' is an alias of the same parameter, so it is not repeated
    'seed': 2022,
}

f1scores = []


def lgm_model(lgb, train_x, train_y, x_submit):
    folds = 10
    seed = 2022
    kf = KFold(n_splits=folds, shuffle=True, random_state=seed)
    # Test-set output: one row of 4 class probabilities per sample, summed over the folds
    submit_proba = np.zeros((x_submit.shape[0], 4))
    all_submit = []
    # Cross-validation
    for i, (train_index, valid_index) in enumerate(kf.split(train_x, train_y)):
        print('*' * 36, i + 1, '*' * 36)
        trn_x, trn_y = train_x[train_index], train_y[train_index]
        val_x, val_y = train_x[valid_index], train_y[valid_index]
        train_data = lgb.Dataset(trn_x, label=trn_y)
        valid_data = lgb.Dataset(val_x, label=val_y)
        # LightGBM 4 removed early_stopping_rounds / verbose_eval from lgb.train; use callbacks instead
        lgbmodel = lgb.train(
            dict(params),
            train_set=train_data,
            valid_sets=[valid_data],
            valid_names=['valid'],
            num_boost_round=15000,
            callbacks=[lgb.early_stopping(200), lgb.log_evaluation(100)],
        )
        val_pred = lgbmodel.predict(val_x, num_iteration=lgbmodel.best_iteration)
        submit_pred = lgbmodel.predict(x_submit, num_iteration=lgbmodel.best_iteration)
        all_submit.append(submit_pred)
        submit_proba += submit_pred
        score = feval_metrics1(val_pred, val_y)
        run["valid/f1"].log(score)
        f1scores.append(score)
    print(f1scores)
    print('mean', np.mean(f1scores))
    return submit_proba, all_submit, np.mean(f1scores)


# Initialization
run = neptune.init(
    project="zsaisai/lightgbm01",
    api_token=os.getenv("NEPTUNE_API_TOKEN"),  # your credentials, read from the environment
)

# Train the model
test_submit, all_submit, f1s = lgm_model(lgb, train_feature, train_label, test_feature)

# Log the parameters and scores
run["parameters"] = params
run["sys/tags"].add(["tuning"])
run["max/f1"].log(max(f1scores))
run["avg/avg_f1"].log(f1s)
run.stop()

# Average the 10 fold models (ensemble) and pick the most likely class
s = test_submit / 10
result = np.argmax(s, axis=1)
submit['label'] = result
submit.to_csv('./test_1222.csv', index=False)
```
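The final step is soft voting: average the class probabilities of the fold models, then take the most likely class per row. Dividing by the number of folds does not change the argmax, so `test_submit / 10` only rescales the scores. The sketch below uses pure Python on nested lists with made-up probabilities. `average_fold_predictions` is a hypothetical helper.

```python
def average_fold_predictions(fold_probas):
    """Average per-fold class-probability matrices and pick the most likely class per row."""
    n_folds = len(fold_probas)
    n_rows, n_classes = len(fold_probas[0]), len(fold_probas[0][0])
    avg = [[sum(fold[r][c] for fold in fold_probas) / n_folds for c in range(n_classes)]
           for r in range(n_rows)]
    labels = [max(range(n_classes), key=row.__getitem__) for row in avg]
    return avg, labels


fold1 = [[0.7, 0.1, 0.1, 0.1], [0.2, 0.5, 0.2, 0.1]]
fold2 = [[0.3, 0.4, 0.2, 0.1], [0.1, 0.2, 0.6, 0.1]]
avg, labels = average_fold_predictions([fold1, fold2])
print(labels)  # [0, 2]
```

In the second row, neither fold alone predicts class 2 with the largest margin, yet it wins after averaging. This is why averaging probabilities can differ from a majority vote over per-fold labels.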

Hyperparameter Tuning with GridSearchCV

```python
import numpy as np
import pandas as pd
import lightgbm as lgb
from sklearn.metrics import f1_score
from sklearn.model_selection import GridSearchCV

train_feature = np.loadtxt('./lib/train_feature_sm_filter_label.csv')
train_label = np.loadtxt('./lib/train_label_sm_filter_label.csv')
test_feature = np.loadtxt('./lib/test_featureone_sm_filter_label.csv')
# Variant: stack embedding / neural-network outputs next to the template-count features
# emb_train_feature = np.loadtxt('./lib/train_feature_emb_filter_label.csv')
# emb_train_label = np.loadtxt('./lib/train_label_emb_filter_label.csv')
# emb_test_feature = np.loadtxt('./lib/test_feature_em_filter_label.csv')
# nn_out = np.loadtxt('./lib/train_feature_emb_out.csv')
# nn_test_y = np.loadtxt('./lib/test_feature_emb_out.csv')
# train_feature = np.hstack((nn_out, mb_train_feature))
# test_feature = np.hstack((nn_test_y, mb_test_feature))

# Read the submission rows
submit = pd.read_csv('./data/submit/preliminary_submit_dataset_a.csv')
submit.sort_values(by=['sn', 'fault_time'], inplace=True)
submit.reset_index(drop=True, inplace=True)


def f1__score(y_true, y_pred):
    return f1_score(y_true, y_pred, average="macro")


def feval_metrics(y_pred, y_true):
    y_pred = np.argmax(y_pred, axis=1)  # 4 classes
    return f1__score(y_true, y_pred)


# Note: without `scoring`, GridSearchCV ranks candidates by accuracy;
# scoring='f1_macro' would match the competition metric more closely.
# Note: bagging_fraction has no effect unless bagging_freq > 0, which is not set here.

# Step 1: coarse search over the tree shape
lgbmodel = lgb.LGBMClassifier(
    learning_rate=0.1, num_leaves=50, n_estimators=43, max_depth=6,
    bagging_fraction=0.8, feature_fraction=0.8,
    boosting_type='gbdt', is_unbalance=True, n_jobs=-1,
    num_class=4, objective='multiclass', seed=2022)
params_test1 = {'max_depth': range(3, 9, 2), 'num_leaves': range(50, 170, 30)}
gsearch1 = GridSearchCV(estimator=lgbmodel, param_grid=params_test1, cv=5, verbose=1, n_jobs=-1)
gsearch1.fit(train_feature, train_label)
print(gsearch1.best_params_, gsearch1.best_score_)

# Step 2: fine search. A tree of depth d has at most 2**d leaves,
# so with max_depth=3 every num_leaves >= 8 behaves the same.
params_test2 = {'max_depth': [3, 4, 5], 'num_leaves': [42, 45, 50, 39, 36]}
gsearch1 = GridSearchCV(estimator=lgbmodel, param_grid=params_test2, cv=5, verbose=1, n_jobs=-1)
gsearch1.fit(train_feature, train_label)
print(gsearch1.best_params_, gsearch1.best_score_)

# Step 3: fix the tree shape chosen above (num_leaves=42, max_depth=3) and tune min_child_*
lgbmodel = lgb.LGBMClassifier(
    learning_rate=0.1, num_leaves=42, n_estimators=43, max_depth=3,
    bagging_fraction=0.8, feature_fraction=0.8,
    boosting_type='gbdt', is_unbalance=True, n_jobs=-1,
    num_class=4, objective='multiclass', seed=2022)
params_test3 = {'min_child_samples': [18, 17, 16, 21, 22], 'min_child_weight': [0.001, 0.002]}
gsearch1 = GridSearchCV(estimator=lgbmodel, param_grid=params_test3, cv=5, verbose=1, n_jobs=-1)
gsearch1.fit(train_feature, train_label)
print(gsearch1.best_params_, gsearch1.best_score_)

# Step 4: fix min_child_samples=18, min_child_weight=0.001 and tune the sampling fractions
lgbmodel = lgb.LGBMClassifier(
    learning_rate=0.1, n_estimators=43, num_leaves=42, max_depth=3,
    min_child_samples=18, min_child_weight=0.001,
    bagging_fraction=0.8, feature_fraction=0.8,
    boosting_type='gbdt', is_unbalance=True, n_jobs=-1,
    num_class=4, objective='multiclass', seed=2022)
params_test4 = {'feature_fraction': [0.5, 0.6, 0.7, 0.8, 0.9],
                'bagging_fraction': [0.6, 0.7, 0.8, 0.9, 1.0]}
gsearch1 = GridSearchCV(estimator=lgbmodel, param_grid=params_test4, cv=5, verbose=1, n_jobs=-1)
gsearch1.fit(train_feature, train_label)
print(gsearch1.best_params_, gsearch1.best_score_)

# Step 5: fix bagging_fraction=0.6, feature_fraction=0.9 and refine feature_fraction
lgbmodel = lgb.LGBMClassifier(
    learning_rate=0.1, n_estimators=43, num_leaves=42, max_depth=3,
    min_child_samples=18, min_child_weight=0.001,
    bagging_fraction=0.6, feature_fraction=0.9,
    boosting_type='gbdt', is_unbalance=True, n_jobs=-1,
    num_class=4, objective='multiclass', seed=2022)
params_test5 = {'feature_fraction': [0.82, 0.85, 0.88, 0.9, 0.92, 0.95, 0.98]}
gsearch1 = GridSearchCV(estimator=lgbmodel, param_grid=params_test5, cv=5, verbose=1, n_jobs=-1)
gsearch1.fit(train_feature, train_label)
print(gsearch1.best_params_, gsearch1.best_score_)

# Step 6: L1 / L2 regularization
params_test6 = {'reg_alpha': [0, 0.001, 0.01, 0.03, 0.08, 0.3, 0.5],
                'reg_lambda': [0, 0.001, 0.01, 0.03, 0.08, 0.3, 0.5]}
gsearch1 = GridSearchCV(estimator=lgbmodel, param_grid=params_test6, cv=5, verbose=1, n_jobs=-1)
gsearch1.fit(train_feature, train_label)
print(gsearch1.best_params_, gsearch1.best_score_)
```
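The depth and leaf grids interact: a binary tree of depth d has at most 2**d leaves, so `num_leaves` above that cap changes nothing. The sketch below counts how many distinct tree-shape settings a grid really explores. It assumes LightGBM's convention that `max_depth <= 0` means no depth limit. It only looks at the leaf cap; other parameters can still make two candidates differ. The helper names are hypothetical.

```python
from itertools import product


def effective_max_leaves(num_leaves, max_depth):
    """Upper bound on the leaves of one tree: a depth-d binary tree has at most 2**d leaves."""
    if max_depth <= 0:  # LightGBM treats max_depth <= 0 as "no limit"
        return num_leaves
    return min(num_leaves, 2 ** max_depth)


def distinct_leaf_settings(max_depths, num_leaves_values):
    """The (max_depth, effective leaf cap) pairs a grid actually explores."""
    return sorted({(d, effective_max_leaves(n, d)) for d, n in product(max_depths, num_leaves_values)})


print(distinct_leaf_settings([3, 4, 5], [42, 45, 50, 39, 36]))  # [(3, 8), (4, 16), (5, 32)]
```

For `params_test2`, the 15 grid points collapse to 3 distinct shapes. For `params_test1`, the 12 points collapse to 6. A grid over `num_leaves` is therefore only informative when `max_depth` is large enough (or unlimited).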

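Approach 2

Train a Doc2Vec embedding on the unlabeled corpus plus the training logs, then use the resulting model to convert each training sample into a vector. (Caveat: different machines may log different numbers of entries, so logs of similar faults may map to less similar vectors.)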

```python
import numpy as np
import pandas as pd
from nltk.tokenize import word_tokenize  # run nltk.download('punkt') once beforehand
from gensim.models.doc2vec import Doc2Vec, TaggedDocument
from tqdm.notebook import tqdm

WINDOW = 7  # logs per sample when building features


def read_train_data():
    # Read the SEL logs (training + test), then concatenate, sort and reset the index
    data_train = pd.read_csv('./data/preliminary_sel_log_dataset.csv')
    data_test = pd.read_csv('./data/preliminary_sel_log_dataset_a.csv')
    sel_data = pd.concat([data_train, data_test])
    sel_data.sort_values(by=['sn', 'time'], inplace=True)
    sel_data.reset_index(drop=True, inplace=True)
    return sel_data


def get_tokenized_sent(sel_data):
    # Take the last 10 logs of each server, join them into one document and tokenize it
    sel_data_x = sel_data.groupby('sn').tail(10).copy()
    sel_data_x['new'] = sel_data_x.groupby(['sn'])['msg'].transform(lambda x: '.'.join(x))
    sel_data_x.drop_duplicates(subset=['sn'], keep='first', inplace=True)
    tail_msg_list = sel_data_x['new'].tolist()
    return [word_tokenize(s.lower()) for s in tail_msg_list]


def read_pretrain_data():
    # Read all SEL logs: training, test, and the unlabeled pre-training corpus
    data_train = pd.read_csv('./data/preliminary_sel_log_dataset.csv')
    data_train1 = pd.read_csv('./data/preliminary_sel_log_dataset_a.csv')
    data_pretrain = pd.read_csv('./data/pretrain/additional_sel_log_dataset.csv')
    # Concatenate, sort and reset the index
    sel_data = pd.concat([data_train, data_train1, data_pretrain])
    sel_data.sort_values(by=['sn', 'time'], inplace=True)
    sel_data.reset_index(drop=True, inplace=True)
    return sel_data


def get_label_data():
    # Original labels from the two official label files
    df_train_label = pd.read_csv('./data/preliminary_train_label_dataset.csv')
    df_train_label_s = pd.read_csv('./data/preliminary_train_label_dataset_s.csv')
    df_train_label = pd.concat([df_train_label, df_train_label_s])
    return df_train_label.drop_duplicates(['sn', 'fault_time', 'label'])


pre_sel_data = read_pretrain_data()  # pre-training corpus
sel_data = read_train_data()         # logs used to build the samples
label = get_label_data()

# Tokenize and train the embedding model (Doc2Vec); epochs=15 was also tried
tokenized_sent = get_tokenized_sent(pre_sel_data)
tagged_data = [TaggedDocument(d, [i]) for i, d in enumerate(tokenized_sent)]
model = Doc2Vec(tagged_data, vector_size=100, window=3, workers=4)


def window_tokens(sn, fault_time):
    # Last WINDOW logs before fault_time, joined with '.' exactly like the training documents
    msgs = sel_data[(sel_data['sn'] == sn) & (sel_data['time'] <= fault_time)].tail(WINDOW)['msg']
    return word_tokenize('.'.join(msgs).lower())


# Training set: one inferred vector per label
label = label.sort_values(by=['sn', 'fault_time']).reset_index(drop=True)
train_feature = np.array([model.infer_vector(window_tokens(r.sn, r.fault_time))
                          for r in tqdm(label.itertuples(), total=len(label))])
train_label = label['label'].values

# Test set (the original joined test logs with '. ', which tokenizes differently from training)
submit = pd.read_csv('./data/submit/preliminary_submit_dataset_a.csv')
submit.sort_values(by=['sn', 'fault_time'], inplace=True)
submit.reset_index(drop=True, inplace=True)
test_feature = np.array([model.infer_vector(window_tokens(r.sn, r.fault_time))
                         for r in tqdm(submit.itertuples(), total=len(submit))])

# Save the data
np.savetxt('./lib/train_feature_emb_alla.csv', train_feature)
np.savetxt('./lib/train_label_emb_alla.csv', train_label)
np.savetxt('./lib/test_feature_emb_alla.csv', test_feature)
```
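One way to check the caveat at the start of this approach (vectors of similar faults drifting apart) is to compare cosine similarities of the inferred vectors. For example, compare the average similarity within one label against the similarity across labels. The pure-Python sketch below uses toy vectors in place of `model.infer_vector(...)` outputs. The helper names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def mean_pairwise_similarity(vectors):
    """Average cosine similarity over all pairs, e.g. the vectors of samples sharing one label."""
    if len(vectors) < 2:
        raise ValueError("need at least two vectors")
    pairs = [(i, j) for i in range(len(vectors)) for j in range(i + 1, len(vectors))]
    return sum(cosine_similarity(vectors[i], vectors[j]) for i, j in pairs) / len(pairs)


# Toy vectors standing in for inferred document vectors
print(mean_pairwise_similarity([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]))
```

If the within-label average is not clearly higher than the across-label average, the embedding is unlikely to separate the fault classes well.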

Bi-LSTM + Attention

```python
import math

import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.utils.data as Data
from tqdm.notebook import tqdm

SEQ_LEN, N_FEATURES = 7, 207  # 7 log entries per sample, 207 features per entry

# Data preprocessing: read the per-entry (not summed) features
train_feature = np.loadtxt('../lib/train_feature_sm_filter_label_lstm.csv')
train_label = np.loadtxt('../lib/train_label_sm_filter_label_lstm.csv')
test_feature = np.loadtxt('../lib/test_featureone_sm_filter_label_lstm.csv')
# train_feature1 = np.loadtxt('../lib/train_feature_sm_filter_label_lstm_nosm.csv')

# Convert to tensors
x = torch.from_numpy(train_feature).type(torch.FloatTensor)
y = torch.from_numpy(train_label).type(torch.LongTensor)
x_ = x.reshape(-1, SEQ_LEN, N_FEATURES)
# `y_` is undefined in the original code; assuming the label file holds one label per
# log row (7 rows per sample), keep the first label of each sample
y_ = y.reshape(-1, SEQ_LEN)
X_train, y_train = x_, y_[:, 0]
# Hold-out validation split (disabled):
# X_train, X_test, y_train, y_test = train_test_split(x_, y_[:, 0], test_size=0.01, random_state=1)
print(X_train.shape, y_train.shape)

# Training set: wrap the tensors in a Dataset and put it into a DataLoader
torch_dataset = Data.TensorDataset(X_train, y_train)
trainloader = Data.DataLoader(
    dataset=torch_dataset,
    batch_size=32,    # batch size (1 would be online learning)
    shuffle=False,    # whether to reshuffle every epoch (default False)
    drop_last=True,   # drop the last incomplete batch
    num_workers=0,    # load data in the main process
)
# A validation loader over (X_test, y_test) with batch_size=y_test.shape[0] and shuffle=True is disabled.


# Network. As configured, the LSTM is unidirectional and the attention path is commented out.
class lstm(nn.Module):
    def __init__(self, input_size, hidden_size, num_layers, num_classes):
        super().__init__()
        # self.emb = nn.Embedding(2, 8)
        self.lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size, num_layers=num_layers,
                            dropout=0.5, batch_first=True, bias=True, bidirectional=False)
        self.drop = nn.Dropout(0.4)
        self.fc1 = nn.Linear(hidden_size * SEQ_LEN, 512)  # 64 * 7 = 448 inputs
        self.fc2 = nn.Linear(512, 256)
        self.fc3 = nn.Linear(256, 128)
        self.fc4 = nn.Linear(128, num_classes)

    # Soft attention (key = value = x)
    def attention_net(self, x, query, mask=None):
        d_k = query.size(-1)  # dimension of the query
        scores = torch.matmul(query, x.transpose(1, 2)) / math.sqrt(d_k)
        alpha_n = F.softmax(scores, dim=-1)        # normalize the scores over the last dimension
        context = torch.matmul(alpha_n, x).sum(1)  # sum of the attention-weighted x
        return context, alpha_n

    def forward(self, input):
        out, (h_n, c_n) = self.lstm(input)
        out = F.relu(out)
        # Attention variant:
        # query = self.drop(out)
        # attn_output, alpha_n = self.attention_net(out, query)
        out = out.reshape(out.size(0), -1)  # flatten (batch, 7, 64) -> (batch, 448)
        out = F.relu(self.drop(self.fc1(out)))
        out = F.relu(self.drop(self.fc2(out)))
        out = F.relu(self.fc3(out))
        return self.fc4(out)


# Model arguments: (input_size, hidden_size, num_layers, num_classes)
model = lstm(N_FEATURES, 64, 5, 4)
# model = lstm(8, 512, 3, 4)
print(model)

# Use the GPU if available
if torch.cuda.is_available():
    model.cuda()
    print("GPU")
else:
    print("CPU")

# Hyperparameters
epochs = 500
learn_rate = 0.0005
momentum = 0.5  # only used by the SGD alternative below

# Loss function
loss_fn = torch.nn.CrossEntropyLoss()
# loss_fn = torch.nn.MSELoss(reduction='sum')
# Optimizer
optimizer = torch.optim.Adam(model.parameters(), lr=learn_rate)
# optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate, momentum=momentum)

# Training (in Jupyter, start this cell with %%time to time it)
model.train()
loss_ = []
for epoch in tqdm(range(epochs)):
    for i, (batch_x, batch_y) in enumerate(trainloader):
        if torch.cuda.is_available():  # move the batch to the GPU
            batch_x, batch_y = batch_x.cuda(), batch_y.cuda()
        out = model(batch_x)          # forward pass
        loss = loss_fn(out, batch_y)  # compute the loss
        optimizer.zero_grad()         # reset the gradients
        loss.backward()               # backward pass
        optimizer.step()              # update the parameters
        loss_.append(loss.item())
        if i % 20 == 0:               # report the loss
            print("Train Epoch: {}, Iteration {}, Loss: {}".format(epoch + 1, i, loss.item()))

# Plot the training loss
import matplotlib.pyplot as plt

steps = list(range(len(loss_)))
plt.figure(figsize=(8, 5))
plt.plot(steps, loss_, color='red', linewidth=2, label='LOSS')
plt.rcParams['font.sans-serif'] = ['SimHei']  # CJK-capable font (only needed for Chinese labels)
plt.legend(fontsize=15)
plt.ylabel("LOSS", fontsize=20)
plt.xlabel("EPOCH-STEP", fontsize=20)
plt.ylim(0, 3)
plt.title('LOSS', fontsize=20)
plt.show()

# Predict the test set
test_x = torch.from_numpy(test_feature).type(torch.FloatTensor).reshape(-1, SEQ_LEN, N_FEATURES)
if torch.cuda.is_available():
    test_x = test_x.cuda()  # the input must be on the same device as the model
model.eval()
with torch.no_grad():
    out = model(test_x)
pre = out.argmax(dim=1).cpu().numpy()  # argmax of the logits equals argmax of the softmax
print("ok")

# Read the submission rows and write the predictions
submit = pd.read_csv('../data/submit/preliminary_submit_dataset_a.csv')
submit.sort_values(by=['sn', 'fault_time'], inplace=True)
submit.reset_index(drop=True, inplace=True)
submit['label'] = pre
submit.to_csv('./test_22.csv', index=False)
```
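`attention_net` above computes scaled dot-product attention with key = value = the LSTM outputs. For a single query vector, this reduces to the pure-Python sketch below (toy numbers, no batching). The original applies it to every time step as a query and then sums the resulting context vectors. `softmax` and `attention_pool` are hypothetical helper names.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(query, keys, values):
    """Scaled dot-product attention for one query: softmax(q.k / sqrt(d)) weighted sum of values."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return context, weights


steps = [[1.0, 0.0], [0.0, 1.0]]  # two time steps, used as both keys and values
context, weights = attention_pool([1.0, 0.0], steps, steps)
print(weights, context)
```

The time step that aligns better with the query receives the larger weight, so the pooled context leans toward it instead of treating all 7 log entries equally.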

Other

neptune.ai visualization platform

```python
import os

import neptune.new as neptune  # neptune-client 0.x; newer releases use `import neptune` and neptune.init_run

# Initialization
run = neptune.init(
    project="zsaisai/lightgbm01",
    api_token=os.getenv("NEPTUNE_API_TOKEN"),  # your credentials, read from the environment
)

# Train the model
test_submit, all_submit, f1s = lgm_model(lgb, train_feature, train_label, test_feature)

# Log the parameters and scores
run["parameters"] = params
run["sys/tags"].add(["tuning"])
run["max/f1"].log(max(f1scores))
run["avg/avg_f1"].log(f1s)
run.stop()
```