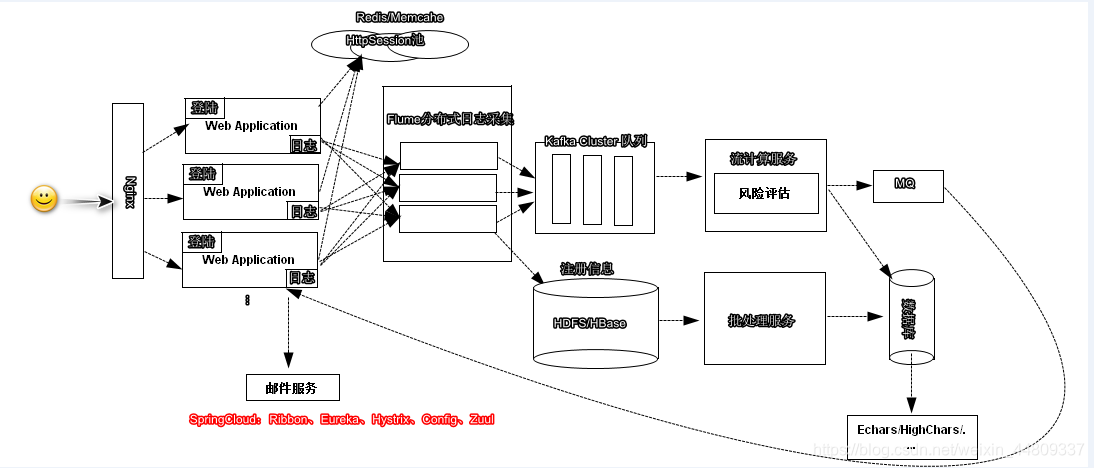

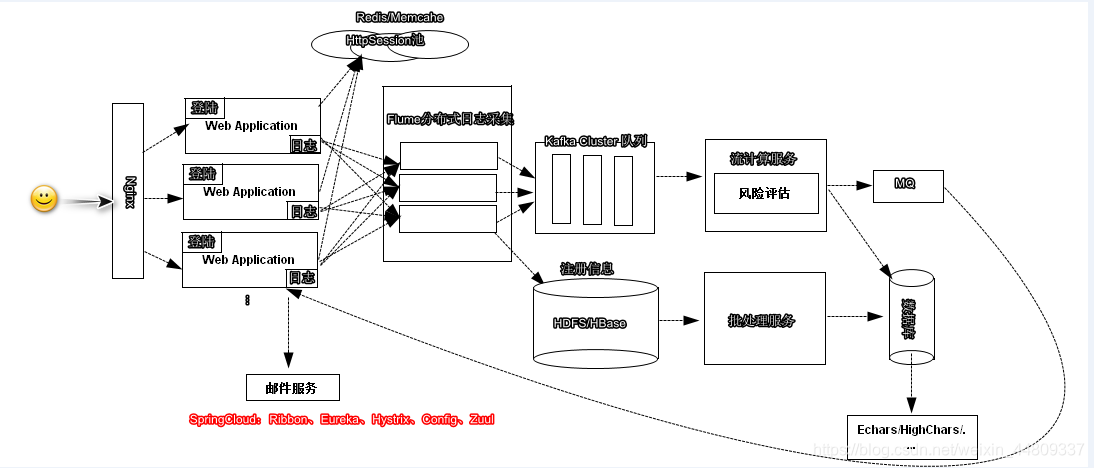

1. Flume Distributed Log Collection

1.1 Introduction to Flume

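- Flume is a distributed, reliable and available service for collecting, aggregating and moving large amounts of log data.

- Flume has a simple, flexible architecture based on streaming data flows. It is robust and fault tolerant, with reliability mechanisms and many failover and recovery mechanisms.

- This architecture can be used to feed log streams into real-time online analysis.

- Flume lets you plug custom data senders into a logging system to collect data. It can also apply simple processing to the data and write it to many kinds of (customizable) data receivers.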

1.2 Common Architectures

1.3 Setting Up the Flume Runtime Environment

- Java Runtime Environment - Java 1.8 or later

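Extract the Flume 1.9.0 binary distribution and create an agent configuration file:

```shell
[root@CentOS ~]# tar -zxf apache-flume-1.9.0-bin.tar.gz -C /usr/
[root@CentOS ~]# cd /usr/apache-flume-1.9.0-bin
[root@CentOS apache-flume-1.9.0-bin]# vi conf/demo01.properties
```

```properties
# Declare the components
a1.sources = s1
a1.sinks = sk1
a1.channels = c1

# Configure the components
a1.sources.s1.type = avro
a1.sources.s1.bind = 192.168.134.61
a1.sources.s1.port = 44444

a1.channels.c1.type = memory

a1.sinks.sk1.type = file_roll
a1.sinks.sk1.sink.directory = /root/file_roll
# 0 disables rolling: all events are written to a single file
a1.sinks.sk1.sink.rollInterval = 0

# Wire the components together
a1.sources.s1.channels = c1
a1.sinks.sk1.channel = c1
```

Start the agent. The value of `--name` must match the `a1` prefix used in the file:

```shell
[root@CentOS apache-flume-1.9.0-bin]# ./bin/flume-ng agent --conf conf/ --conf-file conf/demo01.properties --name a1
```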
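The last two lines of the configuration connect the components:

* `a1.sources.s1.channels = c1` uses the plural key, because a source can feed several channels.
* `a1.sinks.sk1.channel = c1` uses the singular key, because a sink drains exactly one channel.

The sketch below loads such a file with `java.util.Properties` and reports references to undeclared channels. It is for illustration only and uses just the standard library. `FlumeWiringCheck` is a hypothetical helper, not part of Flume. The agent performs its own, more thorough validation at startup.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;

public class FlumeWiringCheck {

    /** Splits a space-separated component list such as "c1 c2" into a set of names. */
    static Set<String> names(Properties p, String key) {
        Set<String> result = new HashSet<>();
        String value = p.getProperty(key, "").trim();
        if (!value.isEmpty()) {
            result.addAll(Arrays.asList(value.split("\\s+")));
        }
        return result;
    }

    /** Returns wiring problems for the given agent; an empty list means the wiring looks consistent. */
    static List<String> check(Properties p, String agent) {
        List<String> problems = new ArrayList<>();
        Set<String> channels = names(p, agent + ".channels");
        // A source may feed several channels: <agent>.sources.<name>.channels
        for (String source : names(p, agent + ".sources")) {
            Set<String> used = names(p, agent + ".sources." + source + ".channels");
            if (used.isEmpty()) {
                problems.add("source " + source + " has no channels");
            }
            for (String c : used) {
                if (!channels.contains(c)) {
                    problems.add("source " + source + " uses undeclared channel " + c);
                }
            }
        }
        // A sink reads from exactly one channel: <agent>.sinks.<name>.channel
        for (String sink : names(p, agent + ".sinks")) {
            String c = p.getProperty(agent + ".sinks." + sink + ".channel", "").trim();
            if (!channels.contains(c)) {
                problems.add("sink " + sink + " uses undeclared channel '" + c + "'");
            }
        }
        return problems;
    }

    public static void main(String[] args) throws IOException {
        String conf = String.join("\n",
                "a1.sources = s1", "a1.sinks = sk1", "a1.channels = c1",
                "a1.sources.s1.channels = c1", "a1.sinks.sk1.channel = c1");
        Properties p = new Properties();
        p.load(new StringReader(conf));
        System.out.println(check(p, "a1")); // prints [] because the wiring is consistent
    }
}
```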

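2. Basic Usage of the Avro Source

- An Avro Sink can write its output directly into an Avro Source. This setup is typically used when Flume collects local log files, with the architecture shown in the figure below. In this case the application server generally has to run on the same physical host as the agent (server-side log collection).

- Alternatively, the client calls the SDK that Flume exposes and sends data directly to the Avro Source (for example, from mobile clients).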

Maven dependency:

```xml
<dependency>
    <groupId>org.apache.flume</groupId>
    <artifactId>flume-ng-sdk</artifactId>
    <version>1.9.0</version>
</dependency>
```

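```java
import java.nio.charset.StandardCharsets;
import java.util.Properties;

import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.api.RpcClient;
import org.apache.flume.api.RpcClientFactory;
import org.apache.flume.event.EventBuilder;

public class AvroClientDemo {
    public static void main(String[] args) throws EventDeliveryException {
        Properties props = new Properties();
        // Load-balancing client over three Avro Source endpoints (all the same agent in this demo)
        props.put("client.type", "default_loadbalance");
        props.put("hosts", "h1 h2 h3");
        props.put("hosts.h1", "192.168.111.133:44444");
        props.put("hosts.h2", "192.168.111.133:44444");
        props.put("hosts.h3", "192.168.111.133:44444");
        props.put("host-selector", "random"); // or round_robin

        RpcClient client = RpcClientFactory.getInstance(props);
        try {
            Event event = EventBuilder.withBody("1 zhangsan true 28", StandardCharsets.UTF_8);
            client.append(event);
        } finally {
            client.close(); // release the client's connections and threads
        }
    }
}
```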
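The client properties follow a simple alias scheme:

* `hosts` is a space-separated list of aliases.
* Each alias `hN` has a `hosts.hN` entry holding `host:port`.

The stdlib-only sketch below resolves those entries and then picks one endpoint at random. This is roughly what `host-selector = random` means for each `append`. It is a simplified model and `HostListSketch` is hypothetical. The real load-balancing client also moves on to the other hosts when a send fails. Because all three aliases point to the same agent in the example above, the balancing there is only nominal.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;
import java.util.Random;

public class HostListSketch {

    /** Resolves the space-separated "hosts" aliases to host:port addresses (no DNS lookup). */
    static List<InetSocketAddress> resolveHosts(Properties props) {
        List<InetSocketAddress> result = new ArrayList<>();
        for (String alias : props.getProperty("hosts", "").trim().split("\\s+")) {
            if (alias.isEmpty()) {
                continue; // "hosts" was empty
            }
            String hostAndPort = props.getProperty("hosts." + alias);
            if (hostAndPort == null) {
                throw new IllegalArgumentException("missing hosts." + alias);
            }
            int colon = hostAndPort.lastIndexOf(':');
            result.add(InetSocketAddress.createUnresolved(
                    hostAndPort.substring(0, colon),
                    Integer.parseInt(hostAndPort.substring(colon + 1))));
        }
        return result;
    }

    /** Models host-selector = random: any host may receive the next append. */
    static InetSocketAddress pickRandom(List<InetSocketAddress> hosts, Random rnd) {
        return hosts.get(rnd.nextInt(hosts.size()));
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("hosts", "h1 h2 h3");
        props.put("hosts.h1", "192.168.111.133:44444");
        props.put("hosts.h2", "192.168.111.133:44444");
        props.put("hosts.h3", "192.168.111.133:44444");
        List<InetSocketAddress> hosts = resolveHosts(props);
        Random rnd = new Random();
        for (int i = 0; i < 3; i++) {
            System.out.println("append #" + i + " -> " + pickRandom(hosts, rnd));
        }
    }
}
```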

3. Configuring Avro Source | Memory Channel | Kafka Sink

Create the configuration file:

```shell
[root@CentOS apache-flume-1.9.0-bin]# vi conf/demo02.properties
```

```properties
# Declare the components
a1.sources = s1
a1.sinks = sk1
a1.channels = c1

# Configure the components
a1.sources.s1.type = avro
a1.sources.s1.bind = 192.168.111.132
a1.sources.s1.port = 44444

a1.channels.c1.type = memory

a1.sinks.sk1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.sk1.kafka.bootstrap.servers = 192.168.111.132:9092
a1.sinks.sk1.kafka.topic = topic01
# events per batch; must not exceed the channel's transactionCapacity (memory channel default: 100)
a1.sinks.sk1.flumeBatchSize = 20
# Kafka producer settings are passed through with the kafka.producer. prefix
a1.sinks.sk1.kafka.producer.acks = 1
a1.sinks.sk1.kafka.producer.linger.ms = 1

# Wire the components together
a1.sources.s1.channels = c1
a1.sinks.sk1.channel = c1
```

Start the agent:

```shell
[root@CentOS apache-flume-1.9.0-bin]# ./bin/flume-ng agent --conf conf/ --conf-file conf/demo02.properties --name a1
```
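`flumeBatchSize` caps how many events the Kafka sink takes from the channel in one transaction before sending them. Larger values trade latency for throughput. The arithmetic is sketched below in plain Java. This is a simplification: the real sink also ends a batch early when the channel runs empty. `BatchSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

public class BatchSketch {

    /** Splits pending events into consecutive batches of at most batchSize elements. */
    static <T> List<List<T>> toBatches(List<T> pending, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be > 0");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int from = 0; from < pending.size(); from += batchSize) {
            int to = Math.min(from + batchSize, pending.size());
            batches.add(new ArrayList<>(pending.subList(from, to)));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Integer> events = new ArrayList<>();
        for (int i = 0; i < 45; i++) {
            events.add(i);
        }
        // flumeBatchSize = 20: 45 queued events are sent as batches of 20, 20 and 5
        for (List<Integer> batch : toBatches(events, 20)) {
            System.out.println("batch of " + batch.size());
        }
    }
}
```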

4. Integrating Flume with log4j

Maven dependencies:

```xml
<dependency>
    <groupId>org.apache.flume</groupId>
    <artifactId>flume-ng-sdk</artifactId>
    <version>1.9.0</version>
</dependency>
<dependency>
    <groupId>org.apache.flume.flume-ng-clients</groupId>
    <artifactId>flume-ng-log4jappender</artifactId>
    <version>1.9.0</version>
</dependency>
```

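`log4j.properties` sends everything logged under `com.baizhi` at DEBUG and above to the Flume appender:

```properties
# Load-balancing appender from flume-ng-log4jappender
log4j.appender.flume = org.apache.flume.clients.log4jappender.LoadBalancingLog4jAppender
# Space-separated list of Avro Source endpoints
log4j.appender.flume.Hosts = 192.168.111.132:44444 192.168.111.132:44444 192.168.111.132:44444
log4j.appender.flume.Selector = ROUND_ROBIN
# Maximum time (ms) a failed host is backed off
log4j.appender.flume.MaxBackoff = 30000
log4j.logger.com.baizhi = DEBUG,flume
```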

```java
package com.baizhi; // assumed: the class must live under com.baizhi to match log4j.logger.com.baizhi

import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;

public class TestLog {

    private static final Log log = LogFactory.getLog(TestLog.class);

    public static void main(String[] args) {
        log.debug("Hello!_debug");
        log.info("Hello!_info");
        log.warn("Hello!_warn");
        log.error("Hello!_error");
    }
}
```

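Note on the extra threads: the appender's client runs in threads of its own. When the main thread finishes, the program does not exit, because those threads are not daemon threads (and setting them as daemon threads is not applicable here).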
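The note above follows from ordinary Java thread semantics: the JVM exits only after all non-daemon threads have finished. The stdlib-only demonstration below uses a hypothetical worker thread that merely stands in for the appender's background client threads.

In practice, the usual fix is to close the appender before `main` returns rather than to change thread flags. With log4j 1.x this is typically done by calling `org.apache.log4j.LogManager.shutdown()`.

```java
public class DaemonThreadDemo {

    /** Starts a thread that simulates a long-lived background sender. */
    static Thread startWorker(boolean daemon) {
        Thread worker = new Thread(() -> {
            try {
                Thread.sleep(60_000); // pretend to wait for more events to send
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // exit quietly when interrupted
            }
        }, "background-sender");
        worker.setDaemon(daemon); // must be set before start()
        worker.start();
        return worker;
    }

    public static void main(String[] args) {
        Thread worker = startWorker(true);
        System.out.println("main() returns, worker daemon = " + worker.isDaemon());
        // daemon = true:  the JVM exits as soon as main() returns
        // daemon = false: the JVM keeps running until the worker finishes (about 60 s)
    }
}
```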

5. Integrating Flume with Spring Boot and Logback

```xml
<!-- Web support -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<!-- Testing -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-test</artifactId>
    <scope>test</scope>
</dependency>
<!-- Flume SDK, same version as the agent -->
<dependency>
    <groupId>org.apache.flume</groupId>
    <artifactId>flume-ng-sdk</artifactId>
    <version>1.9.0</version>
</dependency>
```

- Add a `logback.xml` to the Spring Boot project (for example, under `src/main/resources`):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration scan="true" scanPeriod="60 seconds" debug="false">

    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>%p %c#%M %d{yyyy-MM-dd HH:mm:ss} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <!-- one file per day, kept for 30 days -->
            <fileNamePattern>logs/userLoginFile-%d{yyyyMMdd}.log</fileNamePattern>
            <maxHistory>30</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>%p %c#%M %d{yyyy-MM-dd HH:mm:ss} %m%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- Root logger: ERROR and above, written to the console -->
    <root level="ERROR">
        <appender-ref ref="STDOUT" />
    </root>

    <!-- additivity="false": events from this logger are not also passed to the parent (root) logger's appenders -->
    <logger name="com.baizhi.tests" level="INFO" additivity="false">
        <appender-ref ref="FILE" />
        <appender-ref ref="STDOUT" />
    </logger>

</configuration>
```

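```java
package com.baizhi.tests; // assumed: must be under com.baizhi.tests to use the logger configured above

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class TestSpringBootLog {
    private static final Logger LOG = LoggerFactory.getLogger(TestSpringBootLog.class);

    public void logSomething() { // hypothetical method; call it from a test or controller
        LOG.info("-----------------------");
    }
}
```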
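In logback, an event goes to the appenders of the logger it was logged on. It then goes to each ancestor's appenders, up to ROOT, unless a logger on the way has `additivity="false"`. The `com.baizhi.tests` logger above also references `STDOUT`. With additivity left at `true`, each of its events would therefore reach `STDOUT` twice, once through the logger and once through root, and console lines would be duplicated.

The logback manual also points out that the level check is made once, against the effective level of the logger the call was made on. Root's `level="ERROR"` does not filter events passed up from child loggers.

The stdlib-only model below covers only the appender walk and leaves out levels. `AdditivitySketch` and `Node` are hypothetical stand-ins, not logback classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class AdditivitySketch {

    /** Hypothetical stand-in for a configured <logger> or <root> element. */
    static final class Node {
        final boolean additive;
        final List<String> appenders;

        Node(boolean additive, String... appenders) {
            this.additive = additive;
            this.appenders = Arrays.asList(appenders);
        }
    }

    /** Collects the appenders an event reaches, from its logger up to ROOT. */
    static List<String> appendersFor(String loggerName, Map<String, Node> configured) {
        List<String> reached = new ArrayList<>();
        String name = loggerName;
        while (true) {
            Node node = configured.get(name);
            if (node != null) {
                reached.addAll(node.appenders);
                if (!node.additive) {
                    break; // additivity="false": ancestors' appenders are skipped
                }
            }
            if (name.equals("ROOT")) {
                break;
            }
            int dot = name.lastIndexOf('.');
            name = dot < 0 ? "ROOT" : name.substring(0, dot); // move to the parent logger
        }
        return reached;
    }

    public static void main(String[] args) {
        Map<String, Node> conf = new HashMap<>();
        conf.put("ROOT", new Node(true, "STDOUT"));
        conf.put("com.baizhi.tests", new Node(false, "FILE", "STDOUT"));
        System.out.println(appendersFor("com.baizhi.tests.TestSpringBootLog", conf)); // [FILE, STDOUT]
        System.out.println(appendersFor("com.example.Other", conf));                  // [STDOUT]
    }
}
```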

6. Writing a Custom Appender

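The appender below forwards each logback event to one or more Flume Avro Sources through the SDK's `RpcClient`. Compared with the original draft:

* the client is created in `start()` and closed in `stop()`, instead of being lazily initialized in a shared static field, which was not thread-safe;
* delivery errors are reported through logback's status system.

```java
import java.nio.charset.StandardCharsets;
import java.util.Properties;

import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.api.RpcClient;
import org.apache.flume.api.RpcClientFactory;
import org.apache.flume.event.EventBuilder;

import ch.qos.logback.classic.spi.ILoggingEvent;
import ch.qos.logback.core.Layout;
import ch.qos.logback.core.UnsynchronizedAppenderBase;

public class BZFlumeLogAppender extends UnsynchronizedAppenderBase<ILoggingEvent> {

    private String flumeAgents;             // "host:port,host:port", set from logback.xml
    protected Layout<ILoggingEvent> layout;
    private volatile RpcClient rpcClient;   // one client per appender instance

    @Override
    public void start() {
        if (flumeAgents == null || flumeAgents.trim().isEmpty()) {
            addError("No flumeAgents configured for appender " + name);
            return;
        }
        rpcClient = buildRpcClient();
        super.start();
    }

    @Override
    public void stop() {
        super.stop();
        RpcClient client = rpcClient;
        rpcClient = null;
        if (client != null) {
            client.close(); // release connections and threads
        }
    }

    @Override
    protected void append(ILoggingEvent eventObject) {
        RpcClient client = rpcClient;
        if (client == null) {
            return; // appender not started or already stopped
        }
        String body = layout != null ? layout.doLayout(eventObject) : eventObject.getFormattedMessage();
        Event event = EventBuilder.withBody(body, StandardCharsets.UTF_8);
        try {
            client.append(event);
        } catch (EventDeliveryException e) {
            addError("Failed to send log event to Flume", e);
        }
    }

    public void setFlumeAgents(String flumeAgents) {
        this.flumeAgents = flumeAgents;
    }

    public void setLayout(Layout<ILoggingEvent> layout) {
        this.layout = layout;
    }

    private RpcClient buildRpcClient() {
        Properties props = new Properties();
        StringBuilder hosts = new StringBuilder();
        int i = 0;
        for (String agent : flumeAgents.trim().split(",")) {
            String[] tokens = agent.trim().split(":");
            String alias = "h" + i++;
            props.put("hosts." + alias, tokens[0] + ":" + tokens[1]);
            hosts.append(alias).append(' ');
        }
        props.put("hosts", hosts.toString().trim());
        if (i > 1) {
            // several agents: balance across them in turn
            props.put("client.type", "default_loadbalance");
            props.put("host-selector", "round_robin");
        }
        props.put("backoff", "true");      // temporarily skip agents that failed
        props.put("maxBackoff", "10000");  // at most 10 s
        return RpcClientFactory.getInstance(props);
    }
}
```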

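Register the appender in `logback.xml`. `flumeAgents` is a comma-separated list of `host:port` pairs:

```xml
<appender name="bz" class="com.*.flume.BZFlumeLogAppender">
    <flumeAgents>192.168.111.132:44444,192.168.111.132:44444</flumeAgents>
    <layout class="ch.qos.logback.classic.PatternLayout">
        <pattern>%p %c#%M %d{yyyy-MM-dd HH:mm:ss} %m</pattern>
    </layout>
</appender>
<!-- then reference it from a logger, e.g. <appender-ref ref="bz" /> -->
```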
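With `backoff = true`, the load-balancing client temporarily stops sending to an agent that failed. `maxBackoff` (10000 ms in `buildRpcClient`) caps how long that pause can grow. The plain-Java model below uses an assumed schedule, starting at 1 s and doubling per consecutive failure, to show how the cap takes effect. The start value and doubling are illustrative assumptions, not Flume's exact formula, and `BackoffSketch` is hypothetical.

```java
public class BackoffSketch {

    /**
     * Assumed model: after n consecutive failures a host is skipped for
     * initialMs * 2^(n-1) milliseconds, capped at maxBackoffMs.
     */
    static long backoffMillis(int consecutiveFailures, long initialMs, long maxBackoffMs) {
        if (consecutiveFailures <= 0) {
            return 0; // healthy host: no backoff
        }
        int shift = Math.min(consecutiveFailures - 1, 30); // keep the shift from overflowing
        return Math.min(initialMs << shift, maxBackoffMs);
    }

    public static void main(String[] args) {
        for (int failures = 1; failures <= 6; failures++) {
            System.out.println(failures + " failure(s) -> skip host for "
                    + backoffMillis(failures, 1000, 10_000) + " ms");
        }
        // 1000, 2000, 4000, 8000, then capped at 10000
    }
}
```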

7. Connecting Flume to HDFS (Static Batch Processing)

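```properties
# Declare the components
a1.sources = s1
a1.sinks = sk1
a1.channels = c1

# Configure the components
# Spooling directory source: ingests files placed in /root/spooldir
a1.sources.s1.type = spooldir
a1.sources.s1.spoolDir = /root/spooldir
# delete each file as soon as it has been fully ingested
a1.sources.s1.deletePolicy = immediate
# only *.log files; the backslash is doubled because Flume parses this file with java.util.Properties
a1.sources.s1.includePattern = ^.*\\.log$

# JDBC channel: events are persisted in an embedded database
a1.channels.c1.type = jdbc

a1.sinks.sk1.type = hdfs
a1.sinks.sk1.hdfs.path = hdfs:/
a1.sinks.sk1.hdfs.filePrefix = events-
a1.sinks.sk1.hdfs.useLocalTimeStamp = true
# 0 for all three: never roll to a new file by time, size or event count
a1.sinks.sk1.hdfs.rollInterval = 0
a1.sinks.sk1.hdfs.rollSize = 0
a1.sinks.sk1.hdfs.rollCount = 0
# write plain text instead of SequenceFiles
a1.sinks.sk1.hdfs.fileType = DataStream

# Wire the components together
a1.sources.s1.channels = c1
a1.sinks.sk1.channel = c1
```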

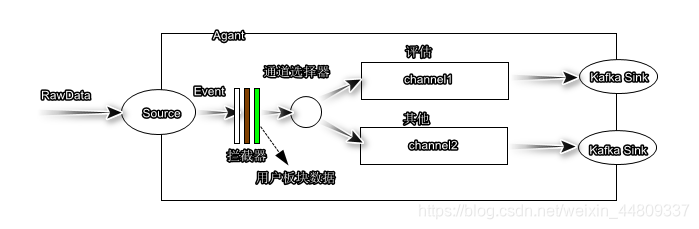

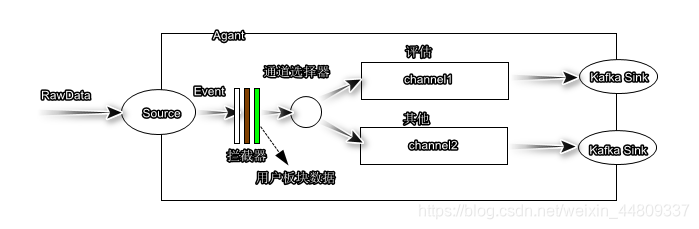
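Flume reads the agent file with `java.util.Properties`, which treats `\` as an escape character:

* A single `\.` in the file is silently reduced to `.` in the regex, so the dot matches any character.
* `\\.` in the file becomes `\.`, a literal dot.

The stdlib-only sketch below parses both spellings the same way and shows which file names each resulting `includePattern` accepts. `IncludePatternCheck` is a hypothetical helper, and the file names are made up.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.regex.Pattern;

public class IncludePatternCheck {

    /** Parses one "includePattern = ..." line with .properties escaping and compiles the value. */
    static Pattern patternFromPropertiesLine(String line) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(line)); // applies .properties escape rules to the value
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return Pattern.compile(p.getProperty("includePattern"));
    }

    public static void main(String[] args) {
        // file text: includePattern = ^.*\.log$   (single backslash)
        Pattern single = patternFromPropertiesLine("includePattern = ^.*\\.log$");
        // file text: includePattern = ^.*\\.log$  (doubled backslash)
        Pattern doubled = patternFromPropertiesLine("includePattern = ^.*\\\\.log$");
        System.out.println(single.pattern() + " | " + doubled.pattern()); // ^.*.log$ | ^.*\.log$
        for (String file : new String[] {"app.log", "applog", "app.txt"}) {
            System.out.println(file + ": single=" + single.matcher(file).matches()
                    + ", doubled=" + doubled.matcher(file).matches());
        }
    }
}
```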

8. Interceptors & Channel Selectors (One Source, Multiple Channels)

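- Splitting the log stream

- Requirement: collect only the user module's log stream. Send records that need evaluation to evaluatetopic and all other user-module records to usertopic.

- Configuration: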

```properties
# Declare the components
a1.sources = s1
a1.sinks = sk1 sk2
a1.channels = c1 c2

# Configure the components
a1.sources.s1.type = avro
a1.sources.s1.bind = 192.168.111.132
a1.sources.s1.port = 44444

# Interceptors (applied in the listed order)
a1.sources.s1.interceptors = i1 i2
# i1: keep only events whose body matches the regex (excludeEvents = false)
a1.sources.s1.interceptors.i1.type = regex_filter
a1.sources.s1.interceptors.i1.regex = .*UserController.*
a1.sources.s1.interceptors.i1.excludeEvents = false
# i2: copy the first capture group into the event header "type"
a1.sources.s1.interceptors.i2.type = regex_extractor
a1.sources.s1.interceptors.i2.regex = .*(EVALUATE|SUCCESS).*
a1.sources.s1.interceptors.i2.serializers = s1
a1.sources.s1.interceptors.i2.serializers.s1.name = type

a1.channels.c1.type = memory
a1.channels.c2.type = memory

a1.sinks.sk1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.sk1.kafka.bootstrap.servers = 192.168.111.132:9092
a1.sinks.sk1.kafka.topic = evaluatetopic
a1.sinks.sk1.flumeBatchSize = 20
a1.sinks.sk1.kafka.producer.acks = 1
a1.sinks.sk1.kafka.producer.linger.ms = 1

a1.sinks.sk2.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.sk2.kafka.bootstrap.servers = 192.168.111.132:9092
a1.sinks.sk2.kafka.topic = usertopic
a1.sinks.sk2.flumeBatchSize = 20
a1.sinks.sk2.kafka.producer.acks = 1
a1.sinks.sk2.kafka.producer.linger.ms = 1

# Channel selector: route events by the "type" header
a1.sources.s1.selector.type = multiplexing
a1.sources.s1.selector.header = type
a1.sources.s1.selector.mapping.EVALUATE = c1
a1.sources.s1.selector.mapping.SUCCESS = c2
# events without a matching header value go to c2
a1.sources.s1.selector.default = c2

# Wire the components together
a1.sources.s1.channels = c1 c2
a1.sinks.sk1.channel = c1
a1.sinks.sk2.channel = c2
```
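To make the routing rules concrete, the plain-Java model below mirrors the intent of this configuration. It does not call Flume; it is a sketch built on the assumptions stated in the code comments. `RoutingSketch` is hypothetical and the sample log lines are made up.

1. `regex_filter` drops bodies that do not mention `UserController`.
2. `regex_extractor` copies `EVALUATE` or `SUCCESS` into the `type` header.
3. The multiplexing selector picks a channel from that header and falls back to `c2` when the header is missing.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class RoutingSketch {
    // i1: regex_filter with excludeEvents = false keeps only matching bodies
    static final Pattern FILTER = Pattern.compile(".*UserController.*");
    // i2: regex_extractor stores capture group 1 in the "type" header
    static final Pattern EXTRACT = Pattern.compile(".*(EVALUATE|SUCCESS).*");
    // multiplexing selector: header value -> channel, plus a default channel
    static final Map<String, String> MAPPING = new HashMap<>();
    static final String DEFAULT_CHANNEL = "c2";

    static {
        MAPPING.put("EVALUATE", "c1");
        MAPPING.put("SUCCESS", "c2");
    }

    /** Returns the channel an event body would be routed to, or empty if i1 drops it. */
    static Optional<String> route(String body) {
        if (!FILTER.matcher(body).find()) {
            return Optional.empty(); // dropped by the filter interceptor
        }
        Map<String, String> headers = new HashMap<>();
        Matcher m = EXTRACT.matcher(body);
        if (m.find()) {
            headers.put("type", m.group(1)); // header added by the extractor
        }
        String channel = MAPPING.get(headers.get("type")); // no header -> null
        return Optional.of(channel != null ? channel : DEFAULT_CHANNEL);
    }

    public static void main(String[] args) {
        String[] bodies = {
            "INFO com.example.UserController#rate EVALUATE score=5",
            "INFO com.example.UserController#login SUCCESS",
            "INFO com.example.UserController#logout",
            "INFO com.example.OrderController#pay SUCCESS"
        };
        for (String body : bodies) {
            System.out.println(route(body).orElse("(dropped)") + " <- " + body);
        }
    }
}
```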

9. Sink Processors (One Channel, Multiple Sinks)

```properties
# Declare the components
a1.sources = s1
a1.sinks = sk1 sk2
a1.channels = c1

# Put sk1 and sk2 into one sink group
a1.sinkgroups = g1
a1.sinkgroups.g1.sinks = sk1 sk2
a1.sinkgroups.g1.processor.type = load_balance
a1.sinkgroups.g1.processor.backoff = true
a1.sinkgroups.g1.processor.selector = round_robin

# Source properties
a1.sources.s1.type = avro
a1.sources.s1.bind = 192.168.111.132
a1.sources.s1.port = 44444

# Sink properties
# flumeBatchSize must not exceed the channel's transactionCapacity (1, see below)
a1.sinks.sk1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.sk1.kafka.bootstrap.servers = 192.168.111.132:9092
a1.sinks.sk1.kafka.topic = evaluatetopic
a1.sinks.sk1.flumeBatchSize = 1
a1.sinks.sk1.kafka.producer.acks = 1
a1.sinks.sk1.kafka.producer.linger.ms = 1

a1.sinks.sk2.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.sk2.kafka.bootstrap.servers = 192.168.111.132:9092
a1.sinks.sk2.kafka.topic = usertopic
a1.sinks.sk2.flumeBatchSize = 1
a1.sinks.sk2.kafka.producer.acks = 1
a1.sinks.sk2.kafka.producer.linger.ms = 1

# Channel properties
a1.channels.c1.type = memory
# at most one event per transaction
a1.channels.c1.transactionCapacity = 1

# Connect the source and both sinks to the channel
a1.sources.s1.channels = c1
a1.sinks.sk1.channel = c1
a1.sinks.sk2.channel = c1
```
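With `processor.selector = round_robin`, the load-balancing processor rotates through the sinks of the group. With `backoff = true`, a sink that failed is skipped for a while. In this demo the two sinks write to different topics, so the rotation should show up as events spread across evaluatetopic and usertopic.

The plain-Java model below shows only the selection order. It is a simplified sketch: the "backed off" set is supplied by the caller rather than computed from failures. `RoundRobinSketch` is hypothetical and not Flume's selector class.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class RoundRobinSketch {
    private final List<String> sinks;
    private int next = 0; // index of the sink to try first on the next call

    RoundRobinSketch(String... sinks) {
        this.sinks = Arrays.asList(sinks);
    }

    /** Picks the next sink in round-robin order, skipping sinks that are currently backed off. */
    String pick(Set<String> backedOff) {
        for (int tried = 0; tried < sinks.size(); tried++) {
            String candidate = sinks.get(next);
            next = (next + 1) % sinks.size();
            if (!backedOff.contains(candidate)) {
                return candidate;
            }
        }
        throw new IllegalStateException("all sinks are backed off");
    }

    public static void main(String[] args) {
        RoundRobinSketch group = new RoundRobinSketch("sk1", "sk2");
        List<String> order = new ArrayList<>();
        for (int i = 0; i < 4; i++) {
            order.add(group.pick(new HashSet<>()));
        }
        System.out.println(order); // [sk1, sk2, sk1, sk2]
        // While sk1 is backed off, every pick falls through to sk2
        Set<String> down = new HashSet<>(Arrays.asList("sk1"));
        System.out.println(group.pick(down) + " " + group.pick(down)); // sk2 sk2
    }
}
```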