What do you want? What are you doing? Are they the same thing?

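I. Purpose

When configuring ETL jobs with Apache DolphinScheduler and DataX, a lot of information ends up hardcoded, such as the MySQL username, password, and IP address.

Example configuration: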

    {
        "job": {
            "content": [{
                "reader": {
                    "name": "mysqlreader",
                    "parameter": {
                        "column": ["id", "name"],
                        "connection": [{
                            "jdbcUrl": ["jdbc:mysql://ip:3306/dbname"],
                            "table": ["user"]
                        }],
                        "password": "password",
                        "splitPk": "id",
                        "username": "username",
                        "where": "id > 1"
                    }
                },
                "writer": {
                    "name": "mysqlwriter",
                    "parameter": {
                        "column": ["id", "name"],
                        "connection": [{
                            "jdbcUrl": "jdbc:mysql://ip:3306/dbname",
                            "table": ["user_1"]
                        }],
                        "password": "password",
                        "username": "username"
                    }
                }
            }],
            "setting": {
                "speed": {
                    "channel": "1"
                }
            }
        }
    }

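Across all business lines there are currently about 800 to 900 DataX jobs. Whenever the connection details of one database change, the several hundred jobs that reference it have to be edited one by one, which is far too painful. So I read through the DolphinScheduler source code to see whether it suggests a way to solve this problem.

Versions:

Apache DolphinScheduler 1.3.1

DataX 3.0

II. Reading and Analyzing the Source Code

1 What happens when a workflow is created

1.1 Click the add button

Drag in a shell node whose content is echo "helloworld", then open the browser developer tools.

A save request shows up. Its URL is the backend endpoint being called.

The URL looks like this: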

http://ip:port/dolphinscheduler/projects/park_cloud_aliyun/process/save

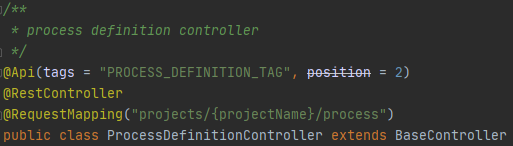

1.2 The request is handled by ProcessDefinitionController.createProcessDefinition

    /**
     * create process definition
     *
     * @param loginUser login user
     * @param projectName project name
     * @param name process definition name
     * @param json process definition json
     * @param description description
     * @param locations locations for nodes
     * @param connects connects for nodes
     * @return create result code
     */
    @ApiOperation(value = "save", notes = "CREATE_PROCESS_DEFINITION_NOTES")
    @ApiImplicitParams({
            @ApiImplicitParam(name = "name", value = "PROCESS_DEFINITION_NAME", required = true, type = "String"),
            @ApiImplicitParam(name = "processDefinitionJson", value = "PROCESS_DEFINITION_JSON", required = true, type = "String"),
            @ApiImplicitParam(name = "locations", value = "PROCESS_DEFINITION_LOCATIONS", required = true, type = "String"),
            @ApiImplicitParam(name = "connects", value = "PROCESS_DEFINITION_CONNECTS", required = true, type = "String"),
            @ApiImplicitParam(name = "description", value = "PROCESS_DEFINITION_DESC", required = false, type = "String"),
    })
    @PostMapping(value = "/save")
    @ResponseStatus(HttpStatus.CREATED)
    @ApiException(CREATE_PROCESS_DEFINITION)
    public Result createProcessDefinition(@ApiIgnore @RequestAttribute(value = Constants.SESSION_USER) User loginUser,
            @ApiParam(name = "projectName", value = "PROJECT_NAME", required = true) @PathVariable String projectName,
            @RequestParam(value = "name", required = true) String name,
            @RequestParam(value = "processDefinitionJson", required = true) String json,
            @RequestParam(value = "locations", required = true) String locations,
            @RequestParam(value = "connects", required = true) String connects,
            @RequestParam(value = "description", required = false) String description) throws JsonProcessingException {
        logger.info("login user {}, create process definition, project name: {}, process definition name: {}, " +
                        "process_definition_json: {}, desc: {} locations:{}, connects:{}",
                loginUser.getUserName(), projectName, name, json, description, locations, connects);
        Map<String, Object> result = processDefinitionService.createProcessDefinition(loginUser, projectName, name, json,
                description, locations, connects);
        return returnDataList(result);
    }

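Logic:

- Validate the request parameters (declared through the @RequestParam annotations)
- Handle exceptions (declared through @ApiException)
- Call ProcessDefinitionService.createProcessDefinition
- Return the response

1.3 ProcessDefinitionService.createProcessDefinition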

    /**
     * create process definition
     *
     * @param loginUser login user
     * @param projectName project name
     * @param name process definition name
     * @param processDefinitionJson process definition json
     * @param desc description
     * @param locations locations for nodes
     * @param connects connects for nodes
     * @return create result code
     * @throws JsonProcessingException JsonProcessingException
     */
    public Map<String, Object> createProcessDefinition(User loginUser,
            String projectName,
            String name,
            String processDefinitionJson,
            String desc,
            String locations,
            String connects) throws JsonProcessingException {
        Map<String, Object> result = new HashMap<>(5);
        Project project = projectMapper.queryByName(projectName);
        // check project auth
        Map<String, Object> checkResult = projectService.checkProjectAndAuth(loginUser, project, projectName);
        Status resultStatus = (Status) checkResult.get(Constants.STATUS);
        if (resultStatus != Status.SUCCESS) {
            return checkResult;
        }
        ProcessDefinition processDefine = new ProcessDefinition();
        ProcessData processData = JSONUtils.parseObject(processDefinitionJson, ProcessData.class);
        Map<String, Object> checkProcessJson = checkProcessNodeList(processData, processDefinitionJson);
        if (checkProcessJson.get(Constants.STATUS) != Status.SUCCESS) {
            return checkProcessJson;
        }
        buildProcessDefine(loginUser, name, processDefinitionJson, desc, locations, connects, project, processDefine, processData);
        processDefineMapper.insert(processDefine);
        putMsg(result, Status.SUCCESS);
        result.put("processDefinitionId",processDefine.getId());
        return result;
    }

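Logic:

- Check project permissions; return early if the user lacks them
- Check that the workflow definition JSON is valid
- Build the workflow definition (ProcessDefinition) from the input parameters
- Call the mapper's insert method (the persistence layer appears to be MyBatis-Plus)
- Return the response

Conclusion:

Creating a workflow really just inserts one row into the t_ds_process_definition table.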
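The create path described above can be sketched in a few lines of plain Java. This is only an illustration under stated assumptions: the class name `CreateDefinitionSketch`, the in-memory `TABLE` list (standing in for t_ds_process_definition), the status strings, and the crude JSON check are all hypothetical and are not DolphinScheduler code.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified stand-in for the service method; not the real class.
class CreateDefinitionSketch {
    // In-memory stand-in for the t_ds_process_definition table.
    static final List<Map<String, Object>> TABLE = new ArrayList<>();

    static Map<String, Object> create(boolean hasProjectAuth, String name, String json) {
        Map<String, Object> result = new HashMap<>();
        // Step 1: project permission check; return early on failure.
        if (!hasProjectAuth) {
            result.put("status", "NO_PERMISSION"); // hypothetical status name
            return result;
        }
        // Step 2: a deliberately crude validity check on the definition JSON.
        if (json == null || !json.contains("\"tasks\"")) {
            result.put("status", "INVALID_JSON"); // hypothetical status name
            return result;
        }
        // Steps 3 and 4: build the row and "insert" it; the list size plays the generated id.
        Map<String, Object> row = new HashMap<>();
        row.put("name", name);
        row.put("process_definition_json", json);
        TABLE.add(row);
        result.put("status", "SUCCESS");
        result.put("processDefinitionId", TABLE.size());
        return result;
    }

    public static void main(String[] args) {
        System.out.println(create(true, "test_shell_1", "{\"tasks\":[]}"));
    }
}
```

The point of the sketch is that nothing is scheduled or executed at creation time; the only side effect is the new row.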

2 手动运行工作流执行过程

2.1 运行工作流

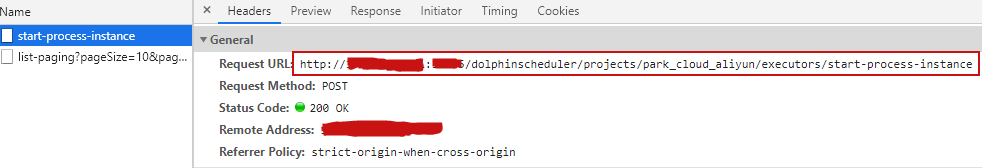

找到刚才创建的test_shell_1,点击上线,点击绿色的运行,打开浏览器开发者工具,点击运行

发现调用的接口为:

http://ip:port/dolphinscheduler/projects/park_cloud_aliyun/executors/start-process-instance

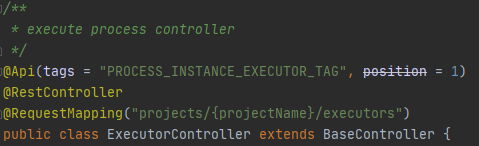

2.2 Running calls ExecutorController.startProcessInstance

    @PostMapping(value = "start-process-instance")
    @ResponseStatus(HttpStatus.OK)
    @ApiException(START_PROCESS_INSTANCE_ERROR)
    public Result startProcessInstance(@ApiIgnore @RequestAttribute(value = Constants.SESSION_USER) User loginUser,
            @ApiParam(name = "projectName", value = "PROJECT_NAME", required = true) @PathVariable String projectName,
            @RequestParam(value = "processDefinitionId") int processDefinitionId,
            @RequestParam(value = "scheduleTime", required = false) String scheduleTime,
            @RequestParam(value = "failureStrategy", required = true) FailureStrategy failureStrategy,
            @RequestParam(value = "startNodeList", required = false) String startNodeList,
            @RequestParam(value = "taskDependType", required = false) TaskDependType taskDependType,
            @RequestParam(value = "execType", required = false) CommandType execType,
            @RequestParam(value = "warningType", required = true) WarningType warningType,
            @RequestParam(value = "warningGroupId", required = false) int warningGroupId,
            @RequestParam(value = "receivers", required = false) String receivers,
            @RequestParam(value = "receiversCc", required = false) String receiversCc,
            @RequestParam(value = "runMode", required = false) RunMode runMode,
            @RequestParam(value = "processInstancePriority", required = false) Priority processInstancePriority,
            @RequestParam(value = "workerGroup", required = false, defaultValue = "default") String workerGroup,
            @RequestParam(value = "timeout", required = false) Integer timeout) throws ParseException {
        logger.info("login user {}, start process instance, project name: {}, process definition id: {}, schedule time: {}, "
                        + "failure policy: {}, node name: {}, node dep: {}, notify type: {}, "
                        + "notify group id: {},receivers:{},receiversCc:{}, run mode: {},process instance priority:{}, workerGroup: {}, timeout: {}",
                loginUser.getUserName(), projectName, processDefinitionId, scheduleTime,
                failureStrategy, startNodeList, taskDependType, warningType, workerGroup, receivers, receiversCc, runMode, processInstancePriority,
                workerGroup, timeout);
        if (timeout == null) {
            timeout = Constants.MAX_TASK_TIMEOUT;
        }
        Map<String, Object> result = execService.execProcessInstance(loginUser, projectName, processDefinitionId, scheduleTime, execType, failureStrategy,
                startNodeList, taskDependType, warningType,
                warningGroupId, receivers, receiversCc, runMode, processInstancePriority, workerGroup, timeout);
        return returnDataList(result);
    }

Logic:

- Validate the request parameters
- Handle exceptions
- Call ExecutorService.execProcessInstance
- Return the response

2.3 ExecutorService.execProcessInstance

    /**
     * execute process instance
     *
     * @param loginUser login user
     * @param projectName project name
     * @param processDefinitionId process Definition Id
     * @param cronTime cron time
     * @param commandType command type
     * @param failureStrategy failuer strategy
     * @param startNodeList start nodelist
     * @param taskDependType node dependency type
     * @param warningType warning type
     * @param warningGroupId notify group id
     * @param receivers receivers
     * @param receiversCc receivers cc
     * @param processInstancePriority process instance priority
     * @param workerGroup worker group name
     * @param runMode run mode
     * @param timeout timeout
     * @return execute process instance code
     * @throws ParseException Parse Exception
     */
    public Map<String, Object> execProcessInstance(User loginUser, String projectName,
            int processDefinitionId, String cronTime, CommandType commandType,
            FailureStrategy failureStrategy, String startNodeList,
            TaskDependType taskDependType, WarningType warningType, int warningGroupId,
            String receivers, String receiversCc, RunMode runMode,
            Priority processInstancePriority, String workerGroup, Integer timeout) throws ParseException {
        Map<String, Object> result = new HashMap<>(5);
        // timeout is invalid
        if (timeout <= 0 || timeout > MAX_TASK_TIMEOUT) {
            putMsg(result,Status.TASK_TIMEOUT_PARAMS_ERROR);
            return result;
        }
        Project project = projectMapper.queryByName(projectName);
        Map<String, Object> checkResultAndAuth = checkResultAndAuth(loginUser, projectName, project);
        if (checkResultAndAuth != null){
            return checkResultAndAuth;
        }
        // check process define release state
        ProcessDefinition processDefinition = processDefinitionMapper.selectById(processDefinitionId);
        result = checkProcessDefinitionValid(processDefinition, processDefinitionId);
        if(result.get(Constants.STATUS) != Status.SUCCESS){
            return result;
        }
        if (!checkTenantSuitable(processDefinition)){
            logger.error("there is not any valid tenant for the process definition: id:{},name:{}, ",
                    processDefinition.getId(), processDefinition.getName());
            putMsg(result, Status.TENANT_NOT_SUITABLE);
            return result;
        }
        // check master exists
        if (!checkMasterExists(result)) {
            return result;
        }
        /**
         * create command
         */
        int create = this.createCommand(commandType, processDefinitionId,
                taskDependType, failureStrategy, startNodeList, cronTime, warningType, loginUser.getId(),
                warningGroupId, runMode,processInstancePriority, workerGroup);
        if(create > 0 ){
            /**
             * according to the process definition ID updateProcessInstance and CC recipient
             */
            processDefinition.setReceivers(receivers);
            processDefinition.setReceiversCc(receiversCc);
            processDefinitionMapper.updateById(processDefinition);
            putMsg(result, Status.SUCCESS);
        } else {
            putMsg(result, Status.START_PROCESS_INSTANCE_ERROR);
        }
        return result;
    }

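Logic:

- Validate the timeout; return if it is invalid
- Check project permissions; return if the check fails
- Check that the workflow definition is in a valid state (1. it exists, 2. it is online)
- Check that a usable tenant exists
- Check that a master is alive
- Build a Command from the ProcessDefinition and insert it into the t_ds_command table
- Return the execution status

Conclusion:

Running a workflow really just inserts one row into the t_ds_command table. The long-running master keeps scanning t_ds_command, and the master process takes over from there.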
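The hand-off between the API server and the master is easier to see in miniature. The sketch below is an assumption-laden illustration: `CommandHandoffSketch` and its in-memory `COMMAND_TABLE` queue are hypothetical stand-ins for the t_ds_command table, and the method names merely echo the ones in the source.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical model of the API-to-master hand-off; the queue stands in for t_ds_command.
class CommandHandoffSketch {
    static final Queue<Integer> COMMAND_TABLE = new ConcurrentLinkedQueue<>();

    // API side: "insert a row" and report how many rows were written.
    static int createCommand(int processDefinitionId) {
        return COMMAND_TABLE.offer(processDefinitionId) ? 1 : 0;
    }

    // Master side: fetch (and remove) one pending command, or null when there is none.
    static Integer findOneCommand() {
        return COMMAND_TABLE.poll();
    }

    public static void main(String[] args) throws InterruptedException {
        createCommand(42);
        Integer command;
        // The master polls; when nothing is pending it sleeps and tries again.
        while ((command = findOneCommand()) == null) {
            Thread.sleep(1000);
        }
        System.out.println("master picked up process definition " + command);
    }
}
```

The two sides never call each other directly; the table is the only coupling between them.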

3 How the master runs

3.1 Starting the master

The main method of MasterServer:

    /**
     * master server startup
     *
     * master server not use web service
     * @param args arguments
     */
    public static void main(String[] args) {
        // System.setProperty("spring.profiles.active","master");
        Thread.currentThread().setName(Constants.THREAD_NAME_MASTER_SERVER);
        new SpringApplicationBuilder(MasterServer.class).web(WebApplicationType.NONE).run(args);
    }

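1. Set the thread name
2. Start the master as a non-web Spring application

The main method contains almost no logic; the real work happens in the run method.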

3.2 MasterServer.run

    /**
     * run master server
     */
    @PostConstruct
    public void run(){
        //init remoting server
        NettyServerConfig serverConfig = new NettyServerConfig();
        serverConfig.setListenPort(masterConfig.getListenPort());
        this.nettyRemotingServer = new NettyRemotingServer(serverConfig);
        this.nettyRemotingServer.registerProcessor(CommandType.TASK_EXECUTE_RESPONSE, new TaskResponseProcessor());
        this.nettyRemotingServer.registerProcessor(CommandType.TASK_EXECUTE_ACK, new TaskAckProcessor());
        this.nettyRemotingServer.registerProcessor(CommandType.TASK_KILL_RESPONSE, new TaskKillResponseProcessor());
        this.nettyRemotingServer.start();
        // register
        this.masterRegistry.registry();
        // self tolerant
        this.zkMasterClient.start();
        //
        masterSchedulerService.start();
        // start QuartzExecutors
        // what system should do if exception
        try {
            logger.info("start Quartz server...");
            QuartzExecutors.getInstance().start();
        } catch (Exception e) {
            try {
                QuartzExecutors.getInstance().shutdown();
            } catch (SchedulerException e1) {
                logger.error("QuartzExecutors shutdown failed : " + e1.getMessage(), e1);
            }
            logger.error("start Quartz failed", e);
        }
        /**
         * register hooks, which are called before the process exits
         */
        Runtime.getRuntime().addShutdownHook(new Thread(new Runnable() {
            @Override
            public void run() {
                close("shutdownHook");
            }
        }));
    }

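Logic:

- Initialize the Netty remoting server
- Register with ZooKeeper
- Start fault tolerance (zkMasterClient)
- TODO
- Call MasterSchedulerService.start, which in turn calls Thread.start, so MasterSchedulerService.run executes on a new thread
- Start the Quartz executors and register a JVM shutdown hook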

3.3 MasterSchedulerService.run

    /**
     * run of MasterSchedulerThread
     */
    @Override
    public void run() {
        logger.info("master scheduler started");
        while (Stopper.isRunning()){
            InterProcessMutex mutex = null;
            try {
                boolean runCheckFlag = OSUtils.checkResource(masterConfig.getMasterMaxCpuloadAvg(), masterConfig.getMasterReservedMemory());
                if(!runCheckFlag) {
                    Thread.sleep(Constants.SLEEP_TIME_MILLIS);
                    continue;
                }
                if (zkMasterClient.getZkClient().getState() == CuratorFrameworkState.STARTED) {
                    mutex = zkMasterClient.blockAcquireMutex();
                    int activeCount = masterExecService.getActiveCount();
                    // make sure to scan and delete command table in one transaction
                    Command command = processService.findOneCommand();
                    if (command != null) {
                        logger.info("find one command: id: {}, type: {}", command.getId(),command.getCommandType());
                        try{
                            ProcessInstance processInstance = processService.handleCommand(logger,
                                    getLocalAddress(),
                                    this.masterConfig.getMasterExecThreads() - activeCount, command);
                            if (processInstance != null) {
                                logger.info("start master exec thread , split DAG ...");
                                masterExecService.execute(new MasterExecThread(processInstance, processService, nettyRemotingClient));
                            }
                        }catch (Exception e){
                            logger.error("scan command error ", e);
                            processService.moveToErrorCommand(command, e.toString());
                        }
                    } else{
                        //indicate that no command ,sleep for 1s
                        Thread.sleep(Constants.SLEEP_TIME_MILLIS);
                    }
                }
            } catch (Exception e){
                logger.error("master scheduler thread error",e);
            } finally{
                zkMasterClient.releaseMutex(mutex);
            }
        }
    }

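Logic:

- Enter a loop that keeps running for as long as Stopper.isRunning() returns true
- Check whether memory and CPU are sufficient; if not, sleep for one second and try again
- Check that the ZooKeeper client has started
- Acquire an InterProcessMutex (a distributed, fair, re-entrant mutex)
- Get the number of active threads
- Query one command record through the mapper (commandMapper.getOneToRun())
- Build a processInstance object from the command
- Call masterExecService.execute()
- The thread pool then runs MasterExecThread.run

This is where the command inserted earlier is fetched and processing begins.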
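The shape of this loop (guard on resources, take a lock, fetch one command, hand it to a pool) can be sketched with JDK classes only. Everything here is an assumption made for illustration: `SchedulerLoopSketch` is a hypothetical class, a local `ReentrantLock` stands in for the ZooKeeper-backed InterProcessMutex, and an in-memory queue stands in for the command table.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical single-JVM model of the scheduler loop body.
class SchedulerLoopSketch {
    static final Queue<String> COMMANDS = new ConcurrentLinkedQueue<>(); // stand-in for t_ds_command
    static final ReentrantLock MUTEX = new ReentrantLock(true);          // local stand-in for the distributed lock
    static final AtomicInteger HANDLED = new AtomicInteger();

    // One pass of the loop body; returns true if a command was dispatched to the pool.
    static boolean scanOnce(ExecutorService pool, boolean resourcesOk) {
        if (!resourcesOk) {
            return false; // the real loop sleeps and retries here
        }
        MUTEX.lock();
        try {
            String command = COMMANDS.poll(); // fetch and remove under the lock
            if (command == null) {
                return false;
            }
            pool.execute(HANDLED::incrementAndGet); // stand-in for running a MasterExecThread
            return true;
        } finally {
            MUTEX.unlock(); // always release, mirroring the finally block in the source
        }
    }

    // Enqueue n commands, drain them, and report how many were handled.
    static int runDemo(int n) {
        COMMANDS.clear();
        HANDLED.set(0);
        for (int i = 0; i < n; i++) {
            COMMANDS.add("cmd-" + i);
        }
        ExecutorService pool = Executors.newFixedThreadPool(2);
        while (scanOnce(pool, true)) {
            // keep scanning until the queue is empty
        }
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return HANDLED.get();
    }

    public static void main(String[] args) {
        System.out.println("handled " + runDemo(3) + " commands");
    }
}
```

Fetching the command while holding the lock is what keeps two masters from picking up the same row; the actual execution happens outside the lock, on the pool.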

3.4 MasterExecThread.run

    @Override
    public void run() {
        // process instance is null
        if (processInstance == null){
            logger.info("process instance is not exists");
            return;
        }
        // check to see if it's done
        if (processInstance.getState().typeIsFinished()){
            logger.info("process instance is done : {}",processInstance.getId());
            return;
        }
        try {
            if (processInstance.isComplementData() && Flag.NO == processInstance.getIsSubProcess()){
                // sub process complement data
                executeComplementProcess();
            }else{
                // execute flow
                executeProcess();
            }
        }catch (Exception e){
            logger.error("master exec thread exception", e);
            logger.error("process execute failed, process id:{}", processInstance.getId());
            processInstance.setState(ExecutionStatus.FAILURE);
            processInstance.setEndTime(new Date());
            processService.updateProcessInstance(processInstance);
        }finally {
            taskExecService.shutdown();
            // post handle
            // postHandle();
        }
    }

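Logic:

- If processInstance is null, return
- If the processInstance state is already finished (success, failure, cancel, kill, and stop all count as finished), return
- If this is a complement-data (backfill) run and the instance is not a sub-process, call executeComplementProcess
- TODO
- Otherwise run the normal flow by calling executeProcess()
- Finally call taskExecService.shutdown(), which stops the pool from accepting new tasks and lets the already submitted ones finish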

3.4.1 MasterExecThread.executeProcess()

    /**
     * execute process
     * @throws Exception exception
     */
    private void executeProcess() throws Exception {
        prepareProcess();
        runProcess();
        endProcess();
    }

3.4.2 prepareProcess() does the preparation work

    /**
     * prepare process parameter
     * @throws Exception exception
     */
    private void prepareProcess() throws Exception {
        // init task queue
        initTaskQueue();
        // gen process dag
        buildFlowDag();
        logger.info("prepare process :{} end", processInstance.getId());
    }

Logic:

- Initialize the task queue
- Build the task DAG
- TODO

3.4.3 runProcess() holds the master's main logic

    /**
     * submit and watch the tasks, until the work flow stop
     */
    private void runProcess(){
        // submit start node
        submitPostNode(null);
        boolean sendTimeWarning = false;
        while(!processInstance.isProcessInstanceStop()){
            // send warning email if process time out.
            if(!sendTimeWarning && checkProcessTimeOut(processInstance) ){
                alertManager.sendProcessTimeoutAlert(processInstance,
                        processService.findProcessDefineById(processInstance.getProcessDefinitionId()));
                sendTimeWarning = true;
            }
            for(Map.Entry<MasterBaseTaskExecThread,Future<Boolean>> entry: activeTaskNode.entrySet()) {
                Future<Boolean> future = entry.getValue();
                TaskInstance task = entry.getKey().getTaskInstance();
                if(!future.isDone()){
                    continue;
                }
                // node monitor thread complete
                task = this.processService.findTaskInstanceById(task.getId());
                if(task == null){
                    this.taskFailedSubmit = true;
                    activeTaskNode.remove(entry.getKey());
                    continue;
                }
                // node monitor thread complete
                if(task.getState().typeIsFinished()){
                    activeTaskNode.remove(entry.getKey());
                }
                logger.info("task :{}, id:{} complete, state is {} ",
                        task.getName(), task.getId(), task.getState());
                // node success , post node submit
                if(task.getState() == ExecutionStatus.SUCCESS){
                    completeTaskList.put(task.getName(), task);
                    submitPostNode(task.getName());
                    continue;
                }
                // node fails, retry first, and then execute the failure process
                if(task.getState().typeIsFailure()){
                    if(task.getState() == ExecutionStatus.NEED_FAULT_TOLERANCE){
                        this.recoverToleranceFaultTaskList.add(task);
                    }
                    if(task.taskCanRetry()){
                        addTaskToStandByList(task);
                    }else{
                        completeTaskList.put(task.getName(), task);
                        if( task.isConditionsTask()
                                || DagHelper.haveConditionsAfterNode(task.getName(), dag)) {
                            submitPostNode(task.getName());
                        }else{
                            errorTaskList.put(task.getName(), task);
                            if(processInstance.getFailureStrategy() == FailureStrategy.END){
                                killTheOtherTasks();
                            }
                        }
                    }
                    continue;
                }
                // other status stop/pause
                completeTaskList.put(task.getName(), task);
            }
            // send alert
            if(CollectionUtils.isNotEmpty(this.recoverToleranceFaultTaskList)){
                alertManager.sendAlertWorkerToleranceFault(processInstance, recoverToleranceFaultTaskList);
                this.recoverToleranceFaultTaskList.clear();
            }
            // updateProcessInstance completed task status
            // failure priority is higher than pause
            // if a task fails, other suspended tasks need to be reset kill
            if(errorTaskList.size() > 0){
                for(Map.Entry<String, TaskInstance> entry: completeTaskList.entrySet()) {
                    TaskInstance completeTask = entry.getValue();
                    if(completeTask.getState()== ExecutionStatus.PAUSE){
                        completeTask.setState(ExecutionStatus.KILL);
                        completeTaskList.put(entry.getKey(), completeTask);
                        processService.updateTaskInstance(completeTask);
                    }
                }
            }
            if(canSubmitTaskToQueue()){
                submitStandByTask();
            }
            try {
                Thread.sleep(Constants.SLEEP_TIME_MILLIS);
            } catch (InterruptedException e) {
                logger.error(e.getMessage(),e);
            }
            updateProcessInstanceState();
        }
        logger.info("process:{} end, state :{}", processInstance.getId(), processInstance.getState());
    }

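Logic:

- Submit the start node
- TODO
- Loop until the process instance stops; success, failure, cancel, pause, and stop all count as stopped
- Check for a timeout and send an alert email if the instance has timed out
- Iterate over the map of currently active task nodes
- If the task instance can no longer be found, flag the submission as failed and remove it from the active map
- If the task state is finished, remove it from the active map
- If the task succeeded, add it to the completed list and submit its downstream nodes
- Handle failed tasks:
- If the task needs fault tolerance, record it for the fault-tolerance alert
- If the task can be retried, put it back on the standby list
- Otherwise the task has failed:
- If it is a conditions task or is followed by one, submit the downstream nodes
- Otherwise record it in the error list and, when the failure strategy is END, kill the other tasks
- Whatever reaches this point is a stopped or paused task; add it to the completed list
- Send alerts
- If any task failed, change paused tasks in the completed list to KILL
- If the queue can accept more tasks, call submitStandByTask() to submit the standby tasks
- Finally, update the process instance state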
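The core of this loop is "poll the futures of the active nodes, and when one finishes successfully, submit its downstream nodes". A minimal sketch of just that part follows. It is not the DolphinScheduler implementation: `RunProcessSketch`, the adjacency-map DAG, and the `failing` set are hypothetical, retries and alerts are left out, and each node is assumed to have a single upstream node (the real code evaluates all dependencies before submitting).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical model: run a tree-shaped DAG by polling Futures, as runProcess() does.
class RunProcessSketch {
    // dag maps a node to its downstream nodes; nodes listed in 'failing' report failure.
    static List<String> run(Map<String, List<String>> dag, String start, Set<String> failing) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        Map<String, Future<Boolean>> active = new HashMap<>(); // plays the role of activeTaskNode
        List<String> completed = new ArrayList<>();            // successful nodes, in completion order
        active.put(start, pool.submit(() -> !failing.contains(start)));
        try {
            while (!active.isEmpty()) {
                // Iterate over a copy so the map can be modified inside the loop.
                for (String name : new ArrayList<>(active.keySet())) {
                    Future<Boolean> future = active.get(name);
                    if (!future.isDone()) {
                        continue; // still running, check again on the next pass
                    }
                    active.remove(name);
                    if (!succeeded(future)) {
                        continue; // failed: downstream nodes are never submitted
                    }
                    completed.add(name);
                    for (String next : dag.getOrDefault(name, List.of())) {
                        active.put(next, pool.submit(() -> !failing.contains(next)));
                    }
                }
                Thread.sleep(10); // the real loop sleeps between passes as well
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdown();
        }
        return completed;
    }

    static boolean succeeded(Future<Boolean> future) {
        try {
            return future.get();
        } catch (InterruptedException | ExecutionException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        Map<String, List<String>> dag = Map.of("A", List.of("B", "C"), "B", List.of("D"));
        System.out.println(run(dag, "A", Set.of("B")));
    }
}
```

With node B failing in the `main` example, D is never submitted, which mirrors how a failed task without retries blocks its downstream nodes.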

3.4.4 endProcess() wraps up the process

    /**
     * process end handle
     */
    private void endProcess() {
        processInstance.setEndTime(new Date());
        processService.updateProcessInstance(processInstance);
        if(processInstance.getState().typeIsWaitingThread()){
            processService.createRecoveryWaitingThreadCommand(null, processInstance);
        }
        List<TaskInstance> taskInstances = processService.findValidTaskListByProcessId(processInstance.getId());
        alertManager.sendAlertProcessInstance(processInstance, taskInstances);
    }

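Logic:

- Set the end time
- Update the ProcessInstance record
- If the instance is in the waiting-thread state, create a recover-waiting-thread command
- Generate an alert from the execution result and insert it into the t_ds_alert table

3.4.5 submitStandByTask() submits tasks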

    /**
     * handling the list of tasks to be submitted
     */
    private void submitStandByTask(){
        for(Map.Entry<String, TaskInstance> entry: readyToSubmitTaskList.entrySet()) {
            TaskInstance task = entry.getValue();
            DependResult dependResult = getDependResultForTask(task);
            if(DependResult.SUCCESS == dependResult){
                if(retryTaskIntervalOverTime(task)){
                    submitTaskExec(task);
                    removeTaskFromStandbyList(task);
                }
            }else if(DependResult.FAILED == dependResult){
                // if the dependency fails, the current node is not submitted and the state changes to failure.
                dependFailedTask.put(entry.getKey(), task);
                removeTaskFromStandbyList(task);
                logger.info("task {},id:{} depend result : {}",task.getName(), task.getId(), dependResult);
            }
        }
    }

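Logic:

- Iterate over all tasks in the standby list
- If the tasks it depends on succeeded and the retry interval has elapsed, call submitTaskExec() and remove the task from the standby list
- If a dependency failed, record the task as depend-failed and remove it from the standby list without submitting it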
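The three-way decision (submit, give up, or keep waiting) can be sketched as follows. This is a simplified illustration under assumptions: `StandbySketch`, its local `DependResult` enum, and the set-based bookkeeping are hypothetical, and the retry-interval check from the source is omitted.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model of the standby-list pass; names are illustrative only.
class StandbySketch {
    enum DependResult { SUCCESS, WAITING, FAILED }

    // A failed upstream wins over a pending one; all upstreams must succeed for SUCCESS.
    static DependResult dependResult(List<String> upstream, Set<String> succeeded, Set<String> failed) {
        for (String dep : upstream) {
            if (failed.contains(dep)) {
                return DependResult.FAILED;
            }
        }
        for (String dep : upstream) {
            if (!succeeded.contains(dep)) {
                return DependResult.WAITING;
            }
        }
        return DependResult.SUCCESS;
    }

    // standby maps a task name to its upstream task names. Returns the names submitted in this pass.
    static List<String> submitStandBy(Map<String, List<String>> standby, Set<String> succeeded,
                                      Set<String> failed, List<String> dependFailed) {
        List<String> submitted = new ArrayList<>();
        Iterator<Map.Entry<String, List<String>>> it = standby.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, List<String>> entry = it.next();
            DependResult result = dependResult(entry.getValue(), succeeded, failed);
            if (result == DependResult.SUCCESS) {
                submitted.add(entry.getKey()); // the real code calls submitTaskExec(task) here
                it.remove();
            } else if (result == DependResult.FAILED) {
                dependFailed.add(entry.getKey()); // not submitted; recorded as depend-failed
                it.remove();
            }
            // WAITING: leave the task on the standby list for the next pass
        }
        return submitted;
    }
}
```

Tasks whose dependencies are still running simply stay on the list, which is why runProcess() calls this method on every pass of its loop.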

3.4.6 submitTaskExec() submits a task for execution

    /**
     * submit task to execute
     * @param taskInstance task instance
     * @return TaskInstance
     */
    private TaskInstance submitTaskExec(TaskInstance taskInstance) {
        MasterBaseTaskExecThread abstractExecThread = null;
        if(taskInstance.isSubProcess()){
            abstractExecThread = new SubProcessTaskExecThread(taskInstance);
        }else if(taskInstance.isDependTask()){
            abstractExecThread = new DependentTaskExecThread(taskInstance);
        }else if(taskInstance.isConditionsTask()){
            abstractExecThread = new ConditionsTaskExecThread(taskInstance);
        }else {
            abstractExecThread = new MasterTaskExecThread(taskInstance);
        }
        Future<Boolean> future = taskExecService.submit(abstractExecThread);
        activeTaskNode.putIfAbsent(abstractExecThread, future);
        return abstractExecThread.getTaskInstance();
    }

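There are several branches here. I only care about the normal execution path, so looking at MasterTaskExecThread is enough.

Logic:

- For a sub-process node, create the class that handles sub-processes
- For a dependent node, create the class that handles dependencies
- For a conditions node, create the class that handles conditions
- For any other node, create the generic handler (this is the one I care about)
- Submit the handler to the thread pool
- Add it to the map of running tasks
- Return the task instance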
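The dispatch pattern (pick a handler by node type, submit it to a pool, remember the Future) looks roughly like this in isolation. The names `SubmitTaskExecSketch`, `TaskKind`, and `ACTIVE` are hypothetical, and the handlers are trivial lambdas rather than the real exec-thread classes.

```java
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical model of "choose a handler by node type, submit it, track the Future".
class SubmitTaskExecSketch {
    enum TaskKind { SUB_PROCESS, DEPENDENT, CONDITIONS, OTHER }

    // Plays the role of activeTaskNode, keyed by task name for simplicity.
    static final Map<String, Future<String>> ACTIVE = new ConcurrentHashMap<>();

    static Callable<String> handlerFor(TaskKind kind) {
        switch (kind) {
            case SUB_PROCESS: return () -> "sub-process handler";
            case DEPENDENT:   return () -> "dependent handler";
            case CONDITIONS:  return () -> "conditions handler";
            default:          return () -> "generic handler";
        }
    }

    // Submits the handler, records its Future, and waits for the result (for demonstration only).
    static String submitAndWait(String taskName, TaskKind kind) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Future<String> future = pool.submit(handlerFor(kind));
            ACTIVE.putIfAbsent(taskName, future); // never overwrite an already tracked task
            return future.get();
        } catch (InterruptedException | ExecutionException e) {
            return "failed: " + e;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(submitAndWait("shell-node", TaskKind.OTHER));
    }
}
```

Unlike this sketch, the source does not block on the Future at submission time; runProcess() polls it later with isDone().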

3.5 MasterTaskExecThread.call

    /**
     * call
     * @return boolean
     * @throws Exception exception
     */
    @Override
    public Boolean call() throws Exception {
        this.processInstance = processService.findProcessInstanceById(taskInstance.getProcessInstanceId());
        return submitWaitComplete();
    }

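Logic:

- Look up the process instance
- Call MasterTaskExecThread.submitWaitComplete

The MasterTaskExecThread created above extends MasterBaseTaskExecThread and overrides call, so the method the thread pool invokes is MasterTaskExecThread.call.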

3.5.1 submitWaitComplete()

It calls submit on the parent class MasterBaseTaskExecThread.

3.6 MasterBaseTaskExecThread.submit()

    /**
     * submit master base task exec thread
     * @return TaskInstance
     */
    protected TaskInstance submit(){
        Integer commitRetryTimes = masterConfig.getMasterTaskCommitRetryTimes();
        Integer commitRetryInterval = masterConfig.getMasterTaskCommitInterval();
        int retryTimes = 1;
        boolean submitDB = false;
        boolean submitTask = false;
        TaskInstance task = null;
        while (retryTimes <= commitRetryTimes){
            try {
                if(!submitDB){
                    // submit task to db
                    task = processService.submitTask(taskInstance);
                    if(task != null && task.getId() != 0){
                        submitDB = true;
                    }
                }
                if(submitDB && !submitTask){
                    // dispatch task
                    submitTask = dispatchTask(task);
                }
                if(submitDB && submitTask){
                    return task;
                }
                if(!submitDB){
                    logger.error("task commit to db failed , taskId {} has already retry {} times, please check the database", taskInstance.getId(), retryTimes);
                }else if(!submitTask){
                    logger.error("task commit failed , taskId {} has already retry {} times, please check", taskInstance.getId(), retryTimes);
                }
                Thread.sleep(commitRetryInterval);
            } catch (Exception e) {
                logger.error("task commit to mysql and dispatcht task failed",e);
            }
            retryTimes += 1;
        }
        return task;
    }

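Logic:

- The while loop handles retries: it keeps going as long as the attempt count does not exceed the configured number of commit retries
- Call ProcessService.submitTask(), which in turn calls a chain of functions that insert the task instance into the database
- TODO
- Once the task is in the database, call dispatchTask(), which logs according to the task state and handles priority
- TODO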
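The notable detail in this method is that the two steps are retried independently: once the database write has succeeded it is not repeated, and only the dispatch is attempted again. A minimal sketch of that pattern, with hypothetical names (`SubmitRetrySketch`, `saveToDb`, `dispatch`) and boolean suppliers in place of the real calls:

```java
import java.util.function.BooleanSupplier;

// Hypothetical model of the two-step submit with retries; not the real method.
class SubmitRetrySketch {
    // Returns the attempt number on which both steps had succeeded, or -1 if retries ran out.
    static int submit(BooleanSupplier saveToDb, BooleanSupplier dispatch, int maxAttempts, long intervalMillis) {
        boolean submitDb = false;
        boolean submitTask = false;
        int attempt = 1;
        while (attempt <= maxAttempts) {
            try {
                if (!submitDb) {
                    submitDb = saveToDb.getAsBoolean(); // step 1: persist the task
                }
                if (submitDb && !submitTask) {
                    submitTask = dispatch.getAsBoolean(); // step 2: only after step 1 succeeded
                }
                if (submitDb && submitTask) {
                    return attempt;
                }
                Thread.sleep(intervalMillis); // wait before the next attempt
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return -1;
            }
            attempt++;
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] dbCalls = {0};
        // The database write fails once, then succeeds; dispatch always succeeds.
        int attempts = submit(() -> ++dbCalls[0] >= 2, () -> true, 3, 10);
        System.out.println("succeeded on attempt " + attempts);
    }
}
```

Keeping separate flags for the two steps avoids inserting the same task twice when only the dispatch needs another try.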

References

Official documentation: https://dolphinscheduler.apache.org/zh-cn/docs/1.3.1/user_doc/architecture-design.html

gabry.wu's blog: https://www.cnblogs.com/gabry/p/12162272.html