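Today we'll build a novel scraper for 笔趣阁 (Biquge).

Novels on 笔趣阁 are fairly easy to scrape (we won't touch the site's search feature).

We'll use requests and XPath for this small scraper.

First, the imports: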

import requests
import time
from lxml import etree

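Next come two helper functions, one for requesting a page and one for XPath parsing.

Wrapping these steps in functions keeps the rest of the program short and tidy.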

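def get_tag(response, tag):
    # Parse the HTML text and return every node that matches the XPath expression
    html = etree.HTML(response)
    ret = html.xpath(tag)
    return ret

def parse_url(url):
    # Download the page and decode it as GBK before returning the text
    response = requests.get(url)
    response.encoding = 'gbk'
    return response.text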
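To see what get_tag returns without hitting the network, here is a minimal sketch that runs the same XPath expressions against a small inline page. The SAMPLE_HTML string, the novel title, and the chapter file names are made up for illustration; they only mimic the structure the XPath expressions in this post expect.

```python
from lxml import etree

# Hypothetical, simplified stand-in for a table-of-contents page
SAMPLE_HTML = """
<html><body>
  <div id="info"><h1>Sample Novel</h1></div>
  <div id="list"><dl>
    <dd><a href="1001.html">Chapter 1</a></dd>
    <dd><a href="1002.html">Chapter 2</a></dd>
  </dl></div>
</body></html>
"""

def get_tag(response, tag):
    # Same helper as in the post: parse the HTML, then evaluate the XPath
    html = etree.HTML(response)
    return html.xpath(tag)

# text() and @href both yield a list of strings, one per matching node
sample_title = get_tag(SAMPLE_HTML, '//*[@id="info"]/h1/text()')
sample_links = get_tag(SAMPLE_HTML, '//*[@id="list"]/dl/dd/a/@href')

print(sample_title)  # ['Sample Novel']
print(sample_links)  # ['1001.html', '1002.html']
```

Because xpath() always returns a list, the code in this post takes `[0]` whenever it wants a single value such as the title.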

We start crawling from the table-of-contents page, so the first step is to collect the URL of every chapter listed on it.

def find_url(response):
    # Collect the relative chapter links from the table-of-contents page
    chapter = get_tag(response, '//*[@id="list"]/dl/dd/a/@href')
    for i in chapter:
        # url_list is a module-level list (defined in the complete code);
        # start_url is set in main(), e.g. 'https://www.52bqg.com/book_187/'
        url_list.append(start_url + i)
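Plain concatenation with `start_url + i` only works when the address the user types ends with a slash and the links are relative file names. As a hedged alternative, the sketch below builds the chapter URLs with urljoin from the standard library. The function name build_chapter_urls and the sample hrefs are assumptions made for this example, not part of the original script.

```python
from urllib.parse import urljoin

def build_chapter_urls(base_url, hrefs):
    """Join each chapter href onto the table-of-contents URL."""
    # urljoin treats the last path segment as a file unless the base ends
    # with '/', so normalise the base first
    if not base_url.endswith('/'):
        base_url += '/'
    return [urljoin(base_url, href) for href in hrefs]

# Hypothetical hrefs: one relative file name, one site-absolute path
urls = build_chapter_urls(
    'https://www.52bqg.com/book_187',
    ['1001.html', '/book_187/1002.html'],
)
for u in urls:
    print(u)
```

Both hrefs resolve under `https://www.52bqg.com/book_187/`, whether or not the base URL was typed with a trailing slash.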

Then we use each chapter URL to fetch the content of that chapter.

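def find_content(url):
    response = parse_url(url)
    # Chapter heading and the text nodes of the chapter body
    chapter = get_tag(response, '//*[@id="box_con"]/div[2]/h1/text()')[0]
    content = get_tag(response, '//*[@id="content"]/text()')
    print('Scraping', chapter)
    # Append every chapter to one file named after the novel; title is set in main()
    with open('{}.txt'.format(title), 'at', encoding='utf-8') as j:
        j.write(chapter)
        for i in content:
            # Skip text nodes that contain only a line break
            if i == '\r\n':
                continue
            j.write(i)
    # The with block closes the file, so no explicit close() is needed
    print(chapter, 'saved')
    # Pause between requests to go easy on the server
    time.sleep(2)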
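The text nodes returned for the chapter body usually need a little cleanup before they read well in a text file. The following is a minimal sketch of one way to do that, assuming the paragraphs are indented with non-breaking spaces and separated by bare line breaks; the helper names clean_paragraphs and format_chapter and the sample data are hypothetical.

```python
def clean_paragraphs(text_nodes):
    """Drop whitespace-only nodes and strip padding from the rest."""
    paragraphs = []
    for node in text_nodes:
        # '\xa0' is the non-breaking space that &nbsp; decodes to
        text = node.replace('\xa0', ' ').strip()
        if text:
            paragraphs.append(text)
    return paragraphs

def format_chapter(chapter_title, text_nodes):
    """Return the chapter as a title line followed by one paragraph per line."""
    body = '\n'.join(clean_paragraphs(text_nodes))
    return '{}\n{}\n\n'.format(chapter_title.strip(), body)

# Hypothetical text nodes, shaped like the result of the content XPath
sample_nodes = [
    '\r\n',
    '\xa0\xa0\xa0\xa0First paragraph.',
    '\r\n',
    '\xa0\xa0\xa0\xa0Second paragraph.\r\n',
]
chapter_text = format_chapter('Chapter 1', sample_nodes)
print(chapter_text)
```

The returned string could be passed to a single `write()` call in find_content, which also puts the chapter title on its own line.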

Finally, the main function:

def main():

global title, start_url

# start_url = 'https://www.52bqg.com/book_187/'

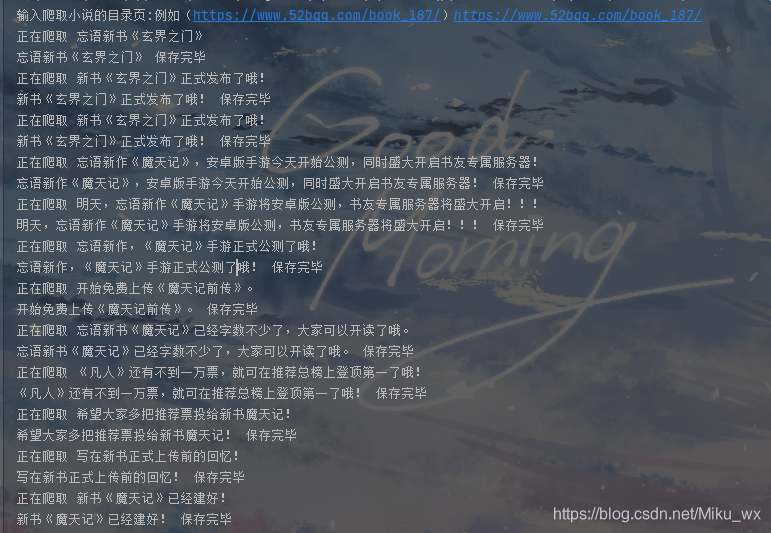

start_url = input('输入爬取小说的目录页:例如(https://www.52bqg.com/book_187/)')

response = parse_url(start_url)

# print(response)

title = get_tag(response, '//*[@id="info"]/h1/text()')[0]

# print(title)

find_url(response)

# print(1)

for url in url_list:

find_content(url)
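main() shares its state through global variables, which makes the flow hard to try out without a live site. Below is a rough sketch of the same flow with the state passed explicitly and the download step injected as a function, so it can be exercised on canned pages. The function crawl, the FAKE_PAGES dictionary, and its contents are assumptions for illustration; only the XPath expressions come from this post.

```python
from urllib.parse import urljoin
from lxml import etree

def xpath(page, expr):
    # Parse the HTML text and evaluate one XPath expression
    return etree.HTML(page).xpath(expr)

def crawl(start_url, fetch):
    """Return (novel_title, [(chapter_title, text_nodes), ...]).

    fetch is any callable that maps a URL to HTML text, for example the
    parse_url helper from this post.
    """
    index_page = fetch(start_url)
    novel_title = str(xpath(index_page, '//*[@id="info"]/h1/text()')[0])
    chapters = []
    for href in xpath(index_page, '//*[@id="list"]/dl/dd/a/@href'):
        page = fetch(urljoin(start_url, href))
        name = str(xpath(page, '//*[@id="box_con"]/div[2]/h1/text()')[0])
        nodes = [str(n) for n in xpath(page, '//*[@id="content"]/text()')]
        chapters.append((name, nodes))
    return novel_title, chapters

# Hypothetical canned pages keyed by URL, standing in for the real site
FAKE_PAGES = {
    'https://example.com/book/': (
        '<div id="info"><h1>Sample Novel</h1></div>'
        '<div id="list"><dl><dd><a href="1.html">Chapter 1</a></dd></dl></div>'
    ),
    'https://example.com/book/1.html': (
        '<div id="box_con"><div>nav</div><div><h1>Chapter 1</h1></div>'
        '<div id="content">Hello.</div></div>'
    ),
}

result = crawl('https://example.com/book/', FAKE_PAGES.__getitem__)
print(result)
```

With the real site, the second argument would be parse_url, and the sleep between requests would go inside the loop.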

The complete code:

import requests
import time
from lxml import etree

# Chapter URLs collected by find_url()
url_list = []

def get_tag(response, tag):
    # Parse the HTML text and return every node that matches the XPath expression
    html = etree.HTML(response)
    ret = html.xpath(tag)
    return ret

def parse_url(url):
    # Download the page and decode it as GBK before returning the text
    response = requests.get(url)
    response.encoding = 'gbk'
    return response.text

def find_url(response):
    # Collect the relative chapter links from the table-of-contents page
    chapter = get_tag(response, '//*[@id="list"]/dl/dd/a/@href')
    for i in chapter:
        url_list.append(start_url + i)

def find_content(url):
    response = parse_url(url)
    # Chapter heading and the text nodes of the chapter body
    chapter = get_tag(response, '//*[@id="box_con"]/div[2]/h1/text()')[0]
    content = get_tag(response, '//*[@id="content"]/text()')
    print('Scraping', chapter)
    # Append every chapter to one file named after the novel
    with open('{}.txt'.format(title), 'at', encoding='utf-8') as j:
        j.write(chapter)
        for i in content:
            # Skip text nodes that contain only a line break
            if i == '\r\n':
                continue
            j.write(i)
    print(chapter, 'saved')
    # Pause between requests to go easy on the server
    time.sleep(2)

def main():
    # title and start_url are shared with find_url() and find_content()
    global title, start_url
    # e.g. start_url = 'https://www.52bqg.com/book_187/'
    start_url = input('Enter the table-of-contents URL of the novel (e.g. https://www.52bqg.com/book_187/): ')
    response = parse_url(start_url)
    # The novel title doubles as the output file name
    title = get_tag(response, '//*[@id="info"]/h1/text()')[0]
    find_url(response)
    for url in url_list:
        find_content(url)

if __name__ == '__main__':
    main()

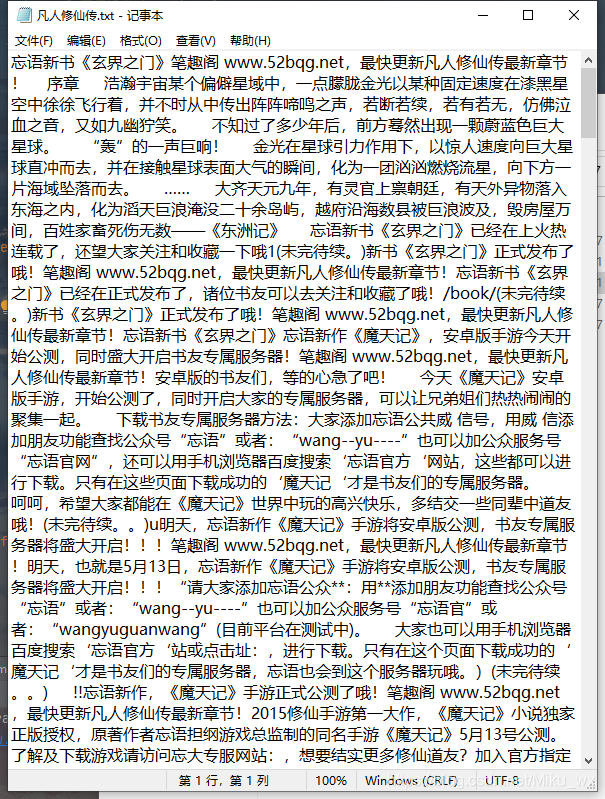

效果图:

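As you can see, a scraper for 笔趣阁 novels is fairly easy to write.

In the next post, "用Python实现笔趣阁小说爬取 GUI版" (Scraping 笔趣阁 novels with Python, GUI edition), we'll add a GUI to this scraper.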
