Preface

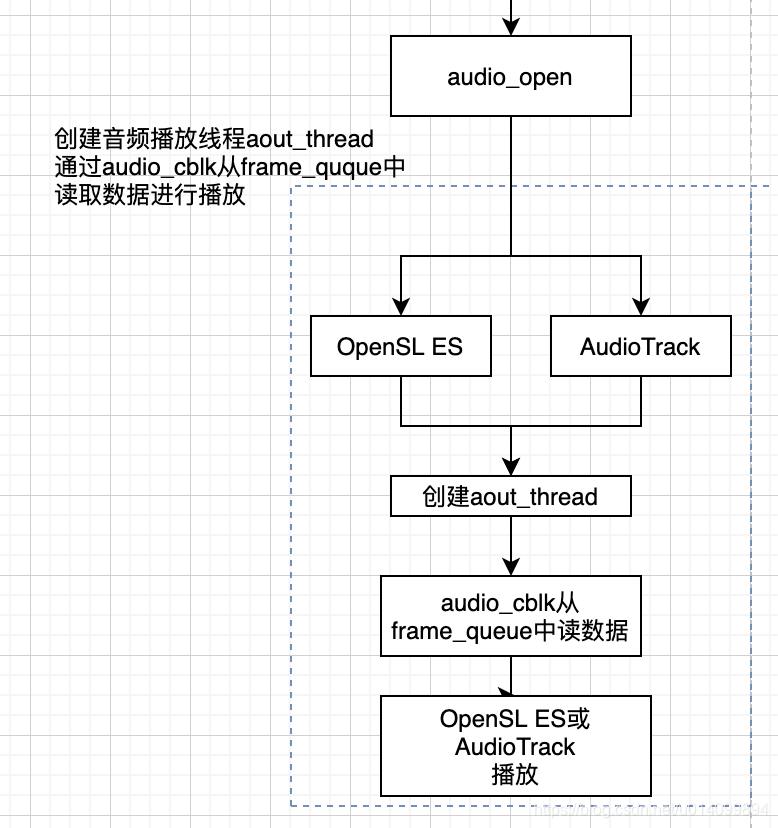

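This is the second article in the flow-analysis series. It walks through audio playback in ijkPlayer, which runs on the aout_thread thread, as shown in the flowchart above.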

The SDL_Aout struct

SDL_Aout is the abstraction over audio playback. It has two implementations: one plays through OpenSL ES, the other through AudioTrack.

// ijksdl_aout.h

typedef struct SDL_Aout_Opaque SDL_Aout_Opaque; // defined differently by each implementation
typedef struct SDL_Aout SDL_Aout;

struct SDL_Aout {
    SDL_mutex *mutex;
    double     minimal_latency_seconds;

    SDL_Class       *opaque_class;
    SDL_Aout_Opaque *opaque;

    void (*free_l)(SDL_Aout *vout);
    int (*open_audio)(SDL_Aout *aout, const SDL_AudioSpec *desired, SDL_AudioSpec *obtained);
    void (*pause_audio)(SDL_Aout *aout, int pause_on);
    void (*flush_audio)(SDL_Aout *aout);
    void (*set_volume)(SDL_Aout *aout, float left, float right);
    void (*close_audio)(SDL_Aout *aout);

    double (*func_get_latency_seconds)(SDL_Aout *aout);
    void (*func_set_default_latency_seconds)(SDL_Aout *aout, double latency);

    // optional
    void (*func_set_playback_rate)(SDL_Aout *aout, float playbackRate);
    void (*func_set_playback_volume)(SDL_Aout *aout, float playbackVolume);
    int (*func_get_audio_persecond_callbacks)(SDL_Aout *aout);

    // Android only
    int (*func_get_audio_session_id)(SDL_Aout *aout);
};

- Implementations:

ijksdl_aout_android_opensles.c

ijksdl_aout_android_audiotrack.c
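To make the pattern concrete, here is a minimal sketch of the same idea: a struct of function pointers plus an opaque pointer that each backend defines for itself. Every name in it (DemoAout, BackendAState, demo_aout_open and so on) is hypothetical and only mirrors the shape of SDL_Aout; it is not the ijkPlayer API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical names throughout: this mirrors the shape of SDL_Aout only. */
typedef struct DemoAout DemoAout;

struct DemoAout {
    void *opaque;                                      /* backend-private state */
    int  (*open_audio)(DemoAout *aout, int sample_rate);
    void (*free_l)(DemoAout *aout);
};

/* Private state of the one demo backend: it remembers the opened sample rate. */
typedef struct {
    int sample_rate;
} BackendAState;

static int backend_a_open(DemoAout *aout, int sample_rate)
{
    BackendAState *st = aout->opaque;
    assert(sample_rate > 0);
    st->sample_rate = sample_rate;
    return 0;
}

static void backend_a_free(DemoAout *aout)
{
    free(aout->opaque);
    free(aout);
}

/* Factory: allocates the generic struct and wires in the backend functions. */
DemoAout *demo_aout_create_backend_a(void)
{
    DemoAout *aout = calloc(1, sizeof(*aout));
    if (!aout)
        return NULL;
    aout->opaque = calloc(1, sizeof(BackendAState));
    if (!aout->opaque) {
        free(aout);
        return NULL;
    }
    aout->open_audio = backend_a_open;
    aout->free_l     = backend_a_free;
    return aout;
}

/* Generic entry point: the caller never sees which backend is behind it. */
int demo_aout_open(DemoAout *aout, int sample_rate)
{
    if (aout && aout->open_audio)
        return aout->open_audio(aout, sample_rate);
    return -1;
}

void demo_aout_free(DemoAout *aout)
{
    if (aout && aout->free_l)
        aout->free_l(aout);
}
```

A caller would create the object with demo_aout_create_backend_a(), call demo_aout_open(aout, 44100), and release it with demo_aout_free(aout). Adding a second backend only means writing another factory that fills in different function pointers.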

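Code flow

1. How is audio played, and what is the entry point?

Playback runs on a dedicated thread, aout_thread, which pulls decoded audio data and plays it.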

static int aout_thread_n(JNIEnv *env, SDL_Aout *aout)
{
    SDL_Aout_Opaque *opaque = aout->opaque;
    SDL_Android_AudioTrack *atrack = opaque->atrack;      // the AudioTrack instance
    SDL_AudioCallback audio_cblk = opaque->spec.callback; // callback that supplies decoded audio
    void *userdata = opaque->spec.userdata;
    // (setup of buffer and copy_size omitted)

    SDL_SetThreadPriority(SDL_THREAD_PRIORITY_HIGH);

    while (!opaque->abort_request) {
        // keep pulling data until asked to stop
        SDL_LockMutex(opaque->wakeup_mutex);
        if (opaque->need_flush) {
            opaque->need_flush = 0;
            SDL_Android_AudioTrack_flush(env, atrack);
        }
        SDL_UnlockMutex(opaque->wakeup_mutex);

        audio_cblk(userdata, buffer, copy_size); // fetch decoded data

        if (opaque->need_flush) {
            // a flush was requested while decoding: drop this chunk
            opaque->need_flush = 0;
            SDL_Android_AudioTrack_flush(env, atrack);
        } else {
            SDL_Android_AudioTrack_write(env, atrack, buffer, copy_size); // writing is what plays the audio
        }
    }

    SDL_Android_AudioTrack_free(env, atrack);
    return 0;
}
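The shape of this loop (pull a chunk through a callback, then either write it or drop it when a flush is pending) can be sketched without any Android dependency. The version below is single-threaded and leaves out the mutex; DemoSink, DemoProducer and the demo_* functions are hypothetical stand-ins, not ijkPlayer code.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef void (*DemoAudioCallback)(void *userdata, unsigned char *stream, int len);

/* Hypothetical stand-in for the AudioTrack plus the loop's control flags. */
typedef struct {
    int    abort_request;  /* set to stop the loop */
    int    need_flush;     /* set when queued audio must be dropped, e.g. after a seek */
    size_t bytes_written;  /* total bytes "played" */
    int    flush_count;    /* how many times the sink was flushed */
} DemoSink;

static void demo_sink_write(DemoSink *sink, const unsigned char *buf, int len)
{
    (void)buf;  /* a real sink would hand the samples to the audio device */
    sink->bytes_written += (size_t)len;
}

static void demo_sink_flush(DemoSink *sink)
{
    sink->flush_count++;
}

/* Pull loop: ask the callback for data, then write it unless a flush is pending. */
void demo_aout_loop(DemoSink *sink, DemoAudioCallback cb, void *userdata)
{
    unsigned char buffer[256];

    assert(sink && cb);
    while (!sink->abort_request) {
        cb(userdata, buffer, (int)sizeof(buffer));
        if (sink->need_flush) {
            sink->need_flush = 0;
            demo_sink_flush(sink);       /* the chunk just fetched is discarded */
        } else {
            demo_sink_write(sink, buffer, (int)sizeof(buffer));
        }
    }
}

/* Demo producer: emits silence, requests a flush on call 2 and a stop on call 4. */
typedef struct {
    DemoSink *sink;
    int       calls;
} DemoProducer;

static void demo_fill(void *userdata, unsigned char *stream, int len)
{
    DemoProducer *p = userdata;

    memset(stream, 0, (size_t)len);
    p->calls++;
    if (p->calls == 2)
        p->sink->need_flush = 1;
    if (p->calls == 4)
        p->sink->abort_request = 1;
}
```

Running demo_aout_loop(&sink, demo_fill, &prod) with a zeroed DemoSink calls the producer four times, flushes once, and writes three chunks of 256 bytes. The chunk fetched during the flush request never reaches the sink, which matches the else branch in aout_thread_n.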

2. How does aout_thread_n get called?

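- stream_component_open calls audio_open, which creates and opens the audio player:

ff_ffplay.c#stream_component_open

-> audio_open

-> SDL_AoutOpenAudio // dispatches to the SDL_Aout implementation

- ijksdl_aout_android_audiotrack.c implementation:

-> ijksdl_aout_android_audiotrack.c#aout_open_audio

-> ijksdl_aout_android_audiotrack.c#aout_open_audio_n, which creates aout_thread to run aout_thread_n

Once audio_open returns, the AudioTrack has been created and opened, and is waiting to read decoded data and play it.

- ijksdl_aout_android_opensles.c implementation:

-> ijksdl_aout_android_opensles.c#aout_open_audio, which creates aout_thread to run aout_thread_n

Once audio_open returns, the SLObjectItf has been created and opened, and is waiting to read decoded data and play it.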

The code below uses ijksdl_aout_android_audiotrack.c as the example:

// ff_ffplay.c, called from stream_component_open

static int audio_open(FFPlayer *opaque, int64_t wanted_channel_layout, int wanted_nb_channels,
                      int wanted_sample_rate, struct AudioParams *audio_hw_params) {
    FFPlayer *ffp = opaque;
    SDL_AudioSpec wanted_spec, spec;

    wanted_spec.callback = sdl_audio_callback;          // set the callback that supplies audio data
    SDL_AoutOpenAudio(ffp->aout, &wanted_spec, &spec);  // open the audio output
    // (remaining setup and return value omitted)
}

// ijksdl_aout.c

int SDL_AoutOpenAudio(SDL_Aout *aout, const SDL_AudioSpec *desired, SDL_AudioSpec *obtained)
{
    if (aout && desired && aout->open_audio)
        return aout->open_audio(aout, desired, obtained);

    return -1;
}

// ijksdl_aout_android_audiotrack.c

static int aout_open_audio(SDL_Aout *aout, const SDL_AudioSpec *desired, SDL_AudioSpec *obtained) {
    // (obtaining the JNIEnv *env for the current thread is omitted)
    return aout_open_audio_n(env, aout, desired, obtained);
}

// ijksdl_aout_android_audiotrack.c

static int aout_open_audio_n(JNIEnv *env, SDL_Aout *aout, const SDL_AudioSpec *desired, SDL_AudioSpec *obtained) {
    SDL_Aout_Opaque *opaque = aout->opaque;

    // create the AudioTrack
    opaque->atrack = SDL_Android_AudioTrack_new_from_sdl_spec(env, desired);

    // start the aout_thread thread, which runs the playback loop
    opaque->audio_tid = SDL_CreateThreadEx(&opaque->_audio_tid, aout_thread, aout, "ff_aout_android");

    // (error handling and return value omitted)
}

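// ijksdl_aout_android_audiotrack.c

static int aout_thread(void *arg) {
    SDL_Aout *aout = arg; // the aout passed to SDL_CreateThreadEx
    // (obtaining the JNIEnv *env for this thread is omitted)
    return aout_thread_n(env, aout);
}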

3. Where is ffp->aout assigned, i.e. when is SDL_Aout initialized?

- How SDL_Aout is created and assigned:

ijkplayer.c#ijkmp_prepare_async

-> ijkplayer.c#ijkmp_prepare_async_l

-> ff_ffplay.c#ffp_prepare_async_l

-> ff_ffpipeline.c#ffpipeline_open_audio_output

-> ffpipeline_android.c#func_open_audio_output // creates the SDL_Aout implementation

// ffpipeline_android.c

static SDL_Aout *func_open_audio_output(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
{
    SDL_Aout *aout = NULL;

    if (ffp->opensles) {
        aout = SDL_AoutAndroid_CreateForOpenSLES();
    } else {
        aout = SDL_AoutAndroid_CreateForAudioTrack();
    }

    if (aout)
        SDL_AoutSetStereoVolume(aout, pipeline->opaque->left_volume, pipeline->opaque->right_volume);

    return aout;
}

- How the ffpipeline is created and assigned:

IjkMediaPlayer_native_setup

-> ijkmp_android_create

-> ffpipeline_android.c#ffpipeline_create_from_android // creates the ffpipeline

4. What audio_cblk does in detail

// ff_ffplay.c. stream is the buffer to fill; the data placed in it is later written to the AudioTrack.

static void sdl_audio_callback(void *opaque, Uint8 *stream, int len) {
    FFPlayer *ffp = opaque;
    VideoState *is = ffp->is;
    int audio_size, len1;

    while (len > 0) {
        if (is->audio_buf_index >= is->audio_buf_size) {
            // audio_buf is used up: call audio_decode_frame to refill it
            audio_size = audio_decode_frame(ffp);
            // (handling of audio_size < 0 omitted)
            is->audio_buf_size = audio_size;
            is->audio_buf_index = 0;
        }

        len1 = is->audio_buf_size - is->audio_buf_index;
        if (len1 > len)
            len1 = len;

        // copy the data into stream
        if (!is->muted && is->audio_buf && is->audio_volume == SDL_MIX_MAXVOLUME) {
            memcpy(stream, (uint8_t *) is->audio_buf + is->audio_buf_index, len1);
        } else {
            // muted or reduced volume: start from silence, then mix in at audio_volume
            memset(stream, 0, len1);
            if (!is->muted && is->audio_buf)
                SDL_MixAudio(stream, (uint8_t *) is->audio_buf + is->audio_buf_index, len1,
                             is->audio_volume);
        }

        // advance; keep going while len > 0
        len -= len1;
        stream += len1;
        is->audio_buf_index += len1;
    }
}
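The bookkeeping with audio_buf_index and audio_buf_size is easier to follow in isolation. The sketch below fills a request of arbitrary length from fixed-size "frames" produced by a fake decoder. DemoState, demo_decode_frame and demo_fill_stream are hypothetical names, and the 5-byte frame size is chosen only so that requests straddle frame boundaries.

```c
#include <assert.h>
#include <string.h>

#define DEMO_FRAME_BYTES 5

/* Hypothetical stand-in for the audio_buf fields of VideoState. */
typedef struct {
    unsigned char audio_buf[DEMO_FRAME_BYTES];
    int           audio_buf_size;   /* valid bytes in audio_buf */
    int           audio_buf_index;  /* bytes of audio_buf already consumed */
    unsigned char next_value;       /* state of the fake decoder */
} DemoState;

/* Fake decoder: every frame holds DEMO_FRAME_BYTES bytes of a running counter. */
static int demo_decode_frame(DemoState *st)
{
    for (int i = 0; i < DEMO_FRAME_BYTES; i++)
        st->audio_buf[i] = st->next_value++;
    return DEMO_FRAME_BYTES;
}

/* Fill exactly len bytes of stream, decoding a new frame whenever one runs out. */
void demo_fill_stream(DemoState *st, unsigned char *stream, int len)
{
    assert(st && stream && len >= 0);
    while (len > 0) {
        if (st->audio_buf_index >= st->audio_buf_size) {
            st->audio_buf_size  = demo_decode_frame(st);
            st->audio_buf_index = 0;
        }

        int len1 = st->audio_buf_size - st->audio_buf_index;
        if (len1 > len)
            len1 = len;   /* the request ends inside this frame */

        memcpy(stream, st->audio_buf + st->audio_buf_index, (size_t)len1);

        len    -= len1;
        stream += len1;
        st->audio_buf_index += len1;
    }
}
```

Starting from a zeroed DemoState, a 12-byte request consumes two whole frames and two bytes of a third, leaving audio_buf_index at 2. The next request picks up from that position, so no decoded byte is lost or repeated across callbacks.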

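static int audio_decode_frame(FFPlayer *ffp) {
    VideoState *is = ffp->is;
    int data_size, resampled_data_size; // data_size: byte size of the decoded frame (computation omitted)
    Frame *af;

    do {
        // get a readable node, i.e. the next decoded audio frame
        if (!(af = frame_queue_peek_readable(&is->sampq))) {
            return -1;
        }
        // advance the read pointer to the next node
        frame_queue_next(&is->sampq);
    } while (af->serial != is->audioq.serial); // skip frames left over from an older serial

    if (/* resampling is needed; condition omitted */) {
        // resample
    } else {
        // use this frame's data directly as audio_buf
        is->audio_buf = af->frame->data[0];
        resampled_data_size = data_size;
    }

    // update audio_clock to the end time of this frame
    is->audio_clock = af->pts + (double) af->frame->nb_samples / af->frame->sample_rate;

    return resampled_data_size;
}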
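Two details of audio_decode_frame lend themselves to a small worked example: skipping frames whose serial no longer matches the packet queue, and computing the clock as the frame's start time plus its duration. The sketch below uses a plain array instead of the real frame queue; DemoFrame, demo_next_current_frame and demo_audio_clock are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the fields of Frame used here. */
typedef struct {
    int    serial;       /* generation counter of the queue that produced the frame */
    double pts;          /* start time in seconds */
    int    nb_samples;   /* samples per channel in the frame */
    int    sample_rate;  /* samples per second */
} DemoFrame;

/*
 * Return the next frame whose serial equals current_serial, advancing *pos past
 * every frame examined. Returns NULL when the array is exhausted.
 */
const DemoFrame *demo_next_current_frame(const DemoFrame *frames, size_t count,
                                         size_t *pos, int current_serial)
{
    assert(frames && pos);
    while (*pos < count) {
        const DemoFrame *af = &frames[(*pos)++];
        if (af->serial == current_serial)
            return af;   /* stale frames before this one were skipped */
    }
    return NULL;
}

/* Clock after the frame has been handed over: start time plus frame duration. */
double demo_audio_clock(const DemoFrame *af)
{
    assert(af && af->sample_rate > 0);
    return af->pts + (double)af->nb_samples / af->sample_rate;
}
```

For a frame with pts 2.0 that holds 480 samples at 48000 Hz, the duration is 480 / 48000 = 0.01 s, so the clock moves to 2.01. Frames carrying an older serial, for example ones decoded before a seek, are passed over without affecting the clock.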