
1. Environment pitfalls

1.1 Fixing Python multiprocessing errors on Windows 10

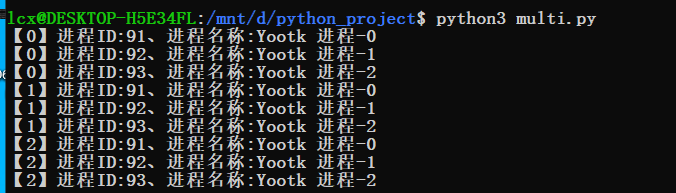

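For reasons I could not figure out, creating multiple processes in Python kept raising errors on my Windows 10 machine, for example with the following code: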

import multiprocessing, time

def worker(delay, count):
    for num in range(count):
        # current_process() returns the Process object; pid and name are attributes
        print("[%s] process ID: %s, process name: %s" % (num, multiprocessing.current_process().pid, multiprocessing.current_process().name))
        time.sleep(delay)

def main():
    for item in range(3):
        p = multiprocessing.Process(target=worker, args=(1, 10,), name="Yootk process %s" % item)
        p.start()

if __name__ == "__main__":
    main()

The error comes from Anaconda on Windows 10.

Running exactly the same code on Ubuntu works fine, which felt like black magic...
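One plausible explanation, offered as an assumption because the traceback is not reproduced here: Windows starts child processes with the spawn method, which re-imports the main module in every child, whereas Linux has traditionally used fork. Under spawn the target function has to be importable from a module, and the code that creates processes has to sit behind `if __name__ == "__main__":`; code typed into an interactive session or a notebook cell often fails for that reason. A minimal sketch of a layout that satisfies both constraints (the `worker` body and the `run_workers` helper are illustrative, not from the original script):

```python
import multiprocessing


def worker(index, queue):
    # Runs in the child process: report which process handled the task.
    queue.put((index, multiprocessing.current_process().name))


def run_workers(count):
    queue = multiprocessing.Queue()
    processes = [
        multiprocessing.Process(target=worker, args=(i, queue), name="worker-%s" % i)
        for i in range(count)
    ]
    for p in processes:
        p.start()
    # Drain the queue before joining so no child blocks on a full pipe.
    results = sorted(queue.get() for _ in processes)
    for p in processes:
        p.join()
    return results


if __name__ == "__main__":
    # Prints "spawn" on Windows; Linux has traditionally defaulted to "fork".
    print(multiprocessing.get_start_method())
    print(run_workers(3))
```

Saving this as a `.py` file and running it from a terminal, rather than from an interactive cell, is the configuration most likely to behave the same on both systems.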

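1.2 Pitfalls when installing the system

At first I used a VMware virtual machine, but the screen felt too small, it used too much memory, and exchanging files with Windows was awkward: I ended up sending files to myself over QQ, which was really slow...

Next I wanted to set up dual boot, but the only USB stick at home was USB 2.0 while both USB ports on the computer are 3.0, so the stick was not recognized and the installer stopped with "unable to find a live file system". Sigh...

In the end, on the advice of Wu (吴大佬), I installed WSL and reinstalled the Python environment I already had on Windows. Because I wanted to run a multi-process selenium crawler, I spent a long time configuring chrome and chromedriver inside WSL, only to read on Zhihu that WSL can call Windows .exe files directly. Sigh...

So my advice is to do Python parallel programming in a Linux environment, and WSL is worth a try.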
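Since WSL can launch Windows executables, a script only needs the WSL-side path of the `.exe`. The sketch below assumes the default WSL layout, where drive `D:` is mounted at `/mnt/d`, and uses the common heuristic that the kernel release string contains "microsoft" under WSL. Both helper names are hypothetical.

```python
import platform


def is_wsl(release=None):
    """Heuristic: WSL kernels report a release string containing 'microsoft'."""
    release = platform.uname().release if release is None else release
    return "microsoft" in release.lower()


def windows_path_to_wsl(path):
    """Map a drive path such as D:\\tools\\x.exe to /mnt/d/tools/x.exe."""
    if len(path) < 3 or path[1] != ":":
        raise ValueError("expected a path that starts with a drive letter")
    drive, rest = path[0], path[2:]
    return "/mnt/" + drive.lower() + rest.replace("\\", "/")


if __name__ == "__main__":
    print(is_wsl())
    print(windows_path_to_wsl("D:\\webdriver\\chromedriver.exe"))
```

The converted path is the kind of value used for `PATH_CHROME_DRIVER` in the scripts of section 3.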

2. Crawler basics

2.1 Basic principles of web crawlers

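- Basic principles of HTTP

- Front-end basics (HTML, CSS, JavaScript, XPath)

- Ready-made Chrome extensions

  - CSS selectors: ChroPath

  - XPath: XPath Helper

- Multithreading: because of Python's GIL, threads cannot run Python bytecode in parallel, so they help with I/O-bound waiting but not with CPU-bound work

- Multiprocessing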
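The point about the GIL is easier to see with a small experiment. In the sketch below `time.sleep` stands in for waiting on the network (an assumption made so the example runs offline); the GIL is released while a thread sleeps or waits on I/O, so the threaded version finishes in roughly the time of one request instead of four. The function names are illustrative.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(page):
    # Stand-in for a network request; the GIL is released while sleeping.
    time.sleep(0.2)
    return page


def timed(fn):
    # Return the function's result together with its wall-clock duration.
    start = time.perf_counter()
    result = fn()
    return result, time.perf_counter() - start


def serial(pages):
    return [fake_download(p) for p in pages]


def threaded(pages):
    with ThreadPoolExecutor(max_workers=len(pages)) as pool:
        return list(pool.map(fake_download, pages))


if __name__ == "__main__":
    pages = [1, 2, 3, 4]
    _, t_serial = timed(lambda: serial(pages))
    _, t_threaded = timed(lambda: threaded(pages))
    print("serial %.2fs, threaded %.2fs" % (t_serial, t_threaded))
```

For CPU-bound parsing the same experiment would show no gain from threads, which is where multiprocessing comes in.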

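2.2 Using the basic crawler libraries

- Fetching HTML that has not been rendered: the requests library

- String matching: the re library

- Parsing libraries: PyQuery, BeautifulSoup

- Efficient storage: MongoDB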
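As a small illustration of the string-matching step, the sketch below pulls stock codes and names out of an HTML fragment with `re`. The HTML snippet and the names in it are made up for the example; in a real crawler the text would come from something like `requests.get(url).text`, and a parser such as PyQuery or BeautifulSoup is usually more robust than a regular expression once the markup gets irregular.

```python
import re

# Hypothetical fragment shaped like a stock table.
HTML = """
<table>
  <tr><td class="code">600000</td><td class="name">Bank A</td></tr>
  <tr><td class="code">000001</td><td class="name">Bank B</td></tr>
</table>
"""

# Six-digit code followed by the name cell on the same row.
ROW = re.compile(r'<td class="code">(\d{6})</td><td class="name">([^<]+)</td>')


def extract_stocks(html):
    # findall returns one (code, name) tuple per matching row.
    return {code: name for code, name in ROW.findall(html)}


if __name__ == "__main__":
    print(extract_stocks(HTML))
```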

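2.3 Scraping different kinds of web pages

- For pages that need no rendering, use the requests library directly, or aiohttp for an asynchronous speed-up

- For data loaded through Ajax, work out the pattern of the request URLs and fetch the data directly

- For rendered pages, simulate a browser with selenium or pyppeteer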
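For the Ajax case, once the request pattern has been read off the browser's network panel, the page URLs can be generated instead of clicked through. The endpoint and parameter names below are hypothetical placeholders, not Eastmoney's real API.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; replace with the URL observed in the network panel.
API = "https://example.com/api/stocks"


def build_page_urls(total_pages, page_size=50):
    # One URL per page, differing only in the "page" query parameter.
    return [
        API + "?" + urlencode({"page": page, "size": page_size})
        for page in range(1, total_pages + 1)
    ]


if __name__ == "__main__":
    for url in build_page_urls(3):
        print(url)
```

Each generated URL can then be fetched with requests or aiohttp and decoded as JSON, with no browser involved.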

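2.4 Countering anti-crawler measures

- Add a proxy server

- Build a proxy pool

Here I recommend Cui Qingcai's (崔庆才) course 《52讲轻松搞定网络爬虫》; the explanations are very clear.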
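A proxy pool can be as simple as rotating through a list of addresses. The sketch below yields dictionaries in the shape that the `proxies` argument of `requests.get` accepts; the addresses are placeholders and the rotation policy (plain round robin, with no health checks) is an assumption made for brevity.

```python
from itertools import cycle

# Placeholder addresses; a real pool would be filled from a proxy provider.
PROXIES = ["http://127.0.0.1:8001", "http://127.0.0.1:8002"]


def proxy_rotation(proxies):
    # Endless round robin over the pool, one mapping per request.
    for proxy in cycle(proxies):
        yield {"http": proxy, "https": proxy}


if __name__ == "__main__":
    rotation = proxy_rotation(PROXIES)
    for _ in range(3):
        print(next(rotation))
```

Usage would look like `requests.get(url, proxies=next(rotation))`, moving on to the next proxy whenever a request is blocked.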

3. Hands-on: scraping Eastmoney (东方财富) with selenium

3.1 Multiprocessing at browser granularity

#!/usr/bin/env python
# coding: utf-8

# In[1]:

# Libraries used (imports the script never uses have been dropped)
import logging
import os
import time
from multiprocessing import Process, Semaphore

import pandas as pd
from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

# In[2]:

# Path to chromedriver (a Windows .exe called from WSL)
PATH_CHROME_DRIVER = "/mnt/d/webdriver/chromedriver.exe"
# Output directory
PATH = "/mnt/d/stock_info/"
# URLs of the index screens
INDEX_URLS = [
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czFdfHM9Z2Z6czF8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czJdfHM9Z2Z6czJ8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czNdfHM9Z2Z6czN8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czRdfHM9Z2Z6czR8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czVdfHM9Z2Z6czV8c3Q9LTE=",
]
# Wait timeout in seconds
TIME_OUT = 10
# Number of result pages for each screen
TOTAL_PAGE = [8, 5, 2, 3, 3]
# Historical data page of an individual stock
BASIC_URL = "http://data.eastmoney.com/zjlx/{stock_code}.html"
# Degree of concurrency
CONCURRENCY = 3
# Semaphore that caps how many scrapers run at the same time
useable = Semaphore(CONCURRENCY)

# In[3]:

class Scrape_Eastmoney(Process):
    def __init__(self, num):
        Process.__init__(self)
        # stock code -> [name, link, industry]
        self.STOCK_LIST = {}
        # date -> closing price
        self.STOCK_HISTORY = {}
        # The browser and its wait helper are created in run(), inside the
        # child process, so the semaphore also limits how many browsers are open.
        self.browser = None
        self.wait = None
        # Which index screen this process handles (1-based)
        self.num = num

    # Create the browser
    def create_browser(self):
        option = Options()
        option.add_experimental_option('excludeSwitches', ['enable-automation'])
        option.add_argument('--headless')
        option.add_experimental_option('useAutomationExtension', False)
        # `options=` replaces the deprecated `chrome_options=`. Recent Selenium 4
        # releases also expect a Service object instead of `executable_path`.
        browser = webdriver.Chrome(options=option, executable_path=PATH_CHROME_DRIVER)
        return browser

    # Scroll to the bottom of the page so lazily loaded content is rendered
    def scroll(self):
        js = "var q=document.documentElement.scrollTop=100000"
        self.browser.execute_script(js)

    # Generic scraping entry point: open the URL and wait for the locator
    def scrape_page(self, url, condition, locator):
        logging.info('scraping %s', url)
        try:
            self.browser.get(url)
            self.wait.until(condition(locator))
        except TimeoutException:
            logging.error("error occurred while scraping %s", url, exc_info=True)

    # Number of result pages for this screen
    def get_total_page(self):
        return TOTAL_PAGE[self.num - 1]

    # Collect the stock codes listed by the screen
    def get_stock_list(self):
        # Open the screen for this index
        self.scrape_page(INDEX_URLS[self.num - 1], condition=EC.presence_of_all_elements_located, locator=(By.CSS_SELECTOR, 'div.mainFrame'))
        total_page = self.get_total_page()
        for page in range(1, total_page + 1):
            stocks = self.browser.find_elements(By.CSS_SELECTOR, "div.stocklist tbody tr")
            for stock in stocks:
                code = stock.find_element(By.CSS_SELECTOR, "td.code").text.strip(' ')
                name = stock.find_element(By.CSS_SELECTOR, "td.name").text.strip(' ')
                link = stock.find_element(By.CSS_SELECTOR, "td.code a").get_attribute('href')
                self.STOCK_LIST[code] = [name, link]
            # Turn to the next page of this screen
            buttons = self.browser.find_elements(By.CSS_SELECTOR, "div#bottompage.pagenav a")
            for btn in buttons:
                # Click the button labelled with the next page number; on the
                # last page no button matches and the outer loop ends.
                if btn.text == str(page + 1):
                    btn.click()
                    break

    # Get the industry of a stock
    def get_industry(self, url):
        self.scrape_page(url, condition=EC.presence_of_all_elements_located, locator=(By.CSS_SELECTOR, 'div.cwzb'))
        self.scroll()
        try:
            industry = self.browser.find_element(By.CSS_SELECTOR, "div.qphox.layout:nth-child(14) div.fr.w790 div.layout:nth-child(2) div.w578 div.cwzb:nth-child(6) table:nth-child(1) tbody:nth-child(2) tr:nth-child(2) td:nth-child(1) > a:nth-child(1)").text
        except Exception:
            # Element not rendered yet: wait, refresh, and try again
            time.sleep(3)
            self.browser.refresh()
            industry = self.get_industry(url)
        return industry

    # Get the price history of a stock
    def get_stock_history(self, stock_code):
        url = BASIC_URL.format(stock_code=stock_code)
        self.STOCK_HISTORY = {}
        self.scrape_page(url, condition=EC.presence_of_all_elements_located, locator=(By.CSS_SELECTOR, '#content_zjlxtable'))
        self.scroll()
        results = self.browser.find_elements(By.CSS_SELECTOR, "div.page:nth-child(3) div.main div.framecontent div.sitebody div.maincont div.contentBox:nth-child(9) div.content2 table.tab1 > tbody:nth-child(2) tr")
        for result in results:
            date = result.find_element(By.CSS_SELECTOR, "td:nth-child(1)").text
            close_price = result.find_element(By.CSS_SELECTOR, "td:nth-child(2)").text
            self.STOCK_HISTORY[date] = close_price

    # Write the stock list to csv
    def write_stock_list(self):
        columns = ["股票代码", "股票名称", "行业"]  # stock code, stock name, industry
        rows = [[k, v[0], v[2]] for k, v in self.STOCK_LIST.items()]
        df = pd.DataFrame(rows, columns=columns)
        df.to_csv("{}股票列表{}.csv".format(PATH, self.num), encoding='utf-8', index=False)

    # Write the stock history to csv
    def write_stock_history(self, stock_code):
        target = "{}所有股票历史信息/{}.csv".format(PATH, stock_code)
        # Skip stocks that have already been saved
        if os.path.exists(target):
            return
        columns = ["日期", "股票收盘价/元"]  # date, closing price (yuan)
        df = pd.DataFrame(list(self.STOCK_HISTORY.items()), columns=columns)
        df["日期"] = pd.to_datetime(df["日期"])
        df.sort_values("日期", ascending=True).to_csv(target, encoding="utf-8", index=False)

    def run(self):
        # Hold one semaphore slot for the whole job
        with useable:
            self.browser = self.create_browser()
            # How long the browser waits for elements to load
            self.wait = WebDriverWait(self.browser, TIME_OUT)
            try:
                self.get_stock_list()
                for k, v in self.STOCK_LIST.items():
                    industry = self.get_industry(v[1])
                    self.STOCK_LIST[k].append(industry)
                self.write_stock_list()
                for stock_code in self.STOCK_LIST.keys():
                    self.get_stock_history(stock_code)
                    self.write_stock_history(stock_code)
            finally:
                # Close the browser even if scraping fails
                self.browser.quit()

# In[ ]:

def main():
    # Create the output directories if they do not exist yet
    os.makedirs(PATH, exist_ok=True)
    os.makedirs("{}所有股票历史信息/".format(PATH), exist_ok=True)
    processes = []
    # One process (and one browser) per index screen, numbered from 1
    for num in range(1, len(INDEX_URLS) + 1):
        p = Scrape_Eastmoney(num)
        p.daemon = True
        processes.append(p)
        p.start()
    for p in processes:
        p.join()

if __name__ == "__main__":
    main()
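The script above starts one process per index screen but lets only `CONCURRENCY` of them work at once by guarding `run()` with a semaphore. The sketch below isolates that pattern. It swaps threads in for processes and a short sleep for the scraping work, purely so that it runs without browsers; the `run_bounded` helper is illustrative.

```python
import threading
import time


def run_bounded(task_count, limit):
    """Run task_count tasks, at most `limit` at a time; return the observed peak."""
    slots = threading.Semaphore(limit)
    lock = threading.Lock()
    state = {"active": 0, "peak": 0}

    def task():
        with slots:  # blocks while `limit` tasks are already running
            with lock:
                state["active"] += 1
                state["peak"] = max(state["peak"], state["active"])
            time.sleep(0.05)  # stand-in for the scraping work
            with lock:
                state["active"] -= 1

    threads = [threading.Thread(target=task) for _ in range(task_count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["peak"]


if __name__ == "__main__":
    print(run_bounded(task_count=5, limit=3))
```

With five tasks and a limit of three, the reported peak never exceeds three, which mirrors five index screens sharing three scraping slots.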

3.2 Multiprocessing at the granularity of batches of URLs

#!/usr/bin/env python
# coding: utf-8

# In[1]:

# Libraries used (imports the script never uses have been dropped)
import logging
import math
import os
import time
from multiprocessing import Pool

import pandas as pd
from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

# In[2]:

# Wait timeout in seconds
TIME_OUT = 10
# Path to chromedriver (a Windows .exe called from WSL)
PATH_CHROME_DRIVER = "/mnt/d/webdriver/chromedriver.exe"
# Historical data page of an individual stock
BASIC_URL = "http://data.eastmoney.com/zjlx/{stock_code}.html"
# Number of result pages for each screen
TOTAL_PAGE = [8, 5, 2, 3, 3]
# All stocks: stock code -> [name, link, industry]
STOCK_LIST = {}
# Output directory
PATH = "/mnt/d/stock_info/"
# URLs of the index screens
INDEX_URLS = [
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czFdfHM9Z2Z6czF8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czJdfHM9Z2Z6czJ8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czNdfHM9Z2Z6czN8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czRdfHM9Z2Z6czR8c3Q9LTE=",
    "http://data.eastmoney.com/xuangu/#Yz1bZ2Z6czVdfHM9Z2Z6czV8c3Q9LTE=",
]
# Degree of concurrency
CONCURRENCY = 5

# In[3]:

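# Drop the automation switch and extension so the site is less likely to
# detect WebDriver and block the crawler
def create_browser():
    option = Options()
    option.add_experimental_option('excludeSwitches', ['enable-automation'])
    option.add_experimental_option('useAutomationExtension', False)
    # option.add_argument('--headless')  # uncomment to hide the browser window
    # `options=` replaces the deprecated `chrome_options=`. Recent Selenium 4
    # releases also expect a Service object instead of `executable_path`.
    browser = webdriver.Chrome(options=option, executable_path=PATH_CHROME_DRIVER)
    return browser

# In[4]:

# Load the page in selenium and wait until the locator is present
def scrape_page(browser, url, condition, locator):
    # How long the browser waits for elements to load
    wait = WebDriverWait(browser, TIME_OUT)
    logging.info('scraping %s', url)
    try:
        browser.get(url)
        wait.until(condition(locator))
    except TimeoutException:
        logging.error('error occurred while scraping %s', url, exc_info=True)

# In[5]:

# Scroll the browser down to the bottom of the page
def scroll(browser):
    js = "var q=document.documentElement.scrollTop=100000"
    browser.execute_script(js)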

# In[6]:

# Write the stock history to csv
def write_stock_history(stock_code, stock_history):
    columns = ["日期", "股票收盘价/元"]  # date, closing price (yuan)
    df = pd.DataFrame(list(stock_history.items()), columns=columns)
    df["日期"] = pd.to_datetime(df["日期"])
    df.sort_values("日期", ascending=True).to_csv("{}所有股票历史信息/{}.csv".format(PATH, stock_code), encoding="utf-8", index=False)

# In[7]:

# Write the stock list to csv
def write_stock_list():
    columns = ["股票代码", "股票名称", "行业"]  # stock code, stock name, industry
    rows = [[k, v[0], v[2]] for k, v in STOCK_LIST.items()]
    df = pd.DataFrame(rows, columns=columns)
    df.to_csv("{}股票列表.csv".format(PATH), encoding='utf-8', index=False)

# In[8]:

# Get the industry of each stock in one batch of codes
def get_industry(stock_codes):
    browser = create_browser()
    stock_list = {}
    for stock_code in stock_codes:
        stock_list[stock_code] = STOCK_LIST[stock_code]
        url = stock_list[stock_code][1]
        scrape_page(browser, url, condition=EC.presence_of_all_elements_located, locator=(By.CSS_SELECTOR, 'div.cwzb'))
        scroll(browser)
        # Keep retrying until the industry link has been rendered
        while True:
            try:
                industry = browser.find_element(By.CSS_SELECTOR, "div.qphox.layout:nth-child(14) div.fr.w790 div.layout:nth-child(2) div.w578 div.cwzb:nth-child(6) table:nth-child(1) tbody:nth-child(2) tr:nth-child(2) td:nth-child(1) > a:nth-child(1)").text
                stock_list[stock_code].append(industry)
                break
            except Exception:
                time.sleep(3)
                browser.refresh()
                scroll(browser)
    browser.quit()
    return stock_list

# In[9]:

# Collect the stock codes listed by one screen
def get_stock_list(url, total_page):
    browser = create_browser()
    stock_list = {}
    scrape_page(browser, url, condition=EC.presence_of_all_elements_located, locator=(By.CSS_SELECTOR, 'div.mainFrame'))
    for page in range(1, total_page + 1):
        # Retry if reading the rows fails
        while True:
            try:
                stocks = browser.find_elements(By.CSS_SELECTOR, "div.stocklist tbody tr")
                break
            except Exception:
                time.sleep(3)
                browser.refresh()
                scroll(browser)
        for stock in stocks:
            code = stock.find_element(By.CSS_SELECTOR, "td.code").text.strip(' ')
            name = stock.find_element(By.CSS_SELECTOR, "td.name").text.strip(' ')
            link = stock.find_element(By.CSS_SELECTOR, "td.code a").get_attribute('href')
            stock_list[code] = [name, link]
        # Turn to the next page of this screen
        buttons = browser.find_elements(By.CSS_SELECTOR, "div#bottompage.pagenav a")
        for btn in buttons:
            # Click the button labelled with the next page number; on the
            # last page no button matches and the outer loop ends.
            if btn.text == str(page + 1):
                btn.click()
                break
    browser.quit()
    return stock_list

# In[10]:

# Get the price history of each stock in one batch of codes
def get_stock_history(stock_codes):
    browser = create_browser()
    for stock_code in stock_codes:
        url = BASIC_URL.format(stock_code=stock_code)
        stock_history = {}
        scrape_page(browser, url, condition=EC.presence_of_all_elements_located, locator=(By.CSS_SELECTOR, '#content_zjlxtable'))
        browser.refresh()
        scroll(browser)
        # Retry if reading the rows fails
        while True:
            try:
                results = browser.find_elements(By.CSS_SELECTOR, "div.page:nth-child(3) div.main div.framecontent div.sitebody div.maincont div.contentBox:nth-child(9) div.content2 table.tab1 > tbody:nth-child(2) tr")
                break
            except Exception:
                time.sleep(3)
                browser.refresh()
                scroll(browser)
        # Note: an empty table is only refreshed here, not read again,
        # so such a stock ends up with an empty csv.
        if not results:
            browser.refresh()
        for result in results:
            date = result.find_element(By.CSS_SELECTOR, "td:nth-child(1)").text
            close_price = result.find_element(By.CSS_SELECTOR, "td:nth-child(2)").text
            stock_history[date] = close_price
        write_stock_history(stock_code, stock_history)
    browser.quit()

# In[11]:

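def main():
    # Create the output directories, including the one for per-stock history files
    os.makedirs(PATH, exist_ok=True)
    os.makedirs("{}所有股票历史信息/".format(PATH), exist_ok=True)
    # Log the crawl
    logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s: %(message)s')
    # Crawl all stock codes with multiple processes, one task per screen
    rets = []
    pool = Pool(processes=CONCURRENCY)
    for url, total_page in zip(INDEX_URLS, TOTAL_PAGE):
        rets.append(pool.apply_async(get_stock_list, args=(url, total_page)))
    pool.close()
    pool.join()
    for ret in rets:
        STOCK_LIST.update(ret.get())
    # Crawl the industries with multiple processes; each worker gets one slice
    # of the codes. The workers read the global STOCK_LIST filled above, which
    # relies on the fork start method copying it into the children.
    rets = []
    pool = Pool(processes=CONCURRENCY)
    LIST = list(STOCK_LIST.keys())
    task_url_num = math.ceil(len(LIST) / CONCURRENCY)
    for i in range(CONCURRENCY):
        rets.append(pool.apply_async(get_industry, args=(LIST[i * task_url_num:(i + 1) * task_url_num],)))
    pool.close()
    pool.join()
    for ret in rets:
        STOCK_LIST.update(ret.get())
    write_stock_list()
    # Crawl the price histories with multiple processes, using the same slices
    pool = Pool(processes=CONCURRENCY)
    for i in range(CONCURRENCY):
        pool.apply_async(get_stock_history, args=(LIST[i * task_url_num:(i + 1) * task_url_num],))
    pool.close()
    pool.join()

# The guard keeps child processes from re-running main() when the module is imported
if __name__ == "__main__":
    main()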
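The task split used in `main()` hands each worker a contiguous slice of `math.ceil(len(LIST) / CONCURRENCY)` codes. The sketch below pulls that arithmetic into a helper so it can be checked on its own. The name `split_tasks` is hypothetical, and unlike the script it skips empty slices, which would otherwise start a browser with nothing to do.

```python
import math


def split_tasks(items, workers):
    """Split items into contiguous chunks of ceil(len(items) / workers) elements."""
    if not items:
        return []
    size = math.ceil(len(items) / workers)
    # Stepping by `size` never produces an empty trailing chunk.
    return [items[start:start + size] for start in range(0, len(items), size)]


if __name__ == "__main__":
    codes = ["600000", "600004", "600006", "600007", "600008", "600009", "600010"]
    for chunk in split_tasks(codes, workers=5):
        print(chunk)
```

With seven codes and five workers the chunk size is two, so only four chunks are produced; the fifth worker in the original loop would have received an empty list.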