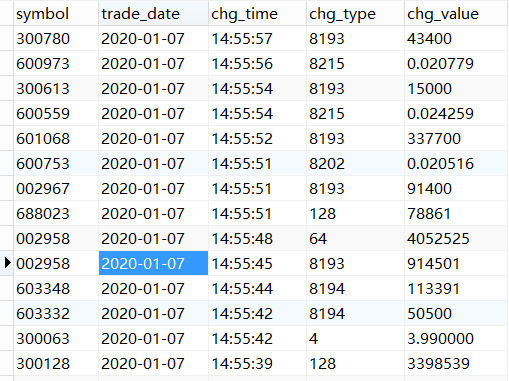

先上个图看下网页版数据、mysql结构化数据

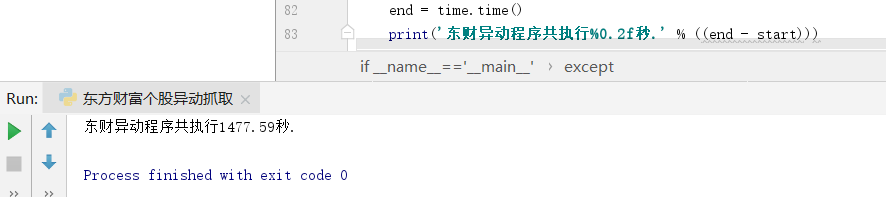

通过Python读写mysql执行时间为:1477s,而通过Pandas读写mysql执行时间为:47s,方法2速度几乎是方法1的30倍。在于IO读写上,Python多线程显得非常鸡肋,具体分析可参考:https://cuiqingcai.com/3325.html

1、Python读写Mysql

# -*- coding: utf-8 -*-

import pandas as pd

import tushare as ts

import pymysql

import time

import requests

import json

from multiprocessing import Pool

import traceback

# ====================东方财富个股盘口异动数据抓取============================================================================================================

def EMydSpider(param_list):

# 抓取东财个股盘口异动数据:http://quote.eastmoney.com/changes

# 创建计数器

success, fail = 0, 0

# 获取当天日期

cur_date = time.strftime("%Y%m%d", time.localtime())

# 创建MySQL连接对象

conn_mysql = pymysql.connect(user='root', password='123456', database='stock', charset='utf8')

cursor = conn_mysql.cursor()

header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (Khtml, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3676.400 QQBrowser/10.5.3738.400"}

url = "http://push2ex.eastmoney.com/getAllStockChanges?type=8201,8202,8193,4,32,64,8207,8209,8211,8213,8215,8204,8203,8194,8,16,128,8208,8210,8212,8214,8216"

session = requests.Session()

for param in param_list:

try:

html = json.loads(session.get(url=url, params=param, headers=header).text)

allstock = html['data']['allstock']

for stock in allstock:

stk_code = stock['c'] # 股票代码,无后缀

stk_name = stock['n'] # 股票名称

chg_time = stock['tm'] # 异动时间

chg_type = stock['t'] # 异动类型

chg_value = stock['i'] # 异动值

try:

sql = '''insert into stock_yd_list(stk_code,trade_date,chg_time,chg_type,chg_value) values('%s','%s','%s','%s','%s')''' % (stk_code, cur_date, chg_time, chg_type, chg_value)

cursor.execute(sql)

conn_mysql.commit()

success += 1

print("东方财富盘口异动,第%d条数据存储完成......" % success)

except:

conn_mysql.rollback()

fail += 1

traceback.print_exc()

print("东方财富盘口异动,第%d条数据存储失败......" % fail)

except:

traceback.print_exc()

exit()

cursor.close()

conn_mysql.close()

print('当天个股盘口异动数据获取完毕,新入库数据:%d条' % success)

print('当天个股盘口异动数据获取完毕,入库失败数据:%d条' % fail)

# ====================主函数====================================================================================================================================

if __name__=='__main__':

print("东财异动程序开始执行")

start = time.time()

# 定义空列表

param_list = []

for page in range(0,300):

param = {"pageindex": page, "pagesize": '64', "ut": '7eea3edcaed734bea9cbfc24409ed989', "dpt": 'wzchanges'}

param_list.append(param)

# 创建多进程

pool = Pool(processes=4)

# 开启多进程爬取东财异动数据

try:

pool.map(EMydSpider, (param_list,))

except:

print("多进程执行error")

traceback.print_exc()

end = time.time()

print('东财异动程序共执行%0.2f秒.' % ((end - start)))

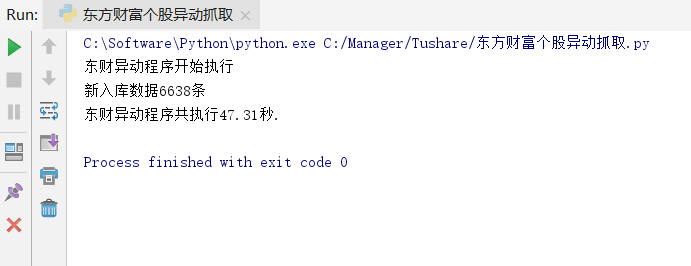

执行时间:

2、Pandas读写Mysql

# -*- coding: utf-8 -*-

import pandas as pd

import tushare as ts

import time

import requests

import json

from sqlalchemy import create_engine

from multiprocessing import Pool

from requests.packages.urllib3.exceptions import InsecureRequestWarning

import traceback

# ====================东方财富个股盘口异动数据抓取============================================================================================================

def EMydSpider(param_list):

# 抓取东财个股盘口异动数据:http://quote.eastmoney.com/changes

# 获取当天日期

cur_date = time.strftime("%Y%m%d", time.localtime())

# 创建空列表

html_list = []

header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (Khtml, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3676.400 QQBrowser/10.5.3738.400"}

url = "http://push2ex.eastmoney.com/getAllStockChanges?type=8201,8202,8193,4,32,64,8207,8209,8211,8213,8215,8204,8203,8194,8,16,128,8208,8210,8212,8214,8216"

session = requests.Session()

for param in param_list:

try:

html = json.loads(session.get(url=url, params=param, headers=header).text)

html_list.append(html)

except:

break

# 创建用于存储异动数据的空Dataframe

stock_yd = pd.DataFrame(columns=('symbol', 'trade_date', 'chg_time','chg_type','chg_value'))

for html in html_list:

try:

allstock = html['data']['allstock']

for stock in allstock:

code = stock['c'] # 股票代码,无后缀

stk_name = stock['n'] # 股票名称

chg_time = stock['tm'] # 异动时间

chg_type = stock['t'] # 异动类型

chg_value = stock['i'] # 异动值

stock_yd = stock_yd.append({'symbol':code, 'trade_date': cur_date, 'chg_time': chg_time,'chg_type':chg_type,'chg_value':chg_value}, ignore_index=True)

except:

pass

# 创建Pandas读写数据库引擎

engine_mysql = create_engine('mysql://root:[email protected]/stock?charset=utf8')

# Pandas数据存储

stock_yd.to_sql('stock_yd_list', engine_mysql, index=False, if_exists='append')

print("新入库数据%s条" % stock_yd.shape[0])

# ====================主函数====================================================================================================================================

if __name__=='__main__':

print("东财异动程序开始执行")

start = time.time()

# 定义空列表

param_list = []

for page in range(0,300):

param = {"pageindex": page, "pagesize": '64', "ut": '7eea3edcaed734bea9cbfc24409ed989', "dpt": 'wzchanges'}

param_list.append(param)

# 创建多进程

pool = Pool(processes=4)

# 开启多进程爬取东财异动数据

try:

pool.map(EMydSpider, (param_list,))

except:

print("多进程执行error")

traceback.print_exc()

end = time.time()

print('东财异动程序共执行%0.2f秒.' % ((end - start)))

执行时间: