The main difficulty in this assignment is data processing. Let's first look at the raw data:

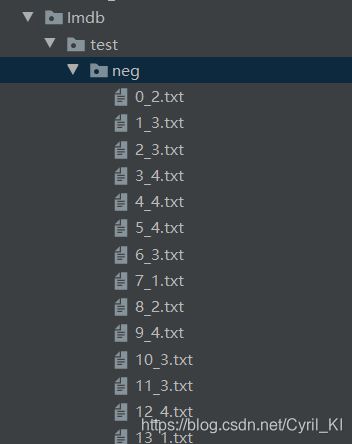

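We only need the neg and pos folders under test and the neg and pos folders under train. Take test/neg as an example:

Open any one of its txt files:

As you can see, each txt file contains a single long review.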

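Next, let's look at the data format the LSTM needs. Suppose we have 25,000 sentences, each truncated or padded to 250 words (explained in detail below), and each word is represented by a 50-dimensional vector, so each sentence has shape [250, 50]. If we split the whole training set (25,000 sentences) into 250 batches of 100 sentences each, the training set has shape [250, 100, 250, 50]. The first 250 is the number of batches, 100 is the number of sentences per batch, the second 250 is the number of words per sentence, and 50 is the dimension of each word vector. The rest of this post explains how to build this dataset.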
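Below is a small NumPy sketch of this shape bookkeeping. Toy sizes stand in for 25,000 × 250 × 50 so it runs instantly; the sizes are made up for illustration. Reshaping a [sentences, words, dim] array into [batches, batch_size, words, dim] is exactly the batching step. The element count at the end shows how large the real array is.

```python
import numpy as np

# Toy sizes (hypothetical); the article uses 25000 sentences, 250 words, 50 dims, batch 100
num_sentences, seq_len, embed_dim, batch_size = 12, 4, 3, 3

# One embedded dataset: [sentences, words per sentence, vector dimension]
data = np.arange(num_sentences * seq_len * embed_dim, dtype=np.float32)
data = data.reshape(num_sentences, seq_len, embed_dim)

# Split into consecutive mini-batches: [batches, batch size, words, dimension]
num_batches = num_sentences // batch_size
batched = data[:num_batches * batch_size].reshape(num_batches, batch_size, seq_len, embed_dim)
print(batched.shape)  # (4, 3, 4, 3)

# Batch 1 holds sentences 3, 4 and 5
assert np.array_equal(batched[1], data[3:6])

# With the article's sizes: 250 * 100 * 250 * 50 values per dataset
full_count = 250 * 100 * 250 * 50
print(full_count)            # 312500000
print(full_count * 4 / 1e9)  # 1.25 (GB if stored as float32)
```

An `np.array` built from nested Python float lists, as in `process_batch` below, defaults to float64. That doubles the figure above.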

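- First, we need a 50-dimensional vector for every word. Here we use the pre-trained GloVe word vectors (glove.6B) available online:

Each file has 400,000 lines, and each line holds one word followed by its vector. The first file (glove.6B.50d.txt) is 50-dimensional, and the others are 100-, 200- and 300-dimensional. We read the 50-dimensional file and build a word-vector table from the word and the vector on each line: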

```python
import numpy as np


def load_cab_vector():
    word_list = []
    vocabulary_vectors = []
    # 'with' closes the file once all lines are read
    with open('glove.6B.50d.txt', encoding='utf-8') as data:
        for line in data:
            temp = line.strip('\n').split(' ')  # a list: [word, v1, ..., v50]
            name = temp[0]
            word_list.append(name.lower())
            vector = [temp[i] for i in range(1, len(temp))]  # the vector part
            vector = list(map(float, vector))  # convert to floats
            vocabulary_vectors.append(vector)
    # save
    vocabulary_vectors = np.array(vocabulary_vectors)
    word_list = np.array(word_list)
    np.save('vocabulary_vectors_1', vocabulary_vectors)
    np.save('word_list_1', word_list)
    return vocabulary_vectors, word_list
```
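The sketch below shows what each GloVe line looks like and how the loop above splits it. It parses two lines in the same `word v1 v2 ...` format from an in-memory string. The words and numbers are invented, and the vectors are 3-dimensional instead of 50.

```python
import io
import numpy as np

# Two hypothetical lines in GloVe text format: a word followed by its vector components
sample = io.StringIO("the 0.1 0.2 0.3\nMovie -0.5 0.0 1.5\n")

word_list = []
vocabulary_vectors = []
for line in sample:
    temp = line.strip('\n').split(' ')      # e.g. ['the', '0.1', '0.2', '0.3']
    word_list.append(temp[0].lower())       # the word itself, lowercased
    vocabulary_vectors.append([float(v) for v in temp[1:]])  # the vector part

vocabulary_vectors = np.array(vocabulary_vectors)
word_list = np.array(word_list)
print(word_list)                 # ['the' 'movie']
print(vocabulary_vectors.shape)  # (2, 3)
```

Row `i` of `vocabulary_vectors` is the vector of `word_list[i]`. The later steps rely on this shared index.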

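This gives us a word-vector table made of two lists. word_list holds 400,000 words, and vocabulary_vectors holds the corresponding 400,000 50-dimensional vectors. Parsing the text file is slow, so we convert both lists to arrays and save them with np.save. This trick is used repeatedly below:

```python
vocabulary_vectors = np.array(vocabulary_vectors)
word_list = np.array(word_list)
np.save('vocabulary_vectors_1', vocabulary_vectors)
np.save('word_list_1', word_list)
```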

We now have two .npy files: vocabulary_vectors_1.npy and word_list_1.npy.
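`np.save` appends the `.npy` extension when the file name lacks one. That is why `np.save('word_list_1', ...)` writes `word_list_1.npy`, and why later steps load `'word_list_1.npy'`. Here is a small round-trip sketch in a temporary directory, using toy arrays:

```python
import os
import tempfile
import numpy as np

words = np.array(['the', 'movie'])
vectors = np.array([[0.1, 0.2], [0.3, 0.4]])

with tempfile.TemporaryDirectory() as tmp:
    # No extension given: np.save adds '.npy'
    np.save(os.path.join(tmp, 'word_list_1'), words)
    np.save(os.path.join(tmp, 'vocabulary_vectors_1'), vectors)
    saved = sorted(os.listdir(tmp))
    print(saved)  # ['vocabulary_vectors_1.npy', 'word_list_1.npy']

    # Load back with the full file name, as the later steps do
    loaded_words = np.load(os.path.join(tmp, 'word_list_1.npy'))
    loaded_vectors = np.load(os.path.join(tmp, 'vocabulary_vectors_1.npy'))
    print(loaded_words.tolist())  # ['the', 'movie']
```

Arrays of plain strings or floats like these load without `allow_pickle=True`. That flag is only required for object arrays, so passing it in the later steps is harmless but not needed.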

- Process the training and test sets. We read all the files, 50,000 reviews in total across training and test:

```python
import os
import re


def load_data(path, flag='train'):
    labels = ['pos', 'neg']
    data = []
    for label in labels:
        # sorted() keeps the file order identical on every call
        files = sorted(os.listdir(os.path.join(path, flag, label)))
        # punctuation to remove
        r = '[’!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~\n。!,]+'
        for file in files:
            with open(os.path.join(path, flag, label, file), 'r', encoding='utf8') as rf:
                temp = rf.read().replace('\n', '')
                temp = temp.replace('<br /><br />', ' ')  # HTML line breaks
                temp = re.sub(r, '', temp)
                temp = temp.split(' ')
                temp = [temp[i].lower() for i in range(len(temp)) if temp[i] != '']
                if label == 'pos':
                    data.append([temp, 1])
                elif label == 'neg':
                    data.append([temp, 0])
    return data
```
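You can try the cleaning steps inside load_data on a single made-up review string. The helper name `clean_review` is hypothetical. It applies the same replacements and the same punctuation regex as the loop above.

```python
import re

# Same punctuation pattern as in load_data
r = '[’!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~\n。!,]+'


def clean_review(text):
    """Hypothetical helper: the per-file cleaning steps of load_data."""
    temp = text.replace('\n', '')
    temp = temp.replace('<br /><br />', ' ')      # HTML line breaks become spaces
    temp = re.sub(r, '', temp)                    # drop punctuation
    temp = temp.split(' ')
    return [w.lower() for w in temp if w != '']   # lowercase, drop empty strings


print(clean_review("I loved it!<br /><br />Isn't it great?"))
# ['i', 'loved', 'it', 'isnt', 'it', 'great']
```

Removing apostrophes merges contractions ("Isn't" becomes "isnt"). This explains tokens such as 'isnt' and 'im' in the output below.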

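The function returns a list. Each element is itself a list containing the words of one review and its label (1 for pos, 0 for neg). For example, let's print train_data[0]:

```python
train_data = load_data('Imdb')
print(train_data[0])
```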

The output is:

```
[['bromwell', 'high', 'is', 'a', 'cartoon', 'comedy', 'it', 'ran', 'at', 'the', 'same', 'time', 'as', 'some', 'other', 'programs', 'about', 'school', 'life', 'such', 'as', 'teachers', 'my', '35', 'years', 'in', 'the', 'teaching', 'profession', 'lead', 'me', 'to', 'believe', 'that', 'bromwell', 'highs', 'satire', 'is', 'much', 'closer', 'to', 'reality', 'than', 'is', 'teachers', 'the', 'scramble', 'to', 'survive', 'financially', 'the', 'insightful', 'students', 'who', 'can', 'see', 'right', 'through', 'their', 'pathetic', 'teachers', 'pomp', 'the', 'pettiness', 'of', 'the', 'whole', 'situation', 'all', 'remind', 'me', 'of', 'the', 'schools', 'i', 'knew', 'and', 'their', 'students', 'when', 'i', 'saw', 'the', 'episode', 'in', 'which', 'a', 'student', 'repeatedly', 'tried', 'to', 'burn', 'down', 'the', 'school', 'i', 'immediately', 'recalled', 'at', 'high', 'a', 'classic', 'line', 'inspector', 'im', 'here', 'to', 'sack', 'one', 'of', 'your', 'teachers', 'student', 'welcome', 'to', 'bromwell', 'high', 'i', 'expect', 'that', 'many', 'adults', 'of', 'my', 'age', 'think', 'that', 'bromwell', 'high', 'is', 'far', 'fetched', 'what', 'a', 'pity', 'that', 'it', 'isnt'], 1]
```

The first element of this list is the list of words, and the second is the label.

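- Next, convert each sentence into indices by finding the position of each of its words in word_list. For the sentence above, for example, we look up every word's index in word_list. We fix the maximum sentence length at 250. Reviews with more than 250 words are truncated, and shorter ones are padded with 0 at the end: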

```python
import numpy as np


# flag='train' -> save as 'sentence_code_1'; flag='test' -> save as 'sentence_code_2'
def process_sentence(flag='test', out_file='sentence_code_2'):
    sentence_code = []
    word_list = np.load('word_list_1.npy', allow_pickle=True)
    word_list = word_list.tolist()
    # word -> index dict; setdefault keeps the first occurrence, like list.index()
    word_to_index = {}
    for idx, word in enumerate(word_list):
        word_to_index.setdefault(word, idx)
    data = load_data('Imdb', flag)  # load_data is defined above
    for i in range(len(data)):
        if i % 1000 == 0:
            print(i)  # progress
        vec = data[i][0]
        temp = []  # temp holds each word's index in the vocabulary
        for word in vec:
            # words not found are mapped to 399999, the last entry of the vocabulary
            temp.append(word_to_index.get(word, 399999))
        if len(temp) < 250:
            temp += [0] * (250 - len(temp))  # pad with 0
        else:
            temp = temp[0:250]  # keep only the first 250
        sentence_code.append(temp)
    sentence_code = np.array(sentence_code)
    np.save(out_file, sentence_code)  # save to disk
```

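Run the code above once on the training set and once on the test set. This produces two files: sentence_code_1.npy (training) and sentence_code_2.npy (test). Each array has shape [25000, 250], that is, 25,000 sentences, each stored as the word_list indices of its 250 words.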
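The per-sentence lookup plus truncation/padding can be sketched with a dict instead of `list.index`. This avoids a linear scan of the 400,000-word list for every word. The tiny vocabulary and the `encode` helper below are hypothetical. As in the article, unknown words get a fixed index and padding uses index 0.

```python
def encode(tokens, word_to_index, max_len=250, unk_index=399999, pad_index=0):
    """Hypothetical helper: map words to vocabulary indices, then truncate or pad."""
    codes = [word_to_index.get(w, unk_index) for w in tokens]  # unknown -> unk_index
    if len(codes) < max_len:
        codes += [pad_index] * (max_len - len(codes))  # pad at the end
    else:
        codes = codes[:max_len]  # keep only the first max_len words
    return codes


# Toy vocabulary (hypothetical); the real one comes from word_list_1.npy
word_list = ['the', 'movie', 'was', 'great']
word_to_index = {}
for i, w in enumerate(word_list):
    word_to_index.setdefault(w, i)  # keep the first occurrence, like list.index

print(encode(['the', 'movie', 'was', 'awful'], word_to_index, max_len=6, unk_index=99))
# [0, 1, 2, 99, 0, 0]
print(encode(['great'] * 10, word_to_index, max_len=3))
# [3, 3, 3]
```

Padding index 0 is a real vocabulary entry. In glove.6B.50d.txt the first line is the word "the", so padded positions receive the vector of "the" rather than zeros.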

- Batch the data. We split the 25,000 samples into 250 batches of 100 sentences each, and use the stored indices to look up each word's vector in vocabulary_vectors_1:

```python
import random
import numpy as np


def process_batch(batchSize):
    index = [i for i in range(25000)]
    random.shuffle(index)
    # 25,000 training and 25,000 test samples
    test_data = load_data('Imdb', flag='test')
    train_data = load_data('Imdb')
    # shuffle (the same permutation is applied to data, labels and codes)
    train_data = [train_data[i] for i in index]
    test_data = [test_data[i] for i in index]
    # load the sentence indices: 25000 * 250 training set
    sentence_code_1 = np.load('sentence_code_1.npy', allow_pickle=True)
    sentence_code_1 = sentence_code_1.tolist()
    sentence_code_1 = [sentence_code_1[i] for i in index]
    # 25000 * 250 test set
    sentence_code_2 = np.load('sentence_code_2.npy', allow_pickle=True)
    sentence_code_2 = sentence_code_2.tolist()
    sentence_code_2 = [sentence_code_2[i] for i in index]
    vocabulary_vectors = np.load('vocabulary_vectors_1.npy', allow_pickle=True)
    vocabulary_vectors = vocabulary_vectors.tolist()
    # replace each index with its word vector: each sentence_code becomes 25000 * 250 * 50
    for i in range(25000):
        for j in range(250):
            sentence_code_1[i][j] = vocabulary_vectors[sentence_code_1[i][j]]
            sentence_code_2[i][j] = vocabulary_vectors[sentence_code_2[i][j]]
    labels_train = []
    labels_test = []
    arr_train = []
    arr_test = []
    # mini-batches: 25000 // batchSize of them (250 when batchSize = 100)
    num_batches = 25000 // batchSize
    for i in range(1, num_batches + 1):
        arr_train.append(sentence_code_1[(i - 1) * batchSize:i * batchSize])
        labels_train.append([train_data[j][1] for j in range((i - 1) * batchSize, i * batchSize)])
        arr_test.append(sentence_code_2[(i - 1) * batchSize:i * batchSize])
        labels_test.append([test_data[j][1] for j in range((i - 1) * batchSize, i * batchSize)])
    arr_train = np.array(arr_train)
    arr_test = np.array(arr_test)
    labels_train = np.array(labels_train)
    labels_test = np.array(labels_test)
    # np.save('arr_train', arr_train)
    # np.save('arr_test', arr_test)
    # np.save('labels_train', labels_train)
    # np.save('labels_test', labels_test)
    return arr_train, labels_train, arr_test, labels_test
```

The function returns four arrays. Taking arr_train as an example, its shape is [250, 100, 250, 50]: 250 batches, 100 sentences per batch, 250 words per sentence, and a 50-dimensional vector for each word.
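The double loop that swaps each index for its vector can also be written with NumPy integer-array indexing. `vocabulary_vectors[codes]` with `codes` of shape [N, 250] returns an [N, 250, 50] array in one step. The sketch below uses toy sizes, and random matrices stand in for the real .npy files. It also applies the same shared shuffle and batching.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins with hypothetical sizes: a 10-word vocabulary with 4-dim vectors,
# 6 sentences of length 5 encoded as indices, and their labels
vocabulary_vectors = rng.standard_normal((10, 4))
sentence_code = rng.integers(0, 10, size=(6, 5))
labels = np.array([1, 1, 1, 0, 0, 0])

# Shuffle codes and labels with the same permutation so they stay aligned
perm = rng.permutation(len(sentence_code))
sentence_code, labels = sentence_code[perm], labels[perm]

# Integer-array indexing replaces every index with its vector: [6, 5] -> [6, 5, 4]
embedded = vocabulary_vectors[sentence_code]

# Group into mini-batches of 2: data [3, 2, 5, 4], labels [3, 2]
batch_size = 2
num_batches = len(embedded) // batch_size
arr = embedded[:num_batches * batch_size].reshape(num_batches, batch_size, 5, 4)
lab = labels[:num_batches * batch_size].reshape(num_batches, batch_size)
print(arr.shape, lab.shape)  # (3, 2, 5, 4) (3, 2)
```

Calling `.astype(np.float32)` on the vector table before the lookup halves the memory compared with the float64 arrays built from Python lists. That matters at 312,500,000 values per dataset.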

- Build the LSTM network:

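```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class RNN(nn.Module):
    def __init__(self):
        super(RNN, self).__init__()
        # 2-layer bidirectional LSTM; input is [batch, 250, 50] because batch_first=True
        self.lstm = nn.LSTM(input_size=50, hidden_size=5, num_layers=2,
                            batch_first=True, bidirectional=True)
        self.fc1 = nn.Linear(5 * 2, 64)  # forward + backward hidden states
        self.fc2 = nn.Linear(64, 2)

    def forward(self, input):
        x = input
        x, (h_n, c_n) = self.lstm(x)
        # h_n: [num_layers * 2, batch, 5]; the last two entries are the
        # last layer's final forward and backward hidden states
        output_f = h_n[-2, :, :]
        output_b = h_n[-1, :, :]
        output = torch.cat([output_f, output_b], dim=-1)  # [batch, 10]
        out_fc1 = self.fc1(output)
        out_relu = F.relu(out_fc1)
        out = self.fc2(out_relu)
        # log-probabilities of the two classes
        return F.log_softmax(out, dim=-1)
```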
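Why `h_n[-2]` and `h_n[-1]`? The PyTorch docs state that `h_n` has shape (num_layers * num_directions, batch, hidden_size). They also state it can be viewed as (num_layers, num_directions, batch, hidden_size), with direction 0 forward and 1 backward. `batch_first=True` does not change this layout. The NumPy sketch below mimics that layout with placeholder values, so PyTorch is not needed. It shows that the last two slices are the top layer's forward and backward states. Concatenating them yields the 5 * 2 = 10 features that fc1 expects.

```python
import numpy as np

num_layers, num_directions, batch, hidden_size = 2, 2, 3, 5

# Placeholder h_n with the documented layout: (num_layers * num_directions, batch, hidden_size)
h_n = np.arange(num_layers * num_directions * batch * hidden_size, dtype=np.float32)
h_n = h_n.reshape(num_layers * num_directions, batch, hidden_size)

# Separate layers and directions: (num_layers, num_directions, batch, hidden_size)
per_layer = h_n.reshape(num_layers, num_directions, batch, hidden_size)

output_f = h_n[-2]  # last layer, forward direction
output_b = h_n[-1]  # last layer, backward direction
assert np.array_equal(output_f, per_layer[-1, 0])
assert np.array_equal(output_b, per_layer[-1, 1])

output = np.concatenate([output_f, output_b], axis=-1)
print(output.shape)  # (3, 10), matching nn.Linear(5 * 2, 64)
```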

- Training and testing:

```python
import torch
import torch.nn as nn
from torch import optim


def main():
    # load all the data
    print('loading... (about 1 min 50 s)')
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    epoch_num = 5
    # arr_train is 250 * 100 * 250 * 50
    arr_train, labels_train, arr_test, labels_test = process_batch(100)
    print('training... (about 1 min 20 s on GPU)')
    net = RNN().to(device)
    optimizer = optim.Adam(net.parameters(), lr=0.0001)
    # CrossEntropyLoss applies log_softmax itself; since the net already outputs
    # log-probabilities, this is equivalent to nn.NLLLoss here
    criterion = nn.CrossEntropyLoss().to(device)
    for i in range(epoch_num):
        for j in range(len(arr_train)):
            x = arr_train[j]
            y = labels_train[j]
            input_ = torch.tensor(x, dtype=torch.float32).to(device)
            label = torch.tensor(y, dtype=torch.long).to(device)
            output = net(input_)
            optimizer.zero_grad()  # reset gradients
            loss = criterion(output, label)  # compute the loss
            loss.backward()  # backpropagate
            optimizer.step()  # update parameters
        print('epoch:%d loss:%.5f' % (i, loss.item()))
    print('testing... (about 20 s on GPU)')
    net.eval()
    num = 0
    total = 0
    with torch.no_grad():  # no gradients needed for evaluation
        for i in range(len(arr_test)):
            xx = arr_test[i]
            yy = labels_test[i]  # labels of the i-th test batch
            input_ = torch.tensor(xx, dtype=torch.float32).to(device)
            label = torch.tensor(yy, dtype=torch.long).to(device)
            output = net(input_)
            pred = output.max(dim=-1)[1]
            num += (pred == label).sum().item()
            total += label.size(0)
    print('Accuracy:', num / total)
```
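Two details of the loop above are illustrated below with NumPy on made-up logits. First, `nn.CrossEntropyLoss` applies log_softmax to its input, and applying log_softmax to values that are already log-probabilities leaves them unchanged. So feeding the network's `F.log_softmax` output to `CrossEntropyLoss` gives the same loss as `nn.NLLLoss` would. Second, `output.max(dim=-1)[1]` is the argmax over the two classes, and accuracy is the fraction of matches. The `log_softmax` helper here is a hand-written NumPy version, not the PyTorch function.

```python
import numpy as np


def log_softmax(x):
    """NumPy log-softmax over the last axis (numerically stable)."""
    shifted = x - x.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


logits = np.array([[2.0, -1.0], [0.5, 1.5], [-0.3, -0.2]])  # made-up fc2 outputs
labels = np.array([0, 1, 0])

log_probs = log_softmax(logits)
# Idempotent: the rows of exp(log_probs) already sum to 1, so nothing changes
assert np.allclose(log_softmax(log_probs), log_probs)

# Mean negative log-likelihood of the true class (what NLLLoss computes)
nll = -log_probs[np.arange(len(labels)), labels].mean()

# Prediction = index of the larger log-probability, like output.max(dim=-1)[1]
pred = log_probs.argmax(axis=-1)
accuracy = (pred == labels).mean()
print(pred, accuracy)
```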

Full code:

```python
# -*- coding: utf-8 -*-
"""
@Time : 2020/8/19 21:10
@Author :KI
@File :LSTM.py
@Motto:Hungry And Humble
"""
import os
import random
import re

import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import optim


def load_data(path, flag='train'):
    labels = ['pos', 'neg']
    data = []
    for label in labels:
        # sorted() keeps the file order identical on every call
        files = sorted(os.listdir(os.path.join(path, flag, label)))
        # punctuation to remove
        r = '[’!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~\n。!,]+'
        for file in files:
            with open(os.path.join(path, flag, label, file), 'r', encoding='utf8') as rf:
                temp = rf.read().replace('\n', '')
                temp = temp.replace('<br /><br />', ' ')
                temp = re.sub(r, '', temp)
                temp = temp.split(' ')
                temp = [temp[i].lower() for i in range(len(temp)) if temp[i] != '']
                if label == 'pos':
                    data.append([temp, 1])
                elif label == 'neg':
                    data.append([temp, 0])
    return data


# Convert each sentence to word indices; maximum length 250
def process_sentence(flag='test', out_file='sentence_code_2'):
    sentence_code = []
    word_list = np.load('word_list_1.npy', allow_pickle=True)
    word_list = word_list.tolist()
    # word -> index dict; setdefault keeps the first occurrence, like list.index()
    word_to_index = {}
    for idx, word in enumerate(word_list):
        word_to_index.setdefault(word, idx)
    data = load_data('Imdb', flag)
    for i in range(len(data)):
        if i % 1000 == 0:
            print(i)  # progress
        vec = data[i][0]
        temp = []  # temp holds each word's index in the vocabulary
        for word in vec:
            # words not found are mapped to 399999, the last entry of the vocabulary
            temp.append(word_to_index.get(word, 399999))
        if len(temp) < 250:
            temp += [0] * (250 - len(temp))  # pad with 0
        else:
            temp = temp[0:250]  # keep only the first 250
        sentence_code.append(temp)
    sentence_code = np.array(sentence_code)
    np.save(out_file, sentence_code)  # save to disk


# Build the word-vector table
def load_cab_vector():
    word_list = []
    vocabulary_vectors = []
    with open('glove.6B.50d.txt', encoding='utf-8') as data:
        for line in data:
            temp = line.strip('\n').split(' ')  # a list: [word, v1, ..., v50]
            name = temp[0]
            word_list.append(name.lower())
            vector = [temp[i] for i in range(1, len(temp))]  # the vector part
            vector = list(map(float, vector))  # convert to floats
            vocabulary_vectors.append(vector)
    # save
    vocabulary_vectors = np.array(vocabulary_vectors)
    word_list = np.array(word_list)
    np.save('vocabulary_vectors_1', vocabulary_vectors)
    np.save('word_list_1', word_list)
    return vocabulary_vectors, word_list


# Split the data into mini-batches
def process_batch(batchSize):
    index = [i for i in range(25000)]
    random.shuffle(index)
    # 25,000 training and 25,000 test samples
    test_data = load_data('Imdb', flag='test')
    train_data = load_data('Imdb')
    # shuffle (the same permutation is applied to data, labels and codes)
    train_data = [train_data[i] for i in index]
    test_data = [test_data[i] for i in index]
    # load the sentence indices: 25000 * 250 training set
    sentence_code_1 = np.load('sentence_code_1.npy', allow_pickle=True)
    sentence_code_1 = sentence_code_1.tolist()
    sentence_code_1 = [sentence_code_1[i] for i in index]
    # 25000 * 250 test set
    sentence_code_2 = np.load('sentence_code_2.npy', allow_pickle=True)
    sentence_code_2 = sentence_code_2.tolist()
    sentence_code_2 = [sentence_code_2[i] for i in index]
    vocabulary_vectors = np.load('vocabulary_vectors_1.npy', allow_pickle=True)
    vocabulary_vectors = vocabulary_vectors.tolist()
    # replace each index with its word vector: each sentence_code becomes 25000 * 250 * 50
    for i in range(25000):
        for j in range(250):
            sentence_code_1[i][j] = vocabulary_vectors[sentence_code_1[i][j]]
            sentence_code_2[i][j] = vocabulary_vectors[sentence_code_2[i][j]]
    labels_train = []
    labels_test = []
    arr_train = []
    arr_test = []
    # mini-batches: 25000 // batchSize of them (250 when batchSize = 100)
    num_batches = 25000 // batchSize
    for i in range(1, num_batches + 1):
        arr_train.append(sentence_code_1[(i - 1) * batchSize:i * batchSize])
        labels_train.append([train_data[j][1] for j in range((i - 1) * batchSize, i * batchSize)])
        arr_test.append(sentence_code_2[(i - 1) * batchSize:i * batchSize])
        labels_test.append([test_data[j][1] for j in range((i - 1) * batchSize, i * batchSize)])
    arr_train = np.array(arr_train)
    arr_test = np.array(arr_test)
    labels_train = np.array(labels_train)
    labels_test = np.array(labels_test)
    # np.save('arr_train', arr_train)
    # np.save('arr_test', arr_test)
    # np.save('labels_train', labels_train)
    # np.save('labels_test', labels_test)
    return arr_train, labels_train, arr_test, labels_test


class RNN(nn.Module):
    def __init__(self):
        super(RNN, self).__init__()
        self.lstm = nn.LSTM(input_size=50, hidden_size=5, num_layers=2,
                            batch_first=True, bidirectional=True)
        self.fc1 = nn.Linear(5 * 2, 64)
        self.fc2 = nn.Linear(64, 2)

    def forward(self, input):
        x = input
        x, (h_n, c_n) = self.lstm(x)
        # last layer's final forward and backward hidden states
        output_f = h_n[-2, :, :]
        output_b = h_n[-1, :, :]
        output = torch.cat([output_f, output_b], dim=-1)
        out_fc1 = self.fc1(output)
        out_relu = F.relu(out_fc1)
        out = self.fc2(out_relu)
        # log-probabilities
        return F.log_softmax(out, dim=-1)


# Training and testing
def main():
    # load all the data
    print('loading... (about 1 min 50 s)')
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    epoch_num = 5
    # arr_train is 250 * 100 * 250 * 50
    arr_train, labels_train, arr_test, labels_test = process_batch(100)
    print('training... (about 1 min 20 s on GPU)')
    net = RNN().to(device)
    optimizer = optim.Adam(net.parameters(), lr=0.0001)
    # equivalent to nn.NLLLoss here, since the net already outputs log-probabilities
    criterion = nn.CrossEntropyLoss().to(device)
    for i in range(epoch_num):
        for j in range(len(arr_train)):
            x = arr_train[j]
            y = labels_train[j]
            input_ = torch.tensor(x, dtype=torch.float32).to(device)
            label = torch.tensor(y, dtype=torch.long).to(device)
            output = net(input_)
            optimizer.zero_grad()  # reset gradients
            loss = criterion(output, label)  # compute the loss
            loss.backward()  # backpropagate
            optimizer.step()  # update parameters
        print('epoch:%d loss:%.5f' % (i, loss.item()))
    print('testing... (about 20 s on GPU)')
    net.eval()
    num = 0
    total = 0
    with torch.no_grad():
        for i in range(len(arr_test)):
            xx = arr_test[i]
            yy = labels_test[i]  # labels of the i-th test batch
            input_ = torch.tensor(xx, dtype=torch.float32).to(device)
            label = torch.tensor(yy, dtype=torch.long).to(device)
            output = net(input_)
            pred = output.max(dim=-1)[1]
            num += (pred == label).sum().item()
            total += label.size(0)
    print('Accuracy:', num / total)


if __name__ == '__main__':
    # One-off preprocessing that creates the .npy files used by process_batch:
    # load_cab_vector()
    # process_sentence('train', 'sentence_code_1')
    # process_sentence('test', 'sentence_code_2')
    main()
```

The model's hyperparameters are still being tuned; updates will follow!