Getting started with TensorFlow

1. Walkthrough of the simple_model function

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.Session, ...)

# clear old variables
tf.reset_default_graph()

# set up input (i.e. the data that changes every batch)
# The first dim is None and is set automatically based on the batch size fed in
X = tf.placeholder(tf.float32, [None, 32, 32, 3])
y = tf.placeholder(tf.int64, [None])
is_training = tf.placeholder(tf.bool)

def simple_model(X, y):
    # define our weights (e.g. init_two_layer_convnet)
    # set up variables
    # 32 conv kernels, each of size 7*7*3
    # output size: (floor((32-7)/stride)+1) * (floor((32-7)/stride)+1) * 32
    # with stride=2: 13*13*32 = 5408
    Wconv1 = tf.get_variable("Wconv1", shape=[7, 7, 3, 32])
    bconv1 = tf.get_variable("bconv1", shape=[32])
    W1 = tf.get_variable("W1", shape=[5408, 10])
    b1 = tf.get_variable("b1", shape=[10])

    # define our graph (e.g. two_layer_convnet)
    # per the official docs, strides is a 1-D tensor of four elements (NHWC order);
    # the first and last elements must be 1, so only the middle two can be changed:
    # they are the vertical (height) and horizontal (width) stride, respectively.
    a1 = tf.nn.conv2d(X, Wconv1, strides=[1, 2, 2, 1], padding='VALID') + bconv1
    h1 = tf.nn.relu(a1)
    # 13*13*32 -> 5408 features per example
    h1_flat = tf.reshape(h1, [-1, 5408])
    y_out = tf.matmul(h1_flat, W1) + b1
    return y_out

y_out = simple_model(X, y)

# define our loss
# tf.one_hot(indices, depth) converts the labels into a one-hot tensor, e.g.
# labels [0 1 2 3 4] become
# [[1 0 0 0 0]
#  [0 1 0 0 0]
#  [0 0 1 0 0]
#  [0 0 0 1 0]
#  [0 0 0 0 1]]
total_loss = tf.losses.hinge_loss(labels=tf.one_hot(y, 10), logits=y_out)
# hinge_loss already reduces to a scalar by default, so this reduce_mean is a no-op
mean_loss = tf.reduce_mean(total_loss)

# define our optimizer
optimizer = tf.train.AdamOptimizer(5e-4)  # select optimizer and set learning rate
train_step = optimizer.minimize(mean_loss)
```
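The NumPy sketch below shows what `tf.one_hot` and `tf.losses.hinge_loss` compute, as a way to build intuition for the loss above. It follows our reading of the TF 1.x implementation. Labels in {0, 1} are mapped to {-1, +1}, and each of the class scores gets an independent binary hinge max(0, 1 - label * logit). With the default weights and reduction, the result is the mean over all N × classes entries. The helper names `one_hot` and `elementwise_hinge_loss` are hypothetical, not TensorFlow APIs.

```python
import numpy as np

def one_hot(labels, depth):
    """NumPy equivalent of tf.one_hot(labels, depth) for 1-D integer labels."""
    return np.eye(depth)[labels]

def elementwise_hinge_loss(labels_01, logits):
    """Sketch of tf.losses.hinge_loss with default arguments (hypothetical helper)."""
    signs = 2.0 * labels_01 - 1.0                     # {0, 1} -> {-1, +1}
    losses = np.maximum(0.0, 1.0 - signs * logits)    # binary hinge per (example, class)
    return losses.mean()                              # average over every entry

labels = np.array([0, 2])
logits = np.array([[2.0, -1.0, 0.5],
                   [0.0, -3.0, 0.5]])
print(one_hot(labels, 3))
print(elementwise_hinge_loss(one_hot(labels, 3), logits))
```

Each class is scored on its own here. That makes this loss different from a multiclass SVM loss, which compares every wrong-class score with the correct-class score.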

2. The run_model function

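We have already defined a graph of operations above. To execute a TensorFlow graph, that is, to feed it input data and compute results, we first need to create a `tf.Session` object. A session encapsulates the control and state of the TensorFlow runtime.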

```python
import math
import numpy as np
import matplotlib.pyplot as plt

# relies on the global placeholders X, y and is_training defined above
def run_model(session, predict, loss_val, Xd, yd,
              epochs=1, batch_size=64, print_every=100,
              training=None, plot_losses=False):
    # have tensorflow compute accuracy
    # tf.argmax returns the index of the largest value in each row of predict
    correct_prediction = tf.equal(tf.argmax(predict, 1), y)
    # tf.cast converts the input tensor to the given dtype
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    # shuffle indices
    # np.random.shuffle shuffles the array in place
    train_indicies = np.arange(Xd.shape[0])
    np.random.shuffle(train_indicies)

    training_now = training is not None

    # set up the values we want to compute (and optimize)
    # if we have a training op, fetch it in place of accuracy
    # (use the loss passed in as loss_val, not the global mean_loss)
    variables = [loss_val, correct_prediction, accuracy]
    if training_now:
        variables[-1] = training

    # counter
    iter_cnt = 0
    for e in range(epochs):
        # keep track of losses and accuracy
        correct = 0
        losses = []
        # make sure we iterate over the dataset once
        # math.ceil returns the smallest integer >= its argument
        for i in range(int(math.ceil(Xd.shape[0] / batch_size))):
            # generate indices for the batch
            start_idx = (i * batch_size) % Xd.shape[0]
            idx = train_indicies[start_idx:start_idx + batch_size]

            # create a feed dictionary for this batch
            feed_dict = {X: Xd[idx, :],
                         y: yd[idx],
                         is_training: training_now}
            # in the last iteration the actual batch size may be smaller than batch_size
            actual_batch_size = yd[idx].shape[0]

            # have tensorflow compute loss and correct predictions
            # and (if given) perform a training step; the third fetched value
            # is accuracy, or the (ignored) result of the train op when training
            loss, corr, _ = session.run(variables, feed_dict=feed_dict)

            # aggregate performance stats
            losses.append(loss * actual_batch_size)
            correct += np.sum(corr)

            # print every now and then
            if training_now and (iter_cnt % print_every) == 0:
                print("Iteration {0}: with minibatch training loss = {1:.3g} and accuracy of {2:.2g}"
                      .format(iter_cnt, loss, np.sum(corr) / actual_batch_size))
            iter_cnt += 1
        total_correct = correct / Xd.shape[0]
        total_loss = np.sum(losses) / Xd.shape[0]
        print("Epoch {2}, Overall loss = {0:.3g} and accuracy of {1:.3g}"
              .format(total_loss, total_correct, e + 1))
        if plot_losses:
            plt.plot(losses)
            plt.grid(True)
            plt.title('Epoch {} Loss'.format(e + 1))
            plt.xlabel('minibatch number')
            plt.ylabel('minibatch loss')
            plt.show()
    return total_loss, total_correct
```
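You can check the bookkeeping in `run_model` without TensorFlow. It shuffles the indices, walks them in `ceil(N / batch_size)` chunks, and compares argmax predictions with the labels. Below is a NumPy sketch of that logic. `minibatch_indices` and `batch_accuracy` are hypothetical helper names, and a seeded `np.random.default_rng` stands in for `np.random.shuffle`.

```python
import math
import numpy as np

def minibatch_indices(n, batch_size, rng):
    """Yield shuffled index arrays that cover range(n) exactly once (hypothetical helper)."""
    order = np.arange(n)
    rng.shuffle(order)  # in-place shuffle, like np.random.shuffle
    for i in range(int(math.ceil(n / batch_size))):
        start = (i * batch_size) % n
        yield order[start:start + batch_size]

def batch_accuracy(scores, labels):
    """Fraction of rows whose argmax equals the label (argmax + equal + reduce_mean)."""
    return np.mean(np.argmax(scores, axis=1) == labels)

rng = np.random.default_rng(0)
sizes = [len(idx) for idx in minibatch_indices(10, 4, rng)]
print(sizes)  # [4, 4, 2]: the last batch is smaller
scores = np.array([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]])
print(batch_accuracy(scores, np.array([1, 0, 0])))
```

Because the last batch can be smaller, `run_model` weights each minibatch loss by `actual_batch_size` before dividing by the dataset size.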

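```python
# X_train, y_train, X_val, y_val are the training/validation arrays loaded earlier (not shown here)
with tf.Session() as sess:
    # note: tf.device only affects ops created inside this block;
    # the model ops defined above were created outside it
    with tf.device("/cpu:0"):  # "/cpu:0" or "/gpu:0"
        sess.run(tf.global_variables_initializer())
        print('Training')
        run_model(sess, y_out, mean_loss, X_train, y_train, 1, 64, 100, train_step, True)
        print('Validation')
        run_model(sess, y_out, mean_loss, X_val, y_val, 1, 64)
```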

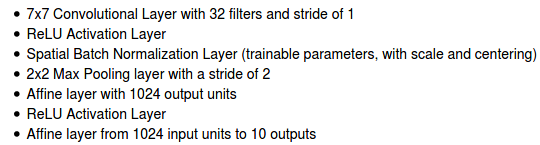

3. Training a slightly more complex model yourself

- Requirements:

The model architecture is defined as follows:

```python
# clear old variables
tf.reset_default_graph()

# define our input (i.e. the data that changes every batch)
# The first dim is None and is set automatically based on the batch size fed in
X = tf.placeholder(tf.float32, [None, 32, 32, 3])
y = tf.placeholder(tf.int64, [None])
is_training = tf.placeholder(tf.bool)

# define model
def complex_model(X, y, is_training):
    # set up variables
    Wconv1 = tf.get_variable("Wconv1", shape=[7, 7, 3, 32])
    bconv1 = tf.get_variable("bconv1", shape=[32])
    W1 = tf.get_variable("W1", shape=[5408, 1024])
    b1 = tf.get_variable("b1", shape=[1024])
    W2 = tf.get_variable("W2", shape=[1024, 10])
    b2 = tf.get_variable("b2", shape=[10])

    # define the compute graph
    # 32x32x3 -> 7x7 conv, stride 1, VALID -> 26x26x32
    conv1_out = tf.nn.conv2d(X, Wconv1, strides=[1, 1, 1, 1], padding='VALID') + bconv1
    # is_training selects batch statistics (True) or the moving averages (False)
    bn1 = tf.layers.batch_normalization(conv1_out, center=True, scale=True, training=is_training)
    relu_bn1 = tf.nn.relu(bn1)
    # 2x2 max-pool, stride 2 -> 13x13x32
    pool1 = tf.nn.max_pool(relu_bn1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')
    # 13*13*32 = 5408 features per example
    conv_flat = tf.reshape(pool1, [-1, 5408])
    affine = tf.matmul(conv_flat, W1) + b1
    bn2 = tf.layers.batch_normalization(affine, center=True, scale=True, training=is_training)
    relu_bn2 = tf.nn.relu(bn2)
    y_out = tf.matmul(relu_bn2, W2) + b2
    return y_out

y_out = complex_model(X, y, is_training)
```
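The `is_training` placeholder matters because batch normalization behaves differently in its two modes. Below is a simplified NumPy sketch, not TensorFlow's implementation. In training mode it normalizes with the current batch's mean and variance and updates exponential moving averages. In inference mode it uses only those moving averages. The defaults `momentum=0.99` and `eps=1e-3` mirror the `tf.layers.batch_normalization` defaults. The function name and the `state` dictionary are hypothetical, and the real layer may differ in details such as fused kernels or variance correction. In TF 1.x graph mode, the moving-average updates are separate ops collected in `tf.GraphKeys.UPDATE_OPS`, so they only run if the training step depends on them.

```python
import numpy as np

def batchnorm_forward(x, gamma, beta, state, training, momentum=0.99, eps=1e-3):
    """Simplified batch norm over axis 0 (hypothetical helper, not TF's code)."""
    if training:
        # training mode: normalize with this batch's statistics ...
        mean = x.mean(axis=0)
        var = x.var(axis=0)
        # ... and update the moving averages used later at inference time
        state["moving_mean"] = momentum * state["moving_mean"] + (1 - momentum) * mean
        state["moving_var"] = momentum * state["moving_var"] + (1 - momentum) * var
    else:
        # inference mode: use only the stored moving averages
        mean, var = state["moving_mean"], state["moving_var"]
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta  # scale=True learns gamma, center=True learns beta

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(64, 4))
state = {"moving_mean": np.zeros(4), "moving_var": np.ones(4)}
gamma, beta = np.ones(4), np.zeros(4)

out_train = batchnorm_forward(x, gamma, beta, state, training=True)
out_eval = batchnorm_forward(x, gamma, beta, state, training=False)
print(out_train.mean(axis=0))  # close to 0: normalized with batch statistics
print(out_eval.mean(axis=0))   # far from 0: the moving averages have barely moved after one step
```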

The optimizer and loss function are chosen as follows:

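```python
# Inputs
#     y_out: is what your model computes
#     y: is your TensorFlow variable with label information
# Outputs
#     mean_loss: a TensorFlow variable (scalar) with numerical loss
#     optimizer: a TensorFlow optimizer
# This should be ~3 lines of code!
mean_loss = None
optimizer = None

total_loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=tf.one_hot(y, 10), logits=y_out))
mean_loss = tf.reduce_mean(total_loss)  # total_loss is already a scalar mean, so this is a no-op
optimizer = tf.train.RMSPropOptimizer(1e-3)

# batch normalization requires this extra dependency: its moving-average
# update ops live in UPDATE_OPS and must run with every training step
extra_update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
with tf.control_dependencies(extra_update_ops):
    train_step = optimizer.minimize(mean_loss)
```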
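`tf.nn.softmax_cross_entropy_with_logits_v2` returns one loss per example, and `tf.reduce_mean` averages those losses over the batch. Below is a NumPy sketch of the per-example computation. It uses the usual max-subtraction trick for numerical stability. `softmax_cross_entropy` is a hypothetical name, not a TensorFlow API.

```python
import numpy as np

def softmax_cross_entropy(onehot_labels, logits):
    """Per-example loss -sum(labels * log_softmax(logits)) (hypothetical helper)."""
    # subtract the row max so exp() cannot overflow; log-softmax is unchanged by the shift
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -(onehot_labels * log_probs).sum(axis=1)

logits = np.array([[2.0, 1.0, 0.1],
                   [0.0, 0.0, 0.0],
                   [1000.0, 0.0, 0.0]])
labels = np.eye(3)[[0, 2, 0]]                        # same role as tf.one_hot(y, 3)
per_example = softmax_cross_entropy(labels, logits)  # shape (3,), one loss per row
batch_loss = per_example.mean()                      # what tf.reduce_mean adds on top
print(per_example, batch_loss)
```

With integer labels, `tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y, logits=y_out)` computes the same per-example loss without building the one-hot tensor.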

Training results: