These are notes from my learning process. Without further ado, here is the code:

#include <opencv2/core/core.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/flann/flann.hpp>
#include <opencv2/xfeatures2d/nonfree.hpp>
#include <opencv2/xfeatures2d.hpp>
#include <iostream>
#include <vector>

int main() {
    cv::Mat srcImage1 = cv::imread("img1.png", 1);
    cv::Mat srcImage2 = cv::imread("img2.png", 1);
    if (!srcImage1.data || !srcImage2.data) {
        std::cout << "No images" << std::endl;
        return -1;  // stop here, there is nothing to process
    }

    int minHessian = 400;
    cv::Ptr<cv::xfeatures2d::SurfFeatureDetector> detector = cv::xfeatures2d::SurfFeatureDetector::create(minHessian);

    // keypoint stores the pixel coordinates of the feature points
    std::vector<cv::KeyPoint> keypoint1, keypoint2;
    cv::Mat dstImage1, dstImage2;

    // Detect the feature points and compute their descriptors
    detector->detect(srcImage1, keypoint1);
    detector->detect(srcImage2, keypoint2);
    detector->compute(srcImage1, keypoint1, dstImage1);
    detector->compute(srcImage2, keypoint2, dstImage2);

    // dstImage stores the SURF descriptors: one row per feature point, each a
    // 64-dimensional vector, so size() prints [64 x N]
    std::cout << dstImage1.size() << std::endl;

    // keypImage is the source image with the feature points drawn on it
    cv::Mat keypImage1, keypImage2;
    cv::drawKeypoints(srcImage1, keypoint1, keypImage1);
    cv::drawKeypoints(srcImage2, keypoint2, keypImage2);
    //cv::imshow("keypoint1", keypImage1);
    //cv::imshow("keypoint2", keypImage2);

    // Match the feature points
    cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("FlannBased");
    std::vector<cv::DMatch> match;
    matcher->match(dstImage1, dstImage2, match);

    // Filter the coarse matches by comparing their distances to keep the good ones
    double mindist = 100;
    double maxdist = 0;
    // Iterate over match.size() rather than dstImage1.rows so the index is always valid
    for (size_t i = 0; i < match.size(); i++) {
        double dist = match[i].distance;
        if (dist < mindist) mindist = dist;
        if (dist > maxdist) maxdist = dist;
    }
    std::cout << mindist << std::endl;
    std::cout << maxdist << std::endl;

    std::vector<cv::DMatch> goodmatch;
    for (size_t i = 0; i < match.size(); i++) {
        // The exact distance condition depends on the situation;
        // here only the match(es) at the minimum distance are kept
        if (match[i].distance == mindist) {
            goodmatch.push_back(match[i]);
            std::cout << match[i].queryIdx << "-------" << match[i].trainIdx << std::endl;
        }
    }

    cv::Mat matchImage;
    cv::drawMatches(srcImage1, keypoint1, srcImage2, keypoint2, goodmatch, matchImage);
    std::cout << goodmatch.size() << std::endl;
    cv::imshow("goodmatch", matchImage);
    cv::waitKey(0);
    return 0;
}

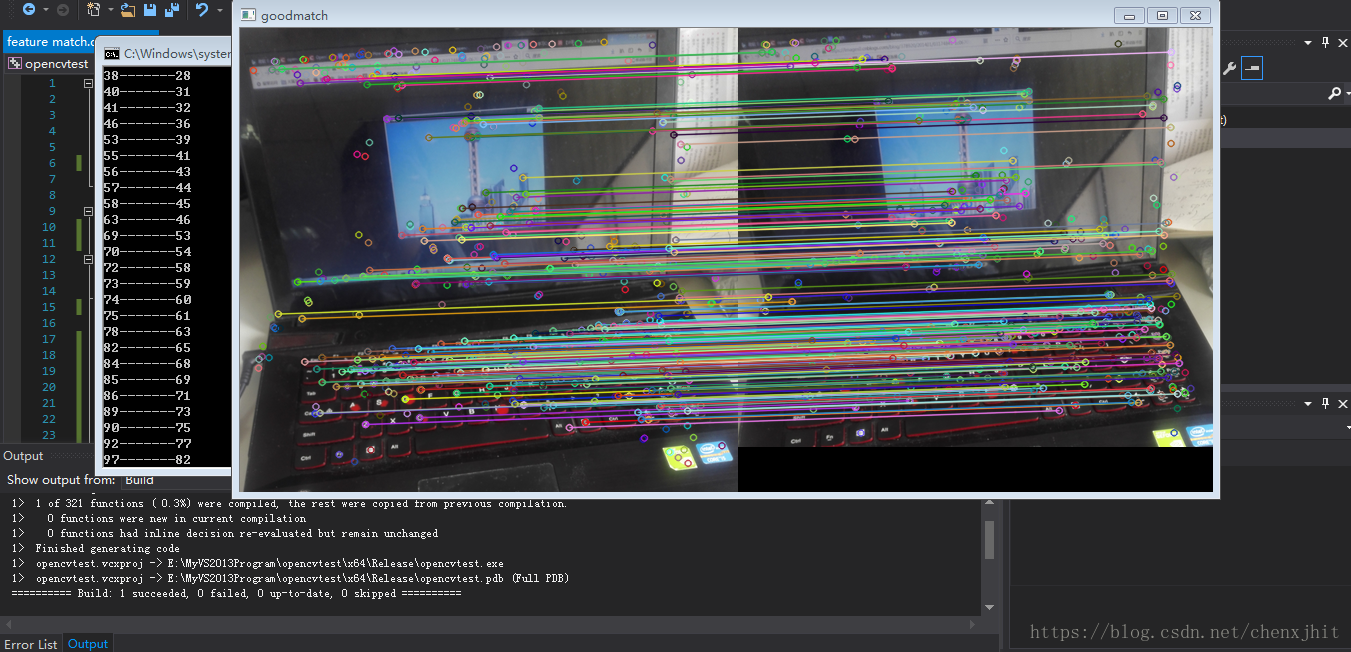

实际运行的效果:

To follow the code, it helps to understand OpenCV's DMatch data structure and the data type of detector:

//////////////////////////////// DMatch /////////////////////////////////

/** @brief Class for matching keypoint descriptors

query descriptor index, train descriptor index, train image index, and distance between
descriptors.
*/
class CV_EXPORTS_W_SIMPLE DMatch
{
public:
    CV_WRAP DMatch();
    CV_WRAP DMatch(int _queryIdx, int _trainIdx, float _distance);
    CV_WRAP DMatch(int _queryIdx, int _trainIdx, int _imgIdx, float _distance);

    CV_PROP_RW int queryIdx; // query descriptor index
    CV_PROP_RW int trainIdx; // train descriptor index
    CV_PROP_RW int imgIdx;   // train image index

    CV_PROP_RW float distance;

    // less is better
    bool operator<(const DMatch &m) const;
};

template<> class DataType<DMatch>
{
public:
    typedef DMatch value_type;
    typedef int work_type;
    typedef int channel_type;

    enum { generic_type = 0,
           depth = DataType<channel_type>::depth,
           channels = (int)(sizeof(value_type)/sizeof(channel_type)), // 4
           fmt = DataType<channel_type>::fmt + ((channels - 1) << 8),
           type = CV_MAKETYPE(depth, channels)
         };

    typedef Vec<channel_type, channels> vec_type;
};

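In OpenCV 3.3, DMatch is defined as a class. The first three members are constructors, followed by four fields: queryIdx, trainIdx, imgIdx, and distance. They are, respectively, the index of the feature descriptor in the query image, the index of the feature descriptor in the train (template) image, the index of the train image when there are several of them, and the distance between the matched pair of descriptors.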