Preface

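The previous article covered how the anchor boxes are generated, which is only a small part of the RPN. This article goes through the RPN in detail.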

Training process

The authors train the network in four steps:

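Step 1: initialize from an ImageNet-pretrained model and train an RPN on its own.

Step 2: again initialize from the ImageNet model, and train a Fast R-CNN network that takes the proposals produced by the step-1 RPN as input. At this point the two networks share no layers at all.

Step 3: initialize a new RPN from the Fast R-CNN weights of step 2. Set the learning rate of the convolutional layers shared by the RPN and Fast R-CNN to 0 so that they are not updated, and retrain only the RPN-specific layers. The two networks now share all common convolutional layers.

Step 4: keep the shared layers fixed and add the Fast R-CNN-specific layers to form a unified network. Continue training to fine-tune the Fast R-CNN-specific layers. The network now does what we set out to achieve: it predicts proposals internally and performs detection on them.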

A rough picture of this training procedure is enough for now, so our first task is to reproduce the RPN code.

How the RPN works

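The RPN does two things. For each anchor box, it decides whether the region is foreground (an object) or background. It also regresses a correction to the box coordinates.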

RPN Classification

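Classification training needs labels. They are produced by comparing the overlap between the anchors and the ground-truth boxes, which decides which anchors are foreground and which are background. In other words, every anchor gets a foreground or background label, and only then can training proceed. An image yields roughly 20,000 anchors, far more than training needs, so most of them are discarded according to these rules: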

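1. Anchors that cross the image boundary do not take part in training.

2. Anchors in the border zone between foreground and background do not take part in training. They are labeled neither foreground nor background, to avoid wrong labels. In the original paper, an anchor is labeled foreground if its IoU with a ground-truth box is above 0.7, and background if its IoU is below 0.3. Anchors in between are ignored. (The paper also labels as foreground the anchor with the highest IoU for each ground-truth box, so every object gets at least one positive anchor.) IoU is the area of overlap between the anchor and the ground truth divided by the area of their union; see Figure 4.

3. A training batch contains 256 anchors, all taken from the same image, and at most half of them may be foreground. If there are more than 128 foreground anchors, 128 are sampled at random. Background anchors are sampled the same way to fill the rest of the batch.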
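The three rules can be sketched in numpy as below. This is a simplified, hypothetical stand-in for the `anchor_target()` used later, whose real code is not shown in this post. It makes three assumptions. First, boxes are (x1, y1, x2, y2) with the inclusive "+1" pixel convention used by `_filter_proposal`. Second, ties for a ground-truth box's best anchor are ignored. Third, the names `box_iou` and `label_anchors` are made up for illustration.

```python
import numpy as np


def box_iou(boxes_a, boxes_b):
    """IoU matrix (N, M) for (x1, y1, x2, y2) boxes, inclusive pixel coords (+1)."""
    area_a = (boxes_a[:, 2] - boxes_a[:, 0] + 1) * (boxes_a[:, 3] - boxes_a[:, 1] + 1)
    area_b = (boxes_b[:, 2] - boxes_b[:, 0] + 1) * (boxes_b[:, 3] - boxes_b[:, 1] + 1)
    iw = (np.minimum(boxes_a[:, None, 2], boxes_b[None, :, 2])
          - np.maximum(boxes_a[:, None, 0], boxes_b[None, :, 0]) + 1)
    ih = (np.minimum(boxes_a[:, None, 3], boxes_b[None, :, 3])
          - np.maximum(boxes_a[:, None, 1], boxes_b[None, :, 1]) + 1)
    inter = np.clip(iw, 0, None) * np.clip(ih, 0, None)
    # IoU = overlap / union
    return inter / (area_a[:, None] + area_b[None, :] - inter)


def label_anchors(anchors, gt_boxes, im_h, im_w, fg_thresh=0.7, bg_thresh=0.3,
                  batch_size=256, fg_fraction=0.5, rng=None):
    """Hypothetical helper: label per anchor, 1 = foreground, 0 = background, -1 = ignored."""
    rng = np.random.default_rng() if rng is None else rng
    labels = np.full(len(anchors), -1, dtype=np.int64)

    # Rule 1: only anchors lying fully inside the image are considered
    inside = np.where((anchors[:, 0] >= 0) & (anchors[:, 1] >= 0) &
                      (anchors[:, 2] < im_w) & (anchors[:, 3] < im_h))[0]
    iou = box_iou(anchors[inside], gt_boxes)  # (len(inside), M)
    max_iou = iou.max(axis=1)

    # Rule 2: IoU < 0.3 -> background, IoU > 0.7 -> foreground, in between stays -1
    labels[inside[max_iou < bg_thresh]] = 0
    # the best anchor of each gt box is also foreground (simplified: one per gt box)
    labels[inside[iou.argmax(axis=0)]] = 1
    labels[inside[max_iou > fg_thresh]] = 1

    # Rule 3: at most fg_fraction * batch_size foreground, background fills the rest
    num_fg = int(fg_fraction * batch_size)
    fg_inds = np.where(labels == 1)[0]
    if len(fg_inds) > num_fg:
        labels[rng.choice(fg_inds, len(fg_inds) - num_fg, replace=False)] = -1
    num_bg = batch_size - int(np.sum(labels == 1))
    bg_inds = np.where(labels == 0)[0]
    if len(bg_inds) > num_bg:
        labels[rng.choice(bg_inds, len(bg_inds) - num_bg, replace=False)] = -1
    return labels


gt = np.array([[10, 10, 49, 49]], dtype=np.float64)
anchors = np.array([[10, 10, 49, 49],      # identical to gt        -> 1
                    [100, 100, 139, 139],  # no overlap             -> 0
                    [10, 10, 69, 49],      # IoU = 2/3, in between  -> -1
                    [-5, 10, 34, 49]],     # crosses image border   -> -1
                   dtype=np.float64)
labels = label_anchors(anchors, gt, im_h=200, im_w=200)  # -> 1, 0, -1, -1
```

Ignored anchors keep the label -1. This is the value the RPN code below filters out with `rpn_label_targets != -1` before computing the classification loss.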

RPN bounding box regression

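The anchors almost always deviate from the ground truth. A regression is therefore trained to adjust each anchor so that the result is closer to the ground truth, as shown in the figure below.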

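A box is generally represented by a four-dimensional vector (x, y, w, h): its center coordinates, width and height. Let the anchor be A = (Ax, Ay, Aw, Ah) and the ground-truth box G = (Gx, Gy, Gw, Gh). The regression predicts offsets dx(A), dy(A), dw(A), dh(A) that turn the anchor into a box G' close to G: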

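$$
\begin{aligned}
G'_x &= A_x + A_w \cdot d_x(A) \\
G'_y &= A_y + A_h \cdot d_y(A) \\
G'_w &= A_w \cdot \exp\big(d_w(A)\big) \\
G'_h &= A_h \cdot \exp\big(d_h(A)\big)
\end{aligned}
$$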
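The following is a minimal numpy sketch of this parameterization. It assumes boxes in center form (x, y, w, h), exactly as in the equations. `decode` applies d(A) to an anchor. `encode` is its inverse and computes the training targets t* = ((Gx − Ax)/Aw, (Gy − Ay)/Ah, log(Gw/Aw), log(Gh/Ah)). Both names are hypothetical. `bbox_transform_inv` in the code further down presumably plays the role of `decode`, but it works on corner-format (x1, y1, x2, y2) boxes.

```python
import numpy as np


def encode(anchors, gt):
    """Regression targets t* so that decode(anchors, t*) == gt; boxes are (x, y, w, h)."""
    tx = (gt[:, 0] - anchors[:, 0]) / anchors[:, 2]
    ty = (gt[:, 1] - anchors[:, 1]) / anchors[:, 3]
    tw = np.log(gt[:, 2] / anchors[:, 2])
    th = np.log(gt[:, 3] / anchors[:, 3])
    return np.stack([tx, ty, tw, th], axis=1)


def decode(anchors, deltas):
    """Apply offsets d(A) to anchors, following the four equations above."""
    x = anchors[:, 0] + anchors[:, 2] * deltas[:, 0]  # linear shift of the center
    y = anchors[:, 1] + anchors[:, 3] * deltas[:, 1]
    w = anchors[:, 2] * np.exp(deltas[:, 2])          # exp keeps w, h positive
    h = anchors[:, 3] * np.exp(deltas[:, 3])
    return np.stack([x, y, w, h], axis=1)


A = np.array([[50.0, 50.0, 32.0, 32.0]])
G = np.array([[58.0, 46.0, 64.0, 16.0]])
t = encode(A, G)  # 0.25, -0.125, log(2), log(0.5)
```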

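The center is shifted linearly, in units of the anchor's width and height, while the width and height are scaled through exp, which keeps them positive. This parameterization is inherited from R-CNN. The RPN combines the classification and regression losses into a single loss: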

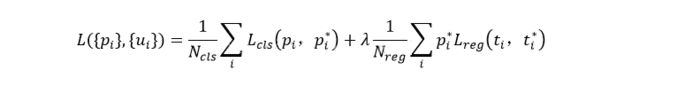
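In text form, the loss shown above is the multi-task loss from the Faster R-CNN paper, with Lreg the smooth L1 function applied to each of the four offsets:

$$
L(\{p_i\}, \{t_i\}) = \frac{1}{N_{cls}} \sum_i L_{cls}(p_i, p_i^*)
  + \lambda \frac{1}{N_{reg}} \sum_i p_i^* \, L_{reg}(t_i, t_i^*),
\qquad
L_{reg}(t_i, t_i^*) = \sum_{j \in \{x, y, w, h\}} \operatorname{smooth}_{L_1}\big(t_{i,j} - t_{i,j}^*\big),
$$

$$
\operatorname{smooth}_{L_1}(x) =
\begin{cases}
0.5\,x^2 & \text{if } |x| < 1, \\
|x| - 0.5 & \text{otherwise.}
\end{cases}
$$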

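Here Ncls = 256 is the mini-batch size. Lcls(pi, pi*) is the log loss over two classes (foreground vs. background), where pi is the predicted probability that anchor i is an object and pi* is its label: 1 for foreground, 0 for background. Averaging over the batch gives the RPN classification loss. Nreg is the normalizer of the regression term; the paper uses the number of anchor locations, about 2,400. λ balances the two losses and is set to 10 in the paper. ti is the offset vector predicted for anchor i, and ti* is the offset between the anchor and its ground-truth box. The smooth L1 loss between ti and ti*, counted only for foreground anchors (the pi* factor), is the RPN bounding box regression loss.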
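The following is a rough numpy illustration of this loss. It is not the `smooth_L1` helper the code imports, whose inside/outside weighting is not shown in this post. Labels use 1/0/-1 for foreground/background/ignored. The classification term averages over the sampled anchors, and the regression term counts only foreground anchors and divides by `n_reg`. The defaults `lam=10` and `n_reg=2400` are the paper's values, and the function names are illustrative.

```python
import numpy as np


def smooth_l1(x):
    """Elementwise smooth L1: 0.5 * x^2 if |x| < 1, else |x| - 0.5."""
    ax = np.abs(x)
    return np.where(ax < 1, 0.5 * x ** 2, ax - 0.5)


def rpn_loss(cls_logits, labels, pred_deltas, target_deltas, lam=10.0, n_reg=2400):
    """
    cls_logits:    (N, 2) background/foreground scores
    labels:        (N,) 1 = fg, 0 = bg, -1 = ignored
    pred_deltas:   (N, 4) predicted t_i
    target_deltas: (N, 4) targets t_i*
    """
    keep = labels >= 0
    logits = cls_logits[keep]
    y = labels[keep]
    # numerically stable log-softmax, then the mean log loss over sampled anchors
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    cls_loss = -log_prob[np.arange(len(y)), y].mean()
    # p_i* factor: only foreground anchors contribute to the regression loss
    p_star = (labels == 1).astype(np.float64)
    reg_loss = (p_star[:, None] * smooth_l1(pred_deltas - target_deltas)).sum() / n_reg
    return cls_loss + lam * reg_loss, cls_loss, reg_loss


total, cls_l, reg_l = rpn_loss(np.zeros((3, 2)), np.array([1, 0, -1]),
                               np.zeros((3, 4)), np.zeros((3, 4)))
```

With all-zero logits, each sampled anchor is predicted at 0.5, so the classification loss is log 2. With perfect offsets, the regression loss is 0.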

RPN code

The RPN code covers the training part as well as proposal(), whose rois are passed directly to the Faster R-CNN head. First, an outline of the steps the RPN carries out:

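1. Apply the 3×3 rpn_conv convolution to the input feature map.

2. Apply the rpn_cls convolution to get the classification scores.

3. In parallel, apply the rpn_reg convolution to get the regression offsets.

4. Call proposal() to get the proposals (rois).

5. During training, call anchor_target() to produce the ground-truth classification labels and regression targets for the RPN, which are needed for the RPN loss.

6. During training, compute the classification loss.

7. During training, compute the regression loss.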

```python
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F

from .anchor_target import anchor_target
from .proposal import proposal
import config as cfg
from ..utils.smooth_L1 import smooth_L1


class RPN(nn.Module):

    def __init__(self):
        super(RPN, self).__init__()
        # 3 scales x 3 aspect ratios = 9 anchors per feature-map cell
        self.anchor_scales = np.array([8, 16, 32])
        self.anchor_ratios = [0.5, 1, 2]
        self.num_anchors = len(self.anchor_scales) * len(self.anchor_ratios)
        # shared 3x3 conv on the 1024-channel backbone feature map
        self.rpn_conv = nn.Conv2d(1024, 512, 3, 1, 1)
        # 1x1 conv: 2 scores (background, foreground) per anchor
        self.rpn_cls = nn.Conv2d(512, self.num_anchors * 2, 1, 1, 0)
        # 1x1 conv: 4 box offsets per anchor
        self.rpn_reg = nn.Conv2d(512, self.num_anchors * 4, 1, 1, 0)

    def forward(self, feature, gt_boxes, im_info):
        batch_size, _, height, width = feature.size()
        rpn_features = F.relu(self.rpn_conv(feature), inplace=True)

        # classification and regression
        rpn_cls_score = self.rpn_cls(rpn_features)
        rpn_reg = self.rpn_reg(rpn_features)

        # (B, 2A, H, W) -> (B, 2, A, H, W): softmax pairs channel a (bg) with channel A + a (fg)
        rpn_cls_score_reshape = rpn_cls_score.view(batch_size, 2, self.num_anchors, height, width)
        rpn_cls_prob_reshape = F.softmax(rpn_cls_score_reshape, dim=1)
        rpn_cls_prob = rpn_cls_prob_reshape.view(batch_size, 2 * self.num_anchors, height, width)

        # calculate proposals (.data: no gradient flows into proposal())
        rois = proposal(rpn_cls_prob.data, rpn_reg.data, im_info, self.training)

        rpn_cls_loss = 0
        rpn_reg_loss = 0
        _rpn_train_info = {}

        if self.training:
            # calculate anchor targets; labels: 1 = fg, 0 = bg, -1 = ignored
            rpn_label_targets, rpn_bbox_targets, rpn_bbox_inside_weights, rpn_bbox_outside_weights = \
                anchor_target(rpn_cls_prob.data, gt_boxes, im_info)

            keep_inds = torch.nonzero(rpn_label_targets != -1).view(-1)
            rpn_label_targets_keep = rpn_label_targets[keep_inds].long()
            # (B, 2, A, H, W) -> (B*H*W*A, 2), the (H, W, A) anchor order used in proposal()
            rpn_cls_score = rpn_cls_score_reshape.permute(0, 3, 4, 2, 1).contiguous().view(-1, 2)

            # cross entropy loss over the non-ignored anchors
            rpn_cls_loss = F.cross_entropy(rpn_cls_score[keep_inds, :], rpn_label_targets_keep)

            # smooth L1 loss, weighted by the inside/outside weights from anchor_target
            rpn_reg_loss = smooth_L1(rpn_reg, rpn_bbox_targets, rpn_bbox_inside_weights,
                                     rpn_bbox_outside_weights, dim=[1, 2, 3])

            if cfg.VERBOSE:
                # statistics: fg/bg counts and correct predictions at a 0.4 fg threshold
                _rpn_fg_inds = torch.nonzero(rpn_label_targets == 1).view(-1)
                _rpn_bg_inds = torch.nonzero(rpn_label_targets == 0).view(-1)
                _rpn_num_fg = _rpn_fg_inds.size(0)
                _rpn_num_bg = _rpn_bg_inds.size(0)
                _rpn_pred_data = rpn_cls_prob_reshape.permute(0, 3, 4, 2, 1).contiguous().view(-1, 2)[:, 1]
                _rpn_pred_info = (_rpn_pred_data >= 0.4).long()
                _rpn_tp = torch.sum(rpn_label_targets[_rpn_fg_inds].long() == _rpn_pred_info[_rpn_fg_inds])
                _rpn_tn = torch.sum(rpn_label_targets[_rpn_bg_inds].long() == _rpn_pred_info[_rpn_bg_inds])
                _rpn_train_info['rpn_num_fg'] = _rpn_num_fg
                _rpn_train_info['rpn_num_bg'] = _rpn_num_bg
                _rpn_train_info['rpn_tp'] = _rpn_tp.item()
                _rpn_train_info['rpn_tn'] = _rpn_tn.item()

        return rois, rpn_cls_loss, rpn_reg_loss, _rpn_train_info
```

That is the whole RPN module. I will fill in the helper functions later. The flow matches the figure below.

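Each branch starts with a convolution: the shared 3×3 rpn_conv runs first, and then classification and regression each have their own 1×1 convolution. When the network is not training (self.training is False), only this part runs:

```python
rois = proposal(rpn_cls_prob.data, rpn_reg.data, im_info, self.training)
rpn_cls_loss = 0
rpn_reg_loss = 0
_rpn_train_info = {}
```

In that case only rois carries useful information: the losses stay 0 and the info dict stays empty. During training, all four return values (rois, rpn_cls_loss, rpn_reg_loss and _rpn_train_info) are filled in and used for training. The info dict is filled only when cfg.VERBOSE is set.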
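The following numpy sketch makes the channel layout in forward() concrete, with random numbers standing in for `rpn_cls_score`. It assumes, as the code does, that the 2A channels are ordered as A background scores followed by A foreground scores. Under that layout, the foreground probabilities are exactly `rpn_cls_prob[:, num_anchors:]`, which is what proposal() reads. The permute to (B, H, W, A, 2) used for the loss and the VERBOSE statistics also yields the same anchors in the same order.

```python
import numpy as np

B, A, H, W = 1, 3, 2, 2
rng = np.random.default_rng(0)
rpn_cls_score = rng.normal(size=(B, 2 * A, H, W))  # random stand-in for the conv output

# view as (B, 2, A, H, W) and softmax over the bg/fg axis, as in forward()
s = rpn_cls_score.reshape(B, 2, A, H, W)
e = np.exp(s - s.max(axis=1, keepdims=True))
rpn_cls_prob = (e / e.sum(axis=1, keepdims=True)).reshape(B, 2 * A, H, W)

# what proposal() reads: the last A channels, flattened in (H, W, A) order
fg_flat = rpn_cls_prob[:, A:, :, :].transpose(0, 2, 3, 1).reshape(-1)

# what the loss path uses: (B, 2, A, H, W) -> (B, H, W, A, 2) -> (B*H*W*A, 2)
pairs = rpn_cls_prob.reshape(B, 2, A, H, W).transpose(0, 3, 4, 2, 1).reshape(-1, 2)

print(np.allclose(pairs[:, 1], fg_flat))  # True: same anchors, same order
```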

The proposal() function

```python
rois = proposal(rpn_cls_prob.data, rpn_reg.data, im_info, self.training)
```

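The RPN's job is to produce rois: it separates object regions from background and gives the boxes' positions in the image. proposal() first uses the RPN's classification and regression outputs to adjust the anchors. It then clips the boxes to the image, drops boxes smaller than min_size, and keeps the pre_nms_top_n highest-scoring ones. Finally, it applies NMS and returns at most post_nms_top_n boxes as rois: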
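The post-processing in proposal() can be sketched in plain numpy as follows. The greedy `nms()` here is a textbook reimplementation standing in for the repository's `nms` wrapper, which is not shown. Clipping to [0, width − 1] × [0, height − 1] is an assumption about what `clip_boxes` does. The helper name `select_proposals` and all parameter values in the usage example are illustrative.

```python
import numpy as np


def nms(boxes, scores, thresh):
    """Greedy NMS on (x1, y1, x2, y2) boxes; returns kept indices, highest score first."""
    x1, y1, x2, y2 = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = scores.argsort()[::-1]
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)
        rest = order[1:]
        # overlap of the current best box with all remaining boxes
        iw = np.maximum(0.0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]) + 1)
        ih = np.maximum(0.0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]) + 1)
        inter = iw * ih
        iou = inter / (areas[i] + areas[rest] - inter)
        # drop every remaining box that overlaps the kept one too much
        order = rest[iou <= thresh]
    return np.array(keep, dtype=np.int64)


def select_proposals(boxes, scores, im_h, im_w, min_size,
                     pre_nms_top_n, post_nms_top_n, nms_thresh):
    """boxes: (N, 4) decoded proposals; scores: (N,) foreground probabilities."""
    # clip to the image (assumed behaviour of clip_boxes)
    boxes = boxes.copy()
    boxes[:, 0::2] = np.clip(boxes[:, 0::2], 0, im_w - 1)
    boxes[:, 1::2] = np.clip(boxes[:, 1::2], 0, im_h - 1)
    # drop boxes smaller than min_size, like _filter_proposal
    w = boxes[:, 2] - boxes[:, 0] + 1
    h = boxes[:, 3] - boxes[:, 1] + 1
    keep = (w >= min_size) & (h >= min_size)
    boxes, scores = boxes[keep], scores[keep]
    # keep the pre_nms_top_n highest scores
    order = np.argsort(-scores)[:pre_nms_top_n]
    boxes, scores = boxes[order], scores[order]
    # NMS, then keep at most post_nms_top_n boxes
    keep = nms(boxes, scores, nms_thresh)[:post_nms_top_n]
    boxes = boxes[keep]
    # rois are (batch_index, x1, y1, x2, y2); batch index 0 for a single image
    rois = np.zeros((len(boxes), 5), dtype=boxes.dtype)
    rois[:, 1:] = boxes
    return rois


boxes = np.array([[10, 10, 50, 50], [12, 12, 52, 52],
                  [100, 100, 140, 140], [0, 0, 5, 5]], dtype=np.float64)
scores = np.array([0.9, 0.8, 0.7, 0.95])
rois = select_proposals(boxes, scores, im_h=200, im_w=200, min_size=16,
                        pre_nms_top_n=6000, post_nms_top_n=300, nms_thresh=0.7)
```

In this example, the highest-scoring box is only 6×6, so it is removed by the size filter before sorting. The second box overlaps the first with IoU ≈ 0.83 and is suppressed, leaving two rois.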

```python
import torch
import numpy as np

import config as cfg
from .generate_anchors import generate_anchors
from ..utils.bbox_operations import bbox_transform_inv, clip_boxes
from ..nms.nms_wrapper import nms


def proposal(rpn_cls_prob, rpn_reg, im_info, train_mode):
    batch_size, _, height, width = rpn_cls_prob.size()
    assert batch_size == 1, 'only support single batch'
    im_info = im_info[0]

    # training and testing use different proposal budgets
    if train_mode:
        pre_nms_top_n = cfg.TRAIN.RPN_PRE_NMS_TOP_N
        post_nms_top_n = cfg.TRAIN.RPN_POST_NMS_TOP_N
        nms_thresh = cfg.TRAIN.RPN_NMS_THRESH
        min_size = cfg.TRAIN.RPN_MIN_SIZE
    else:
        pre_nms_top_n = cfg.TEST.RPN_PRE_NMS_TOP_N
        post_nms_top_n = cfg.TEST.RPN_POST_NMS_TOP_N
        nms_thresh = cfg.TEST.RPN_NMS_THRESH
        min_size = cfg.TEST.RPN_MIN_SIZE

    anchor_scales = cfg.RPN_ANCHOR_SCALES
    anchor_ratios = cfg.RPN_ANCHOR_RATIOS
    feat_stride = cfg.FEAT_STRIDE

    # generate the A base anchors of one feature-map cell
    _anchors = generate_anchors(base_size=feat_stride, scales=anchor_scales, ratios=anchor_ratios)
    num_anchors = _anchors.shape[0]
    A = num_anchors
    K = int(height * width)

    # shift the base anchors to every cell: (1, A, 4) + (K, 1, 4) -> (K * A, 4)
    shift_x = np.arange(0, width) * feat_stride
    shift_y = np.arange(0, height) * feat_stride
    shifts_x, shifts_y = np.meshgrid(shift_x, shift_y)
    shifts = np.vstack((shifts_x.ravel(), shifts_y.ravel(),
                        shifts_x.ravel(), shifts_y.ravel())).transpose()
    all_anchors = _anchors.reshape(1, A, 4) + shifts.reshape(K, 1, 4)
    all_anchors = all_anchors.reshape(-1, 4)
    num_all_anchors = all_anchors.shape[0]
    assert num_all_anchors == K * A
    all_anchors = torch.from_numpy(all_anchors).type_as(rpn_cls_prob)

    # (1, 4A, H, W) -> (H * W * A, 4), the same (H, W, A) order as all_anchors
    rpn_reg = rpn_reg.permute(0, 2, 3, 1).contiguous().view(-1, 4)

    # generate all rois: apply the offsets to the anchors, then clip to the image
    proposals = bbox_transform_inv(all_anchors, rpn_reg)
    proposals = clip_boxes(proposals, im_info)

    # filter out proposals smaller than min_size (boolean mask)
    keep_inds = _filter_proposal(proposals, min_size)
    proposals_keep = proposals[keep_inds, :]

    # foreground probabilities are the last A channels
    proposals_prob = rpn_cls_prob[:, num_anchors:, :, :]
    proposals_prob = proposals_prob.permute(0, 2, 3, 1).contiguous().view(-1)
    proposals_prob_keep = proposals_prob[keep_inds]

    # sort by foreground probability and keep the top pre_nms_top_n
    order = torch.sort(proposals_prob_keep, descending=True)[1]
    top_keep = order[:pre_nms_top_n]
    proposals_keep = proposals_keep[top_keep, :]
    proposals_prob_keep = proposals_prob_keep[top_keep]

    # nms on (x1, y1, x2, y2, score), then keep the top post_nms_top_n
    keep = nms(torch.cat([proposals_keep, proposals_prob_keep.view(-1, 1)], dim=1),
               nms_thresh, force_cpu=not cfg.USE_GPU_NMS)
    keep = keep.long().view(-1)
    top_keep = keep[:post_nms_top_n]
    proposals_keep = proposals_keep[top_keep, :]
    proposals_prob_keep = proposals_prob_keep[top_keep]

    # rois are (batch_index, x1, y1, x2, y2); batch index is 0 for the single image
    rois = proposals_keep.new_zeros((proposals_keep.size(0), 5))
    rois[:, 1:] = proposals_keep
    return rois


def _filter_proposal(proposals, min_size):
    # mask of boxes whose width and height are both at least min_size
    width = proposals[:, 2] - proposals[:, 0] + 1
    height = proposals[:, 3] - proposals[:, 1] + 1
    keep_inds = ((width >= min_size) & (height >= min_size))
    return keep_inds
```
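The anchor-tiling step in proposal() is easy to get subtly wrong. The following numpy sketch reproduces its meshgrid/broadcast step using two made-up base anchors, not the ones `generate_anchors` returns. The helper name `tile_anchors` is hypothetical. The sketch checks that row (y·W + x)·A + a of the tiled anchors lines up with the four regression outputs for anchor a at cell (y, x) after `permute(0, 2, 3, 1)` and `view(-1, 4)`.

```python
import numpy as np


def tile_anchors(base_anchors, height, width, feat_stride):
    """Shift A base anchors (x1, y1, x2, y2) to every cell of an H x W feature map."""
    A = base_anchors.shape[0]
    shift_x = np.arange(width) * feat_stride
    shift_y = np.arange(height) * feat_stride
    sx, sy = np.meshgrid(shift_x, shift_y)  # both (H, W); the row index is y
    shifts = np.stack([sx.ravel(), sy.ravel(), sx.ravel(), sy.ravel()], axis=1)  # (K, 4)
    K = shifts.shape[0]
    # (1, A, 4) + (K, 1, 4) broadcasts to (K, A, 4)
    return (base_anchors.reshape(1, A, 4) + shifts.reshape(K, 1, 4)).reshape(-1, 4)


# two hypothetical base anchors, for illustration only
base = np.array([[-8, -8, 23, 23], [-16, -16, 31, 31]], dtype=np.float64)
H, W, A, stride = 2, 3, 2, 16
anchors = tile_anchors(base, H, W, stride)

# fake regression output shaped like rpn_reg: (1, 4A, H, W)
reg = np.arange(4 * A * H * W, dtype=np.float64).reshape(1, 4 * A, H, W)
reg_flat = reg.transpose(0, 2, 3, 1).reshape(-1, 4)  # numpy analogue of permute + view

y, x, a = 1, 2, 1
row = (y * W + x) * A + a
print(anchors[row])  # base[1] shifted by (x=32, y=16) -> 16, 0, 63, 47
print(np.array_equal(reg_flat[row], reg[0, 4 * a:4 * a + 4, y, x]))  # True
```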