1. Monocular Calibration

Homography matrix

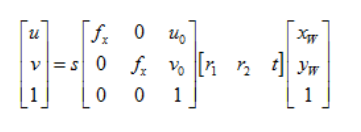

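Let the homogeneous coordinates of a 3D point be $\tilde{M} = [X, Y, Z, 1]^T$ and those of the corresponding image point be $\tilde{m} = [u, v, 1]^T$.

They satisfy the following relation:

$$s\,\tilde{m} = K\,[R \mid T]\,\tilde{M}, \qquad K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$

Here $s$ is a scale factor and $K$ is the intrinsic matrix. The rotation $R$ and translation $T$ are together called the extrinsic parameters.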

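Suppose we take $K$ images of the chessboard, each with $N$ corners. In this paragraph $K$ is the number of images, not the intrinsic matrix. Each corner gives two equations (one for $u$, one for $v$), so we have $2KN$ constraint equations.

Ignoring distortion, the unknowns are the 4 intrinsic parameters and $6K$ extrinsic parameters. The intrinsics depend only on the camera itself, while the extrinsics change with the pose of the board in each image. The parameters can therefore only be solved when $2KN \ge 4 + 6K$, i.e. $K(N - 3) \ge 2$.

However, no matter how many corners are detected on one board, they all lie on a single plane. Their images are therefore related to the board by one homography with 8 degrees of freedom, so a single view provides at most 8 independent equations: as many as 4 points. Setting $N = 4$ gives $K(4 - 3) \ge 2$, i.e. $K \ge 2$. At least two images of the board in different orientations are needed to solve for the intrinsic and extrinsic parameters in the distortion-free case.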
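The counting argument can be checked with a few lines of C++. This is a sketch under the assumptions of the paragraph above: `minViews` is a hypothetical helper, and the cap of 4 usable corners per view encodes the homography argument. Passing 5 intrinsic parameters (adding a skew term, which this article does not use) shows that three views would then be needed.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: smallest number of views K with 2*K*N >= p + 6*K, where
// N is capped at 4 because a planar board only yields 8 independent equations
// per view, and p is the number of intrinsic parameters shared by all views.
// Returns -1 if no number of views is enough.
int minViews(int intrinsicParams, int cornersPerView) {
    assert(intrinsicParams > 0 && cornersPerView > 0);
    const int usable = std::min(cornersPerView, 4);
    if (usable <= 3) return -1;  // 2N <= 6: the extrinsics absorb every equation
    for (int k = 1;; ++k) {
        if (2 * k * usable >= intrinsicParams + 6 * k) return k;
    }
}
```

For example, `minViews(4, 48)` gives 2 for a board with 48 detected corners, matching $K \ge 2$ in the text.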

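We therefore define the homography used for camera calibration, which maps the board plane to the image plane. Place the board on the world plane $Z = 0$ and write $R = [r_1\ r_2\ r_3]$. The projection then becomes:

$$s\begin{bmatrix}u\\v\\1\end{bmatrix} = K\,[r_1\ r_2\ r_3\ T]\begin{bmatrix}X\\Y\\0\\1\end{bmatrix} = K\,[r_1\ r_2\ T]\begin{bmatrix}X\\Y\\1\end{bmatrix}$$

The board plane is therefore mapped to the image by $H = \lambda K\,[r_1\ r_2\ T]$, where $\lambda$ is an arbitrary scale factor. Writing H column by column as $H = [h_1\ h_2\ h_3]$ and splitting the equation gives:

$$r_1 = \frac{1}{\lambda}K^{-1}h_1, \qquad r_2 = \frac{1}{\lambda}K^{-1}h_2$$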

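The columns of a rotation matrix are orthonormal by construction, so $r_1$ and $r_2$ are orthogonal unit vectors. These two properties give two constraints for every homography, that is, for every image of the board in a different orientation.

First, the dot product of the two rotation columns is zero:

$$r_1^T r_2 = 0$$

Substituting $r_1$ and $r_2$ and simplifying (the factor $1/\lambda^2$ cancels) gives:

$$h_1^T K^{-T} K^{-1} h_2 = 0$$

Second, the two rotation columns have the same (unit) length, since a rotation does not change scale:

$$r_1^T r_1 = r_2^T r_2$$

Eliminating $r_1$ and $r_2$ gives:

$$h_1^T K^{-T} K^{-1} h_1 = h_2^T K^{-T} K^{-1} h_2$$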

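Let:

$$B = K^{-T}K^{-1} = \begin{bmatrix} B_{11} & B_{12} & B_{13} \\ B_{12} & B_{22} & B_{23} \\ B_{13} & B_{23} & B_{33} \end{bmatrix}$$

The two constraints then become:

$$h_1^T B h_2 = 0, \qquad h_1^T B h_1 = h_2^T B h_2$$

Every term in the two constraints therefore has the form

$$h_i^T B h_j$$

B is clearly symmetric, so only 6 of its elements are independent: the 3 on the main diagonal plus the 3 on one side of it. Collect them into $b = [B_{11}, B_{12}, B_{22}, B_{13}, B_{23}, B_{33}]^T$ and write $h_i = [h_{i1}, h_{i2}, h_{i3}]^T$ for the $i$-th column of H. The term then expands as:

$$h_i^T B h_j = v_{ij}^T b, \qquad v_{ij} = \begin{bmatrix} h_{i1}h_{j1} \\ h_{i1}h_{j2} + h_{i2}h_{j1} \\ h_{i2}h_{j2} \\ h_{i3}h_{j1} + h_{i1}h_{j3} \\ h_{i3}h_{j2} + h_{i2}h_{j3} \\ h_{i3}h_{j3} \end{bmatrix}$$

The two constraints are therefore equivalent to:

$$\begin{bmatrix} v_{12}^T \\ (v_{11} - v_{22})^T \end{bmatrix} b = 0$$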
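The following sketch uses the standard library only, and all names are hypothetical. It forms $v_{ij}$ and the two rows each view contributes. It builds a synthetic homography $H = sK[r_1\ r_2\ T]$ from an assumed zero-skew $K$ and rotation columns. Both rows should then be orthogonal to the $b$ computed directly from that $K$, because $v_{12}^T b = h_1^T B h_2 \propto r_1^T r_2$.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Vec6 = std::array<double, 6>;
using Mat3 = std::array<Vec3, 3>;  // row-major

// v_ij such that h_i^T B h_j = v_ij^T b, with b = [B11, B12, B22, B13, B23, B33]^T.
Vec6 vij(const Vec3& hi, const Vec3& hj) {
    return {hi[0] * hj[0],
            hi[0] * hj[1] + hi[1] * hj[0],
            hi[1] * hj[1],
            hi[2] * hj[0] + hi[0] * hj[2],
            hi[2] * hj[1] + hi[1] * hj[2],
            hi[2] * hj[2]};
}

Vec3 column(const Mat3& M, int c) { return {M[0][c], M[1][c], M[2][c]}; }

// The two rows one view contributes: v12^T and (v11 - v22)^T.
std::array<Vec6, 2> viewRows(const Mat3& H) {
    const Vec3 h1 = column(H, 0), h2 = column(H, 1);
    const Vec6 v12 = vij(h1, h2), v11 = vij(h1, h1), v22 = vij(h2, h2);
    Vec6 diff{};
    for (int k = 0; k < 6; ++k) diff[k] = v11[k] - v22[k];
    return {{v12, diff}};
}

double dot6(const Vec6& a, const Vec6& b) {
    double s = 0.0;
    for (int k = 0; k < 6; ++k) s += a[k] * b[k];
    return s;
}

// b for a zero-skew K = [[fx,0,cx],[0,fy,cy],[0,0,1]]: unique entries of K^{-T} K^{-1}.
Vec6 bFromIntrinsics(double fx, double fy, double cx, double cy) {
    assert(fx > 0 && fy > 0);
    const double B11 = 1.0 / (fx * fx), B22 = 1.0 / (fy * fy);
    return {B11, 0.0, B22, -cx * B11, -cy * B22, cx * cx * B11 + cy * cy * B22 + 1.0};
}

// Synthetic test homography H = s * K [r1 r2 T] for a zero-skew K.
Mat3 makeH(double s, double fx, double fy, double cx, double cy,
           const Vec3& r1, const Vec3& r2, const Vec3& T) {
    const Vec3 cols[3] = {r1, r2, T};
    Mat3 H{};
    for (int c = 0; c < 3; ++c) {
        H[0][c] = s * (fx * cols[c][0] + cx * cols[c][2]);
        H[1][c] = s * (fy * cols[c][1] + cy * cols[c][2]);
        H[2][c] = s * cols[c][2];
    }
    return H;
}
```

In a real calibration the rows of all views are stacked into one matrix, and $b$ is estimated from that matrix instead of being computed from a known $K$.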

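From the counting argument above, b can be solved once the number of board images satisfies $K \ge 2$. Stacking the two equations of every image gives a homogeneous system $Vb = 0$, where V has size $2K \times 6$. With zero skew, the extra equation $B_{12} = 0$ is appended. b is then determined up to scale, for example as the right singular vector of V with the smallest singular value. The elements of the intrinsic matrix follow in closed form from the correspondence between B and b (the explicit formulas are not derived here).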
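The closed-form step can be sketched using the expressions from Zhang's calibration paper for a general $K$ with skew $\gamma$. `intrinsicsFromB` and `bFromIntrinsics` are hypothetical helper names. To keep the sketch self-contained, b is built directly from a known zero-skew $K$ and multiplied by an arbitrary negative factor (an SVD fixes b only up to scale and sign), instead of being estimated from images.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec6 = std::array<double, 6>;

struct Intrinsics { double fx, fy, skew, cx, cy; };

// Closed-form K from b = [B11, B12, B22, B13, B23, B33]^T (Zhang's expressions).
// Every output is invariant to the overall scale (and sign) of b.
Intrinsics intrinsicsFromB(const Vec6& b) {
    const double B11 = b[0], B12 = b[1], B22 = b[2], B13 = b[3], B23 = b[4], B33 = b[5];
    const double den = B11 * B22 - B12 * B12;
    assert(B11 != 0.0 && den != 0.0);
    const double v0 = (B12 * B13 - B11 * B23) / den;
    const double lambda = B33 - (B13 * B13 + v0 * (B12 * B13 - B11 * B23)) / B11;
    const double alpha = std::sqrt(lambda / B11);  // lambda/B11 > 0 for a valid B
    const double beta = std::sqrt(lambda * B11 / den);
    const double gamma = -B12 * alpha * alpha * beta / lambda;
    const double u0 = gamma * v0 / beta - B13 * alpha * alpha / lambda;
    return {alpha, beta, gamma, u0, v0};
}

// b for a zero-skew K = [[fx,0,cx],[0,fy,cy],[0,0,1]] (test data only).
Vec6 bFromIntrinsics(double fx, double fy, double cx, double cy) {
    const double B11 = 1.0 / (fx * fx), B22 = 1.0 / (fy * fy);
    return {B11, 0.0, B22, -cx * B11, -cy * B22, cx * cx * B11 + cy * cy * B22 + 1.0};
}
```

With b scaled by -3.5, the sketch should still return $f_x = 800$, $f_y = 820$, $c_x = 320$, $c_y = 240$ and zero skew, because every expression is a ratio in which the scale cancels.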

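With the intrinsics known, the extrinsics of each image follow from the decomposition of H:

$$r_1 = \frac{1}{\lambda}K^{-1}h_1, \qquad r_2 = \frac{1}{\lambda}K^{-1}h_2, \qquad T = \frac{1}{\lambda}K^{-1}h_3$$

Because the columns of a rotation matrix are orthonormal,

$$\lambda = \|K^{-1}h_1\| = \|K^{-1}h_2\|, \qquad r_3 = r_1 \times r_2$$

This gives $R = [r_1\ r_2\ r_3]$. With noisy data this R is generally not exactly orthonormal, so in practice it is replaced by the closest rotation matrix (for example through an SVD).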
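Here is a sketch of the extrinsic recovery in plain C++, assuming the zero-skew $K$ used in this article. `extrinsicsFromH` and `makeH` are hypothetical names. The sign of $\lambda$ is chosen so that $T_z > 0$ (board in front of the camera), a choice the formulas above leave open. The SVD clean-up of R is omitted.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major

struct Pose { Mat3 R; Vec3 T; };

// K^{-1} h for a zero-skew K = [[fx,0,cx],[0,fy,cy],[0,0,1]].
Vec3 applyKinv(const Vec3& h, double fx, double fy, double cx, double cy) {
    return {(h[0] - cx * h[2]) / fx, (h[1] - cy * h[2]) / fy, h[2]};
}

double norm3(const Vec3& v) { return std::sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]); }

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}

// r1 = K^{-1}h1 / lambda, r2 = K^{-1}h2 / lambda, T = K^{-1}h3 / lambda,
// lambda = +-||K^{-1}h1|| (sign chosen so that T_z > 0), r3 = r1 x r2.
Pose extrinsicsFromH(const Mat3& H, double fx, double fy, double cx, double cy) {
    const Vec3 k1 = applyKinv({H[0][0], H[1][0], H[2][0]}, fx, fy, cx, cy);
    const Vec3 k2 = applyKinv({H[0][1], H[1][1], H[2][1]}, fx, fy, cx, cy);
    const Vec3 k3 = applyKinv({H[0][2], H[1][2], H[2][2]}, fx, fy, cx, cy);
    double lambda = norm3(k1);
    assert(lambda > 0.0);
    if (k3[2] < 0.0) lambda = -lambda;
    Vec3 r1, r2, T;
    for (int i = 0; i < 3; ++i) {
        r1[i] = k1[i] / lambda;
        r2[i] = k2[i] / lambda;
        T[i] = k3[i] / lambda;
    }
    const Vec3 r3 = cross(r1, r2);
    Pose p;
    for (int i = 0; i < 3; ++i) p.R[i] = {r1[i], r2[i], r3[i]};
    p.T = T;
    return p;
}

// Synthetic test homography H = s * K [r1 r2 T].
Mat3 makeH(double s, double fx, double fy, double cx, double cy,
           const Vec3& r1, const Vec3& r2, const Vec3& T) {
    const Vec3 cols[3] = {r1, r2, T};
    Mat3 H{};
    for (int c = 0; c < 3; ++c) {
        H[0][c] = s * (fx * cols[c][0] + cx * cols[c][2]);
        H[1][c] = s * (fy * cols[c][1] + cy * cols[c][2]);
        H[2][c] = s * cols[c][2];
    }
    return H;
}
```

Feeding in an H scaled by a negative factor, for example -2.5, should still return the original R and T, since the sign rule flips $\lambda$ back.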

Code analysis

Main program

```cpp
#include <opencv2/core/core.hpp>
#include <opencv2/calib3d/calib3d.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <stdio.h>
#include <iostream>
#include "popt_pp.h"   // small C++ wrapper around libpopt, used for argument parsing
#include <sys/stat.h>

using namespace std;
using namespace cv;

vector< vector< Point3f > > object_points;  // board coordinates of the corners, one vector per image
vector< vector< Point2f > > image_points;   // detected pixel coordinates, one vector per image
vector< Point2f > corners;
vector< vector< Point2f > > left_img_points;
Mat img, gray;
Size im_size;

// Returns true if the file exists.
bool doesExist (const std::string& name) {
    struct stat buffer;
    return (stat (name.c_str(), &buffer) == 0);
}

void setup_calibration(int board_width, int board_height, int num_imgs,
                       float square_size, char* imgs_directory, char* imgs_filename,
                       char* extension) {
    Size board_size = Size(board_width, board_height);
    int board_n = board_width * board_height;

    for (int k = 1; k <= num_imgs; k++) {
        char img_file[100];
        snprintf(img_file, sizeof(img_file), "%s%s%d.%s",
                 imgs_directory, imgs_filename, k, extension);
        if (!doesExist(img_file))
            continue;

        img = imread(img_file, IMREAD_COLOR);
        cvtColor(img, gray, COLOR_BGR2GRAY);
        im_size = gray.size();

        // Step 1: detect the inner corners, then refine them to sub-pixel accuracy
        bool found = findChessboardCorners(img, board_size, corners,
                                           CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_FILTER_QUADS);
        if (found) {
            cornerSubPix(gray, corners, Size(5, 5), Size(-1, -1),
                         TermCriteria(TermCriteria::EPS | TermCriteria::MAX_ITER, 30, 0.1));
            drawChessboardCorners(gray, board_size, corners, found);
        }

        // Step 2: board coordinates of the same corners (Z = 0), row by row
        vector< Point3f > obj;
        for (int i = 0; i < board_height; i++)
            for (int j = 0; j < board_width; j++)
                obj.push_back(Point3f((float)j * square_size, (float)i * square_size, 0));

        if (found) {
            cout << k << ". Found corners!" << endl;
            image_points.push_back(corners);
            object_points.push_back(obj);
        }
    }
}

// RMS reprojection error over all views, in pixels.
double computeReprojectionErrors(const vector< vector< Point3f > >& objectPoints,
                                 const vector< vector< Point2f > >& imagePoints,
                                 const vector< Mat >& rvecs, const vector< Mat >& tvecs,
                                 const Mat& cameraMatrix , const Mat& distCoeffs) {
    vector< Point2f > imagePoints2;
    int i, totalPoints = 0;
    double totalErr = 0, err;
    vector< float > perViewErrors;
    perViewErrors.resize(objectPoints.size());

    for (i = 0; i < (int)objectPoints.size(); ++i) {
        projectPoints(Mat(objectPoints[i]), rvecs[i], tvecs[i], cameraMatrix,
                      distCoeffs, imagePoints2);
        err = norm(Mat(imagePoints[i]), Mat(imagePoints2), NORM_L2);
        int n = (int)objectPoints[i].size();
        perViewErrors[i] = (float) std::sqrt(err*err/n);
        totalErr += err*err;
        totalPoints += n;
    }
    return std::sqrt(totalErr/totalPoints);
}

int main(int argc, char const **argv)
{
    int board_width, board_height, num_imgs;
    float square_size;
    char* imgs_directory;
    char* imgs_filename;
    char* out_file;
    char* extension;

    static struct poptOption options[] = {
        { "board_width",'w',POPT_ARG_INT,&board_width,0,"Checkerboard width","NUM" },
        { "board_height",'h',POPT_ARG_INT,&board_height,0,"Checkerboard height","NUM" },
        { "num_imgs",'n',POPT_ARG_INT,&num_imgs,0,"Number of checkerboard images","NUM" },
        { "square_size",'s',POPT_ARG_FLOAT,&square_size,0,"Size of checkerboard square","NUM" },
        { "imgs_directory",'d',POPT_ARG_STRING,&imgs_directory,0,"Directory containing images","STR" },
        { "imgs_filename",'i',POPT_ARG_STRING,&imgs_filename,0,"Image filename","STR" },
        { "extension",'e',POPT_ARG_STRING,&extension,0,"Image extension","STR" },
        { "out_file",'o',POPT_ARG_STRING,&out_file,0,"Output calibration filename (YML)","STR" },
        POPT_AUTOHELP
        { NULL, 0, 0, NULL, 0, NULL, NULL }
    };

    POpt popt(NULL, argc, argv, options, 0);
    int c;
    while((c = popt.getNextOpt()) >= 0) {}

    setup_calibration(board_width, board_height, num_imgs, square_size,
                      imgs_directory, imgs_filename, extension);
    if (image_points.empty()) {
        cerr << "No chessboard corners found; nothing to calibrate" << endl;
        return 1;
    }

    printf("Starting Calibration\n");
    Mat K;                       // intrinsic matrix
    Mat D;                       // distortion coefficients
    vector< Mat > rvecs, tvecs;  // per-image extrinsics
    int flag = 0;
    flag |= CALIB_FIX_K4;
    flag |= CALIB_FIX_K5;

    // Step 3: estimate the intrinsics (and the per-image extrinsics)
    calibrateCamera(object_points, image_points, im_size, K, D, rvecs, tvecs, flag);
    cout << "Calibration error: "
         << computeReprojectionErrors(object_points, image_points, rvecs, tvecs, K, D) << endl;

    FileStorage fs(out_file, FileStorage::WRITE);
    fs << "K" << K;
    fs << "D" << D;
    fs << "board_width" << board_width;
    fs << "board_height" << board_height;
    fs << "square_size" << square_size;
    printf("Done Calibration\n");
    return 0;
}
```

The program proceeds in three steps:

1: Detect the corners of the calibration board

2: Build the corresponding board coordinates

3: Compute the intrinsic parameters
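Steps 2 and 3 can be mirrored in plain C++ without OpenCV; `boardPoints` and `rmsError` are hypothetical names. `boardPoints` generates board coordinates in the same row-by-row order as `setup_calibration`. That order has to match the order in which the detected corners are returned. `rmsError` reproduces the quantity that `computeReprojectionErrors` prints as "Calibration error": the square root of the mean squared pixel distance over all corners of all views. The projection step itself is left out.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct P2 { double x, y; };
struct P3 { double x, y, z; };

// Board coordinates in the same order as setup_calibration: i over rows, j over columns, Z = 0.
std::vector<P3> boardPoints(int width, int height, double square) {
    assert(width > 0 && height > 0);
    std::vector<P3> pts;
    for (int i = 0; i < height; ++i)
        for (int j = 0; j < width; ++j)
            pts.push_back({j * square, i * square, 0.0});
    return pts;
}

// sqrt(sum of squared distances / total number of points), as in computeReprojectionErrors.
double rmsError(const std::vector<std::vector<P2>>& observed,
                const std::vector<std::vector<P2>>& projected) {
    assert(observed.size() == projected.size());
    double sumSq = 0.0;
    std::size_t n = 0;
    for (std::size_t v = 0; v < observed.size(); ++v) {
        assert(observed[v].size() == projected[v].size());
        for (std::size_t k = 0; k < observed[v].size(); ++k) {
            const double dx = observed[v][k].x - projected[v][k].x;
            const double dy = observed[v][k].y - projected[v][k].y;
            sumSq += dx * dx + dy * dy;
        }
        n += observed[v].size();
    }
    return n ? std::sqrt(sumSq / n) : 0.0;
}
```

For a 9x6 board with 25 mm squares, `boardPoints(9, 6, 0.025)` has 54 entries, and entry 10 is the corner at row 1, column 1.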

1. Corner detection function

Signature

```c
int cvFindChessboardCorners( const void* image, CvSize pattern_size, CvPoint2D32f* corners, int* corner_count=NULL, int flags=CV_CALIB_CB_ADAPTIVE_THRESH );
```

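Parameters

image:

The input chessboard image. It must be an 8-bit grayscale or color image.

pattern_size:

The number of inner corners per chessboard row and column.

corners:

Output array of the detected corners.

corner_count:

Output: the number of corners. If it is not NULL, the function stores the number of detected corners in this variable.

flags:

Operation flags. The value can be 0 or a combination of the following:

CV_CALIB_CB_ADAPTIVE_THRESH - use adaptive thresholding to convert the image to black and white, rather than a fixed threshold level (computed from the average image brightness).

CV_CALIB_CB_NORMALIZE_IMAGE - normalize the image brightness with cvEqualizeHist before applying fixed or adaptive thresholding.

CV_CALIB_CB_FILTER_QUADS - use additional criteria (such as contour area, perimeter and square-like shape) to filter out false quads found at the contour retrieval stage.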

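Notes

cvFindChessboardCorners tries to determine whether the input image shows the chessboard pattern and, if so, to locate its internal corners. It returns a non-zero value if all corners are found and placed in a certain order (row by row, left to right in every row). If it cannot find all the corners or cannot order them, it returns 0.

For example, a regular chessboard has 8x8 squares and 7x7 internal corners, that is, the points where the black squares touch each other. The coordinates this function detects are only approximate. To determine their positions more accurately, use cvFindCornerSubPix.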

Below is OpenCV's C-API implementation of `cvFindExtrinsicCameraParams2`, which estimates the rotation and translation of one view when the intrinsics are known. For a planar board it initializes the pose from a homography between the board plane and the normalized image points, following the decomposition derived above. It then refines the pose with Levenberg-Marquardt:

```cpp
CV_IMPL void cvFindExtrinsicCameraParams2( const CvMat* objectPoints,
                  const CvMat* imagePoints, const CvMat* A,
                  const CvMat* distCoeffs, CvMat* rvec, CvMat* tvec,
                  int useExtrinsicGuess )
{
    const int max_iter = 20;
    Ptr<CvMat> matM, _Mxy, _m, _mn, matL;

    int i, count;
    double a[9], ar[9]={1,0,0,0,1,0,0,0,1}, R[9];
    double MM[9], U[9], V[9], W[3];
    cv::Scalar Mc;
    double param[6];
    CvMat matA = cvMat( 3, 3, CV_64F, a );
    CvMat _Ar = cvMat( 3, 3, CV_64F, ar );
    CvMat matR = cvMat( 3, 3, CV_64F, R );
    CvMat _r = cvMat( 3, 1, CV_64F, param );
    CvMat _t = cvMat( 3, 1, CV_64F, param + 3 );
    CvMat _Mc = cvMat( 1, 3, CV_64F, Mc.val );
    CvMat _MM = cvMat( 3, 3, CV_64F, MM );
    CvMat matU = cvMat( 3, 3, CV_64F, U );
    CvMat matV = cvMat( 3, 3, CV_64F, V );
    CvMat matW = cvMat( 3, 1, CV_64F, W );
    CvMat _param = cvMat( 6, 1, CV_64F, param );
    CvMat _dpdr, _dpdt;

    CV_Assert( CV_IS_MAT(objectPoints) && CV_IS_MAT(imagePoints) &&
        CV_IS_MAT(A) && CV_IS_MAT(rvec) && CV_IS_MAT(tvec) );

    count = MAX(objectPoints->cols, objectPoints->rows);
    matM.reset(cvCreateMat( 1, count, CV_64FC3 ));
    _m.reset(cvCreateMat( 1, count, CV_64FC2 ));

    cvConvertPointsHomogeneous( objectPoints, matM );
    cvConvertPointsHomogeneous( imagePoints, _m );
    cvConvert( A, &matA );

    CV_Assert( (CV_MAT_DEPTH(rvec->type) == CV_64F || CV_MAT_DEPTH(rvec->type) == CV_32F) &&
        (rvec->rows == 1 || rvec->cols == 1) && rvec->rows*rvec->cols*CV_MAT_CN(rvec->type) == 3 );

    CV_Assert( (CV_MAT_DEPTH(tvec->type) == CV_64F || CV_MAT_DEPTH(tvec->type) == CV_32F) &&
        (tvec->rows == 1 || tvec->cols == 1) && tvec->rows*tvec->cols*CV_MAT_CN(tvec->type) == 3 );

    CV_Assert((count >= 4) || (count == 3 && useExtrinsicGuess)); // it is unsafe to call LM optimisation without an extrinsic guess in the case of 3 points. This is because there is no guarantee that it will converge on the correct solution.

    _mn.reset(cvCreateMat( 1, count, CV_64FC2 ));
    _Mxy.reset(cvCreateMat( 1, count, CV_64FC2 ));

    // normalize image points
    // (unapply the intrinsic matrix transformation and distortion)
    cvUndistortPoints( _m, _mn, &matA, distCoeffs, 0, &_Ar );

    if( useExtrinsicGuess )
    {
        CvMat _r_temp = cvMat(rvec->rows, rvec->cols,
            CV_MAKETYPE(CV_64F,CV_MAT_CN(rvec->type)), param );
        CvMat _t_temp = cvMat(tvec->rows, tvec->cols,
            CV_MAKETYPE(CV_64F,CV_MAT_CN(tvec->type)), param + 3);
        cvConvert( rvec, &_r_temp );
        cvConvert( tvec, &_t_temp );
    }
    else
    {
        Mc = cvAvg(matM);
        cvReshape( matM, matM, 1, count );
        cvMulTransposed( matM, &_MM, 1, &_Mc );
        cvSVD( &_MM, &matW, 0, &matV, CV_SVD_MODIFY_A + CV_SVD_V_T );

        // initialize extrinsic parameters
        if( W[2]/W[1] < 1e-3)
        {
            // a planar structure case (all M's lie in the same plane)
            double tt[3], h[9], h1_norm, h2_norm;
            CvMat* R_transform = &matV;
            CvMat T_transform = cvMat( 3, 1, CV_64F, tt );
            CvMat matH = cvMat( 3, 3, CV_64F, h );
            CvMat _h1, _h2, _h3;

            if( V[2]*V[2] + V[5]*V[5] < 1e-10 )
                cvSetIdentity( R_transform );

            if( cvDet(R_transform) < 0 )
                cvScale( R_transform, R_transform, -1 );

            cvGEMM( R_transform, &_Mc, -1, 0, 0, &T_transform, CV_GEMM_B_T );

            // express the board points in their own plane (2D coordinates)
            for( i = 0; i < count; i++ )
            {
                const double* Rp = R_transform->data.db;
                const double* Tp = T_transform.data.db;
                const double* src = matM->data.db + i*3;
                double* dst = _Mxy->data.db + i*2;

                dst[0] = Rp[0]*src[0] + Rp[1]*src[1] + Rp[2]*src[2] + Tp[0];
                dst[1] = Rp[3]*src[0] + Rp[4]*src[1] + Rp[5]*src[2] + Tp[1];
            }

            // the image points are normalized, so H is proportional to [r1 r2 t]
            cvFindHomography( _Mxy, _mn, &matH );

            if( cvCheckArr(&matH, CV_CHECK_QUIET) )
            {
                cvGetCol( &matH, &_h1, 0 );
                _h2 = _h1; _h2.data.db++;
                _h3 = _h2; _h3.data.db++;
                h1_norm = std::sqrt(h[0]*h[0] + h[3]*h[3] + h[6]*h[6]);
                h2_norm = std::sqrt(h[1]*h[1] + h[4]*h[4] + h[7]*h[7]);

                cvScale( &_h1, &_h1, 1./MAX(h1_norm, DBL_EPSILON) );
                cvScale( &_h2, &_h2, 1./MAX(h2_norm, DBL_EPSILON) );
                cvScale( &_h3, &_t, 2./MAX(h1_norm + h2_norm, DBL_EPSILON));
                cvCrossProduct( &_h1, &_h2, &_h3 );

                cvRodrigues2( &matH, &_r );
                cvRodrigues2( &_r, &matH );
                cvMatMulAdd( &matH, &T_transform, &_t, &_t );
                cvMatMul( &matH, R_transform, &matR );
            }
            else
            {
                cvSetIdentity( &matR );
                cvZero( &_t );
            }

            cvRodrigues2( &matR, &_r );
        }
        else
        {
            // non-planar structure. Use DLT method
            double* L;
            double LL[12*12], LW[12], LV[12*12], sc;
            CvMat _LL = cvMat( 12, 12, CV_64F, LL );
            CvMat _LW = cvMat( 12, 1, CV_64F, LW );
            CvMat _LV = cvMat( 12, 12, CV_64F, LV );
            CvMat _RRt, _RR, _tt;
            CvPoint3D64f* M = (CvPoint3D64f*)matM->data.db;
            CvPoint2D64f* mn = (CvPoint2D64f*)_mn->data.db;

            matL.reset(cvCreateMat( 2*count, 12, CV_64F ));
            L = matL->data.db;

            for( i = 0; i < count; i++, L += 24 )
            {
                double x = -mn[i].x, y = -mn[i].y;
                L[0] = L[16] = M[i].x;
                L[1] = L[17] = M[i].y;
                L[2] = L[18] = M[i].z;
                L[3] = L[19] = 1.;
                L[4] = L[5] = L[6] = L[7] = 0.;
                L[12] = L[13] = L[14] = L[15] = 0.;
                L[8] = x*M[i].x;
                L[9] = x*M[i].y;
                L[10] = x*M[i].z;
                L[11] = x;
                L[20] = y*M[i].x;
                L[21] = y*M[i].y;
                L[22] = y*M[i].z;
                L[23] = y;
            }

            cvMulTransposed( matL, &_LL, 1 );
            cvSVD( &_LL, &_LW, 0, &_LV, CV_SVD_MODIFY_A + CV_SVD_V_T );
            _RRt = cvMat( 3, 4, CV_64F, LV + 11*12 );
            cvGetCols( &_RRt, &_RR, 0, 3 );
            cvGetCol( &_RRt, &_tt, 3 );
            if( cvDet(&_RR) < 0 )
                cvScale( &_RRt, &_RRt, -1 );
            sc = cvNorm(&_RR);
            CV_Assert(fabs(sc) > DBL_EPSILON);
            cvSVD( &_RR, &matW, &matU, &matV, CV_SVD_MODIFY_A + CV_SVD_U_T + CV_SVD_V_T );
            cvGEMM( &matU, &matV, 1, 0, 0, &matR, CV_GEMM_A_T );
            cvScale( &_tt, &_t, cvNorm(&matR)/sc );
            cvRodrigues2( &matR, &_r );
        }
    }

    cvReshape( matM, matM, 3, 1 );
    cvReshape( _mn, _mn, 2, 1 );

    // refine extrinsic parameters using iterative algorithm
    CvLevMarq solver( 6, count*2, cvTermCriteria(CV_TERMCRIT_EPS+CV_TERMCRIT_ITER,max_iter,FLT_EPSILON), true);
    cvCopy( &_param, solver.param );

    for(;;)
    {
        CvMat *matJ = 0, *_err = 0;
        const CvMat *__param = 0;
        bool proceed = solver.update( __param, matJ, _err );
        cvCopy( __param, &_param );
        if( !proceed || !_err )
            break;
        cvReshape( _err, _err, 2, 1 );
        if( matJ )
        {
            cvGetCols( matJ, &_dpdr, 0, 3 );
            cvGetCols( matJ, &_dpdt, 3, 6 );
            cvProjectPoints2( matM, &_r, &_t, &matA, distCoeffs,
                              _err, &_dpdr, &_dpdt, 0, 0, 0 );
        }
        else
        {
            cvProjectPoints2( matM, &_r, &_t, &matA, distCoeffs,
                              _err, 0, 0, 0, 0, 0 );
        }
        cvSub(_err, _m, _err);
        cvReshape( _err, _err, 1, 2*count );
    }
    cvCopy( solver.param, &_param );

    _r = cvMat( rvec->rows, rvec->cols,
        CV_MAKETYPE(CV_64F,CV_MAT_CN(rvec->type)), param );
    _t = cvMat( tvec->rows, tvec->cols,
        CV_MAKETYPE(CV_64F,CV_MAT_CN(tvec->type)), param + 3 );

    cvConvert( &_r, rvec );
    cvConvert( &_t, tvec );
}
```
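The function above uses `cvRodrigues2` in several places to convert between a rotation matrix and a 3-element rotation vector (axis times angle). The sketch below shows the Rodrigues formula in both directions in plain C++. `rotationMatrix` and `rotationVector` are hypothetical names, this is not OpenCV's implementation, and the near-π case is not handled.

In the planar branch above, `cvRodrigues2( &matH, &_r )` is followed by `cvRodrigues2( &_r, &matH )`. This round trip appears intended to turn the approximate rotation built from $h_1$, $h_2$ and $h_1 \times h_2$ into a proper one. The sketch assumes its input is already an exact rotation.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major

// Rotation vector r = theta * k  ->  R = cos(theta) I + (1 - cos(theta)) k k^T + sin(theta) [k]x
Mat3 rotationMatrix(const Vec3& r) {
    const double theta = std::sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    Mat3 R{{{1, 0, 0}, {0, 1, 0}, {0, 0, 1}}};
    if (theta < 1e-12) return R;
    const Vec3 k{r[0] / theta, r[1] / theta, r[2] / theta};
    const double c = std::cos(theta), s = std::sin(theta);
    const Mat3 S{{{0, -k[2], k[1]}, {k[2], 0, -k[0]}, {-k[1], k[0], 0}}};  // [k]x
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            R[i][j] = (i == j ? c : 0.0) + (1.0 - c) * k[i] * k[j] + s * S[i][j];
    return R;
}

// R -> rotation vector, using theta = acos((trace - 1) / 2) and R - R^T = 2 sin(theta) [k]x.
// Valid for 0 <= theta < pi.
Vec3 rotationVector(const Mat3& R) {
    const double c = std::fmax(-1.0, std::fmin(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0));
    const double theta = std::acos(c);
    if (theta < 1e-12) return {0.0, 0.0, 0.0};
    const double s = std::sin(theta);
    assert(s > 1e-9);  // theta close to pi needs a different formula
    const double f = theta / (2.0 * s);
    return {f * (R[2][1] - R[1][2]), f * (R[0][2] - R[2][0]), f * (R[1][0] - R[0][1])};
}
```

A round trip such as `rotationVector(rotationMatrix({0.1, -0.2, 0.3}))` should reproduce the input vector up to floating-point error.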

`cvInitIntrinsicParams2D` computes an initial intrinsic matrix for calibration. It places the principal point at the image center and estimates the focal lengths from the homography of each view:

```cpp
CV_IMPL void cvInitIntrinsicParams2D( const CvMat* objectPoints,
                         const CvMat* imagePoints, const CvMat* npoints,
                         CvSize imageSize, CvMat* cameraMatrix,
                         double aspectRatio )
{
    Ptr<CvMat> matA, _b, _allH;

    int i, j, pos, nimages, ni = 0;
    double a[9] = { 0, 0, 0, 0, 0, 0, 0, 0, 1 };
    double H[9] = {0}, f[2] = {0};
    CvMat _a = cvMat( 3, 3, CV_64F, a );
    CvMat matH = cvMat( 3, 3, CV_64F, H );
    CvMat _f = cvMat( 2, 1, CV_64F, f );

    assert( CV_MAT_TYPE(npoints->type) == CV_32SC1 &&
            CV_IS_MAT_CONT(npoints->type) );
    nimages = npoints->rows + npoints->cols - 1;

    if( (CV_MAT_TYPE(objectPoints->type) != CV_32FC3 &&
        CV_MAT_TYPE(objectPoints->type) != CV_64FC3) ||
        (CV_MAT_TYPE(imagePoints->type) != CV_32FC2 &&
        CV_MAT_TYPE(imagePoints->type) != CV_64FC2) )
        CV_Error( CV_StsUnsupportedFormat, "Both object points and image points must be 2D" );

    if( objectPoints->rows != 1 || imagePoints->rows != 1 )
        CV_Error( CV_StsBadSize, "object points and image points must be a single-row matrices" );

    matA.reset(cvCreateMat( 2*nimages, 2, CV_64F ));
    _b.reset(cvCreateMat( 2*nimages, 1, CV_64F ));
    // principal point initialised at the image center
    a[2] = (!imageSize.width) ? 0.5 : (imageSize.width)*0.5;
    a[5] = (!imageSize.height) ? 0.5 : (imageSize.height)*0.5;
    _allH.reset(cvCreateMat( nimages, 9, CV_64F ));

    // extract vanishing points in order to obtain initial value for the focal length
    for( i = 0, pos = 0; i < nimages; i++, pos += ni )
    {
        double* Ap = matA->data.db + i*4;
        double* bp = _b->data.db + i*2;
        ni = npoints->data.i[i];
        double h[3], v[3], d1[3], d2[3];
        double n[4] = {0,0,0,0};
        CvMat _m, matM;
        cvGetCols( objectPoints, &matM, pos, pos + ni );
        cvGetCols( imagePoints, &_m, pos, pos + ni );

        cvFindHomography( &matM, &_m, &matH );
        memcpy( _allH->data.db + i*9, H, sizeof(H) );

        // move the principal point (a[2], a[5]) out of H
        H[0] -= H[6]*a[2]; H[1] -= H[7]*a[2]; H[2] -= H[8]*a[2];
        H[3] -= H[6]*a[5]; H[4] -= H[7]*a[5]; H[5] -= H[8]*a[5];

        // h, v: first two columns of H; d1, d2: their half-sum and half-difference
        for( j = 0; j < 3; j++ )
        {
            double t0 = H[j*3], t1 = H[j*3+1];
            h[j] = t0; v[j] = t1;
            d1[j] = (t0 + t1)*0.5;
            d2[j] = (t0 - t1)*0.5;
            n[0] += t0*t0; n[1] += t1*t1;
            n[2] += d1[j]*d1[j]; n[3] += d2[j]*d2[j];
        }

        for( j = 0; j < 4; j++ )
            n[j] = 1./std::sqrt(n[j]);

        for( j = 0; j < 3; j++ )
        {
            h[j] *= n[0]; v[j] *= n[1];
            d1[j] *= n[2]; d2[j] *= n[3];
        }

        // two equations per view, linear in 1/fx^2 and 1/fy^2
        Ap[0] = h[0]*v[0]; Ap[1] = h[1]*v[1];
        Ap[2] = d1[0]*d2[0]; Ap[3] = d1[1]*d2[1];
        bp[0] = -h[2]*v[2]; bp[1] = -d1[2]*d2[2];
    }

    cvSolve( matA, _b, &_f, CV_NORMAL + CV_SVD );
    a[0] = std::sqrt(fabs(1./f[0]));
    a[4] = std::sqrt(fabs(1./f[1]));
    if( aspectRatio != 0 )
    {
        double tf = (a[0] + a[4])/(aspectRatio + 1.);
        a[0] = aspectRatio*tf;
        a[4] = tf;
    }

    cvConvert( &_a, cameraMatrix );
}
```
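The focal-length initialization above can be reproduced for a single view in plain C++. Once the principal point is subtracted, the columns h and v of H are proportional to $(f_x r_{11}, f_y r_{21}, r_{31})$ and $(f_x r_{12}, f_y r_{22}, r_{32})$. As a result, $r_1^T r_2 = 0$ and $(r_1 + r_2)^T(r_1 - r_2) = 0$ become linear in $1/f_x^2$ and $1/f_y^2$. These are the two rows `Ap` and `bp` that the loop fills for each image. `initFocal` and `makeH` are hypothetical names. OpenCV solves all views together with `cvSolve(..., CV_NORMAL + CV_SVD)`, whereas this sketch solves one view's 2x2 system directly.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major

// Synthetic test homography H = s * K [r1 r2 T] for a zero-skew K.
Mat3 makeH(double s, double fx, double fy, double cx, double cy,
           const Vec3& r1, const Vec3& r2, const Vec3& T) {
    const Vec3 cols[3] = {r1, r2, T};
    Mat3 H{};
    for (int c = 0; c < 3; ++c) {
        H[0][c] = s * (fx * cols[c][0] + cx * cols[c][2]);
        H[1][c] = s * (fy * cols[c][1] + cy * cols[c][2]);
        H[2][c] = s * cols[c][2];
    }
    return H;
}

// Unit-normalise a 3-vector (each equation is homogeneous in its vector pair,
// so this only improves conditioning, like the n[] factors in the OpenCV code).
void normalize3(double* p) {
    const double n = std::sqrt(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]);
    assert(n > 0.0);
    for (int j = 0; j < 3; ++j) p[j] /= n;
}

// One-view version of the initialisation: returns false for a degenerate view.
bool initFocal(Mat3 H, double cx, double cy, double& fx, double& fy) {
    for (int c = 0; c < 3; ++c) {  // remove the principal point from H
        H[0][c] -= H[2][c] * cx;
        H[1][c] -= H[2][c] * cy;
    }
    double h[3], v[3], d1[3], d2[3];
    for (int j = 0; j < 3; ++j) {
        h[j] = H[j][0];
        v[j] = H[j][1];
        d1[j] = 0.5 * (h[j] + v[j]);
        d2[j] = 0.5 * (h[j] - v[j]);
    }
    normalize3(h); normalize3(v); normalize3(d1); normalize3(d2);
    // [a00 a01; a10 a11] [x; y] = [b0; b1], with x = 1/fx^2, y = 1/fy^2
    const double a00 = h[0] * v[0], a01 = h[1] * v[1], b0 = -h[2] * v[2];
    const double a10 = d1[0] * d2[0], a11 = d1[1] * d2[1], b1 = -d1[2] * d2[2];
    const double det = a00 * a11 - a01 * a10;
    if (std::fabs(det) < 1e-12) return false;  // e.g. board parallel to the image plane
    const double x = (b0 * a11 - a01 * b1) / det;
    const double y = (a00 * b1 - b0 * a10) / det;
    fx = std::sqrt(std::fabs(1.0 / x));
    fy = std::sqrt(std::fabs(1.0 / y));
    return true;
}
```

With exact synthetic data from a tilted board, the sketch should recover $f_x$ and $f_y$. With real detections, stacking many views, as OpenCV does, averages out noise in the individual homographies.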

References:

https://zhuanlan.zhihu.com/p/24651968

https://blog.csdn.net/h532600610/article/details/51800488