Spark Streaming

可实现可扩展、高吞吐量、可容错的实时数据流处理

在Spark Streaming中发送字符串,Spark接收到以后,进行计数

Spark Streaming启动命令

run-example streaming.NetworkWordCount IP Port

启动消息服务器命令

nc -l port

一、手写Spark Streaming程序

Scala代码package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.{Seconds, StreamingContext}

object SparkStream {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("SparkStream").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf,Seconds(3))

//接收数据

val line = stream.socketTextStream("192.168.138.130",1234,StorageLevel.MEMORY_ONLY)

//分词

val word = line.flatMap(_.split(" "))

//计数

val wordCount = word.map((_,1)).reduceByKey(_+_)

//打印结果

wordCount.print()

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}

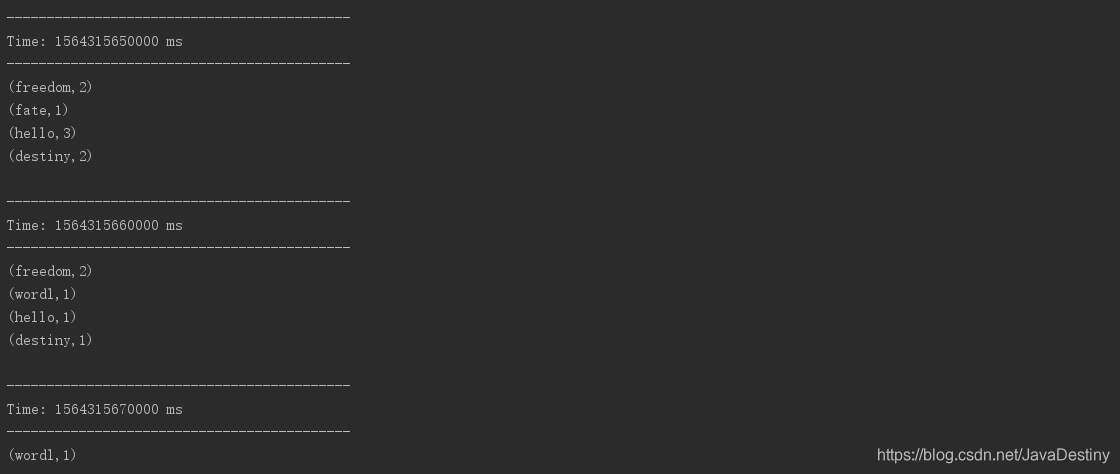

结果

二·、高级特性

(1)DStream

DStream(离散流):把连续的数据变成不连续的RDD。由于DStream的特性,所以Spark Streaming不是真正的流式计算

(2)算子

updateStateByKey函数

Scala代码package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.{Seconds, StreamingContext}

object SparkTotal {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("SparkTotal").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf,Seconds(3))

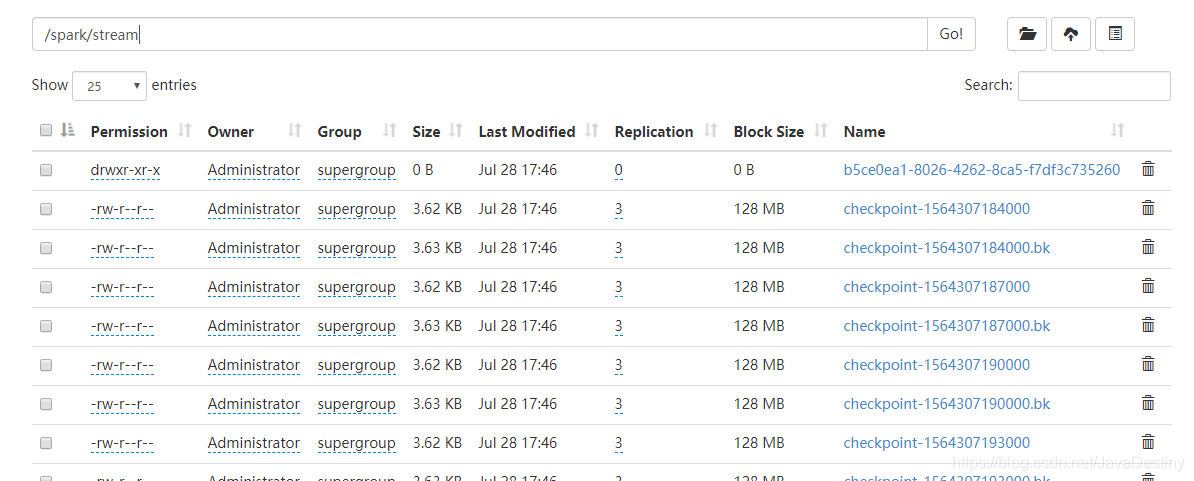

//设置检查目录,保存之前的状态信息

stream.checkpoint("hdfs://192.168.138.130:9000/spark/stream")

//接收数据

val line = stream.socketTextStream("192.168.138.130",1234,StorageLevel.MEMORY_ONLY)

//分词

val word = line.flatMap(x => x.split(" "))

//计数

val wordCount = word.map(x => (x,1)).reduceByKey((x,y) => x+y)

//定义累加值函数

val addFunc = (current: Seq[Int],previous: Option[Int]) => {

val total = current.sum

Some(total+previous.getOrElse(0))

}

//累加运算

val total = wordCount.updateStateByKey(addFunc)

//打印结果

total.print()

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}

结果

transform函数

Scala代码package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.{Seconds, StreamingContext}

object SparkTransform {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("SparkStream").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf,Seconds(3))

//接收数据

val line = stream.socketTextStream("192.168.138.130",1234,StorageLevel.MEMORY_ONLY)

//分词

val word = line.flatMap(_.split(" "))

//计数

val wordPair = word.transform(x => x.map((_,1)))

//打印结果

wordPair.print()

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}

结果

(3)窗口操作

Scala代码package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.sql.catalyst.expressions.Second

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.{Seconds, StreamingContext}

object SparkForm {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("SparkForm").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf,Seconds(1))

//接收数据

val line = stream.socketTextStream("192.168.138.130",1234,StorageLevel.MEMORY_ONLY)

//分词

val word = line.flatMap(_.split(" ")).map((_,1))

/*

* @param reduceFunc reduce操作

* @param windowDuration 窗口的大小 30s

* @param slideDuration 窗口滑动的距离 10s

* */

val result = word.reduceByKeyAndWindow((x: Int,y: Int) => (x+y),Seconds(30),Seconds(10))

//打印结果

result.print()

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}

结果

(4)集成Spark SQL

使用SQL来处理流式数据

package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.sql.SparkSession

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.{Seconds, StreamingContext}

object SparkSQL {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("SparkSQL").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf, Seconds(3))

//接收数据

val line = stream.socketTextStream("192.168.138.130",1234,StorageLevel.MEMORY_ONLY)

//分词

val word = line.flatMap(_.split(" "))

//集成Spark SQL

word.foreachRDD(x => {

val spark = SparkSession.builder().config(stream.sparkContext.getConf).getOrCreate()

import spark.implicits._

val df = x.toDF("word")

df.createOrReplaceTempView("words")

spark.sql("select word,count(1) from words group by word").show()

})

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}

结果

三·、数据源

Spark Streaming是一个流式计算引擎,就需要从外部数据源来接收数据

基本的数据源

(1)文件流

监控文件系统的变化,如果文件有增加,读取文件中的内容

package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.streaming.{Seconds, StreamingContext}

object FileStream {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("FileStream").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf,Seconds(3))

//监控目录,读取新文件

val line = stream.textFileStream("F:\\IdeaProjects\\in")

//打印结果

line.print()

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}

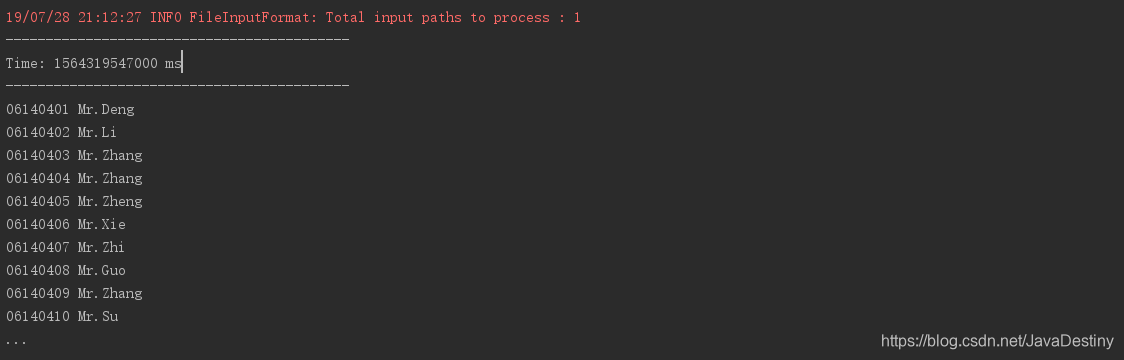

结果

(2)队列流

package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.rdd.RDD

import org.apache.spark.streaming.{Seconds, StreamingContext}

import scala.collection.mutable.Queue

object SparkQueue {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("SparkSQL").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf, Seconds(3))

//创建队列

val queue = new Queue[RDD[Int]]

for (i <- 1 to 3) {

queue += stream.sparkContext.makeRDD(1 to 10)

Thread.sleep(1000)

}

//从队列中接收数据,创建DStream

val inputStream = stream.queueStream(queue)

//处理数据

val result = inputStream.map(x => (x,x*2))

//打印结果

result.print()

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}

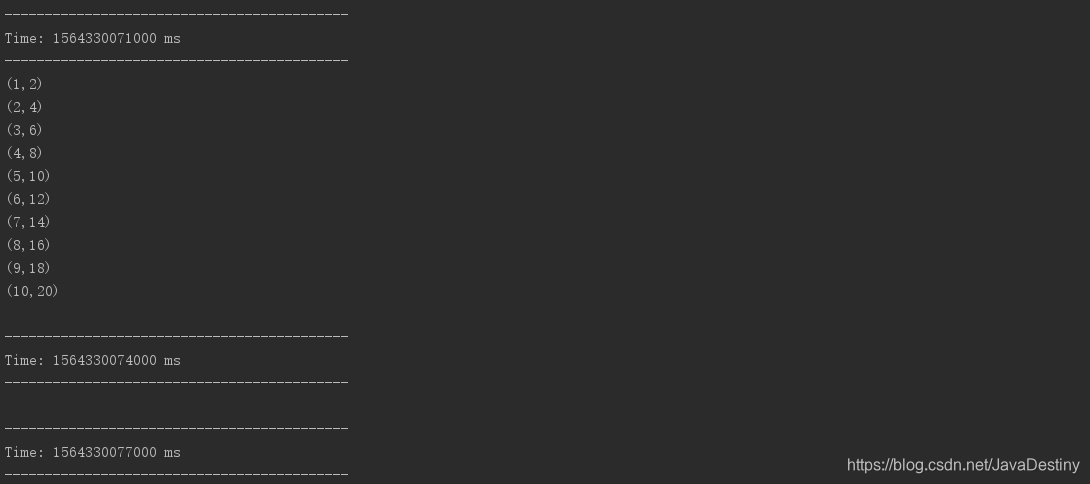

结果

四·、高级数据源

flume

Spark从flume拉取数据

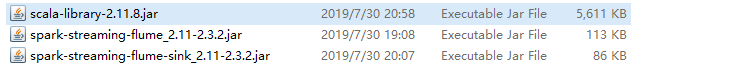

(1)将这三个jar包放到flume的lib下,把原来的scala-library的jar包删掉

(2)配置flume

a1.sources = r1

a1.channels = c1

a1.sinks = k1

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /usr/local/flume/apache-flume-1.8.0-bin/temp

a1.channels.c1.type = memory

a1.channels.c1.capacity = 100000

a1.channels.c1.transactionCapacity = 100000

a1.sinks.k1.type = org.apache.spark.streaming.flume.sink.SparkSink

a1.sinks.k1.hostname = 192.168.138.130

a1.sinks.k1.port = 1234

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

(3)启动flume

flume-ng agent --name a1 --conf conf --conf-file conf/spark-flume.conf -Dflume.root.logger=INFO,console

(4)将flume的jar包拷贝到IDEA中,编写Spark拉取flume数据

package Spark

import org.apache.log4j.{Level, Logger}

import org.apache.spark.SparkConf

import org.apache.spark.storage.StorageLevel

import org.apache.spark.streaming.flume.FlumeUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

object SparkFlume {

def main(args: Array[String]): Unit = {

Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)

//创建Spark配置

val conf = new SparkConf().setAppName("SparkFlume").setMaster("local[2]")

//实例化StreamingContext对象

val stream = new StreamingContext(conf,Seconds(3))

val flumeStream = FlumeUtils.createPollingStream(stream,"192.168.138.130",1234,StorageLevel.MEMORY_ONLY)

val word = flumeStream.map(e => {

new String(e.event.getBody.array())

})

//打印结果

word.print()

//启动StreamingContext进行计算

stream.start()

//等待任务结束

stream.awaitTermination()

}

}