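Case 1: When neither a partition number nor a key is specified, records are distributed across partitions in round-robin order. (This is the default behavior of Kafka clients before 2.4; later clients default to sticky partitioning for keyless records.)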

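import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class Producer {
    public static void main(String[] args) {
        Properties props = new Properties();
        // Broker addresses used to bootstrap the connection to the cluster
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        // Wait for all in-sync replicas to acknowledge each record
        props.put("acks", "all");
        props.put("retries", 0);
        props.put("batch.size", 16384);
        props.put("linger.ms", 1);
        props.put("buffer.memory", 33554432);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(props);
        for (int i = 0; i < 9; i++) {
            // Topic and value only: no partition number and no key
            ProducerRecord<String, String> producerRecord = new ProducerRecord<>("student", "Produced data:------>" + "Kafka" + i);
            kafkaProducer.send(producerRecord);
        }
        // close() flushes buffered records before releasing resources
        kafkaProducer.close();
    }
}

import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class Consumer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        props.put("group.id", "test");
        // Commit offsets automatically once per second
        props.put("enable.auto.commit", "true");
        props.put("auto.commit.interval.ms", "1000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(props);
        kafkaConsumer.subscribe(Arrays.asList("student"));
        while (true) {
            // poll(Duration) replaces poll(long), which is deprecated since Kafka 2.0
            ConsumerRecords<String, String> records = kafkaConsumer.poll(Duration.ofMillis(1000));
            for (ConsumerRecord<String, String> consumerRecord : records) {
                String value = consumerRecord.value();
                System.out.println("Consumed data:" + value);
            }
        }
    }
}

Consumed data:Produced data:------>Kafka1

Consumed data:Produced data:------>Kafka4

Consumed data:Produced data:------>Kafka7

Consumed data:Produced data:------>Kafka2

Consumed data:Produced data:------>Kafka5

Consumed data:Produced data:------>Kafka8

Consumed data:Produced data:------>Kafka0

Consumed data:Produced data:------>Kafka3

Consumed data:Produced data:------>Kafka6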

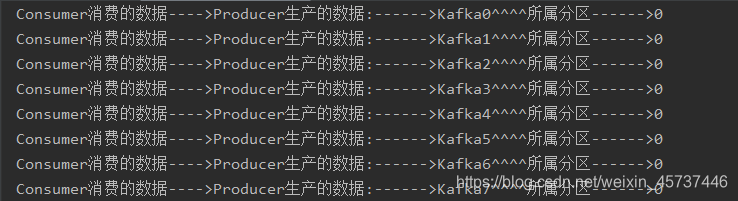

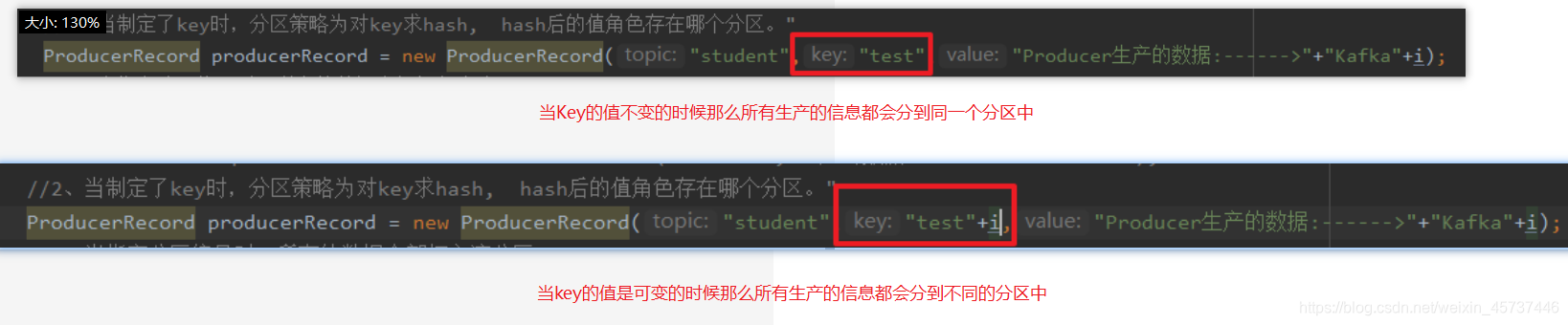

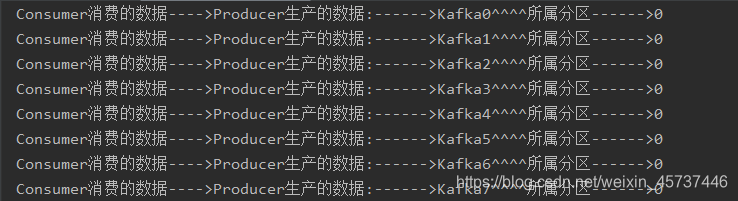

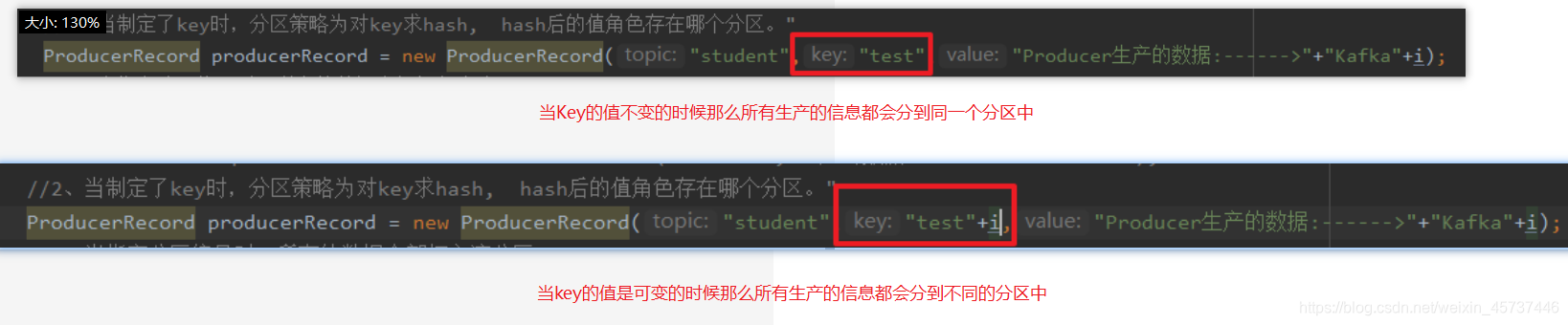
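The round-robin assignment in Case 1 can be sketched without a Kafka cluster. The following is a minimal illustration, not the Kafka client's actual implementation: `RoundRobinSketch` and `nextPartition` are hypothetical names, and the counter starts at 0 here, whereas a real client may start the rotation at a different partition.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper for illustration; not part of the Kafka client API.
final class RoundRobinSketch {
    private final AtomicInteger counter = new AtomicInteger(0);
    private final int numPartitions;

    RoundRobinSketch(int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        this.numPartitions = numPartitions;
    }

    // Returns the next partition in rotation: 0, 1, 2, 0, 1, 2, ...
    int nextPartition() {
        // floorMod keeps the result non-negative even if the counter overflows
        return Math.floorMod(counter.getAndIncrement(), numPartitions);
    }

    public static void main(String[] args) {
        RoundRobinSketch rr = new RoundRobinSketch(3);
        List<Integer> assigned = new ArrayList<>();
        for (int i = 0; i < 9; i++) {
            assigned.add(rr.nextPartition());
        }
        System.out.println(assigned); // prints [0, 1, 2, 0, 1, 2, 0, 1, 2]
    }
}
```

With three partitions, every third record lands in the same partition, which matches the grouping in the consumer output above (1, 4, 7 together; 2, 5, 8 together; 0, 3, 6 together).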

Case 2: When a key is specified, the partitioner hashes the key, and the hash value determines which partition stores the record.

import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class Producer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        props.put("acks", "all");
        props.put("retries", 0);
        props.put("batch.size", 16384);
        props.put("linger.ms", 1);
        props.put("buffer.memory", 33554432);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(props);
        for (int i = 0; i < 9; i++) {
            // Topic, key, value: the key ("test0" .. "test8") changes on every iteration
            ProducerRecord<String, String> producerRecord = new ProducerRecord<>("student", "test" + i, "Data produced by Producer:------>" + "Kafka" + i);
            kafkaProducer.send(producerRecord);
        }
        kafkaProducer.close();
    }
}

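import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class Consumer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        props.put("group.id", "test");
        props.put("enable.auto.commit", "true");
        props.put("auto.commit.interval.ms", "1000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(props);
        kafkaConsumer.subscribe(Arrays.asList("student"));
        while (true) {
            // poll(Duration) replaces poll(long), which is deprecated since Kafka 2.0
            ConsumerRecords<String, String> records = kafkaConsumer.poll(Duration.ofMillis(1000));
            for (ConsumerRecord<String, String> consumerRecord : records) {
                String value = consumerRecord.value();
                // Print the partition as well, to show where each key was routed
                System.out.println("Data consumed by Consumer---->" + value + "^^^^partition------>" + consumerRecord.partition());
            }
        }
    }
}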

- With varying keys:

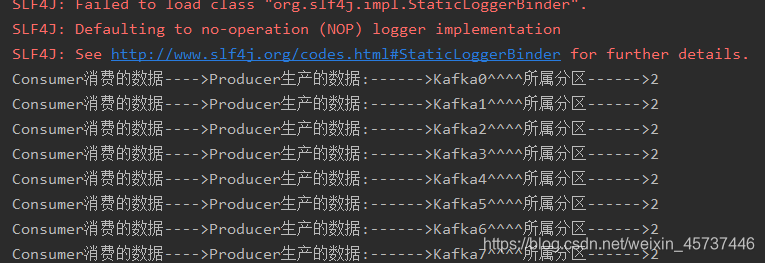

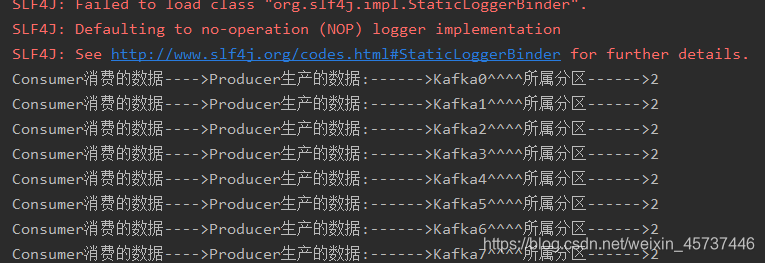
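The key-to-partition mapping in Case 2 can be sketched as a pure function. This is a simplified illustration under one loud assumption: Kafka's default partitioner hashes the serialized key bytes with murmur2, while this sketch substitutes `Arrays.hashCode` as a stand-in hash, so the partition numbers it prints will not match a real cluster. `KeyHashSketch` and `partitionForKey` are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical helper for illustration; not part of the Kafka client API.
final class KeyHashSketch {

    // Maps a key to a partition: hash the key bytes, force the hash
    // non-negative, then take it modulo the partition count.
    static int partitionForKey(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // ASSUMPTION: stand-in hash. Kafka itself uses murmur2 here.
        int hash = Arrays.hashCode(keyBytes);
        // Masking the sign bit mirrors the "to positive" step before the modulo
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        for (int i = 0; i < 9; i++) {
            String key = "test" + i;
            System.out.println(key + " -> partition " + partitionForKey(key, 3));
        }
    }
}
```

The property that matters is determinism: the same key always maps to the same partition as long as the partition count does not change, which is what preserves per-key ordering.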

Case 3: When a partition number is specified, all records are written to that partition

import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class Producer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        props.put("acks", "all");
        props.put("retries", 0);
        props.put("batch.size", 16384);
        props.put("linger.ms", 1);
        props.put("buffer.memory", 33554432);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(props);
        for (int i = 0; i < 9; i++) {
            // Topic, partition, key, value: partition 1 is given explicitly, so the key does not affect routing
            ProducerRecord<String, String> producerRecord = new ProducerRecord<>("student", 1, "test", "Data produced by Producer:------>" + "Kafka" + i);
            kafkaProducer.send(producerRecord);
        }
        kafkaProducer.close();
    }
}

import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class Consumer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        props.put("group.id", "test");
        props.put("enable.auto.commit", "true");
        props.put("auto.commit.interval.ms", "1000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(props);
        kafkaConsumer.subscribe(Arrays.asList("student"));
        while (true) {
            // poll(Duration) replaces poll(long), which is deprecated since Kafka 2.0
            ConsumerRecords<String, String> records = kafkaConsumer.poll(Duration.ofMillis(1000));
            for (ConsumerRecord<String, String> consumerRecord : records) {
                String value = consumerRecord.value();
                System.out.println("Data consumed by Consumer---->" + value + "^^^^partition------>" + consumerRecord.partition());
            }
        }
    }
}

- Result:

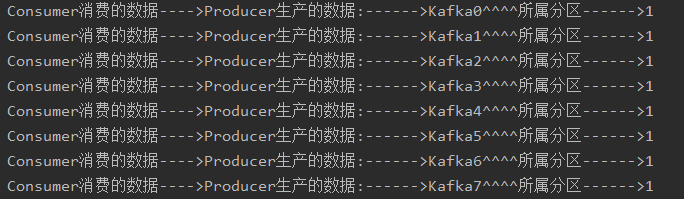

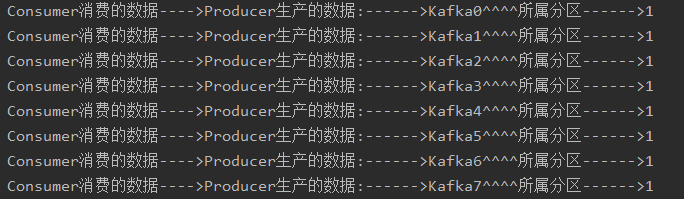
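Taken together, the first three cases suggest an order of precedence: an explicit partition wins, then the key hash, then round-robin. The sketch below models that order as a single method. It is an illustration only: `PartitionChoiceSketch` and `choose` are hypothetical names, and `Arrays.hashCode` again stands in for the murmur2 hash that Kafka applies to key bytes.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper for illustration; not part of the Kafka client API.
final class PartitionChoiceSketch {
    private final AtomicInteger counter = new AtomicInteger(0);

    int choose(Integer explicitPartition, String key, int numPartitions) {
        // Case 3: an explicit partition is used as-is; the key is ignored for routing
        if (explicitPartition != null) {
            return explicitPartition;
        }
        // Case 2: hash the key (ASSUMPTION: stand-in hash instead of murmur2)
        if (key != null) {
            int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
            return (hash & 0x7fffffff) % numPartitions;
        }
        // Case 1: no partition and no key, so rotate through the partitions
        return Math.floorMod(counter.getAndIncrement(), numPartitions);
    }

    public static void main(String[] args) {
        PartitionChoiceSketch sketch = new PartitionChoiceSketch();
        // Same arguments as the Case 3 producer: partition 1, fixed key "test"
        for (int i = 0; i < 9; i++) {
            System.out.println("Kafka" + i + " -> partition " + sketch.choose(1, "test", 3));
        }
    }
}
```

Running `main` prints partition 1 for all nine records, which is the behavior Case 3 demonstrates.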

Case 4: Custom partitioner

import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class Producer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        props.put("acks", "all");
        props.put("retries", 0);
        props.put("batch.size", 16384);
        props.put("linger.ms", 1);
        props.put("buffer.memory", 33554432);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Register the custom partitioner; use the fully qualified class name if MyPartition is in a package
        props.put("partitioner.class", "MyPartition");
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(props);
        for (int i = 0; i < 9; i++) {
            // The key varies, but MyPartition decides the target partition
            ProducerRecord<String, String> producerRecord = new ProducerRecord<>("student", "test" + i, "Data produced by Producer:------>" + "Kafka" + i);
            kafkaProducer.send(producerRecord);
        }
        kafkaProducer.close();
    }
}

import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class Consumer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "node01:9092,node02:9092,node03:9092");
        props.put("group.id", "test");
        props.put("enable.auto.commit", "true");
        props.put("auto.commit.interval.ms", "1000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(props);
        kafkaConsumer.subscribe(Arrays.asList("student"));
        while (true) {
            // poll(Duration) replaces poll(long), which is deprecated since Kafka 2.0
            ConsumerRecords<String, String> records = kafkaConsumer.poll(Duration.ofMillis(1000));
            for (ConsumerRecord<String, String> consumerRecord : records) {
                String value = consumerRecord.value();
                System.out.println("Data consumed by Consumer---->" + value + "^^^^partition------>" + consumerRecord.partition());
            }
        }
    }
}

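import java.util.Map;
import org.apache.kafka.clients.producer.Partitioner;
import org.apache.kafka.common.Cluster;

public class MyPartition implements Partitioner {

    // Called for every record that has no explicit partition; the return value is the target partition
    @Override
    public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {
        // Route every record to partition 0, regardless of key or value
        return 0;
    }

    // Nothing to release
    @Override
    public void close() {
    }

    // No custom configuration is read
    @Override
    public void configure(Map<String, ?> configs) {
    }
}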
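`MyPartition` always returns 0, which demonstrates the wiring but not a routing rule. As a hedged illustration of what the body of `partition(...)` might compute instead, the sketch below routes a key by its trailing number, so `test4` goes to partition `4 % numPartitions`. The rule, the class `SuffixPartitionSketch`, and the method `partitionFor` are all hypothetical; in a real `Partitioner`, the partition count could be obtained with `cluster.partitionsForTopic(topic).size()`.

```java
// Hypothetical routing rule for illustration; kept free of Kafka imports
// so it can be run and tested on its own.
final class SuffixPartitionSketch {

    // Routes a key by its trailing decimal digits; keys without a numeric
    // suffix (and null keys) fall back to partition 0.
    static int partitionFor(String key, int numPartitions) {
        if (key == null) {
            return 0;
        }
        int end = key.length();
        int start = end;
        // Walk backwards over the trailing ASCII digits
        while (start > 0 && key.charAt(start - 1) >= '0' && key.charAt(start - 1) <= '9') {
            start--;
        }
        if (start == end) {
            return 0; // no numeric suffix
        }
        String digits = key.substring(start, end);
        // Keep at most the last 9 digits so Integer.parseInt cannot overflow
        if (digits.length() > 9) {
            digits = digits.substring(digits.length() - 9);
        }
        return Integer.parseInt(digits) % numPartitions;
    }

    public static void main(String[] args) {
        for (int i = 0; i < 9; i++) {
            String key = "test" + i;
            System.out.println(key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```

Keeping the rule in a static method separate from the `Partitioner` implementation is a design choice made here for testability; the `partition(...)` override would simply delegate to it.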