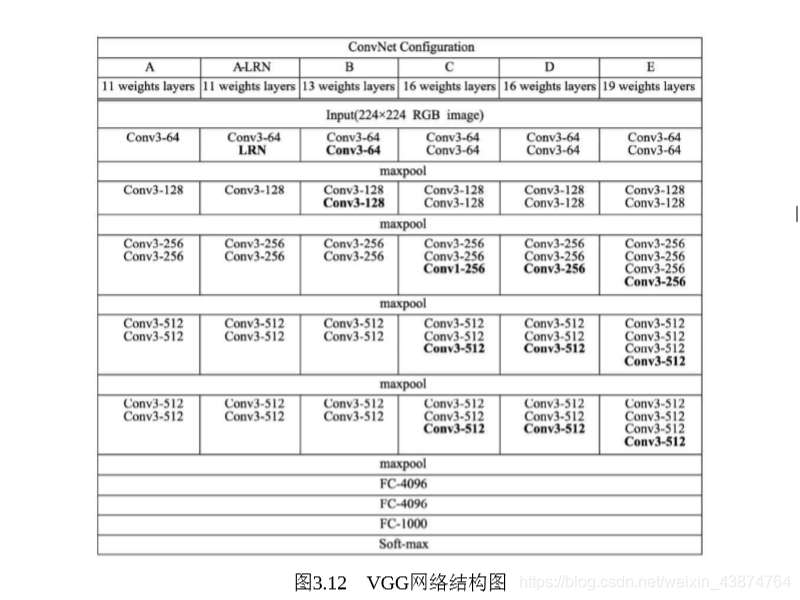

VGGNet

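VGGNet comes in six configurations, and VGG16 is the most widely used. VGG16 has five groups of convolutional layers followed by three fully connected layers, with a softmax for the final classification. A 2×2 max-pooling layer at the end of each group halves the height and width of the feature map. The number of channels doubles from one group to the next (64, 128, 256, 512) until it reaches 512, and it stays at 512 for the last two groups.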
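
The shape changes described above can be traced in plain Python. This is a minimal sketch that assumes a 224×224 RGB input and the standard VGG16 layer configuration. `VGG16_CFG` and `vgg16_stage_shapes` are illustrative names and are not part of any library. The sketch shows why the first fully connected layer of VGG16 expects 512×7×7 inputs.

```python
# VGG16 configuration: numbers are conv output channels, "M" is 2x2 max pooling
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_stage_shapes(size=224, cfg=VGG16_CFG):
    """Return (channels, height/width) after each pooling layer."""
    channels = 3
    shapes = []
    for item in cfg:
        if item == "M":
            size //= 2  # 2x2 pooling with stride 2 halves height and width
            shapes.append((channels, size))
        else:
            channels = item  # a 3x3 conv with padding 1 keeps the spatial size
    return shapes


shapes = vgg16_stage_shapes()
print(shapes)  # [(64, 112), (128, 56), (256, 28), (512, 14), (512, 7)]
```

After the fifth pooling layer the feature map is 512×7×7. Flattening it gives 25,088 values, which is the input size of the first linear layer.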

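VGG uses 3×3 convolution kernels throughout. Two stacked 3×3 convolutional layers have the same 5×5 receptive field as a single 5×5 layer but need fewer parameters. Each of the two layers also has its own activation function, so the stack adds more nonlinearity.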
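
A little arithmetic confirms both claims. This sketch assumes stride-1 convolutions with equal input and output channel counts and ignores biases. `C = 256` is an arbitrary example value, and the helper names are hypothetical.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    rf = 1
    for _ in range(num_layers):
        rf += kernel - 1  # each stride-1 layer widens the field by kernel - 1
    return rf


def conv_weights(kernel, channels):
    """Weights of one kernel x kernel conv with `channels` in and out (no bias)."""
    return kernel * kernel * channels * channels


C = 256  # example channel count, chosen only for illustration
print(stacked_receptive_field(2), stacked_receptive_field(3))  # 5 7
two_3x3 = 2 * conv_weights(3, C)
one_5x5 = conv_weights(5, C)
print(two_3x3, one_5x5, two_3x3 / one_5x5)  # 1179648 1638400 0.72
```

Two 3×3 layers therefore need 72% of the weights of one 5×5 layer with the same receptive field. Three 3×3 layers cover a 7×7 region.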

Classic VGG16 architecture in PyTorch

```python
import torch
from torch import nn


class VGG(nn.Module):
    def __init__(self, num_classes=1000):
        super().__init__()
        layers = []
        in_dim = 3
        out_dim = 64
        # Build the convolutional layers in a loop: 13 conv layers in total
        for i in range(13):
            layers += [nn.Conv2d(in_dim, out_dim, 3, 1, 1), nn.ReLU(inplace=True)]
            in_dim = out_dim
            # Add a max-pooling layer after conv layers 2, 4, 7, 10 and 13
            if i in (1, 3, 6, 9, 12):
                layers += [nn.MaxPool2d(2, 2)]
                # Keep 512 channels after the 10th conv layer;
                # otherwise double the channel count for the next group
                if i != 9:
                    out_dim *= 2
        self.features = nn.Sequential(*layers)
        # Three fully connected layers, with ReLU and Dropout in between
        self.classifier = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 512 * 7 * 7)
        x = self.classifier(x)
        return x


# Fall back to the CPU when no GPU is available
device = "cuda" if torch.cuda.is_available() else "cpu"
vgg = VGG(21).to(device)
inputs = torch.randn(1, 3, 224, 224, device=device)
print(inputs.shape)
score = vgg(inputs)
print(score)
```
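
The parameter count of VGG16 can be computed by hand as a check on the layer loop. This pure-Python sketch mirrors the layers of the `VGG` class above: 3×3 convolutions with bias and three linear layers with bias. `CONV_LAYERS` and `vgg16_param_count` are illustrative names. For the `VGG(21)` model above, `sum(p.numel() for p in vgg.parameters())` should give the same total as `sum(vgg16_param_count(21))`.

```python
# (in_channels, out_channels) of the 13 conv layers built by the loop above
CONV_LAYERS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]


def vgg16_param_count(num_classes=1000):
    """Return (conv_params, fc_params), counting weights and biases."""
    conv = sum(3 * 3 * cin * cout + cout for cin, cout in CONV_LAYERS)
    fc_shapes = [(512 * 7 * 7, 4096), (4096, 4096), (4096, num_classes)]
    fc = sum(fin * fout + fout for fin, fout in fc_shapes)
    return conv, fc


conv, fc = vgg16_param_count(1000)
print(conv, fc, conv + fc)  # 14714688 123642856 138357544
print(sum(vgg16_param_count(21)))  # 134346581
```

Nearly 90% of the roughly 138 million parameters sit in the three fully connected layers, not in the convolutions.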

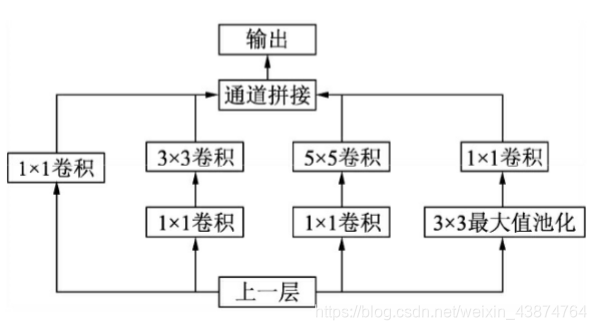

Inception

Inception v1

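Inception v1 (GoogLeNet) is a 22-layer network built by stacking Inception modules. Each module runs convolutions with different kernel sizes (1×1, 3×3 and 5×5) and a pooling operation in parallel, then concatenates their outputs.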

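To further reduce the number of parameters, Inception adds 1×1 convolutions that shrink the channel dimension before the 3×3 and 5×5 convolutions and after the pooling branch.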
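
The saving from a 1×1 reduction is easy to quantify. In this sketch, the channel counts (192 input channels, a 16-channel bottleneck and 32 output channels) are example values chosen for illustration. Biases are ignored, and `conv_weights` is a hypothetical helper.

```python
def conv_weights(kernel, c_in, c_out):
    """Weights of a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


c_in, reduce_dim, c_out = 192, 16, 32  # example channel counts
# A 5x5 convolution applied directly to the 192-channel input
direct = conv_weights(5, c_in, c_out)
# A 1x1 reduction to 16 channels, then the 5x5 convolution
reduced = conv_weights(1, c_in, reduce_dim) + conv_weights(5, reduce_dim, c_out)
print(direct, reduced)  # 153600 15872
```

In this example the bottleneck cuts the weight count of the 5×5 branch by almost a factor of 10.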

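The full Inception v1 network contains 9 such modules and 22 layers in total, and it uses global average pooling at the end. During training, auxiliary classifiers attached to the outputs of the 3rd and 6th modules apply softmax and compute additional losses. Inception has relatively few parameters, which makes it well suited to large-scale data.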
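
The auxiliary losses can be combined with the main loss as shown in the plain-Python sketch below. It assumes the weighting described in the GoogLeNet paper: each auxiliary loss is multiplied by 0.3 before it is added to the main loss, and the auxiliary classifiers are discarded at inference time. The function names are hypothetical. In a real network, the logits would come from the three classifier heads.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, using log-sum-exp for stability."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def googlenet_training_loss(main_logits, aux1_logits, aux2_logits, target,
                            aux_weight=0.3):
    """Main loss plus the two auxiliary losses, each discounted by aux_weight."""
    return (cross_entropy(main_logits, target)
            + aux_weight * cross_entropy(aux1_logits, target)
            + aux_weight * cross_entropy(aux2_logits, target))


loss = googlenet_training_loss([2.0, 0.5, -1.0], [1.0, 0.2, 0.0],
                               [0.8, 0.1, 0.3], target=0)
print(loss)
```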

Inception v1 module in PyTorch

```python
import torch
from torch import nn
import torch.nn.functional as F


# Basic convolution block: Conv2d followed by ReLU
class BasicConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, padding=0):
        super().__init__()
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, padding=padding)

    def forward(self, x):
        x = self.conv(x)
        return F.relu(x, inplace=True)


# Inception v1 module; the constructor takes the channel count of each branch
class Inceptionv1(nn.Module):
    def __init__(self, in_dim, hid_1_1, hid_2_1, hid_2_3, hid_3_1, out_3_5, out_4_1):
        super().__init__()
        # Branch 1: 1x1 convolution
        self.branch1x1 = BasicConv2d(in_dim, hid_1_1, 1)
        # Branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch3x3 = nn.Sequential(
            BasicConv2d(in_dim, hid_2_1, 1),
            BasicConv2d(hid_2_1, hid_2_3, 3, padding=1),
        )
        # Branch 3: 1x1 reduction followed by a 5x5 convolution
        self.branch5x5 = nn.Sequential(
            BasicConv2d(in_dim, hid_3_1, 1),
            BasicConv2d(hid_3_1, out_3_5, 5, padding=2),
        )
        # Branch 4: 3x3 max pooling followed by a 1x1 projection
        self.branch_pool = nn.Sequential(
            nn.MaxPool2d(3, stride=1, padding=1),
            BasicConv2d(in_dim, out_4_1, 1),
        )

    def forward(self, x):
        b1 = self.branch1x1(x)
        b2 = self.branch3x3(x)
        b3 = self.branch5x5(x)
        b4 = self.branch_pool(x)
        # Concatenate along the channel dimension
        output = torch.cat((b1, b2, b3, b4), dim=1)
        return output


# Instantiate and test (fall back to the CPU when no GPU is available)
device = "cuda" if torch.cuda.is_available() else "cpu"
net_Inceptionv1 = Inceptionv1(3, 64, 32, 64, 64, 96, 32).to(device)
print(net_Inceptionv1)
inputs = torch.randn(1, 3, 256, 256, device=device)
print(inputs.shape)
output = net_Inceptionv1(inputs)
print(output.shape)
print(output)
```
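
The printed `output.shape` follows from two facts. First, every branch keeps the spatial size because its padding matches its kernel. Second, `torch.cat(..., dim=1)` adds up the branch channel counts. Below is a plain-Python check, where `conv_out_size` is a hypothetical helper that implements the standard output-size formula for convolution and pooling.

```python
def conv_out_size(size, kernel, stride=1, padding=0):
    """Output height/width of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


H = 256
# (kernel, padding) of the spatial layer in each branch of Inceptionv1
branch_layers = {"1x1": (1, 0), "3x3": (3, 1), "5x5": (5, 2), "pool": (3, 1)}
sizes = {name: conv_out_size(H, k, 1, p) for name, (k, p) in branch_layers.items()}
print(sizes)  # {'1x1': 256, '3x3': 256, '5x5': 256, 'pool': 256}

# Channels after torch.cat(..., dim=1) for Inceptionv1(3, 64, 32, 64, 64, 96, 32)
channels = 64 + 64 + 96 + 32
print(channels)  # 256
```

So the test above should print `torch.Size([1, 256, 256, 256])`. The same reasoning gives `torch.Size([1, 320, 32, 32])` for the `Inceptionv2(192, 96, 48, 64, 64, 96, 64)` test in the v2 section, because 96 + 64 + 96 + 64 = 320 channels.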

Inception v2

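Inception v2 builds on v1 and makes computation more efficient through convolution factorization and normalization. Each 5×5 convolution is replaced by two stacked 3×3 convolutions, and Batch Normalization (BN) layers are added after the convolutions. These changes reduce the number of convolution parameters and increase the network's nonlinearity.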
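
The sketch below shows, without PyTorch, what a BN layer computes for one channel during training. It uses the batch mean, the biased batch variance and the same `eps=0.001` as the `BasicConv2d` in the v2 code. `gamma` and `beta` stand in for the learnable scale and shift. The running statistics that BN uses at inference time are omitted, and `batch_norm` is a hypothetical helper.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=0.001):
    """Normalize one channel's activations with batch statistics (training mode)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # biased variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


out = batch_norm([1.0, 2.0, 3.0, 4.0])
print(out)
```

The outputs have zero mean and variance close to 1 (slightly below 1 because of `eps`), whatever the scale of the inputs.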

```python
import torch
from torch import nn
import torch.nn.functional as F


# Basic convolution block: Conv2d, then BatchNorm, then ReLU
class BasicConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, padding=0):
        super().__init__()
        # The conv bias is redundant because BN re-centers the output right away
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size,
                              padding=padding, bias=False)
        self.bn = nn.BatchNorm2d(out_channels, eps=0.001)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        return F.relu(x, inplace=True)


# Inception v2 module; the constructor takes the channel count of each branch
class Inceptionv2(nn.Module):
    def __init__(self, in_dim, hid_1_1, hid_2_1, hid_2_3, hid_3_1, out_3_5, out_4_1):
        super().__init__()
        # Branch 1: 1x1 convolution
        self.branch1x1 = BasicConv2d(in_dim, hid_1_1, 1, 0)
        # Branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch3x3 = nn.Sequential(
            BasicConv2d(in_dim, hid_2_1, 1, 0),
            BasicConv2d(hid_2_1, hid_2_3, 3, padding=1),
        )
        # Branch 3: 1x1 reduction, then two 3x3 convolutions in place of the 5x5
        self.branch3x3x2 = nn.Sequential(
            BasicConv2d(in_dim, hid_3_1, 1, 0),
            BasicConv2d(hid_3_1, out_3_5, 3, padding=1),
            BasicConv2d(out_3_5, out_3_5, 3, padding=1),
        )
        # Branch 4: 3x3 average pooling followed by a 1x1 projection
        self.branch_pool = nn.Sequential(
            nn.AvgPool2d(3, stride=1, padding=1, count_include_pad=False),
            BasicConv2d(in_dim, out_4_1, 1, 0),
        )

    def forward(self, x):
        b1 = self.branch1x1(x)
        b2 = self.branch3x3(x)
        b3 = self.branch3x3x2(x)
        b4 = self.branch_pool(x)
        # Concatenate along the channel dimension
        output = torch.cat((b1, b2, b3, b4), dim=1)
        return output


# Instantiate and test (fall back to the CPU when no GPU is available)
device = "cuda" if torch.cuda.is_available() else "cpu"
net_Inceptionv2 = Inceptionv2(192, 96, 48, 64, 64, 96, 64).to(device)
print(net_Inceptionv2)
inputs = torch.randn(1, 192, 32, 32, device=device)
print(inputs.shape)
output = net_Inceptionv2(inputs)
print(output.shape)
print(output)
```

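The n×n convolutions in v2 can be factorized further into a 1×n convolution followed by an n×1 convolution. For a 3×3 convolution, this factorization cuts the computational cost by another third.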
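
The one-third figure follows from counting multiplications per output position. This sketch assumes that both factorized convolutions keep the same number of input and output channels as the original n×n convolution. Under that assumption the channel factor cancels and only the kernel areas matter. `factorized_cost_ratio` is a hypothetical helper.

```python
def factorized_cost_ratio(n):
    """Cost of a 1xn conv followed by an nx1 conv, relative to one nxn conv.

    Assumes equal channel counts throughout, so each conv costs
    kernel_area * C_in * C_out per output position and the channel
    factor cancels out of the ratio.
    """
    return (1 * n + n * 1) / (n * n)


for n in (3, 5, 7):
    ratio = factorized_cost_ratio(n)
    print(f"n={n}: cost ratio {ratio:.3f}, saving {1 - ratio:.1%}")
```

The saving grows with n. It is one third for n = 3, 60% for n = 5 and about 71% for n = 7.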

Inception v3

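Inception v3 builds on v2. It trains with the RMSProp optimizer, uses factorized 7×7 convolutions, adds batch normalization to the auxiliary classifier, and applies label smoothing.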
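
Label smoothing replaces the one-hot target q with (1 - ε)·q + ε/K, where K is the number of classes. This minimal sketch assumes ε = 0.1, the value used in the Inception v3 paper. `smooth_labels` is a hypothetical helper. In PyTorch, the `label_smoothing` argument of `nn.CrossEntropyLoss` applies the same idea.

```python
def smooth_labels(target, num_classes, epsilon=0.1):
    """Mix a one-hot target with a uniform distribution: (1 - eps) * q + eps / K."""
    return [(1.0 - epsilon) * (1.0 if k == target else 0.0) + epsilon / num_classes
            for k in range(num_classes)]


labels = smooth_labels(2, 5)
print(labels)
```

The true class keeps most of the probability mass (0.92 here), and every other class receives ε/K = 0.02. This discourages the model from producing overconfident logits.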

Inception v4

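Inception v4 was introduced together with Inception-ResNet variants, which combine Inception modules with residual connections. The residual connections speed up training significantly and give a modest improvement in accuracy.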
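
A residual connection adds a block's input back to its output, so the block computes y = x + F(x). The sketch below works on plain Python lists. The optional `scale` parameter reflects a practice from the Inception-ResNet paper: the residual branch is scaled down (by factors of about 0.1 to 0.3) before the addition to stabilize training of very wide models. `residual_block` and `toy_branch` are hypothetical names.

```python
def residual_block(x, branch, scale=1.0):
    """Return x + scale * branch(x), element by element."""
    fx = branch(x)
    return [xi + scale * fi for xi, fi in zip(x, fx)]


def toy_branch(x):
    # Stand-in for an Inception branch: any function that preserves the shape
    return [10.0 * v for v in x]


y = residual_block([1.0, 2.0], toy_branch, scale=0.1)
print(y)
```

Because the identity path carries x through unchanged, the branch only needs to model the difference F(x) = y - x, not the full mapping.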